A Review of Image Sensors Used in Near-Infrared and Shortwave Infrared Fluorescence Imaging

Abstract

1. Introduction

2. The Types of Image Sensors

2.1. Image Sensors Used in NIR Fluorescence Imaging Systems

2.1.1. CCD Cameras

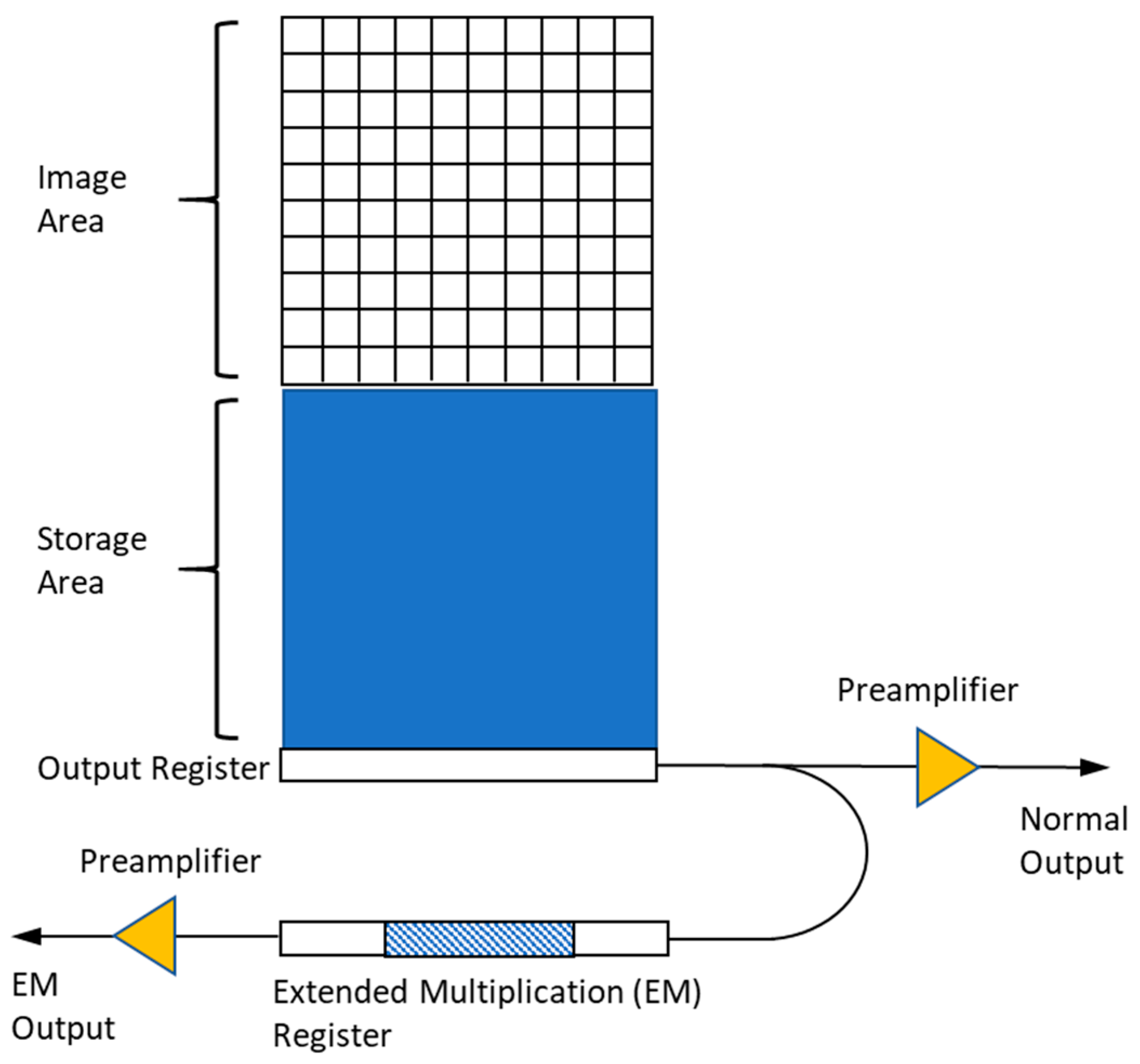

2.1.2. EMCCD Cameras

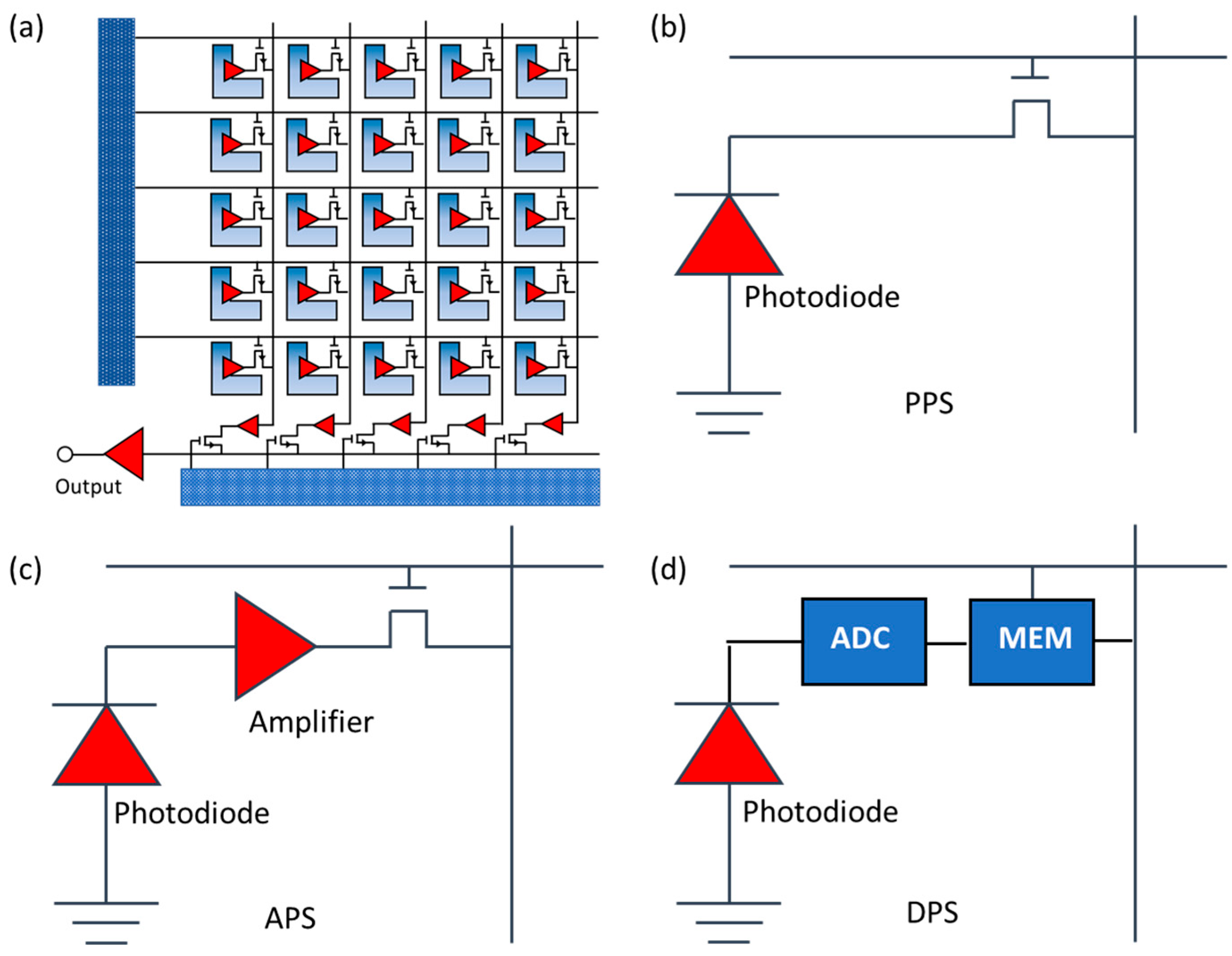

2.1.3. CMOS Cameras

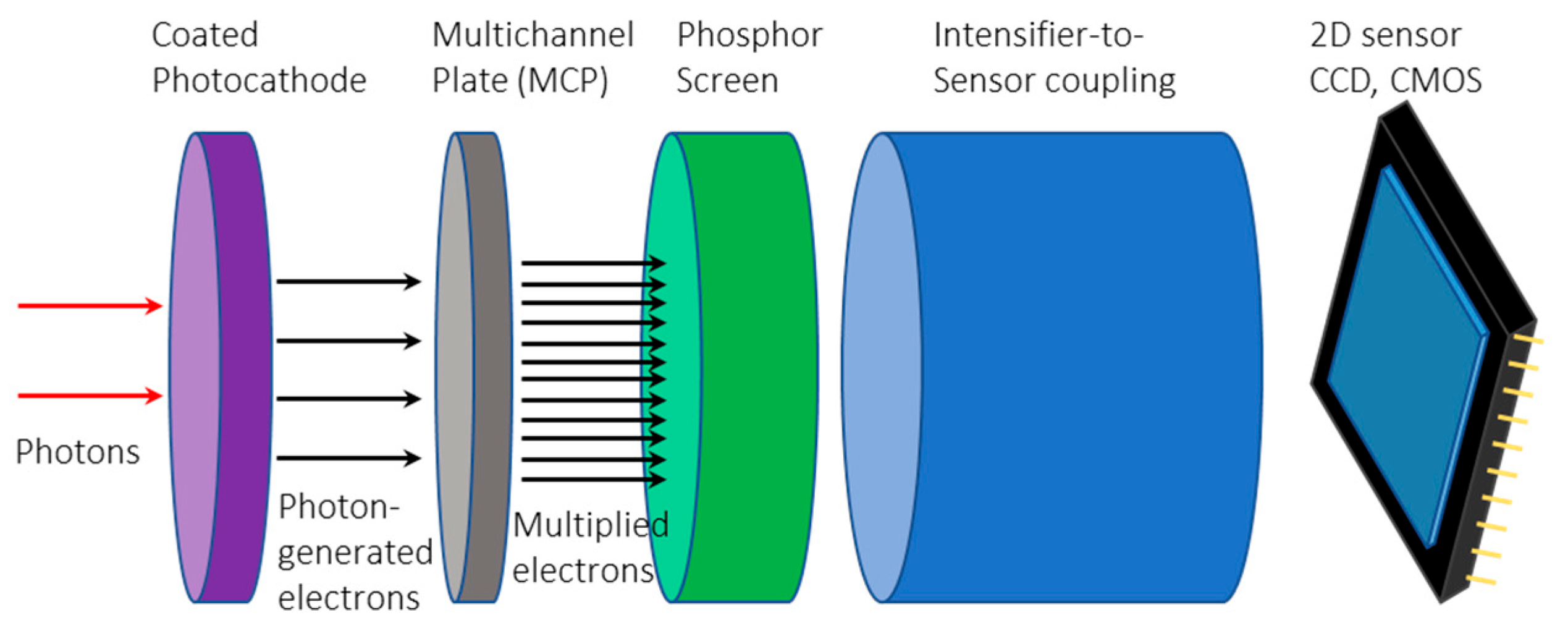

2.1.4. Intensified Image Sensors

2.2. Image Sensors Used in SWIR Imaging Systems

2.2.1. InGaAs

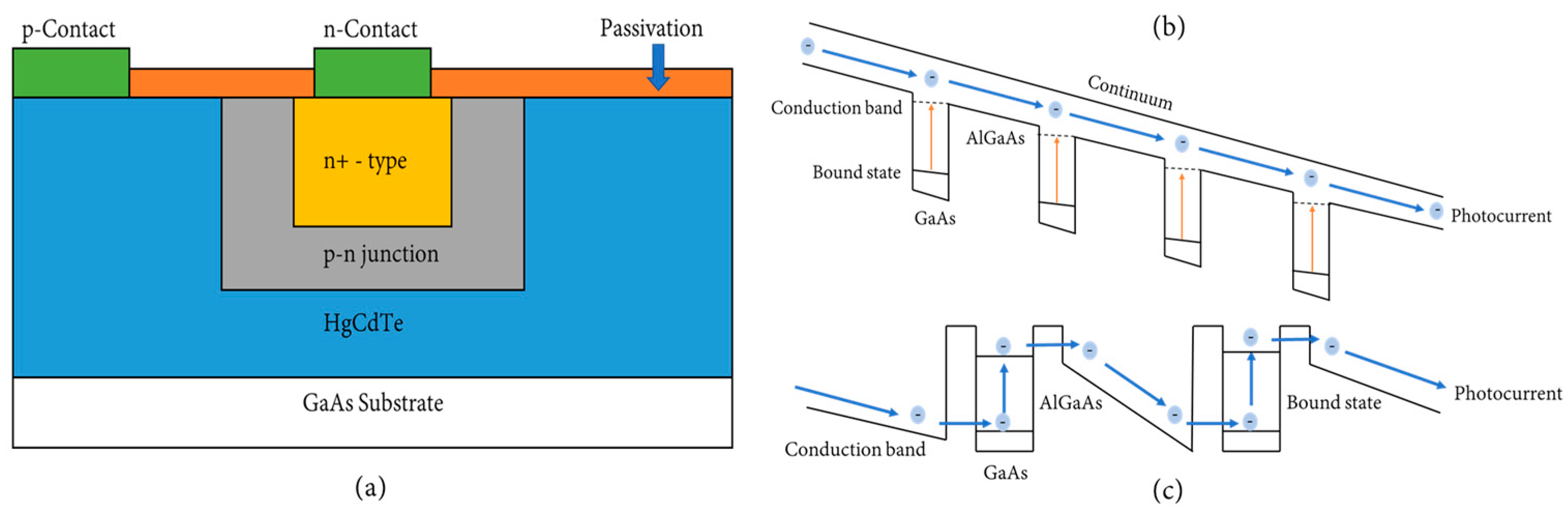

2.2.2. HgCdTe (MCT)

2.2.3. Quantum Well Infrared Photodetectors (QWIPs)

2.2.4. QD Detectors

2.3. FLIM-Based Image Sensors

2.3.1. PMT-Based Sensor

2.3.2. SPAD-Array Sensor

2.3.3. Streak Cameras

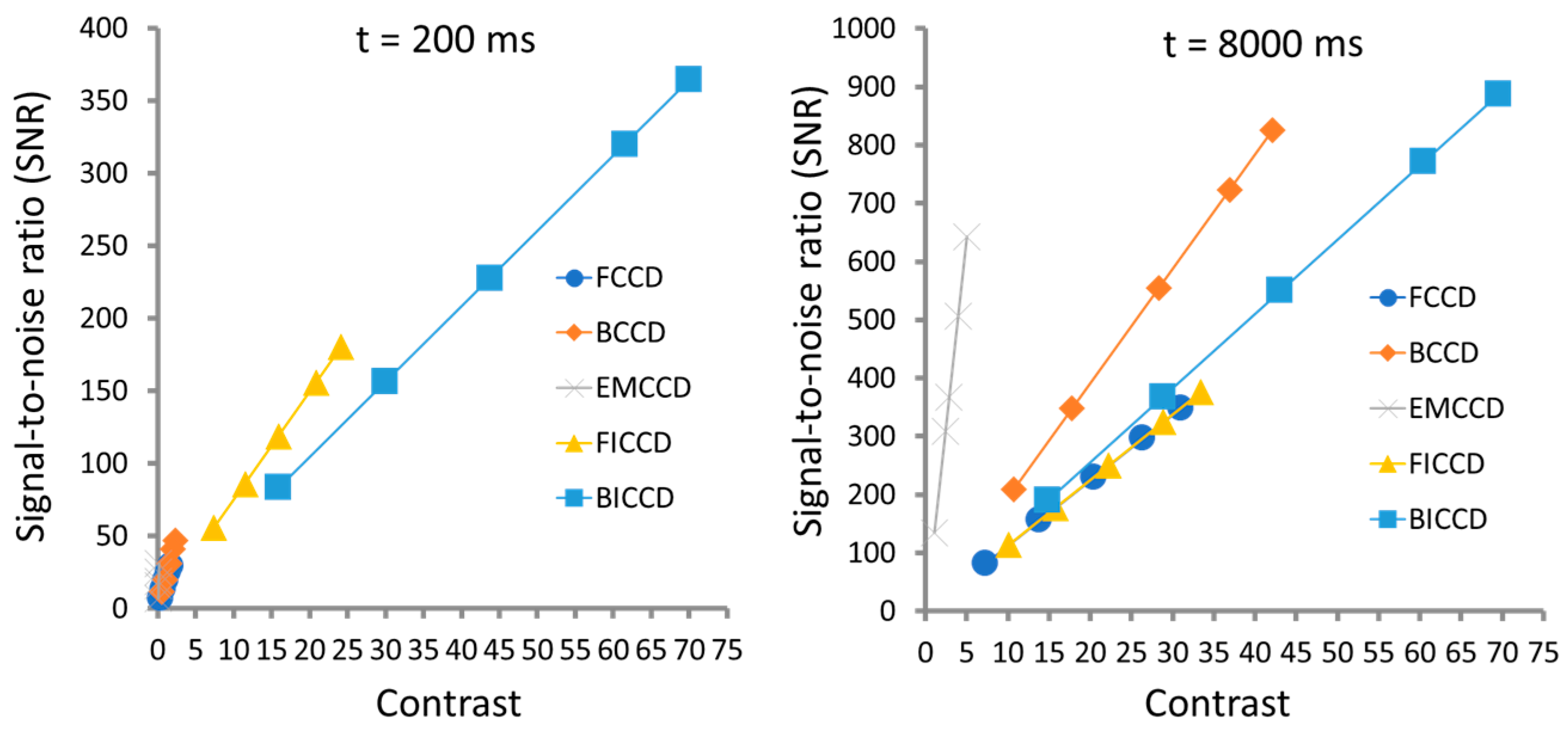

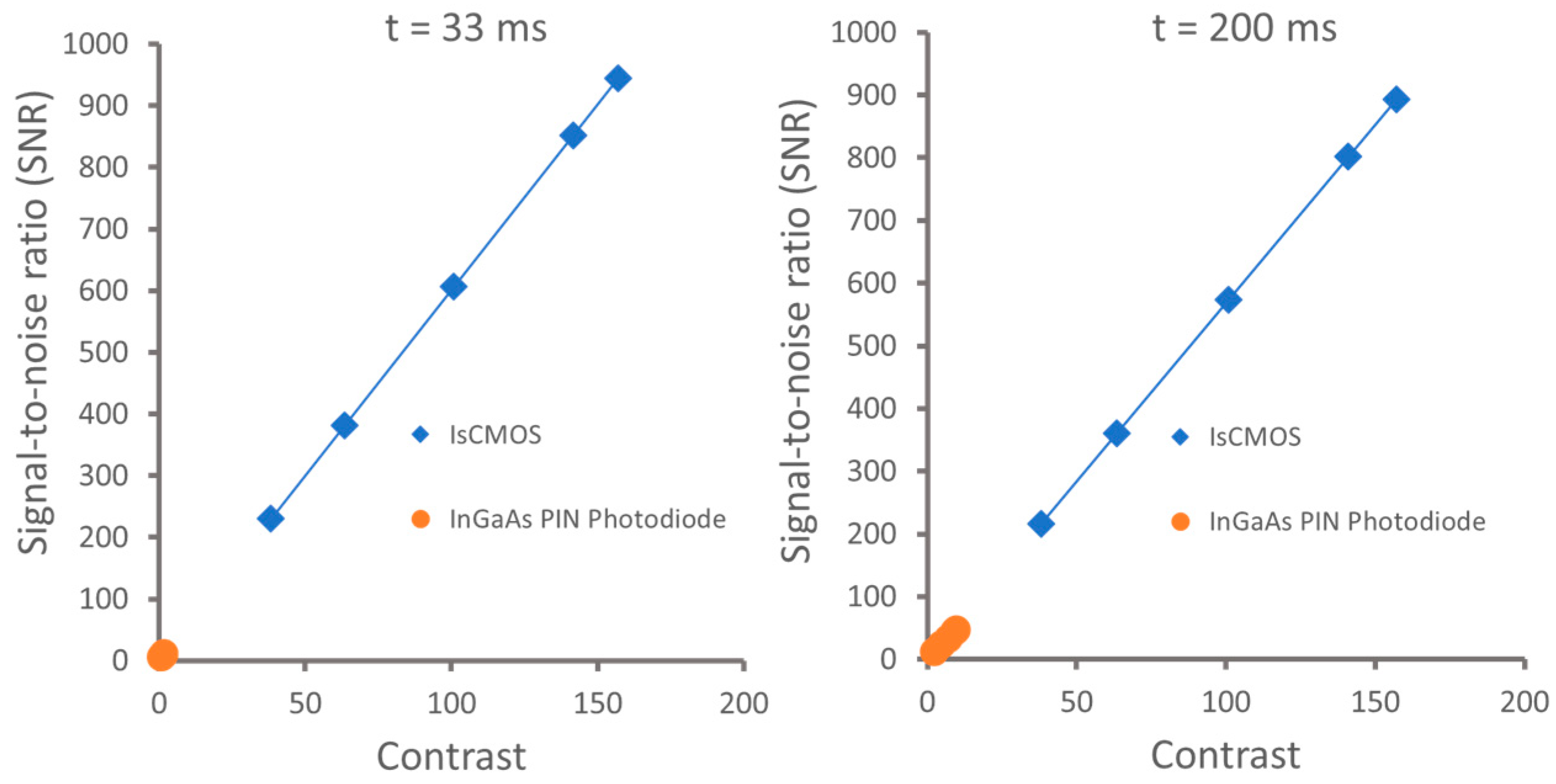

3. Imaging Performance Comparison of Image Sensors

4. Conclusions and Outlook

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Summary comparison of the image sensors reviewed in Section 2.

| Image Sensor | Noise | SNR | Imaging Speed | Cost | Spectral Range | Applications |
|---|---|---|---|---|---|---|
| CCD | Low | Medium | Slow | Medium | NIR | NIR fluorescence molecular tomography; NIR fluorescence-guided surgery imaging |
| EMCCD | High | Low | Moderate | High | NIR | NIR fluorescence imaging of the lymphatic vasculature in small animal models |
| CMOS | Medium | Medium | Moderate | Low | NIR | NIR fluorescence imaging of the lymphatic system |
| ICCD/ICMOS | Low/Medium | High | Fast | High | NIR | Fluorescence tomographic imaging; rapid imaging of lymphatic function |
| InGaAs | High | Medium | Moderate | High | SWIR | SWIR imaging of the blood vascular system, lymphatic system, and tumors |
| MCT | High | Low | Fast | High | NIR, SWIR | Imaging across the IR spectrum, including the NIR and SWIR regions |
| QWIP | High | Low | Moderate | Low | NIR, SWIR | Medical imaging |
| QD | Low | High | Moderate | Low | NIR, SWIR | Medical imaging; microscopy |
| PMT | Low | High | Fast | High | NIR | Wide-field time-correlated single-photon counting (TCSPC) in FLIM |
| SPAD array | Low | High | Fast | High | NIR, SWIR | High-speed and dynamic FLIM studies |
| Streak camera | Low | High | Fast | High | NIR, SWIR | Multispectral FLIM; compressed ultrafast photography |
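The Noise and SNR columns in the comparison table are qualitative. One common way to make such ratings concrete is the textbook per-pixel SNR model, in which signal and dark-current shot noise are scaled by an excess noise factor F and the read noise is divided by any on-chip gain G. The sketch below is an illustration under stated assumptions, not the authors' method: the function name `pixel_snr`, the read noise of 10 e-, the EM gain of 300, and F = sqrt(2) (the usual approximation for a high-gain EMCCD register) are all hypothetical example values chosen here, not figures from this review.

```python
import math


def pixel_snr(signal_e, read_noise_e, dark_e=0.0, em_gain=1.0, excess_noise=1.0):
    """Per-pixel SNR of an image sensor (simplified textbook model).

    signal_e:     detected photoelectrons per pixel (QE x incident photons)
    read_noise_e: rms read noise in electrons at the sensor output
    dark_e:       dark-current electrons accumulated during the exposure
    em_gain:      electron-multiplication gain G (1 for a conventional CCD/CMOS)
    excess_noise: excess noise factor F (1 without gain; about sqrt(2) for a
                  high-gain EMCCD, an assumed value)
    """
    if em_gain <= 0:
        raise ValueError("em_gain must be positive")
    # Shot noise of signal and dark current, inflated by the gain process (F^2).
    shot_var = excess_noise ** 2 * (signal_e + dark_e)
    # Read noise is added after the gain stage, so referred to the input it shrinks by G.
    read_var = (read_noise_e / em_gain) ** 2
    return signal_e / math.sqrt(shot_var + read_var)


# Hypothetical operating points: a very dim pixel and a bright pixel.
for signal in (5, 10_000):
    conventional = pixel_snr(signal, read_noise_e=10)
    emccd = pixel_snr(signal, read_noise_e=10, em_gain=300, excess_noise=math.sqrt(2))
    print(f"{signal:>6} e-: CCD/CMOS SNR = {conventional:.2f}, EMCCD SNR = {emccd:.2f}")
```

With these assumed parameters the EMCCD has the higher SNR at 5 e- per pixel, because the gain makes read noise negligible, but the lower SNR at 10,000 e- per pixel, because the excess noise factor doubles the shot-noise variance. This is one reason qualitative noise and SNR ratings for gain-based sensors depend on the light level at which they are compared.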

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, B.; Jonathan, H. A Review of Image Sensors Used in Near-Infrared and Shortwave Infrared Fluorescence Imaging. Sensors 2024, 24, 3539. https://doi.org/10.3390/s24113539