Three-Dimensional-Scanning of Pipe Inner Walls Based on Line Laser

Abstract

1. Introduction

2. Literature Review

2.1. 3D-Scanning Technology

2.2. Extraction of Light Stripe Center

2.3. Contributions

- This study proposes an image-processing strategy based on speckle tracking to suppress the effect of speckle noise on subsequent light stripe center extraction. The strategy consists of three steps: extraction of speckle aggregation regions, grayscale enhancement of weak speckles, and accurate speckle recognition. Processing the speckles directly addresses the inability of traditional filtering methods to remove them completely.

- To suit the morphology of arc-shaped light stripes, this study improves the gray barycenter method. Starting from the traditional gray barycenter method, each center point is corrected by fitting a Gaussian curve, and breakpoints in the extracted light stripe center are filled by interpolation guided by the tangent direction.

- Using a camera, an annular structured light emitter, and a mobile control system, this study designs and builds an automatic 3D scanner for the inner walls of pipes of multiple sizes. The scanner improves cost efficiency and operational adaptability, and it provides an effective instrument for accurate, non-contact inspection of the inner wall.
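The second contribution can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single 1D intensity profile taken across the stripe, and it uses a three-point Gaussian fit (a parabola through the log-intensities around the brightest pixel) as one common way to correct a center estimate. The function names `gray_barycenter` and `gaussian_refine`, the synthetic profile, and its parameters are hypothetical.

```python
import math


def gray_barycenter(profile, threshold=0.0):
    """Intensity-weighted mean index of the pixels above `threshold`."""
    weighted_sum = 0.0
    total = 0.0
    for index, value in enumerate(profile):
        if value > threshold:
            weighted_sum += index * value
            total += value
    if total == 0.0:
        raise ValueError("no pixel above threshold")
    return weighted_sum / total


def gaussian_refine(profile):
    """Sub-pixel peak of a Gaussian through the brightest pixel and its
    two neighbours (parabola fit on the log-intensities)."""
    peak = max(range(len(profile)), key=profile.__getitem__)
    if peak == 0 or peak == len(profile) - 1:
        return float(peak)  # a neighbour is missing on one side
    triple = [profile[i] for i in (peak - 1, peak, peak + 1)]
    if min(triple) <= 0.0:
        return float(peak)  # the logarithm needs positive intensities
    left, mid, right = (math.log(v) for v in triple)
    curvature = left - 2.0 * mid + right
    if curvature >= 0.0:
        return float(peak)  # not a proper maximum
    return peak + 0.5 * (left - right) / curvature


# Assumed test data: Gaussian stripe centred at 5.3 px with sigma 1.5 px.
TRUE_CENTER = 5.3
profile = [math.exp(-((i - TRUE_CENTER) ** 2) / (2 * 1.5 ** 2)) for i in range(11)]
coarse = gray_barycenter(profile)    # barycenter over the sampled window
refined = gaussian_refine(profile)   # Gaussian-fit estimate
print(coarse, refined)
```

On a noise-free Gaussian profile the three-point fit recovers the center exactly, while the barycenter is slightly biased by the truncated window. On real images the log-intensity fit is sensitive to noise, which is one reason to combine the two estimates rather than rely on either alone.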

3. Methodology

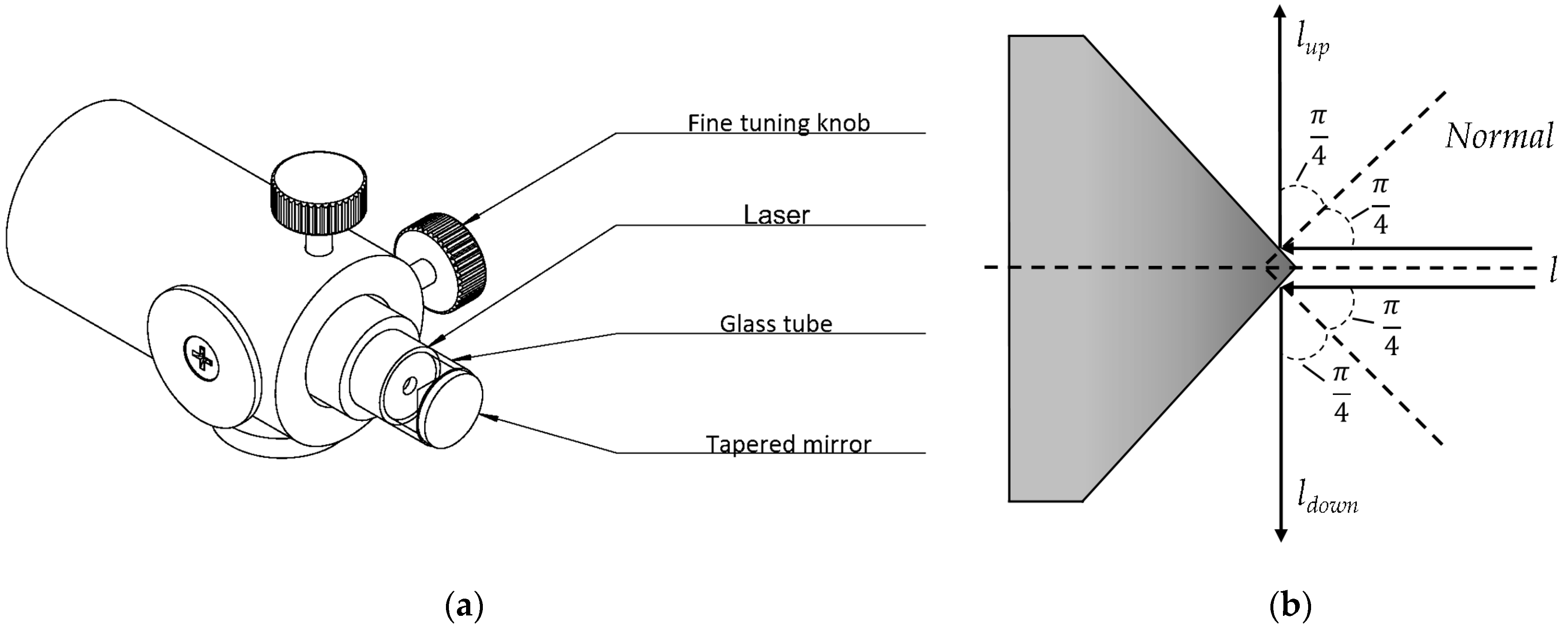

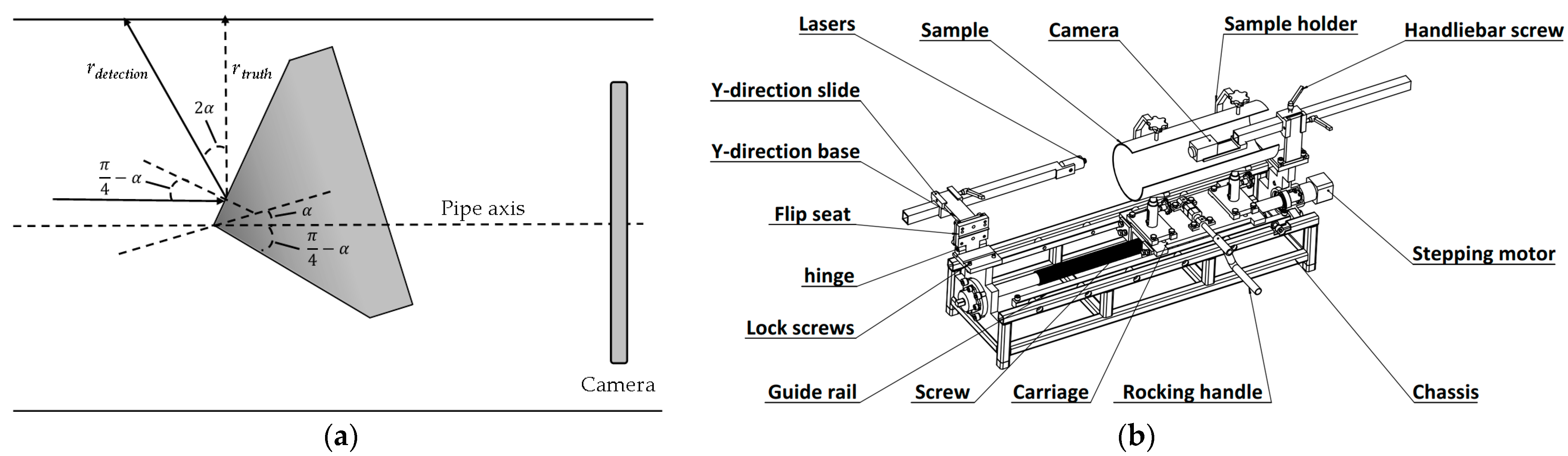

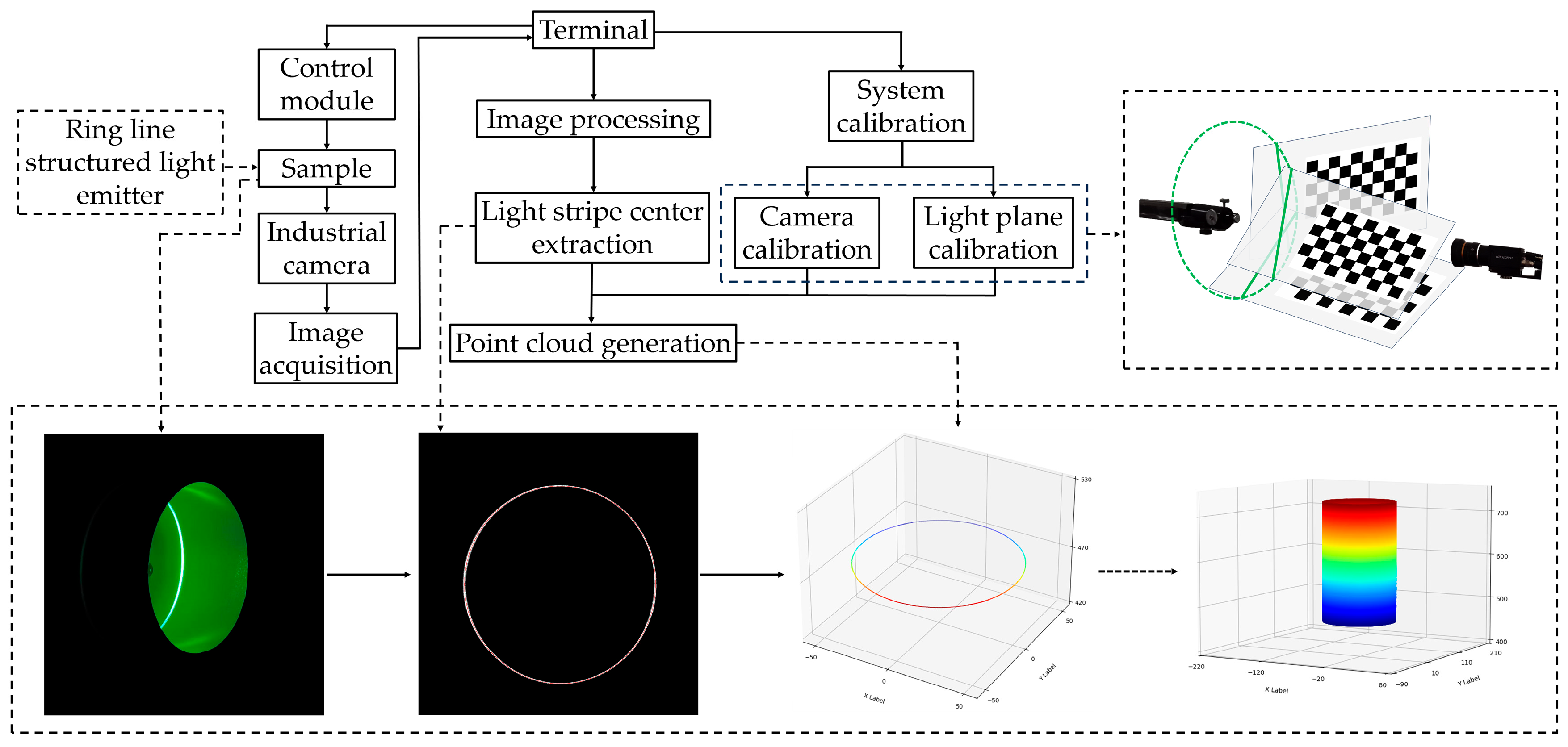

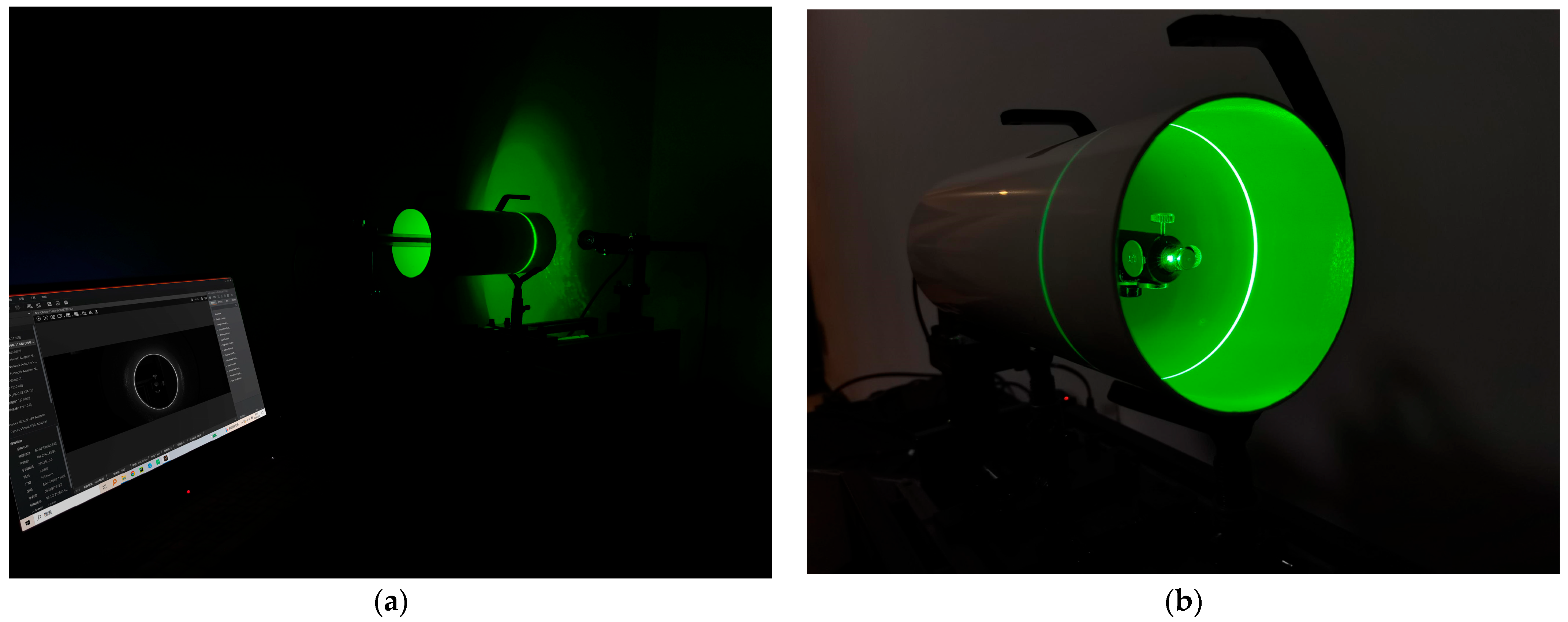

3.1. Creation and Assembly of a Monocular Structured Light 3D Scanner

3.1.1. Elements and Operations of the Scanner

3.1.2. Adjusting the Annular Structure Light Emitter

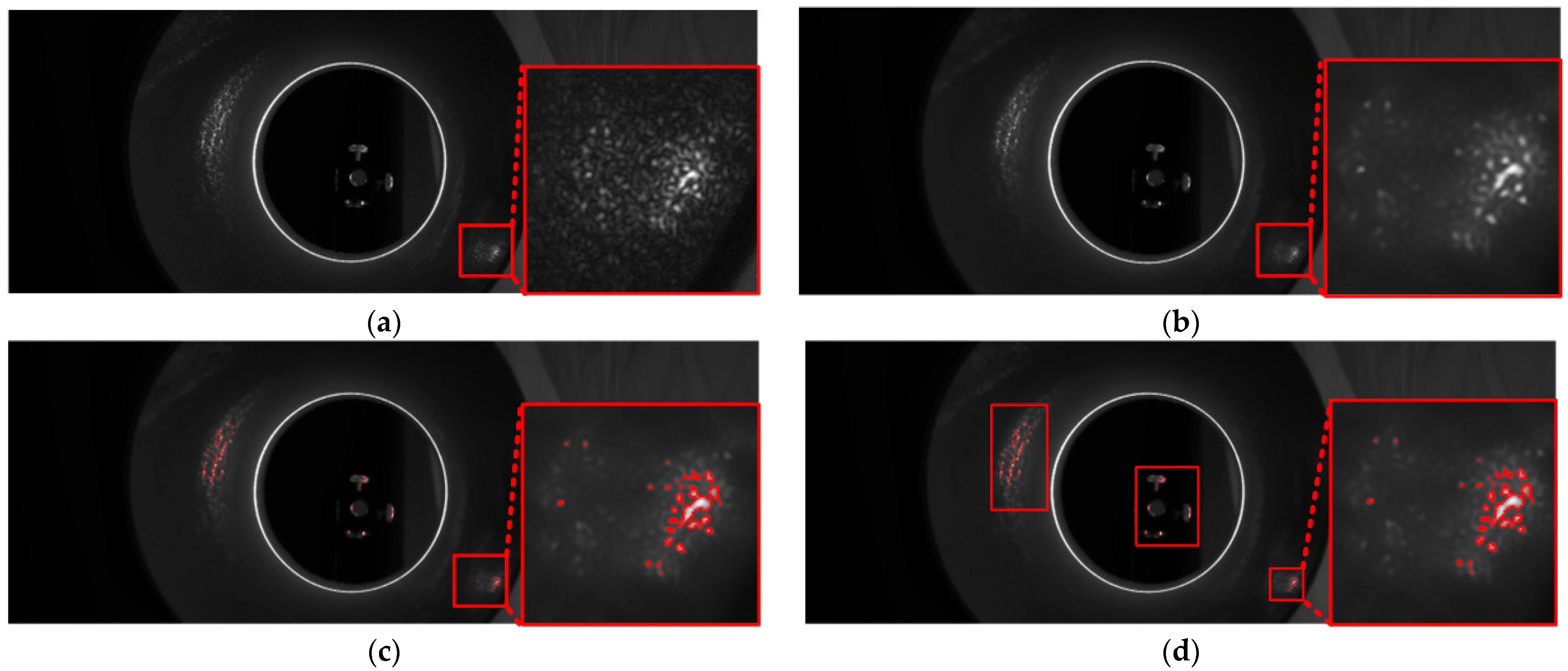

3.2. Image Processing Strategy Based on Tracking Speckles

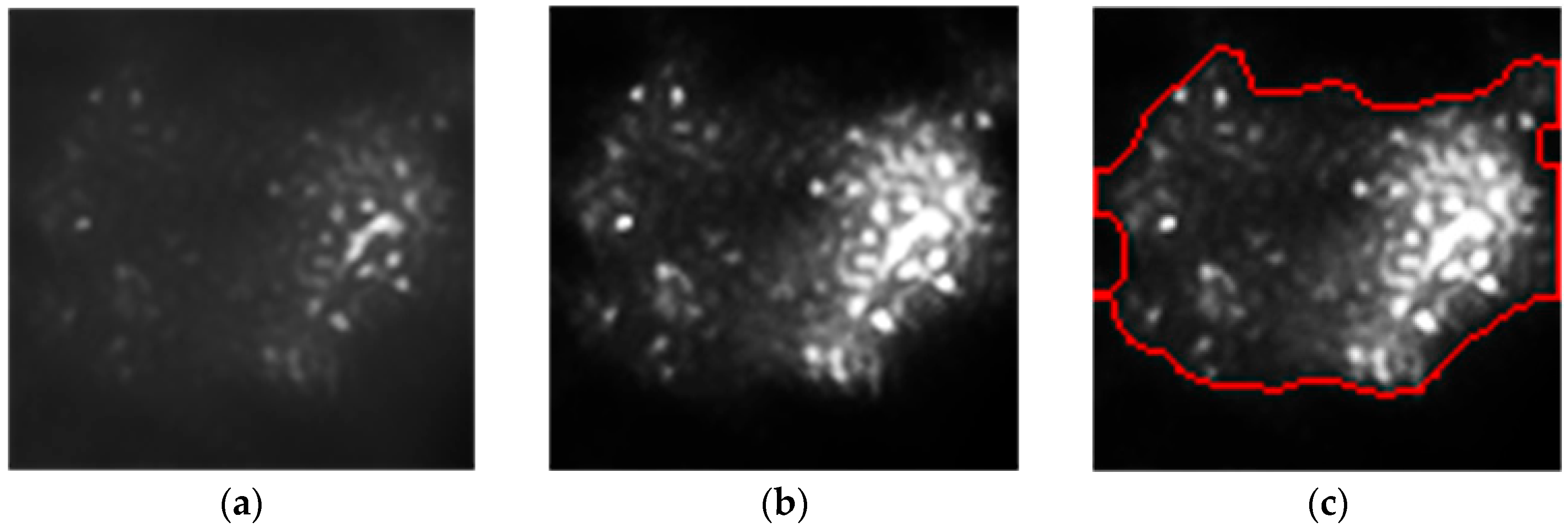

3.2.1. Extraction of Speckle Aggregation Regions

3.2.2. Accurate Extraction of Speckles

3.2.3. Binarization Processing

3.3. Improved Gray Barycenter Method

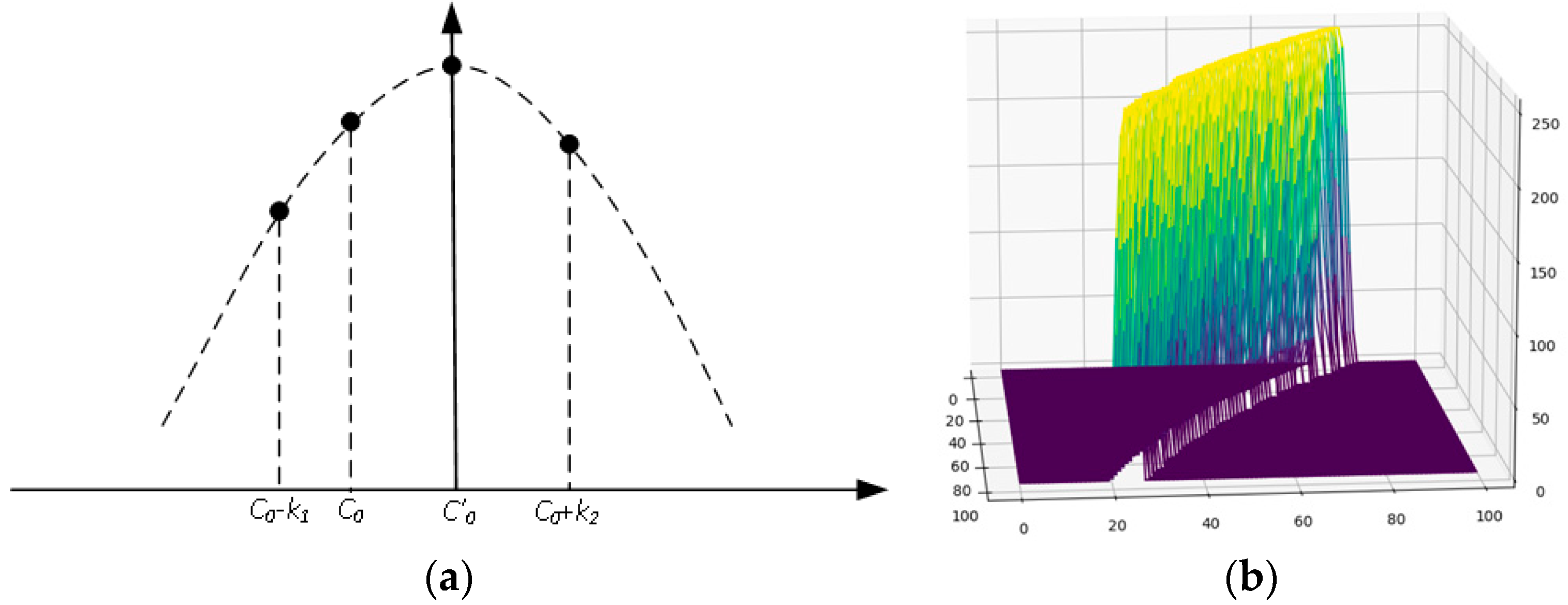

3.3.1. Optimized Gray Barycenter Method through Fitting Gaussian Curve

3.3.2. Interpolation Guided by the Tangent Direction

3.4. Point Cloud Generation

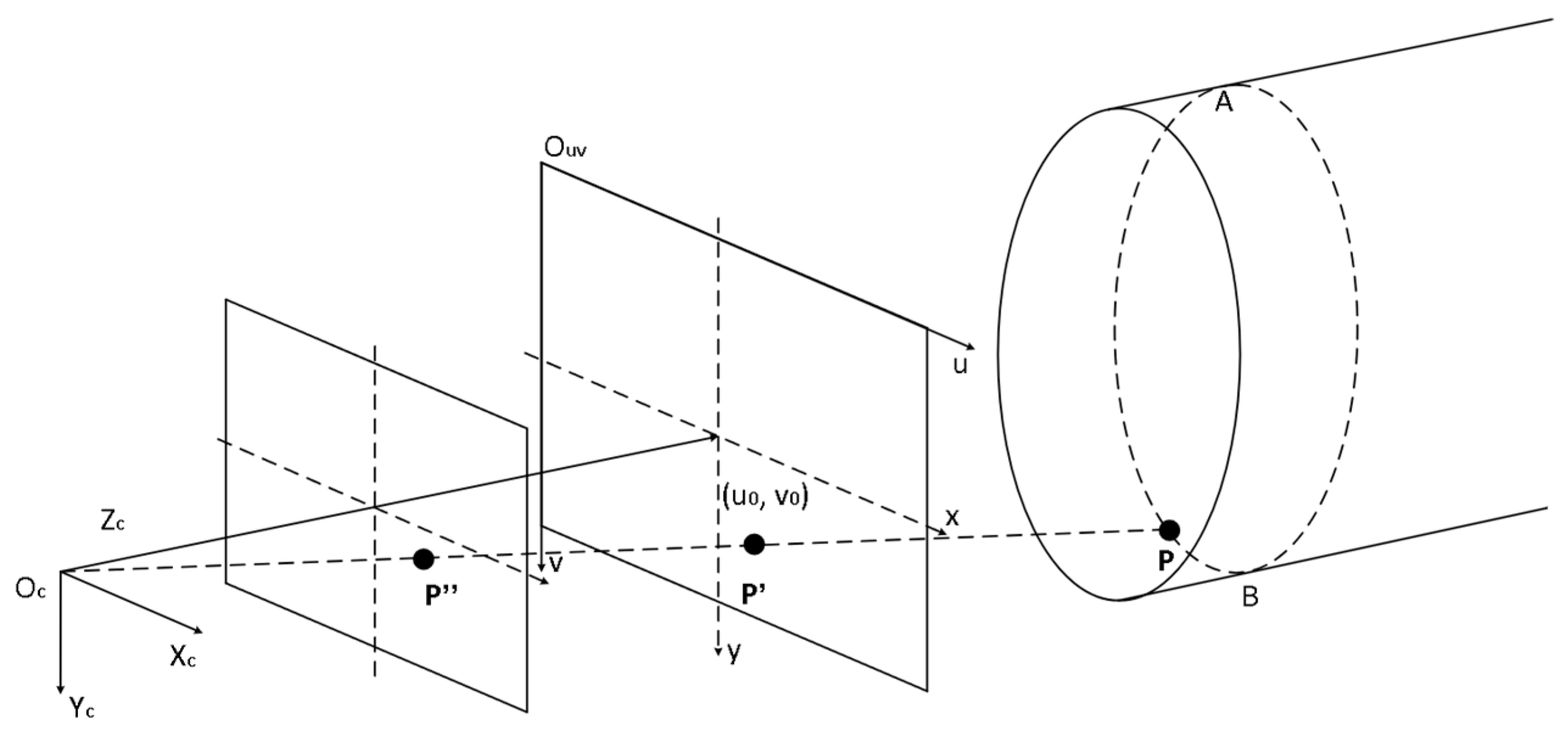

3.4.1. Monocular Line-Structured Light 3D-Reconstruction Model

3.4.2. Pipe Inner Wall Reconstruction

4. Analysis of Results

4.1. Experimental Setup

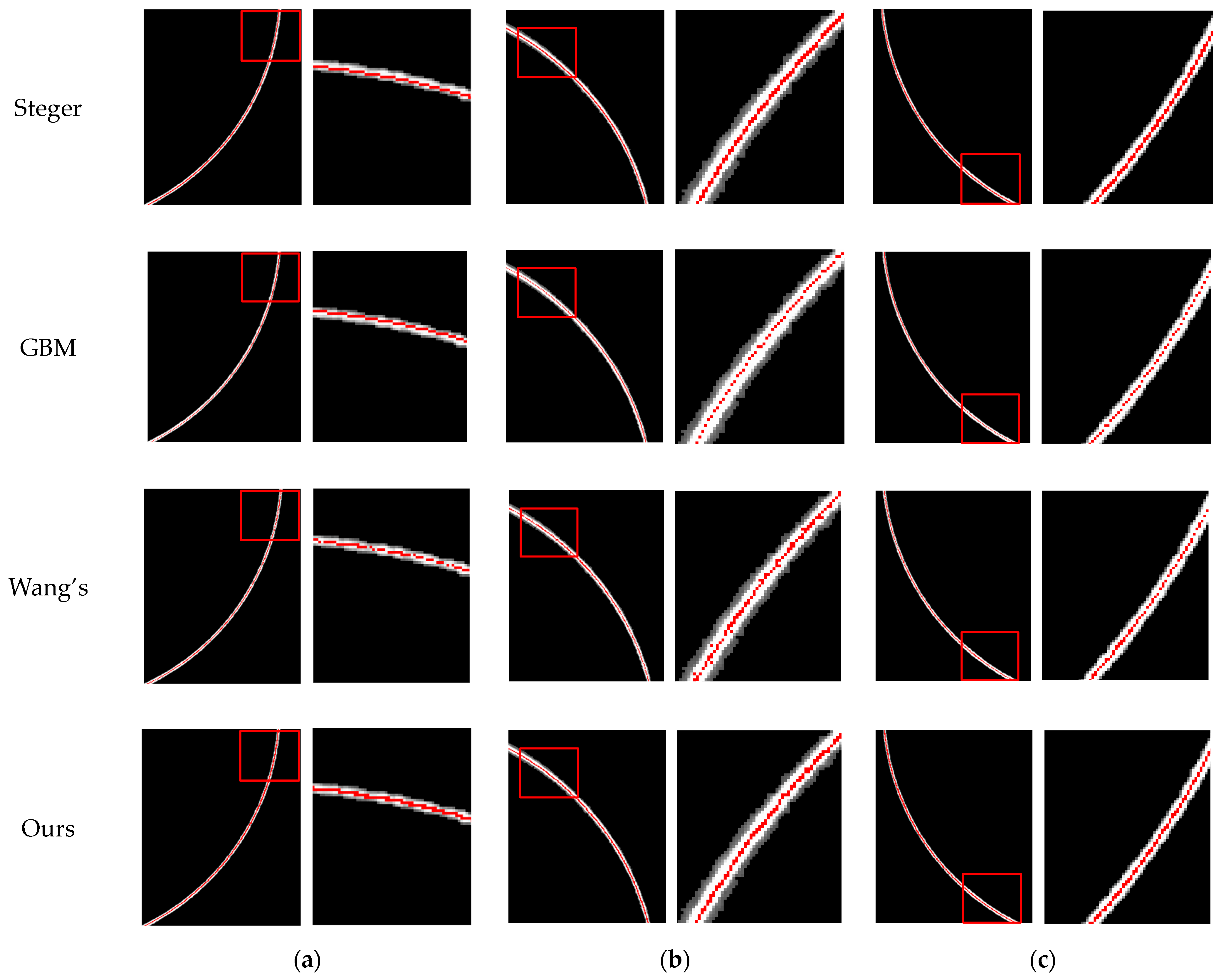

4.2. Evaluation of the Extraction of the Light Stripe Center

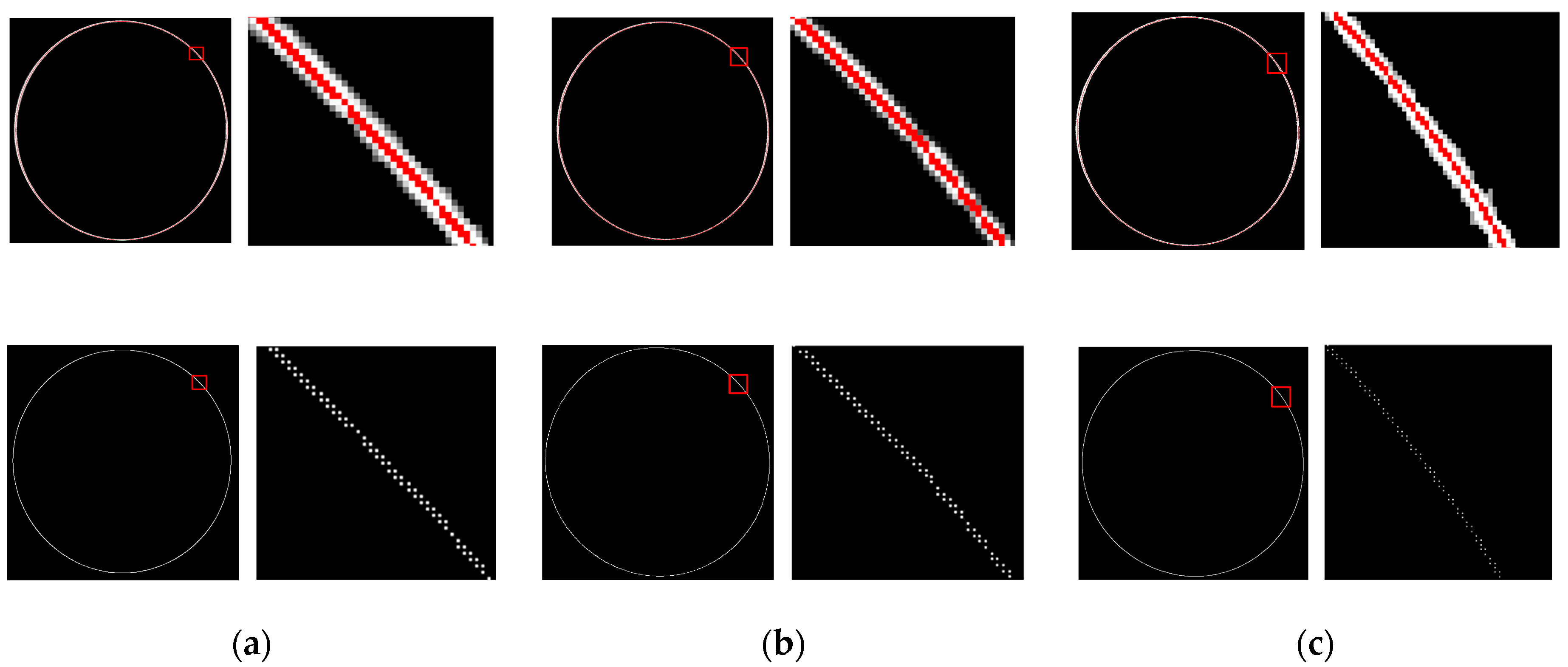

4.3. Evaluation of the Accuracy of Local Measurements

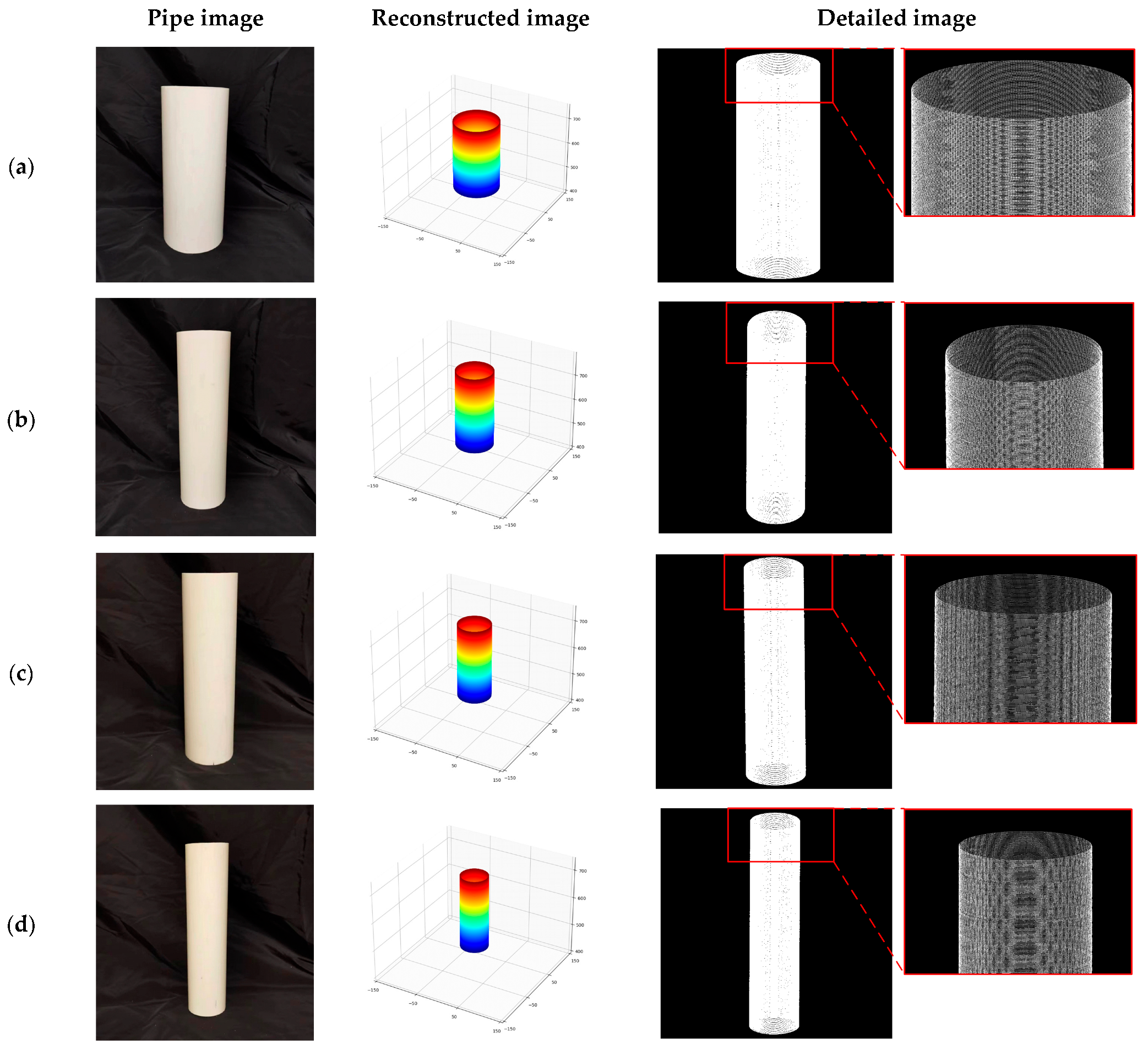

4.4. Evaluation of the Accuracy of 3D Surface Morphology Acquisition on Pipe Inner Walls

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, X.; Zheng, J.; Fu, J.; Nie, Z.; Chen, G. Optimal inspection planning of corroded pipelines using BN and GA. J. Pet. Sci. Eng. 2018, 163, 546–555.

- Piciarelli, C.; Avola, D.; Pannone, D.; Foresti, G.L. A vision-based system for internal pipeline inspection. IEEE Trans. Ind. Inf. 2018, 15, 3289–3299.

- Buschinelli, P.; Pinto, T.; Silva, F.; Santos, J.; Albertazzi, A. Laser triangulation profilometer for inner surface inspection of 100 millimeters (4″) nominal diameter. J. Phys. Conf. Ser. 2015, 648, 12010.

- Gao, Y. Mathematical Modeling of Pipeline Features for Robotic Inspection. Ph.D. Thesis, Louisiana Tech University, Ruston, LA, USA, 2012.

- Yokota, M.; Koyama, T.; Takeda, K. Digital holographic inspection system for the inner surface of a straight pipe. Opt. Lasers Eng. 2017, 97, 62–70.

- Song, S.; Ni, Y. Ultrasound imaging of pipeline crack based on composite transducer array. Chin. J. Mech. Eng. 2018, 31, 1–10.

- Heo, C.G.; Im, S.H.; Jeong, H.S.; Cho, S.H.; Park, G.S. Magnetic hysteresis analysis of a pipeline re-inspection by using Preisach model. IEEE Trans. Magn. 2020, 56, 1–4.

- Ye, Z.; Lianpo, W.; Yonggang, G.; Songlin, B.; Chao, Z.; Jiang, B.; Ni, J. Three-dimensional inner surface inspection system based on circle-structured light. J. Manuf. Sci. Eng. 2018, 140, 121007.

- Demori, M.; Ferrari, V.; Strazza, D.; Poesio, P. A capacitive sensor system for the analysis of two-phase flows of oil and conductive water. Sens. Actuators A Phys. 2010, 163, 172–179.

- Dong, Y.; Fang, C.; Zhu, L.; Yan, N.; Zhang, X. The calibration method of the circle-structured light measurement system for inner surfaces considering systematic errors. Meas. Sci. Technol. 2021, 32, 75012.

- Javaid, M.; Haleem, A.; Singh, R.P.; Suman, R. Industrial perspectives of 3D scanning: Features, roles and it’s analytical applications. Sens. Int. 2021, 2, 100114.

- Guerra, M.G.; De Chiffre, L.; Lavecchia, F.; Galantucci, L.M. Use of miniature step gauges to assess the performance of 3D optical scanners and to evaluate the accuracy of a novel additive manufacture process. Sensors 2020, 20, 738.

- Almaraz-Cabral, C.; Gonzalez-Barbosa, J.; Villa, J.; Hurtado-Ramos, J.; Ornelas-Rodriguez, F.; Córdova-Esparza, D. Fringe projection profilometry for panoramic 3D reconstruction. Opt. Lasers Eng. 2016, 78, 106–112.

- Li, Y.; Zhou, J.; Huang, F. High precision calibration of line structured light sensors based on linear transformation over triangular domain. In Proceedings of the 8th International Symposium on Advanced Optical Manufacturing and Testing Technologies: Optical Test, Measurement Technology, and Equipment, Suzhou, China, 26–29 April 2016; SPIE: Bellingham, WA, USA, 2016; Volume 9684, pp. 40–46.

- Coramik, M.; Ege, Y. Discontinuity inspection in pipelines: A comparison review. Measurement 2017, 111, 359–373.

- Sutton, M.A.; McNeill, S.R.; Helm, J.D.; Chao, Y.J. Advances in two-dimensional and three-dimensional computer vision. In Photomechanics; Springer: Berlin/Heidelberg, Germany, 2000; pp. 323–372.

- Zhang, Z.; Yuan, L. Building a 3D scanner system based on monocular vision. Appl. Opt. 2012, 51, 1638–1644.

- Haleem, A.; Javaid, M. 3D scanning applications in medical field: A literature-based review. Clin. Epidemiol. Glob. Health 2019, 7, 199–210.

- Vázquez-Arellano, M.; Griepentrog, H.W.; Reiser, D.; Paraforos, D.S. 3-D imaging systems for agricultural applications—A review. Sensors 2016, 16, 618.

- Shang, Z.; Shen, Z. Single-pass inline pipeline 3D reconstruction using depth camera array. Autom. Constr. 2022, 138, 104231.

- Bahnsen, C.H.; Johansen, A.S.; Philipsen, M.P.; Henriksen, J.W.; Nasrollahi, K.; Moeslund, T.B. 3D sensors for sewer inspection: A quantitative review and analysis. Sensors 2021, 21, 2553.

- Yang, Z.; Lu, S.; Wu, T.; Yuan, G.; Tang, Y. Detection of morphology defects in pipeline based on 3D active stereo omnidirectional vision sensor. IET Image Process. 2018, 12, 588–595.

- Forest, J.; Salvi, J.; Cabruja, E.; Pous, C. Laser stripe peak detector for 3D scanners: A FIR filter approach. In Proceedings of the 17th International Conference on Pattern Recognition, 2004, ICPR 2004, Cambridge, UK, 23–26 August 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 646–649.

- Sun, Q.; Chen, J.; Li, C. A robust method to extract a laser stripe centre based on grey level moment. Opt. Lasers Eng. 2015, 67, 122–127.

- Bazen, A.M.; Gerez, S.H. Systematic methods for the computation of the directional fields and singular points of fingerprints. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 905–919.

- Bo, J.; Xianyu, S.; Lurong, G. 3D measurement of turbine blade profile by light knife. Chin. J. Lasers A 1992, 19.

- Fan, J.; Jing, F.; Fang, Z.; Liang, Z. A simple calibration method of structured light plane parameters for welding robots. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 6127–6132.

- Wang, H.; Wang, Y.; Zhang, J.; Cao, J. Laser stripe center detection under the condition of uneven scattering metal surface for geometric measurement. IEEE Trans. Instrum. Meas. 2019, 69, 2182–2192.

- Pang, S.; Yang, H. An algorithm for extracting the center of linear structured light fringe based on directional template. In Proceedings of the 2021 4th International Conference on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Changsha, China, 26–28 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 203–207.

- Xue, B.; Chang, B.; Peng, G.; Gao, Y.; Tian, Z.; Du, D.; Wang, G. A vision based detection method for narrow butt joints and a robotic seam tracking system. Sensors 2019, 19, 1144.

- Zhang, W.; Cao, N.; Guo, H. Novel sub-pixel feature point extracting algorithm for three-dimensional measurement system with linear-structure light. In Proceedings of the 5th International Symposium on Advanced Optical Manufacturing and Testing Technologies: Optical Test and Measurement Technology and Equipment, Dalian, China, 26–29 April 2010; SPIE: Bellingham, WA, USA, 2010; Volume 7656, pp. 936–941.

- Cai, H.; Feng, Z.; Huang, Z. Centerline extraction of structured light stripe based on principal component analysis. Chin. J. Lasers 2015, 42, 10–3788.

- Liu, J.; Liu, L. Laser stripe center extraction based on Hessian matrix and regional growth. Laser Optoelectron. Prog. 2019, 56, 21203.

- Wang, J.; Wu, J.; Jiao, X.; Ding, Y. Research on the center extraction algorithm of structured light fringe based on an improved gray gravity center method. J. Intell. Syst. 2023, 32, 20220195.

- Piao, W.; Yuan, Y.; Lin, H. A digital image denoising algorithm based on Gaussian filtering and bilateral filtering. ITM Web Conf. 2018, 17, 1006.

- Zhao, Y.Q.; Wang, X.F.; Li, G.Y. Liver image segmentation based on multi-scale and multi-structure elements. J. Optoelectron. Laser 2009, 20, 563–566.

- Malladi, R.; Sethian, J.A.; Vemuri, B.C. Shape modeling with front propagation: A level set approach. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 158–175.

- Li, C.; Xu, C.; Gui, C.; Fox, M.D. Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 2010, 19, 3243–3254.

- Osher, S.; Fedkiw, R.; Piechor, K. Level set methods and dynamic implicit surfaces. Appl. Mech. Rev. 2004, 57, B15.

- Peng, D.; Merriman, B.; Osher, S.; Zhao, H.; Kang, M. A PDE-based fast local level set method. J. Comput. Phys. 1999, 155, 410–438.

- Aubert, G.; Kornprobst, P. Mathematical Problems in Image Processing: Partial Differential Equations and the Calculus of Variations; Springer: Berlin/Heidelberg, Germany, 2006.

- Fasogbon, P.; Duvieubourg, L.; Macaire, L. Fast laser stripe extraction for 3D metallic object measurement. In Proceedings of the IECON 2016—42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 24–27 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 923–927.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Huang, Z.; Zhao, S.; Qi, P.; Li, J.; Wang, H.; Li, X.; Zhu, F. A high-accuracy measurement method for shield tail clearance based on line structured light. Measurement 2023, 222, 113583.

| Type | Parameter |
|---|---|
| Internal parameter matrix | |
| Radial distortion (K1, K2, K3) | [0.1460, −0.8254, 2.7391] |
| Tangential distortion (P1, P2) | [−0.0136, −0.0082] |
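As an illustration of how the distortion coefficients in the table above are typically used, the sketch below applies the standard radial plus tangential (Brown-Conrady) lens model to normalized image coordinates and inverts it by fixed-point iteration. It assumes the coefficients follow the convention used in Zhang-style calibration toolboxes, which the table does not state explicitly. The function names `distort` and `undistort` and the sample point are hypothetical.

```python
# Coefficients copied from the table; the convention is an assumption.
K1, K2, K3 = 0.1460, -0.8254, 2.7391   # radial distortion
P1, P2 = -0.0136, -0.0082              # tangential distortion


def _terms(x, y):
    """Radial scale factor and tangential offsets at normalized point (x, y)."""
    r2 = x * x + y * y
    radial = 1.0 + K1 * r2 + K2 * r2 ** 2 + K3 * r2 ** 3
    dx = 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
    dy = P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
    return radial, dx, dy


def distort(x, y):
    """Map ideal normalized coordinates to distorted ones."""
    radial, dx, dy = _terms(x, y)
    return x * radial + dx, y * radial + dy


def undistort(x_d, y_d, iterations=20):
    """Invert `distort` by fixed-point iteration, starting from the
    distorted point itself (adequate for mild distortion)."""
    x, y = x_d, y_d
    for _ in range(iterations):
        radial, dx, dy = _terms(x, y)
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y


x_d, y_d = distort(0.1, 0.05)       # hypothetical normalized image point
x_u, y_u = undistort(x_d, y_d)      # should recover (0.1, 0.05)
print((x_d, y_d), (x_u, y_u))
```

The iteration converges quickly here because the distortion is small near the image center. Points far from the center, where the high-order radial term dominates, may need a more robust solver.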

| Method Type | 648 pixel | | 449 pixel | | 362 pixel | |
|---|---|---|---|---|---|---|
| | /pixel | Time/ms | /pixel | Time/ms | /pixel | Time/ms |
| Steger [31] | 0.40 | 171.13 | 0.60 | 74.02 | 0.64 | 50.83 |
| GBM [28] | 0.95 | 17.21 | 1.33 | 7.93 | 1.36 | 6.21 |
| Wang’s [34] | 0.46 | 41.45 | 0.68 | 20.13 | 0.76 | 14.22 |
| Ours | 0.44 | 23.62 | 0.63 | 12.35 | 0.68 | 9.96 |
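To make the timing columns of the comparison table easier to read, the short sketch below computes how many times longer each method runs than the proposed one at each stripe length. Only the Time/ms values from the table are used. The dictionary layout and the helper name `time_ratio` are hypothetical.

```python
# Time/ms values from the table, ordered as (648 px, 449 px, 362 px).
times_ms = {
    "Steger": (171.13, 74.02, 50.83),
    "GBM": (17.21, 7.93, 6.21),
    "Wang's": (41.45, 20.13, 14.22),
    "Ours": (23.62, 12.35, 9.96),
}


def time_ratio(method, baseline="Ours"):
    """Runtime of `method` divided by runtime of `baseline`, per stripe length."""
    return [m / b for m, b in zip(times_ms[method], times_ms[baseline])]


for name in ("Steger", "GBM", "Wang's"):
    ratios = ", ".join(f"{r:.2f}" for r in time_ratio(name))
    print(f"{name} / Ours: {ratios}")
```

A ratio above 1 means the method is slower than the proposed one. By these figures the Steger method takes roughly five to seven times as long, while the plain gray barycenter method (GBM) is faster but has the largest values in the /pixel columns.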

| Number | (a) | (b) | (c) |
|---|---|---|---|
| Reference/mm | 95.00 | 105.00 | 115.00 |
| Measurement/mm | 94.91 | 104.91 | 114.93 |
| Error/mm | 0.09 | 0.09 | 0.07 |

| Number | (a) | (b) | (c) | (d) |
|---|---|---|---|---|
| Reference/mm | 106.94 | 82.66 | 70.70 | 60.46 |
| Measurement/mm | 106.86 | 82.54 | 70.55 | 60.29 |
| Error/mm | 0.08 | 0.12 | 0.15 | 0.17 |

| Number | (a) | (b) | (c) | (d) |
|---|---|---|---|---|
| Reference/mm | 265.28 | 265.38 | 263.50 | 254.98 |
| Measurement/mm | 265.00 | 265.00 | 263.00 | 254.50 |
| Error/mm | 0.28 | 0.38 | 0.50 | 0.48 |
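The Error/mm rows in the three measurement tables above are the absolute differences between the reference and measured values. The sketch below recomputes them and adds two summary figures that the tables do not report directly: the mean absolute error and the largest relative error per table. The labels `first`, `second`, and `third` and the helper name `error_stats` are hypothetical, since the tables carry no captions here.

```python
# (reference, measurement) pairs in mm, copied from the three tables in order.
tables = {
    "first": ([95.00, 105.00, 115.00], [94.91, 104.91, 114.93]),
    "second": ([106.94, 82.66, 70.70, 60.46], [106.86, 82.54, 70.55, 60.29]),
    "third": ([265.28, 265.38, 263.50, 254.98], [265.00, 265.00, 263.00, 254.50]),
}


def error_stats(reference, measured):
    """Return (mean absolute error in mm, maximum relative error)."""
    errors = [abs(r - m) for r, m in zip(reference, measured)]
    mean_abs = sum(errors) / len(errors)
    max_rel = max(e / r for e, r in zip(errors, reference))
    return mean_abs, max_rel


for name, (reference, measured) in tables.items():
    mean_abs, max_rel = error_stats(reference, measured)
    print(f"{name}: mean |error| = {mean_abs:.3f} mm, max relative = {max_rel:.3%}")
```

In all three tables the measured value is smaller than the reference, so the errors share one sign. That pattern would be consistent with a small systematic offset rather than purely random error, although the tables alone cannot confirm the cause.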

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kong, L.; Ma, L.; Wang, K.; Peng, X.; Geng, N. Three-Dimensional-Scanning of Pipe Inner Walls Based on Line Laser. Sensors 2024, 24, 3554. https://doi.org/10.3390/s24113554
