Feasibility of Observing Cerebrovascular Disease Phenotypes with Smartphone Monitoring: Study Design Considerations for Real-World Studies

Abstract

1. Introduction

2. Methods

2.1. Protocol and Scientific Rationale

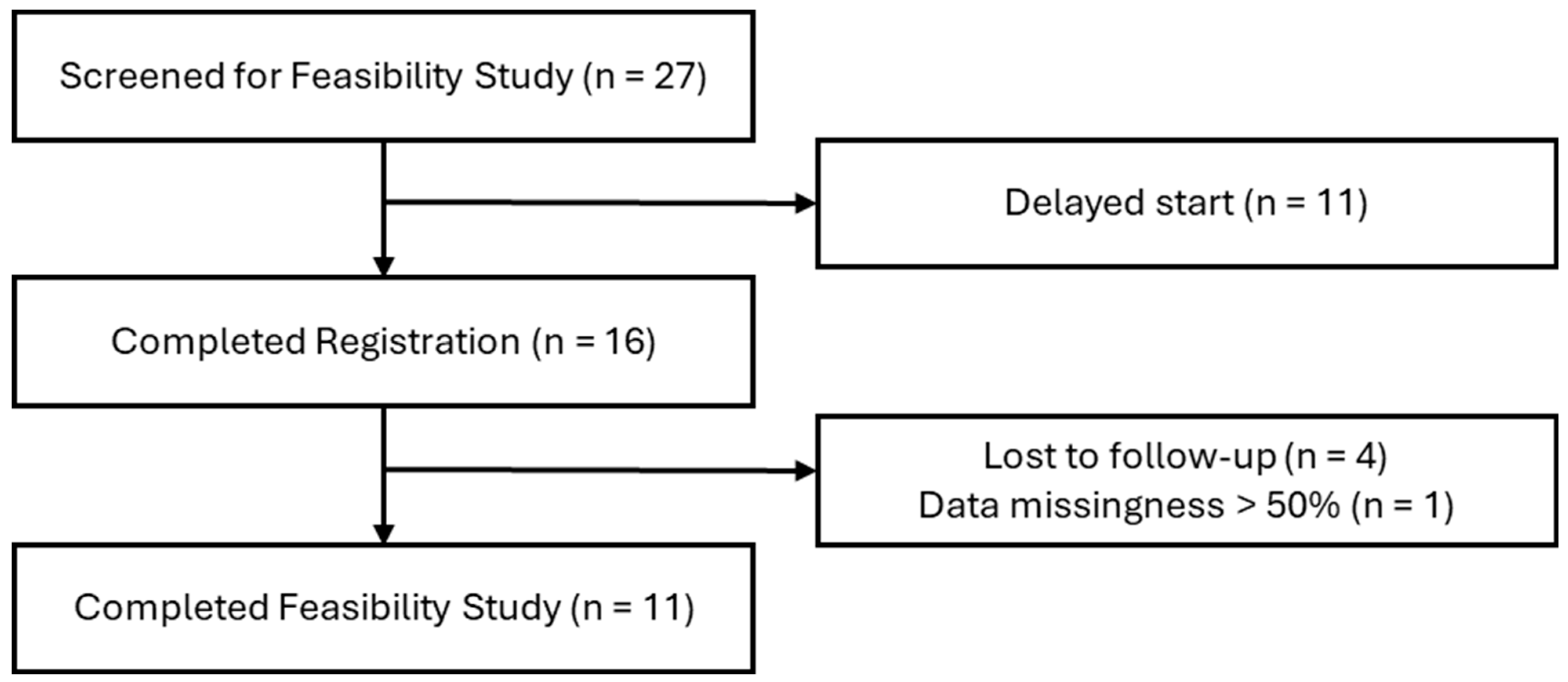

2.2. Protocol Feasibility Study Design

2.3. Sample Population

2.4. Data Collection and Analysis

3. Results

4. Conclusions and Future Work

4.1. Participant Device Ownership and Sharing

4.2. Participant Wireless Network and Technology Access Proficiency

4.3. Active Data Capture Complexity

4.4. Passive Data Collection with a Fail-Safe Strategy

4.5. Follow-up Assessment Scheduling to Accommodate Patients, Family, and Caregiver(s)

4.6. Embedded Study Designs

4.7. Mindful of Data’s Role in Multimodal Studies

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Data Feature | Collection Mode | Feasibility Assessment: Summary Metric | Future Clinical Use: Intra-Individual Metric |
|---|---|---|---|
| Mood Survey | Active | Survey completion and submission rate (%) | Survey scores will increase from controls to PSD |
| Mood Survey Response Time | Passive | Survey completion time recorded (mean, standard deviation) | Survey completion time will increase from controls to PSD |
| Activity (Accelerometer Quantified) | Passive | Average of weekly sum of accelerometer volume generated (mean, standard deviation) | Average weekly activity will decline from controls to PSD |
| Social Engagement (GPS Quantified) | Passive | Hours with GPS location data per week (%) | Average number of trips outside the home will decline from controls to PSD |
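The summary metrics in the table above can be sketched in a few lines of Python. This is a minimal illustration, not the study's analysis code: the function names are hypothetical, the completion-rate denominator is assumed to be the number of participants in the group, the standard deviation is assumed to be the sample standard deviation, and a week is taken as 168 hours.

```python
from statistics import mean, stdev

HOURS_PER_WEEK = 7 * 24  # 168 hours; assumed denominator for GPS coverage


def completion_rate(n_submitted: int, n_participants: int) -> float:
    """Percentage of participants who completed and submitted the weekly survey."""
    if n_participants <= 0:
        raise ValueError("n_participants must be positive")
    return 100.0 * n_submitted / n_participants


def mean_sd(values):
    """Return (mean, sample SD); SD is None when fewer than two values exist."""
    values = list(values)
    if not values:
        raise ValueError("at least one value is required")
    sd = stdev(values) if len(values) > 1 else None
    return mean(values), sd


def weekly_volume(daily_bytes):
    """Sum one participant's daily accelerometer data volume (bytes) over a week."""
    return sum(daily_bytes)


def gps_coverage(hours_with_data: int) -> float:
    """Percentage of the hours in one week that contain GPS location data."""
    if not 0 <= hours_with_data <= HOURS_PER_WEEK:
        raise ValueError("hours_with_data must be between 0 and 168")
    return 100.0 * hours_with_data / HOURS_PER_WEEK


if __name__ == "__main__":
    # Illustrative inputs only; these are not study data.
    print(round(completion_rate(2, 3), 1))
    print(mean_sd([40.0, 50.0, 60.0]))
    print(gps_coverage(84))
```

Group-level activity would then be `mean_sd` applied to the per-participant `weekly_volume` values for each week.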

| Characteristics | Ischemic Stroke (n = 4) | TIA (n = 12) |
|---|---|---|
| Age (years), mean (SD) | 47 (14.9) | 56 (18.1) |
| Age (years), min–max | 25–56 | 23–81 |
| Male (n) | 3 | 7 |
| Ethnicity (n) | | |
| White | 3 | 9 |
| Hispanic | 0 | 1 |
| Native American | 1 | 0 |
| Asian | 0 | 1 |
| Black | 0 | 0 |
| Unknown | 0 | 1 |
| Use of phone (n) | 4 | 12 |
| Use of e-mail (n) | 3 | 12 |
| Resides in Phoenix Metro Area (n) | 1 | 10 |
| Mood Status at Discharge (n) | | |
| Normal | 3 | 11 |
| Depressed | 0 | 0 |
| Missing | 1 | 1 |
| Cognitive Status at Discharge (n) | | |
| Normal | 3 | 8 |
| Confused | 0 | 1 |
| Missing | 1 | 3 |
| Antidepressant Use (n) | 1 | 4 |

| Data Feature | Feasibility Assessment: Summary Metric | Ischemic Stroke (n = 3) | TIA (n = 8) |
|---|---|---|---|
| Mood Survey (Active) | Survey completion and submission rate (%) | | |
| Week 1 | | 66.7 | 75.0 |
| Week 2 | | 100.0 | 75.0 |
| Week 3 | | 100.0 | 87.5 |
| Week 4 | | 66.7 | 75.0 |
| Mood Survey Response Time (Passive) | Survey completion time recorded (mean seconds, standard deviation) | | |
| Week 1 | | 182.7 (175.6) | 75.6 (51.1) |
| Week 2 | | 50.8 (27.7) | 63.9 (54.7) |
| Week 3 | | 41.3 (15.2) | 42.5 (25.7) |
| Week 4 | | 31.4 (17.5) | 72.9 (38.2) |
| Activity (Passive) | Average of weekly sum of accelerometer volume generated (mean bytes, standard deviation) | | |
| Week 1 | | 23,031,109.0 (10,838,334.6) | 30,837,116.7 (29,557,213.9) |
| Week 2 | | 19,975,074.2 (12,015,996.8) | 29,580,727.3 (21,580,446.0) |
| Week 3 | | 18,130,797.6 (7,425,125.0) | 27,469,212.8 (26,079,380.8) |
| Week 4 | | 27,868,016.3 (11,006,512.0) | 25,536,878.1 (22,581,041.5) |
| Social Engagement (Passive) | Hours with GPS location data per week (%) | | |
| Week 1 | | 81.2 | 77.9 |
| Week 2 | | 79.0 | 91.6 |
| Week 3 | | 100.0 | 81.3 |
| Week 4 | | 99.0 | 85.6 |
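As a reading aid, the weekly mood survey completion rates above can be converted back to approximate participant counts. The sketch below assumes one survey per participant per week and that each rate is the share of the group (n = 3 or n = 8) that submitted it; the table does not state the denominator, so the resulting counts are an interpretation rather than reported data. The helper name `implied_count` is hypothetical.

```python
def implied_count(rate_percent: float, n: int) -> int:
    """Nearest whole number of participants consistent with a reported percentage."""
    return round(rate_percent / 100.0 * n)


# Weekly completion rates (%) as reported in the table, Weeks 1 to 4.
reported = {
    "Ischemic Stroke": (3, [66.7, 100.0, 100.0, 66.7]),
    "TIA": (8, [75.0, 75.0, 87.5, 75.0]),
}

# Assumed interpretation: rate = participants submitting / group size.
counts = {
    group: [implied_count(rate, n) for rate in rates]
    for group, (n, rates) in reported.items()
}

if __name__ == "__main__":
    for group, weekly in counts.items():
        print(group, weekly)
```

Under that assumption, every reported percentage corresponds to a whole number of participants, which suggests the rates are per-participant rather than per-item.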

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zawada, S.J.; Ganjizadeh, A.; Hagen, C.E.; Demaerschalk, B.M.; Erickson, B.J. Feasibility of Observing Cerebrovascular Disease Phenotypes with Smartphone Monitoring: Study Design Considerations for Real-World Studies. Sensors 2024, 24, 3595. https://doi.org/10.3390/s24113595
