A Method for Predicting Inertial Navigation System Positioning Errors Using a Back Propagation Neural Network Based on a Particle Swarm Optimization Algorithm

Abstract

1. Introduction

2. Error Equations of Strapdown Inertial Navigation [20]

2.1. Attitude Error Equation

2.2. Velocity Error Equation

2.3. Position Error Equation

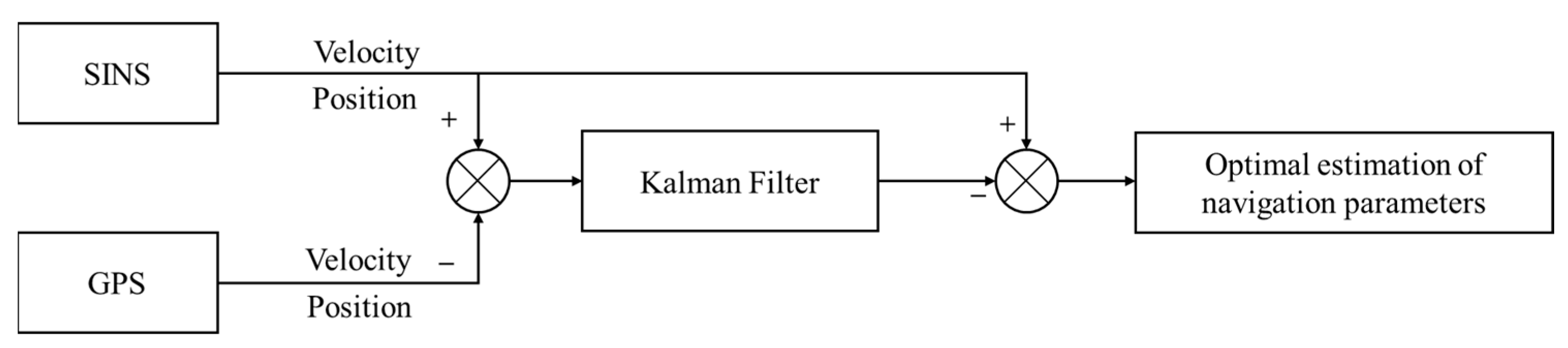

2.4. Kalman Filtering

3. Optimization Method for the Backpropagation Neural Network

3.1. Principle of the Backpropagation Neural Network

3.2. The Backpropagation Neural Network Based on Particle Swarm Optimization Algorithm

3.2.1. The Principle of the Particle Swarm Optimization Algorithm

| Algorithm 1. Steps of the PSO algorithm |
|---|
| Step 1: Initialize the position and velocity vectors of the particle swarm. |
| Step 2: Calculate the fitness value of each particle's position from the objective function, and evaluate the quality of each candidate solution by its fitness value. |
| Step 3: From the fitness values, identify each particle's individual best position and the swarm's global best position. |
| Step 4: Update the velocity and position of every particle using Equations (11) and (12). |
| Step 5: Check whether the termination criteria are met. If they are, output the global optimum and stop; otherwise, return to Step 2 and continue iterating. |
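Algorithm 1 can be illustrated with a minimal inertia-weight PSO. The sketch assumes that the update formulas (11) and (12) take the common form

$$v_{id}^{k+1} = \omega v_{id}^{k} + c_1 r_1 \left(p_{id}^{k} - x_{id}^{k}\right) + c_2 r_2 \left(p_{gd}^{k} - x_{id}^{k}\right), \qquad x_{id}^{k+1} = x_{id}^{k} + v_{id}^{k+1},$$

where $\omega$ is the inertia weight, $c_1, c_2$ are the acceleration factors, $r_1, r_2$ are uniform random numbers in $[0, 1]$, $p_{id}$ is the individual best and $p_{gd}$ the global best. The function name `pso_minimize`, its default hyperparameters, the clipping to search bounds, and the fixed-iteration stopping rule are illustrative assumptions, not values from the paper.

```python
import numpy as np


def pso_minimize(objective, dim, n_particles=30, n_iters=100,
                 w=0.7, c1=1.5, c2=1.5, bounds=(-5.0, 5.0), seed=0):
    """Minimize `objective` with an inertia-weight particle swarm.

    The default swarm size, iteration count, inertia weight `w` and
    acceleration factors `c1`, `c2` are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    # Step 1: random initial positions and small random velocities.
    x = rng.uniform(lo, hi, size=(n_particles, dim))
    v = rng.uniform(-1.0, 1.0, size=(n_particles, dim)) * 0.1 * (hi - lo)
    # Steps 2-3: fitness of each particle (lower is better), then the
    # individual bests (pbest) and the global best (gbest).
    fit = np.array([objective(p) for p in x])
    pbest, pbest_fit = x.copy(), fit.copy()
    gbest = pbest[np.argmin(pbest_fit)].copy()
    # Step 5 (simplified): stop after a fixed number of iterations.
    for _ in range(n_iters):
        # Step 4: velocity and position updates, kept inside the bounds.
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lo, hi)
        # Back to Steps 2-3 with the new positions.
        fit = np.array([objective(p) for p in x])
        improved = fit < pbest_fit
        pbest[improved] = x[improved]
        pbest_fit[improved] = fit[improved]
        gbest = pbest[np.argmin(pbest_fit)].copy()
    return gbest, float(pbest_fit.min())


if __name__ == "__main__":
    # Toy objective with its minimum at (1, 1, 1); not a navigation model.
    best, value = pso_minimize(lambda p: float(np.sum((p - 1.0) ** 2)), dim=3)
    print(best, value)
```

Because each particle's best only changes when its fitness improves, the returned global best never gets worse from one iteration to the next.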

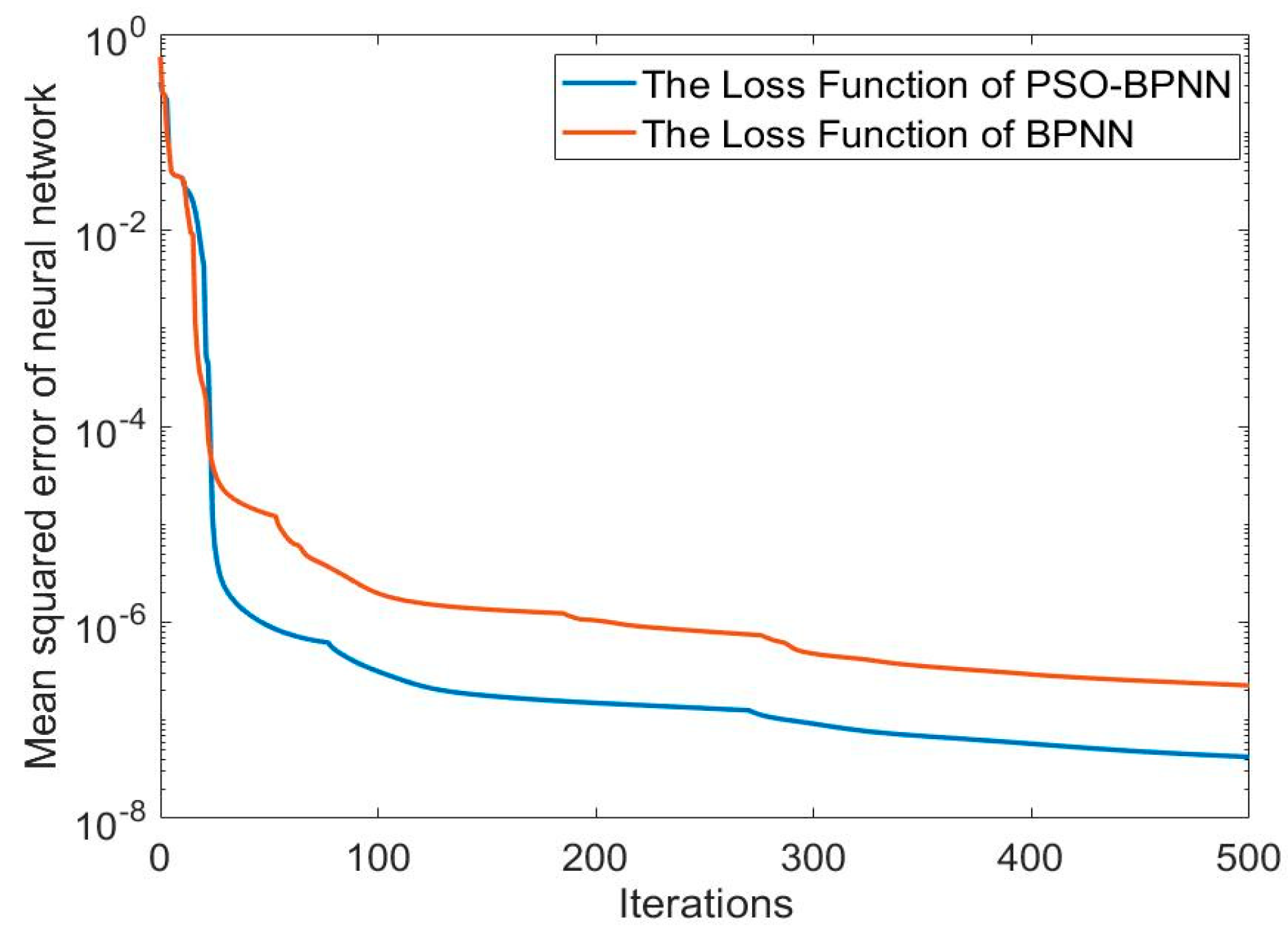

3.2.2. PSO-Based Optimization Algorithm for Backpropagation Neural Network

| Algorithm 2. Steps of the PSO-BP algorithm |
|---|
| Step 1: Encode the initial weights of the BPNN as particles in the PSO framework, and initialize the particles' velocities and positions randomly. |
| Step 2: Set the numbers of training samples, test samples, and hidden-layer nodes, together with the swarm size, iteration count, inertia weight, and acceleration factors of the network. |
| Step 3: Calculate the fitness value of each particle. |
| Step 4: When the fitness-update conditions are satisfied, update the position and velocity of each particle according to (11) and (12), and record each particle's best position. |
| Step 5: Record the global best position. |
| Step 6: Check whether the termination criteria are satisfied. If so, output the global optimum, obtain the optimal weights, and stop; otherwise, reinitialize the particles' velocities and positions and repeat the process. |
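The PSO-BP idea in Algorithm 2 can be sketched as follows, under several assumptions not stated in the text: a single-hidden-layer `tanh` network with one output, particle fitness taken as the training mean squared error, weights clipped to $[-1, 1]$ during the swarm search, and full-batch gradient descent as the subsequent BP training. Unlike Step 6 as written, this sketch keeps the swarm state between iterations rather than reinitializing it. The helper names (`unpack`, `pso_init`, `backprop`) and the sine-wave toy data are hypothetical.

```python
import numpy as np


def unpack(theta, n_in, n_hidden):
    """Split one flat particle vector into BPNN weights and biases."""
    sizes = [n_in * n_hidden, n_hidden, n_hidden, 1]
    W1, b1, W2, b2 = np.split(theta, np.cumsum(sizes)[:-1])
    return W1.reshape(n_in, n_hidden), b1, W2.reshape(n_hidden, 1), b2


def mse(theta, X, y, n_hidden):
    """Training MSE of the network encoded by `theta` (used as PSO fitness)."""
    W1, b1, W2, b2 = unpack(theta, X.shape[1], n_hidden)
    pred = np.tanh(X @ W1 + b1) @ W2 + b2
    return float(np.mean((pred - y) ** 2))


def pso_init(X, y, n_hidden, n_particles=30, n_iters=60,
             w=0.7, c1=1.5, c2=1.5, seed=0):
    """Search for initial BPNN weights with PSO (particles = weight vectors)."""
    rng = np.random.default_rng(seed)
    dim = X.shape[1] * n_hidden + 2 * n_hidden + 1
    x = rng.uniform(-1.0, 1.0, (n_particles, dim))
    v = np.zeros_like(x)
    fit = np.array([mse(p, X, y, n_hidden) for p in x])
    pbest, pbest_fit = x.copy(), fit.copy()
    gbest = pbest[np.argmin(pbest_fit)].copy()
    for _ in range(n_iters):
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, -1.0, 1.0)  # keep weights in the initial range
        fit = np.array([mse(p, X, y, n_hidden) for p in x])
        better = fit < pbest_fit
        pbest[better] = x[better]
        pbest_fit[better] = fit[better]
        gbest = pbest[np.argmin(pbest_fit)].copy()
    return gbest


def backprop(theta, X, y, n_hidden, lr=0.01, epochs=2000):
    """Refine the PSO weights with full-batch gradient descent on the MSE."""
    W1, b1, W2, b2 = (a.copy() for a in unpack(theta, X.shape[1], n_hidden))
    n = X.shape[0]
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)                 # forward: hidden layer
        g_out = 2.0 * (h @ W2 + b2 - y) / n      # dMSE / d(prediction)
        g_h = (g_out @ W2.T) * (1.0 - h ** 2)    # backward through tanh
        W2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)
    return np.concatenate([W1.ravel(), b1, W2.ravel(), b2])


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.uniform(-2.0, 2.0, (200, 1))
    y = np.sin(X)  # toy regression target, not ship data
    theta0 = pso_init(X, y, n_hidden=8)
    theta = backprop(theta0, X, y, n_hidden=8)
    print("PSO only:", mse(theta0, X, y, 8), "PSO + BP:", mse(theta, X, y, 8))
```

The design choice here is that PSO only supplies the starting weights; gradient-based BP training then does the fine adjustment, which is the division of labor the PSO-BP approach relies on.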

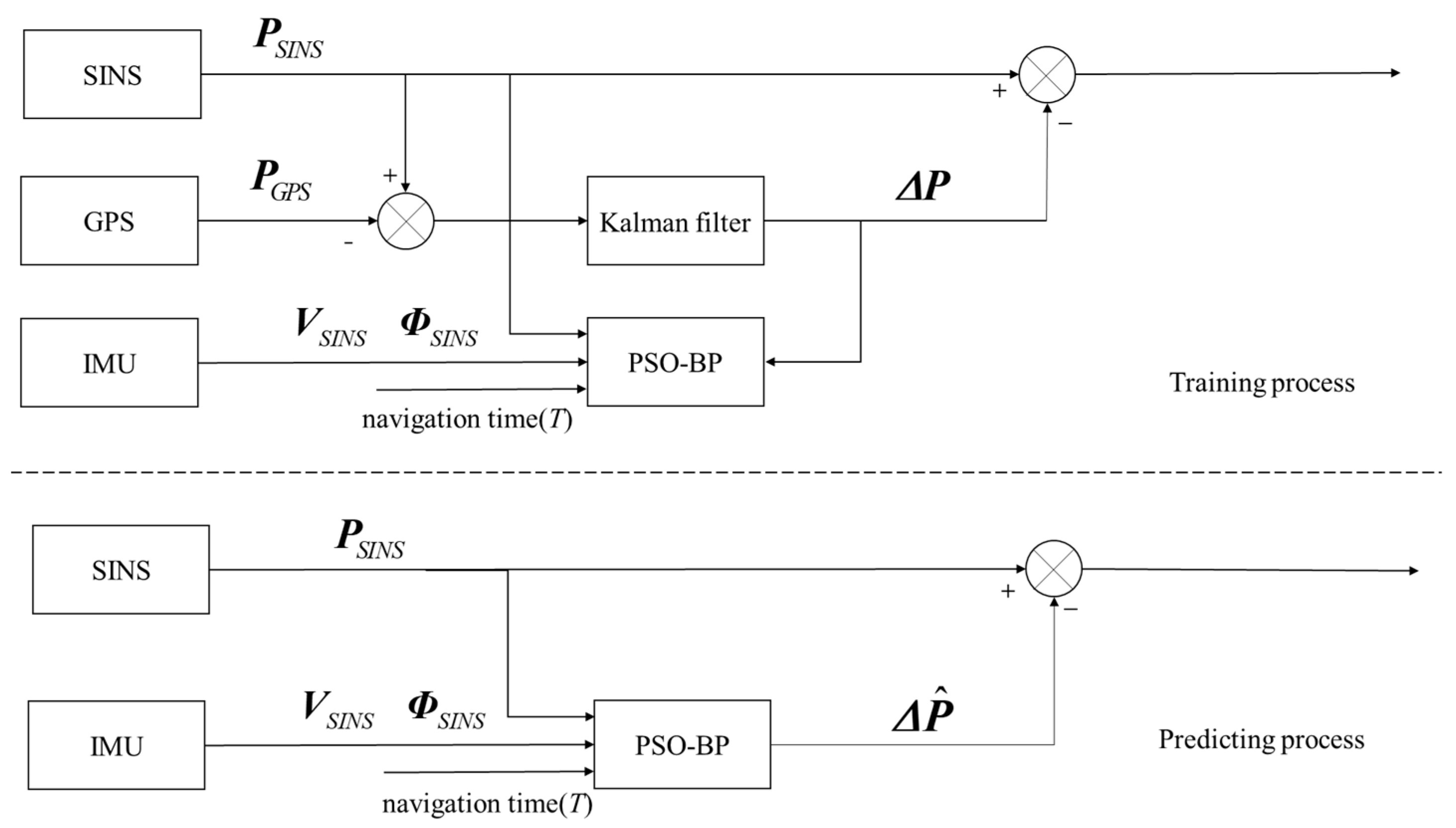

3.3. Error Prediction and Compensation Methods for Integrated Navigation Systems

4. Experimental Validation on an Actual Ship and Result Analysis

4.1. The Design of the Actual Ship Experiment

4.2. Results and Analysis of the Actual Ship Experiment

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, Q.; Ben, Y.; Yu, F.; Tan, J. Transversal strapdown INS based on reference ellipsoid for vehicle in the polar region. IEEE Trans. Veh. Technol. 2016, 65, 7791–7795.

- Jiang, C.; Chen, S.; Chen, Y.; Zhang, B.; Feng, Z.; Zhou, H.; Bo, Y. A MEMS IMU de-noising method using long short term memory recurrent neural networks (LSTM-RNN). Sensors 2018, 18, 3470.

- Babu, R.; Wang, J. Comparative study of interpolation techniques for ultra-tight integration of GPS/INS/PL sensors. J. Glob. Position. Syst. 2005, 4, 192–200.

- Chen, J.; Yang, Z.; Ye, S.; Lu, Q. Design of Strapdown Inertial Navigation System Based on MEMS. Acad. J. Comput. Inf. Sci. 2020, 3, 110–121.

- Shaukat, N.; Ali, A.; Javed Iqbal, M.; Moinuddin, M.; Otero, P. Multi-sensor fusion for underwater vehicle localization by augmentation of RBF neural network and error-state Kalman filter. Sensors 2021, 21, 1149.

- Jaradat, M.A.; Abdel-Hafez, M.F. Non-linear autoregressive delay-dependent INS/GPS navigation system using neural networks. IEEE Sens. J. 2017, 17, 1105–1115.

- Yang, Y.; Gao, S.; Zhong, Y.; Gu, C. Model Predictive Forward Neural Network Algorithm and Its Application in Integrated Navigation. J. Inert. Technol. China 2014, 4, 222–226.

- Cao, J.; Fang, J.; Wei, S.; Bai, H. Research on the Error Feedback Correction Method of MEMS-SINS Based on Neural Network Prediction During GPS Signal Loss. J. Astronaut. 2009, 30, 2231–2236+2264.

- Zhang, Y.; Wang, L. A hybrid intelligent algorithm DGP-MLP for GNSS/INS integration during GNSS outages. Navigation 2019, 72, 375–388.

- Wang, Q.; Li, H. Research on Indoor Positioning Technology Based on Machine Learning and Inertial Navigation. Electron. Meas. Technol. 2016, 6.

- Zhang, G.P. Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 2003, 50, 159–175.

- Wang, X.; Zhao, G.; Gao, Q. Application of BP Neural Network in the Transfer Alignment of Inertial Navigation System. J. Nav. Aeronaut. Eng. Coll. 2008, 5, 489–492.

- Wang, G.; Xu, X.; Yao, Y.; Tong, J. A Novel BPNN-Based Method to Overcome the GPS Outages for INS/GPS System. IEEE Access 2019, 7, 82134–82143.

- Yan, F.; Zhao, W.; Wang, X.; Wan, K.; Wang, Y. Research on master-slave filtering of Celestial Navigation System/Inertial Navigation System. J. Phys. Conf. Ser. 2021, 1732, 012189.

- Ma, S.; Liu, S.; Meng, X. Optimized BP Neural Network Algorithm for Predicting Ship Trajectory; IEEE: New York, NY, USA, 2020.

- Fan, Y.; Wang, Z.; Lin, Y.; Tan, H. Enhance the Performance of Navigation: A Two-Stage Machine Learning Approach. In Proceedings of the 2020 6th International Conference on Big Data Computing and Communications (BIGCOM), Deqing, China, 24–25 July 2020; pp. 212–219.

- Liu, N.; Zhao, H.; Su, Z.; Qiao, L.; Dong, Y. Integrated navigation on vehicle based on low-cost SINS/GNSS using Deep Learning. Wirel. Pers. Commun. 2021, 126, 1–22.

- Yu, X. Research on Gyroscope Random Error Modeling and Filtering Technology Based on LSTM Neural Network. Ph.D. Thesis, Huazhong University of Science and Technology, Wuhan, China, 2022.

- Memarzadeh, M.; Matthews, B.; Avrekh, I. Unsupervised anomaly detection in flight data using convolutional variational auto-encoder. Aerospace 2020, 7, 115.

- Chen, Z. Principles of Strapdown Inertial Navigation Systems; Beijing Aerospace Press: Beijing, China, 1986.

- Wang, X.; Shi, F. MATLAB Neural Network: Analysis of 43 Cases; Beihang University Press: Beijing, China, 2013.

| Error | AM | RMSE |
|---|---|---|
| Longitude error (m) | 4070.6 | 30.459 |
| Latitude error (m) | 11,950 | 21.540 |

| Error index | BPNN AM | BPNN RMSE | BPNN MAX | BPNN MIN | PSO-BPNN AM | PSO-BPNN RMSE | PSO-BPNN MAX | PSO-BPNN MIN |
|---|---|---|---|---|---|---|---|---|
| Longitude error (m) | 7.9319 | 9.7788 | 45.03 | −42.06 | 3.3339 | 4.3494 | 23.29 | −25.35 |
| Latitude error (m) | 11.4662 | 14.6223 | 70.84 | −77.69 | 3.4728 | 4.3309 | 16.85 | −16.16 |
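The error indices in the BPNN / PSO-BPNN comparison can be computed from a positioning-error series roughly as follows. The text does not define AM, so this sketch assumes it denotes the mean absolute error; `error_indices` and `rmse_reduction` are hypothetical helper names. The last lines apply the relative-reduction formula to the RMSE values reported in that comparison table.

```python
import numpy as np


def error_indices(err):
    """AM, RMSE, MAX and MIN of a positioning-error series in metres.

    Assumption: AM is read here as the mean absolute error.
    """
    err = np.asarray(err, dtype=float)
    return {
        "AM": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAX": float(np.max(err)),
        "MIN": float(np.min(err)),
    }


def rmse_reduction(rmse_before, rmse_after):
    """Relative RMSE reduction as a fraction of the baseline RMSE."""
    return (rmse_before - rmse_after) / rmse_before


# RMSE values copied from the BPNN / PSO-BPNN comparison table.
lon = rmse_reduction(9.7788, 4.3494)
lat = rmse_reduction(14.6223, 4.3309)
print(f"longitude RMSE reduced by {lon:.1%}, latitude by {lat:.1%}")
```

With the tabulated values, replacing the BPNN with the PSO-BPNN reduces the longitude RMSE by about 55.5% and the latitude RMSE by about 70.4%.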

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Jiao, R.; Wei, T.; Guo, Z.; Ben, Y. A Method for Predicting Inertial Navigation System Positioning Errors Using a Back Propagation Neural Network Based on a Particle Swarm Optimization Algorithm. Sensors 2024, 24, 3722. https://doi.org/10.3390/s24123722
