Abstract

The estimation of spatiotemporal data from limited sensor measurements is required across many scientific disciplines. In this paper, we consider the use of mobile sensors for estimating spatiotemporal data via Kalman filtering. The sensor selection problem, which aims to optimize the placement of sensors, leverages innovations in greedy algorithms and low-rank subspace projection to provide model-free, data-driven estimates. Alternatively, Kalman filter estimation balances model-based information and sparsely observed measurements to produce better estimates with limited sensors. Utilizing historical measurements is especially important with mobile sensors. We show that mobile sensing along dynamic trajectories can achieve performance equivalent to that of a larger number of stationary sensors, with performance gains related to three distinct timescales: (i) the timescale of the spatiotemporal dynamics, (ii) the velocity of the sensors, and (iii) the rate of sampling. Taken together, these timescales strongly influence how well-conditioned the estimation task is. We draw connections between the Kalman filter performance and the observability of the state space model and propose a greedy path planning algorithm based on minimizing the condition number of the observability matrix. This approach has better scalability and computational efficiency compared to previous works. Through a series of examples of increasing complexity, we show that mobile sensing along our paths improves Kalman filter performance in terms of better limiting estimation and faster convergence. Moreover, it is particularly effective for spatiotemporal data that contain spatially localized structures, whose features are captured along dynamic trajectories.

1. Introduction and Related Work

Many scientific disciplines require the estimation of spatiotemporal data from limited, point-source sensor measurements for the purpose of characterizing, forecasting, reconstructing, and/or controlling a given system. Traditionally, a limited number of stationary sensors are placed in the system of interest, but mobile sensors and autonomous vehicles have also gained interest recently. In this paper, we consider the task of estimating a spatiotemporal system given measurements from a limited number of mobile sensors, by utilizing Kalman filtering and a low-dimensional representation of the system. Under this setup, our goal is to efficiently plan sensor trajectories so that estimation can be achieved with very few sensors.

The goal of optimal sensor placement, also known as sensor selection, is to find the optimal locations in the state space to place only a few sensors so as to achieve the best performance in one or more of the tasks listed above. The combinatorial optimization problem of sensor selection is NP-hard, so most algorithms leverage greedy searches and low-rank subspace representations of the system to efficiently find a near-optimal solution []. Greedy methods [,] are computationally efficient and include QR decomposition [] with column pivoting [,,], (Q)DEIM [,], and GappyPOD [,,], all of which take advantage of the submodularity, or near-submodularity, of criteria such as the trace, spectral norm, condition number, determinant, and/or its low-rank projection basis. Greedy searches can also be modified to include cost constraints in the sensor placement problem []. Other objectives, such as the reconstruction error [] and the observability matrix [], can also be used for sensor selection. Statistical methods using Gaussian process models [,,] are also effective in leveraging entropy or mutual information as the main objective for optimization. More recently, shallow decoder networks have been trained within the context of greedy algorithms [,].

In contrast to instantaneous estimation from sensor measurements, Kalman filtering provides a recursive method that estimates based on collective information from prior knowledge of the dynamical model and a time-history of the sensor measurements [,]. In the sensor placement problem, the number of sensors is often required to be at least the latent rank of the system in order to capture enough information for reconstruction []. However, with Kalman filter estimation, fewer sensors can be used to achieve the same performance, provided that the system is observable with these sensor measurements []. Commonly, the Kalman filter sensor selection (KFSS) problem studies an objective based on the a posteriori error covariance, which is a metric in Kalman filtering for how much the estimates deviate from the truth. The metric can be considered within an observation period [], but it is more commonly taken to the limit at the infinite-time horizon when the full convergence of Kalman filtering is reached. Although optimization over the trace of the error covariance matrix, which represents the mean squared error (MSE), does not have a constant-factor polynomial-time approximation [,,], greedy methods are still near-optimal [].

The diverse mathematical methods highlighted above for optimal sensor placement typically focus on stationary point sensors. However, in many applications, sensors can be mobile, in which case they are allowed to move freely in the measurement space while collecting measurements along the way. The problem concerning the design of trajectories or paths of sensors is called the sensor path planning problem. In the field of engineering and robotics, the path planning problem has long been considered for the purposes of navigation as well as estimation in a dynamical environment [,,,,]. The task of tracking and estimating a flow field has often been tackled by constructing a simplified, restricted problem that focuses on a network of sensors with a simple formation for efficient parameterization and optimization [,,,,]. Different control laws for the path of the sensors are considered for different tasks, including a simple circular or elliptical control [], gradient climbing control [], control along level curves [], or control based on smoothed particle hydrodynamics []. Lynch et al. [] proposed a decentralized mobile network to collectively estimate environmental functions through communication networks, while the sensors move according to a gradient control law that maximizes information. Shriwastav et al. [] built a trajectory by connecting a cost-efficient path among optimal sensor placement locations under proper orthogonal decomposition (POD)-based reconstruction. In many of these works, the emphasis is on modeling and control of the sensor positions. Sensor scheduling [,] is a similar problem that concerns a schedule of densely placed sensors. Unlike the path planning problem, the sensors do not move in the scheduling problem, although it can still be formulated and solved as a special case of the path planning problem.

While many consider the sensor path in an infinite-time horizon, theoretical studies [,,] have shown that the optimal infinite-time schedule is independent of the initial error covariance and can be approximated arbitrarily closely by a periodic schedule. This provides a mathematical foundation for studying problems that consider the planning of a periodic sensor trajectory for spatiotemporal estimation with Kalman filtering. Lan and Schwager [,] approached the periodic path planning problem with a rapidly exploring random cycles (RRC) method that constructs and evaluates cycles found by randomly exploring the state space using a tree structure; Chen et al. [] utilized deep reinforcement learning instead as a learnable deterministic method for finding cycles. The problem extends to multiple sensors that do not have a set network formation, each following its individual path. These works are most closely related to the problem considered here. They approach the combinatorial optimization with a randomized or active search method, first searching for possible cycles, then evaluating their costs. By assumption, the sensors move to a different location at each discrete time step based on the trajectory found in this way.

In this paper, we consider the use of mobile sensors to improve the performance of estimating spatiotemporal data with Kalman filtering, where we focus on planning a periodic sensor trajectory that optimizes estimation. We assume that the sensor moves freely within a certain radius determined by a speed limit. We consider the condition number of the observability matrix of the model as a metric for the Kalman filter estimation. The study of observability is not new and has been discussed previously in different sensor problems [,,,,]. In particular, Manohar et al. [] presented a balanced model reduction for sensor and actuator selection through observability and controllability in a linear quadratic Gaussian (LQG) controller setting. We build on these ideas, developing an optimization for the path planning of mobile sensors with the objective of dynamic Kalman filter estimation. We identify three distinct timescales related to Kalman filter design and estimation with mobile sensors: (i) the timescale of the spatiotemporal dynamics, (ii) the velocity of the sensors, and (iii) the rate of sampling. We propose an approach for greedy selection based on the empirical observability matrix for path planning, and leverage a low-rank representation of the system to promote computational efficiency. Figure 1 shows how our overall strategy leverages low-rank approximations in order to determine trajectories in the spatiotemporal fields of interest. Compared with previous works, our approach restricts neither the formation of the sensor network nor the shape of the trajectory, and it builds the path by leveraging a low-rank system representation and greedy optimization. Our approach provides a scalable and efficient periodic path planning procedure for multi-sensor and high-dimensional problems. We conduct a series of experiments on synthetic data, the Kuramoto–Sivashinsky system, and sea surface temperature data to show that mobile sensing improves Kalman filter performance in terms of better limiting estimation and faster convergence.

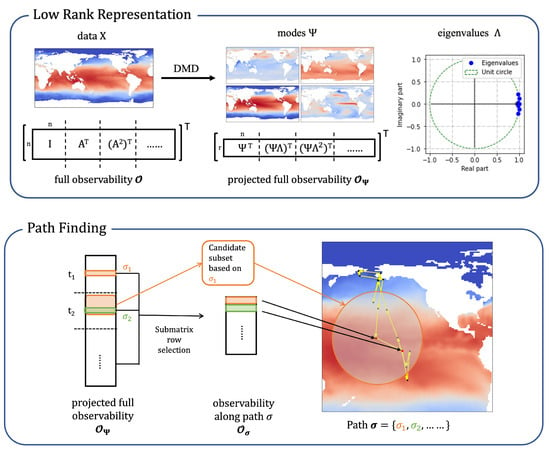

Figure 1.

Overview of the proposed approach to sensor path planning for dynamic estimation. The panels are divided into two main steps for estimating spatiotemporal data under a Kalman filter setting. The top panel shows the construction of a low-rank representation of the data as the prior model for Kalman filter through dynamic mode decomposition (DMD). The DMD modes and eigenvalues make up a linear dynamical model in a reduced dimension and a projection back to the original dimension. The dimension of the observability matrix is also reduced by the low-rank representation for efficient computation. The bottom panel illustrates the greedy path finding algorithm that optimizes the observability matrix along the path and improves Kalman filter estimation performance. It leverages a greedy row selection on the projected full observability matrix. Conceptually, at each time step, based on the historical selection of sensor locations, the sensors are led to the next valid locations within a velocity constraint.

2. Problem Formulation and Background Methods

The mathematical formulation of the sensor selection problem considers a discrete-time linear system model:

$$x_{t+1} = A x_t + w_t, \tag{1}$$

where $x_t \in \mathbb{R}^n$, $A \in \mathbb{R}^{n \times n}$, and $w_t \in \mathbb{R}^n$ denotes the system disturbance following a Gaussian distribution with zero mean and a covariance matrix $Q$. The measurements from k sensors are of the form:

$$y_t = C_t x_t + v_t, \tag{2}$$

where $y_t \in \mathbb{R}^k$, $C_t \in \mathbb{R}^{k \times n}$, and $v_t \in \mathbb{R}^k$ refers to the measurement noise following a Gaussian distribution with zero mean and a covariance matrix $R$. Directly measuring in the state space, we write the matrix $C_t$ as a selection matrix whose rows are standard unit vectors. We further assume that the measurement noise is independent and identically distributed across sensors with variance $\sigma^2$, so the covariance matrix for $v_t$ is $R = \sigma^2 I$. In a time-invariant system with a stationary sensing scenario at fixed locations, $C_t = C$.

We denote a sensor trajectory of k sensors. is a set containing sensor locations at time t, which determine the selection matrix responsible for collecting measurements along the trajectory. In general, can extend to an infinite-time horizon. Zhang et al. [,] show that any infinite-time trajectory can be approximated by a periodic trajectory. Therefore, we focus on a periodic trajectory of fixed cycle rather than a trajectory over infinite-time. In practice, periodic trajectories also make sense since many systems contain some periodic or quasi-periodic characteristics. Furthermore, it is often favorable to plan a trajectory such that the sensor can return to a specified location periodically for maintenance and sensor recharging. Then, we write to be a periodic trajectory of length l, so that , , and so on.

In Section 2.1, we discuss the use of low-rank representation of the system for sparse sampling. We then introduce observability of the system in Section 2.2 and relate it to Kalman filter estimation performance in Section 2.3. Finally, we give attention to three key timescales in the Kalman filter model design in Section 2.4.

2.1. Reduced-Order Model and Sparse Sampling

In order to promote efficient computation and better model representation for sparse sampling, we consider a reduced-order model (ROM). Specifically, we consider a system with a low-rank linear representation:

$$z_{t+1} = \Lambda z_t + w_t, \qquad x_t = \Phi z_t, \qquad y_t = C_t x_t + v_t, \tag{3}$$

where $z_t \in \mathbb{R}^r$ ($r \ll n$) is the internal low-rank dynamics state, $\Lambda \in \mathbb{R}^{r \times r}$ is the low-rank dynamical system, and $\Phi \in \mathbb{R}^{n \times r}$ is the linear projection basis. Measurements are collected in the original high-dimensional state space.

One can define a projection basis to be a universal basis for compressed sensing, or a tailored POD basis for a data-driven approach []. However, such a basis does not necessarily project to a proper low-rank dynamical system. To find a low-rank representation, supposing that the dynamics are known, we can take a spectral decomposition of the dynamics matrix and truncate the eigenvalues and eigenvectors to a low-rank representation. Alternatively, a data-driven approach is to find a close estimate of the model from the data by using dynamic mode decomposition (DMD) and its many variants [,,,,], which can be useful in sparse sensing []. DMD modes constitute the linear projection from high-dimensional data to the low-rank representation. The DMD eigenvalues form a diagonal dynamics matrix for the low-rank system.
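As a minimal sketch of the data-driven route, the following Python snippet fits a standard (exact) DMD model to a snapshot matrix and returns the eigenvalues and modes. The function name `fit_dmd`, the toy data, and the chosen rank are illustrative assumptions rather than the setup used in our experiments, and the DMD variants cited above may differ in detail.

```python
import numpy as np

def fit_dmd(X, rank):
    """Exact DMD of a snapshot matrix X (n_states x n_snapshots), truncated to `rank`."""
    X1, X2 = X[:, :-1], X[:, 1:]                      # time-shifted snapshot pairs
    U, s, Vh = np.linalg.svd(X1, full_matrices=False)
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank]
    X2_V_Sinv = X2 @ Vh.conj().T / s                  # X2 V S^{-1} (columns scaled by 1/s)
    A_tilde = U.conj().T @ X2_V_Sinv                  # reduced linear operator (rank x rank)
    eigvals, W = np.linalg.eig(A_tilde)
    modes = X2_V_Sinv @ W                             # exact DMD modes (n_states x rank)
    return eigvals, modes

def rot(rho, theta):
    """2 x 2 damped rotation with eigenvalues rho * exp(+-i * theta)."""
    return rho * np.array([[np.cos(theta), -np.sin(theta)],
                           [np.sin(theta),  np.cos(theta)]])

# Toy data (assumed): two damped oscillations embedded in 50 dimensions.
rng = np.random.default_rng(0)
true_eigs = np.array([0.95 * np.exp(0.3j), 0.95 * np.exp(-0.3j),
                      0.90 * np.exp(0.7j), 0.90 * np.exp(-0.7j)])
A_low = np.zeros((4, 4))
A_low[:2, :2], A_low[2:, 2:] = rot(0.95, 0.3), rot(0.90, 0.7)
basis = rng.standard_normal((50, 4))
z = rng.standard_normal(4)
snapshots = []
for _ in range(60):
    snapshots.append(basis @ z)
    z = A_low @ z
X = np.array(snapshots).T                             # 50 x 60 snapshot matrix

eigvals, modes = fit_dmd(X, rank=4)
```

On this noise-free toy data the recovered eigenvalues should match the ones used to generate it; with real data, the rank and any regularization would need to be chosen by the user.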

ROMs are commonly utilized in the stationary sensor placement problem [,,]. Assuming no disturbance and noise, the measurements can be expressed as $y = C \Phi z$, where $C$ is the selection matrix, $\Phi$ is the projection basis, and $z$ is the low-rank state. Then, we can obtain the state estimate through a simple linear reconstruction via the Moore–Penrose pseudoinverse, $\hat{x} = \Phi (C \Phi)^{\dagger} y$. It is clear that the reconstruction depends on the conditioning of the matrix $C \Phi$. Given a tailored basis, Q-DEIM [] uses QR factorization with column pivoting (QRcp) to greedily find near-optimal selections. At each step, QRcp selects a new pivot column with the largest norm and removes the orthogonal projections onto the pivot column from the remaining columns. Controlling the condition number by maximizing the matrix volume, QRcp enforces a diagonal dominance structure through column pivoting and expands the submatrix volume. In a more recent work, GappyPOD+E [] extends the Q-DEIM method to an “oversampling” case where the sample/selection size is larger than the basis rank to improve stability. Based on the theory of random sampling in GappyPOD [], it is a deterministic method that utilizes a lower bound for the smallest eigenvalue of the submatrix to continue sensor selection beyond the model rank.
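The pivoting rule and the pseudoinverse reconstruction described above can be sketched in a few lines of NumPy. This is an illustrative re-implementation of the greedy pivot-and-deflate idea under the assumption of a real-valued basis, not the Q-DEIM code of the cited works; the names `qr_pivot_select` and `Phi`, and the random orthonormal basis, are hypothetical stand-ins.

```python
import numpy as np

def qr_pivot_select(Phi, k):
    """Greedy column pivoting on Phi^T: return k row indices of Phi (k <= rank)."""
    M = Phi.T.astype(float).copy()        # each column of M is one candidate location
    chosen = []
    for _ in range(k):
        norms = np.linalg.norm(M, axis=0)
        j = int(np.argmax(norms))         # pivot: remaining column with the largest norm
        chosen.append(j)
        q = M[:, j] / norms[j]
        M -= np.outer(q, q @ M)           # remove projections onto the pivot direction
    return np.array(chosen)

rng = np.random.default_rng(0)
n, r = 200, 5
Phi, _ = np.linalg.qr(rng.standard_normal((n, r)))   # stand-in orthonormal basis (assumed)

sensors = qr_pivot_select(Phi, r)

# Noise-free reconstruction from r point measurements via the pseudoinverse
z_true = rng.standard_normal(r)
x_true = Phi @ z_true
y = x_true[sensors]                                   # y = C Phi z
x_hat = Phi @ (np.linalg.pinv(Phi[sensors]) @ y)

cond_selected = np.linalg.cond(Phi[sensors])          # conditioning of C Phi
```

Comparing `cond_selected` with the condition number obtained from randomly chosen rows is a quick way to see why the pivoting rule matters, although the outcome depends on the basis.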

2.2. Observability

Observability is concerned with the possibility of finding the states of the system from the observations. A time-varying system of the form $x_{t+1} = A_t x_t$, $y_t = C_t x_t$, or a pair $(A_t, C_t)$, is observable at time t if the system state $x_t$ can be determined from the observations in $[t, t_1]$ for some $t_1 > t$ []. The system is said to be observable if this is true for all time. Observability of a system is examined through the observability Gramian. In our discrete-time system with dynamics matrix $A$ and selection matrices $C_t$, it is equivalent to study the observability matrix:

$$\mathcal{O} = \begin{bmatrix} C_1 \\ C_2 A \\ \vdots \\ C_l A^{l-1} \end{bmatrix}.$$

The system is observable if and only if the observability matrix has full (column) rank. When all states are measured, $C_t = I$, the full observability matrix is:

$$\mathcal{O}_{\mathrm{full}} = \begin{bmatrix} I \\ A \\ \vdots \\ A^{l-1} \end{bmatrix}.$$

In the reduced-order model representation, with projection basis $\Phi$ and low-rank dynamics $\Lambda$, the projected full observability matrix is:

$$\mathcal{O}_{\Phi} = \begin{bmatrix} \Phi \\ \Phi \Lambda \\ \vdots \\ \Phi \Lambda^{l-1} \end{bmatrix}.$$

In a time-invariant system where the selection matrix is fixed in time, multiple sensors or a long time horizon may be needed to achieve full rank of the observability matrix. For example, for a fully measured system, the observability matrix is trivially full rank and the system states can be determined immediately at each time step. However, for sparse sensing on a state space of large dimension, observability of the system is harder to achieve. Mobile sensing opens up possibilities to achieve better observability with limited sensors.
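To make the contrast between stationary and mobile sensing concrete, the sketch below stacks the blocks of an observability matrix for one period of selection matrices and compares a parked sensor with a moving one. The toy system (four decoupled states), the indexing convention (first block multiplied by the identity), and the helper names `observability_matrix` and `selection` are assumptions made for illustration.

```python
import numpy as np

def observability_matrix(A, C_list):
    """Stack C_1, C_2 A, ..., C_l A^(l-1) for one period of selection matrices."""
    blocks, A_pow = [], np.eye(A.shape[0])
    for C in C_list:
        blocks.append(C @ A_pow)
        A_pow = A @ A_pow
    return np.vstack(blocks)

def selection(locations, n):
    """Selection matrix whose rows are unit vectors at the measured locations."""
    return np.eye(n)[list(locations)]

n = 4
A = np.diag([0.95, 0.90, 0.85, 0.80])             # four decoupled local states (toy example)

fixed = [selection([0], n) for _ in range(n)]     # one sensor parked at location 0
moving = [selection([t], n) for t in range(n)]    # one sensor visiting every location in turn

O_fixed = observability_matrix(A, fixed)
O_moving = observability_matrix(A, moving)

rank_fixed = np.linalg.matrix_rank(O_fixed)       # only the first state is ever seen
rank_moving = np.linalg.matrix_rank(O_moving)     # every state is seen once per period
cond_moving = np.linalg.cond(O_moving)
```

In this deliberately extreme example the parked sensor never observes three of the four states, so its observability matrix has rank 1, whereas the moving sensor reaches full rank within a single period.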

A fully observable system is necessary for an accurate estimation using sparse measurements. In particular, it allows Kalman filter estimation to converge to steady-state values on an infinite-time horizon. We discuss in more detail the connection between observability and Kalman filter estimation in the following section.

2.3. Kalman Filter

We use Kalman filtering for spatiotemporal estimation on a linear model. Under the assumption of Gaussian noise, it is known to be the best linear estimator for minimizing the mean squared error []. Kalman filter algorithms combine the information from the prior knowledge of the system and the observed measurements over time to find an optimal estimate of the system. Let $P_t$ denote the error covariance matrix at time t in the Kalman filter estimation. By definition, its trace is the expected squared estimation error at time t. The error covariance follows a recurrence relation:

$$P_{t+1} = A P_t A^\top - A P_t C_t^\top \left( C_t P_t C_t^\top + R \right)^{-1} C_t P_t A^\top + Q,$$

where $A$ is the dynamics matrix, $C_t$ is the selection matrix at time t, and $Q$ and $R$ are the disturbance and measurement noise covariances.

In the time-invariant case, when $C_t = C$, the limiting error covariance $P$ satisfies $P = A P A^\top - A P C^\top ( C P C^\top + R )^{-1} C P A^\top + Q$, which is known as the discrete algebraic Riccati equation (DARE). When the system is observable, the error covariance is guaranteed to converge to a limit or a limit cycle in a periodic schedule [].
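One simple way to approximate the limiting error covariance numerically is to iterate the covariance recurrence until it settles, which also handles periodic schedules. The sketch below assumes the one-step-ahead (a priori) form of the recurrence and a toy system of four decoupled states; the function names, the noise levels, and the choice to average the trace over the last period are illustrative assumptions. A dedicated DARE solver would be preferable for time-invariant problems.

```python
import numpy as np

def riccati_step(P, A, C, Q, R):
    """One step of the a priori error-covariance recurrence."""
    S = C @ P @ C.T + R                          # innovation covariance
    K = A @ P @ C.T @ np.linalg.inv(S)           # predictor-form Kalman gain
    return A @ P @ A.T - K @ C @ P @ A.T + Q

def limiting_mean_trace(A, C_cycle, Q, R, n_cycles=500):
    """Iterate over a periodic schedule and average trace(P) over the last period."""
    P = np.eye(A.shape[0])
    for _ in range(n_cycles):
        traces = []
        for C in C_cycle:
            P = riccati_step(P, A, C, Q, R)
            traces.append(np.trace(P))
    return float(np.mean(traces))

n = 4
A = np.diag([0.95, 0.90, 0.85, 0.80])            # toy decoupled dynamics (assumed)
Q, R = np.eye(n), 0.1 * np.eye(1)                # assumed noise covariances

fixed = [np.eye(n)[[0]]]                         # one sensor parked at location 0
moving = [np.eye(n)[[t]] for t in range(n)]      # one sensor cycling through all locations

mse_fixed = limiting_mean_trace(A, fixed, Q, R)
mse_moving = limiting_mean_trace(A, moving, Q, R)

# Scalar sanity check: A = C = Q = R = 1 gives P = P / (P + 1) + 1, i.e. the golden ratio
golden = limiting_mean_trace(np.eye(1), [np.eye(1)], np.eye(1), np.eye(1))
```

In this toy setting the moving sensor gives a lower limiting error than the parked one because three of the four states are otherwise never corrected by a measurement.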

The limiting error covariance is a common metric to evaluate a Kalman filter model. However, finding this limit by solving the DARE is difficult and computationally expensive since it does not have a closed-form solution. Therefore, knowing that observability is a necessary condition for Kalman filter estimation performance, we further show how the conditioning of the observability matrix drives better estimation. We first relate the limiting expected squared error to the conditioning of in a time-invariant model with full-rank measurements. We then show that any model with sparse measurements or periodic trajectory can be reformulated at a larger time step to a time-invariant representation with full-rank measurements. Furthermore, the reformulated selection matrix is the same as the observability matrix in the original form.

The expected squared error is represented as the trace of the error covariance matrix, whose limit is the solution of a DARE. Since the DARE does not have a closed-form solution, we consider an upper and a lower bound for the trace of the solution. While various works have derived different bounds on the DARE solution [,], our analysis leverages the following results that isolate the selection matrix to draw connections between the error covariance and the selection matrix:

Theorem 1.

Consider the DARE with dimension n, assuming that , . We then have bounds:

- [] , where ;

- [] , where .

represents the i-th largest eigenvalue of .

We can easily show that the lower bound is monotonically decreasing with , and the upper bound is monotonically decreasing with given that . In the special case , , , is trivially satisfied. The condition is usually satisfied as well for a stable system in general when the disturbance covariance does not have a heavily dominant eigenvalue.

Since we consider the model with independent and identical measurement noise, , so , where is the i-th largest singular value of C. Therefore, in a time-invariant model where is full rank, we can minimize the condition number in order to achieve lower squared error.

However, in most scenarios, the system model is more complicated. When using limited sensors, the measurements will not be full rank. In the mobile sensor with periodic trajectory scenario where depends on time, the system is not time-invariant. We can show a reformulation of these models to a time-invariant representation in which is full rank. Then, the above result applies to these models as well. Consider the general model , . Let be a larger time step where n is the dimension of the state space or multiples of the sensor trajectory period. Then, we can follow [] and rewrite the system in the form of Equations (4) and (5):

In the reformulation, the reformulated selection matrix is time-invariant, and it is exactly the observability matrix in the original form. If the system is observable, the observability matrix has full rank, and so does the reformulated selection matrix. By representing a time-variant system of mobile sensors in a time-invariant form, we can draw the same conclusion as for the time-invariant system: the condition number of the observability matrix bounds the limiting error covariance matrix of Kalman filter estimation. Thus, lowering the condition number of the observability matrix leads to better estimation performance.

2.4. Kalman Filter Design Factors

In the mobile sensor scenario, besides planning the trajectory of the sensors, we should also consider in the model design the following key factors: the system Nyquist rate, discrete sampling rate, and sensor speed. These three timescales relate to the conditioning of the observability matrix of the system and the performance of Kalman filter estimation. Although not a definite guide, the following provides useful heuristics for estimation performance based on these timescales.

The Nyquist rate represents the internal timescale of the continuous-time dynamics. It is defined to be twice the highest frequency of the spatiotemporal dynamics. The discretization of the continuous-time system is considered good if it samples faster than the Nyquist rate. We believe the same applies to mobile sensing with Kalman filter estimation. At least one measurement should be collected within each Nyquist interval (the reciprocal of the Nyquist rate) to capture the highest-frequency feature of the system at the most relevant location.
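As a rough illustration of this heuristic, the Nyquist rate can be read off the eigenvalues of a discrete-time linear model, for example DMD eigenvalues. The sketch below assumes that the model time step `dt` is already fine enough that the eigenvalue phases are not aliased; the function name `nyquist_rate` and the example eigenvalues are hypothetical.

```python
import numpy as np

def nyquist_rate(discrete_eigs, dt):
    """Twice the highest oscillation frequency (cycles per unit time) of the model."""
    omega = np.abs(np.angle(discrete_eigs)) / dt     # angular frequencies, rad per unit time
    return 2.0 * omega.max() / (2.0 * np.pi)

dt = 0.01
# Assumed continuous-time eigenvalues: damped oscillations at 3 and 1 cycles per unit time
continuous_eigs = np.array([-0.1 + 2j * np.pi * 3.0, -0.1 - 2j * np.pi * 3.0,
                            -0.5 + 2j * np.pi * 1.0, -0.5 - 2j * np.pi * 1.0])
discrete_eigs = np.exp(continuous_eigs * dt)

rate = nyquist_rate(discrete_eigs, dt)               # about 6 samples per unit time
# Largest number of sampling steps that still fits inside one Nyquist interval
max_steps = int(np.floor(1.0 / (rate * dt)))
```

One possible use of `max_steps` is as an upper limit on the trajectory period, so that a full cycle of measurements is completed within one Nyquist interval.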

The sampling rate refers to the rate at which the measurements are collected. It also represents the time step of the discrete model. A faster sampling rate, above the Nyquist rate, adds more measurements in a fixed time interval. In the stationary sensor setting, this improves stability of the estimation. With mobile sensors, faster sampling rate further adds more information since the measurement locations change. This leads to better system observability and Kalman filter estimation until the statistical independence of the measurements no longer holds.

The sensor speed determines the maximum region a sensor can measure in a fixed time interval. A faster sensor can reach and observe at a farther location in the state space to achieve better observability. More importantly, when the data contain local structures, it is essential to plan the sensor trajectories to capture those structures. Faster sensors can achieve this purpose when local structures are well separated in the state space, without the need to assign additional sensors.

The effect of these timescales will be further discussed in the numerical experiments in Section 4.

3. Computing Mobile Sensor Trajectories

With the problem formulation (3), and the discussion in Section 2.3, we consider the objective to minimize the condition number of the observability matrix under the schedule :

The observability matrix with respect to trajectory of length l is written as:

where is the projected observability matrix with full measurements and is a block-diagonal selection matrix determined by . The minimization problem described in Equation (6) becomes a submatrix selection problem minimizing the condition number. In the special case when the length of the periodic trajectory is 1, the objective becomes , which is identical to that of a stationary sensor placement problem under the DMD basis. Solving such a problem is in general NP-hard, but just as in the stationary sensor placement problem, we can leverage greedy algorithms and utilize the same idea as QRcp/Q-DEIM for under-sampling and GappyPOD+E for over-sampling to solve it approximately.

We introduce our greedy time-forwarding algorithm in Section 3.1 and illustrate it on a synthetic example of sparse linear dynamics on a torus in Section 3.2 before presenting the main results in Section 4.

3.1. Algorithm

The projected full observability matrix is by definition segmented into blocks, so for the purpose of efficient computation, we propose a greedy algorithm that finds sensor locations by sequentially focusing on each block .

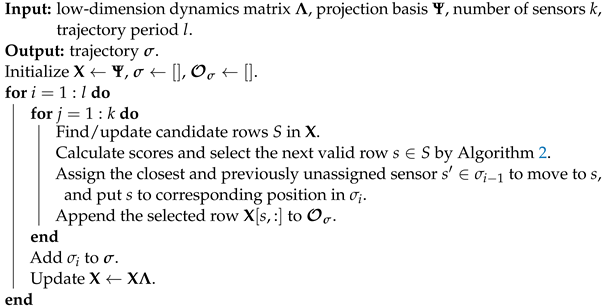

The approach is detailed in Algorithms 1 and 2.

In the selection step, we want to find a row in from the candidate set S to append to the current observability matrix in order to minimize . We use the same selecting rules as in QRcp and GappyPOD+E. The candidate set S is critical when sensor movement constraints are present. When the sensor is unrestricted to move in time, we can simply set . However, in practice, the sensors have a limit on their speed so there is a restricted region in which the sensors can move between time steps. Additionally, the state space can have special multiply-connected topological structure such that not all locations are accessible from every other. Future work will incorporate the background flow field in this estimated restriction region, although this is neglected for simplicity in the present work.

Algorithm 1: Greedy Time-forwarding Observability-based Path Planning Algorithm

Algorithm 2: Selection Step

Under a sensor speed constraint, we only consider the locations where

- A sensor can move to within a time step from its current location;

- It can go back to its initial location at the end of the period to form a cycle.

These requirements guide the selection of the candidate set S in the algorithm. When the topology of the state space is regular, a simple Euclidean distance can be used; when it is irregular, with obstructions or complex network structures, we can resort to other types of distance functions.
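The time-forwarding idea and the two candidate-set requirements above can be sketched for a single sensor on a periodic 1D grid. This is an illustrative sketch, not a transcription of Algorithms 1 and 2: it assumes a real-valued basis `Phi` with real diagonal dynamics `lam`, fixes the starting location, uses a QRcp-like residual rule while under-sampled, and swaps in a brute-force smallest-singular-value rule for the over-sampled case in place of the GappyPOD+E rule. All function names are hypothetical.

```python
import numpy as np

def circ_dist(i, j, n):
    """Distance between grid points i and j on a periodic 1D grid of size n."""
    d = abs(i - j) % n
    return min(d, n - d)

def candidate_set(prev, start, steps_left, n, speed):
    """Points reachable from `prev` in one step that can still return to `start`."""
    return [j for j in range(n)
            if circ_dist(j, prev, n) <= speed
            and circ_dist(j, start, n) <= speed * steps_left]

def select_row(O, block, cand):
    """Choose which candidate row of `block` to append to O."""
    r = block.shape[1]
    if O.shape[0] == 0:
        scores = np.linalg.norm(block[cand], axis=1)
    elif O.shape[0] < r:
        # Under-sampled: largest residual after projecting out the rows already chosen
        Qb, _ = np.linalg.qr(O.T)
        resid = block[cand] - (block[cand] @ Qb) @ Qb.T
        scores = np.linalg.norm(resid, axis=1)
    else:
        # Over-sampled: brute-force the smallest singular value (stand-in rule)
        scores = [np.linalg.svd(np.vstack([O, block[j]]), compute_uv=False)[-1]
                  for j in cand]
    return cand[int(np.argmax(scores))]

def plan_path(Phi, lam, period, start, speed):
    """Greedy time-forwarding path of one sensor over one period."""
    n, r = Phi.shape
    O, path, prev = np.zeros((0, r)), [], start
    for t in range(period):
        block = Phi * lam**t                      # t-th block: Phi @ diag(lam)**t
        cand = [start] if t == 0 else candidate_set(prev, start, period - t, n, speed)
        loc = select_row(O, block, cand)
        O = np.vstack([O, block[loc]])
        path.append(loc)
        prev = loc
    return path, O

# Toy basis (assumed): two global modes and three localized bumps on a ring of 60 points
n = 60
grid = np.arange(n)

def bump(center):
    d = np.minimum(np.abs(grid - center), n - np.abs(grid - center))
    return np.exp(-d**2 / (2 * 3.0**2))

Phi = np.column_stack([np.cos(2 * np.pi * grid / n), np.sin(2 * np.pi * grid / n),
                       bump(10), bump(30), bump(50)])
lam = np.array([0.99, 0.97, 0.95, 0.93, 0.90])

path_slow, O_slow = plan_path(Phi, lam, period=12, start=10, speed=1)
path_fast, O_fast = plan_path(Phi, lam, period=12, start=10, speed=10)
```

Comparing `np.linalg.cond(O_slow)` with `np.linalg.cond(O_fast)` gives a feel for how the speed limit restricts which localized structures a sensor can reach within one period; the brute-force rule is only practical for small candidate sets.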

3.2. Illustrative Example: Sparse Linear Dynamics on a Torus

To show the effectiveness of mobile sensors, we demonstrate the algorithm with a random simulation of a sparse linear dynamical system on a torus. We design the system to contain two types of structures: the 2D discrete inverse Fourier transform function and the Gaussian basis function. A Fourier mode is a global feature present across the state space, while a Gaussian mode is a local feature that is concentrated in a small neighborhood around a center.

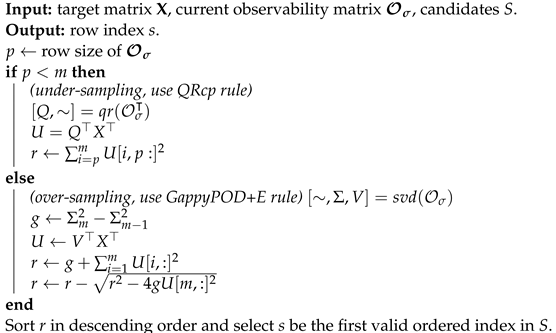

On a 128 × 128 spatial grid, we build the sparse system with two conjugate Fourier modes and three conjugate Gaussian modes by randomly generating their oscillation frequencies and damping rates (Figure 2). This is a system of size 16,384 with a low-dimensional linear representation of rank , where the projection basis contains the modes, and the low-dimensional linear dynamics matrix is diagonal with the oscillation and damping information. The sampling rate is at . We generate the data by adding system disturbances and measurement noise. Since all parameters in the model are known in the synthetic example, we use the trace of the error covariance matrix as an accurate representation of the expected squared error to evaluate the estimation.

Figure 2.

A snapshot of the random system in 2D (left) and on a 3D torus (right).

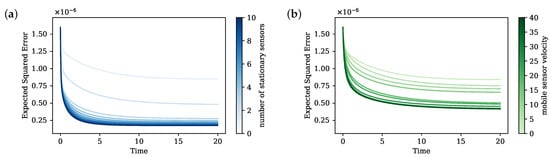

First, we estimate the system with sensors at fixed locations selected by applying QRcp on the basis , a common sensor placement strategy. We see from Figure 3 that there is a significant performance improvement as we increase the number of fixed sensors up to three. At least three sensors are needed to obtain a good estimation of the system so that they can be placed to observe the local regions of the Gaussian modes.

Figure 3.

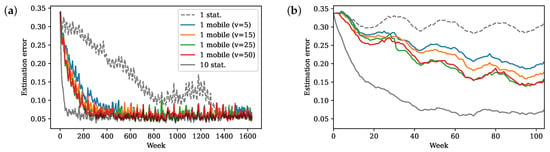

Expected squared error of the KF estimation in time, with (a) stationary sensor placement by number of sensors and (b) one mobile sensor by velocity constraints.

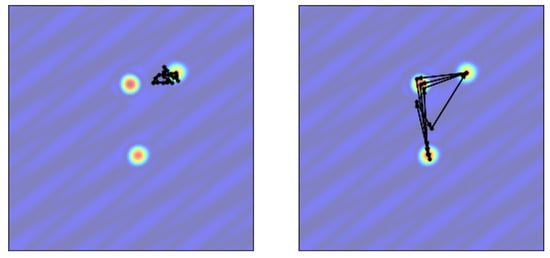

We then show that equivalent performance can be achieved using only one mobile sensor with the same sampling rate and a fast enough speed. We choose a trajectory period such that the cycle is complete within the Nyquist rate of the system. When the sensor is slow, there is no significant improvement since the sensor cannot move to other local features within a cycle. However, with a fast enough speed, our algorithm is able to direct the sensor to reach the locations of all three Gaussian modes and make a better estimation (Figure 4). Under the same sampling rate, three sensors collect three times as many measurements as one sensor within any time interval. This fundamental difference in the number of measurements contributes to the performance gap between three stationary sensors and one mobile sensor. We can narrow this performance gap by increasing the sampling rate. At a sampling rate of 0.001, the difference in estimation error is minimal.

Figure 4.

Planned sensor trajectory in black arrows with speed constraint of 5 (left) and 37 (right) units per time step of 0.01. The trajectory with smaller speed constraint is only able to explore one Gaussian mode, while that with higher speed constraint freely oscillates among all three Gaussian modes.

Through this synthetic experiment, we see that a mobile sensor can indeed improve Kalman filter estimation, and that the trajectory planned by our greedy method is effective at pinpointing local structures and achieving good observability.

4. Numerical Experiments

In practice, it is often the case that for spatiotemporal data and systems, the underlying low-rank dynamic model, the disturbance, and the noise are not known. In this case, we would fit an estimated model representation from data via DMD, and approximate disturbance and noise covariances either from data or through hyperparameter tuning. Here, we look at two examples: (i) the Kuramoto–Sivashinsky (KS) system and (ii) the Sea Surface Temperature (SST) dataset from NOAA [,]. We study the performance when we use a DMD approximated model for Kalman filter estimation and sensor path planning by our greedy algorithm. In both examples, we fit a linear DMD model with a chosen low rank. The dynamics matrix is diagonal with DMD eigenvalues and the basis consists of the DMD modes. We further add a white measurement noise with variance to the data, and we set the system disturbance to be where the uniform variance q is a hyperparameter tuned by experiment.
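The estimation loop described above can be sketched as a Kalman filter that runs in the low-rank coordinates and reads one grid location per time step along a periodic path. Everything in this sketch is a stand-in chosen for illustration: the real-valued block dynamics `Lam` (instead of a complex diagonal DMD matrix), the random basis `Phi`, the hand-picked `path`, and the values of `q` and `sigma` are assumptions, not the settings of our experiments.

```python
import numpy as np

def kalman_step(z_hat, P, y, H, Lam, Q, R):
    """Predict with the low-rank model, then correct with the measurement y = H z + noise."""
    z_pred = Lam @ z_hat
    P_pred = Lam @ P @ Lam.T + Q
    S = H @ P_pred @ H.T + R                      # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)           # Kalman gain
    z_new = z_pred + K @ (y - H @ z_pred)
    P_new = (np.eye(len(z_hat)) - K @ H) @ P_pred
    return z_new, P_new

rng = np.random.default_rng(1)
n, r = 30, 3
q, sigma = 1e-6, 1e-2                             # tuned disturbance variance, noise std (assumed)
theta = 0.2
Lam = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta),  np.cos(theta), 0.0],
                [0.0,            0.0,           1.0]])   # stand-in for a fitted low-rank model
Phi = rng.standard_normal((n, r))                 # stand-in for the modes
Q, R = q * np.eye(r), sigma**2 * np.eye(1)
path = [0, 7, 14, 21]                             # hypothetical periodic trajectory of one sensor

z_true = np.ones(r)
z_hat, P = np.zeros(r), np.eye(r)
err_initial = np.linalg.norm(Phi @ (z_true - z_hat))
for t in range(200):
    z_true = Lam @ z_true
    H = Phi[[path[t % len(path)]]]                # 1 x r measurement row at the sensor location
    y = H @ z_true + sigma * rng.standard_normal(1)
    z_hat, P = kalman_step(z_hat, P, y, H, Lam, Q, R)
err_final = np.linalg.norm(Phi @ (z_true - z_hat))
```

The full-state estimate at any time is `Phi @ z_hat`; with data-driven models, `q` would be tuned by experiment as described above.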

These two examples are representative in different aspects. The KS system is known for its chaotic behavior. Therefore, a linear representation of the system is extremely difficult. Additionally, the modes from the linear approximated model are mostly local since linear correlation between locations is small, so that full observability is hard to achieve with few fixed sensors. We show in Section 4.1 that mobile sensors can perform particularly well compared to fixed sensors by reaching more locations and capturing more local structures.

The SST dataset from NOAA contains weekly mean optimum interpolated sea surface temperature measurements from global satellite data. The dataset can be well approximated by a linear model and most modes in the approximated system are global so that observability is easily achieved with even just one stationary sensor. We show then in Section 4.2 that mobile sensors further accelerate the convergence of error.

4.1. Kuramoto–Sivashinsky System

The KS system is given by the equation . We consider the numerical solution of the system on a spatial grid of size 2048 over . The initial condition is drawn at random from a standard normal distribution. From this initial condition, we numerically solve the KS equation and collect data on the time interval with a time step of . We first perform a singular value decomposition (SVD) to find a low-rank representation of the data. The first 100 singular values capture 99.99% of the energy, so we estimate a low-dimensional linear representation of the system by fitting a standard DMD model [] with an SVD rank of 100.
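The rank choice above can be sketched as follows. This is a minimal example that assumes "energy" means the cumulative sum of squared singular values; the text does not state which definition is used, and the helper name `rank_for_energy` is hypothetical.

```python
import numpy as np

def rank_for_energy(singular_values, fraction=0.9999):
    """Smallest rank whose leading singular values capture `fraction` of the energy.

    Assumption: energy is measured by squared singular values.
    """
    s2 = np.asarray(singular_values, dtype=float) ** 2
    energy = np.cumsum(s2) / np.sum(s2)
    # First index where the cumulative energy reaches the target, as a 1-based rank.
    rank = int(np.searchsorted(energy, fraction)) + 1
    return min(rank, len(s2))
```

Applied to `np.linalg.svd(X, compute_uv=False)` for a snapshot matrix `X`, the returned rank can be passed as the truncation rank of the DMD fit.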

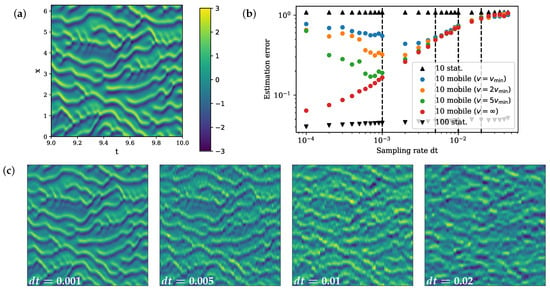

Because of the chaotic nature of the system, accurate estimation is not possible with only 10 fixed sensors. Indeed, we need 100 fixed sensors, equal to the full rank of our approximated linear system, to estimate the system effectively (Figure 5). Additionally, increasing the sampling rate gives no significant improvement with fixed sensors, since more frequent measurements at the same locations add little information about the unobserved states.

Figure 5.

(a) True spatiotemporal dynamics of the KS system in . (b) Bode plot of estimation error against sampling rate. (c) Estimated x-t plot by 10 mobile sensors in with sampling rate (corresponding to the dashed vertical lines on the Bode plot).

On the other hand, mobile sensors can move to measure different locations and gain more information about the entire state space. With a fast enough sensor speed, 10 moving sensors can achieve significantly better estimation than 10 fixed sensors as the sampling rate increases (Figure 5). The improvement in performance is limited by the sensor speed. We set the minimum speed in this example to be so that the sensor is able to move to its left and right neighbor at a discrete sampling time step of . With higher sensor speed, sensors can make observations over a wider spatial range, thus giving better estimation. As , the performance of 10 moving sensors approaches that of 100 fixed sensors with a fast enough sampling rate.

Because our algorithm is greedy, it selects each location based on the immediate reward at the next time step and cannot look ahead. When the sampling is sufficiently fast, the greedy algorithm can choose only among the closest neighbors of the current location. Such a decision is not informative, and the planned trajectory performs poorly; as we can see in Figure 5, the estimation error rises at faster sampling rates for mobile sensors with a speed constraint. One way to reduce the greediness of the algorithm is to perform a multiscale path completion. We start by finding a trajectory at a slower sampling rate. Then, we gradually decrease the time step and apply the same path planning algorithm, except that we use the previously found trajectory as guidance and fill in the gaps to construct a more complete trajectory at the faster sampling rate. We apply this multiscale expansion procedure to the KS example, starting at the sampling rate with the smallest error and expanding to faster sampling rates based on that path. The performance in the KS experiments no longer degrades at fast sampling rates; instead, it flattens and reaches a limit determined by the sensor speed (Figure 6).
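One refinement level of the multiscale path completion can be sketched as below. This is a simplified illustration, not the procedure used for Figure 6: it assumes a one-dimensional, non-periodic grid of integer indices, keeps the coarse waypoints fixed, inserts a single intermediate waypoint per gap, and takes the selection criterion as a user-supplied `score` function (a hypothetical stand-in for the greedy observability-based rule).

```python
def refine_path(coarse, reach, score, n_grid):
    """Insert one waypoint between each pair of consecutive coarse waypoints.

    coarse : list of grid indices from the slower sampling rate
    reach  : maximum number of grid cells the sensor can move per fine time step
    score  : callable mapping a grid index to a value (higher is better); assumed
    n_grid : number of grid points
    """
    fine = [coarse[0]]
    for a, b in zip(coarse[:-1], coarse[1:]):
        # The new waypoint must be reachable from a and must be able to reach b.
        lo = max(0, a - reach, b - reach)
        hi = min(n_grid - 1, a + reach, b + reach)
        if lo > hi:
            raise ValueError("coarse waypoints are too far apart for this reach")
        mid = max(range(lo, hi + 1), key=score)
        fine.extend([mid, b])
    return fine
```

Calling `refine_path` repeatedly, halving the time step each time, gives a sequence of increasingly dense trajectories that all pass through the original coarse waypoints.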

Figure 6.

Bode plot of the estimation error against sampling rate. Estimation errors obtained with multiscale path completion are plotted as fully opaque dots connected by lines. The partially transparent dots are the previous results from the greedy approach.

4.2. Sea Surface Temperature

The SST dataset contains weekly satellite measurements of sea surface temperature on a 1 degree latitude by 1 degree longitude (180 by 360) global grid from 1990 to the present. We fit a standard DMD model and obtain a low-dimensional representation.

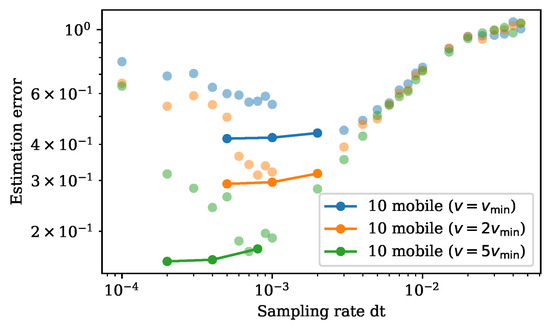

First, we perform Kalman filter estimation using stationary sensor measurements and observe that one stationary sensor eventually achieves performance comparable to that of ten stationary sensors (Figure 7). This verifies that the approximated linear model contains mostly global features that can be observed well with very few sensors. However, starting from a poor initial estimate, Kalman filtering with 10 fixed sensors converges to a low error very quickly (below 0.1 within one year), while it takes 1 sensor almost 28 years to reach a comparable error.
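For reference, one predict-and-update step of a standard discrete-time Kalman filter can be sketched as follows. The symbols `A`, `C`, `Q`, and `R` (dynamics, measurement matrix, disturbance covariance, noise covariance) are notation assumed for this example. For a mobile sensor, `C` changes at every step, for instance `C = Phi[locations, :]` when `Phi` holds the modes and `locations` are the current sensor grid indices.

```python
import numpy as np

def kalman_step(z, P, A, C, y, Q, R):
    """One Kalman filter step: predict with (A, Q), then update with (C, R, y)."""
    # Predict the state and its error covariance.
    z_pred = A @ z
    P_pred = A @ P @ A.conj().T + Q
    # Innovation covariance and Kalman gain K = P_pred C^H S^{-1}.
    S = C @ P_pred @ C.conj().T + R
    K = np.linalg.solve(S, C @ P_pred).conj().T
    # Correct the prediction with the new measurement.
    z_new = z_pred + K @ (y - C @ z_pred)
    P_new = (np.eye(len(z)) - K @ C) @ P_pred
    return z_new, P_new
```

The gain is computed with `np.linalg.solve` rather than an explicit inverse, which relies on `S` and `P_pred` being Hermitian.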

Figure 7.

Estimation error over (a) all time and (b) the first two years (104 weekly measurements).

The issue of slow Kalman filter convergence can be solved by using a mobile sensor instead, while still maintaining a good limiting estimation. The DMD eigenvalues suggest that the fastest oscillation in the system has a period of about half a year, so we choose the period of the mobile sensor trajectory to be about a quarter-year (14 weeks). Since continental land masses obstruct the sensor, which moves in water, we need to ensure that the planned trajectory does not cross any land. We therefore build a connectivity graph and adjacency matrix for the candidate selection step in our algorithm instead of using a simple Euclidean distance function.
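The land-avoiding candidate selection can be illustrated with a breadth-first search over ocean cells. This sketch swaps the explicit adjacency matrix for an implicit grid graph and assumes 4-neighbor connectivity, a boolean ocean mask `is_ocean` of shape (latitude, longitude), and wrap-around in longitude; all names are hypothetical.

```python
from collections import deque

import numpy as np

def reachable_ocean_cells(is_ocean, start, max_steps):
    """Map each ocean cell reachable from `start` to its path length in grid moves.

    Only cells reachable in at most `max_steps` moves through ocean are returned.
    """
    n_lat, n_lon = is_ocean.shape
    dist = {start: 0}
    queue = deque([start])
    while queue:
        i, j = queue.popleft()
        if dist[(i, j)] == max_steps:
            continue  # speed limit reached along this path
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ni, nj = i + di, (j + dj) % n_lon  # longitude wraps around the globe
            if 0 <= ni < n_lat and is_ocean[ni, nj] and (ni, nj) not in dist:
                dist[(ni, nj)] = dist[(i, j)] + 1
                queue.append((ni, nj))
    return dist
```

Because distances are measured along ocean paths, a cell just across a narrow strip of land is excluded unless the sensor can actually sail around it within the step budget.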

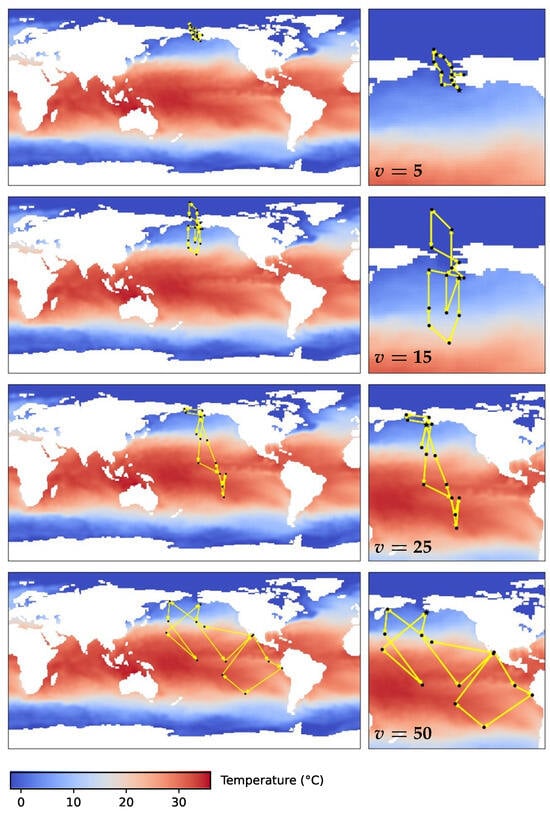

Figure 7 shows the results of one mobile sensor with different sensor speed limits. Mobile sensor estimation indeed converges much faster than estimation with a stationary sensor. As the speed limit increases, the sensor can move to farther locations with better observability, further improving the convergence of the estimation. Figure 8 shows the paths of the sensor. When v is small, the initial location plays an important role, since the trajectory does not move far from it. The first location picked by the algorithm is close to Alaska, so the first two trajectories with low speed center around the North Pacific and the Arctic Ocean. As v increases, the sensor explores the equatorial and Southern Hemisphere regions, especially the El Niño regions around the equatorial Pacific, which are an important local feature. Figure 9 shows the planned trajectory using two mobile sensors.

Figure 8.

Planned sensor trajectory (black dots connected by yellow arrows) with a cycle period of 14 weeks, where the movement speed is limited to 5, 15, 25, and 50 spatial units (1 degree of latitude or longitude). A zoomed-in map is shown on the right. The initial location picked by the algorithm is close to Alaska, so all trajectories expand from that region.

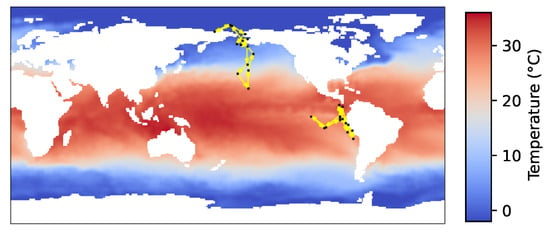

Figure 9.

Planned sensor trajectory for 2 sensors with a cycle period of 14 weeks and a sensor speed limit of 15 spatial units (1 degree of latitude or longitude).

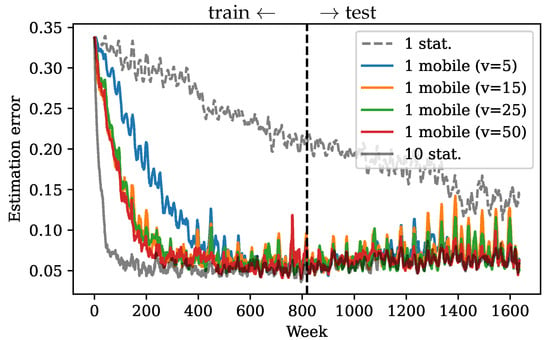

Convergence speed also matters when the underlying dynamics are nonstationary and change over time. If the estimation does not reach a meaningful error in time, the shifting dynamics will further slow down the convergence and increase the limiting error. To show this, we instead fit a DMD model using only the first half of the SST data and use it as the approximated linear model for Kalman filter estimation. In this case, the fitted linear model is representative and relevant only in the first (training) half and does not reflect any possible changes in the data dynamics afterwards. One stationary sensor then performs significantly worse due to slow convergence (Figure 10). On the other hand, the error from one mobile sensor converges fast enough within the training period that the error remains relatively low in the second half. Therefore, fast convergence with mobile sensors ensures a fast adjustment in estimation when the dynamics change in time.

Figure 10.

Kalman filter estimation error over time using an approximated DMD model trained on the first half of the data.

5. Conclusions and Future Work

In this work, we developed a mathematical strategy for planning a periodic trajectory for limited mobile sensors to estimate a spatiotemporal system using Kalman filter estimation. We examine the system observability as a metric that influences the estimation performance in terms of the limiting squared error as well as the convergence rate. We consider an objective to minimize the condition number of the discrete observability matrix along the trajectory and formulate it as a submatrix selection problem. We then propose a time-forwarding greedy algorithm that selects sensor locations along the trajectory using the same rules as QRcp and GappyPOD+E from a carefully chosen candidate subset.
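The objective summarized above can be illustrated with a brute-force sketch. It assumes a diagonal dynamics matrix with entries `eigvals`, a mode matrix `Phi` whose rows are the point measurements, a single sensor on a one-dimensional grid, and a speed limit of `reach` grid cells per step. Unlike the proposed algorithm, which uses QRcp and GappyPOD+E selection rules, this sketch evaluates the condition number of every candidate directly, which is simpler but more expensive.

```python
import numpy as np

def greedy_trajectory(Phi, eigvals, start, n_steps, reach):
    """Greedily pick sensor locations that keep the observability matrix well conditioned.

    Row k of the returned matrix O is C_k A^k, with C_k = Phi[path[k], :] and
    A = diag(eigvals).
    """
    n_grid = Phi.shape[0]
    path = [start]
    O = Phi[[start], :]                       # block row for k = 0
    for k in range(1, n_steps):
        here = path[-1]
        candidates = range(max(0, here - reach), min(n_grid, here + reach + 1))
        powers = eigvals ** k                 # diagonal of A^k

        def cond_if_added(i):
            return np.linalg.cond(np.vstack([O, Phi[[i], :] * powers]))

        best = min(candidates, key=cond_if_added)
        path.append(best)
        O = np.vstack([O, Phi[[best], :] * powers])
    return path, O
```

With identity modes and unit eigenvalues, each location observes exactly one state, so the sketch sweeps across the grid to avoid repeating a measurement.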

The experiments show that the method is able to plan a trajectory that locates the local features and improves the estimation performance. In these experiments, we explore Kalman filter design factors and their impact on estimation as they relate to the three important timescales: the Nyquist rate of the underlying dynamics, the rate of sampling, and the velocity of the sensors. We find that mobile sensors are especially beneficial for a complex, nonlinear system, where they capture local features in an approximated linear model without deploying a large number of sensors. We also see an improvement in the estimation convergence rate using mobile sensors, which reach an accurate estimate more rapidly.

The greedy approach depends on the key assumption that the sensor moves freely towards any location, restricted only to a radius defined by a movement speed limit. In future work, a weighted cost function can be added to the objective to better incorporate different costs into the path planning. Sensor speed can be formulated as a cost instead of a hard constraint imposed in the selection process. Furthermore, energy consumption caused by sensor movement can be included to plan a trajectory that is also more energy efficient. For example, in flow field applications, we can consider the flow field information and the associated energy cost as the sensor moves with or against the flow. Incorporating the background flow velocity in the set of possible next locations is an important future extension of this work. We can also draw on the many existing sensor control laws as cost constraints for incorporating other tasks, such as simultaneous structure tracking.

For multi-sensor planning, it will be interesting to consider a different, asynchronous periodic trajectory for each individual sensor instead of giving all sensors the same period. This will be particularly useful for multiscale systems, so that each sensor can be responsible for estimating features at a different timescale.

As shown in the numerical experiments, the performance of Kalman filter estimation fundamentally depends on accurate modeling of the linear system and correct choices of hyperparameters. Better data-driven linear, or possibly nonlinear, system identification can be explored. An approach that alternates between model fitting and estimation could also update both the model and the sensor trajectory continuously to achieve even better performance.

Author Contributions

Conceptualization, J.M., S.L.B. and J.N.K.; methodology, J.M.; software, J.M.; validation, J.M.; formal analysis, J.M.; investigation, J.M.; writing—original draft preparation, J.M.; writing—review and editing, J.M., S.L.B. and J.N.K.; visualization, J.M.; supervision, S.L.B. and J.N.K.; project administration, S.L.B. and J.N.K.; funding acquisition, S.L.B. and J.N.K. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge support from the National Science Foundation AI Institute in Dynamic Systems (grant number 2112085). S.L.B. acknowledges support from the Air Force Office of Scientific Research (FA9550-21-1-0178). J.N.K. acknowledges support from the Air Force Office of Scientific Research (FA9550-19-1-0011).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The sea surface temperature data is available at https://psl.noaa.gov. Other simulation data presented in this study are available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Manohar, K.; Brunton, B.W.; Kutz, J.N.; Brunton, S.L. Data-driven sparse sensor placement for reconstruction: Demonstrating the benefits of exploiting known patterns. IEEE Control Syst. Mag. 2018, 38, 63–86.

- Tropp, J.A. Greed is good: Algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 2004, 50, 2231–2242.

- Tropp, J.A.; Gilbert, A.C.; Strauss, M.J. Algorithms for simultaneous sparse approximation. Part I: Greedy pursuit. Signal Process. 2006, 86, 572–588.

- Trefethen, L.N.; Bau, D., III. Numerical Linear Algebra; SIAM: Philadelphia, PA, USA, 1997; Volume 50.

- Clark, E.; Askham, T.; Brunton, S.L.; Kutz, J.N. Greedy sensor placement with cost constraints. IEEE Sens. J. 2018, 19, 2642–2656.

- Saito, Y.; Nonomura, T.; Yamada, K.; Nakai, K.; Nagata, T.; Asai, K.; Sasaki, Y.; Tsubakino, D. Determinant-based fast greedy sensor selection algorithm. IEEE Access 2021, 9, 68535–68551.

- Chaturantabut, S.; Sorensen, D.C. Nonlinear model reduction via discrete empirical interpolation. SIAM J. Sci. Comput. 2010, 32, 2737–2764.

- Drmac, Z.; Gugercin, S. A new selection operator for the discrete empirical interpolation method—Improved a priori error bound and extensions. SIAM J. Sci. Comput. 2016, 38, A631–A648.

- Everson, R.; Sirovich, L. Karhunen–Loeve procedure for gappy data. JOSA A 1995, 12, 1657–1664.

- Astrid, P.; Weiland, S.; Willcox, K.; Backx, T. Missing point estimation in models described by proper orthogonal decomposition. IEEE Trans. Autom. Control 2008, 53, 2237–2251.

- Peherstorfer, B.; Drmac, Z.; Gugercin, S. Stability of discrete empirical interpolation and gappy proper orthogonal decomposition with randomized and deterministic sampling points. SIAM J. Sci. Comput. 2020, 42, A2837–A2864.

- Li, B.; Liu, H.; Wang, R. Efficient Sensor Placement for Signal Reconstruction Based on Recursive Methods. IEEE Trans. Signal Process. 2021, 69, 1885–1898.

- Ilkturk, U. Observability Methods in Sensor Scheduling; Order No. 3718711; Arizona State University: Tempe, AZ, USA, 2015; Available online: https://www.proquest.com/dissertations-theses/observability-methods-sensor-scheduling/docview/1712400301/se-2 (accessed on 15 April 2024).

- Caselton, W.F.; Zidek, J.V. Optimal monitoring network designs. Stat. Probab. Lett. 1984, 2, 223–227.

- Krause, A.; Singh, A.; Guestrin, C. Near-optimal sensor placements in Gaussian processes: Theory, efficient algorithms and empirical studies. J. Mach. Learn. Res. 2008, 9, 235–284.

- Wang, L.; Zhao, Y.; Liu, J. A Kriging-based decoupled non-probability reliability-based design optimization scheme for piezoelectric PID control systems. Mech. Syst. Signal Process. 2023, 203, 110714.

- Erichson, N.B.; Mathelin, L.; Yao, Z.; Brunton, S.L.; Mahoney, M.W.; Kutz, J.N. Shallow neural networks for fluid flow reconstruction with limited sensors. Proc. R. Soc. A 2020, 476, 20200097.

- Williams, J.; Zahn, O.; Kutz, J.N. Data-driven sensor placement with shallow decoder networks. arXiv 2022, arXiv:2202.05330.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Brunton, S.L.; Kutz, J.N. Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control; Cambridge University Press: Cambridge, UK, 2019.

- Tzoumas, V.; Jadbabaie, A.; Pappas, G.J. Sensor placement for optimal Kalman filtering: Fundamental limits, submodularity, and algorithms. In Proceedings of the 2016 American Control Conference (ACC), Boston, MA, USA, 6–8 July 2016; pp. 191–196.

- Ye, L.; Roy, S.; Sundaram, S. On the complexity and approximability of optimal sensor selection for Kalman filtering. In Proceedings of the 2018 Annual American Control Conference (ACC), Milwaukee, WI, USA, 27–29 June 2018; pp. 5049–5054.

- Zhang, H.; Ayoub, R.; Sundaram, S. Sensor selection for Kalman filtering of linear dynamical systems: Complexity, limitations and greedy algorithms. Automatica 2017, 78, 202–210.

- Dhingra, N.K.; Jovanović, M.R.; Luo, Z.Q. An ADMM algorithm for optimal sensor and actuator selection. In Proceedings of the 53rd IEEE Conference on Decision and Control, Los Angeles, CA, USA, 15–17 December 2014; pp. 4039–4044.

- Chamon, L.F.; Pappas, G.J.; Ribeiro, A. Approximate supermodularity of Kalman filter sensor selection. IEEE Trans. Autom. Control 2020, 66, 49–63.

- Gunnarson, P.; Mandralis, I.; Novati, G.; Koumoutsakos, P.; Dabiri, J.O. Learning efficient navigation in vortical flow fields. Nat. Commun. 2021, 12, 7143.

- Krishna, K.; Song, Z.; Brunton, S.L. Finite-horizon, energy-efficient trajectories in unsteady flows. Proc. R. Soc. A 2022, 478, 20210255.

- Biferale, L.; Bonaccorso, F.; Buzzicotti, M.; Clark Di Leoni, P.; Gustavsson, K. Zermelo’s problem: Optimal point-to-point navigation in 2D turbulent flows using reinforcement learning. Chaos Interdiscip. J. Nonlinear Sci. 2019, 29, 103138.

- Buzzicotti, M.; Biferale, L.; Bonaccorso, F.; Clark di Leoni, P.; Gustavsson, K. Optimal Control of Point-to-Point Navigation in Turbulent Time Dependent Flows using Reinforcement Learning. In Proceedings of the International Conference of the Italian Association for Artificial Intelligence, Milano, Italy, 24–27 November 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 223–234.

- Madridano, Á.; Al-Kaff, A.; Martín, D.; de la Escalera, A. Trajectory planning for multi-robot systems: Methods and applications. Expert Syst. Appl. 2021, 173, 114660.

- Leonard, N.E.; Paley, D.A.; Lekien, F.; Sepulchre, R.; Fratantoni, D.M.; Davis, R.E. Collective motion, sensor networks, and ocean sampling. Proc. IEEE 2007, 95, 48–74.

- DeVries, L.; Majumdar, S.J.; Paley, D.A. Observability-based optimization of coordinated sampling trajectories for recursive estimation of a strong, spatially varying flowfield. J. Intell. Robot. Syst. 2013, 70, 527–544.

- Ogren, P.; Fiorelli, E.; Leonard, N.E. Cooperative control of mobile sensor networks: Adaptive gradient climbing in a distributed environment. IEEE Trans. Autom. Control 2004, 49, 1292–1302.

- Zhang, F.; Leonard, N.E. Cooperative filters and control for cooperative exploration. IEEE Trans. Autom. Control 2010, 55, 650–663.

- Paley, D.A.; Wolek, A. Mobile sensor networks and control: Adaptive sampling of spatiotemporal processes. Annu. Rev. Control. Robot. Auton. Syst. 2020, 3, 91–114.

- Peng, L.; Lipinski, D.; Mohseni, K. Dynamic data driven application system for plume estimation using UAVs. J. Intell. Robot. Syst. 2014, 74, 421–436.

- Lynch, K.M.; Schwartz, I.B.; Yang, P.; Freeman, R.A. Decentralized environmental modeling by mobile sensor networks. IEEE Trans. Robot. 2008, 24, 710–724.

- Shriwastav, S.; Snyder, G.; Song, Z. Dynamic Compressed Sensing of Unsteady Flows with a Mobile Robot. arXiv 2021, arXiv:2110.08658.

- Liu, S.; Fardad, M.; Masazade, E.; Varshney, P.K. Optimal periodic sensor scheduling in networks of dynamical systems. IEEE Trans. Signal Process. 2014, 62, 3055–3068.

- Shi, D.; Chen, T. Approximate optimal periodic scheduling of multiple sensors with constraints. Automatica 2013, 49, 993–1000.

- Zhang, W.; Vitus, M.P.; Hu, J.; Abate, A.; Tomlin, C.J. On the optimal solutions of the infinite-horizon linear sensor scheduling problem. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 396–401.

- Zhao, L.; Zhang, W.; Hu, J.; Abate, A.; Tomlin, C.J. On the optimal solutions of the infinite-horizon linear sensor scheduling problem. IEEE Trans. Autom. Control 2014, 59, 2825–2830.

- Mo, Y.; Garone, E.; Sinopoli, B. On infinite-horizon sensor scheduling. Syst. Control Lett. 2014, 67, 65–70.

- Lan, X.; Schwager, M. Planning periodic persistent monitoring trajectories for sensing robots in gaussian random fields. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 2415–2420.

- Lan, X.; Schwager, M. Rapidly exploring random cycles: Persistent estimation of spatiotemporal fields with multiple sensing robots. IEEE Trans. Robot. 2016, 32, 1230–1244.

- Chen, J.; Shu, T.; Li, T.; de Silva, C.W. Deep reinforced learning tree for spatiotemporal monitoring with mobile robotic wireless sensor networks. IEEE Trans. Syst. Man Cybern. Syst. 2019, 50, 4197–4211.

- Manohar, K.; Kutz, J.N.; Brunton, S.L. Optimal sensor and actuator selection using balanced model reduction. IEEE Trans. Autom. Control 2021, 67, 2108–2115.

- Asghar, A.B.; Jawaid, S.T.; Smith, S.L. A complete greedy algorithm for infinite-horizon sensor scheduling. Automatica 2017, 81, 335–341.

- Rafieisakhaei, M.; Chakravorty, S.; Kumar, P.R. On the use of the observability gramian for partially observed robotic path planning problems. In Proceedings of the 2017 IEEE 56th Annual Conference on Decision and Control (CDC), Melbourne, VIC, Australia, 12–15 December 2017; pp. 1523–1528.

- Tu, J.H.; Rowley, C.W.; Luchtenburg, D.M.; Brunton, S.L.; Kutz, J.N. On dynamic mode decomposition: Theory and applications. J. Comput. Dyn. 2014, 1, 391–421.

- Kutz, J.N.; Brunton, S.L.; Brunton, B.W.; Proctor, J.L. Dynamic Mode Decomposition: Data-Driven Modeling of Complex Systems; SIAM: Philadelphia, PA, USA, 2016.

- Jovanović, M.R.; Schmid, P.J.; Nichols, J.W. Sparsity-promoting dynamic mode decomposition. Phys. Fluids 2014, 26, 024103.

- Askham, T.; Kutz, J.N. Variable projection methods for an optimized dynamic mode decomposition. SIAM J. Appl. Dyn. Syst. 2018, 17, 380–416.

- Brunton, S.L.; Budišić, M.; Kaiser, E.; Kutz, J.N. Modern Koopman Theory for Dynamical Systems. SIAM Rev. 2022, 64, 229–340.

- Kramer, B.; Grover, P.; Boufounos, P.; Nabi, S.; Benosman, M. Sparse sensing and DMD-based identification of flow regimes and bifurcations in complex flows. SIAM J. Appl. Dyn. Syst. 2017, 16, 1164–1196.

- Kalman, R.E. On the general theory of control systems. In Proceedings of the First International Conference on Automatic Control, Moscow, Russia, 27 June–2 July 1960; pp. 481–492.

- Dai, H.; Bai, Z.Z. On eigenvalue bounds and iteration methods for discrete algebraic Riccati equations. J. Comput. Math. 2011, 29, 341–366.

- Kwon, W.H.; Moon, Y.S.; Ahn, S.C. Bounds in algebraic Riccati and Lyapunov equations: A survey and some new results. Int. J. Control 1996, 64, 377–389.

- Komaroff, N. Upper bounds for the solution of the discrete Riccati equation. IEEE Trans. Autom. Control 1992, 37, 1370–1373.

- Komaroff, N.; Shahian, B. Lower summation bounds for the discrete Riccati and Lyapunov equations. IEEE Trans. Autom. Control 1992, 37, 1078–1080.

- Bittanti, S.; Colaneri, P.; Nicolao, G.D. The periodic Riccati equation. In The Riccati Equation; Springer: Berlin/Heidelberg, Germany, 1991; pp. 127–162.

- NOAA Optimum Interpolation (OI) SST V2 Data Provided by the NOAA PSL, Boulder, Colorado, USA. Available online: https://psl.noaa.gov (accessed on 15 April 2024).

- Reynolds, R.W.; Rayner, N.A.; Smith, T.M.; Stokes, D.C.; Wang, W. An improved in situ and satellite SST analysis for climate. J. Clim. 2002, 15, 1609–1625.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).