Static and Dynamic Hand Gestures: A Review of Techniques of Virtual Reality Manipulation

Abstract

1. Introduction

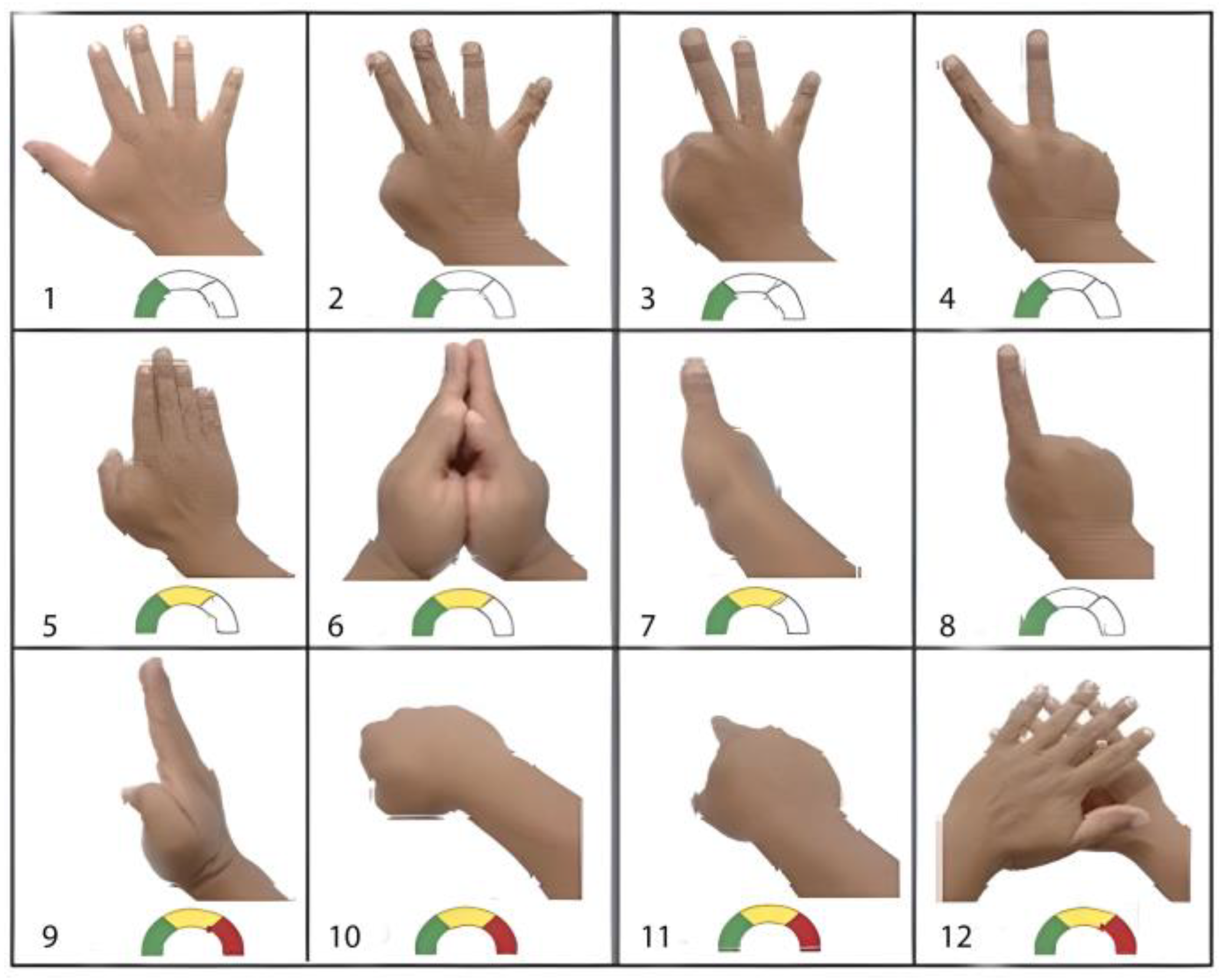

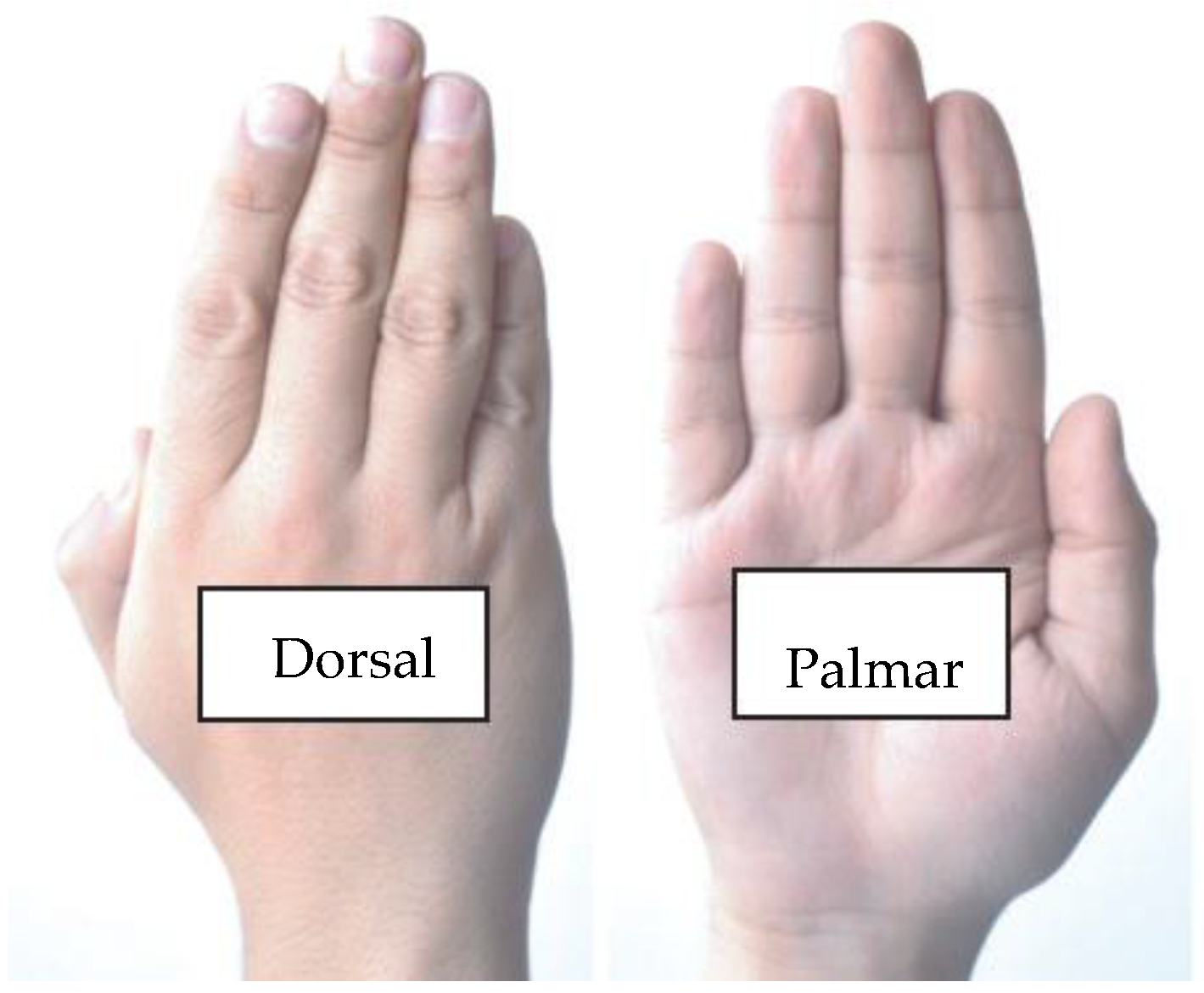

2. Characteristics of the Gestures

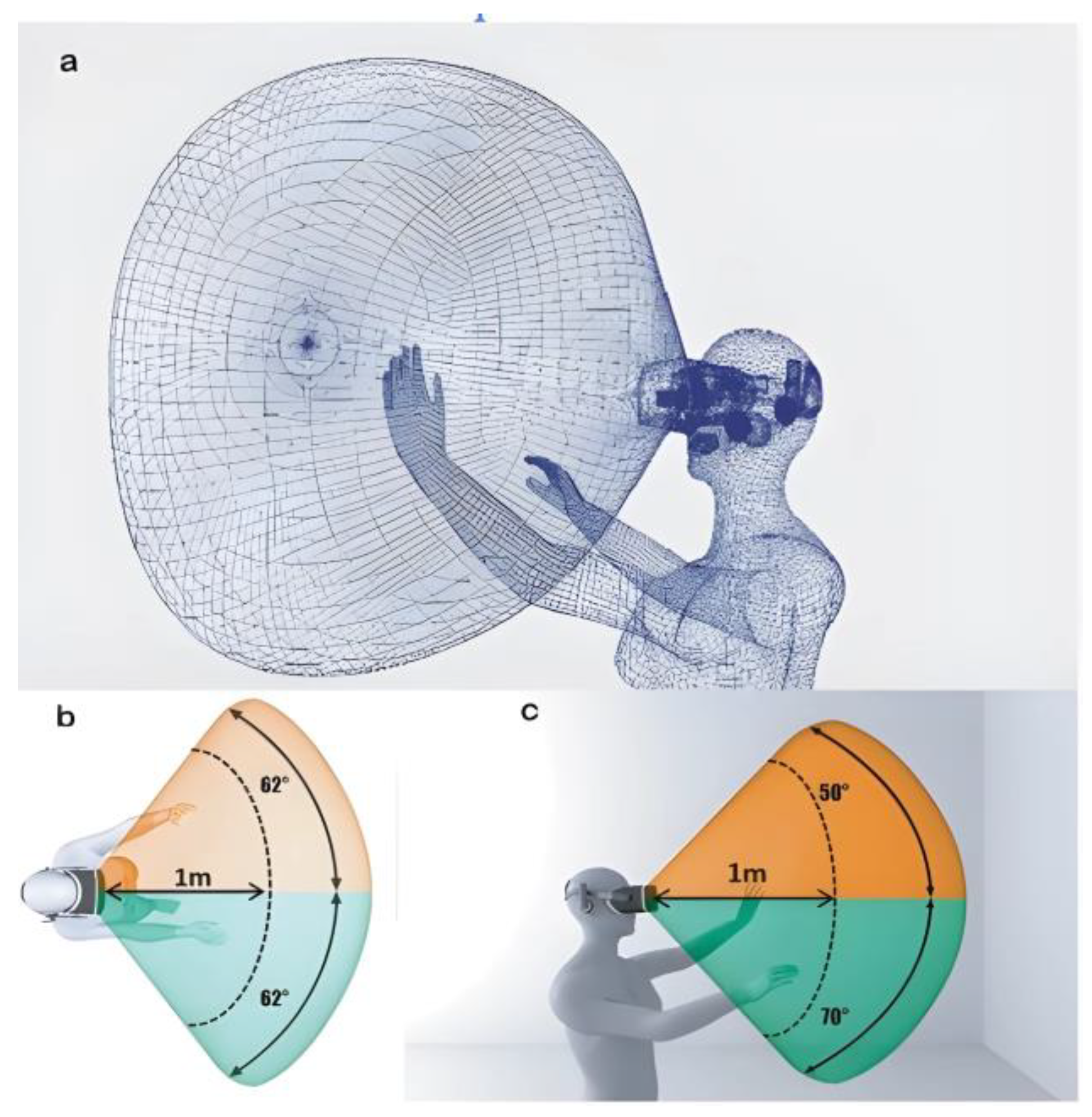

3. Visual System

4. Haptic System

5. Methodology

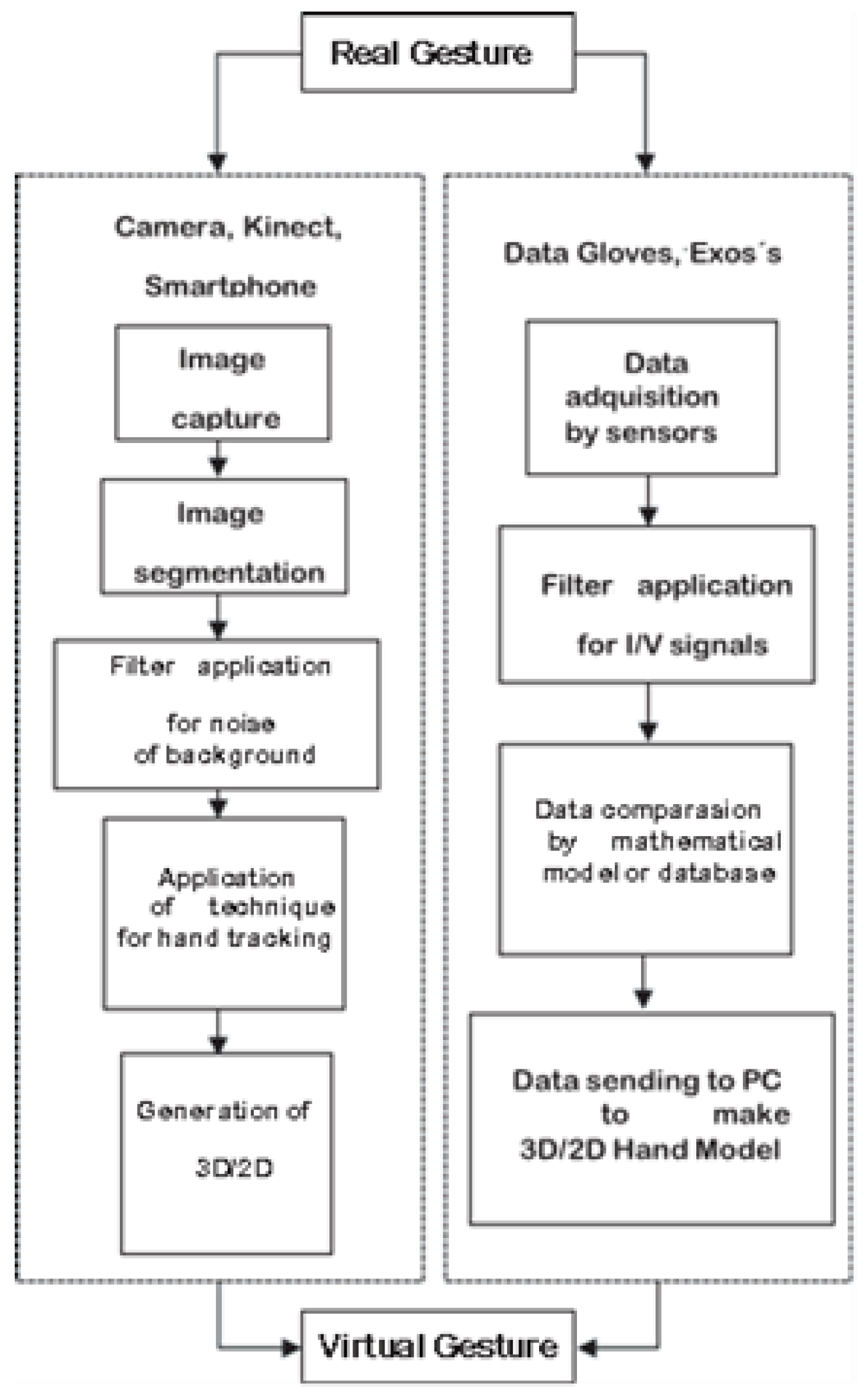

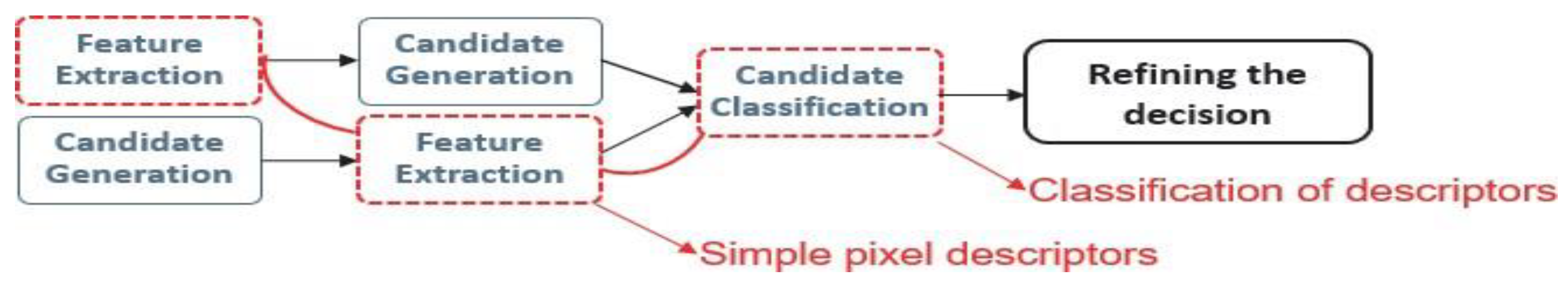

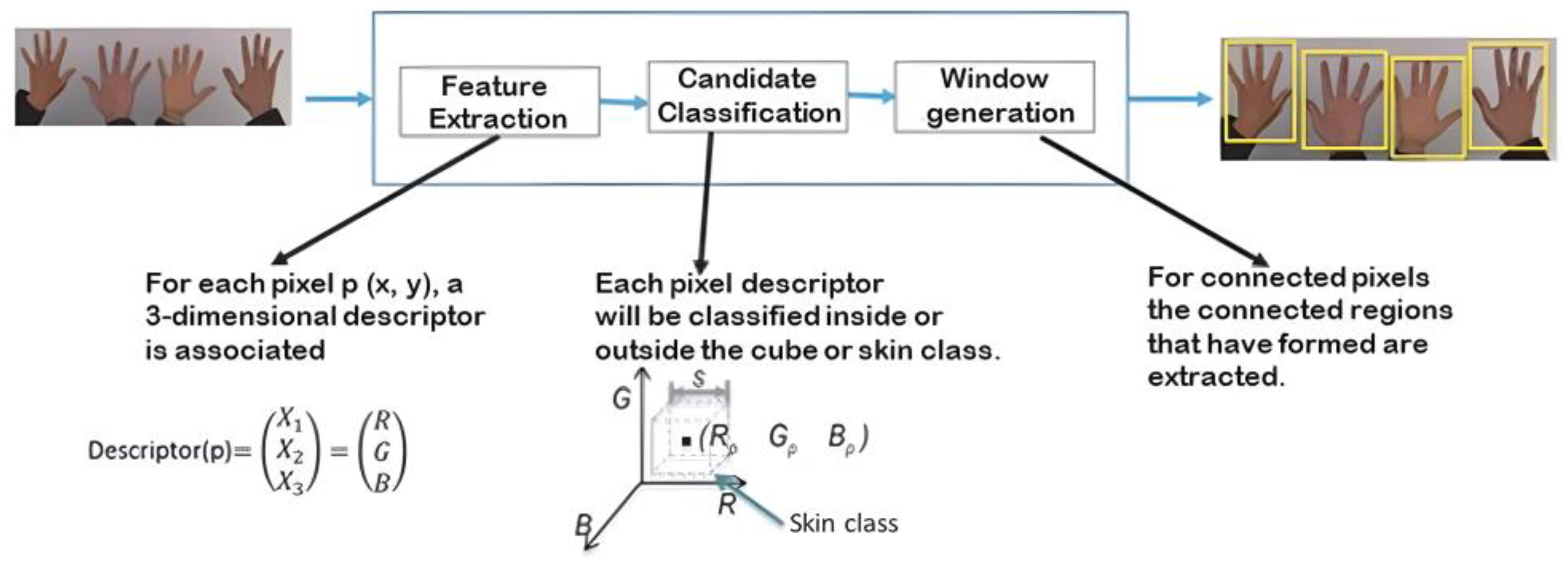

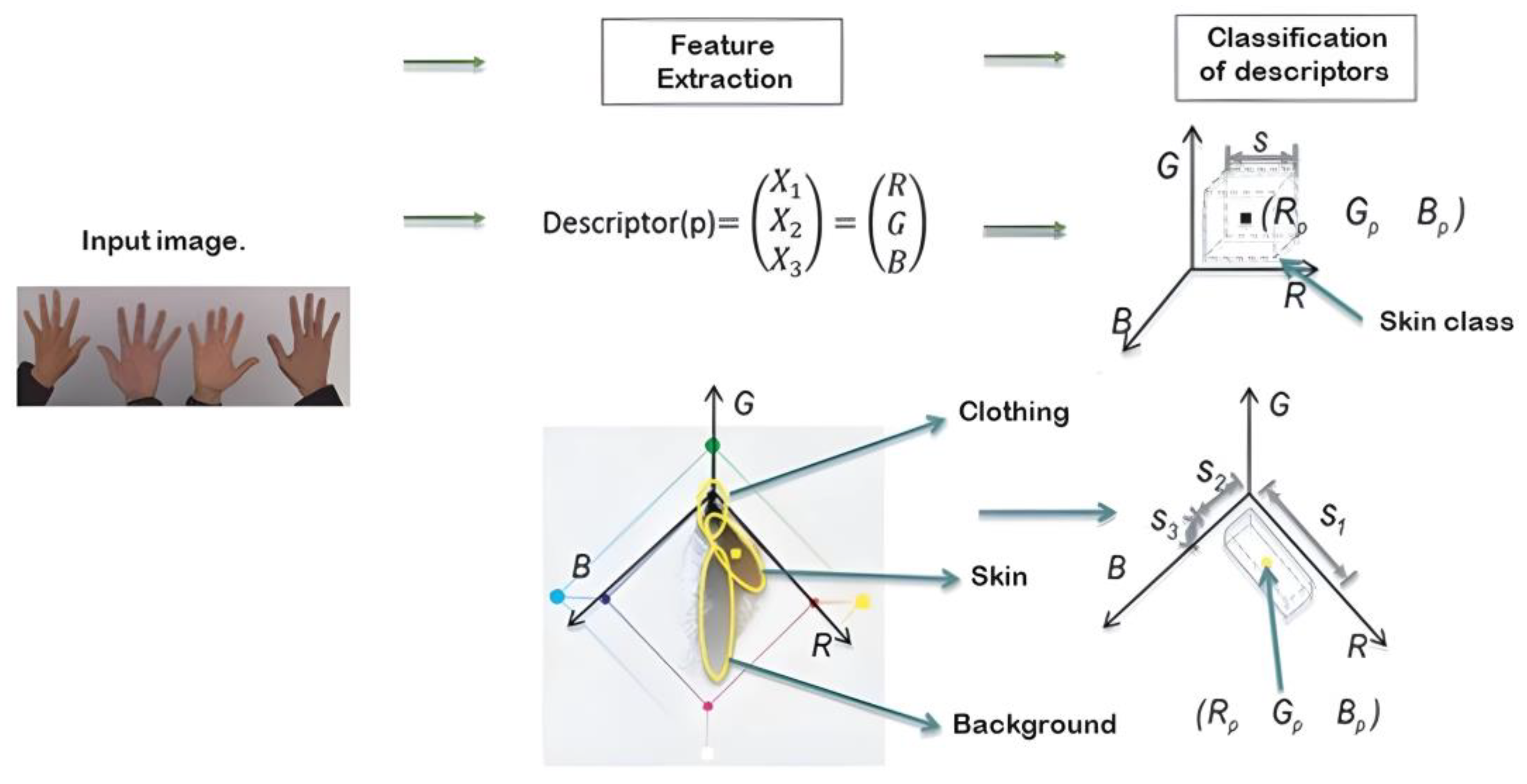

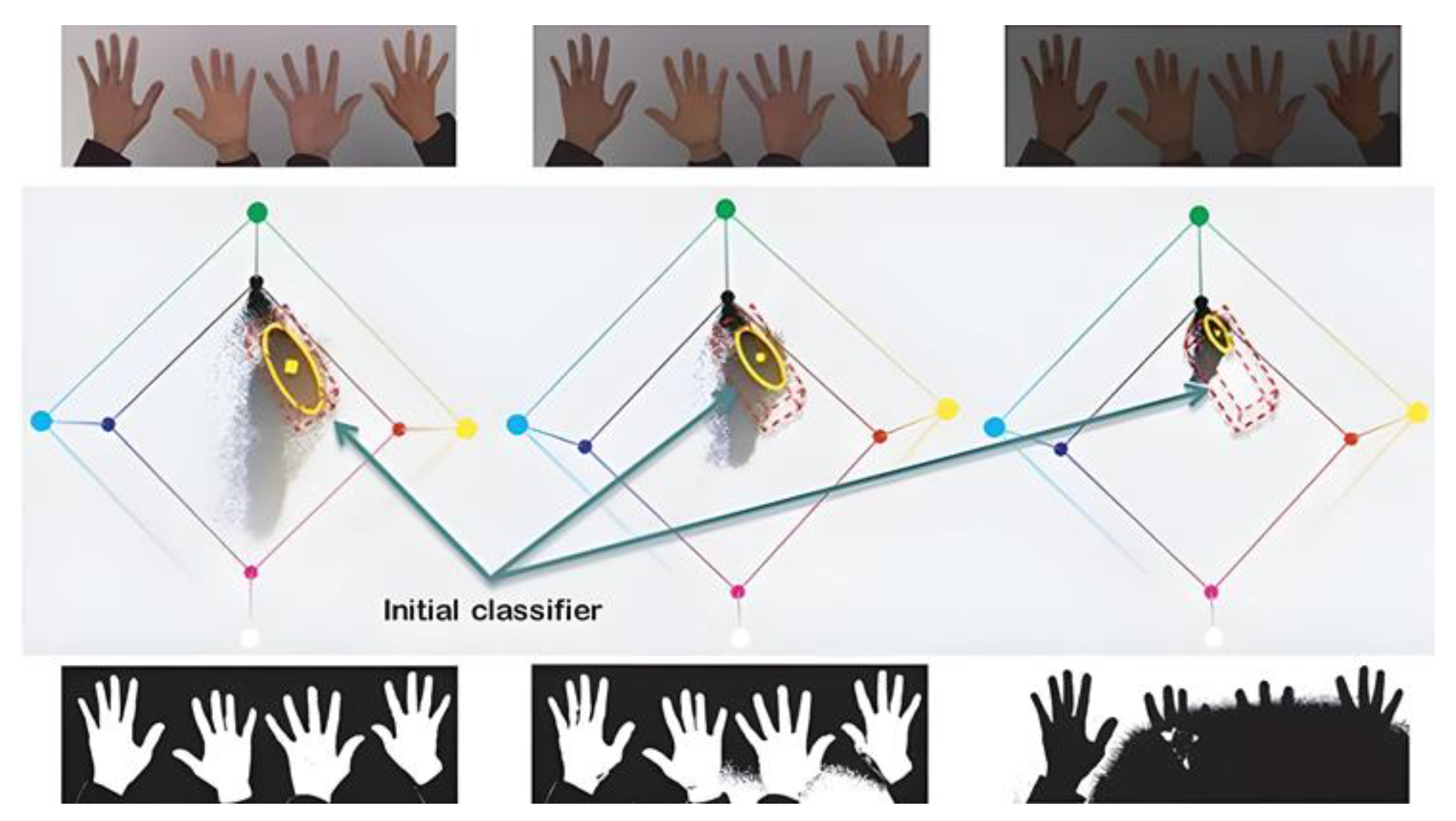

5.1. Gesture Detection Process for Virtual Interfaces

5.2. Gesture Detection Techniques

5.3. Gesture Capture Using a Smartphone

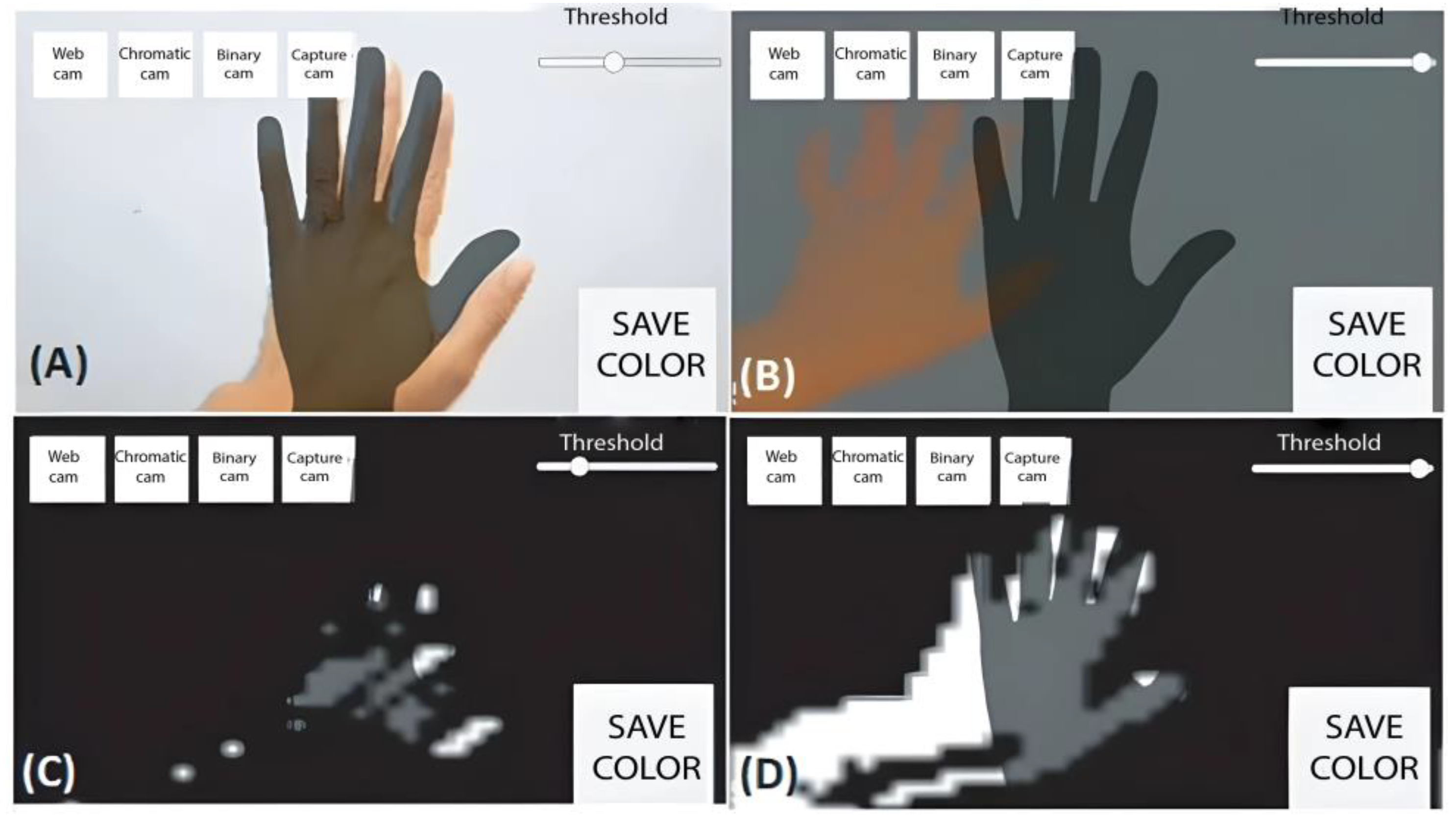

5.4. Image Capture

5.5. Application of Filters

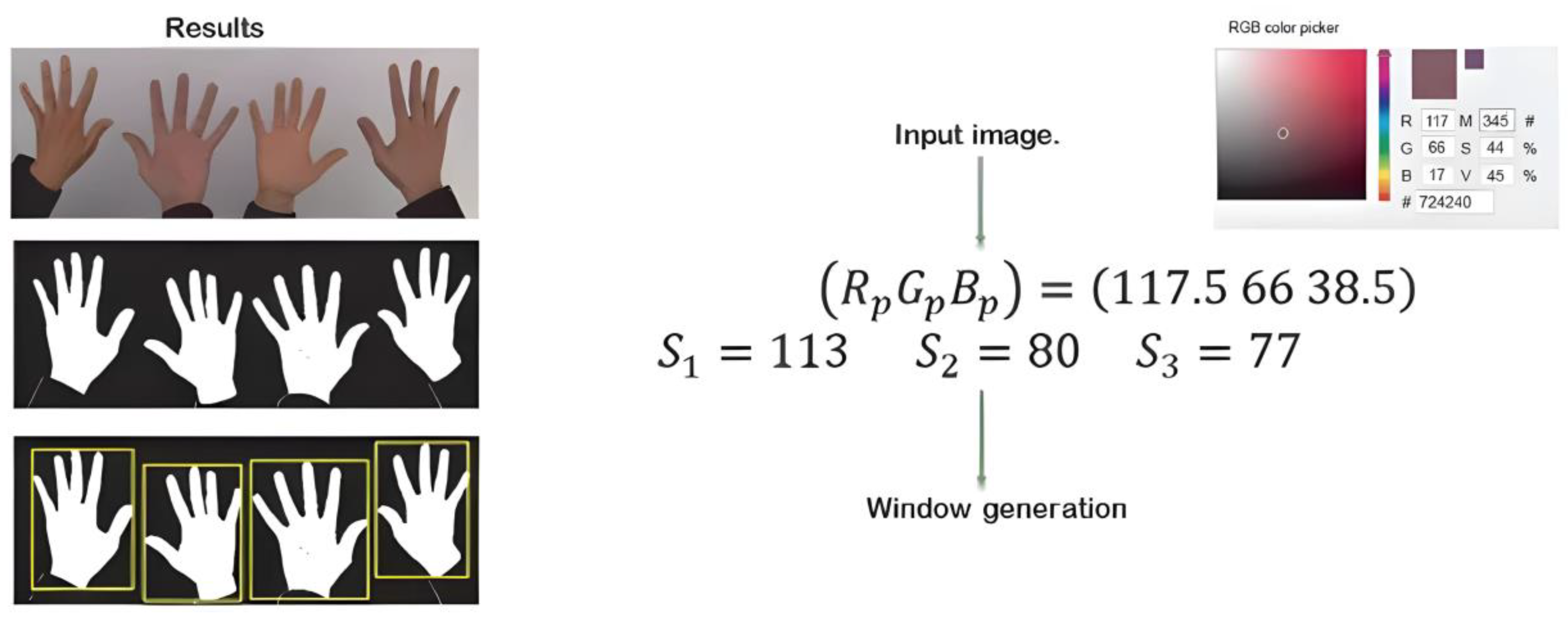
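The filter chain listed for the camera-based technique in the comparison table (Gaussian blur, CLAHE, HSV, binary mask, dilation, erosion) can be illustrated with a minimal sketch of its last three stages. This is not the authors' implementation: the HSV skin window (`h_range`, `s_min`, `v_min`), the 3 × 3 structuring element, and all function names are assumptions chosen for illustration. The blur and CLAHE stages are omitted here; in practice they would usually come from an image library (for example OpenCV's `cv2.GaussianBlur` and `cv2.createCLAHE`).

```python
import colorsys


def hsv_mask(image, h_range=(0.0, 0.14), s_min=0.2, v_min=0.3):
    """Binary mask: 1 where an RGB pixel falls inside the HSV window.

    `image` is a list of rows of (r, g, b) tuples in 0..255.
    The default window is an illustrative guess for skin tones.
    """
    mask = []
    for row in image:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min
            out.append(1 if inside else 0)
        mask.append(out)
    return mask


def _morph(mask, combine):
    """Apply a 3x3 morphological operator; out-of-bounds neighbours are ignored."""
    rows, cols = len(mask), len(mask[0])
    result = []
    for y in range(rows):
        out = []
        for x in range(cols):
            window = [
                mask[j][i]
                for j in range(max(0, y - 1), min(rows, y + 2))
                for i in range(max(0, x - 1), min(cols, x + 2))
            ]
            out.append(combine(window))
        result.append(out)
    return result


def dilate(mask):
    """A pixel becomes 1 if any pixel in its 3x3 neighbourhood is 1."""
    return _morph(mask, max)


def erode(mask):
    """A pixel stays 1 only if every pixel in its 3x3 neighbourhood is 1."""
    return _morph(mask, min)


if __name__ == "__main__":
    skin, background = (220, 170, 140), (0, 0, 255)
    # 7x7 frame: a 3x3 skin-coloured patch with a one-pixel hole in the middle.
    frame = [
        [skin if 2 <= y <= 4 and 2 <= x <= 4 and (y, x) != (3, 3) else background
         for x in range(7)]
        for y in range(7)
    ]
    closed = erode(dilate(hsv_mask(frame)))
    for row in closed:
        print("".join(str(v) for v in row))
```

Dilating before eroding (a morphological closing) fills small holes in the hand mask without growing its outline, which is one plausible reason for the order in which the table lists the two operations.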

6. Results

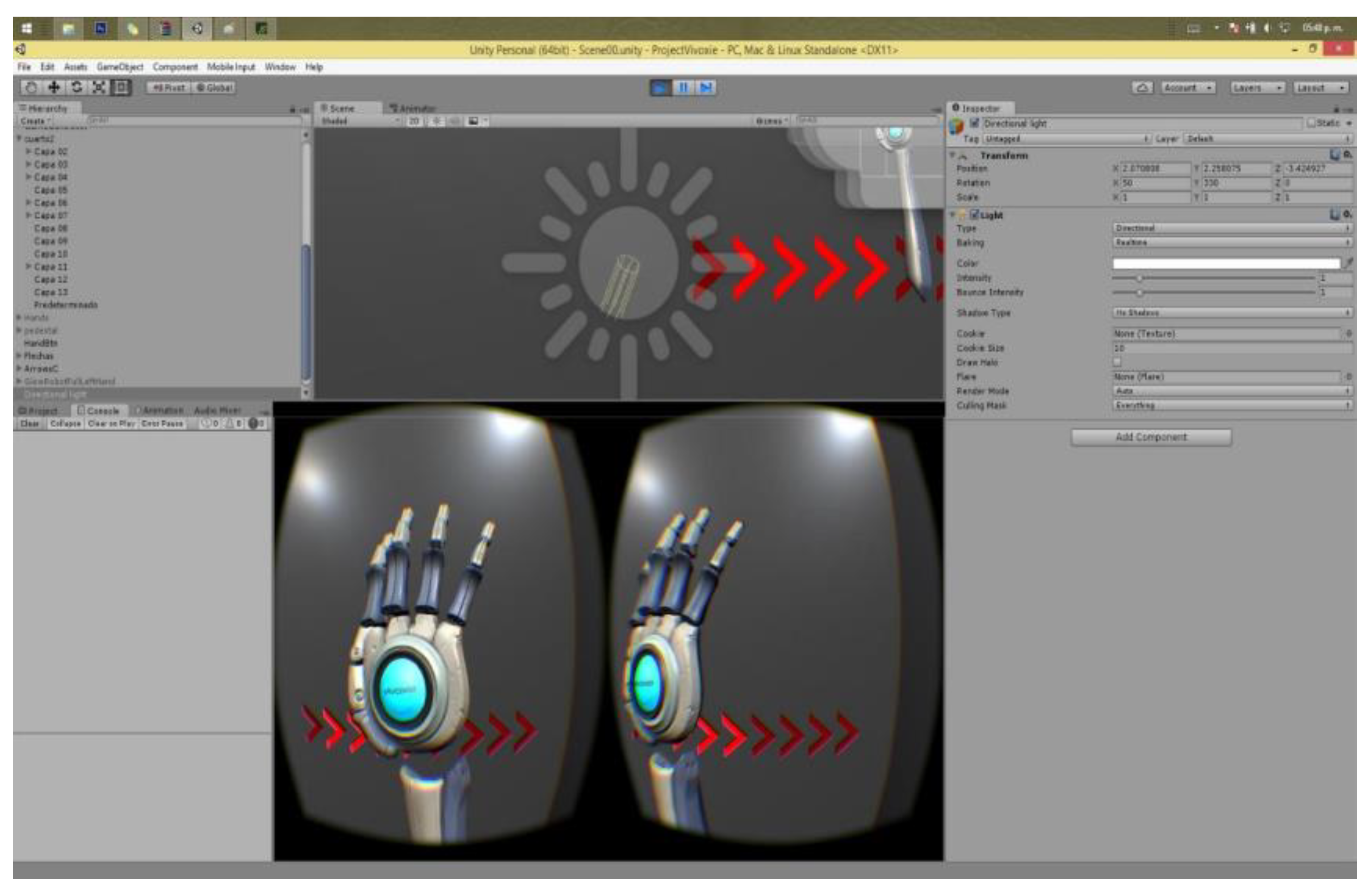

Virtual Interface Results

7. Conclusions and Discussion

7.1. Discussion

7.2. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Bowman, D.A.; McMahan, R.P. Virtual Reality: How Much Immersion Is Enough? Computer 2007, 40, 36–43. [Google Scholar] [CrossRef]

- Sherman, W.R.; Craig, A.B. Understanding Virtual Reality: Interface, Application, and Design; Morgan Kaufmann: Burlington, MA, USA, 2002. [Google Scholar]

- Cruz-Neira, C.; Fernández, M.; Portalés, C. Virtual Reality and Games. Multimodal Technol. Interact. 2018, 2, 8. [Google Scholar] [CrossRef]

- Wang, P.; Wu, P.; Wang, J.; Chi, H.L.; Wang, X. A Critical Review of the Use of Virtual Reality in Construction Engineering Education and Training. Int. J. Environ. Res. Public Health 2018, 15, 1204. [Google Scholar] [CrossRef]

- Boletsis, C. The New Era of Virtual Reality Locomotion: A Systematic Literature Review of Techniques and a Proposed Typology. Multimodal Technol. Interact. 2017, 1, 24. [Google Scholar] [CrossRef]

- Yu, J. A Light-Field Journey to Virtual Reality. IEEE Multimed. 2017, 24, 104–112. [Google Scholar] [CrossRef]

- Parsons, T.D.; Gaggioli, A.; Riva, G. Virtual Reality for Research in Social Neuroscience. Brain Sci. 2017, 7, 42. [Google Scholar] [CrossRef]

- Lytridis, C.; Tsinakos, A.; Kazanidis, I. ARTutor—An Augmented Reality Platform for Interactive Distance Learning. Educ. Sci. 2018, 8, 6. [Google Scholar] [CrossRef]

- Ullrich, S.; Kuhlen, T. Haptic Palpation for Medical Simulation in Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2012, 18, 617–625. [Google Scholar] [CrossRef]

- Shukor, A.Z.; Miskon, M.F.; Jamaluddin, M.H.; Ali Ibrahim, F.B.; Asyraf, M.F.; Bin Bahar, M.B. A New Data Glove Approach for Malaysian Sign Language Detection. Procedia Comput. Sci. 2015, 76, 60–67. [Google Scholar] [CrossRef]

- Hirafuji Neiva, D.; Zanchettin, C. Gesture Recognition: A Review Focusing on Sign Language in a Mobile Context. Expert Syst. Appl. 2018, 103, 159–183. [Google Scholar] [CrossRef]

- Das, A.; Yadav, L.; Singhal, M.; Sachan, R.; Goyal, H.; Taparia, K.; Gulati, R.; Singh, A.; Trivedi, G. Smart Glove for Sign Language Communications. In Proceedings of the 2016 International Conference on Accessibility to Digital World, ICADW 2016, Guwahati, India, 16–18 December 2016; pp. 27–31. [Google Scholar] [CrossRef]

- Yin, F.; Chai, X.; Chen, X. Iterative Reference Driven Metric Learning for Signer Independent Isolated Sign Language Recognition. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Part VII; Springer: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Chang, H.J.; Garcia-Hernando, G.; Tang, D.; Kim, T.K. Spatio-Temporal Hough Forest for Efficient Detection–Localisation–Recognition of Fingerwriting in Egocentric Camera. Comput. Vis. Image Underst. 2016, 148, 87–96. [Google Scholar] [CrossRef]

- Santello, M.; Baud-Bovy, G.; Jörntell, H. Neural Bases of Hand Synergies. Front. Comput. Neurosci. 2013, 7, 42740. [Google Scholar] [CrossRef]

- Kuo, L.C.; Chiu, H.Y.; Chang, C.W.; Hsu, H.Y.; Sun, Y.N. Functional Workspace for Precision Manipulation between Thumb and Fingers in Normal Hands. J. Electromyogr. Kinesiol. 2009, 19, 829–839. [Google Scholar] [CrossRef]

- Habibi, E.; Kazemi, M.; Dehghan, H.; Mahaki, B.; Hassanzadeh, A. Hand Grip and Pinch Strength: Effects of Workload, Hand Dominance, Age, and Body Mass Index. Pak. J. Med. Sci. 2013, 29 (Suppl. 1), 363–367. [Google Scholar] [CrossRef]

- Chen, Z.; Huang, W.; Liu, H.; Wang, Z.; Wen, Y.; Wang, S. ST-TGR: Spatio-Temporal Representation Learning for Skeleton-Based Teaching Gesture Recognition. Sensors 2024, 24, 2589. [Google Scholar] [CrossRef]

- Pacchierotti, C.; Sinclair, S.; Solazzi, M.; Frisoli, A.; Hayward, V.; Prattichizzo, D. Wearable Haptic Systems for the Fingertip and the Hand: Taxonomy, Review, and Perspectives. IEEE Trans. Haptics 2017, 10, 580–600. [Google Scholar] [CrossRef]

- Sreelakshmi, M.; Subash, T.D. Haptic Technology: A Comprehensive Review on Its Applications and Future Prospects. Mater. Today Proc. 2017, 4, 4182–4187. [Google Scholar] [CrossRef]

- Shull, P.B.; Damian, D.D. Haptic Wearables as Sensory Replacement, Sensory Augmentation and Trainer—A Review. J. Neuroeng. Rehabil. 2015, 12, 59. [Google Scholar]

- Song, Y.; Guo, S.; Yin, X.; Zhang, L.; Wang, Y.; Hirata, H.; Ishihara, H. Design and Performance Evaluation of a Haptic Interface Based on MR Fluids for Endovascular Tele-Surgery. Microsyst. Technol. 2018, 24, 909–918. [Google Scholar]

- Choi, I.; Ofek, E.; Benko, H.; Sinclair, M.; Holz, C. CLAW: A Multifunctional Handheld Haptic Controller for Grasping, Touching, and Triggering in Virtual Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018. [Google Scholar] [CrossRef]

- Bordegoni, M.; Colombo, G.; Formentini, L. Haptic Technologies for the Conceptual and Validation Phases of Product Design. Comput. Graph. 2006, 30, 377–390. [Google Scholar] [CrossRef]

- Sorgini, F.; Caliò, R.; Carrozza, M.C.; Oddo, C.M. Haptic-Assistive Technologies for Audition and Vision Sensory Disabilities. Disabil. Rehabil. Assist. Technol. 2018, 13, 394–421. [Google Scholar] [CrossRef]

- Hayward, V.; Astley, O.R. Performance Measures for Haptic Interfaces. In Robotics Research; Springer: London, UK, 1996; pp. 195–206. [Google Scholar] [CrossRef]

- Squeri, V.; Sciutti, A.; Gori, M.; Masia, L.; Sandini, G.; Konczak, J. Two Hands, One Perception: How Bimanual Haptic Information Is Combined by the Brain. J. Neurophysiol. 2012, 107, 544–550. [Google Scholar] [CrossRef]

- US9891709B2-Systems and Methods for Content- and Context Specific Haptic Effects Using Predefined Haptic Effects-Google Patents. Available online: https://patents.google.com/patent/US9891709B2/en (accessed on 10 May 2024).

- Haptics 2018–2028: Technologies, Markets and Players: IDTechEx. Available online: https://www.idtechex.com/en/research-report/haptics-2018-2028-technologies-markets-and-players/596 (accessed on 10 May 2024).

- Culbertson, H.; Schorr, S.B.; Okamura, A.M. Haptics: The Present and Future of Artificial Touch Sensation. Annu. Rev. Control. Robot. Auton. Syst. 2018, 1, 385–409. [Google Scholar]

- Zhai, S.; Milgram, P. Finger Manipulatable 6 Degree-of-Freedom Input Device. Available online: https://patents.justia.com/patent/5923318 (accessed on 10 May 2024).

- ACTUATOR 2018: 16th International Conference on New Actuators: 25–27 June …; Institute of Electrical and Electronics Engineers; Google Books. Available online: https://books.google.com.mx/books/about/ACTUATOR_2018.html?id=BFE0vwEACAAJ&redir_esc=y (accessed on 10 May 2024).

- Samur, E. Performance Metrics for Haptic Interfaces; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar] [CrossRef]

- Maisto, M.; Pacchierotti, C.; Chinello, F.; Salvietti, G.; De Luca, A.; Prattichizzo, D. Evaluation of Wearable Haptic Systems for the Fingers in Augmented Reality Applications. IEEE Trans. Haptics 2017, 10, 511–522. [Google Scholar] [CrossRef]

- Douglas, D.B.; Wilke, C.A.; Gibson, J.D.; Boone, J.M.; Wintermark, M. Augmented Reality: Advances in Diagnostic Imaging. Multimodal Technol. Interact. 2017, 1, 29. [Google Scholar] [CrossRef]

- Schulze, J.; Ramamoorthi, R.; Weibel, N. A Prototype for Text Input in Virtual Reality with a Swype-like Process Using a Hand-Tracking Device; University of California: San Diego, CA, USA, 2017. [Google Scholar]

- Gu, X.; Zhang, Y.; Sun, W.; Bian, Y.; Zhou, D.; Kristensson, P.O. Dexmo: An Inexpensive and Lightweight Mechanical Exoskeleton for Motion Capture and Force Feedback in VR. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1991–1995. [Google Scholar] [CrossRef]

- Qi, J.; Ma, L.; Cui, Z.; Yu, Y. Computer Vision-Based Hand Gesture Recognition for Human-Robot Interaction: A Review. Complex Intell. Syst. 2024, 10, 1581–1606. [Google Scholar]

- Shimada, K.; Ikeda, R.; Kikura, H.; Takahashi, H.; Khan, H.; Li, S.; Rehan, M.; Chen, D. Morphological Fabrication of Rubber Cutaneous Receptors Embedded in a Stretchable Skin-Mimicking Human Tissue by the Utilization of Hybrid Fluid. Sensors 2021, 21, 6834. [Google Scholar] [CrossRef]

- Hudson, C. To Have and to Hold: Touch and the Objects of the Dead. In Handbook of Research on the Relationship between Autobiographical Memory and Photography; IGI Global: Hershey, PA, USA; pp. 307–325. [CrossRef]

- Sonneveld, M.H.; Schifferstein, H.N.J. The Tactual Experience of Objects. In Product Experience; Elsevier: Amsterdam, The Netherlands, 2007; pp. 41–67. [Google Scholar] [CrossRef]

- Kearney, R. Touch; De Gruyter: Berlin, Germany, 2021. [Google Scholar] [CrossRef]

- Sheng, Y.; Cheng, H.; Wang, Y.; Zhao, H.; Ding, H. Teleoperated Surgical Robot with Adaptive Interactive Control Architecture for Tissue Identification. Bioengineering 2023, 10, 1157. [Google Scholar] [CrossRef]

- Fang, Y.; Yamada, K.; Ninomiya, Y.; Horn, B.; Masaki, I. Comparison between Infrared-Image-Based and Visible-Image-Based Approaches for Pedestrian Detection. In Proceedings of the IEEE IV2003 Intelligent Vehicles Symposium (Cat. No.03TH8683), Columbus, OH, USA, 9–11 June 2003; pp. 505–510. [Google Scholar] [CrossRef]

- Zhao, L.; Jiao, J.; Yang, L.; Pan, W.; Zeng, F.; Li, X.; Chen, F.A.; et al. A CNN-Based Layer-Adaptive GCPs Extraction Method for TIR Remote Sensing Images. Remote Sens. 2023, 15, 2628. [Google Scholar] [CrossRef]

- Cui, K.; Qin, X. Virtual Reality Research of the Dynamic Characteristics of Soft Soil under Metro Vibration Loads Based on BP Neural Networks. Neural Comput. Appl. 2018, 29, 1233–1242. [Google Scholar]

- Caeiro-Rodríguez, M.; Otero-González, I.; Mikic-Fonte, F.A.; Llamas-Nistal, M. A Systematic Review of Commercial Smart Gloves: Current Status and Applications. Sensors 2021, 21, 2667. [Google Scholar] [CrossRef]

- Tchantchane, R.; Zhou, H.; Zhang, S.; Alici, G. A Review of Hand Gesture Recognition Systems Based on Noninvasive Wearable Sensors. Adv. Intell. Syst. 2023, 5, 2300207. [Google Scholar] [CrossRef]

- Saypulaev, G.R.; Merkuryev, I.V.; Saypulaev, M.R.; Shestakov, V.K.; Glazkov, N.V.; Andreev, D.R. A Review of Robotic Gloves Applied for Remote Control in Various Systems. In Proceedings of the 2023 5th International Youth Conference on Radio Electronics, Electrical and Power Engineering, REEPE 2023, Moscow, Russia, 16–18 March 2023. [Google Scholar] [CrossRef]

- Zhang, Z.; Xu, Z.; Emu, L.; Wei, P.; Chen, S.; Zhai, Z.; Kong, L.; Wang, Y.; Jiang, H. Active Mechanical Haptics with High-Fidelity Perceptions for Immersive Virtual Reality. Nat. Mach. Intell. 2023, 5, 643–655. [Google Scholar] [CrossRef]

- Rose, F.D.; Brooks, B.M.; Rizzo, A.A. Virtual Reality in Brain Damage Rehabilitation: Review. Cyberpsychology Behav. 2005, 8, 241–262. [Google Scholar] [CrossRef]

- Stenger, B.; Thayananthan, A.; Torr, P.H.S.; Cipolla, R. Model-Based Hand Tracking Using a Hierarchical Bayesian Filter. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1372–1384. [Google Scholar] [CrossRef]

- Yang, K.; Kim, M.; Jung, Y.; Lee, S. Hand Gesture Recognition Using FSK Radar Sensors. Sensors 2024, 24, 349. [Google Scholar] [CrossRef]

- Haji Mohd, M.N.; Mohd Asaari, M.S.; Lay Ping, O.; Rosdi, B.A. Vision-Based Hand Detection and Tracking Using Fusion of Kernelized Correlation Filter and Single-Shot Detection. Appl. Sci. 2023, 13, 7433. [Google Scholar] [CrossRef]

- Abdallah, M.S.; Samaan, G.H.; Wadie, A.R.; Makhmudov, F.; Cho, Y.I. Light-Weight Deep Learning Techniques with Advanced Processing for Real-Time Hand Gesture Recognition. Sensors 2022, 23, 2. [Google Scholar] [CrossRef]

- Chen, S.H.; Hernawan, A.; Lee, Y.S.; Wang, J.C. Hand Gesture Recognition Based on Bayesian Sensing Hidden Markov Models and Bhattacharyya Divergence. In Proceedings of the International Conference on Image Processing, ICIP 2017, Beijing, China, 17–20 September 2017; pp. 3535–3539. [Google Scholar] [CrossRef]

- Caputo, A.; Giachetti, A.; Soso, S.; Pintani, D.; D’Eusanio, A.; Pini, S.; Borghi, G.; Simoni, A.; Vezzani, R.; Cucchiara, R.; et al. SHREC 2021: Skeleton-Based Hand Gesture Recognition in the Wild. Comput. Graph. 2021, 99, 201–211. [Google Scholar] [CrossRef]

- Ali, H.; Jirak, D.; Wermter, S. Snapture—A Novel Neural Architecture for Combined Static and Dynamic Hand Gesture Recognition. Cognit. Comput. 2023, 15, 2014–2033. [Google Scholar]

- Achenbach, P.; Laux, S.; Purdack, D.; Müller, P.N.; Göbel, S. Give Me a Sign: Using Data Gloves for Static Hand-Shape Recognition. Sensors 2023, 23, 9847. [Google Scholar] [CrossRef]

- Narayan, S.; Mazumdar, A.P.; Vipparthi, S.K. SBI-DHGR: Skeleton-Based Intelligent Dynamic Hand Gestures Recognition. Expert Syst. Appl. 2023, 232, 120735. [Google Scholar] [CrossRef]

- Lu, Z.; Qin, S.; Lv, P.; Sun, L.; Tang, B. Real-Time Continuous Detection and Recognition of Dynamic Hand Gestures in Untrimmed Sequences Based on End-to-End Architecture with 3D DenseNet and LSTM. Multimed. Tools Appl. 2024, 83, 16275–16312. [Google Scholar]

- Sarma, D.; Dutta, H.P.J.; Yadav, K.S.; Bhuyan, M.K.; Laskar, R.H. Attention-Based Hand Semantic Segmentation and Gesture Recognition Using Deep Networks. Evol. Syst. 2024, 15, 185–201. [Google Scholar]

- Mahmud, H.; Morshed, M.M.; Hasan, M.K. Quantized Depth Image and Skeleton-Based Multimodal Dynamic Hand Gesture Recognition. Vis. Comput. 2024, 40, 11–25. [Google Scholar]

- Karsh, B.; Laskar, R.H.; Karsh, R.K. MIV3Net: Modified Inception V3 Network for Hand Gesture Recognition. Multimed. Tools Appl. 2024, 83, 10587–10613. [Google Scholar]

- Miah, A.S.M.; Hasan, M.A.M.; Shin, J. Dynamic Hand Gesture Recognition Using Multi-Branch Attention Based Graph and General Deep Learning Model. IEEE Access 2023, 11, 4703–4716. [Google Scholar] [CrossRef]

- Rastgoo, R.; Kiani, K.; Escalera, S.; Sabokrou, M. Multi-Modal Zero-Shot Dynamic Hand Gesture Recognition. Expert Syst. Appl. 2024, 247, 123349. [Google Scholar] [CrossRef]

- Piskozub, J.; Strumillo, P. Reducing the Number of Sensors in the Data Glove for Recognition of Static Hand Gestures. Appl. Sci. 2022, 12, 7388. [Google Scholar] [CrossRef]

- Shanthakumar, V.A.; Peng, C.; Hansberger, J.; Cao, L.; Meacham, S.; Blakely, V. Design and Evaluation of a Hand Gesture Recognition Approach for Real-Time Interactions. Multimed. Tools Appl. 2020, 79, 17707–17730. [Google Scholar]

- Mehatari, B. Hand Gesture Recognition and Classification Using Computer Vision and Deep Learning Techniques. Doctoral Dissertation, National College of Ireland, Dublin, Ireland, 2022. [Google Scholar]

- Han, J.; Shao, L.; Xu, D.; Shotton, J. Enhanced Computer Vision with Microsoft Kinect Sensor: A Review. IEEE Trans. Cybern. 2013, 43, 1318–1334. [Google Scholar] [CrossRef]

- Park, K.; Kim, S.; Yoon, Y.; Kim, T.K.; Lee, G. DeepFisheye: Near-Surface Multi-Finger Tracking Technology Using Fisheye Camera. In Proceedings of the UIST 2020-33rd Annual ACM Symposium on User Interface Software and Technology, Virtual, 20–23 October 2020; pp. 1132–1146. [Google Scholar] [CrossRef]

- Oumaima, D.; Mohamed, L.; Hamid, H.; Mohamed, H. Application of Artificial Intelligence in Virtual Reality. In International Conference on Trends in Sustainable Computing and Machine Intelligence; Springer Nature: Singapore, 2024; pp. 67–85. [Google Scholar] [CrossRef]

- Jin, H.; Chen, Q.; Chen, Z.; Hu, Y.; Zhang, J. Multi-LeapMotion Sensor Based Demonstration for Robotic Refine Tabletop Object Manipulation Task. CAAI Trans. Intell. Technol. 2016, 1, 104–113. [Google Scholar] [CrossRef]

- Borgmann, H. Actuator 16. In Proceedings of the 15th International Conference on New Actuators & 9th International Exhibition on Smart Actuators and Drive Systems, Bremen, Germany, 13–15 June 2016; WFB Wirtschaftsförderung Bremen GmbH: Bremen, Germany, 2016. [Google Scholar]

| Company | Device Name | Tracking | SDK | Range (m) | Weight (g) | Degree of Immersion | Status |
|---|---|---|---|---|---|---|---|
| Microsoft | Kinect | Infrared projector and RGB camera | Yes | 2.5 | 430 | ++ | On sale |
| - | Web camera | Camera | Depends on the algorithm | 1 | 100–400 | + | On sale |
| Leap Motion (San Francisco, CA, USA) | Leap Motion | Two monochromatic IR cameras and three infrared LEDs | Yes | 0.4 | 4+ | + | On sale |
| Mobile phones | Mobile camera | Camera | Depends on the algorithm | 0.6 | Depends on the mobile phone | + | On sale |
| Facebook (Cambridge, MA, USA) | Oculus Touch | Infrared sensor, gyroscope, and accelerometer | Yes | 2.5 | 156 | ++ | On sale |
| HTC (Taoyuan City, Taiwan) | HTC Vive touch | Lighthouse (2 base stations emitting pulsed IR lasers), gyroscope | Yes | 3 | 160 | ++ | On sale |
| Sony (Tokyo, Japan) | PlayStation VR bundle | Two-pixel cameras with lenses | No | 2.1 | 120 | ++ | On sale |

| Author/Company | Device Name | Type | Tracking | DOF (Degrees of Freedom) | SDK | Range (m) | Weight (g) |
|---|---|---|---|---|---|---|---|
| CyberGlove Systems (San Jose, CA, USA) | CyberGrasp | Exo | Accelerometers | 11 | No | 1 | 450 |
| Neurodigital Technologies (Almería, Spain) | Glove one | Glove | IMUs (accelerometers and gyroscope) | 9 | Yes | 1 | 100 |
| Manus VR (Geldrop, The Netherlands) | MANUS VR | Glove | IMUs (accelerometers and gyroscope) | 11 | Yes | 2 | 100 |
| Dexta Robotics (Shenzhen, China) | DEXMO | Exo | IMUs (accelerometers and gyroscope) | 11 | Yes | 2 | 190 |
| Sensable Robotics (Berkeley, CA, USA) | PHANToM | Robotic arm | Encoders | 2–6 | Yes | - | 1786 |
| Mourad Bouzit, George Popescu, Grigore Burdea, and Rares Boian, 2002 | Rutgers Master II ND | Glove | Flex and Hall effect sensors | 5 | No | 2 | 80 |
| Robot Hand Unit for Research | HIRO III | Robotic arm and glove | Encoder | 15 for the hand, 6 for the arm | No | 0.09 m³ | 780 |
| Vivoxie (Ciudad de México, Mexico) | Power Claw | Glove | Monochromatic IR cameras and infrared LEDs (Leap Motion) | 12 | Yes | 650 mm³ | 120 |

| Technique | Accuracy (%) | Researchers | Advantage | Hardware |
|---|---|---|---|---|
| Bhattacharyya divergence into Bayesian sensing hidden Markov models | 82.08–96.69 | Sih-Huei Chen et al., 2017. Kumar, 2017 [56] | Ability to deal with probabilistic features | Kinect |
| SHREC 2021 | 95–98 | Caputo et al., 2021 [57] | Recognition of 18 gesture classes | Camera |
| Artificial Neural Network with data fusion | 98 | Ali et al., 2023 [58] | The ANN achieved increased recognition accuracy | Kinect |
| Manus Prime X data gloves, data acquisition, data preprocessing, and data classification to enable nonverbal communication within VR | 95 | Achenbach et al., 2023 [59] | Data acquisition, data preprocessing, and data classification to enable nonverbal communication within VR | Meta-classifier (VL2) |
| The multi-scale CNN employs LSTM to extract and optimize temporal and spatial features. A computationally efficient SBI-DHGR approach | 90–97 | Narayan et al., 2023 [60] | Does not require high processing power | The algorithm can be extended to recognize a broader set of gestures |

| Technique | Accuracy (%) | Researchers | Advantage | Hardware |
|---|---|---|---|---|
| Deep learning and CNN | 90 | Jing Qi et al. (2023) [37] | Using a deep learning framework to obtain initial parameters for the optimization algorithm is effective: it allows complex gestures and very fast finger movements to be tracked correctly | Web Camera |
| LDA, SVM, ANN | 98.33 | Qi, J. et al., 2018 [38] | The ANN achieved the lowest error rate and outperformed LDA and SVM in all tests | IMUs in data glove |
| Use of a well-designed end-to-end architecture based on 3D DenseNet and LSTM variants | 92–99 | Lu et al. (2023) [61] | Use of a well-designed end-to-end architecture based on 3D DenseNet and LSTM variants | Web Camera |
| Use of deep neural models to achieve better results for both static and dynamic gestures | 95 | Sarma et al. (2023) [62] | Improves segmentation accuracy | Video |
| Deep learning | 92 | Mahmud et al. (2023) [63] | CRNN (convolutional recurrent neural networks) | Web Camera |
| Deep learning | 99 | Karsh et al. (2023) [64] | A two-phase deep learning-based HGR system reduces computational resource requirements | Web Camera |

| Technique | Accuracy (%) | Researchers | Advantage/Main Feature | Hardware |
|---|---|---|---|---|
| Filters: Gaussian blur, CLAHE, HSV, binary mask, dilation, and erosion | 87.89 | Maisto, M. et al., 2017 [34] | It can be used in VR and requires little information about the captured image | Camera and Leap Motion |
| Euclidean distance | 84 | Culbertson, H. et al., 2018 [30] | No training algorithm is needed | Leap Motion and Data Glove |
| Deep learning | 99 | Miah et al. (2023) [65] | Used two graph-based neural network channels in the multi-branch architectures and one general neural network channel; high-performance accuracy and low computational cost | Web Camera |
| Deep learning | 97 | Rastgoo et al. (2023) [66] | Zero-shot Dynamic Hand Gesture Recognition (DHGR) from multimodal data and hybrid features | Web Camera |
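The Euclidean-distance row in the table above notes that no training algorithm is needed. A minimal sketch of why: a captured feature vector is simply compared against one stored reference per gesture, and the nearest reference wins. The feature encoding (five normalized finger-extension values), the template values, the gesture labels, and the optional `max_distance` rejection threshold are all assumptions made for illustration; they are not taken from the cited work.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(sample, templates, max_distance=None):
    """Return (label, distance) of the nearest template.

    If `max_distance` is given and the best match is farther than that,
    the label is None (gesture rejected as unknown).
    """
    best_label, best_dist = None, math.inf
    for label, reference in templates.items():
        dist = euclidean(sample, reference)
        if dist < best_dist:
            best_label, best_dist = label, dist
    if max_distance is not None and best_dist > max_distance:
        return None, best_dist
    return best_label, best_dist


# Hypothetical templates: extension of thumb, index, middle, ring, little
# finger, each scaled to 0 (fully flexed) .. 1 (fully extended).
TEMPLATES = {
    "open_hand": [1.0, 1.0, 1.0, 1.0, 1.0],
    "fist": [0.0, 0.0, 0.0, 0.0, 0.0],
    "point": [0.0, 1.0, 0.0, 0.0, 0.0],
}

if __name__ == "__main__":
    reading = [0.1, 0.9, 0.1, 0.0, 0.1]  # noisy "point" posture
    print(classify(reading, TEMPLATES))
```

Adding a gesture only requires adding one more template, at the cost of sensitivity to sensor noise and hand-size differences, which is consistent with the lower accuracy the table reports for this approach relative to the deep learning methods.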

| Technique | Description | Advantage | Disadvantage |
|---|---|---|---|
| Kinect | Infrared projector and RGB camera | Ease of algorithm implementation, open source | Discontinued in 2017; must be connected to a PC |
| Conventional camera | 60 fps camera | Low cost and universal PC driver | Must be connected to a PC |
| GoPro | 4K resolution up to 256 fps | High image quality, greater image capture range | High cost, slow transfer of information to a PC |
| Mobile phone camera | Device embedded in all smartphones | Easy access to algorithm implementation, low cost, and high transfer speed | Limited by the mobile phone processor |

| Item | Specification |
|---|---|
| Screen | 5.1-inch QHD Super AMOLED |
| Resolution | 2560 × 1440 pixels |
| Pixel density | 577 ppi |
| Operating system | Android |
| Processor | 2.1 GHz Samsung Exynos (eight cores) |
| Camera | 16 megapixels |
| RAM | 3 GB |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Herbert, O.M.; Pérez-Granados, D.; Ruiz, M.A.O.; Cadena Martínez, R.; Gutiérrez, C.A.G.; Antuñano, M.A.Z. Static and Dynamic Hand Gestures: A Review of Techniques of Virtual Reality Manipulation. Sensors 2024, 24, 3760. https://doi.org/10.3390/s24123760
