A Novel Dynamic Light-Section 3D Reconstruction Method for Wide-Range Sensing

Abstract

1. Introduction

- (1) A novel dynamic light-section 3D reconstruction system is designed based on multiple galvanometers. To the best of our knowledge, this system is the first to synchronize laser scanning with the FOV switching of the camera, enabling high-precision and wide-range 3D reconstruction simultaneously.

- (2) A novel high-precision and flexible calibration method for the dynamic 3D system is proposed by constructing an error model and an objective function based on the combined model of the dynamic camera and the dynamic laser. This method applies not only to the proposed system but also to other dynamic laser or camera systems based on a single galvanometer.

- (3) Experiments were conducted to validate the proposed dynamic 3D reconstruction method and demonstrate its accuracy. To the best of our knowledge, compared to all existing galvanometer-based laser-scanning methods, our approach achieves the largest measurement range while maintaining the same level of measurement accuracy.
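As background for the light-section principle named in the contributions, the following is a minimal sketch of generic laser triangulation: a 3D point is recovered by intersecting the camera ray through a laser pixel with the laser plane. It assumes an ideal pinhole camera with no mirrors, and every name and number (`pixel_ray`, `intersect_ray_plane`, the intrinsics, the plane) is hypothetical. It is not the geometric model or calibration used by the authors.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(p * q for p, q in zip(a, b))

def pixel_ray(fx, fy, cx, cy, u, v):
    """Unit direction of the pinhole-camera ray through pixel (u, v)."""
    x, y, z = (u - cx) / fx, (v - cy) / fy, 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)

def intersect_ray_plane(origin, direction, normal, offset):
    """Point where origin + t * direction meets the plane normal . X = offset."""
    denom = dot(normal, direction)
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the laser plane")
    t = (offset - dot(normal, origin)) / denom
    return tuple(o + t * d for o, d in zip(origin, direction))

# Hypothetical intrinsics (pixels) and laser plane (mm), chosen only for illustration.
fx, fy, cx, cy = 1000.0, 1000.0, 320.0, 240.0
normal = (1.0 / math.sqrt(2.0), 0.0, 1.0 / math.sqrt(2.0))
offset = 500.0 / math.sqrt(2.0)  # the plane passes through (0, 0, 500)

ray = pixel_ray(fx, fy, cx, cy, 320.0, 240.0)  # ray through the principal point
point = intersect_ray_plane((0.0, 0.0, 0.0), ray, normal, offset)
print(point)
```

In a galvanometer-based system, both the camera ray and the laser plane would additionally depend on the mirror angles, which is what the calibration sections address.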

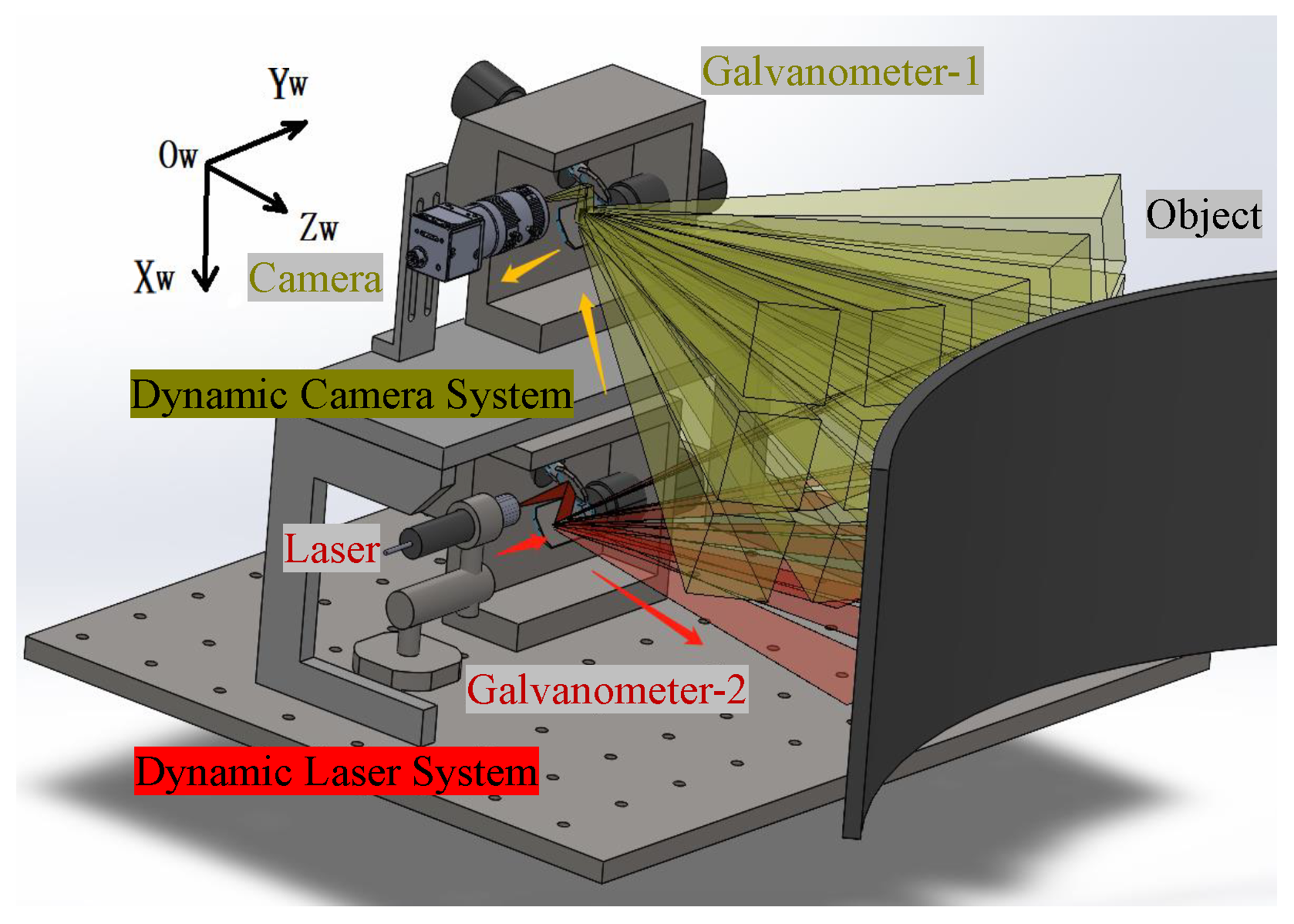

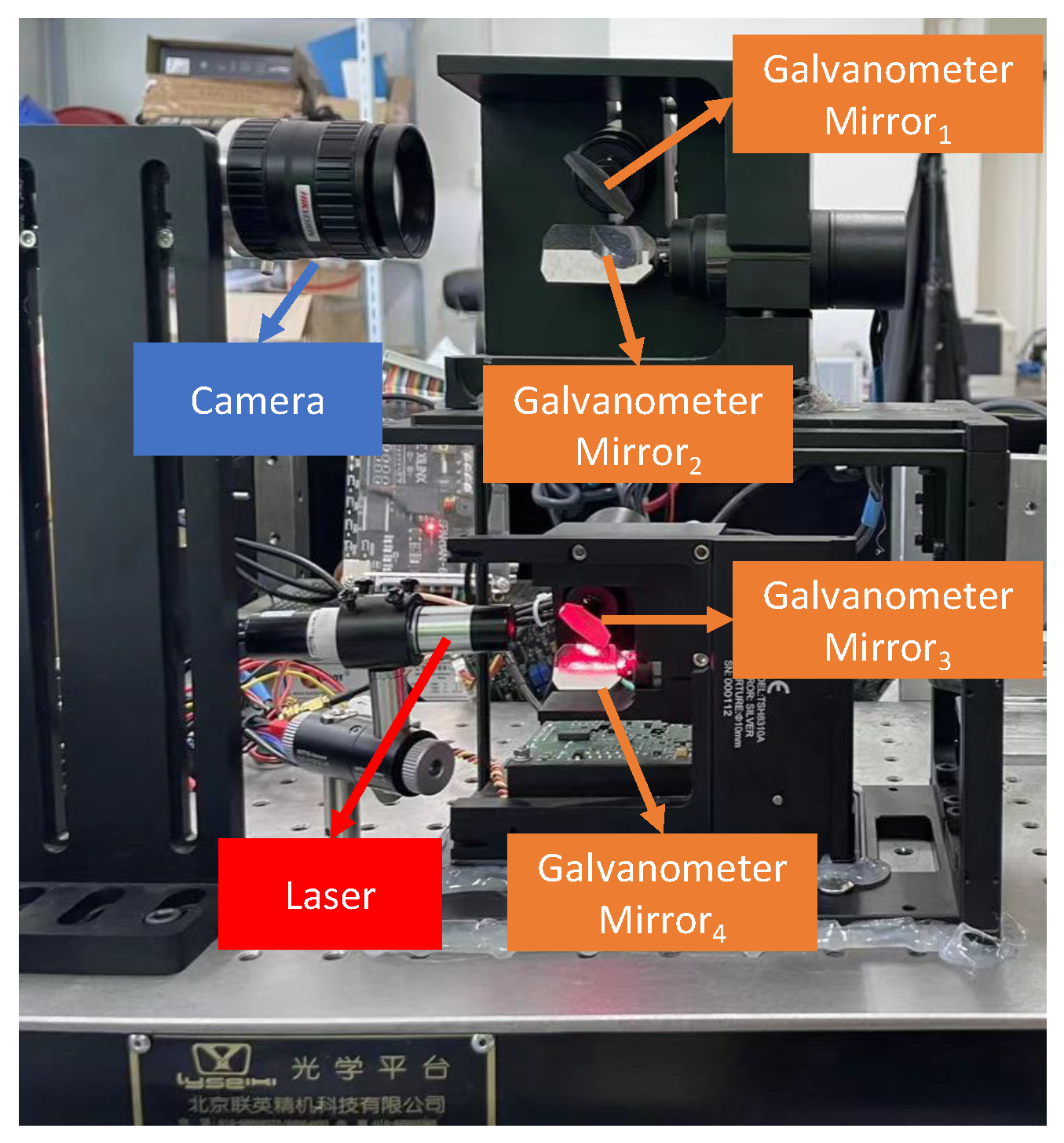

2. System Design and Geometric Model

2.1. System Design

2.2. Geometric Model

3. Calibration Method

3.1. Dynamic Camera Calibration

3.2. Dynamic Laser Calibration

3.3. Joint Calibration for Error Correction

Algorithm 1: Joint Calibration for Error Correction

4. Experiment

4.1. Calibration Accuracy Verification

4.1.1. Dynamic Camera Calibration Accuracy

4.1.2. Dynamic Laser Calibration Accuracy

4.1.3. Joint Calibration Accuracy

4.2. 3D Reconstruction Accuracy Verification

4.2.1. Standard Blocks Reconstruction Test

4.2.2. Accuracy and Reconstruction Range Comparison with Existing Methods

4.2.3. Large Object Scanning Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, J.; Lian, K.; Deng, Y.; Jiang, P.; Zhang, C. Multi-Objective Parameter Optimization of Fiber Laser Welding Considering Energy Consumption and Bead Geometry. IEEE Trans. Autom. Sci. Eng. 2022, 19, 3561–3574.

- Du, X.; Chen, Q. Dual-Laser Goniometer: A Flexible Optical Angular Sensor for Joint Angle Measurement. IEEE Trans. Ind. Electron. 2021, 68, 6328–6338.

- Deng, B.; Wu, W.; Li, X.; Wang, H.; He, Y.; Shen, G.; Tang, Y.; Zhou, K.; Zhang, Z.; Wang, Y. Active 3-D Thermography Based on Feature-Free Registration of Thermogram Sequence and 3-D Shape Via a Single Thermal Camera. IEEE Trans. Ind. Electron. 2022, 69, 11774–11784.

- Gazafrudi, S.M.M.; Younesian, D.; Torabi, M. A High Accuracy and High Speed Imaging and Measurement System for Rail Corrugation Inspection. IEEE Trans. Ind. Electron. 2021, 68, 8894–8903.

- Zhao, Y.j.; Xiong, Y.x.; Wang, Y. Three-dimensional accuracy of facial scan for facial deformities in clinics: A new evaluation method for facial scanner accuracy. PLoS ONE 2017, 12, e0169402.

- Wei, C.; Sihai, C.; Dong, L.; Guohua, J. A compact two-dimensional laser scanner based on piezoelectric actuators. Rev. Sci. Instrum. 2015, 86, 013102.

- Czimmermann, T.; Chiurazzi, M.; Milazzo, M.; Roccella, S.; Barbieri, M.; Dario, P.; Oddo, C.M.; Ciuti, G. An Autonomous Robotic Platform for Manipulation and Inspection of Metallic Surfaces in Industry 4.0. IEEE Trans. Autom. Sci. Eng. 2022, 19, 1691–1706.

- Xu, X.; Fei, Z.; Yang, J.; Tan, Z.; Luo, M. Line structured light calibration method and centerline extraction: A review. Results Phys. 2020, 19, 103637.

- Yin, S.; Ren, Y.; Guo, Y.; Zhu, J.; Yang, S.; Ye, S. Development and calibration of an integrated 3D scanning system for high-accuracy large-scale metrology. Measurement 2014, 54, 65–76.

- Xiao, J.; Hu, X.; Lu, W.; Ma, J.; Guo, X. A new three-dimensional laser scanner design and its performance analysis. Optik 2015, 126, 701–707.

- Jiang, T.; Cui, H.; Cheng, X. Accurate Calibration for Large-Scale Tracking-Based Visual Measurement System. IEEE Trans. Instrum. Meas. 2021, 70, 5003011.

- Wang, T.; Yang, S.; Li, S.; Yuan, Y.; Hu, P.; Liu, T.; Jia, S. Error Analysis and Compensation of Galvanometer Laser Scanning Measurement System. Acta Opt. Sin. 2020, 40, 1–13.

- Eisert, P.; Polthier, K.; Hornegger, J. A mathematical model and calibration procedure for galvanometric laser scanning systems. In Proceedings of the Vision, Modeling, and Visualization, Berlin, Germany, 4–6 October 2011; Volume 591, pp. 207–214.

- Yu, C.; Chen, X.; Xi, J. Modeling and calibration of a novel one-mirror galvanometric laser scanner. Sensors 2017, 17, 164.

- Yang, S.; Yang, L.; Zhang, G.; Wang, T.; Yang, X. Modeling and calibration of the galvanometric laser scanning three-dimensional measurement system. Nanomanuf. Metrol. 2018, 1, 180–192.

- Ying, X.; Peng, K.; Hou, Y.; Guan, S.; Kong, J.; Zha, H. Self-Calibration of Catadioptric Camera with Two Planar Mirrors from Silhouettes. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1206–1220.

- Wu, Z.; Radke, R.J. Keeping a Pan-Tilt-Zoom Camera Calibrated. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1994–2007.

- Schmidt, A.; Sun, L.; Aragon-Camarasa, G.; Siebert, J.P. The Calibration of the Pan-Tilt Units for the Active Stereo Head. In Proceedings of the Vision, Modeling, and Visualization, Bayreuth, Germany, 10–12 October 2016; Volume 389, pp. 213–221.

- Kumar, S.; Micheloni, C.; Piciarelli, C. Stereo localization using dual ptz cameras. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Münster, Germany, 2–4 September 2009; Volume 5702, pp. 1061–1069.

- Junejo, I.N.; Foroosh, H. Optimizing PTZ camera calibration from two images. Mach. Vis. Appl. 2012, 23, 375–389.

- Kumar, S.; Micheloni, C.; Piciarelli, C.; Foresti, G.L. Stereo rectification of uncalibrated and heterogeneous images. Pattern Recognit. Lett. 2010, 31, 1445–1452.

- Han, Z.; Zhang, L. Modeling and Calibration of a Galvanometer-Camera Imaging System. IEEE Trans. Instrum. Meas. 2022, 71, 5016809.

- De Boi, I.; Sels, S.; Penne, R. Semidata-Driven Calibration of Galvanometric Setups Using Gaussian Processes. IEEE Trans. Instrum. Meas. 2022, 71, 2503408.

- Boi, I.D.; Sels, S.; De Moor, O.; Vanlanduit, S.; Penne, R. Input and Output Manifold Constrained Gaussian Process Regression for Galvanometric Setup Calibration. IEEE Trans. Instrum. Meas. 2022, 71, 2509408.

- Hu, S.; Matsumoto, Y.; Takaki, T.; Ishii, I. Monocular stereo measurement using high-speed catadioptric tracking. Sensors 2017, 17, 1839.

- Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 1, pp. 666–673.

- Yi, S.; Min, S. A Practical Calibration Method for Stripe Laser Imaging System. IEEE Trans. Instrum. Meas. 2021, 70, 5003307.

- Li, Z.; Ma, L.; Long, X.; Chen, Y.; Deng, H.; Yan, F.; Gu, Q. Hardware-Oriented Algorithm for High-Speed Laser Centerline Extraction Based on Hessian Matrix. IEEE Trans. Instrum. Meas. 2021, 70, 5010514.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Proceedings of the Sensor Fusion IV: Control Paradigms and Data Structures, SPIE, Boston, MA, USA, 14–15 November 1992; Volume 1611, pp. 586–606.

- Li, X.; Liu, B.; Mei, X.; Wang, W.; Wang, X.; Li, X. Development of an in-situ laser machining system using a three-dimensional galvanometer scanner. Engineering 2020, 6, 68–76.

- He, K.; Sui, C.; Huang, T.; Zhang, Y.; Zhou, W.; Chen, X.; Liu, Y.H. 3D surface reconstruction of transparent objects using laser scanning with a four-layers refinement process. Opt. Express 2022, 30, 8571–8591.

- Zexiao, X.; Jianguo, W.; Ming, J. Study on a full field of view laser scanning system. Int. J. Mach. Tools Manuf. 2007, 47, 33–43.

- Chi, S.; Xie, Z.; Chen, W. A laser line auto-scanning system for underwater 3D reconstruction. Sensors 2016, 16, 1534.

| Error | Source | Analysis |
|---|---|---|
| 1 | Rotation angle of galvanometer mirror | Non-linear deviations exist between the voltage and the rotation angle of Galvanometer-1. |
| 2 | Dynamic camera geometric model | The specular reflection geometric model deviates from the actual mechanical structure. |
| 3 | Calibration of parameters | This error is optimized by the error model and objective function proposed in this paper. |
| 4 | Camera intrinsic parameters calibration | This non-linear error is optimized using Zhang’s [26] calibration method. |
| 5 | Rotation angle of galvanometer mirror | Non-linear deviations exist between the voltage and the rotation angle of Galvanometer-2. |
| 6 | Dynamic laser geometric model | This error depends on the accuracy of the laser’s mechanical installation. |
| 7 | Calibration of laser rotation axis | This error is optimized by the proposed objective function. |
| 8 | Laser center curve extraction | The center extraction algorithm is based on the Hessian matrix and ensures high extraction accuracy. |
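Error source 2 in the table above refers to a specular reflection geometric model for the dynamic camera. As a rough illustration of what such a model involves, the sketch below reflects a camera center and a viewing direction across an ideal planar mirror. The function names and the example mirror are hypothetical, and the ideal-mirror assumption is exactly what the table says deviates from the real mechanical structure, so this should not be read as the authors' model.

```python
def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(p * q for p, q in zip(a, b))

def reflect_point(point, normal, offset):
    """Mirror image of a point across the plane normal . X = offset (unit normal)."""
    signed_distance = dot(normal, point) - offset
    return tuple(p - 2.0 * signed_distance * n for p, n in zip(point, normal))

def reflect_direction(direction, normal):
    """Direction of a ray after specular reflection off a mirror with unit normal."""
    scale = 2.0 * dot(normal, direction)
    return tuple(d - scale * n for d, n in zip(direction, normal))

# Hypothetical mirror: the plane z = 1 with unit normal along +z.
mirror_normal = (0.0, 0.0, 1.0)
mirror_offset = 1.0

# The real camera center at the origin maps to a virtual camera behind the mirror.
virtual_center = reflect_point((0.0, 0.0, 0.0), mirror_normal, mirror_offset)
# A ray travelling along +z is turned back along -z.
reflected_dir = reflect_direction((0.0, 0.0, 1.0), mirror_normal)
print(virtual_center, reflected_dir)
```

When the mirror rotates, its normal and offset change with the rotation angle, so the virtual camera pose becomes a function of the galvanometer angle.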

| Working Distance/mm | Traditional Method (Name, Year) | Traditional Accuracy/mm | Traditional Range/mm | Proposed Accuracy/mm | Proposed Range/mm | Factor of Expanded Range |
|---|---|---|---|---|---|---|
| 100 | NOM-LSS 2017 [16] | 0.01 | 10 × 10 | 0.01 | 130 × 200 | 260 |
| 250 | 3DM-LS 2018 [17] | 0.1 | 80 × 80 | | | |
| 250 | EAC-LSS 2020 [14] | 0.061 | 80 × 80 | 0.057 | 350 × 500 | 27.3 |
| 350 | IS-LSS 2020 [30] | 0.08 | 200 × 200 | 0.08 | 500 × 700 | 8.75 |
| 400 | FFV-LSS 2007 [32] | 0.222 | 150 × 200 | | | |
| 400 | FLR-LSS 2022 [31] | 0.1 | 150 × 200 | 0.092 | 650 × 800 | 17.3 |
| 1000 | U3D-LSS 2016 [33] | 0.382 | 200 × 300 | 0.314 | 1600 × 2000 | 53.3 |
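The "Factor of Expanded Range" values are consistent with the ratio of the proposed scanning area to the traditional scanning area at the same working distance. The sketch below recomputes them under that assumption, using only the rows that report a factor; the function name `expansion_factor` and the row layout are illustrative choices.

```python
# Rows transcribed from the comparison table:
# (method, traditional range in mm, proposed range in mm, reported factor).
ROWS = [
    ("NOM-LSS 2017", (10, 10), (130, 200), 260),
    ("EAC-LSS 2020", (80, 80), (350, 500), 27.3),
    ("IS-LSS 2020", (200, 200), (500, 700), 8.75),
    ("FLR-LSS 2022", (150, 200), (650, 800), 17.3),
    ("U3D-LSS 2016", (200, 300), (1600, 2000), 53.3),
]

def expansion_factor(traditional_mm, proposed_mm):
    """Ratio of the proposed scanning area to the traditional scanning area."""
    trad_w, trad_h = traditional_mm
    prop_w, prop_h = proposed_mm
    return (prop_w * prop_h) / (trad_w * trad_h)

for name, traditional, proposed, reported in ROWS:
    factor = expansion_factor(traditional, proposed)
    print(f"{name}: computed {factor:.2f}, reported {reported}")
```

For example, at a working distance of 100 mm the ratio is (130 × 200) / (10 × 10) = 260, which matches the first row.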


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, M.; Li, Q.; Shimasaki, K.; Hu, S.; Gu, Q.; Ishii, I. A Novel Dynamic Light-Section 3D Reconstruction Method for Wide-Range Sensing. Sensors 2024, 24, 3793. https://doi.org/10.3390/s24123793