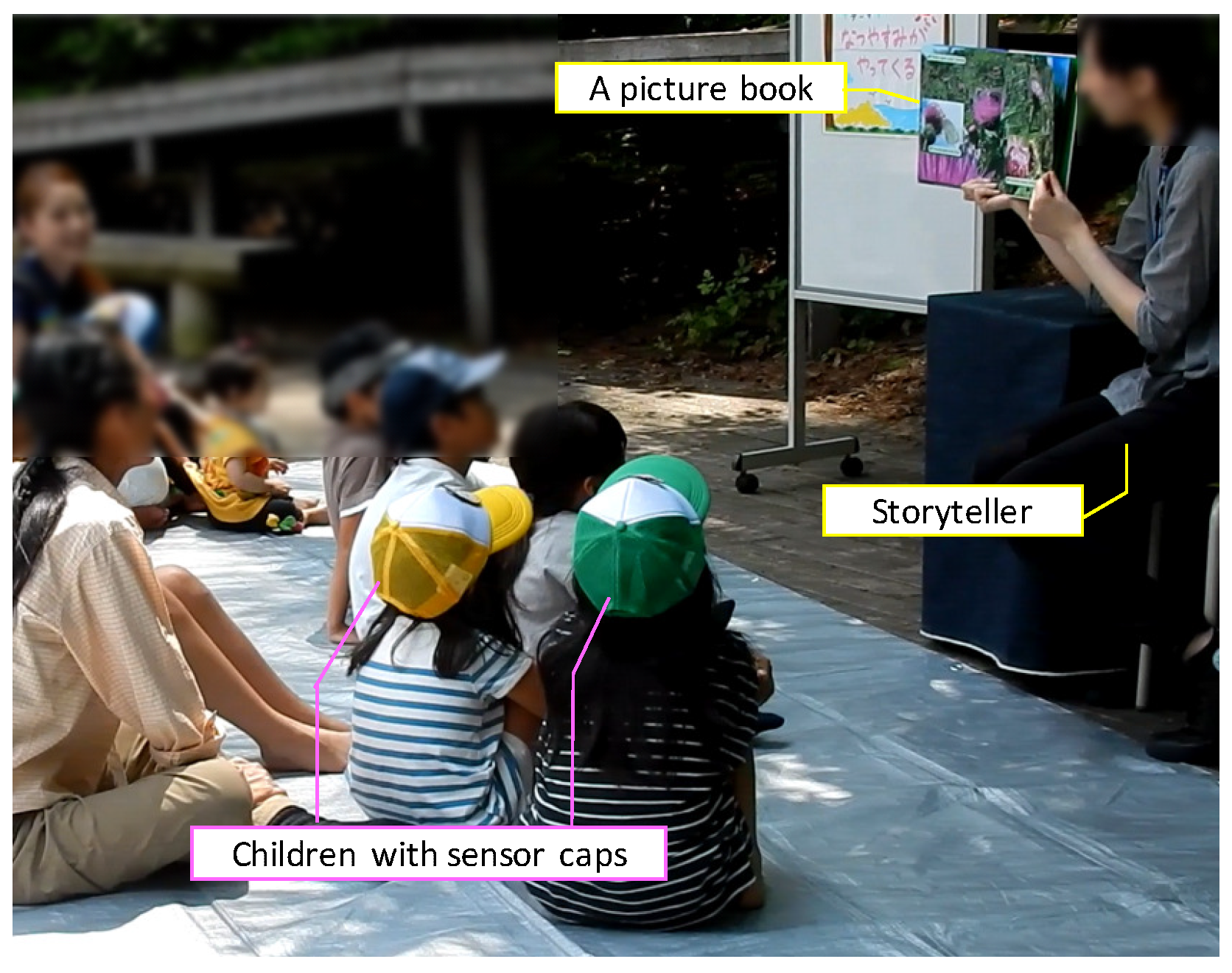

A Curiosity Estimation in Storytelling with Picture Books for Children Using Wearable Sensors

Abstract

1. Introduction

- This paper proposes a method for estimating the level of curiosity from a motion sensor attached to the head, in order to evaluate improvisational behavior. Four evaluation experiments in actual workshops confirmed the accuracy of the curiosity estimation.

- This paper proposes a method for investigating the relationship between curiosity and behavior. Using the proposed method, we investigated and summarized the behaviors that can serve as evaluation indicators during reading aloud. In addition, we calculated the accuracy of motion recognition and summarized which of these behaviors a motion sensor can recognize with high accuracy.

2. Related Research

2.1. Research on Motion Recognition during Conversation

2.2. Research on Evaluation Methods of Children’s Learning Activities

2.3. Research on Automatic Estimations of Human Intentions

3. Proposed Method

Method for Estimating the Degree of Curiosity

- (a) We define a five-level evaluation index to quantify the level of curiosity.

- (b) Two observers manually annotate each child’s level of curiosity from the recorded video; one of the observers also notes the child’s behavior. Annotations are performed sequentially, one child at a time.

- (c) For each action, we calculate the accuracy of curiosity level estimation by assessing the correspondence between the action performed by the participant and the indicator in the parts of the activity that the observer considered to be of high curiosity.

- (d) We calculate the recognition accuracy of the behaviors observed during the narrative activity (defined as M) and estimate the curiosity level from those behaviors using the following formula:

4. Evaluation Experiment

5. Results

5.1. Curiosity Estimation

5.2. Summary on the Relationship between Motions and Curiosity

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Curiosity level | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| | Look of curiosity | Listening, seems curious | Undecided | Does not seem to be listening | Obviously not interested |

| The Number of Subjects | Day 1, 1st Time | Day 1, 2nd Time | Day 2, 1st Time | Day 2, 2nd Time |
|---|---|---|---|---|
| All subjects [persons] | 5 | 1 | 5 | 3 |
| Valid subjects 1 [persons] | 0 | 1 | 5 | 3 |

Observed or not, and curiosity level of each motion ("-" marks a motion that was not observed for that subject)

| Observed Motion | Sub. 0 | Sub. 1 | Sub. 2 | Sub. 3 | Sub. 4 | Sub. 5 | Sub. 6 | Sub. 7 | Sub. 8 |
|---|---|---|---|---|---|---|---|---|---|
| Looking around | 1 | 1 | 2 | 1 | 5 | 5 | 1 | 1 | 5 |
| Looking down | 3 | 1 | 1 | 1 | 3 or 2 | 2 | 1 | 2 or 1 | 2 |
| Sitting state | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 |
| Clapping | 3 | 1 or 2 | 3 | - | 3 | 3 | 4 | 4 | 4 |
| Playing with hands | 5 | 5 | 4 or 5 | 4 | 5 | 5 | - | 5 | 5 |
| Wriggling | 3 | 1 | 4 | 4 | 3 | 4 | - | 4 | 4 |
| Sitting again | 4 | - | 4 or 2 | 4 or 3 | - | 3 or 5 | - | 4 | 4 |
| Talking | - | 3 or 1 | 5 | 3 or 2 | 5 | 5 | - | - | 5 |
| Looking other person | - | 1 | - | - | 1 | - | 1 | 5 | 1 |

| Observed Motion | Curiosity levels of the subjects who showed the motion |
|---|---|
| Finger pointing | 4; 5; 4 or 2 |
| Looking back | 2; 1; 1 |
| Laugh | 5; 5 |
| Lying down | 1; 2 |
| Nodding | 4; 5 |
| Playing with hands and talking | 5; 5 |
| Sitting state and laugh | 5; 5 |
| Stretching | 4 or 5; 4 |
| Touch a face | 2; 4 |
| Attention to insects | 1 |
| Clapping and looking around | 1 |
| Close ears | 4 or 2 |
| Close ears and looking down | 1 |
| Close ears and looking side | 1 or 2 |
| Close eyes and ears | 2 |
| Driving away insects | 1 |
| Looking around and pointing | 5 |
| Looking forward | 1 or 5 |
| Looking side | 2 or 4 |
| Mother’s movement | 4 |
| Moving location | 1 |
| Origami | 1 |
| Playing with hands and looking around | 5 |
| Scratch a head | 4 |
| Scratch arms | 4 |
| Sneezing | 3 |
| Stand up | 1 |
| Stand up and laugh | 4 |
| Talking and Laugh | 5 |
| To tilt a head | 3 or 4 |
| Touching a cap | 2 |
| Touching own arm | 1 |
| Walking | 1 |

| Subject No. | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Ave. |
|---|---|---|---|---|---|---|---|---|---|---|
| C4.5 | 0.77 | 0.72 | 0.84 | 0.78 | 0.84 | 0.72 | 0.91 | 0.83 | 0.87 | 0.81 |
| Random Forest | 0.88 | 0.89 | 0.95 | 0.92 | 0.93 | 0.93 | 0.95 | 0.92 | 0.93 | 0.92 |
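The "Ave." column of the motion recognition table can be checked directly from the per-subject values. The following is a minimal sketch, assuming the average is an unweighted mean over the nine subjects; the variable names are illustrative and not taken from the paper.

```python
from statistics import mean

# Per-subject motion recognition accuracy, copied from the table above
# (subjects 0 to 8, in order).
accuracy = {
    "C4.5": [0.77, 0.72, 0.84, 0.78, 0.84, 0.72, 0.91, 0.83, 0.87],
    "Random Forest": [0.88, 0.89, 0.95, 0.92, 0.93, 0.93, 0.95, 0.92, 0.93],
}

# Assumption: "Ave." is the plain (unweighted) mean across subjects.
averages = {name: round(mean(values), 2) for name, values in accuracy.items()}

# True when Random Forest scores higher than C4.5 for every subject.
rf_better_everywhere = all(
    rf > c45 for rf, c45 in zip(accuracy["Random Forest"], accuracy["C4.5"])
)

print(averages)
print(rf_better_everywhere)
```

Under that assumption the computed means round to the tabulated 0.81 and 0.92, and Random Forest is the more accurate classifier for each of the nine subjects.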

| Subject No. | Cohen’s Weighted Kappa Statistics |
|---|---|
| 0 | 0.95 |
| 1 | 0.96 |
| 2 | 0.95 |
| 3 | 0.95 |
| 4 | 0.99 |
| 5 | 0.92 |
| 6 | 0.99 |
| 7 | 0.95 |
| 8 | 0.97 |
| Ave. | 0.96 |
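The agreement between the two observers' five-level annotations is reported as Cohen's weighted kappa. The sketch below shows how such a value can be computed for one subject. It assumes linear disagreement weights, because the text here does not state which weighting was used, and the function name and sample annotations are hypothetical.

```python
from collections import Counter


def weighted_kappa(rater_a, rater_b, levels=(1, 2, 3, 4, 5)):
    """Cohen's weighted kappa for two raters on an ordinal scale.

    Assumption: linear disagreement weights |i - j| / (max - min).
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters need the same, non-zero number of labels")

    n = len(rater_a)
    span = max(levels) - min(levels)
    joint = Counter(zip(rater_a, rater_b))  # how often the pair (i, j) occurred
    marg_a = Counter(rater_a)               # label counts for rater A
    marg_b = Counter(rater_b)               # label counts for rater B

    observed = 0.0  # weighted disagreement actually observed
    expected = 0.0  # weighted disagreement expected by chance
    for i in levels:
        for j in levels:
            weight = abs(i - j) / span
            observed += weight * joint[(i, j)] / n
            expected += weight * (marg_a[i] / n) * (marg_b[j] / n)

    if expected == 0.0:
        # Both raters used one and the same level throughout.
        return 1.0
    return 1.0 - observed / expected


# Hypothetical annotations of one child by the two observers.
observer_1 = [5, 4, 4, 3, 1, 2, 5, 4]
observer_2 = [5, 4, 3, 3, 1, 2, 5, 5]
print(weighted_kappa(observer_1, observer_2))
```

With weighted kappa, a disagreement between adjacent levels (for example 4 versus 5) is penalized less than one between distant levels (1 versus 5), which suits an ordinal curiosity scale.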

| Observed Motion | Ave. of Curiosity Level | Var. of Curiosity Level | Number of People | Motion Recognition Rate | Ideal Curiosity Estimation Rate | Ave. of Actual Curiosity Estimation Rate |
|---|---|---|---|---|---|---|
| Looking back | 1.3 | 0.22 | 3 | 0.81 | 0.95 | 0.87 |
| Lying down | 1.5 | 0.25 | 2 | 0.85 | 0.69 | 0.84 |
| Looking down | 1.6 | 0.53 | 9 | 0.92 | 0.92 | 0.71 |
| Looking other person | 1.8 | 2.56 | 5 | 0.90 | 0.96 | 0.64 |
| Looking around | 2.4 | 3.36 | 9 | 0.72 | 0.90 | 0.58 |
| Touching face | 3.0 | 1.00 | 2 | 0.96 | 0.88 | 0.89 |
| Wriggling | 3.4 | 0.98 | 8 | 0.69 | 0.96 | 0.66 |
| Clapping | 3.4 | 0.24 | 8 | 0.93 | 0.71 | 0.70 |
| Sitting again | 4.0 | 0.00 | 6 | 0.87 | 0.96 | 0.68 |
| Sitting state | 4.0 | 0.10 | 9 | 0.91 | 0.92 | 0.85 |
| Nodding | 4.5 | 0.25 | 2 | 0.80 | 0.84 | 0.44 |
| Finger pointing | 4.5 | 0.25 | 3 | 0.94 | 0.90 | 0.60 |
| Playing with hands | 4.9 | 0.12 | 8 | 0.88 | 0.99 | 0.79 |
| Laughing | 5.0 | 0.00 | 2 | 0.71 | 0.99 | 0.77 |
| Playing with hands and talking | 5.0 | 0.00 | 2 | 0.83 | 0.73 | 0.70 |
| Sitting state and laughing | 5.0 | 0.00 | 2 | 0.88 | 0.99 | 0.87 |
| Talking | 5.0 | 0.05 | 6 | 0.93 | 0.85 | 0.63 |
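The average, variance, and number-of-people columns of the summary table can be related back to the per-subject curiosity levels in the observation table. The sketch below does this for three motions whose entries are all single values. It assumes the population variance (division by the number of subjects); for these three rows that assumption reproduces the tabulated values, but the text does not state the convention. Rows containing entries such as "4 or 2" are left out because the text does not say how they were resolved.

```python
from statistics import mean, pvariance

# Curiosity levels of the subjects who showed each motion,
# copied from the observation table (unambiguous rows only).
observed_levels = {
    "Looking back": [2, 1, 1],
    "Lying down": [1, 2],
    "Nodding": [4, 5],
}


def summarize(levels):
    """Return the summary-table statistics for one motion."""
    return {
        "people": len(levels),
        "ave": round(mean(levels), 1),
        # Assumption: population variance, not sample variance.
        "var": round(pvariance(levels), 2),
    }


summary = {motion: summarize(levels) for motion, levels in observed_levels.items()}
for motion, stats in summary.items():
    print(motion, stats)
```

A low variance indicates that the motion maps to a similar curiosity level across children, while a high variance (as for looking around in the summary table) indicates that the same motion can accompany very different levels of curiosity.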


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ohnishi, A.; Kosaka, S.; Hama, Y.; Saito, K.; Terada, T. A Curiosity Estimation in Storytelling with Picture Books for Children Using Wearable Sensors. Sensors 2024, 24, 4043. https://doi.org/10.3390/s24134043
