A Deep Learning-Based Emergency Alert Wake-Up Signal Detection Method for the UHD Broadcasting System

Abstract

1. Introduction

1.1. Related Works

1.2. Motivation and Contribution

- Improve the DNN structure by adding four fully connected (FC) layers to learn complex, high-dimensional patterns and features.

- Reconstruct an appropriate training dataset to achieve high detection performance over wireless channels and to prevent overfitting.

- Optimize the received complex signal data used as the input to the DNN structure.

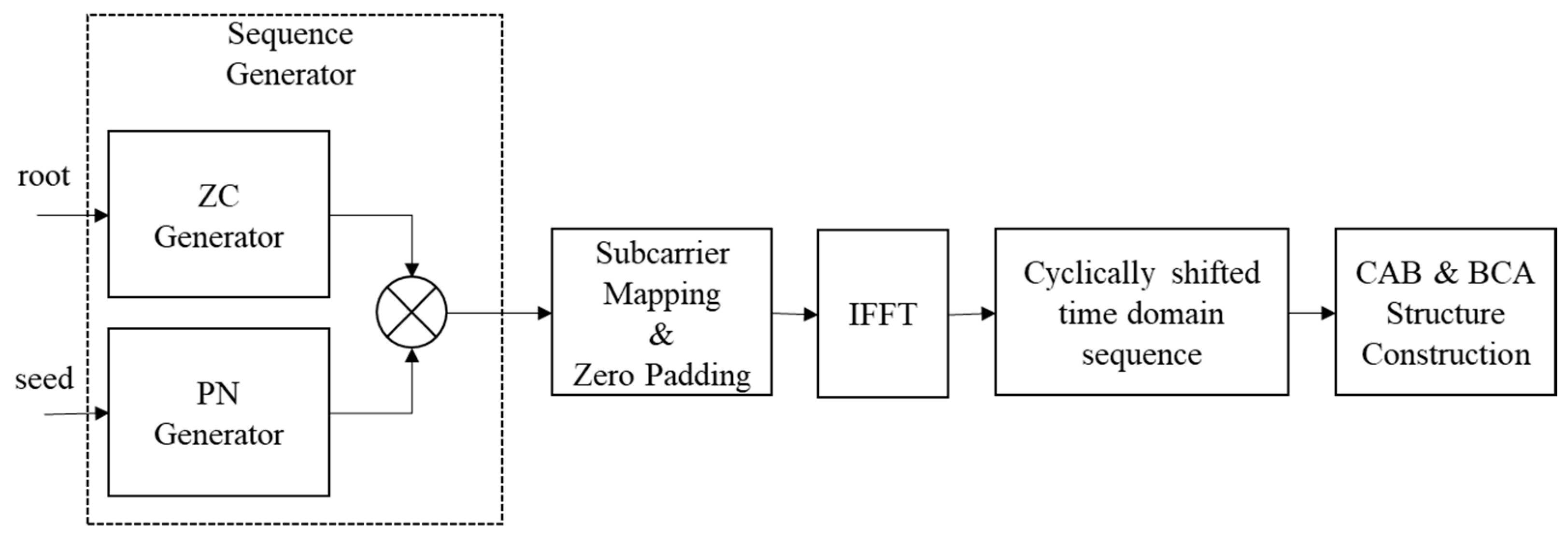

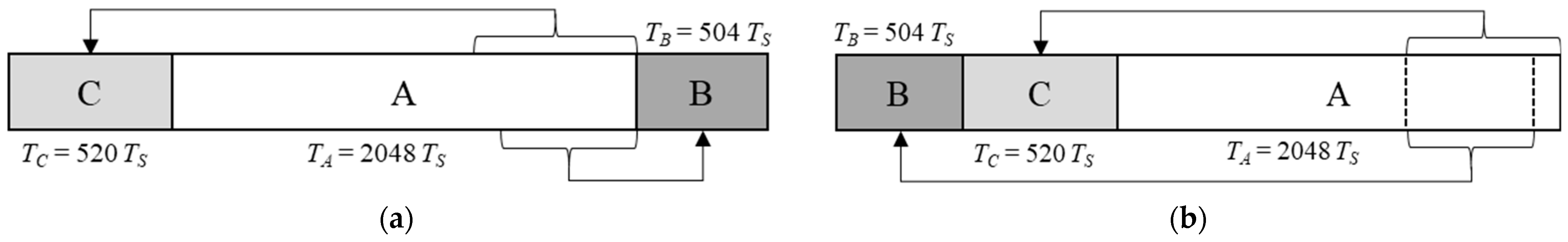

2. Bootstrap Generation and Structure of ATSC 3.0 Standard

The two emergency alert wake-up bits (ea wake-up 1 and ea wake-up 2 in the bootstrap signaling table) are interpreted as follows:

- 00: No active emergency message.

- 01, 10, and 11: Rotating through these values informs the receiver that either there is a new emergency message or new and substantial information has been added to an existing message.
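A receiver only needs to notice when the 2-bit wake-up value changes: a change to 00 means the alert has ended, and a change to any non-zero value means something new. The minimal sketch below tracks one bit pair per frame. The function names are hypothetical, treating ea wake-up 1 as the most significant bit is an assumption, and the receiver is assumed to start in the 00 state.

```python
from typing import Iterable, List, Tuple

NO_ALERT = "no active emergency message"
NEW_INFO = "new emergency message or new information"


def wake_up_value(ea_wake_up_1: int, ea_wake_up_2: int) -> int:
    """Combine the two wake-up bits into 0..3 (ea wake-up 1 as MSB is assumed)."""
    return (ea_wake_up_1 << 1) | ea_wake_up_2


def alert_events(frames: Iterable[Tuple[int, int]]) -> List[Tuple[int, str]]:
    """Return (frame index, meaning) for every frame whose wake-up value changes.

    The receiver is assumed to start in the 00 (no alert) state, so a non-zero
    value in the very first frame is reported as new information.
    """
    events = []
    previous = 0
    for k, (b1, b2) in enumerate(frames):
        value = wake_up_value(b1, b2)
        if value != previous:
            events.append((k, NO_ALERT if value == 0 else NEW_INFO))
        previous = value
    return events
```

For example, the frame sequence 00, 01, 01, 10, 00 produces events at frames 1 and 3 (new information) and at frame 4 (alert cleared); the repeated 01 in frame 2 is not reported again.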

3. Proposed Method

| Algorithm 1. Emergency alert wake-up signal detection method |
|---|
| Input: the received 3072 complex samples |
| Output: the 2 wake-up bits (00, 01, 10, or 11) |
| 1: if bootstrap detection is False: |
| 2: go back to step 1 using the next 3072 received complex samples |
| 3: else: |
| 4: estimate and compensate the symbol time offset |
| 5: acquire the time-synchronized 2nd and 3rd bootstrap symbols |
| 6: demodulate the emergency wake-up bits |
| 7: if any wake-up bit is 1: |
| 8: an emergency or disaster situation has occurred |
| 9: wake up any connected devices |
| 10: go back to step 6 and demodulate the next bootstrap symbols |
| 11: else: |
| 12: go back to step 6 and demodulate the next bootstrap symbols |
| 13: end if |
| 14: end if |
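The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation. `detect` and `demod_bit` are hypothetical stand-ins for the DNN detector and the CNN demodulator. `detect` is assumed to return the sample offset of the first bootstrap symbol inside a 3072-sample block, or `None`. For simplicity, the sketch restarts detection after each bootstrap instead of tracking frame timing as steps 10 and 12 do.

```python
from typing import Callable, List, Optional, Tuple

import numpy as np

BLOCK = 3072  # complex samples per bootstrap symbol (input size of Algorithm 1)

# Hypothetical interfaces standing in for the DNN detector and CNN demodulator.
Detector = Callable[[np.ndarray], Optional[int]]  # offset of BS_0 in block, or None
BitDemodulator = Callable[[np.ndarray], int]      # one wake-up bit per symbol


def monitor_wake_up(samples: np.ndarray, detect: Detector,
                    demod_bit: BitDemodulator) -> List[Tuple[int, Tuple[int, int], bool]]:
    """Return (bootstrap start index, wake-up bits, alert flag) per detected frame."""
    events = []
    pos = 0
    while pos + BLOCK <= len(samples):
        offset = detect(samples[pos:pos + BLOCK])            # step 1
        if offset is None:
            pos += BLOCK                                     # step 2: next block
            continue
        start = pos + offset                                 # step 4: timing compensation
        bs1 = samples[start + BLOCK:start + 2 * BLOCK]       # step 5: 2nd bootstrap symbol
        bs2 = samples[start + 2 * BLOCK:start + 3 * BLOCK]   #         3rd bootstrap symbol
        if len(bs2) < BLOCK:                                 # capture ends mid-bootstrap
            break
        bits = (demod_bit(bs1), demod_bit(bs2))              # step 6
        alert = any(b == 1 for b in bits)                    # step 7; steps 8-9 act on this
        events.append((start, bits, alert))
        pos = start + 4 * BLOCK  # simplification: skip BS_0..BS_3 and search again
    return events
```

Passing trained models as `detect` and `demod_bit` would turn this skeleton into a receiver loop. The skeleton itself performs no signal processing.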

3.1. Bootstrap Detection Method Based on Deep Learning Structure

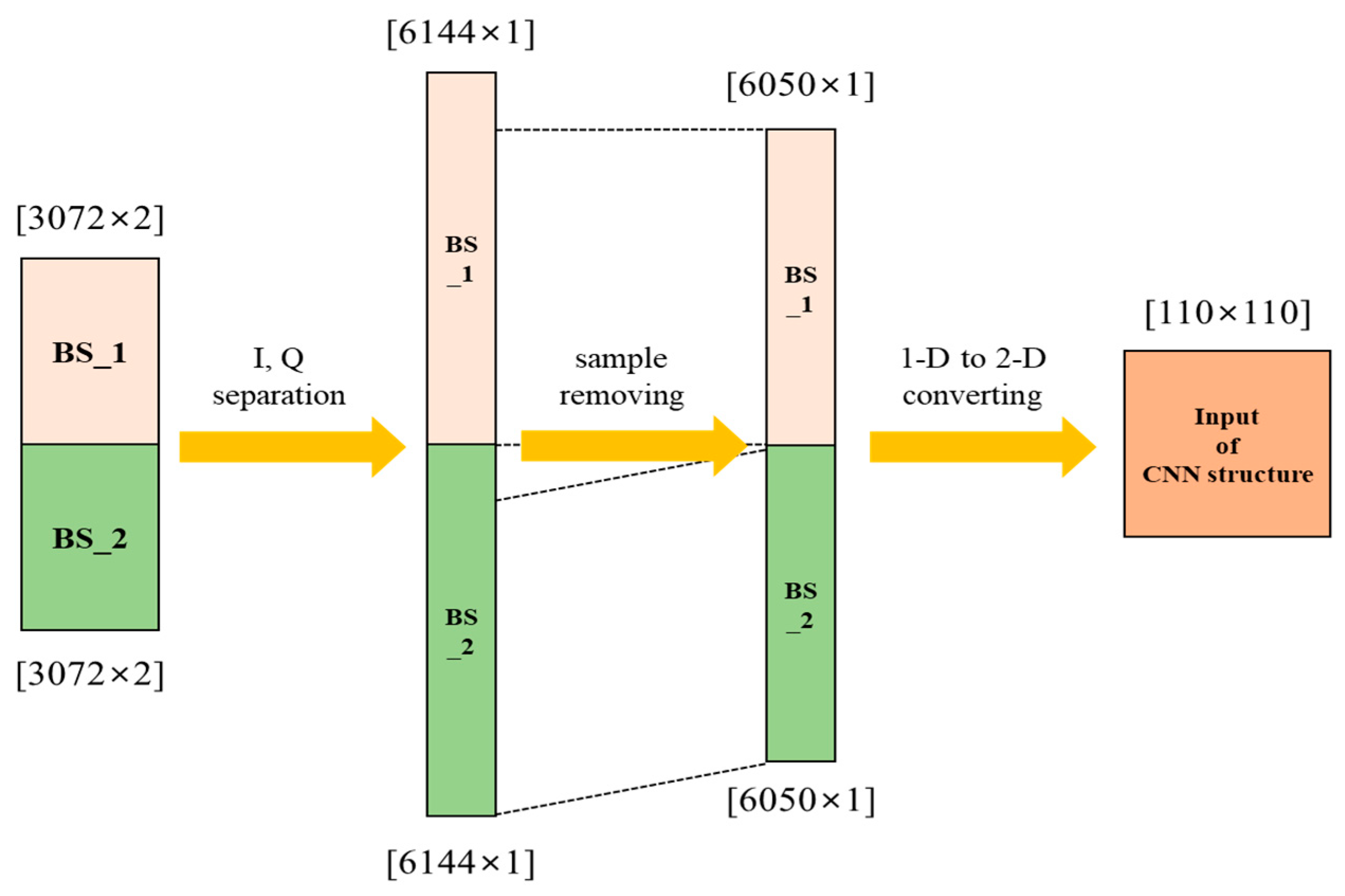

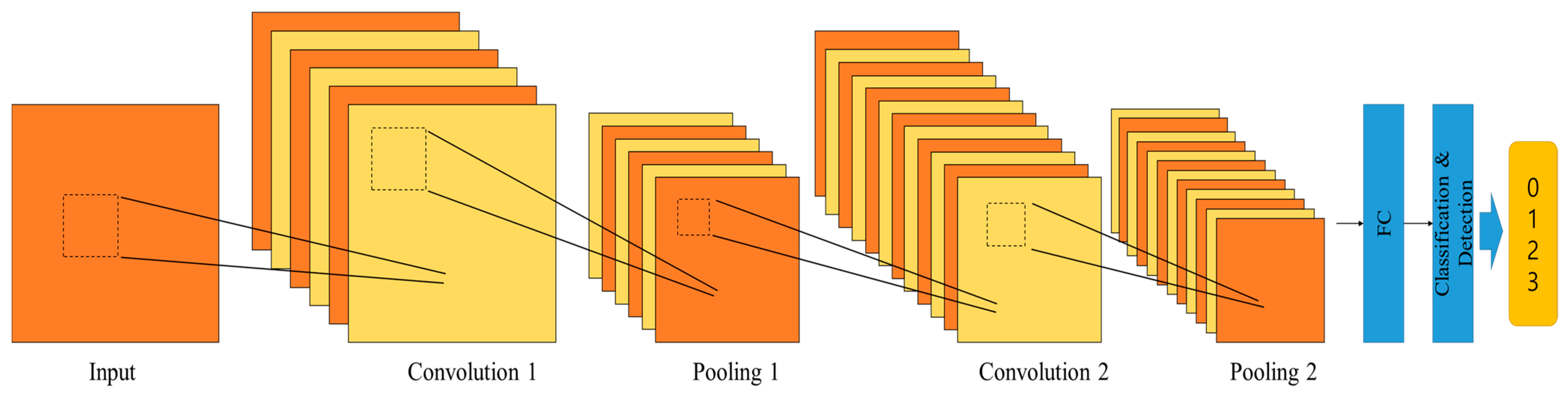

3.2. Emergency Wake-Up Signal Demodulator Based on Deep Learning

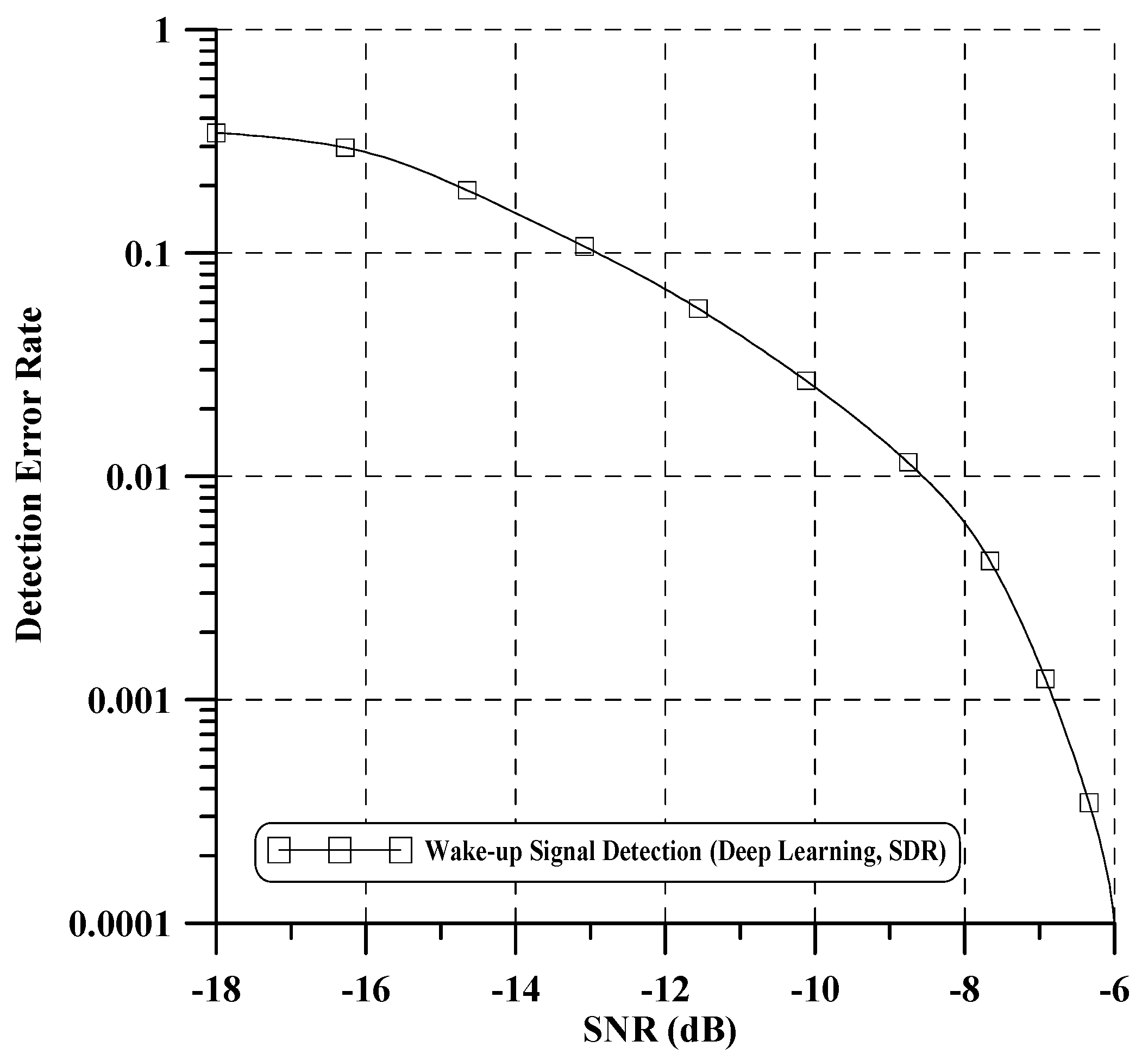

4. Simulation Results and Discussions

- Minimum time interval to the next frame: 100 ms.

- System bandwidth: 6 MHz.

- Post-bootstrap sample rate: 6.912 MHz.

- Bootstrap signal detection: SNR = [−19, −16, −13 dB].

- Wake-up signal detection: SNR = [−22, −19, −16 dB].
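The 6.912 MHz post-bootstrap sample rate is consistent with the 7-bit bsr coefficient field in the bootstrap signaling table. In ATSC A/321, the post-bootstrap baseband sampling rate is (16 + N) × 0.384 MHz, where N is the bsr coefficient. N = 2 therefore gives 6.912 MHz, and the bootstrap's own 6.144 Msamples/s corresponds to N = 0. The helper names below are hypothetical; this is a sketch of that formula only.

```python
import math

BASE_RATE_HZ = 0.384e6  # rate step of the (16 + N) x 0.384 MHz formula


def baseband_sample_rate_hz(bsr_coefficient: int) -> float:
    """Post-bootstrap sampling rate (16 + N) x 0.384 MHz with N = bsr_coefficient."""
    if not 0 <= bsr_coefficient <= 127:  # the bsr coefficient is a 7-bit field
        raise ValueError("bsr_coefficient must fit in 7 bits")
    return (16 + bsr_coefficient) * BASE_RATE_HZ


def bsr_coefficient_for(rate_hz: float) -> int:
    """Inverse mapping; the rate must be (16 + N) x 0.384 MHz for a 7-bit N."""
    n = round(rate_hz / BASE_RATE_HZ) - 16
    if not 0 <= n <= 127 or not math.isclose((16 + n) * BASE_RATE_HZ, rate_hz):
        raise ValueError("rate is not representable")
    return n
```

`bsr_coefficient_for(6.912e6)` recovers N = 2, the value a 6 MHz system such as the simulated one would signal under this formula.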

- It effectively models intricate nonlinear relationships, including channel characteristics, interference, noise, and other factors.

- It learns the interactions between system components, leading to more efficient optimization and improved overall system performance.

- Center frequency: 768 MHz.

- Channel bandwidth: 6 MHz.

- ADC sampling rate: 1.536 MHz.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Bootstrap Symbol | Syntax | No. of Bits | Format |
|---|---|---|---|
| Bootstrap Symbol 0 (BS_0) | - | - | - |
| Bootstrap Symbol 1 (BS_1) | ea wake-up 1 | 1 | uimsbf |
| | min time to next | 5 | uimsbf |
| | system bandwidth | 2 | uimsbf |
| Bootstrap Symbol 2 (BS_2) | ea wake-up 2 | 1 | uimsbf |
| | bsr coefficient | 7 | uimsbf |
| Bootstrap Symbol 3 (BS_3) | preamble structure | 8 | uimsbf |
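The field widths in this table add up to 24 signaling bits, 8 in each of bootstrap symbols 1 to 3. uimsbf denotes an unsigned integer sent most significant bit first. The hypothetical parser below splits an already-demodulated 24-bit sequence into those fields. It does not perform the demodulation that recovers the bits from the waveform, and the snake_case identifiers are simply the table's field names.

```python
from typing import Dict, List, Sequence, Tuple

# (bootstrap symbol, field, width in bits) in transmission order, from the table
SIGNALING_FIELDS: List[Tuple[int, str, int]] = [
    (1, "ea_wake_up_1", 1),
    (1, "min_time_to_next", 5),
    (1, "system_bandwidth", 2),
    (2, "ea_wake_up_2", 1),
    (2, "bsr_coefficient", 7),
    (3, "preamble_structure", 8),
]
TOTAL_BITS = sum(width for _, _, width in SIGNALING_FIELDS)  # 24


def parse_bootstrap_signaling(bits: Sequence[int]) -> Dict[str, int]:
    """Split the demodulated signaling bits into uimsbf fields (MSB first)."""
    if len(bits) != TOTAL_BITS:
        raise ValueError(f"expected {TOTAL_BITS} bits, got {len(bits)}")
    fields: Dict[str, int] = {}
    pos = 0
    for _, name, width in SIGNALING_FIELDS:
        value = 0
        for bit in bits[pos:pos + width]:
            value = (value << 1) | bit  # uimsbf: most significant bit first
        fields[name] = value
        pos += width
    return fields
```

A receiver that only needs the alert state reads 2 of these 24 bits, `ea_wake_up_1` and `ea_wake_up_2`.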

| Parameters | Value |
|---|---|
| Sampling rate | 6.144 Msamples/s |
| Bandwidth (BW) | 4.5 MHz |
| FFT size | 2048 |
| Subcarrier spacing | 3 kHz |
| OFDM symbol duration | 500 μs |
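The bootstrap parameters fit together arithmetically. The subcarrier spacing is the sampling rate divided by the FFT size, and one 500 μs symbol spans 3072 samples. This matches the 3072-sample input of Algorithm 1. With real and imaginary parts stacked, which is an assumption here, it also matches the 6144-wide DNN input layer. A quick check:

```python
FS_BOOTSTRAP = 6.144e6  # bootstrap sampling rate, samples/s
N_FFT = 2048            # FFT size
T_SYMBOL = 500e-6       # bootstrap symbol duration, s

subcarrier_spacing_hz = FS_BOOTSTRAP / N_FFT         # 3 kHz
samples_per_symbol = round(FS_BOOTSTRAP * T_SYMBOL)  # samples in one bootstrap symbol
samples_beyond_fft = samples_per_symbol - N_FFT      # samples outside the FFT window
dnn_input_width = 2 * samples_per_symbol             # real + imaginary parts (assumed)
bootstrap_duration_ms = 4 * T_SYMBOL * 1e3           # four bootstrap symbols
```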

| Layer | Size | Activation |
|---|---|---|
| Input layer | 6144 | - |
| FC layer 1 | 6144 | ReLU |
| FC layer 2 | 3072 | ReLU |
| FC layer 3 | 1536 | ReLU |
| FC layer 4 | 1002 | None |
| Detection | 1002 (decision process as in Equation (4)) | Softmax |
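A NumPy sketch of the forward pass implied by the DNN table follows: three ReLU layers of 6144, 3072, and 1536 units, a linear 1002-unit layer, and a softmax. It rests on three assumptions. The 3072 complex samples enter as 6144 real values (real parts, then imaginary parts). Every FC layer has a bias. The weights are He-initialised placeholders because the trained weights are not available. The decision rule of Equation (4) is not reproduced, so the sketch stops at the class probabilities.

```python
import math
from typing import List, Sequence, Tuple

import numpy as np

# Widths from the DNN table: input, FC1, FC2, FC3, FC4 (1002 classes)
DNN_SIZES = (6144, 6144, 3072, 1536, 1002)

Params = List[Tuple[np.ndarray, np.ndarray]]


def complex_to_input(x: np.ndarray) -> np.ndarray:
    """Assumed input mapping: N complex samples -> 2N reals [Re..., Im...]."""
    return np.concatenate([x.real, x.imag]).astype(np.float32)


def init_params(sizes: Sequence[int], rng: np.random.Generator) -> Params:
    """He-initialised weights and zero biases (placeholders for trained values)."""
    return [(rng.standard_normal((m, n), dtype=np.float32) * math.sqrt(2.0 / m),
             np.zeros(n, dtype=np.float32))
            for m, n in zip(sizes[:-1], sizes[1:])]


def count_params(sizes: Sequence[int]) -> int:
    """Weights plus biases of the fully connected stack."""
    return sum(m * n + n for m, n in zip(sizes[:-1], sizes[1:]))


def forward(params: Params, v: np.ndarray) -> np.ndarray:
    """FC1-FC3 with ReLU, linear FC4, then a softmax over the classes."""
    h = v
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on all but the last layer
    z = h - h.max()                 # shift for a numerically stable softmax
    e = np.exp(z)
    return e / e.sum()
```

`count_params(DNN_SIZES)` gives 62,892,522 weights and biases, roughly 250 MB in float32. Smaller widths such as (8, 8, 4, 2, 3) are handy for checking the wiring before allocating the full network.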

| Layer | Size | Filter Size | Activation |
|---|---|---|---|
| Input layer | 110 × 110 | - | - |
| Conv. 1 | 32@110 × 110 | 11 × 11 | ReLU |
| Pool. 1 | 32@55 × 55 | 2 × 2 | Max |
| Conv. 2 | 64@55 × 55 | 11 × 11 | ReLU |
| Pool. 2 | 64@28 × 28 | 2 × 2 | Max |
| FC layer | 50,176 | - | None |
| Detection | 4 | - | Softmax |
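The feature-map sizes in the CNN table can be checked with a little shape arithmetic. The sketch assumes stride-1 convolutions with 'same' padding and 2 × 2, stride-2 pooling that rounds odd sizes up, which is what the 55 → 28 step implies. It also assumes that the four softmax outputs correspond to the wake-up bit pairs 00, 01, 10, and 11 listed as the output of Algorithm 1. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def conv_same(shape: Shape, out_channels: int) -> Shape:
    """Stride-1 convolution with 'same' padding: spatial size is unchanged."""
    _, h, w = shape
    return (out_channels, h, w)


def max_pool(shape: Shape, k: int = 2) -> Shape:
    """k x k max pooling with stride k, rounding odd sizes up."""
    c, h, w = shape
    return (c, math.ceil(h / k), math.ceil(w / k))


def cnn_shapes(side: int = 110) -> List[Tuple[str, Shape]]:
    """Trace the feature-map shapes through the layers in the CNN table."""
    s: Shape = (1, side, side)  # a single input channel is an assumption
    trace = [("input", s)]
    for name, step in [("conv1 11x11", lambda t: conv_same(t, 32)),
                       ("pool1 2x2", max_pool),
                       ("conv2 11x11", lambda t: conv_same(t, 64)),
                       ("pool2 2x2", max_pool)]:
        s = step(s)
        trace.append((name, s))
    return trace


def class_to_wake_up_bits(k: int) -> str:
    """Assumed mapping of the 4 softmax classes to wake-up bit pairs."""
    return format(k, "02b")
```

Flattening the final 64 × 28 × 28 map gives the 50,176 inputs of the FC layer, so the table is internally consistent under these padding assumptions.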

| i | ρ_i | τ_i (μs) | θ_i (rad) |
|---|---|---|---|
| 1 | 0.95346 | 0 | 0 |
| 2 | 0.01618 | 1.003019 | 4.855121 |
| 3 | 0.04963 | 5.442091 | 3.419109 |
| 4 | 0.11430 | 0.518650 | 5.864470 |
| 5 | 0.08522 | 2.751772 | 2.215894 |
| 6 | 0.07264 | 0.602895 | 3.758058 |
| 7 | 0.01735 | 1.016585 | 5.430202 |
| 8 | 0.04220 | 0.143556 | 3.952093 |
| 9 | 0.01446 | 0.153832 | 1.093586 |
| 10 | 0.05195 | 3.324866 | 5.775198 |
| 11 | 0.11265 | 1.935570 | 0.154459 |
| 12 | 0.08301 | 0.429948 | 5.928282 |
| 13 | 0.09848 | 3.228872 | 3.053023 |
| 14 | 0.07380 | 0.848831 | 0.628578 |
| 15 | 0.06341 | 0.073883 | 2.128544 |
| 16 | 0.04800 | 0.203952 | 1.099463 |
| 17 | 0.04203 | 0.194450 | 3.462951 |
| 18 | 0.06741 | 0.924450 | 3.664773 |
| 19 | 0.03272 | 1.381320 | 2.833799 |
| 20 | 0.06208 | 0.640512 | 3.334290 |
| 21 | 0.07291 | 1.368671 | 0.393889 |
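The values in this table appear to follow the 20-path echo profile of the DVB-T specification, with the columns read as gain ρ_i, delay τ_i in μs, and phase θ_i in rad, plus a line-of-sight path in row 1. That reading is an assumption. With it, the listed gains give a total power of about 1 and a Rice factor K = ρ_1² / Σ_{i≥2} ρ_i² of about 10. The minimal sketch below turns the table into a discrete tapped-delay-line channel at 6.144 Msamples/s. Rounding each delay to the nearest sample and the e^(−jθ) phase convention are simplifying assumptions, not necessarily what the authors simulated.

```python
import numpy as np

# Columns: gain rho_i, delay tau_i (us), phase theta_i (rad); row i = 1 is the LOS path.
PATHS = np.array([
    [0.95346, 0.0, 0.0],
    [0.01618, 1.003019, 4.855121],
    [0.04963, 5.442091, 3.419109],
    [0.11430, 0.518650, 5.864470],
    [0.08522, 2.751772, 2.215894],
    [0.07264, 0.602895, 3.758058],
    [0.01735, 1.016585, 5.430202],
    [0.04220, 0.143556, 3.952093],
    [0.01446, 0.153832, 1.093586],
    [0.05195, 3.324866, 5.775198],
    [0.11265, 1.935570, 0.154459],
    [0.08301, 0.429948, 5.928282],
    [0.09848, 3.228872, 3.053023],
    [0.07380, 0.848831, 0.628578],
    [0.06341, 0.073883, 2.128544],
    [0.04800, 0.203952, 1.099463],
    [0.04203, 0.194450, 3.462951],
    [0.06741, 0.924450, 3.664773],
    [0.03272, 1.381320, 2.833799],
    [0.06208, 0.640512, 3.334290],
    [0.07291, 1.368671, 0.393889],
])


def tapped_delay_line(paths: np.ndarray, fs_hz: float) -> np.ndarray:
    """Discrete taps h[n]; each delay is rounded to the nearest sample (simplification)."""
    delays = np.rint(paths[:, 1] * 1e-6 * fs_hz).astype(int)
    h = np.zeros(delays.max() + 1, dtype=complex)
    for rho, d, theta in zip(paths[:, 0], delays, paths[:, 2]):
        h[d] += rho * np.exp(-1j * theta)  # phase sign convention is an assumption
    return h


def apply_channel(x: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Linear convolution truncated to the input length."""
    return np.convolve(x, h)[: len(x)]


def rice_k_factor(paths: np.ndarray) -> float:
    """Power of the LOS row divided by the total power of the scattered rows."""
    p = paths[:, 0] ** 2
    return float(p[0] / p[1:].sum())
```

At 6.144 Msamples/s the longest delay (5.442091 μs) rounds to 33 samples, so the channel has 34 taps. Several short echoes collapse onto taps 0 and 1, which a fractional-delay filter would resolve more faithfully.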

| Item | | Conventional Method | Proposed Method |
|---|---|---|---|
| Bootstrap Synchronization | input unit | a single sample | a block (= 3072 samples) |
| | range | 4 bootstrap symbols | 1st bootstrap symbol |
| | scheme | correlation | DNN |
| Channel compensation | | O (applied) | X (not applied) |
| Bootstrap information demodulation | demodulation range | 24 bits | 2 bits (wake-up bits only) |
| | scheme | ML decision of the absolute cyclic shift | CNN |
| | domain | frequency | time |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, J.-H.; Baek, M.-S.; Bae, B.; Song, H.-K. A Deep Learning-Based Emergency Alert Wake-Up Signal Detection Method for the UHD Broadcasting System. Sensors 2024, 24, 4162. https://doi.org/10.3390/s24134162