1. Introduction

The problem of simultaneous localization and classification of objects in images is referred to as object detection. Localization means the capacity to draw a bounding box around each object of interest in the image, whereas classification means assigning to each bounding box a class label (for the localized object) [

1]. As reported in [

2], there are factors that complicate these two tasks, like large variations in viewpoints, poses, occlusions, and lighting conditions.

Despite these difficulties, there have already been remarkable achievements in object detection [

3]. An example is the Viola–Jones (VJ) detector, which was the first algorithm that obtained high accuracy in the face detection problem in real time [

4,

5]. Due to advances in neural network architectures, the computational power of modern computers, and access to huge databases of images, detectors based on Convolutional Neural Networks (CNNs) have been successfully applied in the detection of different types of objects [

6,

7,

8].

On the other hand, if databases used in the training of neural networks are not large enough, then the performance of CNN based detectors may be poor, which is a well-known problem of model over-fitting that commonly occurs as a consequence of limitations in manually acquiring and labeling large amounts of images. Indeed, very large deep neural models have many degrees of freedom (free parameters), which demands an equally large amount of labeled examples to mitigate the risks of over-fitting [

9].

Among the proposed approaches to deal with over-fitting are the learning transfer [

10] and pre-training [

11] techniques, which use as initial neural network parameters those of another network that has been trained on another dataset. An alternative approach is data augmentation which, unlike the previous ones, deals with the problem of over-fitting at its root, the scarcity of data in the training base [

6].

In the taxonomy presented in [

6], the image data augmentation approach is divided into two branches. The first branch includes techniques that create new images from basic manipulations of existing ones, like color space and geometric transformations. A problem with the approaches included in this branch is that they need initial data, which may not be available in some cases. The other branch includes techniques based on deep learning, such as Generative Adversarial Networks, in which a generative neural network is designed to create new images in such a way that a discriminating neural network is unable to differentiate between created and real images [

12,

13]. Another approach that also deals with the over-fitting problem, but that does not depend on the previous existence of data is image synthesis [

14]. In this approach, images are generated from scratch by computer graphics algorithms in a process known as rendering. This process uses descriptive models that involve the geometry and sometimes textures of the target objects such as technical drawings, CAD models, and mathematical equations.

The use of synthesized images is an attractive approach in object detection problems where the target object has a rigid body (which is thus easily synthesized because the distance between any two points of it is constant), but real images of them are scarce, as is the case with thermal images of electrical power equipment. Additionally, during their synthesis, the images can be automatically labeled.

Indeed, visual inspection of electrical equipment in power plants can prevent economic losses caused by power outages. These inspections are usually performed through infrared images, in which temperature distributions can be measured and used to detect early insulation failure, overloading, and inefficient operation [

15,

16,

17,

18,

19]. However, other kinds of images can also be used, including images taken in the visible spectrum. In all cases, any such inspection automation must rely on the explicit or implicit equipment localization in the image, along with its classification [

20], which makes it a well-suited application for the data augmentation approach studied in this work.

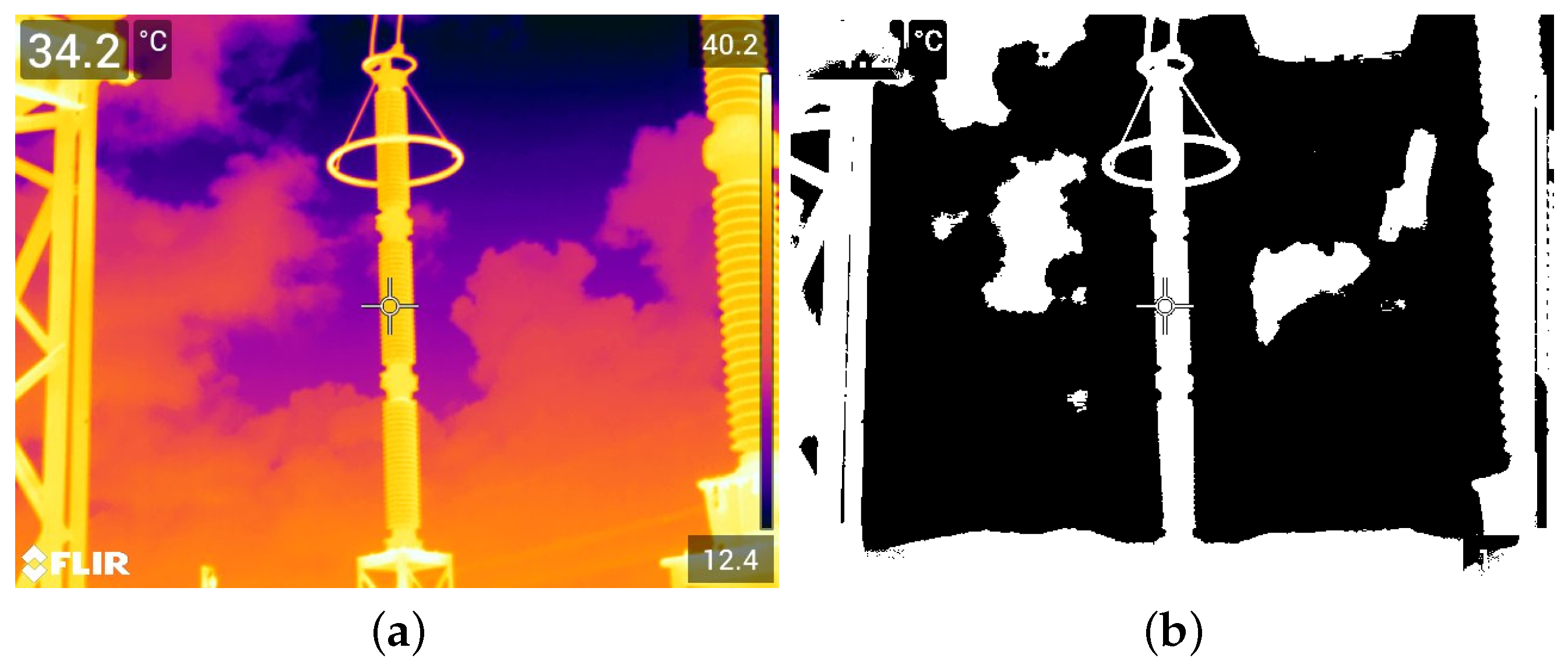

The primary focus of this paper is to present a case study conducted under the hypothetic restriction that images from the target objects are not available at all, as in the early stage of an industrial plant design, where only detailed geometric information about the object is available. The study focuses on the performance assessment of two well-established machine learning approaches belonging to different paradigms when all positive training examples are synthetic images. Additionally, training datasets also included non-synthetic instances of non-target images, all preprocessed and converted to black and white (binarized). The binarization process of these images discards most information not related to the geometry of the targeted rigid objects in images, and allows the use of the same detector for both visible and infrared spectra, as studied in this work. Another suitable consequence of systematic image binarization is its simplification of the synthetic image rendering process. In this case, only the geometric shapes of the devices are needed, eliminating the necessity for precise colors and textures, which are considered to be less relevant information for detecting well-defined rigid bodies in images.

The study focuses on two distinct classes of power electrical equipment images: lightning rods and potential transformers, which were arbitrarily selected for examination. The chosen learning machines for this investigation are the Viola–Jones and YOLO detectors. The Viola–Jones detector was selected due to its expandability and efficiency. The YOLO detector was also selected as a prominent representative of the last trend in the connectionist paradigm, deep learning, which combines high accuracy with runtime speed [

21]. Subsequently, both detectors were tested using non-synthetic images from the GImpSI database (Gestão dos Impactos da Salinidade em Isolamentos), encompassing both the visible and infrared spectra. In addition to lightning rods and potential transformers, the GImpSI database includes images containing other electrical equipment such as transformers, current transformers, circuit breakers, disconnect switches, and pedestal insulators.

Although we assume that no images of the target object are initially available, we also assume that the user can specify the angles at which future images will be acquired. In our case, the real images from the GImpsi dataset serve as a simulation of the future data that the detector will use. Therefore, we generated synthetic images specifically for the angles that are anticipated to be used, ensuring that our model is trained and evaluated under realistic conditions.

The aim of this study is to obtain a better understanding of the effects of exclusively using synthetic images as positive examples for training, thus addressing situations where no real images of the target objects are accessible during the training phase.

This paper is organized as follows: in

Section 2, a brief literature review is conducted on the use of synthetic images in training datasets; in

Section 3, the computer graphics approach used to synthesize images of power electrical devices is described;

Section 4 contains the description of both the VJ and YOLO detectors; in

Section 5, the databases used to train and test the detectors are presented; the experiments conducted using the synthesized images and the detectors are displayed and discussed in

Section 6; in

Section 7, the conclusions of the work are presented.

2. Related Work

The use of synthetic data in pattern recognition tasks such as object detection and segmentation has emerged as a solution to three problems: the scarcity of data, the storage of large amounts of data, and their laborious manual labeling. The scarcity is solved because computer graphics techniques can generate the desired amount of images. In addition, these techniques also solve the labeling problem, since when rendering each object, its class and location are already known. Storage can also be tackled because synthetic images can be rendered and immediately used for training, after which they can be discarded, freeing up memory space [

14]. Because of these benefits, synthetic data is used for many types of objects. For example, in [

22], several approaches based on CNNs were trained only with synthetic data to detect vehicles, pedestrians, and animals. The validation results with synthetic data were similar to those obtained with real data.

Another context in which synthetic images are used is the segmentation of table objects, to help robotic systems to grab them. In [

23], experiments were performed with a CNN that was trained with synthetic and real data to segment table objects with results that indicated that performance is positively correlated with the number of synthetic images.

Performance improvement was also noticed in the experiments carried out in [

24], which also indicated that supplementing a database with synthetic images is better than other data augmentation approaches. The task in which these experiments were performed was the detection of flaws in wafer mapping images, to identify irregularities in semiconductor manufacturing processes.

Synthetic images are also useful in infrequently occurring contexts. For example, in [

25], a VJ detector was trained in the task of detecting lifeboats using fully synthetic data, which were generated through graphical simulations of the 3D model of a lifeboat and sea waves. To validate the detector performance, images taken from the rescue operation of the Russian fishing trawler “Dalniy Vostok” in 2015 were used, with recall and precision rates of 89% and 75%, respectively.

In the healthcare domain, synthetic data has been explored in many works as a solution to challenges involving privacy risks and regulations that restrict researchers’ access to patient data [

26]. A comprehensive review of the use of synthetic medical images is provided in [

27]. This review covers important applications, including magnetic resonance imaging and computerized tomography.

Synthetic images have been explored in various other applications, such as insect detection [

28], spacecraft fly-by scenarios [

29], hand localization in industrial settings [

30], and industrial object detection with pose estimation [

31].

It should be further highlighted that an interesting source of synthetic images are electronic games, since their developers have been striving to make virtual scenarios increasingly realistic. However, famous games like Grand Theft Auto (GTA) do not usually support labeling automation. As a solution, researchers have been developing their own virtual worlds through the Unreal Engine (UE4) platform, since there are extensions to it that automate data generation and its labeling, as through the open source project UnrealCV [

32].

In this work, however, synthetic images are created from scratch, by means of straightforward programming codes corresponding to what is described in

Section 3. This approach was preferred because synthetic images used here are simple black-and-white renderings of targeted devices projection, in a limited range of poses, and the from-scratch approach allowed more control of this rendering process.

7. Conclusions

The main contribution of this work is a case study where two learning machines from different paradigms are assessed when synthetic images are used for training, thus simulating a scenario where an industrial plant is designed but not yet built, and visual targets are rigid bodies whose designs are precisely known. Two classes of power electrical equipment images were arbitrarily chosen for this study. The learning machines selected were the Viola–Jones and the YOLO detectors. The Viola–Jones is a relevant instance of explainable and efficiency-tuned methods, whereas the YOLO is a popular machine learning approach in the context of the so-called deep-learning paradigm. Both machine learning models were trained using synthetic images of the mentioned two types of equipment as targets for detection. Additionally, non-synthetic instances of images not related to the targets were included in the training dataset, all of which had previously undergone binarization, meaning that they were converted into black and white. For each of the targeted equipment types, namely lightning rods and potential transformers, both detectors underwent tests using binarized versions of non-synthetic images obtained from the GImpSI database, encompassing images from both the visible and infrared spectra. It is worth highlighting that the binarization process facilitates the use of the same detector for both spectra and also simplifies the rendering process, as only the geometrical descriptions of the devices were required.

For all the spectra and devices tested, the measure for YOLO was smaller than 26%, while the measure for the Viola–Jones detector ranged approximately from 38% to 61%. YOLO may have performed poorly compared to the VJ detector because it was pre-trained on color images and may not have been able to fully learn to generalize detection in black-and-white images, even after being retrained on a dataset of black-and-white images.

By contrast, the performance of the VJ detector indicates that a detector trained with synthetic images of rigid equipment can achieve useful results. Furthermore, it was observed that some images not detected by the VJ detector presented strong distortion caused by sunlight. Thus, the performance of the detector can be improved if a better binarization method is used, in terms of robustness to sunlight/shadow effects.

In addition to the distortion caused by sunlight, complex and cluttered backgrounds also affected the performance of the VJ detector. This was illustrated through images where the VJ detector only succeeded after a portion of the background was manually removed. The difficulty of finding this type of equipment was already expected because, during the synthesis stage, objects were free from structured background noise. Indeed, the only noise simulated during training was a logical inversion in 10% of the pixels of half of the synthetic images used in the training.

Thus, one should expect further improvement in equipment detection through the synthesis of images with more representative background noise, including non-targeted types of equipment and/or objects and textures typically found around that kind of industrial environment. However, even results obtained so far seem to corroborate the belief that rigid bodies, especially those whose precise descriptions are easily available (such as industrially manufactured equipment), are indeed strong candidates for this kind of approach, where machine learning methods can be adjusted even without any real images of the equipment.

Although this paper does not focus on enhancing detector performance in a general sense, it does provide valuable insights into the utilization of prior knowledge concerning rigid bodies for fine-tuning operational detectors in the absence of genuine target images.

In terms of future research, our objectives include experimenting with the synthesis of equipment images that incorporate additional objects in the background and implementing thresholding techniques that demonstrate increased resilience to issues related to sunlight and shadow effects.