Abstract

Recently, posture recognition technology has advanced rapidly. Herein, we present a novel posture angle calculation system utilizing a single inertial measurement unit and a spatial geometric equation to accurately identify the three-dimensional (3D) motion angles and postures of both the upper and lower limbs of the human body. This wearable system facilitates continuous monitoring of body movements without the spatial limitations or occlusion issues associated with camera-based methods. This posture-recognition system has many benefits. Providing precise posture change information helps users assess the accuracy of their movements, prevent sports injuries, and enhance sports performance. This system employs a single inertial sensor, coupled with a filtering mechanism, to calculate the sensor’s trajectory and coordinates in 3D space. Subsequently, the spatial geometry equation devised herein accurately computed the joint angles for changing body postures. To validate its effectiveness, the joint angles estimated from the proposed system were compared with those from dual inertial sensors and image recognition technology. The joint angle discrepancies for this system were within 10° and 5° when compared with dual inertial sensors and image recognition technology, respectively. Such reliability and accuracy of the proposed angle estimation system make it a valuable reference for assessing joint angles.

1. Introduction

Human posture-recognition systems have attracted significant attention and advancements in recent years. This technology is important in healthcare, sports, gaming, and security [1,2,3,4]. These systems use devices that can track movement patterns, measure changes in posture and joint angles, and analyze data in real time to meet exercise and recovery needs. Human posture recognition has a wide range of uses, including capturing human body movements in movies, monitoring home care, training athletes, and assisting in post-accident recuperation [5,6,7,8]. In order to accurately monitor and examine human posture, it is crucial to possess a comprehensive knowledge of human anatomical terminology and measurements. The nomenclature of the human body consists of standardized names used to describe its many parts and structures. The worldwide accepted system for this purpose is called Terminologia Anatomica (TA). Furthermore, anthropometry encompasses the quantification of physical attributes such as stature, mass, and bodily girths (e.g., waist, chest), which are essential for evaluating body ratios and well-being. Precise data gathering is enhanced by the use of instruments such as scales, rulers, and imaging techniques such as MRI and CT scans [9,10,11].

Motion capture technology, often known as motion capture, is a technique used to monitor and record human movement activities. Originally used by McDonald to improve animation effects by recording performers’ motions, modern motion capture technologies mostly depend on optical tracking techniques. Vivid markers (or dots) on the actor’s body bounce light back to the cameras and capture their locations [12]. Picture data from several cameras are then analyzed to determine the differences in the markers on the X, Y, and Z axes. Kinect is a popular choice among the several types of cameras used to detect human motion. Image recognition is one of the most common applications in this field. This study discusses the extensive usage of Kinect in several areas, including research, medical applications, entertainment, and human–computer interaction [13,14,15,16]. It evaluated several methods for recognizing gestures in Kinect to determine the most effective method in terms of precision and speed [17].

With advances in electrical technology, inertial measurement units (IMU) have become smaller and more stable. This development enhances the overall efficiency and adaptability of human posture-recognition technology [18,19,20,21,22,23]. In addition to utilizing Kinect cameras to analyze human posture, the use of IMUs has seen significant advancements in research conducted at universities [24,25,26]. Bellitti et al. [27] used an inertial measurement unit combined with a stretch sensor to design a low-cost knee-joint ligament relaxation system. In this system, two inertial sensors were placed on the upper and lower ends of the knee and connected using a stretch sensor. A gyroscope, accelerometer, and magnetometer were combined to obtain the attitude information of the knee ligament. Kim et al. [28] used the OpiTrack sportswear device of the Xsens IMU to record the posture information of the human body with six inertial sensors at 30 Hz and estimated the joint position and rotation angle through the bidirectional recurrent neural network of ConvLSTM. A large IMU database is required for training. The experimental results showed that each joint position had an error of approximately 50 mm, and the joint angle had an average error of 11.31°. McGrath et al. [29] used a wearable IMU to estimate the angles of human hip and knee joints while walking on a treadmill. The experimental results showed that the internal and external rotation angles of the hip joint were quite different, and it was found that the movement of the human soft tissue affects the accuracy of the sensor. Slyper et al. [30] placed five inertial measurement units on clothes and used the acceleration value read to match the acceleration value in the dynamic capture database to find the most suitable action and project it on the screen. However, they cannot provide a real-time representation of the tester’s posture. Huang et al. [31] designed a rehabilitation effectiveness monitoring system using a six-axis inertial sensor combined with a sensor-fusion algorithm, which was primarily used to monitor the rehabilitation actions of patients with Parkinson’s disease. Ten inertial sensors were placed on the patient’s body, specifically on the upper extremities and forearms, femur and crus, thorax, and abdomen. The patient then participated in the Lee Silverman Voice Treatment Big (LSVT BIG) rehabilitation course. Data on the body postures of various parts during the rehabilitation course were collected and applied to 3D character objects on the Unity 3D game platform. The different postures and movements of the patient’s body were then simulated, and the characteristic angles of the limbs were calculated through the posture inclination angles. These characteristic angles allowed for viewing the patient’s movements during the rehabilitation course and continuous tracking of the rehabilitation progress, effectively providing physicians with the ability to judge the patient’s rehabilitation effect. However, the disadvantage is that ten inertial sensors must be placed on the patient, which causes discomfort and incurs high costs.

Utilizing the Kinect camera and IMU together aided in validating the kinematics. Banos et al. [32] investigated the transferability of activity detection in an Internet of Things (IoT) sensor environment using IMU and Kinect sensors. This study demonstrated the effective translation of data from IMUs recording three-dimensional acceleration on various body segments to Kinect sensors recording three-dimensional locations, resulting in high levels of precision. Tian et al. [33] proposed the use of an inertial sensor with an unscented Kalman filter (UKF) for sensor fusion to increase signal stability and avoid signal loss. In the experiment, the IMU and Kinect were used to capture the attitude information. Combining an inertial sensor with a physical geometry constraint method can yield a better tracking effect and provide stable position information. One disadvantage is the error caused by the use of additional inertial sensors.

In the literature discussed above, to estimate the joint angles of the body and limbs, more than two IMUs were required to collect attitude signals, increasing the cost of use and reducing user comfort. Therefore, the goal of this study is to use a minimum number of IMUs to estimate the joint angles of the body and limbs. Shiao et al. [34] used an IMU to track the position of the object. Assuming that the arm is projected on the XY plane, a set of mathematics is established using the relevant geometry model; pixels are used as units to simulate joint positions, and then camera image recognition technology and a spatial pyramid matching algorithm are used for verification. However, that research is only for 2D motion recognition, so this research will establish 3D motion identification technology. The objective of this study is to introduce an innovative system capable of identifying three-dimensional motion angles and postures of both the upper and lower human limbs using a single inertial measurement unit and a spatial geometric equation. The primary advantage of this wearable system is its ability to continuously monitor body movements without the typical constraints associated with camera-based methods, such as spatial limitations or occlusion.

2. The Material for Estimation by IMU

2.1. Extended Kalman Filter

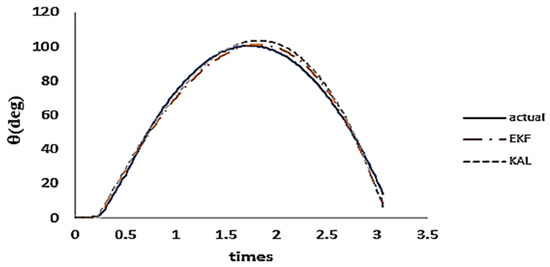

A range of techniques were used to assess the precision of the angle measurement, including acceleration estimation, gyro estimation, Kalman filter (KF), complementary filter, gradient descent, and extended Kalman filter (EKF). Following our investigation, we determined that extended Kalman had the most favorable outcomes, as shown in Figure 1.

Figure 1.

Comparison of curve errors among estimation algorithms.

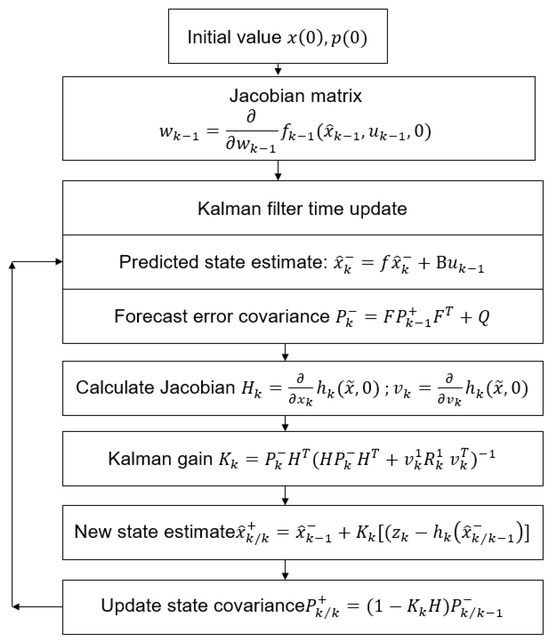

The EKF is more suitable for nonlinear problems than the KF, which is mainly used for general linear problems in discrete time-controlled linear systems. Many systems often exhibit nonlinear behavior. Consequently, this study uses an EKF. The EKF uses the KF on a model that is approximately linearized using the Jacobian series expansion of nonlinear functions. It then employs a KF to predict and update the measurement and state equations [29], as depicted in Figure 2.

Figure 2.

Diagram and formulas related to the use of Kalman filter in estimating the system state.

The EKF algorithm comprises the following primary steps: Initially, the values of and are established, where represents the initial estimation of the state and represents the initial covariance of the error. Subsequently, the Jacobian matrix is computed based on the process model in relation to the state. During the time update step of the Kalman filter, the anticipated state estimate is determined by combining the process model and the control input . At the same time, the forecast error covariance is derived by using the Jacobian matrix and the process noise covariance . The Jacobian matrix of the measurement model with respect to the state and the Jacobian matrix with respect to the noise are calculated thereafter. The Kalman gain is calculated by using the forecast error covariance , the Jacobian matrix , and the measurement noise covariance . The revised state estimate incorporates the measurement . Ultimately, the state covariance is revised. The procedure involves iterating through each time step and updating the state estimate and error covariance using the process model, measurement model, and their corresponding Jacobian matrices.

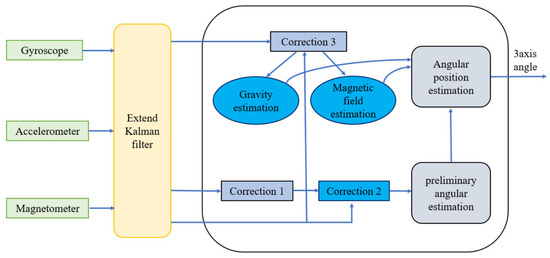

Figure 3 shows a schematic of the integration of sensors and estimation methods used in motion-tracking systems. The system is based on an EKF algorithm that processes data from three separate sensors: a gyroscope, an accelerometer, and a magnetometer. The sensors feed the data into the filter, which processes this information through several correction procedures to optimize the accuracy of motion tracking. The process began with an initial estimation of gravity, which was then refined using correction 1. Concurrently, the magnetic field was estimated and refined using correction 2. The refined estimates were combined to form an initial angular estimate. This initial estimate was further improved by correction 3, leading to the final output of the system: the angular position estimation. The result is represented as a 3-axis angle, which precisely indicates the orientation of the object being tracked in three-dimensional space. The figure illustrates a sophisticated algorithm that merges data from multiple sensors to track motion accurately, which is a critical capability for a wide range of applications in the technology and engineering domains.

Figure 3.

Sensor integration and estimation techniques in motion tracking systems.

2.2. Mathematical Model for Joint Angle Estimation

- (a) Estimation of upper extremity joint angles

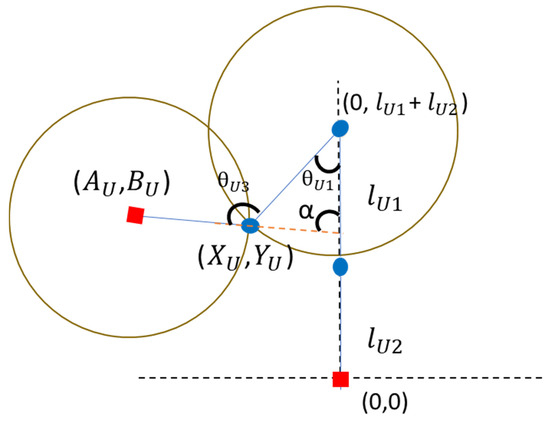

The joint angle estimation equation in this study was extended based on a mathematical model reported in the literature [34]. The angle of each body joint is estimated using spatial geometric equations. Figure 4 shows a schematic of the elbow joint angle estimation. First, the coordinates of the shoulder joint are set as (0, ). Equations (1) and (2) can be readily expressed with centers located at (0, ) and ( correspondingly. The solution for the intersection of these two circles represents the precise location of the elbow joint. Furthermore, the validity of this solution is supported by the equation for a straight line, (3). The inverse trigonometric function, the radian R calculated from Formula (4) can be brought into Formula (5) to estimate the angle between the upper arm and the body (shoulder joint angle), and finally, the elbow joint angle can be deduced according to Formula (6). The meanings of the symbols are explained in Table 1.

Figure 4.

Diagram depicting the calculation of joint angles in the upper limb.

Table 1.

Upper body code.

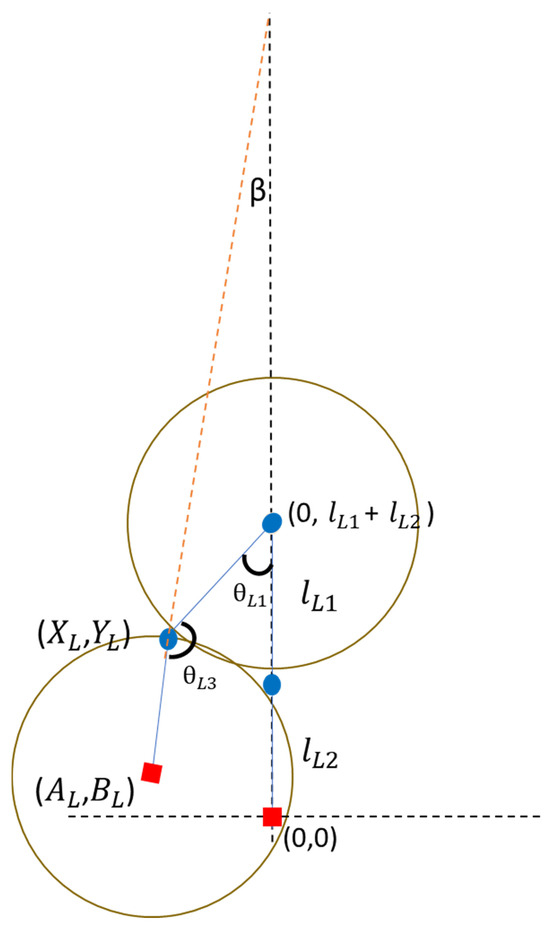

- (b) Estimation of lower extremity joint angles

Figure 5 shows a schematic of the knee joint angle estimation. First, the coordinates of the hip joint are set to (0, ), and the same solution is obtained according to the circle Equations (7) and (8), and the same solution is obtained from the linear Equation (9) to confirm the correct coordinate position of the knee joint. After obtaining the correct coordinates of the knee joint, use the inverse trigonometric function to calculate the radian R from Formula (10) and bring it into Formula (11) to calculate the angle between the thigh and the body (hip joint angle) , and finally deduce the knee joint angle according to Formula (12). The meanings of the symbols are explained in Table 2.

Figure 5.

Schematic diagram for estimation of lower extremity joint angles.

Table 2.

Lower body code.

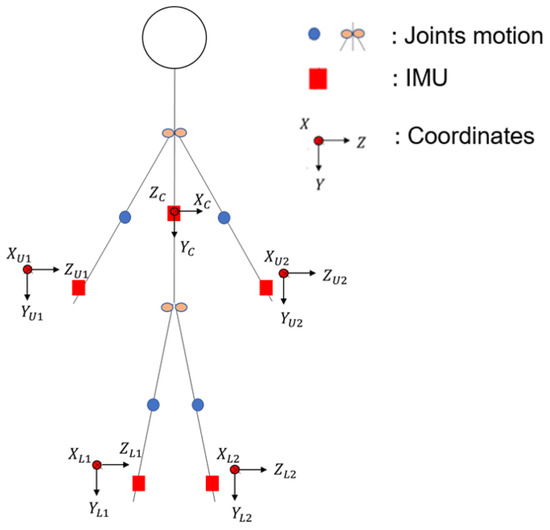

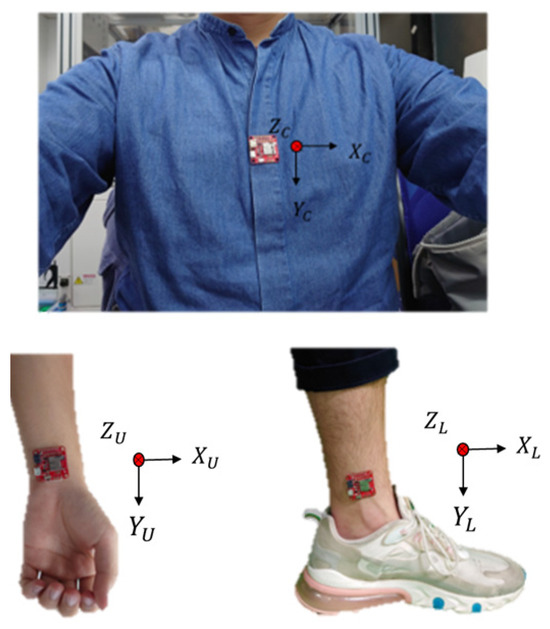

2.3. Minimal IMU Model for Dynamic Motion Observation

To represent the movement posture of the human body fully, the human body undergoes a displacement phenomenon during movement. This research uses spatial geometry to obtain the angle; therefore, when the human body experiences a movement phenomenon, the displacement distance must be considered. Therefore, the displacement of the human body was obtained by using an inertial sensor on the chest, as shown in Figure 6. In the geometrical algorithm of hand space , add the body displacement , , and add , to the leg in the algorithm , such as Formulas (13)–(18), so that the moving position can be estimated as a joint angle.

Figure 6.

Minimal IMU sensor placement on body.

2.4. The Impact of IMU Placement Position

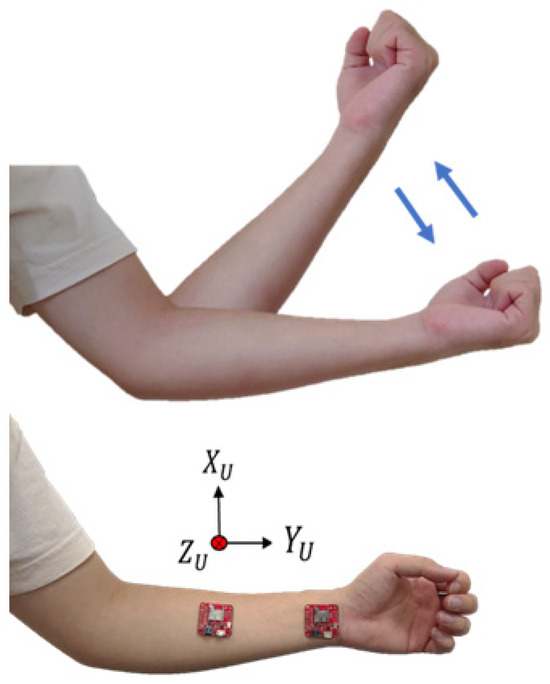

In previous studies, to estimate the joint angles of the upper limbs of the body, more than two IMUs were required to collect the posture signals of the upper limbs [35,36], which not only increased the cost of use but also caused poor user comfort. The unit is used to estimate the joint angle of the body limb; therefore, a relatively stable position must be selected to place the IMU. For the upper limb, when the upper arm of the human body moves, the lower arm must move accordingly. Therefore, we chose to place the inertial sensors on the wrist and forearm, as shown in Figure 7. In this study, acceleration was an important signal when estimating the joint angles. Therefore, five sets of flexing exercises were performed to measure acceleration values, as shown in Figure 7.

Figure 7.

The position of the upper limb inertial sensors.

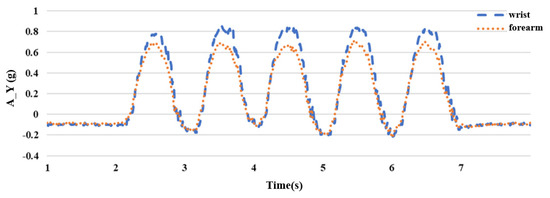

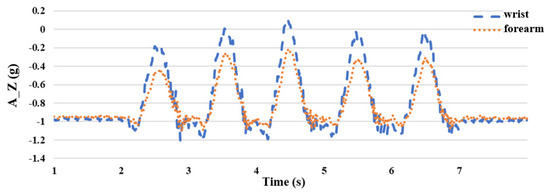

Because the hand of the upper extremity lifts upward during the flexing exercise, the rotation axis is the X axis with a smaller acceleration value; therefore, it is obtained from the Y and Z axes with larger acceleration values. After the experiment, it was found that placing the sensor on the wrist had a greater acceleration value than that on the forearm, as shown in Figure 8 and Figure 9, which is beneficial to the subsequent joint angle estimation; therefore, we chose to place the inertial sensor on the wrist. The vertical axis (A_Y) has units of “g,” where 1 g is equivalent to an acceleration of 9.8 m/s2, corresponding to the acceleration due to gravity. The horizontal axis represents time in seconds (s).

Figure 8.

Comparison diagram of wrist and forearm Y-axis acceleration A_Y.

Figure 9.

Comparison diagram of wrist and forearm Z-axis acceleration A_Z.

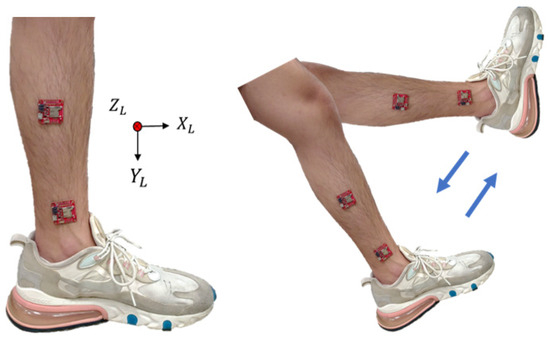

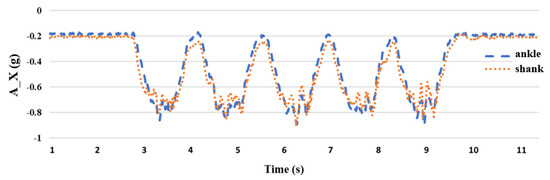

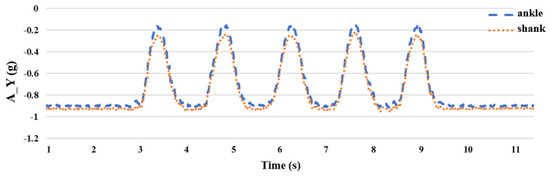

Prior research has shown that the collection of attitude signals to estimate the joint angles of the lower limbs of the body required the use of more than two IMUs [26]. For the lower body part, this study uses the leg stretching exercise to carry out the experiment. During the leg stretching exercise, the shank is mainly in motion; therefore, we chose to place inertial sensors on the ankle and shank and performed five sets of leg stretching movements as shown in Figure 10. Because the rotation axis of the leg-stretching movement is the Z axis with a smaller acceleration value, it is obtained from the X and Y axes with larger acceleration values, as shown in Figure 11 and Figure 12, respectively. After the experiment, it can be seen that when the sensor is placed on the ankle, the acceleration value of the X axis has a small signal fluctuation, and the acceleration value of the Y axis has a larger acceleration value, which is beneficial to the estimation of the joint angle later; therefore, the inertial sensor is selected, and the measuring device is placed at the ankle.

Figure 10.

The position of the inertial sensor of the lower extremity.

Figure 11.

Comparison of X-axis acceleration A_X between the ankle and the shank.

Figure 12.

Comparison of Y-axis acceleration A_Y between the ankle and the shank.

In this study, it was decided to place the upper part of the inertial measurement unit at the wrist and the lower part at the ankle. The hand placement direction is that the X axis is perpendicular to the arm and the Y axis is parallel to the arm; the leg placement direction is that the X axis is perpendicular to the shank and the Y axis is parallel to the shank. The body’s inertial sensor is placed on the chest because, when the human body rotates, there is mainly a large displacement.

The chest undergoes minimal movement, particularly the abdominal region. Therefore, positioning the inertial measurement unit (IMU) at this location yields more accurate signals with an optimal placement direction. It is recommended that the IMU be oriented along the Z axis perpendicular to the body and along the Y axis parallel to the body, as illustrated in Figure 13. Once the sensor position is determined, data such as the angular velocity, acceleration, and rotational angles along the three axes can be captured. Subsequently, an algorithm was employed to infer the motion trajectory. The primary objective of the experiment was to utilize a single inertial sensor to estimate the joint angles of specific body parts. Thus, a precise directional definition is crucial. Using the standing posture as a reference point, the alignment of the sensor direction with that of the wrist and ankle is essential. The X-axis acceleration defines the left-right swing direction (roll), the Y-axis acceleration denotes the rotation direction (yaw), and the Z-axis acceleration signifies the forward–backward swing direction (pitch). As gravity maintains a consistent direction, any change in attitude alters the gravity component. The attitude angle can be accurately calculated by integrating the transformation data with sensor fusion.

Figure 13.

IMU placement and three-axis direction.

3. Motion Joint Angle Verification

3.1. Image Recognition Technology

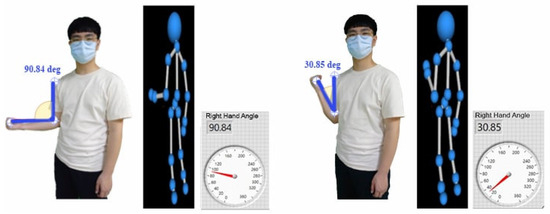

A Kinect sensor is used in picture recognition technology to compare the joint angles determined by a solitary inertial sensor. Kinect is equipped with a 3D depth sensor and an infrared emitter that enables real-time tracking of the user in front of the camera. The random forest method, which is an effective ensemble learning technique, was used for classification and prediction purposes. Random forests can effectively categorize joints such as shoulders and elbows using bone data obtained from the Kinect camera in the context of recognizing significant locations on the human body. Random forests are renowned for their resilience and precision in managing intricate classification and prediction tasks. Ensemble learning is a strategy that integrates many decision trees to improve prediction accuracy. The random forests model constructs each decision tree individually, and the final forecast is determined by combining the predictions of all the individual trees. Random forests excel at efficiently processing large datasets and high-dimensional data. Random forests may enhance generalization performance and mitigate overfitting by using the technique of randomly selecting subsets of features and data points for each tree. This feature makes it especially well suited for applications such as picture identification, where the input data might be of great dimensionality and complexity. Random forests may use bone data obtained from the Kinect sensor to effectively categorize and forecast the positions of crucial joints, including the shoulders, elbows, and wrists. Significantly, the angle of the elbow joint undergoes a transition from approximately 90° to 30° during activity, as shown in Figure 14. The Random forests algorithm effectively captures and interprets tiny variations in joint angles during physical activities, as indicated by this range-of-motion detection.

Figure 14.

Kinect camera-based angle measurement for upper extremity positions.

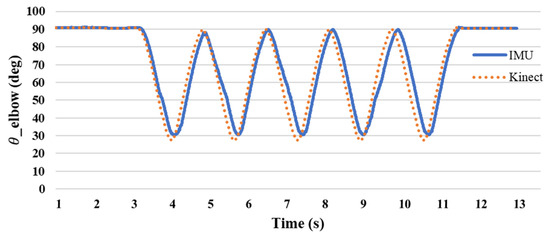

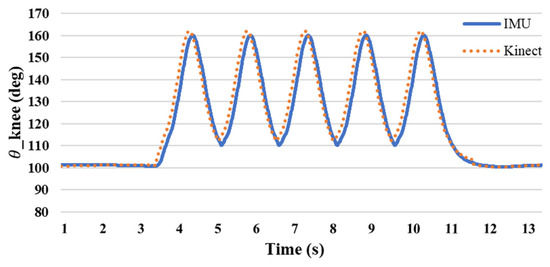

3.2. The Difference between Image Recognition Technology and Single-Sensor Estimation of Joint Angle

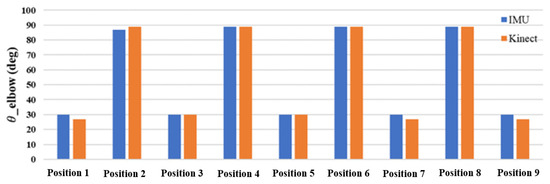

The experimental methods included five groups each of flexing and five groups of leg stretching exercises. Figure 15 shows the angle of the elbow joint during curling. The angle measured during the preparatory movement was 90°. When the forearm is raised, the angle decreases as the shoulder approaches. The ange changed from 90 to 30 degrees, with an average difference rate of 5.8%. Figure 16 shows the knee joint angles measured during stretching exercises. The measured angle was 100° when the shank was raised and changed from 110° to 160° during further passive flexion. The average difference rate is 0.8%, and it can be seen that the joint angles obtained by the single inertial sensor and the Kinect sensor are similar.

Figure 15.

The difference between IMU and Kinect elbow joint angle.

Figure 16.

IMU and Kinect knee joint angle difference.

The difference between the joint angles measured by a single inertial sensor and the Kinect image recognition technology was within 5°, and the angle changes were similar. Figure 17 and Figure 18 show the comparison, and the differences in the values can be clearly seen. The testing findings of elbow and knee motions demonstrate that the new posture angle calculation technique and the Kinect image recognition technology provide almost comparable measurements of joint angles. The elbow joint has a standard deviation of roughly 1.838 for the inaccuracy between the IMU and Kinect, with a variance of about 3.377. This suggests a strong correlation between the two measuring instruments, with negligible errors and few fluctuations. The IMU and Kinect measurements exhibit slight differences. The knee joint exhibits a standard deviation of roughly 1.062 and a variation of about 1.128 in the inaccuracy between the IMU and Kinect. Furthermore, this suggests a strong correlation between the two measuring instruments, with negligible errors and low fluctuations. The results obtained from the IMU and Kinect exhibit slight disparities.

Figure 17.

Comparison of elbow angles at various positions using IMU and Kinect.

Figure 18.

Comparison of knee angles at various positions using IMU and Kinect.

Based on these findings, it is evident that the novel posture angle calculation technique used in this work may serve as a reliable benchmark for measuring joint angles. It exhibits a reasonably high level of accuracy and minimal mistakes when compared to the Kinect image recognition technology. The congruity between the data of the IMU and Kinect indicates that this novel technology has promise for extensive use in research and applications pertaining to the monitoring and analysis of bodily movements.

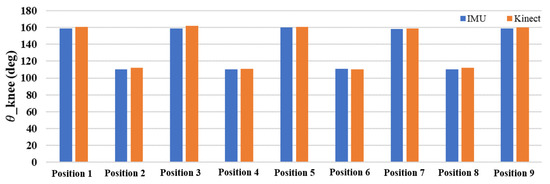

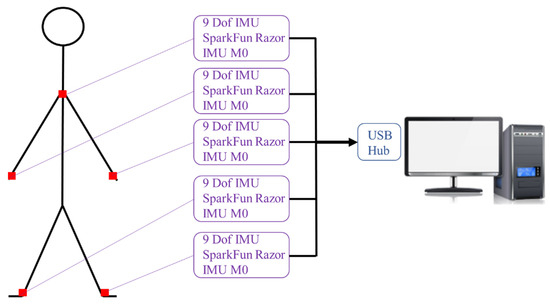

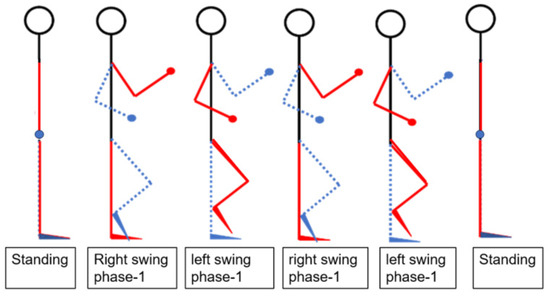

4. Experiment on Human Walking Posture

We use five IMU sensors to track human gait. Five inertial sensors were placed on both the wrists and ankles, and one IMU was placed on the chest to analyze the human walking motion for posture recognition in Figure 19. As there was no displacement of the chest during the walking experiment and the chest remained upright, no chest measurements were taken. The study focused on designing a human–machine interface to provide data on the angles of the shoulder, elbow, hip, and knee joints. This experiment focused on the posture and joint angles of the human body while walking in Figure 20. First, we confirmed the lengths of the upper arm , forearm , thigh , and shank of the subject and then performed a walking exercise, as shown in Figure 21. The image depicts a sequence of figures illustrating the stages of bipedal walking. The series of six states arranged horizontally captures the progression from standing to walking and back to standing. Starting from the left, the first state portrays the “Standing” phase, showing a stick figure in an upright position with a circle representing the head, a vertical line for the body, and two straight lines for the legs. Moving to the right: The second state, labeled “Right swing phase-1”, demonstrates the initial movement of the right leg forward while the left leg remains stationary and vertical. The third state, labeled “Left swing phase-1”, mirrors the second state, with the left leg in motion and the right leg supporting the body. The fourth and fifth states repeat the right and left swing phases, respectively, indicating a continuous walking cycle. The sixth and final sate returns to the “Standing” phase, with the stick state standing upright and legs together. Throughout the states, the dashed blue lines trace the trajectory of the leg movement relative to the standing position. This schematic representation serves to analyze the mechanics of walking motion, which is commonly utilized in biomechanics to study the gait cycle.

Figure 19.

The hardware architecture of the new angle calculation system.

Figure 20.

Virtual model walking motion posture simulation.

Figure 21.

Schematic diagram of walking motion.

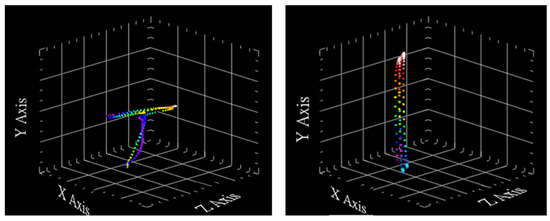

By determining the lengths of the subject’s upper arms , arms , thighs , and calves of the subject, along with the tester’s upper arm length of 26 cm, forearm length of 27 cm, thigh length of 36 cm, and shank length of 35 cm, we applied the coordinate algorithm to each inertial sensor. The trajectories of the wrist and ankle inertial sensors during walking, as depicted in Figure 22, provide the moving coordinates required to calculate the joint angles using spatial geometric equations. The colors of the points represent the positions of the points over time. As time progresses, the points change color, allowing us to visualize the movement or trajectory of the points in 3D space. This color-coding helps in understanding the sequence and progression of the points’ positions over the given period. Furthermore, its color changes continuously, so that each color is distinct from the previous one. This continuous color change makes it easy for the reader to follow and understand the path of the sensors during movement, allowing for a clear understanding of the movement path over time.

Figure 22.

IMU trajectory diagram at the wrist and ankle during walking.

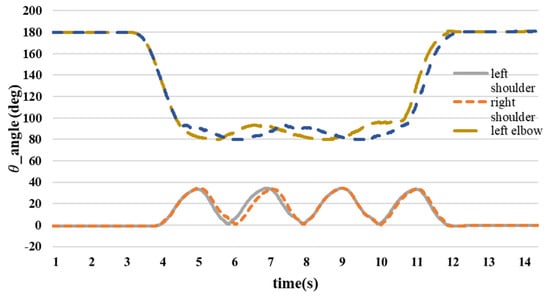

Figure 23 illustrates the variation in the joint angles throughout the walking motion. The shoulder joint angle remained close to 0° during the preparatory phase, and both directions were considered positive. During the exercise, the shoulder joint swung back and forth, reaching a maximum angle of 35°. Following the exercise, the shoulder joint angle returned to approximately 0°, transitioning from 0° to 35°.

Figure 23.

Upper limb joint angle.

At the initiation of the walking motion, the forearm is raised and moved in tandem with the upper arm in a swinging motion. Their hands remained in a consistent position while swinging back and forth. The angle of the elbow joint fluctuated during the swing and did not remain fixed at 90°. Consequently, the elbow joint angle varied from approximately 80° to 90°, returning to 180° at the conclusion of the movement.

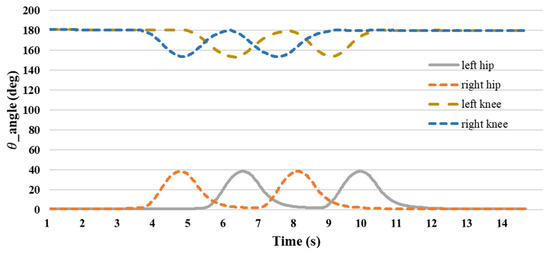

Figure 24 depicts the evolution of joint angles during the walking motion. The hip joint angle remained close to 0° during the preparatory phase. Initially, the right foot was raised and reached a maximum angle of approximately 40°, followed by the left foot, which had a similar maximum angle. This alternating pattern continued, with the right foot lifting first, followed by the left foot. As the walking motion progressed, the hip joint angle returned to nearly 0° and transitioned during flexion from 0° to 40°. At the onset of the walking motion, the right foot was initially raised to a minimum joint angle of 153°, followed by the left foot reaching a minimum angle of 153°. Subsequently, the right foot was lifted first, followed by the left foot, and the knee joint angle reached 180° at the end of the walking motion. The knee angle transitioned from 180° to 153° during movement.

Figure 24.

Lower extremity joint angle.

Based on the findings of the walking exercise experiment, it is evident that the shoulder joint angle oscillates back and forth, reaching a peak of approximately 35° during exercise. The elbow joint maintained a consistent posture, with an angle ranging between 80° and 90° throughout the exercise. When the thigh is lifted during movement, the hip joint angle is approximately 38°, and the knee joint angle reaches approximately 155° when the shank is raised. The data of Table 3 detailing the use of the IMU to measure the initial state prior to lifting the foot or hand and displaying the angular positions reveal several key observations and analyses. Initially, both the feet and hands exhibited angular values close to 0°, indicating their starting positions before any movement occurred. When the foot was raised, there were noticeable changes in both foot and body angles, highlighting significant variations in their angular values. Similarly, lifting the hand results in alterations in the angles of both the hand and body, showing the body’s response to the action of raising the hand. Motion angle graphs visually represent these angular changes during the process of lifting the foot or hand, offering a comprehensive view of the motion dynamics and reactions of the body. Overall, the data of Table 3 provided valuable insights into the angular adjustments of body parts during specific movements, thereby enhancing our understanding of body structure and motion patterns during various activities.

Table 3.

Joint angles involved in the motion of walking.

Our study has several limitations. Firstly, it requires five IMU sensors to track body movements, which can be cumbersome and inconvenient for practical applications. Secondly, while the system demonstrates relatively high accuracy, there are still inherent errors that may not suffice for applications requiring extremely high precision. Thirdly, the use of multiple sensors and complex algorithms increases the system’s cost and complexity. Lastly, the current system focuses on specific exercises and may not be scalable or easily integrated with other systems. Future research should aim to further reduce the number of sensors, enhance accuracy, optimize cost and complexity, and expand tracking and integration capabilities. Table 4 below provides a detailed analysis of the limitations of the IMU-based posture recognition system and discusses potential areas for improvement. Each limitation is described, followed by a discussion of its implications and suggestions for future research.

Table 4.

Limitations and discussions of IMU-based posture recognition system.

5. Conclusions

This study utilized the extended Kalman filter (EKF) to acquire precise attitude information, followed by the implementation of a novel attitude angle estimation system for estimating joint angles and attitude presentation. This system enables the estimation of body position using a single inertial sensor for one extremity, which is the objective of the study. The verification of the joint angles involves the use of dual inertial sensors and high-accuracy image recognition technology to cross-validate each other. The experimental results of the flexion and leg extension exercises demonstrated that the difference between the single inertial sensor and the dual inertial sensor was within 10°. Similarly, the variance between the single inertial sensor and the image recognition technology was within 5°, which closely approximated the actual motion angles. The user-friendly man-machine interface allows users to intuitively grasp their current posture and conveniently store all movement tracks while wearing the device. For athletes, immediate feedback on posture correctness and effectiveness can enhance training outcomes. Moreover, the system can be beneficial in medical settings for tracking user movements in a more private manner than using cameras, thereby avoiding blind spots during monitoring. In the rapidly evolving field of posture-recognition technology, a cutting-edge posture-angle calculation system leveraging a single inertial measurement unit (IMU) and spatial geometric equation was introduced. This wearable system facilitates continuous monitoring of body movements without the spatial limitations or occlusion issues commonly associated with camera-based methods. The advantages of this system are manifold, providing precise insights into posture changes to assist users in evaluating movement accuracy, preventing sports-related injuries, and optimizing sports performance. By integrating a single inertial sensor with a filtering mechanism for trajectory and coordinate calculations in 3D space in conjunction with the spatial geometry equation developed in this study, accurate joint angle computations can be achieved in response to changes in body posture. The effectiveness of the system was validated by comparing the estimated joint angles with those obtained using dual inertial sensors and image recognition technology. These outcomes underscore the reliability and precision of the new attitude angle estimation system, which serves as a valuable tool for joint angle assessment.

Author Contributions

Conceptualization, Y.S.; methodology, Y.S. and T.H.; software, T.H.; validation, Y.S. and T.H.; Formal analysis, Y.S. and T.H.; Visualization, T.H.; investigation, T.H.; data curation, G.-Y.C.; writing—original draft preparation, G.-Y.C.; writing—review and editing, Y.S.; supervision, Y.S.; project administration, Y.S.; funding acquisition, Y.S and T.H. All authors have read and agreed to the published version of the manuscript.

Funding

We thank the financial support from MOST, Taiwan (Project number: MOST 108-2221-E-027-097) and the support by The University of Danang, University of Science and Technology (project code number: T2023-02–09).

Institutional Review Board Statement

Ethical review and approval were waived for this study due to all participants are laboratory project members.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lin, C.Y.; Chuang, L.W.; Huang, C.C.; Lin, K.J.; Fahn, C.S. Development of hand posture recognition system for finger gaming robot. In Proceedings of the 2013 International Conference on Advanced Robotics and Intelligent Systems (ARIS), Tainan, Taiwan, 31 May–2 June 2013; pp. 86–91. [Google Scholar] [CrossRef]

- Lee, M.F.R.; Chen, Y.C.; Tsai, C.Y. Deep Learning-Based Human Body Posture Recognition and Tracking for Unmanned Aerial Vehicles. Processes 2022, 10, 2295. [Google Scholar] [CrossRef]

- Yang, X.; Ren, X.; Chen, M.; Wang, L.; Ding, Y. Human Posture Recognition in Intelligent Healthcare. J. Phys. Conf. Ser. 2020, 1437, 012014. [Google Scholar] [CrossRef]

- Hong, Z.; Hong, M.; Wang, N.; Ma, Y.; Zhou, X.; Wang, W. A wearable-based posture recognition system with AI-assisted approach for healthcare IoT. Futur. Gener. Comput. Syst. 2022, 127, 286–296. [Google Scholar] [CrossRef]

- Wang, J.; Qiu, K.; Fu, J.; Peng, H.; Zhu, J. AI Coach: Deep Human Pose Estimation and Analysis for Personalized Athletic Training Assistance. In Proceedings of the 27th ACM International Conference on Multimedia—MM’19, Nice, France, 21–25 October 2019; pp. 374–382. [Google Scholar] [CrossRef]

- Dong, Z.; Wang, X. An improved deep neural network method for an athlete’s human motion posture recognition. Int. J. Inf. Commun. Technol. 2023, 22, 45–59. [Google Scholar] [CrossRef]

- Wang, Q. Application of Human Posture Recognition Based on the Convolutional Neural Network in Physical Training Guidance. Comput. Intell. Neurosci. 2022, 2022, 5277157. [Google Scholar] [CrossRef] [PubMed]

- Yu, X.; Xiao, B.; Tian, Y.; Wu, Z.; Liu, Q.; Wang, J.; Sun, M.; Liu, X. A Control and Posture Recognition Strategy for Upper-Limb Rehabilitation of Stroke Patients. Wirel. Commun. Mob. Comput. 2021, 2021, 6630492. [Google Scholar] [CrossRef]

- Lahner, C.R. Adult weight measurement: Decoding the terminology used in literature. S. Afr. J. Clin. Nutr. 2019, 32, 28–31. [Google Scholar] [CrossRef]

- Kachlik, D.; Varga, I.; Báča, V.; Musil, V. Variant anatomy and its terminology. Medicina 2020, 56, 713. [Google Scholar] [CrossRef] [PubMed]

- Duren, D.L.; Sherwood, R.J.; Czerwinski, S.A.; Lee, M.; Choh, A.C.; Siervogel, R.M.; Chumlea, W.C. Body composition methods: Comparisons and interpretation. J. Diabetes Sci. Technol. 2008, 2, 1139–1146. [Google Scholar] [CrossRef]

- Gan, Q.; Li, Y.; Wang, G.; Zhang, Y. Application Research of Optical Tracking Point Layout in Computer Motion Capture Technology: Take Walking Motion as an Example. In Proceedings of the 2020 International Conference on Innovation Design and Digital Technology (ICIDDT), Zhenjing, China, 5–6 December 2020; pp. 548–552. [Google Scholar] [CrossRef]

- Li, N.; Dai, Y.; Wang, R.; Shao, Y. Study on Action Recognition Based on Kinect and Its Application in Rehabilitation Training. In Proceedings of the 5th IEEE Fifth International Conference on Big Data and Cloud Computing, Dalian, China, 26–28 August 2015; pp. 265–269. [Google Scholar] [CrossRef]

- Gahlot, A.; Agarwal, P.; Agarwal, A.; Singh, V.; Gautam, A.K. Skeleton based Human Action Recognition using Kinect. Int. J. Comput. Appl. 2016, 21, 975–8887. [Google Scholar]

- Wang, W.J.; Chang, J.W.; Haung, S.F.; Wang, R.J. Human Posture Recognition Based on Images Captured by the Kinect Sensor. Int. J. Adv. Robot. Syst. 2016, 13, 54. [Google Scholar] [CrossRef]

- Jais, H.M.; Mahayuddin, Z.R.; Arshad, H. A review on gesture recognition using kinect. In Proceedings of the 2015 International Conference on Electrical Engineering and Informatics (ICEEI), Denpasar, Indonesia, 10–11 August 2015; pp. 594–599. [Google Scholar] [CrossRef]

- Li, Y.; Chu, Z.; Xin, Y. Posture recognition technology based on kinect. IEICE Trans. Inf. Syst. 2020, E103D, 621–630. [Google Scholar] [CrossRef]

- Yang, D.; Huang, J.; Tu, X.; Ding, G.; Shen, T.; Xiao, X. A Wearable Activity Recognition Device Using Air-Pressure and IMU Sensors. IEEE Access 2019, 7, 6611–6621. [Google Scholar] [CrossRef]

- Zhuang, W.; Chen, Y.; Su, J.; Wang, B.; Gao, C. Design of human activity recognition algorithms based on a single wearable IMU sensor. Int. J. Sens. Netw. 2019, 30, 193–206. [Google Scholar] [CrossRef]

- Valarezo, E.; Rivera, P.; Park, J.M.; Gi, G.; Kim, T.Y.; Al-Antari, M.A.; Al-Masni, M.; Kim, T.S. Human Activity Recognition Using a Single Wrist IMU Sensor via Deep Learning Convolutional and Recurrent Neural Nets Index Terms—Human Activities, Inertial Measurement Units (IMUs), Convolutional Neural Nets (CNN), Recurrent Neural Nets (RNN), HAR System. J. ICT Des. Eng. Technol. Sci. JITDETS 2017, 1, 1–5. [Google Scholar]

- Patterson, M.; McGrath, D.; Caulfield, B. Using a tri-axial accelerometer to detect technique breakdown due to fatigue in distance runners: A preliminary perspective. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 6511–6514. [Google Scholar] [CrossRef]

- Liang, J.; Duan, H.; Li, J.; Sun, H.; Sha, X.; Zhao, Y.; Liu, L. Accurate Estimation of Gait Altitude Using One Wearable IMU Sensor. In Proceedings of the 2018 IEEE 1st International Conference on Micro/Nano Sensors for AI, Healthcare, and Robotics (NSENS), Shenzhen, China, 5–7 December 2018; pp. 64–67. [Google Scholar] [CrossRef]

- Fourati, H. Heterogeneous data fusion algorithm for pedestrian navigation via foot-mounted inertial measurement unit and complementary filter. IEEE Trans. Instrum. Meas. 2015, 64, 221–229. [Google Scholar] [CrossRef]

- Marins, J.L.; Yun, X.; Bachmann, E.R.; McGhee, R.B.; Zyda, M.J. An extended Kalman filter for quaternion-based orientation estimation using MARG sensors. IEEE Int. Conf. Intell. Robot. Syst. 2001, 4, 2003–2011. [Google Scholar] [CrossRef]

- Bachmann, E.R.; Duman, I.; Usta, U.Y.; Mcghee, R.B.; Yun, X.P.; Zyda, M.J. Orientation Tracking for Humans and Robots Using Inertial Sensors A Quaternion Attitude Filter. In Proceedings of the 1999 IEEE International Symposium on Computational Intelligence in Robotics and Automation, Monterey, CA, USA, 8–9 November 1999; pp. 187–194. [Google Scholar]

- Huang, Y.-P.; Chuang, C.-H. An Intelligent Identification Model for Classifying Trembling Patterns of Parkinson’s Disease. In Advances in Swarm Intelligence; Tan, Y., Shi, Y., Li, L., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 215–222. [Google Scholar] [CrossRef]

- Bellitti, P.; Borghetti, M.; Lopomo, N.F.; Sardini, E.; Serpelloni, M. Smart Brace for Static and Dynamic Knee Laxity Measurement. Sensors 2022, 22, 5815. [Google Scholar] [CrossRef] [PubMed]

- Kim, M.; Lee, S. Fusion Poser: 3D Human Pose Estimation Using Sparse IMUs and Head Trackers in Real Time. Sensors 2022, 22, 4846. [Google Scholar] [CrossRef]

- McGrath, T.; Stirling, L. Body-Worn IMU-Based Human Hip and Knee Kinematics Estimation during Treadmill Walking. Sensors 2022, 22, 2544. [Google Scholar] [CrossRef]

- Slyper, R.; Hodgins, J.K. Action capture with accelerometers. In Proceedings of the Eurographics/ACM SIGGRAPH Symposium on Computer Animation, Dublin, Ireland, 7–9 July 2008; pp. 193–199. [Google Scholar]

- Huang, Y.-P. System and Method Rehabilitation Monitoring System and Method. 82119-TW-PA. Available online: https://rndc.ntut.edu.tw/p/16-1037-107369.php?Lang=en (accessed on 1 April 2024).

- Banos, O.; Calatroni, A.; Damas, M.; Pomares, H.; Roggen, D.; Rojas, I.; Villalonga, C. Opportunistic Activity Recognition in IoT Sensor Ecosystems via Multimodal Transfer Learning. Neural Process. Lett. 2021, 53, 3169–3197. [Google Scholar] [CrossRef]

- Tian, Y.; Meng, X.; Tao, D.; Liu, D.; Feng, C. Upper limb motion tracking with the integration of IMU and Kinect. Neurocomputing 2015, 159, 207–218. [Google Scholar] [CrossRef]

- Hoang, T.; Shiao, Y. New Method for Reduced-Number IMU Estimation in Observing Human Joint Motion. Sensors 2023, 23, 5712. [Google Scholar] [CrossRef] [PubMed]

- Huang, Y.P.; Chou, Y.J.; Lee, S.H. An OpenPose-based System for Evaluating Rehabilitation Actions in Parkinson’s Disease. In Proceedings of the 2022 International Automatic Control Conference (CACS), Kaohsiung, Taiwan, 3–6 November 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Huang, Y.P.; Liu, Y.Y.; Hsu, W.H.; Lai, L.J.; Lee, M.S. Monitoring and assessment of rehabilitation progress on range of motion after total knee replacement by sensor-based system. Sensors 2020, 20, 1703. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).