FireSonic: Design and Implementation of an Ultrasound Sensing-Based Fire Type Identification System

Abstract

1. Introduction

- We address a shortcoming of current fire detection systems by adding a critical capability, fire type identification, to fire monitoring. To the best of our knowledge, FireSonic is the first system that leverages acoustic signals to determine fire types.

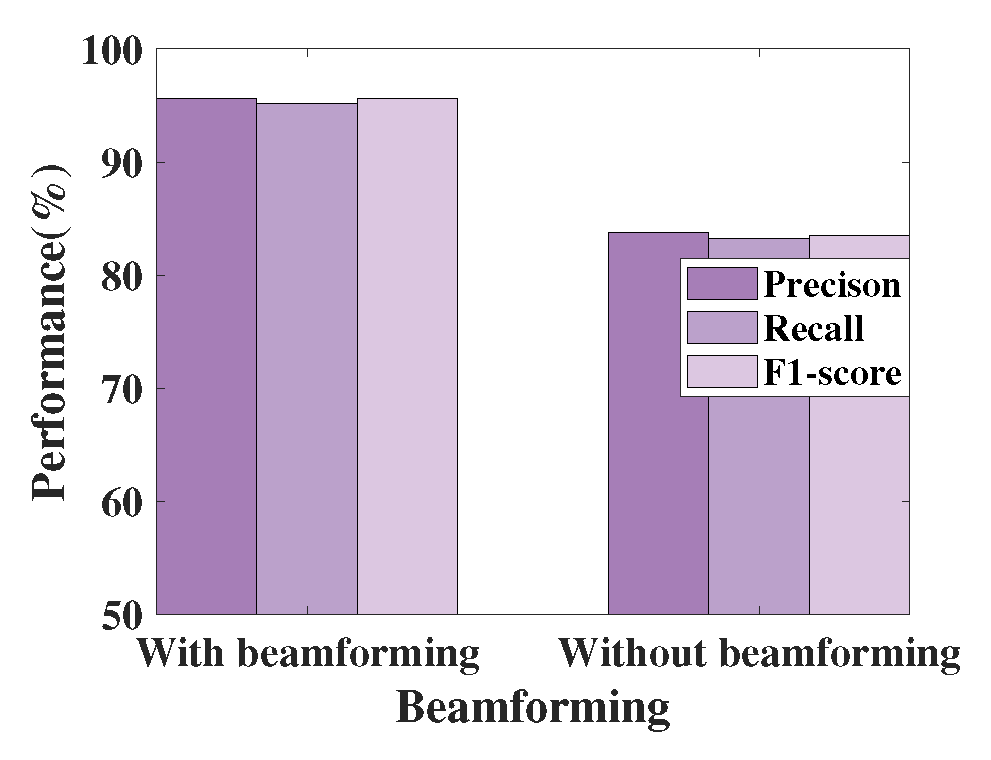

- We employ beamforming to enhance signal quality by reducing interference and noise. Our flame heat release rate (HRR) monitoring scheme uses acoustic signals to quantify the correlation between a fire’s heat release regions and sound propagation delays, which facilitates fire type determination and improves accuracy.

- We implement a prototype of FireSonic using low-cost commodity acoustic devices. Our experiments indicate that FireSonic achieves an overall accuracy of in determining fire types (experiments were conducted in the presence of professional firefighters and were approved by the Institutional Review Board).
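The two acoustic ideas named in the contributions above can be illustrated with a minimal sketch. It is not the FireSonic implementation: it assumes a uniform linear microphone array, a far-field source, integer-sample delay-and-sum beamforming, and the ideal-gas approximation for the speed of sound in air. The function names, the 48 kHz sample rate, and the 2 cm microphone spacing are hypothetical choices made for illustration only.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed ambient value for air near 20 °C


def sound_speed(temp_c):
    """Ideal-gas approximation of the speed of sound in air (m/s) at temp_c degrees Celsius."""
    return 331.3 * math.sqrt(1.0 + temp_c / 273.15)


def propagation_delay_s(path_m, temp_c):
    """Time (s) for sound to cross path_m metres of air at a uniform temperature."""
    return path_m / sound_speed(temp_c)


def steering_delays(num_mics, spacing_m, angle_deg, fs_hz, c=SPEED_OF_SOUND):
    """Arrival delay (in samples) at each microphone of a uniform linear array
    for a far-field plane wave arriving from angle_deg off broadside."""
    theta = math.radians(angle_deg)
    return [round(m * spacing_m * math.sin(theta) / c * fs_hz) for m in range(num_mics)]


def delay_and_sum(channels, delays):
    """Advance each channel by its arrival delay, then average the aligned samples."""
    earliest = min(delays)
    rel = [d - earliest for d in delays]  # non-negative shifts
    length = min(len(ch) - r for ch, r in zip(channels, rel))
    return [
        sum(ch[n + r] for ch, r in zip(channels, rel)) / len(channels)
        for n in range(length)
    ]


if __name__ == "__main__":
    fs = 48_000  # assumed sample rate (Hz)
    delays = steering_delays(num_mics=4, spacing_m=0.02, angle_deg=30.0, fs_hz=fs)

    # Synthetic test signal: a single pulse that reaches each microphone
    # with the delay computed above.
    pulse = [0.0] * 64
    pulse[10] = 1.0
    channels = [[0.0] * d + pulse[: len(pulse) - d] for d in delays]

    aligned = delay_and_sum(channels, delays)
    print("steering delays (samples):", delays)
    print("peak index after beamforming:", aligned.index(max(aligned)))

    # Hotter air shortens the propagation delay over the same 1 m path.
    print("delay at 20 °C (ms):", 1e3 * propagation_delay_s(1.0, 20.0))
    print("delay at 300 °C (ms):", 1e3 * propagation_delay_s(1.0, 300.0))
```

Under these assumptions, signals arriving from the steered direction add coherently while signals from other directions are attenuated by the averaging. A hotter region along the acoustic path shows up as a shorter propagation delay, which is the kind of delay change an HRR monitoring scheme could track.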

2. Background

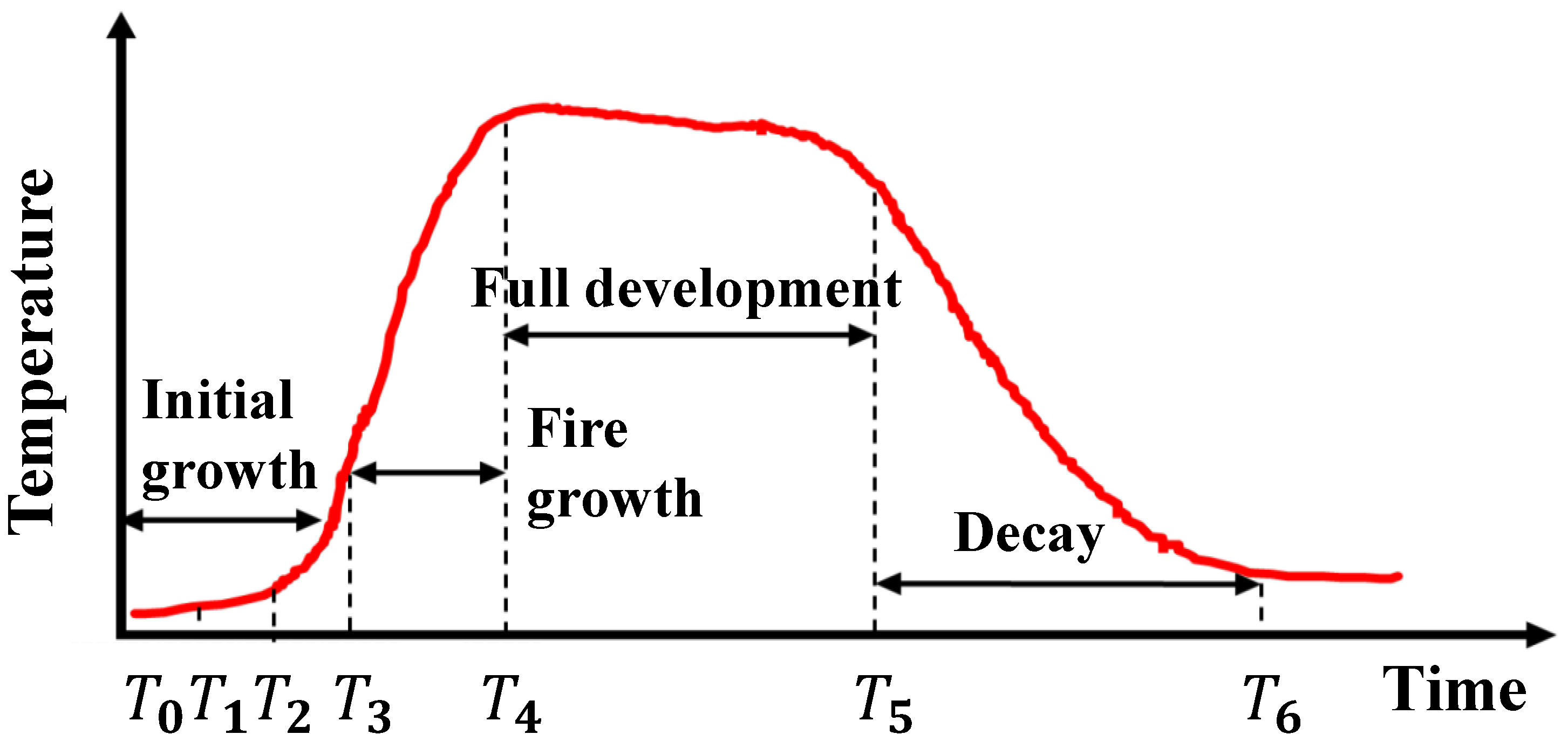

2.1. Heat Release Rate

2.2. Channel Impulse Response

2.3. Beamforming

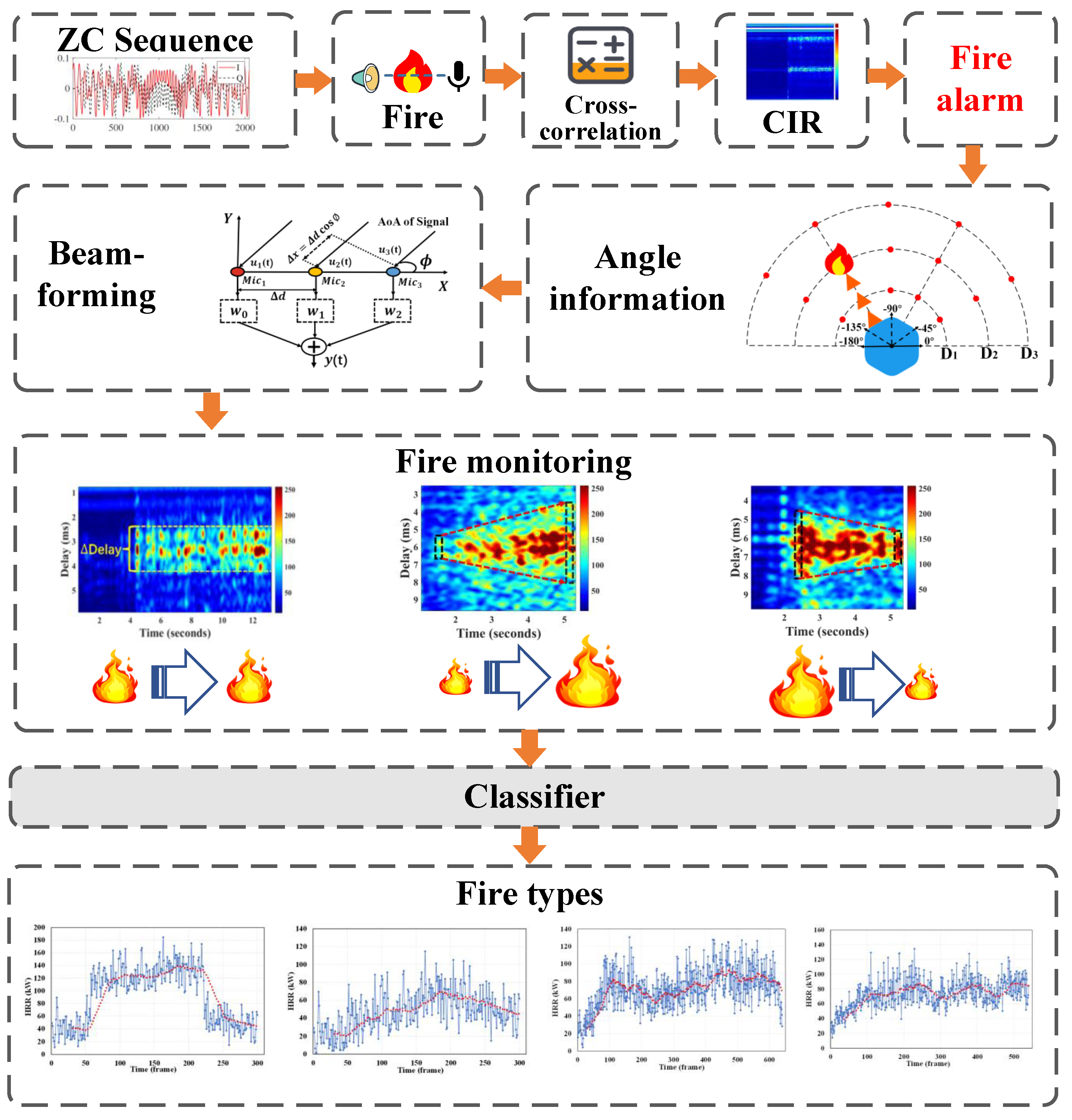

3. System Design

3.1. Overview

3.2. Transceiver

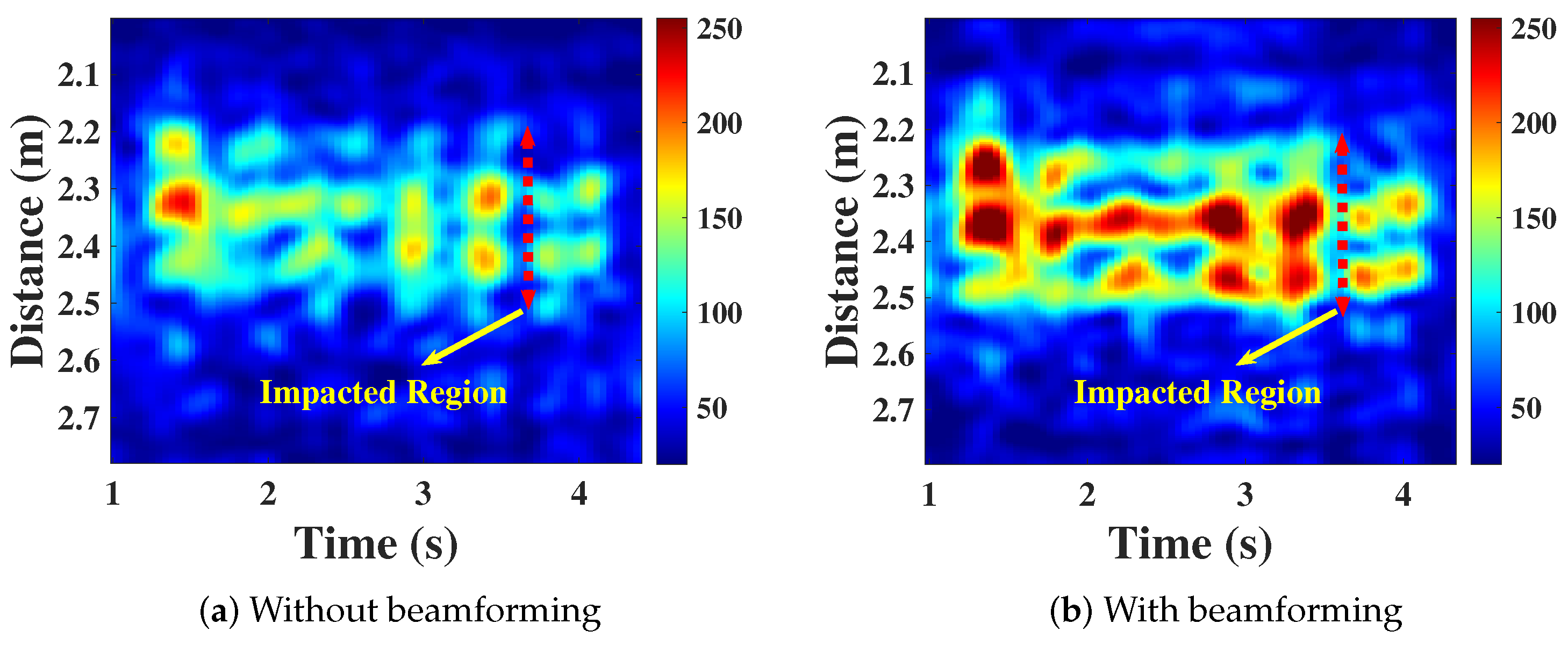

3.3. Signal Enhancement Based on Beamforming

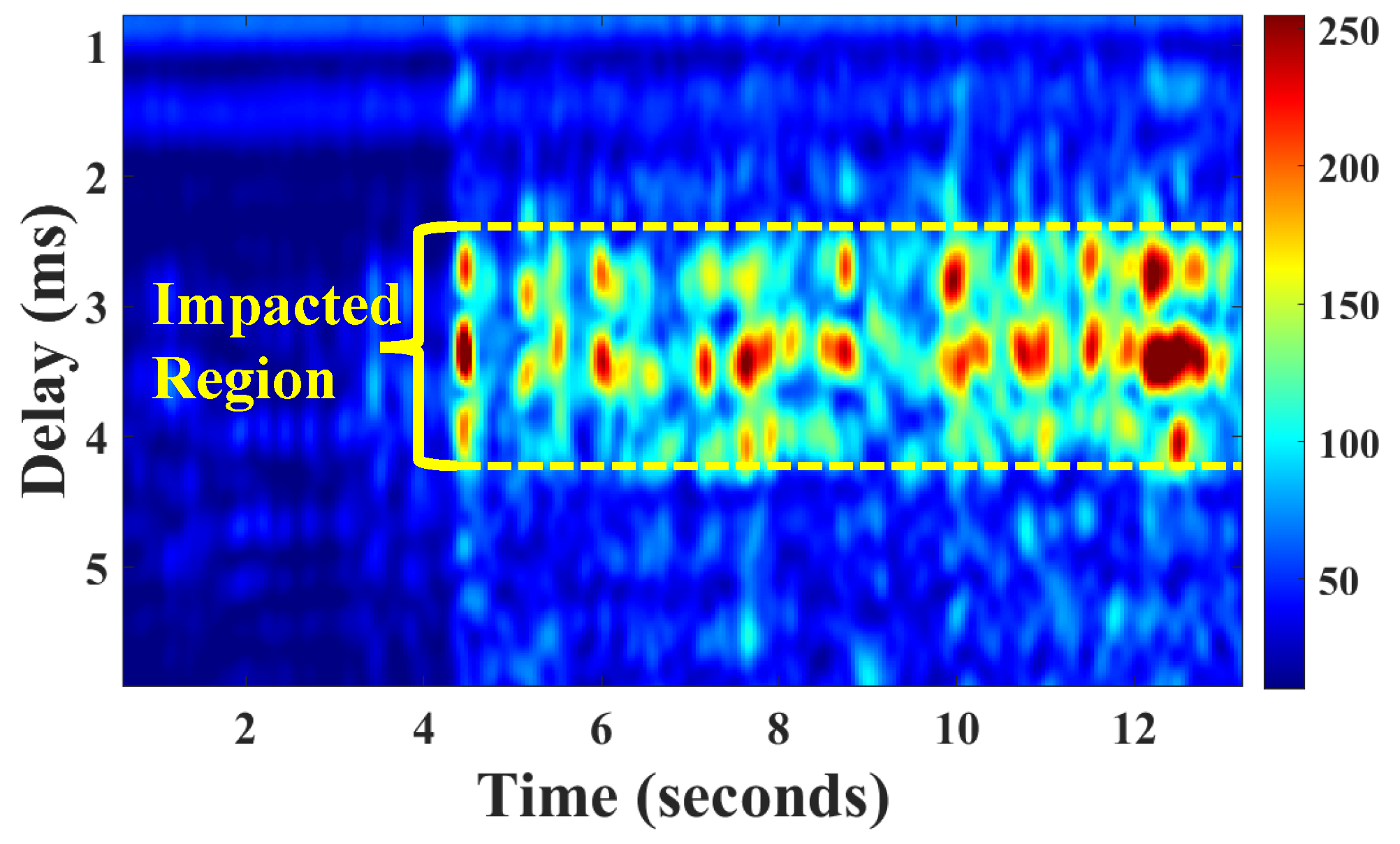

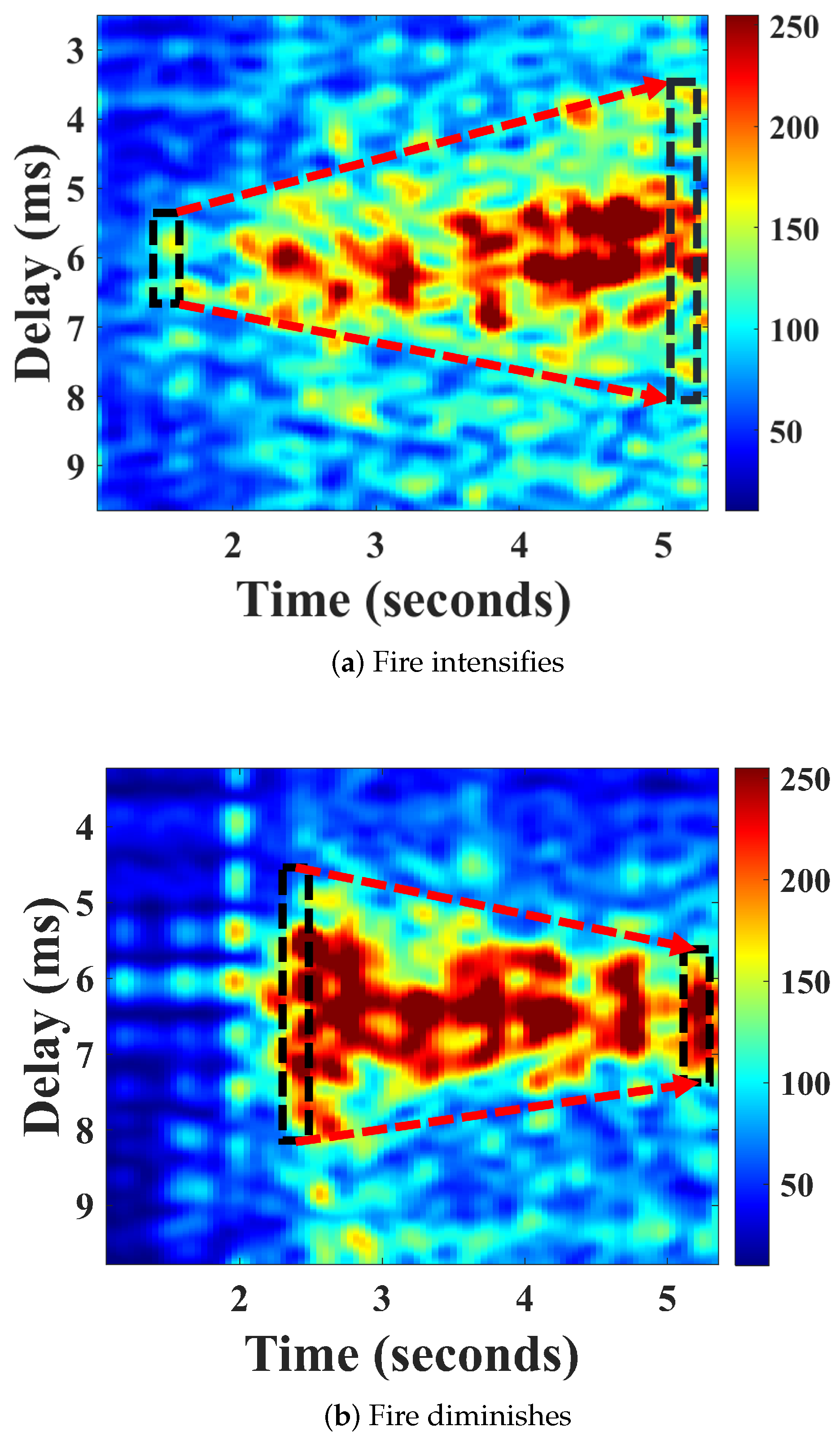

3.4. Mining Fire-Related Information in CIR

4. Results

4.1. CIR Measurements before and after Using Beamforming

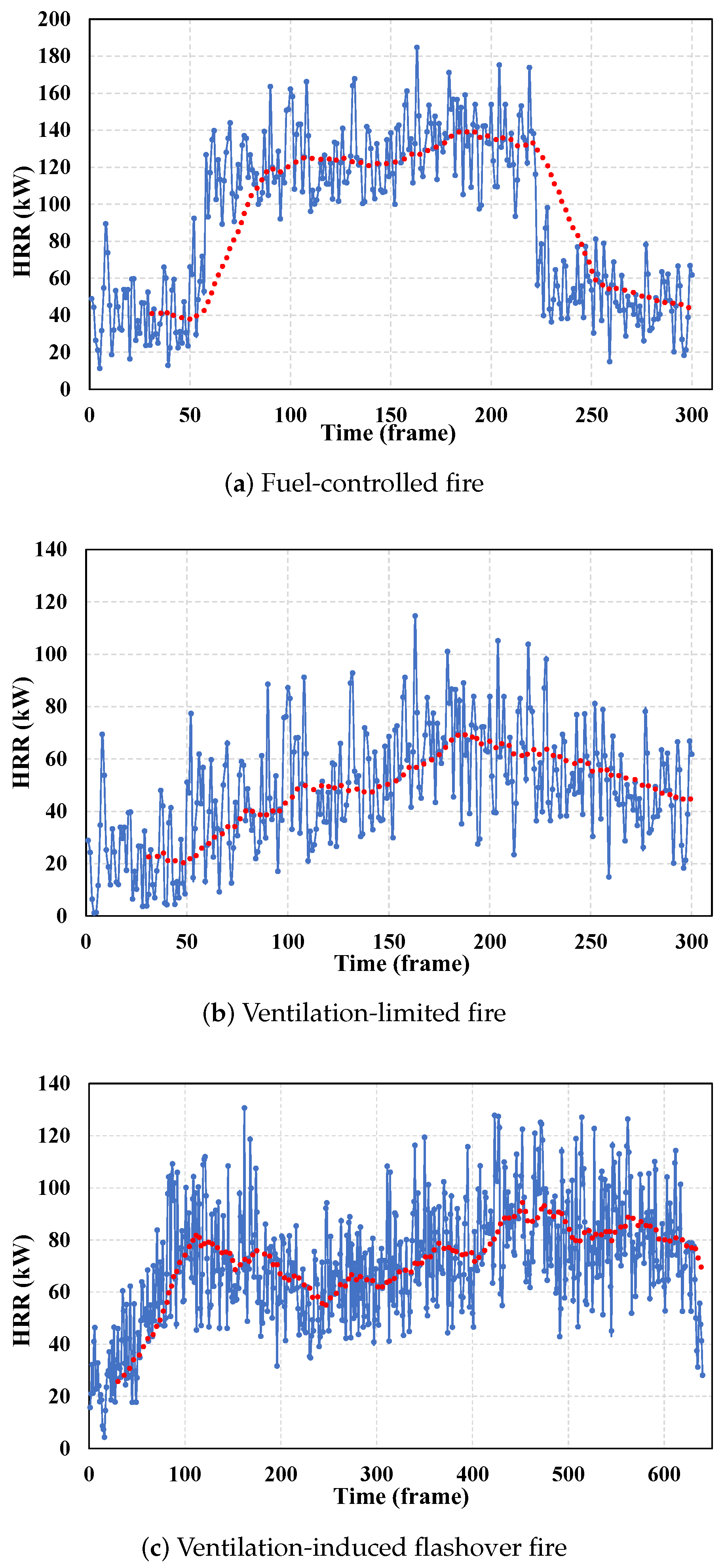

4.2. Fire Type Identification

4.3. Classifier

5. Experiments and Evaluation

5.1. Experiment Setup

5.1.1. Hardware

5.1.2. Data Collection

5.1.3. Model Training, Testing, and Validation

5.2. Evaluation

5.2.1. Overall Performance

5.2.2. Performance on Different Classifiers

5.2.3. Performance in Different Locations

5.2.4. Performance in Smoky Environments

5.2.5. Performance on Different Fuels

5.2.6. Comparison of Performance with and without Beamforming

5.2.7. Detection Time

5.2.8. Comparison with State-of-the-Art Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Classifier | SVM | BP | RF | KNN |
|---|---|---|---|---|
| Best performance | 97.6% | 95.2% | 89.3% | 75.9% |
| Worst performance | 93.3% | 90.3% | 78.5% | 71.5% |
| Average performance | 95.5% | 93.5% | 84.7% | 72.8% |

| Type of Fuel | Ethanol | Paper | Charcoal | Woods | Plastics | Liquid Fuel |
|---|---|---|---|---|---|---|
| Performance | 97.2% | 98.6% | 95.1% | 94.3% | 98.2% | 97.5% |

| System | Sensing Range | Accuracy (1 m) | Performance Across Different Materials | Resistance to Daily Interference | Ability to Classify Fire Types |
|---|---|---|---|---|---|
| FireSonic | 7 m | 98.7% | Varies minimally | Strong | Yes |
| State-of-the-art work | 1 m | 97.3% | Varies significantly | Weak | No |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Wang, Y.; Liao, M.; Sun, Y.; Wang, S.; Sun, X.; Shi, X.; Kang, Y.; Tian, M.; Bao, T.; Lu, R. FireSonic: Design and Implementation of an Ultrasound Sensing-Based Fire Type Identification System. Sensors 2024, 24, 4360. https://doi.org/10.3390/s24134360