Polarimetric Imaging for Robot Perception: A Review

Abstract

1. Introduction

2. Principles of Polarization

2.1. Stokes Parameters

2.2. Light Characterization Using Stokes Parameters

2.3. Polarimetric Image Acquisition

2.4. Weaknesses and Drawbacks

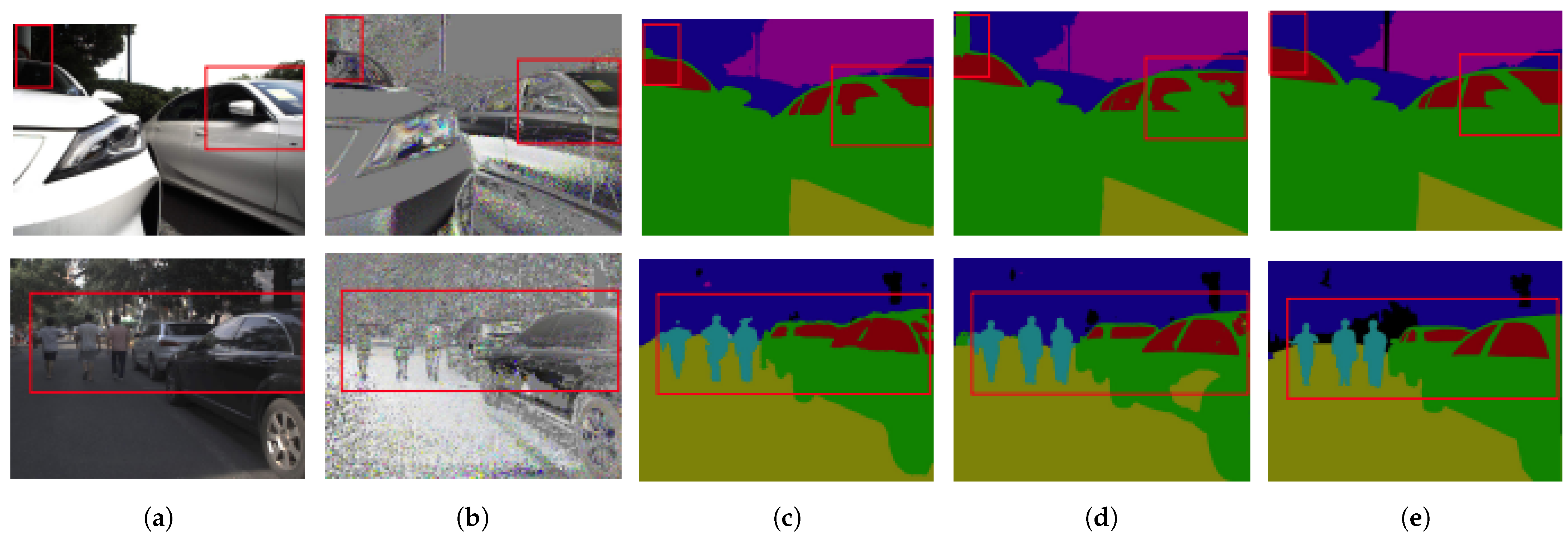

3. Image Segmentation Using Polarimetry

3.1. State-of-the-Art Methods in Polarimetric Segmentation

3.2. Datasets for Polarimetric Image Segmentation

4. Shape Characterization

4.1. Depth from Polarimetry

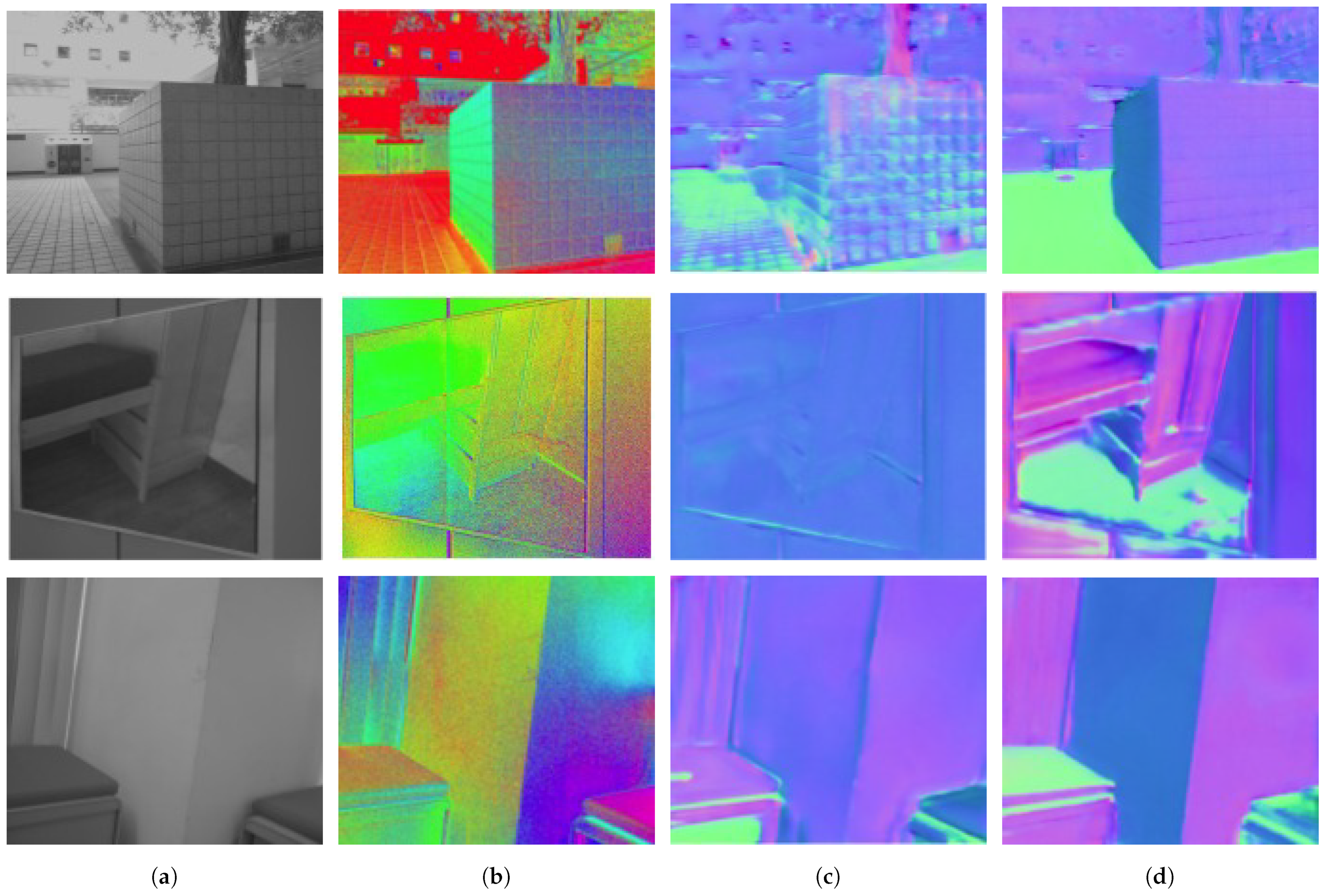

4.2. Normal from Polarimetry

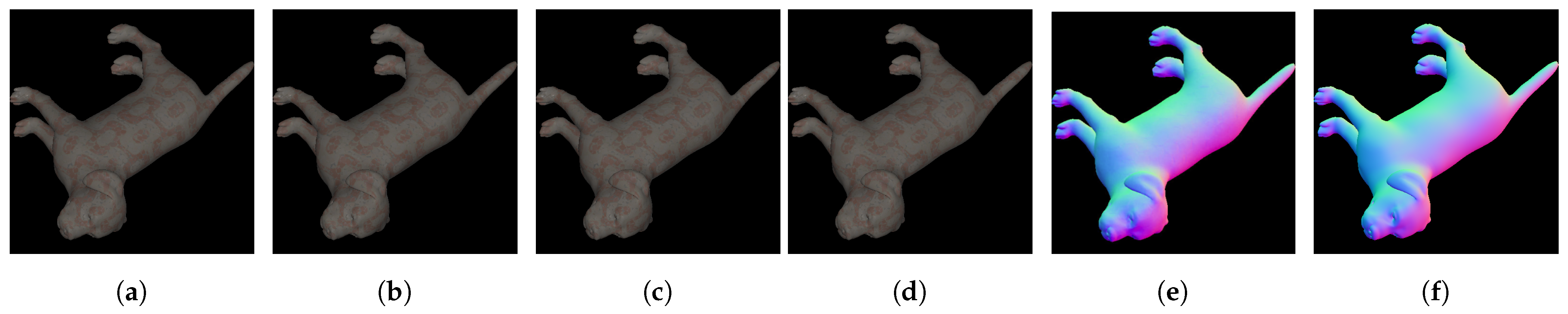

4.3. Three-Dimensional Reconstruction by Polarization

4.4. State-of-the-Art Methods for Shape Characterization

4.5. Datasets for Polarimetric Shape Estimation

5. Pose Estimation Using Polarimetry

6. Experimental Robotic Applications Using Polarimetry

7. Experimentation

7.1. Performance Evaluation of Polarimetry Segmentation

7.2. Performance Evaluation on Shape Characterization

8. Open Challenges

9. Discussion

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Azzam, R.M.A. The intertwined history of polarimetry and ellipsometry. Thin Solid Films 2011, 519, 2584–2588. [Google Scholar] [CrossRef]

- Atkinson, G.A.; Hancock, E.R. Recovery of surface orientation from diffuse polarization. IEEE Trans. Image Process. 2006, 15, 1653–1664. [Google Scholar] [CrossRef]

- Vašiček, A. Polarimetric Methods for the Determination of the Refractive Index and the Thickness of Thin Films on Glass. J. Opt. Soc. Am. 1947, 37, 145–153. [Google Scholar] [CrossRef]

- Stokes, G.G. On the numerical calculation of a class of definite integrals and infinite series. Trans. Camb. Philos. Soc. 1851, 9, 166. [Google Scholar]

- Jütte, L.; Sharma, G.; Patel, H.; Roth, B. Registration of polarimetric images for in vivo skin diagnostics. J. Biomed. Opt. 2022, 27, 96001. [Google Scholar] [CrossRef]

- Picart, P.; Leval, J. General theoretical formulation of image formation in digital Fresnel holography. J. Opt. Soc. Am. A 2008, 25, 1744–1761. [Google Scholar] [CrossRef]

- Huynh, C.P.; Robles-Kelly, A.; Hancock, E. Shape and refractive index recovery from single-view polarisation images. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1229–1236. [Google Scholar] [CrossRef]

- Kurt, M.; Szirmay-Kalos, L.; Křivánek, J. An anisotropic BRDF model for fitting and Monte Carlo rendering. ACM SIGGRAPH Comput. Graph. 2010, 44, 1–15. [Google Scholar] [CrossRef]

- Deschaintre, V.; Drettakis, G.; Bousseau, A. Guided Fine-Tuning for Large-Scale Material Transfer. arXiv 2020, arXiv:2007.03059. [Google Scholar] [CrossRef]

- Lapray, P.J.; Bigué, L. Performance comparison of division of time and division of focal plan polarimeters. In Proceedings of the Sixteenth International Conference on Quality Control by Artificial Vision, Albi, France, 28 July 2023; Orteu, J.J., Jovančević, I., Eds.; SPIE: Paris, France, 2023; p. 6. [Google Scholar] [CrossRef]

- Liu, J.; Duan, J.; Hao, Y.; Chen, G.; Zhang, H.; Zheng, Y. Polarization image demosaicing and RGB image enhancement for a color polarization sparse focal plane array. Opt. Express 2023, 31, 23475–23490. [Google Scholar] [CrossRef]

- Zhang, J.; Luo, H.; Hui, B.; Chang, Z. Image interpolation for division of focal plane polarimeters with intensity correlation. Opt. Express 2016, 24, 20799–20807. [Google Scholar] [CrossRef]

- Drouet, F.; Stolz, C.; Laligant, O.; Aubreton, O. 3D reconstruction of external and internal surfaces of transparent objects from polarization state of highlights. Opt. Lett. 2014, 39, 2955–2958. [Google Scholar] [CrossRef]

- Deschaintre, V.; Lin, Y.; Ghosh, A. Deep Polarization Imaging for 3D shape and SVBRDF Acquisition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15567–15576. [Google Scholar]

- Baek, S.H.; Jeon, D.S.; Tong, X.; Kim, M.H. Simultaneous acquisition of polarimetric SVBRDF and normals. In Proceedings of the SIGGRAPH Asia 2018 Technical Papers, SIGGRAPH Asia 2018, Tokyo, Japan, 4–7 December 2018; Association for Computing Machinery: New York, NY, USA, 2018. [Google Scholar] [CrossRef]

- Hwang, I.; Jeon, D.S.; Muñoz, A.; Gutierrez, D.; Tong, X.; Kim, M.H. Sparse ellipsometry: Portable Acquisition of Polarimetric SVBRDF and Shape with Unstructured Flash Photography. ACM Trans. Graph. 2022, 41, 133. [Google Scholar] [CrossRef]

- Cui, Z.; Gu, J.; Shi, B.; Tan, P.; Kautz, J. Polarimetric Multi-View Stereo. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Zhao, J.; Monno, Y.; Okutomi, M. Polarimetric Multi-View Inverse Rendering. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 450, 8798–8812. [Google Scholar] [CrossRef]

- Yang, X.; Cheng, C.; Duan, J.; Hao, Y.F.; Zhu, Y.; Zhang, H. Polarized Object Surface Reconstruction Algorithm Based on RU-GAN Network. Sensors 2023, 23, 3638. [Google Scholar] [CrossRef]

- Chen, G.; He, L.; Guan, Y.; Zhang, H. Perspective Phase Angle Model for Polarimetric 3D Reconstruction. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Gabriel, B., Moustapha, C., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 398–414. [Google Scholar]

- Rantoson, R.; Stolz, C.; Fofi, D.; Mériaudeau, F. 3D reconstruction by polarimetric imaging method based on perspective model. In Proceedings of the Optical Measurement Systems for Industrial Inspection VI, Munich, Germany, 17 June 2009; Lehmann, P.H., Ed.; SPIE: Paris, France, 2009; Volume 7389, p. 73890C. [Google Scholar] [CrossRef]

- Shen, X.; Carnicer, A.; Javidi, B. Three-dimensional polarimetric integral imaging under low illumination conditions. Opt. Lett. 2019, 44, 3230–3233. [Google Scholar] [CrossRef]

- Lei, C.; Huang, X.; Zhang, M.; Yan, Q.; Sun, W.; Chen, Q. Polarized Reflection Removal with Perfect Alignment in the Wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1750–1758. [Google Scholar]

- Kalra, A.; Taamazyan, V.; Rao, S.K.; Venkataraman, K.; Raskar, R.; Kadambi, A. Deep Polarization Cues for Transparent Object Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar] [CrossRef]

- Zhang, Y.; Morel, O.; Blanchon, M.; Seulin, R.; Rastgoo, M.; Sidibé, D. Exploration of Deep Learning-based Multimodal Fusion for Semantic Road Scene Segmentation. In Proceedings of the VISAPP 2019 14th International Conference on Computer Vision Theory and Applications, Prague, Czech Republic, 25–27 February 2019. [Google Scholar] [CrossRef]

- Zhang, J.; Liu, H.; Yang, K.; Hu, X.; Liu, R.; Stiefelhagen, R. CMX: Cross-Modal Fusion for RGB-X Semantic Segmentation with Transformers. arXiv 2023, arXiv:2203.04838. [Google Scholar] [CrossRef]

- Blanchon, M.; Morel, O.; Zhang, Y.; Seulin, R.; Crombez, N.; Sidibé, D. Outdoor Scenes Pixel-Wise Semantic Segmentation using Polarimetry and Fully Convolutional Network. In Proceedings of the 4th International Conference on Computer Vision Theory and Applications (VISAPP 2019), Prague, Czech Republic, 25–27 February 2019. [Google Scholar] [CrossRef]

- Morel, O.; Stolz, C.; Meriaudeau, F.; Gorria, P. Active lighting applied to three-dimensional reconstruction of specular metallic surfaces by polarization imaging. Appl. Opt. 2006, 45, 4062–4068. [Google Scholar] [CrossRef]

- Zhaole, S.; Zhou, H.; Nanbo, L.; Chen, L.; Zhu, J.; Fisher, R.B. A Robust Deformable Linear Object Perception Pipeline in 3D: From Segmentation to Reconstruction. IEEE Robot. Autom. Lett. 2024, 9, 843–850. [Google Scholar] [CrossRef]

- Mora, A.; Prados, A.; González, P.; Moreno, L.; Barber, R. Intensity-Based Identification of Reflective Surfaces for Occupancy Grid Map Modification. IEEE Access 2023, 11, 23517–23530. [Google Scholar] [CrossRef]

- Huy, D.Q.; Sadjoli, N.; Azam, A.B.; Elhadidi, B.; Cai, Y.; Seet, G. Object perception in underwater environments: A survey on sensors and sensing methodologies. Ocean Eng. 2023, 267, 113202. [Google Scholar] [CrossRef]

- Wang, J.; Liang, W.; Yang, J.; Wang, S.; Yang, Z.X. An adaptive image enhancement approach for safety monitoring robot under insufficient illumination condition. Comput. Ind. 2023, 147, 103862. [Google Scholar] [CrossRef]

- Hu, K.; Chen, Z.; Kang, H.; Tang, Y. 3D vision technologies for a self-developed structural external crack damage recognition robot. Autom. Constr. 2024, 159, 105262. [Google Scholar] [CrossRef]

- He, C.; He, H.; Chang, J.; Chen, B.; Ma, H.; Booth, M.J. Polarisation optics for biomedical and clinical applications: A review. Nature 2021, 10, 194. [Google Scholar] [CrossRef]

- Louie, D.C.; Tchvialeva, L.; Kalia, S.; Lui, H.; Lee, T.K. Constructing a portable optical polarimetry probe for in-vivo skin cancer detection. J. Biomed. Opt. 2021, 26, 35001. [Google Scholar] [CrossRef]

- Badieyan, S.; Abedini, M.; Razzaghi, M.; Moradi, A.; Masjedi, M. Polarimetric imaging-based cancer bladder tissue’s detection: A comparative study of bulk and formalin-fixed paraffin-embedded samples. Photodiagn. Photodyn. Ther. 2023, 44, 103698. [Google Scholar] [CrossRef]

- Shabayek, A.E.R.; Morel, O.; Fofi, D. Visual Behavior Based Bio-Inspired Polarization Techniques in Computer Vision and Robotics; IGI Global: Hershey, PA, USA, 2013; pp. 243–272. [Google Scholar] [CrossRef]

- Kong, F.; Guo, Y.; Zhang, J.; Fan, X.; Guo, X. Review on bio-inspired polarized skylight navigation. Chin. J. Aeronaut. 2023, 36, 14–37. [Google Scholar] [CrossRef]

- Ahsan, M.; Cai, Y.; Zhang, W. Information Extraction of Bionic Camera-Based Polarization Navigation Patterns Under Noisy Weather Conditions. J. Shanghai Jiaotong Univ. Sci. 2020, 25, 18–26. [Google Scholar] [CrossRef]

- Gratiet, A.L.; Dubreuil, M.; Rivet, S.; Grand, Y.L. Scanning Mueller polarimetric microscopy. Opt. Lett. 2016, 41, 4336–4339. [Google Scholar] [CrossRef]

- Kontenis, L.; Samim, M.; Karunendiran, A.; Krouglov, S.; Stewart, B.; Barzda, V. Second harmonic generation double stokes Mueller polarimetric microscopy of myofilaments. Biomed. Opt. Express 2016, 7, 559–569. [Google Scholar] [CrossRef]

- Miyazaki, D.; Saito, M.; Sato, Y.; Ikeuchi, K. Determining surface orientations of transparent objects based on polarization degrees in visible and infrared wavelengths. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2002, 19, 687–694. [Google Scholar] [CrossRef]

- Mei, H.; Dong, B.; Dong, W.; Yang, J.; Baek, S.H.; Heide, F.; Peers, P.; Wei, X.; Yang, X. Glass Segmentation using Intensity and Spectral Polarization Cues. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Kondo, Y.; Ono, T.; Sun, L.; Hirasawa, Y.; Murayama, J. Accurate Polarimetric BRDF for Real Polarization Scene Rendering. In Proceedings of the Computer Vision—ECCV 2020 16th European Conference, Glasgow, UK, 23–28 August 2020. [Google Scholar] [CrossRef]

- Gao, D.; Li, Y.; Ruhkamp, P.; Skobleva, I.; Wysocki, M.; Jung, H.; Wang, P.; Guridi, A.; Navab, N.; Busam, B. Polarimetric Pose Prediction. arXiv 2021, arXiv:2112.03810. [Google Scholar]

- Dennis, M.; Dayton, S. Polarimetric Imagery for Object Pose Estimation; University of Dayton: Dayton, OH, USA, 2023. [Google Scholar]

- Khlynov, R.D.; Ryzhova, V.A.; Konyakhin, I.A.; Korotaev, V.V. Robotic Polarimetry System Based on Image Sensors for Monitoring the Rheological Properties of Blood in Emergency Situations. In Smart Electromechanical Systems: Recognition, Identification, Modeling, Measurement Systems, Sensors; Springer International Publishing: Cham, Switzerland, 2022; pp. 201–218. [Google Scholar] [CrossRef]

- Roa, C.; Le, V.N.D.; Mahendroo, M.; Saytashev, I.; Ramella-Roman, J.C. Auto-detection of cervical collagen and elastin in Mueller matrix polarimetry microscopic images using K-NN and semantic segmentation classification. Biomed. Opt. Express 2021, 12, 2236–2249. [Google Scholar] [CrossRef] [PubMed]

- Wang, F.; Ainouz, S.; Lian, C.; Bensrhair, A. Multimodality Semantic Segmentation based on Polarization and color Images. Neurocomputing 2017, 253, 193–200. [Google Scholar] [CrossRef]

- Blanchon, M. Polarization Based Urban Scenes Understanding. Ph.D. Thesis, Université Bourgogne Franche-Comté, Dijon, France, 2021. [Google Scholar]

- Xiang, K.; Yang, K.; Wang, K. Polarization-driven Semantic Segmentation via Efficient Attention-bridged Fusion. Opt. Express 2021, 29, 4802–4820. [Google Scholar] [CrossRef] [PubMed]

- Blin, R.; Ainouz, S.; Canu, S.; Meriaudeau, F. Road scenes analysis in adverse weather conditions by polarization-encoded images and adapted deep learning. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019. [Google Scholar]

- Liang, J.; Ren, L.; Qu, E.; Hu, B.; Wang, Y. Method for enhancing visibility of hazy images based on polarimetric imaging. Photon. Res. 2014, 2, 38–44. [Google Scholar] [CrossRef]

- Zhang, W.; Liang, J.; Ren, L.; Ju, H.; Qu, E.; Bai, Z.; Tang, Y.; Wu, Z. Real-time image haze removal using an aperture-division polarimetric camera. Appl. Opt. 2017, 56, 942–947. [Google Scholar] [CrossRef] [PubMed]

- Zhang, W.; Liang, J.; Ju, H.; Ren, L.; Qu, E.; Wu, Z. A robust haze-removal scheme in polarimetric dehazing imaging based on automatic identification of sky region. Opt. Laser Technol. 2016, 86, 145–151. [Google Scholar] [CrossRef]

- Zhang, W.; Liang, J.; Ren, L. Haze-removal polarimetric imaging schemes with the consideration of airlight’s circular polarization effect. Optik 2019, 182, 1099–1105. [Google Scholar] [CrossRef]

- Shi, Y.; Guo, E.; Bai, L.; Han, J. Polarization-based haze removal using self-supervised network. Front. Phys. 2022, 9, 746. [Google Scholar] [CrossRef]

- Meriaudeau, F.; Ferraton, M.; Stolz, C.; Morel, O.; Bigué, L. Polarization imaging for industrial inspection. In Proceedings of the Image Processing: Machine Vision Applications, San Jose, CA, USA, 3 March 2008; p. 681308. [Google Scholar] [CrossRef]

- Zhou, C.; Teng, M.; Lyu, Y.; Li, S.; Xu, C.; Shi, B. Polarization-Aware Low-Light Image Enhancement. Proc. AAAI Conf. Artif. Intell. 2023, 37, 3742–3750. [Google Scholar] [CrossRef]

- Trippe, S. Polarization and Polarimetry: A Review. arXiv 2014, arXiv:1401.1911. [Google Scholar] [CrossRef]

- Garcia, N.M.; de Erausquin, I.; Edmiston, C.; Gruev, V. Surface normal reconstruction using circularly polarized light. Opt. Express 2015, 23, 14391–14406. [Google Scholar] [CrossRef] [PubMed]

- Zhu, D.; Smith, W.A.P. Depth from a polarisation + RGB stereo pair. arXiv 2019, arXiv:1903.12061. [Google Scholar]

- Dave, A.; Zhao, Y.; Veeraraghavan, A. PANDORA: Polarization-Aided Neural Decomposition Of Radiance. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 538–556. [Google Scholar]

- Tozza, S.; Smith, W.A.P.; Zhu, D.; Ramamoorthi, R.; Hancock, E.R. Linear Differential Constraints for Photo-polarimetric Height Estimation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Ngo, T.T.; Nagahara, H.; Taniguchi, R.I. Shape and Light Directions from Shading and Polarization. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar] [CrossRef]

- Yang, F.; Wei, H. Fusion of infrared polarization and intensity images using support value transform and fuzzy combination rules. Infrared Phys. Technol. 2013, 60, 235–243. [Google Scholar] [CrossRef]

- Sattar, S.; Lapray, P.J.; Foulonneau, A.; Bigué, L. Review of Spectral and Polarization Imaging Systems; SPIE: Paris, France, 2020; Volume 11351, p. 113511Q. [Google Scholar] [CrossRef]

- Kadambi, A.; Taamazyan, V.; Shi, B.; Raskar, R. Polarized 3D: High-Quality Depth Sensing with Polarization Cues. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar] [CrossRef]

- Kadambi, A.; Taamazyan, V.; Shi, B.; Raskar, R. Depth Sensing Using Geometrically Constrained Polarization Normals. Int. J. Comput. Vis. 2017, 125, 34–51. [Google Scholar] [CrossRef]

- Farlow, C.A.; Chenault, D.B.; Pezzaniti, J.L.; Spradley, K.D.; Gulley, M.G. Imaging Polarimeter Development and Applications; SPIE: Paris, France, 2002; Volume 4481, pp. 118–125. [Google Scholar] [CrossRef]

- Lee, J.H.; Choi, H.Y.; Shin, S.K.; Chung, Y.C. A Review of the Polarization-Nulling Technique for Monitoring Optical-Signal-to-Noise Ratio in Dynamic WDM Networks. J. Light. Technol. 2006, 24, 4162–4171. [Google Scholar] [CrossRef]

- Baliga, S.; Hanany, E.; Klibanoff, P. Polarization and Ambiguity. Am. Econ. Rev. 2013, 103, 3071–3083. [Google Scholar] [CrossRef]

- Dupertuis, M.A.; Proctor, M.; Acklin, B. Generalization of complex Snell–Descartes and Fresnel laws. J. Opt. Soc. Am. A 1994, 11, 1159–1166. [Google Scholar] [CrossRef]

- Atkinson, G.A.; Hancock, E.R. Shape Estimation Using Polarization and Shading from Two Views. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 2001–2017. [Google Scholar] [CrossRef]

- Stolz, C.; Ferraton, M.; Meriaudeau, F. Shape from polarization: A method for solving zenithal angle ambiguity. Opt. Lett. 2012, 37, 4218–4220. [Google Scholar] [CrossRef]

- Zhao, P.; Deng, Y.; Wang, W.; Liu, D.; Wang, R. Azimuth Ambiguity Suppression for Hybrid Polarimetric Synthetic Aperture Radar via Waveform Diversity. Remote Sens. 2020, 12, 1226. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643. [Google Scholar]

- Blanchon, M.; Sidibé, D.; Morel, O.; Seulin, R.; Meriaudeau, F. Towards urban scenes understanding through polarization cues. arXiv 2021, arXiv:2106.01717. [Google Scholar]

- Suárez-Bermejo, J.C.; Gorgas, J.; Pascual, S.; Santarsiero, M.; de Sande, J.C.G.; Piquero, G. Bayesian inference approach for Full Poincaré Mueller polarimetry. Opt. Laser Technol. 2024, 168, 109983. [Google Scholar] [CrossRef]

- Bansal, S.; Senthilkumaran, P. Stokes polarimetry with Poincaré–Hopf index beams. Opt. Lasers Eng. 2023, 160, 107295. [Google Scholar] [CrossRef]

- Yu, R.; Ren, W.; Zhao, M.; Wang, J.; Wu, D.; Xie, Y. Transparent objects segmentation based on polarization imaging and deep learning. Opt. Commun. 2024, 555, 130246. [Google Scholar] [CrossRef]

- Blanchon, M.; Morel, O.; Meriaudeau, F.; Seulin, R.; Sidibé, D. Polarimetric image augmentation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7365–7371. [Google Scholar] [CrossRef]

- Liu, Y.; Jiang, J.; Sun, J.; Bai, L.; Wang, Q. A survey of depth estimation based on computer vision. In Proceedings of the 2020 IEEE Fifth International Conference on Data Science in Cyberspace (DSC), Hong Kong, China, 27–30 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 135–141. [Google Scholar]

- Laga, H.; Jospin, L.V.; Boussaid, F.; Bennamoun, M. A survey on deep learning techniques for stereo-based depth estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1738–1764. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Chao, W.L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8445–8453. [Google Scholar]

- Yang, L.; Tan, F.; Li, A.; Cui, Z.; Furukawa, Y.; Tan, P. Polarimetric dense monocular slam. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3857–3866. [Google Scholar]

- Ikemura, K.; Huang, Y.; Heide, F.; Zhang, Z.; Chen, Q.; Lei, C. Robust Depth Enhancement via Polarization Prompt Fusion Tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 20710–20720. [Google Scholar]

- Hochwald, B.; Nehorai, A. Polarimetric modeling and parameter estimation with applications to remote sensing. IEEE Trans. Signal Process. 1995, 43, 1923–1935. [Google Scholar] [CrossRef]

- Kumar, A.C.S.; Bhandarkar, S.M.; Prasad, M. DepthNet: A Recurrent Neural Network Architecture for Monocular Depth Prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 396–3968. [Google Scholar] [CrossRef]

- Makarov, I.; Bakhanova, M.; Nikolenko, S.; Gerasimova, O. Self-supervised recurrent depth estimation with attention mechanisms. PeerJ Comput. Sci. 2022, 8, e865. [Google Scholar] [CrossRef]

- Li, B.; Hua, Y.; Liu, Y.; Lu, M. Dilated Fully Convolutional Neural Network for Depth Estimation from a Single Image. arXiv 2021, arXiv:2103.07570. [Google Scholar] [CrossRef]

- Shi, C.; Chen, J.; Chen, J.; Zhang, Z. Feature Enhanced Fully Convolutional Networks for Monocular Depth Estimation. In Proceedings of the 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Washington, DC, USA, 5–8 October 2019; pp. 270–276. [Google Scholar] [CrossRef]

- Chen, S.; Tang, M.; Kan, J. Encoder–decoder with densely convolutional networks for monocular depth estimation. J. Opt. Soc. Am. A 2019, 36, 1709–1718. [Google Scholar] [CrossRef]

- Sheng, H.; Cheng, K.; Jin, X.; Han, T.; Jiang, X.; Dong, C. Attention-based encoder–decoder network for depth estimation from color-coded light fields. AIP Adv. 2023, 13, 035118. [Google Scholar] [CrossRef]

- Cao, Y.; Wu, Z.; Shen, C. Estimating Depth From Monocular Images as Classification Using Deep Fully Convolutional Residual Networks. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 3174–3182. [Google Scholar] [CrossRef]

- Laina, I.; Rupprecht, C.; Belagiannis, V.; Tombari, F.; Navab, N. Deeper Depth Prediction with Fully Convolutional Residual Networks. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 239–248. [Google Scholar] [CrossRef]

- Goldman, M.; Hassner, T.; Avidan, S. Learn Stereo, Infer Mono: Siamese Networks for Self-Supervised, Monocular, Depth Estimation. arXiv 2019, arXiv:1905.00401. [Google Scholar]

- Bardozzo, F.; Collins, T.; Forgione, A.; Hostettler, A.; Tagliaferri, R. StaSiS-Net: A stacked and siamese disparity estimation network for depth reconstruction in modern 3D laparoscopy. Med. Image Anal. 2022, 77, 102380. [Google Scholar] [CrossRef]

- Prantl, M.; Váša, L. Estimation of differential quantities using Hermite RBF interpolation. Vis. Comput. 2018, 34, 1645–1659. [Google Scholar] [CrossRef]

- Muneeswaran, V.; Rajasekaran, M.P. Gallbladder shape estimation using tree-seed optimization tuned radial basis function network for assessment of acute cholecystitis. In Intelligent Engineering Informatics; Advances in Intelligent Systems and Computing; Springer: Singapore, 2018; Volume 695, pp. 229–239. [Google Scholar] [CrossRef]

- Reid, R.B.; Oxley, M.E.; Eismann, M.T.; Goda, M.E. Quantifying surface normal estimation. In Proceedings of the Polarization: Measurement, Analysis, and Remote Sensing VII, Orlando, FL, USA, 20–21 April 2006; SPIE: Paris, France, 2006; Volume 6240, p. 624001. [Google Scholar]

- Wang, X.; Fouhey, D.F.; Gupta, A. Designing Deep Networks for Surface Normal Estimation. arXiv 2014, arXiv:1411.4958. [Google Scholar]

- Zhan, H.; Weerasekera, C.S.; Garg, R.; Reid, I.D. Self-supervised Learning for Single View Depth and Surface Normal Estimation. arXiv 2019, arXiv:1903.00112. [Google Scholar]

- Bors, A.; Pitas, I. Median Radial Basis Functions Neural Network. IEEE Trans. Neural Netw. 1996, 7, 1351–1364. [Google Scholar] [CrossRef]

- Grabec, I. The Normalized Radial Basis Function Neural Network and its Relation to the Perceptron. arXiv 2007, arXiv:physics/0703229. [Google Scholar]

- Kirchengast, M.; Watzenig, D. A Depth-Buffer-Based Lidar Model with Surface Normal Estimation. IEEE Trans. Intell. Transp. Syst. 2024, 1–12. [Google Scholar] [CrossRef]

- Han, P.; Li, X.; Liu, F.; Cai, Y.; Yang, K.; Yan, M.; Sun, S.; Liu, Y.; Shao, X. Accurate Passive 3D Polarization Face Reconstruction under Complex Conditions Assisted with Deep Learning. Photonics 2022, 9, 924. [Google Scholar] [CrossRef]

- Fangmin, L.; Ke, C.; Xinhua, L. 3D Face Reconstruction Based on Convolutional Neural Network. In Proceedings of the 10th International Conference on Intelligent Computation Technology and Automation, ICICTA 2017, Changsha, China, 9–10 October 2017; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2017; Volume 2017, pp. 71–74. [Google Scholar] [CrossRef]

- Fan, H.; Zhao, Y.; Su, G.; Zhao, T.; Jin, S. The Multi-View Deep Visual Adaptive Graph Convolution Network and Its Application in Point Cloud. Trait. Signal 2023, 40, 31–41. [Google Scholar] [CrossRef]

- Taamazyan, V.; Kadambi, A.; Raskar, R. Shape from Mixed Polarization. arXiv 2016, arXiv:1605.02066. [Google Scholar] [CrossRef]

- Usmani, K.; O’Connor, T.; Javidi, B. Three-dimensional polarimetric image restoration in low light with deep residual learning and integral imaging. Opt. Express 2021, 29, 29505–29517. [Google Scholar] [CrossRef] [PubMed]

- Ning, T.; Ma, X.; Li, Y.; Li, Y.; Liu, K. Efficient acquisition of Mueller matrix via spatially modulated polarimetry at low light field. Opt. Express 2023, 31, 14532–14559. [Google Scholar] [CrossRef]

- Ba, Y.; Chen, R.; Wang, Y.; Yan, L.; Shi, B.; Kadambi, A. Physics-based Neural Networks for Shape from Polarization. arXiv 2019, arXiv:1903.10210. [Google Scholar]

- Mortazavi, F.S.; Dajkhosh, S.; Saadatseresht, M. Surface Normal Reconstruction Using Polarization-UNET. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, X-4/W1-2022, 537–543. [Google Scholar] [CrossRef]

- Yaqub, M.; Jinchao, F.; Ahmed, S.; Arshid, K.; Bilal, M.A.; Akhter, M.P.; Zia, M.S. GAN-TL: Generative Adversarial Networks with Transfer Learning for MRI Reconstruction. Appl. Sci. 2022, 12, 8841. [Google Scholar] [CrossRef]

- Cardoen, T.; Leroux, S.; Simoens, P. Iterative Online 3D Reconstruction from RGB Images. Sensors 2022, 22, 9782. [Google Scholar] [CrossRef]

- Kang, I.; Pang, S.; Zhang, Q.; Fang, N.; Barbastathis, G. Recurrent neural network reveals transparent objects through scattering media. Opt. Express 2021, 29, 5316–5326. [Google Scholar] [CrossRef]

- Heydari, M.J.; Ghidary, S.S. 3D Motion Reconstruction From 2D Motion Data Using Multimodal Conditional Deep Belief Network. IEEE Access 2019, 7, 56389–56408. [Google Scholar] [CrossRef]

- Smith, W.A.P.; Ramamoorthi, R.; Tozza, S. Height-from-Polarisation with Unknown Lighting or Albedo. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2875–2888. [Google Scholar] [CrossRef]

- Yu, Y.; Zhu, D.; Smith, W.A.P. Shape-from-Polarisation: A Nonlinear Least Squares Approach. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2969–2976. [Google Scholar] [CrossRef]

- Lei, C.; Qi, C.; Xie, J.; Fan, N.; Koltun, V.; Chen, Q. Shape From Polarization for Complex Scenes in the Wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12632–12641. [Google Scholar]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Commun. ACM 2021, 65, 99–106. [Google Scholar] [CrossRef]

- Kerr, J.; Fu, L.; Huang, H.; Avigal, Y.; Tancik, M.; Ichnowski, J.; Kanazawa, A.; Goldberg, K. Evo-NeRF: Evolving NeRF for Sequential Robot Grasping of Transparent Objects. In Proceedings of the 6th Conference on Robot Learning, PMLR, Auckland, New Zealand, 14–18 December 2023. [Google Scholar]

- Zhu, H.; Sun, Y.; Liu, C.; Xia, L.; Luo, J.; Qiao, N.; Nevatia, R.; Kuo, C.H. Multimodal Neural Radiance Field. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023. [Google Scholar] [CrossRef]

- Boss, M.; Jampani, V.; Kim, K.; Lensch, H.P.A.; Kautz, J. Two-shot Spatially-varying BRDF and Shape Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3982–3991. [Google Scholar] [CrossRef]

- Jakob, W.; Speierer, S.; Roussel, N.; Nimier-David, M.; Vicini, D.; Zeltner, T.; Nicolet, B.; Crespo, M.; Leroy, V.; Zhang, Z. Mitsuba 3 Renderer. 2022. Available online: https://mitsuba-renderer.org (accessed on 20 July 2022).

- Deschaintre, V.; Aittala, M.; Durand, F.; Drettakis, G.; Bousseau, A. Single-image SVBRDF capture with a rendering-aware deep network. ACM Trans. Graph. 2018, 37, 1–15. [Google Scholar] [CrossRef]

- He, Z.; Feng, W.; Zhao, X.; Lv, Y. 6D Pose Estimation of Objects: Recent Technologies and Challenges. Appl. Sci. 2020, 11, 228. [Google Scholar] [CrossRef]

- Wang, C.; Xu, D.; Zhu, Y.; Martín-Martín, R.; Lu, C.; Fei-Fei, L.; Savarese, S. DenseFusion: 6D Object Pose Estimation by Iterative Dense Fusion. arXiv 2019, arXiv:1901.04780. [Google Scholar]

- Trabelsi, A.; Chaabane, M.; Blanchard, N.; Beveridge, R. A Pose Proposal and Refinement Network for Better 6D Object Pose Estimation. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021. [Google Scholar]

- Sock, J.; Kasaei, S.H.; Lopes, L.S. Multi-view 6D Object Pose Estimation and Camera Motion Planning using RGBD Images. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Shah, S.H.; Lin, C.Y.; Tran, C.C.; Ahmad, A.R. Robot Pose Estimation and Normal Trajectory Generation on Curved Surface Using an Enhanced Non-Contact Approach. Sensors 2023, 23, 3816. [Google Scholar] [CrossRef]

- Martelo, J.B.; Lundgren, J.; Andersson, M. Paperboard Coating Detection Based on Full-Stokes Imaging Polarimetry. Sensors 2020, 21, 208. [Google Scholar] [CrossRef]

- Nezadal, M.; Schur, J.; Schmidt, L.P. Non-destructive testing of glass fibre reinforced plastics with a full polarimetric imaging system. In Proceedings of the 2014 39th International Conference on Infrared, Millimeter, and Terahertz waves (IRMMW-THz), Tucson, AZ, USA, 14–19 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–2. [Google Scholar] [CrossRef]

- Zhang, H.; Kidera, S. Polarimetric Signature CNN based Complex Permittivity Estimation for Microwave Non-destructive Testing. In Proceedings of the 2020 International Symposium on Antennas and Propagation (ISAP), Osaka, Japan, 25–28 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 781–782. [Google Scholar] [CrossRef]

- Ding, Y.; Ye, J.; Barbalata, C.; Oubre, J.; Lemoine, C.; Agostinho, J.; Palardy, G. Next-generation perception system for automated defects detection in composite laminates via polarized computational imaging. arXiv 2021, arXiv:2108.10819. [Google Scholar]

- Parkinson, J.C.; Coronato, P.A.; Greivenkamp, J.; Vukobratovich, D.; Kupinski, M. Mueller polarimetry for quantifying the stress optic coefficient in the infrared. In Proceedings of the Polarization Science and Remote Sensing XI, San Diego, CA, USA, 3 October 2023; Snik, F., Kupinski, M.K., Shaw, J.A., Eds.; SPIE: Bellingham, WA, USA, 2023; Volume 12690, pp. 95–107.

- Li, H.; Liao, R.; Zhang, H.; Ma, G.; Guo, Z.; Tu, H.; Chen, Y.; Ma, H. Stress Detection of Conical Frustum Windows in Submersibles Based on Polarization Imaging. Sensors 2022, 22, 2282.

- Harfenmeister, K.; Itzerott, S.; Weltzien, C.; Spengler, D. Agricultural Monitoring Using Polarimetric Decomposition Parameters of Sentinel-1 Data. Remote Sens. 2021, 13, 575.

- Yang, W.; Yang, J.; Zhao, K.; Gao, Q.; Liu, L.; Zhou, Z.; Hou, S.; Wang, X.; Shen, G.; Pang, X.; et al. Low-Noise Dual-Band Polarimetric Image Sensor Based on 1D Bi2S3 Nanowire. Adv. Sci. 2021, 8, 2100075.

- Usmani, K.; Krishnan, G.; O’Connor, T.; Javidi, B. Deep learning polarimetric three-dimensional integral imaging object recognition in adverse environmental conditions. Opt. Express 2021, 29, 12215–12228.

- Shao, M.; Xia, C.; Duan, D.; Wang, X. Polarimetric Inverse Rendering for Transparent Shapes Reconstruction. IEEE Trans. Multimed. 2024, 26, 7801–7811.

- Niemitz, L.; Sorensen, S.T.; Wang, Y.; Messina, W.; Burke, R.; Andersson-Engels, S. Towards a flexible polarimetric camera-on-tip miniature endoscope for 3 × 3 Mueller matrix measurements of biological tissue. In Proceedings of the Translational Biophotonics: Diagnostics and Therapeutics III, Munich, Germany, 25–29 June 2023; Lilge, L.D., Huang, Z., Eds.; SPIE: Bellingham, WA, USA, 2023; p. 110.

- Fernández, A.; Demczylo, R. Real-time polarimetric microscopy of biological tissue. In Proceedings of the Biophotonics Congress: Optics in the Life Sciences 2023 (OMA, NTM, BODA, OMP, BRAIN), Vancouver, BC, Canada, 24–27 April 2023; Optica Publishing Group: Washington, DC, USA, 2023; p. 1.

- Yang, B.; Yan, C.; Zhang, J.; Zhang, H. Refractive index and surface roughness estimation using passive multispectral and multiangular polarimetric measurements. Opt. Commun. 2016, 381, 336–345.

- Huynh, C.P.; Robles-Kelly, A.; Hancock, E.R. Shape and refractive index from single-view spectro-polarimetric images. Int. J. Comput. Vis. 2013, 101, 64–94.

- Kawahara, R.; Kuo, M.Y.J.; Okabe, T. Polarimetric Underwater Stereo. In Proceedings of the Scandinavian Conference on Image Analysis, Sirkka, Finland, 18–21 April 2023; Springer: Cham, Switzerland, 2023; pp. 534–550.

- Gao, J.; Wang, G.; Chen, Y.; Wang, X.; Li, Y.; Chew, K.H.; Chen, R.P. Mueller transform matrix neural network for underwater polarimetric dehazing imaging. Opt. Express 2023, 31, 27213–27222.

- Hu, H.; Zhang, Y.; Li, X.; Lin, Y.; Cheng, Z.; Liu, T. Polarimetric underwater image recovery via deep learning. Opt. Lasers Eng. 2020, 133, 106152.

- Qi, J.; Tatla, T.; Nissanka-Jayasuriya, E.; Yuan, A.Y.; Stoyanov, D.; Elson, D.S. Surgical polarimetric endoscopy for the detection of laryngeal cancer. Nat. Biomed. Eng. 2023, 7, 971–985.

- Qi, J.; Elson, D.S. Polarimetric endoscopy. In Polarized Light in Biomedical Imaging and Sensing: Clinical and Preclinical Applications; Springer: Cham, Switzerland, 2022; pp. 179–204.

- Castaño, L.U.; Mirsanaye, K.; Kontenis, L.; Krouglov, S.; Žurauskas, E.; Navab, R.; Yasufuku, K.; Tsao, M.; Akens, M.K.; Wilson, B.C.; et al. Wide-field Stokes polarimetric microscopy for second harmonic generation imaging. J. Biophotonics 2023, 16, e202200284.

- Novikova, T.; Pierangelo, A.; Martino, A.D.; Benali, A.; Validire, P. Polarimetric Imaging for Cancer Diagnosis and Staging. Opt. Photon. News 2012, 23, 26–33.

- Hachkevych, O.R.; Matyash, I.Y.; Minaylova, I.A.; Mishchuk, O.M.; Serdega, B.K.; Terlets’kyi, R.F.; Brukhal’, M.B. Mathematical Modeling and Polarimetry of the Thermal Stressed State of a Partially Transparent Solid Subjected to the Action of Thermal Radiation. J. Math. Sci. 2023, 273, 982–998.

- Miyazaki, D.; Kagesawa, M.; Ikeuchi, K. Determining Shapes of Transparent Objects from Two Polarization Images. In Proceedings of the MVA, Nara, Japan, 11–13 December 2002; pp. 26–31.

- Carterette, B.; Voorhees, E.M. Overview of information retrieval evaluation. In Current Challenges in Patent Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2011; pp. 69–85.

- Oršić, M.; Krešo, I.; Bevandić, P.; Šegvić, S. In Defense of Pre-trained ImageNet Architectures for Real-time Semantic Segmentation of Road-driving Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12607–12616.

- Yan, R.; Yang, K.; Wang, K. NLFNet: Non-Local Fusion Towards Generalized Multimodal Semantic Segmentation across RGB-Depth, Polarization, and Thermal Images. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1129–1135.

- Shakeri, M.; Loo, S.Y.; Zhang, H. Polarimetric Monocular Dense Mapping Using Relative Deep Depth Prior. IEEE Robot. Autom. Lett. 2021, 6, 4512–4519.

- Alouini, M.; Goudail, F.; Refregier, P.; Grisard, A.; Lallier, E.; Dolfi, D. Multispectral polarimetric imaging with coherent illumination: Towards higher image contrast. In Proceedings of the Polarization: Measurement, Analysis, and Remote Sensing VI, Orlando, FL, USA, 15 July 2004; Goldstein, D.H., Chenault, D.B., Eds.; SPIE: Bellingham, WA, USA, 2004; Volume 5432, pp. 133–144.

- Hagen, N. Review of thermal infrared polarimetry, part 2: Experiment. Opt. Eng. 2022, 61, 080901.

- Mihoubi, S.; Lapray, P.J.; Bigué, L. Survey of Demosaicking Methods for Polarization Filter Array Images. Sensors 2018, 18, 3688.

- Pistellato, M.; Bergamasco, F.; Fatima, T.; Torsello, A. Deep Demosaicing for Polarimetric Filter Array Cameras. IEEE Trans. Image Process. 2022, 31, 2017–2026.

| Application | Condition | Papers |
|---|---|---|
| Transparent object | 3D reconstruction | [13,42] |
| | Segmentation | [24,43] |
| Multi-fields | Shape estimation from diffuse polarization | [2] |
| | Surface and shape reconstruction by inverse rendering | [14,15,44] |
| | Reflection removal | [23] |
| | Reconstruction of metallic surfaces | [28] |
| | Pose Estimation | [45,46] |
| | Navigation | [37,38,39] |
| Medical | Mueller microscopy | [40,41] |
| | Robotic imaging | [47] |
| | Semantic segmentation | [48] |
| Autonomous driving | Semantic segmentation | [25,26,27,49,50,51] |
| | Object detection under bad weather | [52] |
| Landscape imaging | Haze removal | [51,53,54,55,56,57] |
| Industry | Piece inspection | [58] |
| | Sorting by semantic segmentation | [24] |
| Low-light applications | Image restoration | [22,59] |

| Model | CMX SwinT | CMX Segf | CMX Segf | CMX Segf |
|---|---|---|---|---|
| Input | RGB | RGB | AoLP | DoLP |
| mIoU | 0.496 | 0.543 | 0.732 | 0.757 |

| Metric | Pre-Train, Non-Augmented | Pre-Train, Augmented | Non Pre-Train, Non-Augmented | Non Pre-Train, Augmented |
|---|---|---|---|---|
| Accuracy | 0.766 | 0.831 | 0.743 | 0.809 |
| Recall | 0.772 | 0.826 | 0.729 | 0.809 |
| F1-Score | 0.769 | 0.817 | 0.736 | 0.802 |
| Precision | 0.770 | 0.821 | 0.732 | 0.806 |
| mIoU | 0.3143 | 0.4426 | 0.2971 | 0.3857 |
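
The scores in the table above are all derived from per-class counts of correct and incorrect pixel labels. The review does not state the exact averaging that was used, so the following Python sketch only assumes the common definitions: a confusion matrix whose rows are true classes and whose columns are predicted classes, with precision, recall, F1 and IoU averaged over classes (macro averaging). The function name `segmentation_scores` and the toy matrix are illustrative assumptions, not taken from the reviewed work.

```python
import numpy as np


def segmentation_scores(conf):
    """Summary scores from a square confusion matrix.

    conf[i, j] counts pixels whose true class is i and predicted class is j.
    Macro averaging over classes is an assumption of this sketch.
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    fp = conf.sum(axis=0) - tp  # predicted as class k, but true class differs
    fn = conf.sum(axis=1) - tp  # true class k, but predicted as another class

    def safe_div(num, den):
        # Classes with an empty denominator score 0 instead of raising.
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    iou = safe_div(tp, tp + fp + fn)
    return {
        "accuracy": tp.sum() / conf.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
        "mIoU": iou.mean(),
    }


# Toy 3-class confusion matrix with made-up counts (not data from the review).
toy = [[8, 1, 1],
       [2, 6, 2],
       [0, 2, 8]]
scores = segmentation_scores(toy)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Under these definitions IoU penalises both false positives and false negatives of each class, so mIoU can sit well below pixel accuracy when a few frequent classes dominate the image, which would be consistent with the gap between the accuracy and mIoU rows above.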

| Model | Conditions | Glass | Car | Sky | Road | Building |
|---|---|---|---|---|---|---|
| CMX Segf | RGB | 0.57 | 0.71 | 0.68 | 0.75 | 0.73 |
| CMX SwinT | RGB | 0.54 | 0.66 | 0.63 | 0.74 | 0.60 |
| CMX Segf | AoLP | 0.73 | 0.78 | 0.76 | 0.81 | 0.76 |
| CMX Segf | DoLP | 0.74 | 0.78 | 0.75 | 0.80 | 0.76 |
| Vibotorch | Non-Augmented/Pre-train | 0.11 | 0.42 | 0.28 | 0.32 | 0.41 |
| | Augmented/Pre-train | 0.30 | 0.44 | 0.39 | 0.41 | 0.48 |
| | Non-Augmented/Non-Pre-train | 0.19 | 0.24 | 0.22 | 0.29 | 0.39 |
| | Augmented/Non-Pre-train | 0.16 | 0.31 | 0.21 | 0.26 | 0.34 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Taglione, C.; Mateo, C.; Stolz, C. Polarimetric Imaging for Robot Perception: A Review. Sensors 2024, 24, 4440. https://doi.org/10.3390/s24144440