Open-Vocabulary Predictive World Models from Sensor Observations

Abstract

1. Introduction

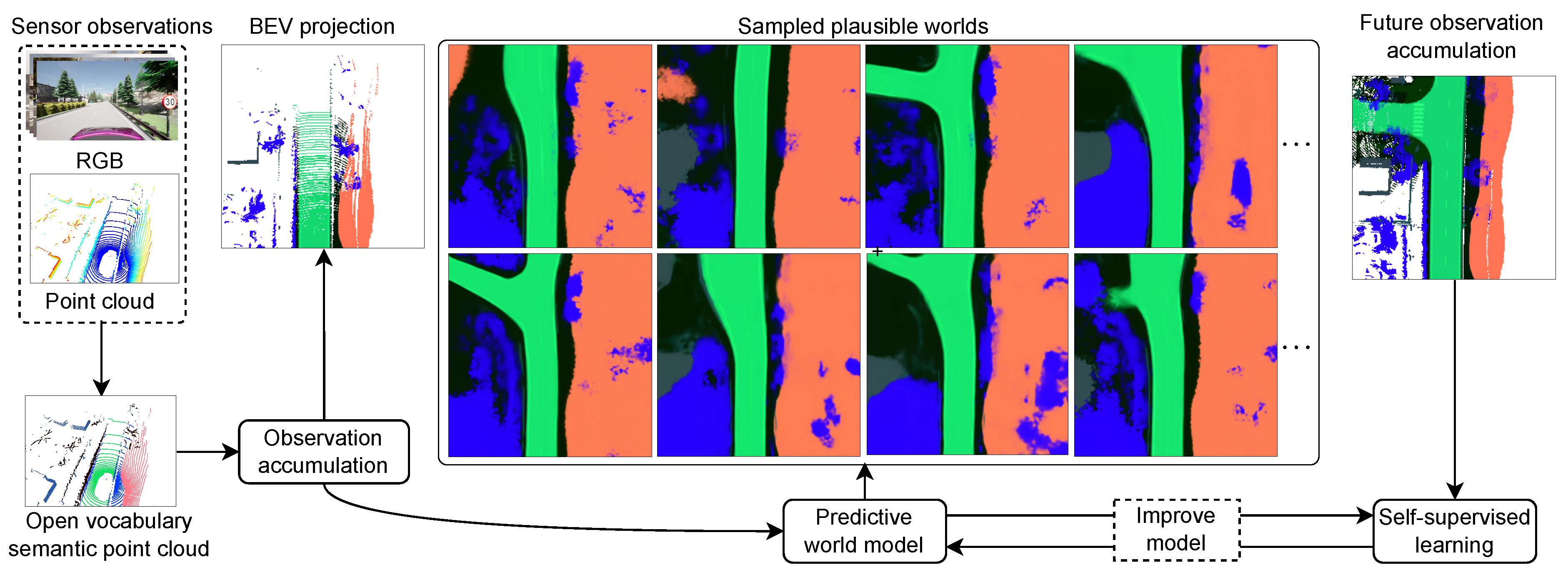

- We propose an open-vocabulary predictive world model (OV-PWM) that learns from observational experience alone to predict a diverse set of complete environment states, represented by compositional latent semantic embeddings [41].

- We show mathematically and empirically that OV-PWMs can be learned end-to-end in a single stage, in contrast to prior closed-set semantic PWMs, which rely on a two-stage optimization scheme [6].

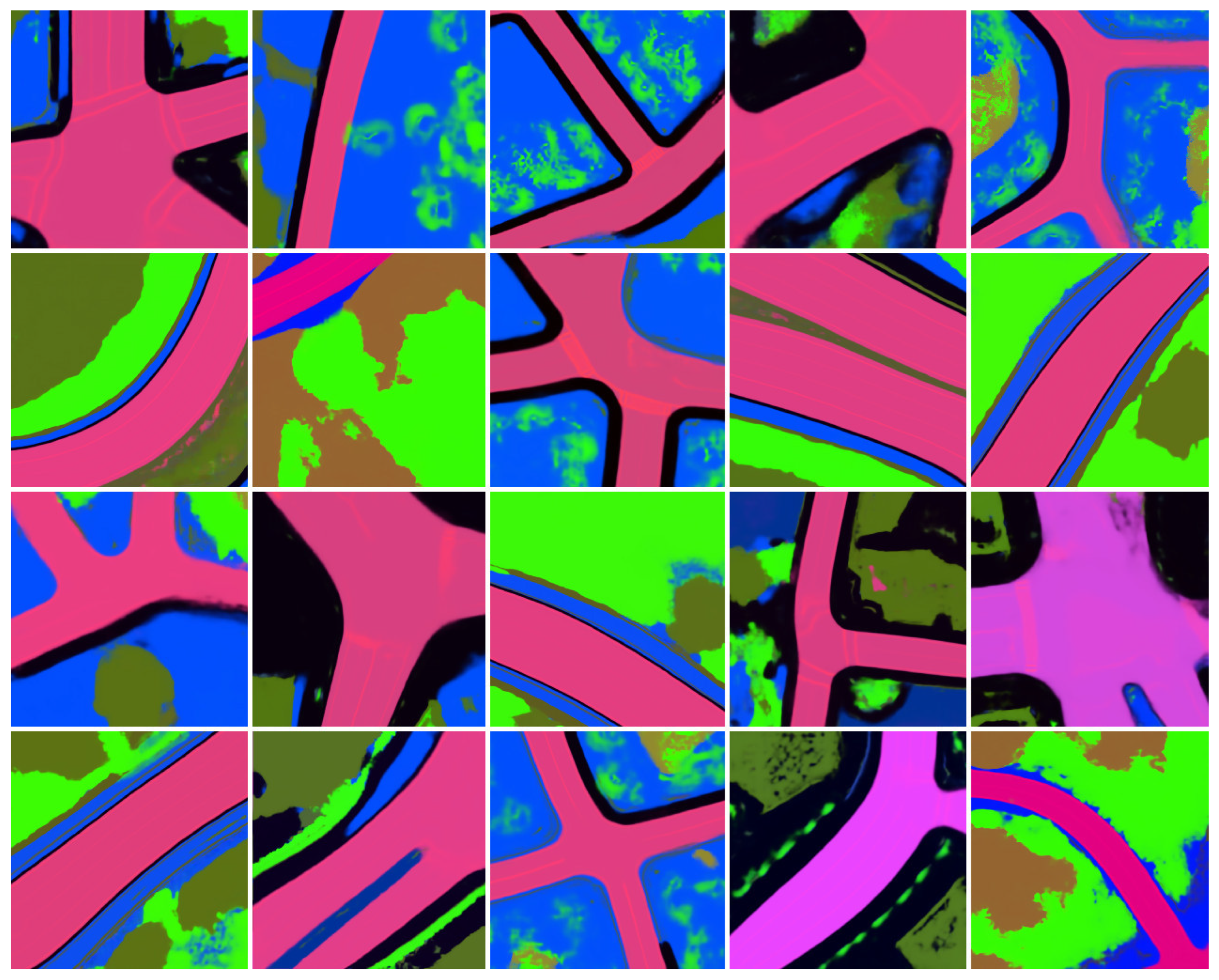

- We empirically demonstrate that OV-PWMs can generate accurate and diverse plausible predictions in a new urban environment with fine semantic detail like road markings, reaching 69.19 mIoU on six query semantics.
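
The 69.19 mIoU figure is a mean of per-semantic intersection-over-union (IoU) scores. The sketch below shows only that averaging step. It assumes each query semantic yields a boolean bird's-eye-view mask for both the prediction and the ground truth. This section does not describe the paper's evaluation protocol (for example, which grid cells are scored), and `iou` and `mean_iou` are hypothetical names.

```python
import numpy as np


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection-over-union of two boolean masks; NaN if both masks are empty."""
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    return float(intersection) / float(union) if union > 0 else float("nan")


def mean_iou(preds: dict, targets: dict) -> float:
    """Mean IoU in percent over the query semantics keyed in `targets`.

    Semantics with an empty union get NaN and are skipped by np.nanmean
    (one possible convention, assumed here).
    """
    scores = [iou(preds[name], targets[name]) for name in targets]
    return 100.0 * float(np.nanmean(scores))
```

For example, a "road" prediction covering two cells where the ground truth covers one of them scores an IoU of 0.5. A perfectly predicted "vegetation" mask scores 1.0, giving a mean of 75.0.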

2. Background and Related Works

2.1. Arbitrary Conditional Density Estimation

2.2. Bird’s-Eye View Generation

2.3. World Models

2.4. Spatial AI

2.5. Open-Vocabulary Semantic Segmentation

3. Open-Vocabulary Partial World States

3.1. Sensor Observation Processing

3.2. Open-Vocabulary Semantics

3.3. Observation Accumulation

3.4. Partial World State Representation

4. Open-Vocabulary Predictive World Model

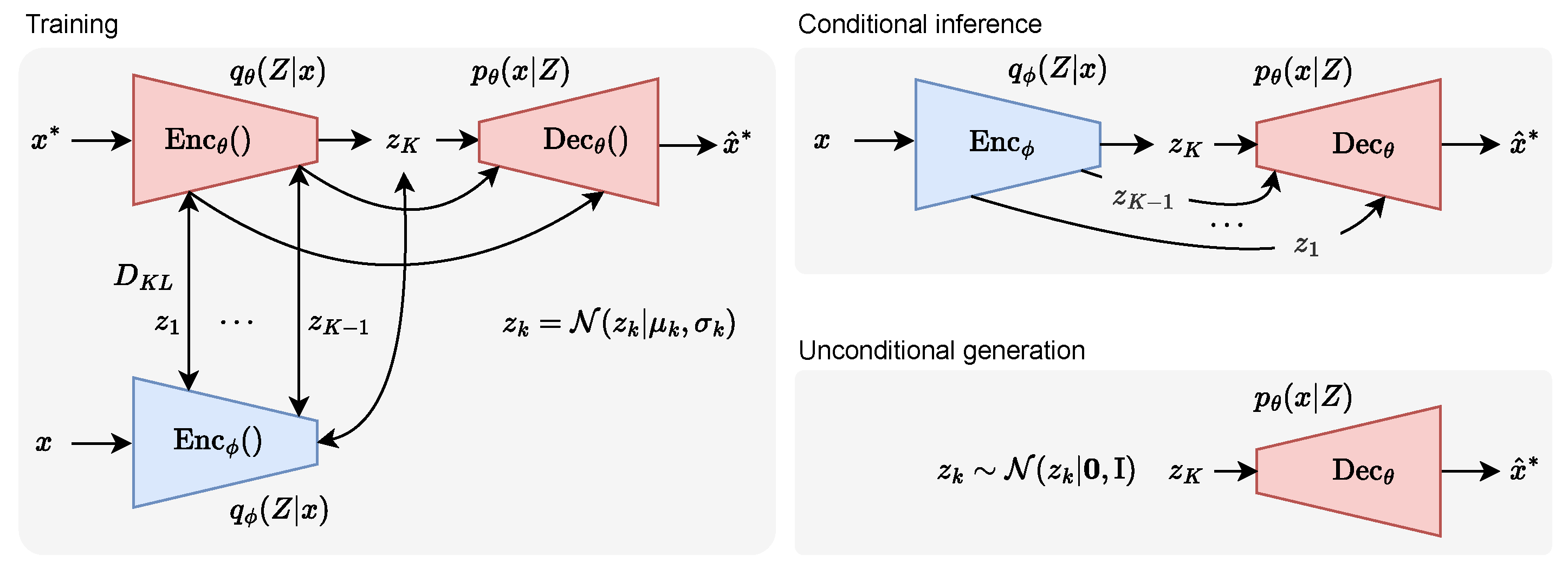

4.1. Latent Variable Generative Models

4.2. Model Implementation and Training

4.3. Model Inference

5. Experiments

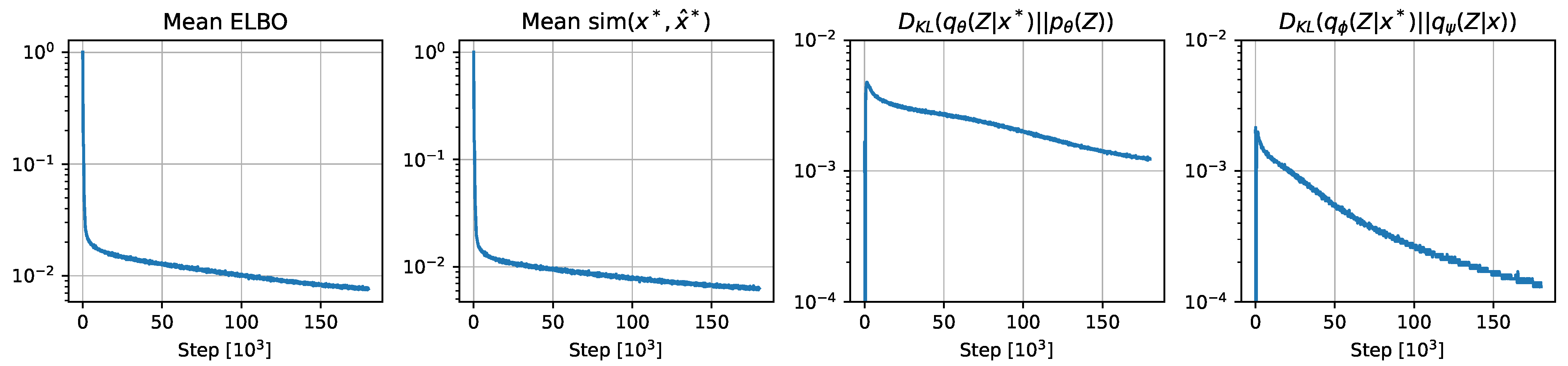

6. Results

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Semantic Taxonomy

| Level 1 (CARLA) | Level 2 | Level 3 |
|---|---|---|
| unlabeled | unlabeled | unlabeled |
| road | drivable | static |
| side walk | ground | static |
| building | construction | static |
| wall | construction | static |
| fence | structural | static |
| pole | structural | static |
| traffic light | traffic information | static |
| traffic sign | traffic information | static |
| vegetation | plant | static |
| terrain | ground | static |
| sky | sky | sky |
| pedestrian | person | dynamic |
| rider | person | dynamic |
| car | vehicle | dynamic |
| truck | vehicle | dynamic |
| bus | vehicle | dynamic |
| train | vehicle | dynamic |
| motorcycle | vehicle | dynamic |
| bicycle | vehicle | dynamic |
| static | static | static |
| dynamic | dynamic | dynamic |
| other | other | other |
| water | fluid | dynamic |
| road marking | road | drivable |
| ground | static | static |
| bridge | construction | static |
| rail track | metal | static |
| guard rail | structural | static |
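
The taxonomy can be read as a hierarchy in which a coarser query such as "vehicle" or "dynamic" covers every CARLA label listed under it. The sketch below illustrates that reading; it is not necessarily how the paper constructs its ground truth. It copies the table into a lookup and derives a boolean target mask for a query from a grid of CARLA label strings. `TAXONOMY`, `labels_matching`, and `query_target_mask` are hypothetical names.

```python
import numpy as np

# Appendix A copied as: level-1 (CARLA) label -> (level-2 label, level-3 label).
TAXONOMY = {
    "unlabeled": ("unlabeled", "unlabeled"),
    "road": ("drivable", "static"),
    "side walk": ("ground", "static"),
    "building": ("construction", "static"),
    "wall": ("construction", "static"),
    "fence": ("structural", "static"),
    "pole": ("structural", "static"),
    "traffic light": ("traffic information", "static"),
    "traffic sign": ("traffic information", "static"),
    "vegetation": ("plant", "static"),
    "terrain": ("ground", "static"),
    "sky": ("sky", "sky"),
    "pedestrian": ("person", "dynamic"),
    "rider": ("person", "dynamic"),
    "car": ("vehicle", "dynamic"),
    "truck": ("vehicle", "dynamic"),
    "bus": ("vehicle", "dynamic"),
    "train": ("vehicle", "dynamic"),
    "motorcycle": ("vehicle", "dynamic"),
    "bicycle": ("vehicle", "dynamic"),
    "static": ("static", "static"),
    "dynamic": ("dynamic", "dynamic"),
    "other": ("other", "other"),
    "water": ("fluid", "dynamic"),
    "road marking": ("road", "drivable"),
    "ground": ("static", "static"),
    "bridge": ("construction", "static"),
    "rail track": ("metal", "static"),
    "guard rail": ("structural", "static"),
}


def labels_matching(query: str) -> set:
    """Level-1 labels equal to `query` or having it as a level-2 or level-3 parent."""
    return {
        label
        for label, parents in TAXONOMY.items()
        if query == label or query in parents
    }


def query_target_mask(label_map: np.ndarray, query: str) -> np.ndarray:
    """Boolean mask of grid cells whose level-1 label falls under `query`."""
    return np.isin(label_map, sorted(labels_matching(query)))
```

Under this reading, "road marking" sits under the level-2 label "road" and the level-3 label "drivable". A "road" query therefore also covers road markings, and a "drivable" query covers both "road" and "road marking".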

Appendix B. Sufficient Similarity Threshold Values

| Semantic | Sufficient similarity threshold |
|---|---|
| road | 0.5934 |
| road marking | 0.3944 |
| side walk | 0.481 |
| vegetation | 0.4872 |
| static | 0.456 |
| drivable | 0.5429 |
| dynamic | 0.6300 |
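
Appendix C concerns cosine distance, so one plausible reading of these values is as follows: a cell is assigned a query semantic when the cosine similarity between its predicted embedding and the query's embedding reaches the listed threshold. The sketch below assumes exactly that rule. It also assumes an (H, W, D) array of per-cell embeddings and a D-dimensional query embedding from some text encoder. These are illustrative assumptions, and `SUFF_SIM`, `cosine_similarity_map`, and `query_mask` are hypothetical names.

```python
import numpy as np

# Thresholds copied from the Appendix B table.
SUFF_SIM = {
    "road": 0.5934,
    "road marking": 0.3944,
    "side walk": 0.481,
    "vegetation": 0.4872,
    "static": 0.456,
    "drivable": 0.5429,
    "dynamic": 0.6300,
}


def cosine_similarity_map(embeddings: np.ndarray, query_emb: np.ndarray,
                          eps: float = 1e-8) -> np.ndarray:
    """Cosine similarity between each (H, W, D) cell embedding and a (D,) query."""
    cell_norms = np.maximum(np.linalg.norm(embeddings, axis=-1, keepdims=True), eps)
    query_norm = max(float(np.linalg.norm(query_emb)), eps)
    # (H, W, D) @ (D,) contracts the last axis, giving an (H, W) map.
    return (embeddings / cell_norms) @ (query_emb / query_norm)


def query_mask(embeddings: np.ndarray, query_emb: np.ndarray,
               semantic: str) -> np.ndarray:
    """Boolean (H, W) mask of cells sufficiently similar to the query semantic."""
    return cosine_similarity_map(embeddings, query_emb) >= SUFF_SIM[semantic]
```

Normalizing both sides first makes the threshold independent of embedding magnitude, so only direction in the embedding space matters.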

Appendix C. Deriving Cosine Distance from Negative Log Likelihood Minimization
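
The derivation itself is not included in this section. The sketch below shows one standard route under stated assumptions, which are not necessarily the paper's exact setup. Each target embedding $\mathbf{y}$ and prediction $\hat{\mathbf{y}}$ is assumed to be an L2-normalized $D$-dimensional vector, and the likelihood is assumed to be an isotropic Gaussian with fixed variance $\sigma^{2}$.

$$
\begin{aligned}
-\log \mathcal{N}\left(\mathbf{y};\hat{\mathbf{y}},\sigma^{2}\mathbf{I}\right)
&= \frac{1}{2\sigma^{2}}\lVert \mathbf{y}-\hat{\mathbf{y}}\rVert^{2} + \frac{D}{2}\log\left(2\pi\sigma^{2}\right) \\
\lVert \mathbf{y}-\hat{\mathbf{y}}\rVert^{2}
&= \lVert\mathbf{y}\rVert^{2} + \lVert\hat{\mathbf{y}}\rVert^{2} - 2\,\mathbf{y}^{\top}\hat{\mathbf{y}}
= 2\left(1-\cos(\mathbf{y},\hat{\mathbf{y}})\right) \\
\Rightarrow\; -\log \mathcal{N}\left(\mathbf{y};\hat{\mathbf{y}},\sigma^{2}\mathbf{I}\right)
&= \frac{1}{\sigma^{2}}\left(1-\cos(\mathbf{y},\hat{\mathbf{y}})\right) + \text{const.}
\end{aligned}
$$

With $\sigma$ fixed, minimizing this negative log-likelihood over $\hat{\mathbf{y}}$ is equivalent to minimizing the cosine distance $1-\cos(\mathbf{y},\hat{\mathbf{y}})$. The second line uses $\lVert\mathbf{y}\rVert=\lVert\hat{\mathbf{y}}\rVert=1$, so the equivalence holds only when the prediction is normalized as well.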

References

- Spelke, E.; Kinzler, K. Core knowledge. Dev. Sci. 2007, 10, 89–96.

- Lake, B.; Ullman, T.; Tenenbaum, J.; Gershman, S. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

- Schmidhuber, J. Making the World Differentiable: On Using Self-Supervised Fully Recurrent Neural Networks for Dynamic Reinforcement Learning and Planning in Non-Stationary Environments. In Forschungsberichte Künstliche Intelligenz; Technische Universität München: Munich, Germany, 1990; Volume 126.

- Schmidhuber, J. A possibility for implementing curiosity and boredom in model-building neural controllers. In From Animals to Animats: Proceedings of the First International Conference on Simulation of Adaptive Behavior; MIT Press: Cambridge, MA, USA, 1991; pp. 222–227.

- Schmidhuber, J. Formal Theory of Creativity, Fun, and Intrinsic Motivation. IEEE Trans. Auton. Ment. Dev. 2010, 2, 230–247.

- Karlsson, R.; Carballo, A.; Fujii, K.; Ohtani, K.; Takeda, K. Predictive World Models from Real-World Partial Observations. In Proceedings of the IEEE International Conference on Mobility, Operations, Services and Technologies (MOST), Detroit, MI, USA, 17–19 May 2023; pp. 152–166.

- Ahn, M.; Brohan, A.; Brown, N.; Chebotar, Y.; Cortes, O.; David, B.; Finn, C.; Fu, C.; Gopalakrishnan, K.; Hausman, K.; et al. Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. arXiv 2022, arXiv:2204.01691.

- Shah, D.; Osinski, B.; Ichter, B.; Levine, S. LM-Nav: Robotic Navigation with Large Pre-Trained Models of Language, Vision, and Action. In Proceedings of the 6th Annual Conference on Robot Learning (CoRL), Auckland, New Zealand, 14–18 December 2022.

- Huang, W.; Abbeel, P.; Pathak, D.; Mordatch, I. Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents. arXiv 2022, arXiv:2201.07207.

- Zeng, A.; Attarian, M.; Ichter, B.; Choromanski, K.; Wong, A.; Welker, S.; Tombari, F.; Purohit, A.; Ryoo, M.; Sindhwani, V.; et al. Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

- Huang, W.; Xia, F.; Xiao, T.; Chan, H.; Liang, J.; Florence, P.; Zeng, A.; Tompson, J.; Mordatch, I.; Chebotar, Y.; et al. Inner Monologue: Embodied Reasoning through Planning with Language Models. In Proceedings of the 6th Conference on Robot Learning (CoRL), Auckland, New Zealand, 14–18 December 2022; pp. 1769–1782.

- Liang, J.; Huang, W.; Xia, F.; Xu, P.; Hausman, K.; Ichter, B.; Florence, P.; Zeng, A. Code as Policies: Language Model Programs for Embodied Control. arXiv 2022, arXiv:2209.07753.

- Nottingham, K.; Ammanabrolu, P.; Suhr, A.; Choi, Y.; Hajishirzi, H.; Singh, S.; Fox, R. Do Embodied Agents Dream of Pixelated Sheep?: Embodied Decision Making using Language Guided World Modelling. In Proceedings of the Workshop on Reincarnating Reinforcement Learning at ICLR, Kigali, Rwanda, 5 May 2023.

- Singh, I.; Blukis, V.; Mousavian, A.; Goyal, A.; Xu, D.; Tremblay, J.; Fox, D.; Thomason, J.; Garg, A. ProgPrompt: Generating Situated Robot Task Plans using Large Language Models. In Proceedings of the International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 11523–11530.

- Brohan, A.; Brown, N.; Carbajal, J.; Chebotar, Y.; Chen, X.; Choromanski, K.; Ding, T.; Driess, D.; Dubey, A.; Finn, C.; et al. RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. arXiv 2023, arXiv:2307.15818.

- Song, H.; Wu, J.; Washington, C.; Sadler, B.; Chao, W.; Su, Y. LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. In Proceedings of the 36th Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 24824–24837.

- Wang, G.; Xie, Y.; Jiang, Y.; Mandlekar, A.; Xiao, C.; Zhu, Y.; Fan, L.; Anandkumar, A. Voyager: An Open-Ended Embodied Agent with Large Language Models. arXiv 2023, arXiv:2305.16291.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning (ICML), Vienna, Austria, 18–24 July 2021; pp. 8748–8763.

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image-text models. In Proceedings of the Thirty-Sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track, Virtual, 28 November 2022.

- Li, B.; Weinberger, K.Q.; Belongie, S.; Koltun, V.; Ranftl, R. Language-driven Semantic Segmentation. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 25–29 April 2022.

- Ghiasi, G.; Gu, X.; Cui, Y.; Lin, T.Y. Scaling Open-Vocabulary Image Segmentation with Image-Level Labels. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

- Xu, M.; Zhang, Z.; Wei, F.; Lin, Y.; Cao, Y.; Hu, H.; Bai, X. A Simple Baseline for Open Vocabulary Semantic Segmentation with Pre-trained Vision-language Model. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

- Rao, Y.; Zhao, W.; Chen, G.; Tang, Y.; Zhu, Z.; Huang, G.; Zhou, J.; Lu, J. DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Zhou, C.; Loy, C.C.; Dai, B. Extract Free Dense Labels from CLIP. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

- Ding, Z.; Wang, J.; Tu, Z. Open-Vocabulary Universal Image Segmentation with MaskCLIP. In Proceedings of the International Conference on Machine Learning (ICML), Honolulu, HI, USA, 23–29 July 2023.

- Xu, M.; Zhang, Z.; Wei, F.; Hu, H.; Bai, X. Side Adapter Network for Open-Vocabulary Semantic Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Zou, X.; Dou, Z.Y.; Yang, J.; Gan, Z.; Li, L.; Li, C.; Dai, X.; Behl, H.; Wang, J.; Yuan, L.; et al. Generalized Decoding for Pixel, Image, and Language. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 15116–15127.

- Liang, F.; Wu, B.; Dai, X.; Li, K.; Zhao, Y.; Zhang, H.; Zhang, P.; Vajda, P.; Marculescu, D. Open-vocabulary semantic segmentation with mask-adapted CLIP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7061–7070.

- Rana, K.; Haviland, J.; Garg, S.; Abou-Chakra, J.; Reid, I.; Suenderhauf, N. SayPlan: Grounding Large Language Models using 3D Scene Graphs for Scalable Task Planning. In Proceedings of the 7th Annual Conference on Robot Learning (CoRL), Atlanta, GA, USA, 6–9 November 2023.

- Karlsson, R.; Carballo, A.; Lepe-Salazar, F.; Fujii, K.; Ohtani, K.; Takeda, K. Learning to Predict Navigational Patterns From Partial Observations. IEEE Robot. Autom. Lett. 2023, 8, 5592–5599.

- McNamara, T.P.; Hardy, J.K.; Hirtle, S.C. Subjective hierarchies in spatial memory. J. Exp. Psychol. Learn. Mem. Cogn. 1989, 15, 211–227.

- Davison, A.J. FutureMapping: The Computational Structure of Spatial AI Systems. arXiv 2018, arXiv:1803.11288.

- Ha, H.; Song, S. Semantic Abstraction: Open-World 3D Scene Understanding from 2D Vision-Language Models. In Proceedings of the 2022 Conference on Robot Learning (CoRL), Auckland, New Zealand, 14–18 December 2022.

- Peng, S.; Genova, K.; Jiang, C.; Tagliasacchi, A.; Pollefeys, M.; Funkhouser, T. OpenScene: 3D Scene Understanding with Open Vocabularies. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Jatavallabhula, K.; Kuwajerwala, A.; Gu, Q.; Omama, M.; Chen, T.; Maalouf, A.; Li, S.; Iyer, G.; Saryazdi, S.; Keetha, N.; et al. ConceptFusion: Open-set Multimodal 3D Mapping. In Proceedings of the Robotics: Science and Systems (RSS), Daegu, Republic of Korea, 10–14 July 2023.

- Huang, C.; Mees, O.; Zeng, A.; Burgard, W. Visual Language Maps for Robot Navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

- Xia, F.; Zamir, A.R.; He, Z.; Sax, A.; Malik, J.; Savarese, S. Gibson Env: Real-World Perception for Embodied Agents. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9068–9079.

- Chen, K.; Chen, J.K.; Chuang, J.; Vázquez, M.; Savarese, S. Topological Planning with Transformers for Vision-and-Language Navigation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11271–11281.

- Armeni, I.; He, Z.Y.; Gwak, J.; Zamir, A.R.; Fischer, M.; Malik, J.; Savarese, S. 3D Scene Graph: A Structure for Unified Semantics, 3D Space, and Camera. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Karlsson, R.; Lepe-Salazar, F.; Takeda, K. Compositional Semantics for Open Vocabulary Spatio-semantic Representations. arXiv 2023, arXiv:2310.04981.

- LeCun, Y. A Path towards Autonomous Machine Intelligence. OpenReview. 2022. Available online: https://openreview.net/forum?id=BZ5a1r-kVsf (accessed on 8 June 2024).

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning (CoRL), Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- Ivanov, O.; Figurnov, M.; Vetrov, D. Variational Autoencoder with Arbitrary Conditioning. In Proceedings of the ICLR, New Orleans, LA, USA, 6–9 May 2019.

- Li, Y.; Akbar, S.; Oliva, J. ACFlow: Flow Models for Arbitrary Conditional Likelihoods. In Proceedings of the 37th International Conference on Machine Learning (ICML), Virtual, 13–18 July 2020.

- Strauss, R.; Oliva, J. Arbitrary Conditional Distributions with Energy. In Proceedings of the NeurIPS, Virtual, 6–14 December 2021.

- Ballard, D. Modular learning in neural networks. In Proceedings of the AAAI, Seattle, WA, USA, 13–17 July 1987.

- Pathak, D.; Krähenbühl, P.; Donahue, J.; Darrell, T.; Efros, A. Context Encoders: Feature Learning by Inpainting. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016.

- Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Globally and locally consistent image completion. ACM Trans. Graph. 2017, 36, 1–14.

- Yeh, R.; Chen, C.; Lim, T.; Schwing, A.; Hasegawa-Johnson, M.; Do, M. Semantic Image Inpainting with Deep Generative Models. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017.

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T. Generative Image Inpainting with Contextual Attention. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–23 June 2018.

- Li, Y.; Liu, S.; Yang, J.; Yang, M.H. Generative Face Completion. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5892–5900.

- Child, R. Very Deep VAEs Generalize Autoregressive Models and Can Outperform Them on Images. In Proceedings of the ICLR, Virtual Event, Austria, 3–7 May 2021.

- Liu, G.; Reda, F.; Shih, K.; Wang, T.; Tao, A.; Catanzaro, B. Image Inpainting for Irregular Holes Using Partial Convolutions. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018.

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T. Free-Form Image Inpainting with Gated Convolution. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019.

- Cai, W.; Wei, Z. PiiGAN: Generative Adversarial Networks for Pluralistic Image Inpainting. IEEE Access 2019, 8, 48451–48463.

- Liu, Y.; Wang, Z.; Zeng, Y.; Zeng, H.; Zhao, D. PD-GAN: Perceptual-Details GAN for Extremely Noisy Low Light Image Enhancement. In Proceedings of the ICASSP, Toronto, ON, Canada, 6–11 June 2021.

- Kingma, D.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

- Zheng, C.; Cham, T.; Cai, J. Pluralistic Image Completion. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019.

- Zhao, L.; Mo, Q.; Lin, S.; Wang, Z.; Zuo, Z.; Chen, H.; Xing, W.; Lu, D. UCTGAN: Diverse Image Inpainting Based on Unsupervised Cross-Space Translation. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020.

- Peng, J.; Liu, D.; Xu, S.; Li, H. Generating Diverse Structure for Image Inpainting With Hierarchical VQ-VAE. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Strauss, R.; Oliva, J. Posterior Matching for Arbitrary Conditioning. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–9 December 2022.

- Nazabal, A.; Olmos, P.; Ghahramani, Z.; Valera, I. Handling Incomplete Heterogeneous Data using VAEs. Pattern Recognit. 2020, 107, 107501.

- Ma, C.; Tschiatschek, S.; Palla, K.; Hernández-Lobato, J.; Nowozin, S.; Zhang, C. EDDI: Efficient Dynamic Discovery of High-Value Information with Partial VAE. In Proceedings of the ICML, Long Beach, CA, USA, 10–15 June 2019.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017.

- Ma, C.; Tschiatschek, S.; Hernández-Lobato, J.M.; Turner, R.E.; Zhang, C. VAEM: A Deep Generative Model for Heterogeneous Mixed Type Data. In Proceedings of the NeurIPS, Virtual, 6–12 December 2020.

- Peis, I.; Ma, C.; Hernández-Lobato, J.M. Missing Data Imputation and Acquisition with Deep Hierarchical Models and Hamiltonian Monte Carlo. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–9 December 2022.

- Collier, M.; Nazabal, A.; Williams, C.K. VAEs in the Presence of Missing Data. In Proceedings of the ICML Workshop on the Art of Learning with Missing Values (Artemiss), Virtual, 17 July 2020.

- Karlsson, R.; Wong, D.; Thompson, S.; Takeda, K. Learning a Model for Inferring a Spatial Road Lane Network Graph using Self-Supervision. In Proceedings of the ITSC, Indianapolis, IN, USA, 19–22 September 2021.

- Mallot, H.A.; Bülthoff, H.H.; Little, J.; Bohrer, S. Inverse perspective mapping simplifies optical flow computation and obstacle detection. Biol. Cybern. 1991, 64, 177–185.

- Bertozzi, M.; Broggi, A.; Fascioli, A. An Extension to The Inverse Perspective Mapping to Handle Non-flat Roads. In Proceedings of the 1998 IEEE International Conference on Intelligent Vehicles, Stuttgart, Germany, 28–30 October 1998.

- Bertozzi, M.; Broggi, A.; Fascioli, A. Stereo inverse perspective mapping: Theory and applications. Image Vis. Comput. 1998, 16, 585–590.

- Reiher, L.; Lampe, B.; Eckstein, L. A Sim2Real Deep Learning Approach for the Transformation of Images from Multiple Vehicle-Mounted Cameras to a Semantically Segmented Image in Bird’s Eye View. In Proceedings of the ITSC, Rhodes, Greece, 20–23 September 2020.

- Wang, Y.; Chao, W.L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019.

- End-to-End Pseudo-LiDAR for Image-Based 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- You, Y.; Wang, Y.; Chao, W.L.; Garg, D.; Pleiss, G.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-LiDAR++: Accurate Depth for 3D Object Detection in Autonomous Driving. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 27–30 April 2020.

- Guizilini, V.; Hou, R.; Li, J.; Ambrus, R.; Gaidon, A. Semantically-Guided Representation Learning for Self-Supervised Monocular Depth. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 27–30 April 2020.

- Guizilini, V.; Ambrus, R.; Pillai, S.; Raventos, A.; Gaidon, A. 3D Packing for Self-Supervised Monocular Depth Estimation. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020.

- Guizilini, V.; Ambruş, R.; Burgard, W.; Gaidon, A. Sparse Auxiliary Networks for Unified Monocular Depth Prediction and Completion. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Schulter, S.; Zhai, M.; Jacobs, N.; Chandraker, M. Learning to Look around Objects for Top-View Representations of Outdoor Scenes. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018.

- Mani, K.; Daga, S.; Garg, S.; Shankar, N.S.; Krishna Murthy, J.; Krishna, K.M. MonoLayout: Amodal scene layout from a single image. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1678–1686.

- Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding Images From Arbitrary Camera Rigs by Implicitly Unprojecting to 3D. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020.

- Reading, C.; Harakeh, A.; Chae, J.; Waslander, S. Categorical Depth Distribution Network for Monocular 3D Object Detection. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Hu, A.; Murez, Z.; Mohan, N.; Dudas, S.; Hawke, J.; Badrinarayanan, V.; Cipolla, R.; Kendall, A. FIERY: Future Instance Prediction in Bird’s-Eye View from Surround Monocular Cameras. In Proceedings of the ICCV, Virtual, 16 October 2021.

- Lu, C.; van de Molengraft, M.; Dubbelman, G. Monocular Semantic Occupancy Grid Mapping with Convolutional Variational Encoder-Decoder Networks. IEEE Robot. Autom. Lett. 2018, 4, 445–452.

- Roddick, T.; Kendall, A.; Cipolla, R. Orthographic Feature Transform for Monocular 3D Object Detection. In Proceedings of the 29th British Machine Vision Conference (BMVC), Newcastle, UK, 3–6 September 2018.

- Roddick, T.; Cipolla, R. Predicting Semantic Map Representations from Images using Pyramid Occupancy Networks. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020.

- Hendy, N.; Sloan, C.; Tian, F.; Duan, P.; Charchut, N.; Yuan, Y.; Wang, X.; Philbin, J. FISHING Net: Future Inference of Semantic Heatmaps In Grids. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020.

- Luo, K.Z.; Weng, X.; Wang, Y.; Wu, S.; Li, J.; Weinberger, K.Q.; Wang, Y.; Pavone, M. Augmenting Lane Perception and Topology Understanding with Standard Definition Navigation Maps. arXiv 2023, arXiv:2311.04079.

- Yang, W.; Li, Q.; Liu, W.; Yu, Y.; Liu, S.; He, H.; Pan, J. Projecting Your View Attentively: Monocular Road Scene Layout Estimation via Cross-view Transformation. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D Object Detection from Multi-view Images via 3D-to-2D Queries. In Proceedings of the CoRL, London, UK, 8 November 2021.

- Chitta, K.; Prakash, A.; Geiger, A. NEAT: Neural Attention Fields for End-to-End Autonomous Driving. In Proceedings of the ICCV, Virtual, 16 October 2021.

- Casas, S.; Sadat, A.; Urtasun, R. MP3: A Unified Model to Map, Perceive, Predict and Plan. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Li, Q.; Wang, Y.; Wang, Y.; Zhao, H. HDMapNet: An Online HD Map Construction and Evaluation Framework. In Proceedings of the ICRA, Philadelphia, PA, USA, 23–27 May 2022.

- Corneil, D.; Gerstner, W.; Brea, J. Efficient Model-Based Deep Reinforcement Learning with Variational State Tabulation. In Proceedings of the ICML, Stockholm, Sweden, 10–18 July 2018.

- Ha, D.; Schmidhuber, J. World Models. arXiv 2018, arXiv:1803.10122.

- Kurutach, T.; Tamar, A.; Yang, G.; Russell, S.J.; Abbeel, P. Learning Plannable Representations with Causal InfoGAN. In Proceedings of the NeurIPS, Montreal, QC, Canada, 2–8 December 2018.

- Wang, A.; Kurutach, T.; Liu, K.; Abbeel, P.; Tamar, A. Learning Robotic Manipulation through Visual Planning and Acting. In Proceedings of the Robotics: Science and Systems (RSS), Freiburg im Breisgau, Germany, 22–26 June 2019.

- Watters, N.; Zoran, D.; Weber, T.; Battaglia, P.; Pascanu, R.; Tacchetti, A. Visual Interaction Networks: Learning a Physics Simulator from Video. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017.

- Hafner, D.; Lillicrap, T.; Fischer, I.; Villegas, R.; Ha, D.; Lee, H.; Davidson, J. Learning Latent Dynamics for Planning from Pixels. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; PMLR Volume 97, pp. 2555–2565.

- Laversanne-Finot, A.; Pere, A.; Oudeyer, P.Y. Curiosity Driven Exploration of Learned Disentangled Goal Spaces. In Proceedings of the CoRL, Zürich, Switzerland, 29–31 October 2018.

- Burgess, C.P.; Matthey, L.; Watters, N.; Kabra, R.; Higgins, I.; Botvinick, M.; Lerchner, A. MONet: Unsupervised Scene Decomposition and Representation. arXiv 2019, arXiv:1901.11390.

- Kipf, T.; Van der Pol, E.; Welling, M. Contrastive Learning of Structured World Models. In Proceedings of the ICLR, Virtual, 27–30 April 2020.

- Watters, N.; Matthey, L.; Bosnjak, M.; Burgess, C.P.; Lerchner, A. COBRA: Data-Efficient Model-Based RL through Unsupervised Object Discovery and Curiosity-Driven Exploration. arXiv 2019, arXiv:1905.09275.

- Hafner, D.; Lillicrap, T.; Norouzi, M.; Ba, J. Mastering Atari with Discrete World Models. In Proceedings of the ICLR, Vienna, Austria, 4 May 2021.

- Dabney, W.; Ostrovski, G.; Silver, D.; Munos, R. Implicit Quantile Networks for Distributional Reinforcement Learning. In Proceedings of the ICML, Stockholm, Sweden, 10–18 July 2018.

- Hessel, M.; Modayil, J.; Hasselt, H.V.; Schaul, T.; Ostrovski, G.; Dabney, W.; Horgan, D.; Piot, B.; Azar, M.; Silver, D. Rainbow: Combining Improvements in Deep Reinforcement Learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Smith, R.; Cheeseman, P. On the Representation and Estimation of Spatial Uncertainty. Int. J. Robot. Res. 1986, 5, 56–68.

- Smith, R.; Cheeseman, P. Estimating Uncertain Spatial Relationships in Robotics. In Proceedings of the Second Annual Conference on Uncertainty in Artificial Intelligence, Philadelphia, PA, USA, 8–10 August 1986.

- Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; et al. Stanley: The Robot That Won the DARPA Grand Challenge. In Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2007; Volume 36.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. SLAM++: Simultaneous Localisation and Mapping at the Level of Objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

- McCormac, J.; Handa, A.; Davison, A.; Leutenegger, S. SemanticFusion: Dense 3D semantic mapping with convolutional neural networks. In Proceedings of the International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4628–4635.

- Chen, B.; Xia, F.; Ichter, B.; Rao, K.; Gopalakrishnan, K.; Ryoo, M.S.; Stone, A.; Kappler, D. Open-vocabulary Queryable Scene Representations for Real World Planning. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020.

- Ost, J.; Mannan, F.; Thuerey, N.; Knodt, J.; Heide, F. Neural Scene Graphs for Dynamic Scenes. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Mildenhall, B.; Hedman, P.; Martin-Brualla, R.; Srinivasan, P.P.; Barron, J.T. NeRF in the Dark: High Dynamic Range View Synthesis from Noisy Raw Images. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022.

- Martin-Brualla, R.; Radwan, N.; Sajjadi, M.S.M.; Barron, J.T.; Dosovitskiy, A.; Duckworth, D. NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021.

- Rematas, K.; Liu, A.; Srinivasan, P.P.; Barron, J.T.; Tagliasacchi, A.; Funkhouser, T.; Ferrari, V. Urban Radiance Fields. In Proceedings of the CVPR, New Orleans, LA, USA, 18–24 June 2022.

- Muhammad, N.; Paxton, C.; Pinto, L.; Chintala, S.; Szlam, A. CLIP-Fields: Weakly Supervised Semantic Fields for Robotic Memory. In Proceedings of the Robotics: Science and Systems 2023, Daegu, Republic of Korea, 10–14 July 2023.

- Pi, R.; Gao, J.; Diao, S.; Pan, R.; Dong, H.; Zhang, J.; Yao, L.; Han, J.; Xu, H.; Kong, L.; et al. DetGPT: Detect What You Need via Reasoning. arXiv 2023, arXiv:2305.14167.

- Gibson, J.J. The Ecological Approach to Visual Perception; Houghton, Mifflin and Company: Boston, MA, USA, 1979. [Google Scholar]

- Milner, A.; Goodale, M. Two visual systems re-viewed. Neuropsychologia 2008, 46, 774–785. [Google Scholar] [CrossRef] [PubMed]

- Han, Z.; Sereno, A. Modeling the Ventral and Dorsal Cortical Visual Pathways Using Artificial Neural Networks. Neural Comput. 2022, 34, 138–171. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Li, D.; Savarese, S.; Hoi, S.C.H. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arXiv 2023, arXiv:2203.03897. [Google Scholar]

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. arXiv 2023, arXiv:2304.0848. [Google Scholar]

- Cheng, B.; Misra, I.; Schwing, A.; Kirillov, A.; Girdhar, R. Masked-attention Mask Transformer for Universal Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Zhong, Y.; Yang, J.; Zhang, P.; Li, C.; Codella, N.; Li, L.H.; Zhou, L.; Dai, X.; Yuan, L.; Li, Y.; et al. Regionclip: Region-based language-image pretraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16793–16803. [Google Scholar]

- Kerr, J.; Kim, C.M.; Goldberg, K.; Kanazawa, A.; Tancik, M. LERF: Language Embedded Radiance Fields. In Proceedings of the International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

- Gu, X.; Lin, T.Y.; Kuo, W.; Cui, Y. Open-vocabulary Object Detection via Vision and Language Knowledge Distillation. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 25–29 April 2022.

- Ding, J.; Xue, N.; Xia, G.S.; Dai, D. Decoupling Zero-Shot Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Lüddecke, T.; Ecker, A. Image Segmentation Using Text and Image Prompts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7086–7096.

- Chen, Z.; Duan, Y.; Wang, W.; He, J.; Lu, T.; Dai, J.; Qiao, Y. Vision Transformer Adapter for Dense Predictions. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005.

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 4 May 2021.

- Salimans, T.; Karpathy, A.; Chen, X.; Kingma, D.P. PixelCNN++: Improving the PixelCNN with Discretized Logistic Mixture Likelihood and Other Modifications. arXiv 2017, arXiv:1701.05517.

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv 2022, arXiv:2204.06125.

- Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.; Ghasemipour, K.; Lopes, R.G.; Ayan, B.K.; Salimans, T.; et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–9 December 2022.

- Lotter, W.; Kreiman, G.; Cox, D. Deep Predictive Coding Networks for Video Prediction and Unsupervised Learning. In Proceedings of the ICML, Sydney, Australia, 6–11 August 2017.

- Marino, J. Predictive Coding, Variational Autoencoders, and Biological Connections. Neural Comput. 2022, 34, 1–44.

- Ranganath, R.; Tran, D.; Blei, D. Hierarchical Variational Models. In Proceedings of the ICML, New York, NY, USA, 19–24 June 2016.

- Vahdat, A.; Kautz, J. NVAE: A Deep Hierarchical Variational Autoencoder. In Proceedings of the NeurIPS, Virtual, 6–12 December 2020.

- Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5580–5590.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Liao, Y.; Xie, J.; Geiger, A. KITTI-360: A Novel Dataset and Benchmarks for Urban Scene Understanding in 2D and 3D. arXiv 2021, arXiv:2109.06074.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing (EMNLP), Hong Kong, China, 7 November 2019.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Jatavallabhula, K.M.; Iyer, G.; Paull, L. ∇SLAM: Dense SLAM meets Automatic Differentiation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–3 August 2020; pp. 2130–2137.

- Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In Defense of Point-to-Point ICP Simple, Accurate, and Robust Registration If Done the Right Way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

| Class | Region | IoU, 1 sample | IoU, 2 samples | IoU, 4 samples | IoU, 8 samples | IoU, 16 samples | IoU, 32 samples |
|---|---|---|---|---|---|---|---|
| road | All | 92.75 | 93.36 | 93.61 | 93.89 | 94.20 | 94.33 |
| | Unobs. | 84.07 | 85.70 | 86.10 | 86.69 | 87.54 | 87.74 |
| road marking | All | 21.02 | 21.21 | 21.95 | 22.31 | 22.91 | 22.99 |
| | Unobs. | 12.85 | 13.77 | 14.24 | 14.84 | 15.47 | 16.00 |
| side walk | All | 51.39 | 53.45 | 56.49 | 57.38 | 59.57 | 60.49 |
| | Unobs. | 41.50 | 45.51 | 48.72 | 50.33 | 52.07 | 52.53 |
| vegetation | All | 34.91 | 37.25 | 40.54 | 41.67 | 43.42 | 44.36 |
| | Unobs. | 28.11 | 31.97 | 35.08 | 36.27 | 37.96 | 40.02 |
| static | All | 97.61 | 97.61 | 97.85 | 98.08 | 98.12 | 98.23 |
| | Unobs. | 97.73 | 97.88 | 98.15 | 98.22 | 98.35 | 98.40 |
| drivable | All | 93.10 | 93.69 | 93.94 | 94.25 | 94.63 | 94.71 |
| | Unobs. | 84.89 | 86.60 | 87.00 | 87.55 | 88.52 | 88.72 |
| mIoU | All | 65.13 | 66.10 | 67.40 | 67.93 | 68.81 | 69.19 |
| | Unobs. | 58.19 | 60.24 | 61.55 | 62.32 | 63.32 | 63.90 |
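The mIoU rows appear to be the unweighted mean of the per-class IoU rows above them: averaging the six "All" values at 32 samples gives about 69.19, the figure quoted in the introduction, and the six "Unobs." values give about 63.90. The short Python check below reproduces this arithmetic using values copied from the table; the names `IOU_ALL_32`, `IOU_UNOBS_32`, and `mean_iou` are illustrative only and are not taken from the authors' code.

```python
# Per-class IoU at 32 samples, copied from the table above.
IOU_ALL_32 = {
    "road": 94.33,
    "road marking": 22.99,
    "side walk": 60.49,
    "vegetation": 44.36,
    "static": 98.23,
    "drivable": 94.71,
}
IOU_UNOBS_32 = {
    "road": 87.74,
    "road marking": 16.00,
    "side walk": 52.53,
    "vegetation": 40.02,
    "static": 98.40,
    "drivable": 88.72,
}


def mean_iou(per_class: dict[str, float]) -> float:
    """Unweighted (macro) mean over classes: every class counts equally."""
    return sum(per_class.values()) / len(per_class)


if __name__ == "__main__":
    print(f"mIoU (All):    {mean_iou(IOU_ALL_32):.2f}")
    print(f"mIoU (Unobs.): {mean_iou(IOU_UNOBS_32):.2f}")
```

Because the mean is unweighted, a thin and hard class such as road marking lowers the mIoU as much as a large, easy class such as static raises it, which is why the mIoU sits well below the IoU of the dominant classes.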

| Class | Region | IoU, 1 sample | IoU, 2 samples | IoU, 4 samples | IoU, 8 samples | IoU, 16 samples | IoU, 32 samples |
|---|---|---|---|---|---|---|---|
| road | All | 98.01 | 98.15 | 98.20 | 98.29 | 98.31 | 98.34 |
| | Unobs. | 95.93 | 96.68 | 96.96 | 97.14 | 97.28 | 97.44 |
| road marking | All | 9.90 | 11.15 | 11.19 | 12.02 | 12.19 | 13.20 |
| | Unobs. | 9.51 | 10.61 | 10.67 | 11.53 | 12.09 | 12.49 |
| vegetation | All | 38.29 | 38.64 | 39.22 | 40.01 | 40.23 | 40.45 |
| | Unobs. | 43.11 | 43.68 | 44.27 | 44.83 | 45.37 | 45.33 |
| static | All | 98.54 | 98.73 | 98.79 | 98.83 | 98.88 | 98.90 |
| | Unobs. | 95.10 | 96.40 | 96.64 | 97.10 | 97.36 | 97.49 |
| drivable | All | 98.02 | 98.15 | 98.21 | 98.29 | 98.31 | 98.34 |
| | Unobs. | 95.91 | 96.62 | 96.92 | 97.07 | 97.23 | 97.41 |
| mIoU | All | 68.55 | 68.96 | 69.12 | 69.49 | 69.58 | 69.85 |
| | Unobs. | 67.91 | 68.80 | 69.09 | 69.53 | 69.87 | 70.03 |

| Class | Region | IoU, 1 sample | IoU, 2 samples | IoU, 4 samples | IoU, 8 samples | IoU, 16 samples | IoU, 32 samples |
|---|---|---|---|---|---|---|---|
| road | All | 97.16 | 97.20 | 97.30 | 97.35 | 97.38 | 97.42 |
| | Unobs. | 95.00 | 95.27 | 95.45 | 95.63 | 95.79 | 95.89 |
| road marking | All | 34.14 | 34.33 | 34.62 | 34.83 | 35.04 | 35.24 |
| | Unobs. | 26.79 | 27.06 | 27.57 | 28.13 | 28.37 | 28.53 |
| side walk | All | 58.23 | 58.36 | 58.56 | 58.93 | 59.10 | 59.11 |
| | Unobs. | 55.03 | 56.17 | 56.20 | 57.12 | 57.54 | 57.69 |
| vegetation | All | 75.44 | 76.06 | 76.71 | 76.98 | 77.21 | 77.57 |
| | Unobs. | 66.33 | 68.01 | 68.67 | 70.03 | 71.29 | 71.89 |
| static | All | 98.79 | 98.81 | 98.81 | 98.82 | 98.83 | 98.84 |
| | Unobs. | 98.47 | 98.56 | 98.56 | 98.59 | 98.61 | 98.62 |
| drivable | All | 97.27 | 97.32 | 97.41 | 97.47 | 97.51 | 97.55 |
| | Unobs. | 95.47 | 95.83 | 96.09 | 96.25 | 96.27 | 96.39 |
| mIoU | All | 76.84 | 77.01 | 77.24 | 77.40 | 77.51 | 77.62 |
| | Unobs. | 72.85 | 73.48 | 73.76 | 74.29 | 74.65 | 74.84 |
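Each table reports IoU twice per class: over all grid cells ("All") and over unobserved cells only ("Unobs."). As a sketch, assuming that "Unobs." means the evaluation is restricted to bird's-eye-view cells not covered by the accumulated sensor observations (the exact protocol is the one defined in the main text), a region-restricted IoU for one class can be computed from boolean masks as below. The function `masked_iou` and the 4 × 4 toy masks are hypothetical and serve only to illustrate the idea.

```python
import numpy as np


def masked_iou(pred: np.ndarray, target: np.ndarray, region: np.ndarray) -> float:
    """IoU between boolean class masks `pred` and `target`, counted only where `region` is True.

    Returns NaN if the class is absent from both masks inside the region.
    """
    pred = pred & region
    target = target & region
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return float("nan")
    inter = np.logical_and(pred, target).sum()
    return float(inter) / float(union)


# Hypothetical 4x4 BEV grid for a single class.
target = np.zeros((4, 4), dtype=bool)
target[:, :2] = True                 # ground truth: left two columns
pred = target.copy()
pred[2:, 2] = True                   # prediction over-extends, but only in the bottom half
observed = np.zeros((4, 4), dtype=bool)
observed[:2, :] = True               # assumption: top half was seen by the sensors

iou_all = masked_iou(pred, target, np.ones_like(observed))  # every cell counts
iou_unobs = masked_iou(pred, target, ~observed)             # unobserved cells only
```

In this toy case the error lies entirely in the unobserved half, so `iou_all` is 8/10 = 0.8 while `iou_unobs` drops to 4/6 ≈ 0.67, mirroring the pattern in the tables where most classes score lower on unobserved cells.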

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Karlsson, R.; Asfandiyarov, R.; Carballo, A.; Fujii, K.; Ohtani, K.; Takeda, K. Open-Vocabulary Predictive World Models from Sensor Observations. Sensors 2024, 24, 4735. https://doi.org/10.3390/s24144735
