Security in Transformer Visual Trackers: A Case Study on the Adversarial Robustness of Two Models

Abstract

1. Introduction

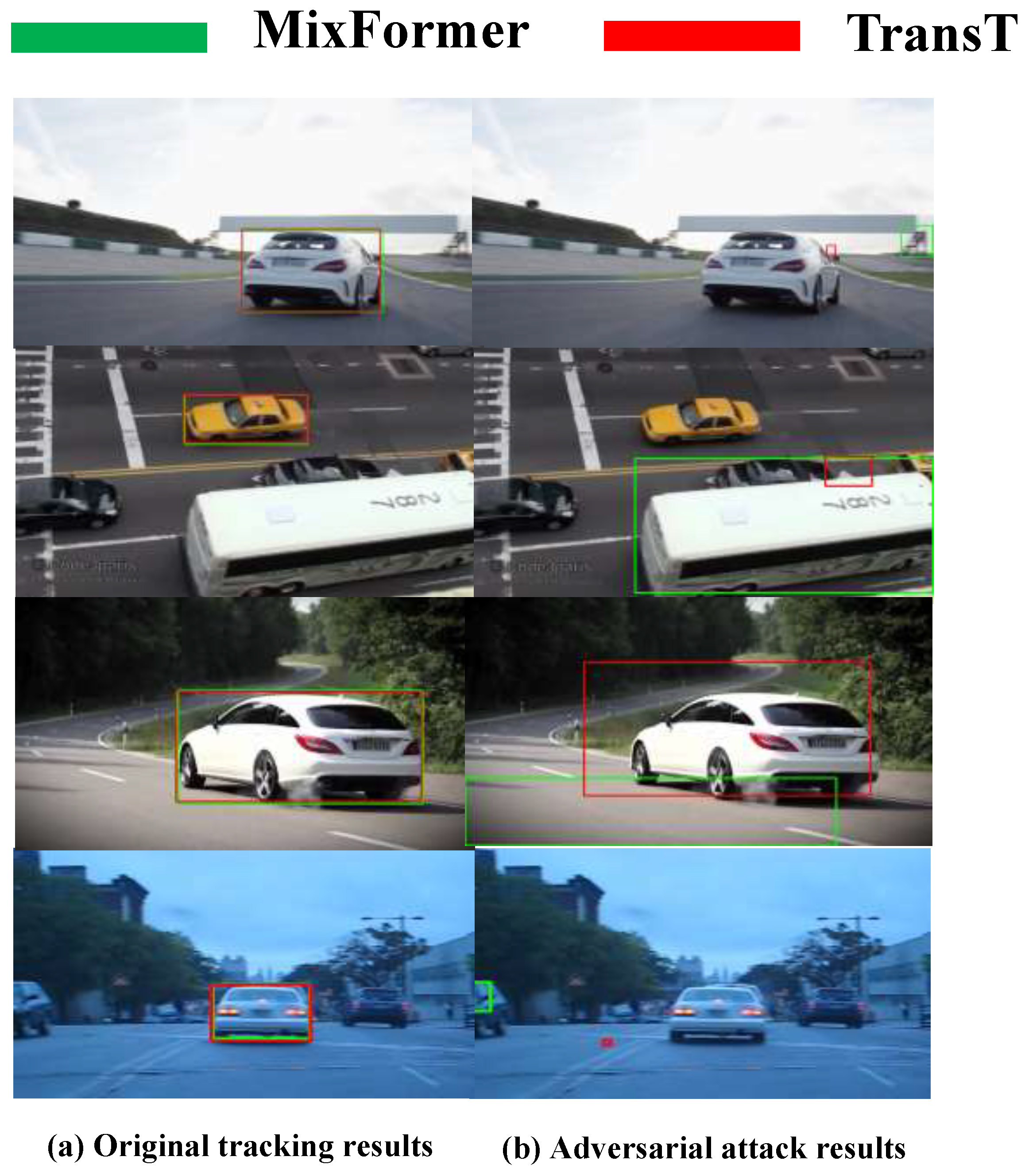

- Investigation and analysis: Adversarial attacks against visual tracking were investigated to analyze the tracking principle of transformer-based trackers, together with their advantages and weaknesses, and the influence of these attacks on tracking was studied. The findings are intended to guide the design of robust and secure deep-learning-based trackers.

- Implementation and verification: Three adversarial attacks were implemented against transformer-based visual trackers, and their effectiveness was verified on three data sets.
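As a rough illustration of how attack effectiveness can be checked on a tracking sequence, the sketch below computes the overlap (IoU) between predicted and ground-truth boxes and the fraction of frames whose overlap exceeds a threshold. This is a minimal sketch of a common overlap-based measure, not the evaluation code used in this study; the `(x, y, width, height)` box format, the 0.5 threshold, and the names `iou` and `success_rate` are assumptions made for the example.

```python
from typing import Sequence, Tuple

# Assumed box format for this sketch: (x, y, width, height).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the intersection rectangle (zero if disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(preds: Sequence[Box], gts: Sequence[Box],
                 threshold: float = 0.5) -> float:
    """Fraction of frames whose predicted box overlaps the ground truth
    by more than `threshold` (hypothetical helper for illustration)."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must have the same length")
    if not preds:
        return 0.0
    hits = sum(1 for p, g in zip(preds, gts) if iou(p, g) > threshold)
    return hits / len(preds)


if __name__ == "__main__":
    ground_truth = [(0, 0, 10, 10), (0, 0, 10, 10)]
    clean_preds = [(0, 0, 10, 10), (1, 0, 10, 10)]      # tracker on clean frames
    attacked_preds = [(0, 0, 10, 10), (8, 0, 10, 10)]   # tracker drifts under attack
    print(success_rate(clean_preds, ground_truth))
    print(success_rate(attacked_preds, ground_truth))
```

Comparing the value on clean frames with the value on perturbed frames gives a simple per-sequence indication of how much an attack degrades tracking.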

2. Adversarial Attacks on Transformer-Based Visual Tracking

2.1. Transformer Architecture

2.2. Transformer Tracking

2.3. Adversarial Attacks on Transformer Tracking

2.4. Defense Methods

3. Generating Adversarial Examples

3.1. Attack Principles

3.2. Advantages and Weaknesses of Attacks

3.3. Transformer Tracking Principles

3.4. Investigation Experiments and Analyses

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Tracker | Success | | | | Precision | | | |
|---|---|---|---|---|---|---|---|---|
| | Original | Attack_CSA | Attack_IoU | Attack_RTAA | Original | Attack_CSA | Attack_IoU | Attack_RTAA |
| MixFormer | 0.696 | 0.640 | 0.555 | 0.047 | 0.908 | 0.839 | 0.741 | 0.050 |
| TransT | 0.690 | 0.661 | 0.625 | 0.018 | 0.888 | 0.859 | 0.847 | 0.038 |
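To make the Success and Precision figures above easier to compare across attacks, the following sketch computes the relative drop of each attacked score with respect to the original score. The numbers are copied from the table; the relative-drop formula and the names `relative_drop` and `drops_for` are illustrative assumptions, not a metric defined in this study.

```python
# Success and Precision values copied from the table above.
RESULTS = {
    "MixFormer": {
        "Success": {"Original": 0.696, "Attack_CSA": 0.640,
                    "Attack_IoU": 0.555, "Attack_RTAA": 0.047},
        "Precision": {"Original": 0.908, "Attack_CSA": 0.839,
                      "Attack_IoU": 0.741, "Attack_RTAA": 0.050},
    },
    "TransT": {
        "Success": {"Original": 0.690, "Attack_CSA": 0.661,
                    "Attack_IoU": 0.625, "Attack_RTAA": 0.018},
        "Precision": {"Original": 0.888, "Attack_CSA": 0.859,
                      "Attack_IoU": 0.847, "Attack_RTAA": 0.038},
    },
}


def relative_drop(original: float, attacked: float) -> float:
    """Fraction of the original score lost under attack (assumed measure)."""
    return (original - attacked) / original


def drops_for(tracker: str, metric: str) -> dict:
    """Relative drop per attack for one tracker and one metric."""
    scores = RESULTS[tracker][metric]
    original = scores["Original"]
    return {name: relative_drop(original, value)
            for name, value in scores.items() if name != "Original"}


if __name__ == "__main__":
    for tracker in RESULTS:
        for metric in ("Success", "Precision"):
            for attack, drop in drops_for(tracker, metric).items():
                print(f"{tracker:10s} {metric:9s} {attack:12s} {drop:6.1%}")
```

Under this measure, Attack_RTAA removes more than 90% of the original Success score for both trackers, while Attack_CSA removes less than 10%.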

| Tracker | Accuracy | | | | Robustness | | | |
|---|---|---|---|---|---|---|---|---|
| | Original | Attack_CSA | Attack_IoU | Attack_RTAA | Original | Attack_CSA | Attack_IoU | Attack_RTAA |
| MixFormer | 0.614 | 0.625 | 0.599 | 0.198 | 0.698 | 0.819 | 1.288 | 10.339 |
| TransT | 0.595 | 0.592 | 0.578 | 0.111 | 0.337 | 0.323 | 0.899 | 5.984 |

| Tracker | Failures | | | | EAO | | | |
|---|---|---|---|---|---|---|---|---|
| | Original | Attack_CSA | Attack_IoU | Attack_RTAA | Original | Attack_CSA | Attack_IoU | Attack_RTAA |
| MixFormer | 149 | 175 | 275 | 2208 | 0.180 | 0.162 | 0.110 | 0.007 |
| TransT | 72 | 69 | 192 | 1278 | 0.302 | 0.304 | 0.160 | 0.014 |

| Tracker | AO (%) | | | | (%) | | | |
|---|---|---|---|---|---|---|---|---|
| | Original | Attack_CSA | Attack_IoU | Attack_RTAA | Original | Attack_CSA | Attack_IoU | Attack_RTAA |
| MixFormer | 0.716 | 0.680 | 0.554 | 0.048 | 0.815 | 0.768 | 0.629 | 0.037 |
| TransT | 0.720 | 0.702 | 0.529 | 0.046 | 0.821 | 0.798 | 0.609 | 0.051 |

| Tracker | (%) | | | |
|---|---|---|---|---|
| | Original | Attack_CSA | Attack_IoU | Attack_RTAA |
| MixFormer | 0.687 | 0.633 | 0.428 | 0.013 |
| TransT | 0.680 | 0.661 | 0.433 | 0.021 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ye, P.; Chen, Y.; Ma, S.; Xue, F.; Crespi, N.; Chen, X.; Fang, X. Security in Transformer Visual Trackers: A Case Study on the Adversarial Robustness of Two Models. Sensors 2024, 24, 4761. https://doi.org/10.3390/s24144761