Flight Attitude Estimation with Radar for Remote Sensing Applications

Abstract

1. Introduction

2. Materials and Methods

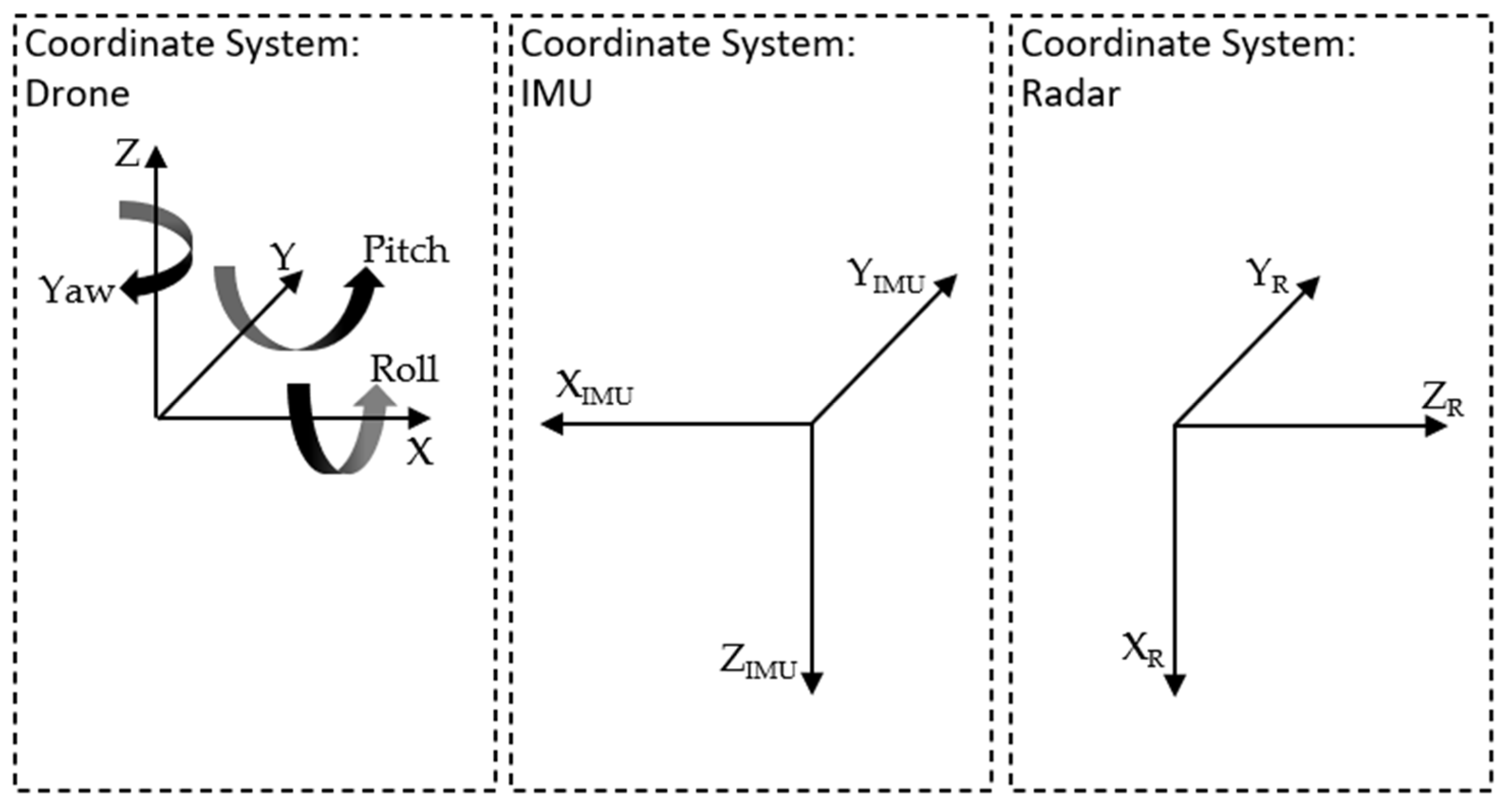

2.1. Drone and Radar Sensor

- XR: The distance measured from the radar sensor straight ahead;

- YR: The lateral offset in the azimuth direction (left and right);

- ZR: The vertical offset in the elevation direction (up and down).

- X = −XIMU = ZR: Area in front of and behind the drone;

- Y = YIMU = YR: Area to the left and right sides of the drone;

- Z = −ZIMU = −XR: Flight height above ground level (AGL), i.e., the distance to the ground.
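
As an illustration of the axis mapping above, the following Python sketch converts a radar detection and an IMU vector into the drone frame. It only encodes the sign relations listed (X = ZR = −XIMU, Y = YR = YIMU, Z = −XR = −ZIMU). The names `RadarDetection`, `radar_to_drone` and `imu_to_drone` are hypothetical, and reading XR as the distance towards the ground is an inference from Z = −XR being the flight height, not something stated explicitly here.

```python
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class RadarDetection(NamedTuple):
    """A single radar detection in the sensor frame, in metres (hypothetical type)."""
    xr: float  # distance straight ahead of the sensor (assumed: towards the ground)
    yr: float  # left/right offset (azimuth direction)
    zr: float  # up/down offset (elevation direction)


def radar_to_drone(d: RadarDetection) -> Vec3:
    """Apply X = ZR, Y = YR, Z = -XR."""
    return (d.zr, d.yr, -d.xr)


def imu_to_drone(x_imu: float, y_imu: float, z_imu: float) -> Vec3:
    """Apply X = -XIMU, Y = YIMU, Z = -ZIMU."""
    return (-x_imu, y_imu, -z_imu)


# Usage: a detection 30 m ahead of the sensor lies 30 m below the drone.
ground = radar_to_drone(RadarDetection(xr=30.0, yr=1.5, zr=-2.0))
print(ground)  # (-2.0, 1.5, -30.0)
```

Because both mappings are fixed axis permutations with sign flips, they can be applied to every detection or IMU sample independently before any further processing.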

2.2. Recordings

2.3. Calculation Process

- gRadar: Data from the radar sensor;

- gJet: Sensor data attached to the Nvidia Jetson Nano;

- gUav: Sensor data from the DJI Matrice M300 drone.
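
The list does not spell out how roll and pitch are obtained from gRadar. One common approach, shown here purely as a hypothetical sketch and not necessarily the authors' procedure, is to fit a plane z = a·x + b·y + c by least squares to ground detections already expressed in the drone frame and to read the tilt from its slopes. The sketch assumes flat, level ground and an IMU with the usual NED body axes and ZYX Euler angles; with the axis mapping of Section 2.1, the ground normal (−a, −b, 1) then gives pitch = atan2(a, √(1 + b²)) and roll = atan2(b, 1). These sign conventions should be checked against the actual IMU before angles are compared. `fit_ground_plane` and `tilt_from_plane` are illustrative names.

```python
import math
from typing import Sequence, Tuple


def fit_ground_plane(points: Sequence[Tuple[float, float, float]]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c to drone-frame ground detections."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three detections")
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    mz = sum(p[2] for p in points) / n
    # Centred sums of the normal equations; centring removes c from the 2x2 system.
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    sxz = sum((p[0] - mx) * (p[2] - mz) for p in points)
    syz = sum((p[1] - my) * (p[2] - mz) for p in points)
    det = sxx * syy - sxy ** 2
    if abs(det) < 1e-12:
        raise ValueError("detections are collinear; the plane is undetermined")
    a = (sxz * syy - syz * sxy) / det
    b = (syz * sxx - sxz * sxy) / det
    c = mz - a * mx - b * my
    return a, b, c


def tilt_from_plane(a: float, b: float) -> Tuple[float, float]:
    """Return (roll, pitch) in degrees from the plane slopes (assumed sign convention)."""
    pitch = math.atan2(a, math.sqrt(1.0 + b * b))  # slope along X (front/back)
    roll = math.atan2(b, 1.0)                      # slope along Y (left/right)
    return math.degrees(roll), math.degrees(pitch)


# Usage: synthetic ground 30 m below the drone, tilted along X only.
points = [(x, y, 0.1 * x - 30.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0) for y in (-1.0, 0.0, 1.0)]
a, b, _ = fit_ground_plane(points)
print(tilt_from_plane(a, b))  # roll close to 0, pitch close to 5.71 degrees
```

A least-squares fit averages over all ground detections in a frame, but returns from buildings or vegetation would bias the slopes; a robust estimator such as RANSAC would be a natural alternative in cluttered scenes.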

3. Results

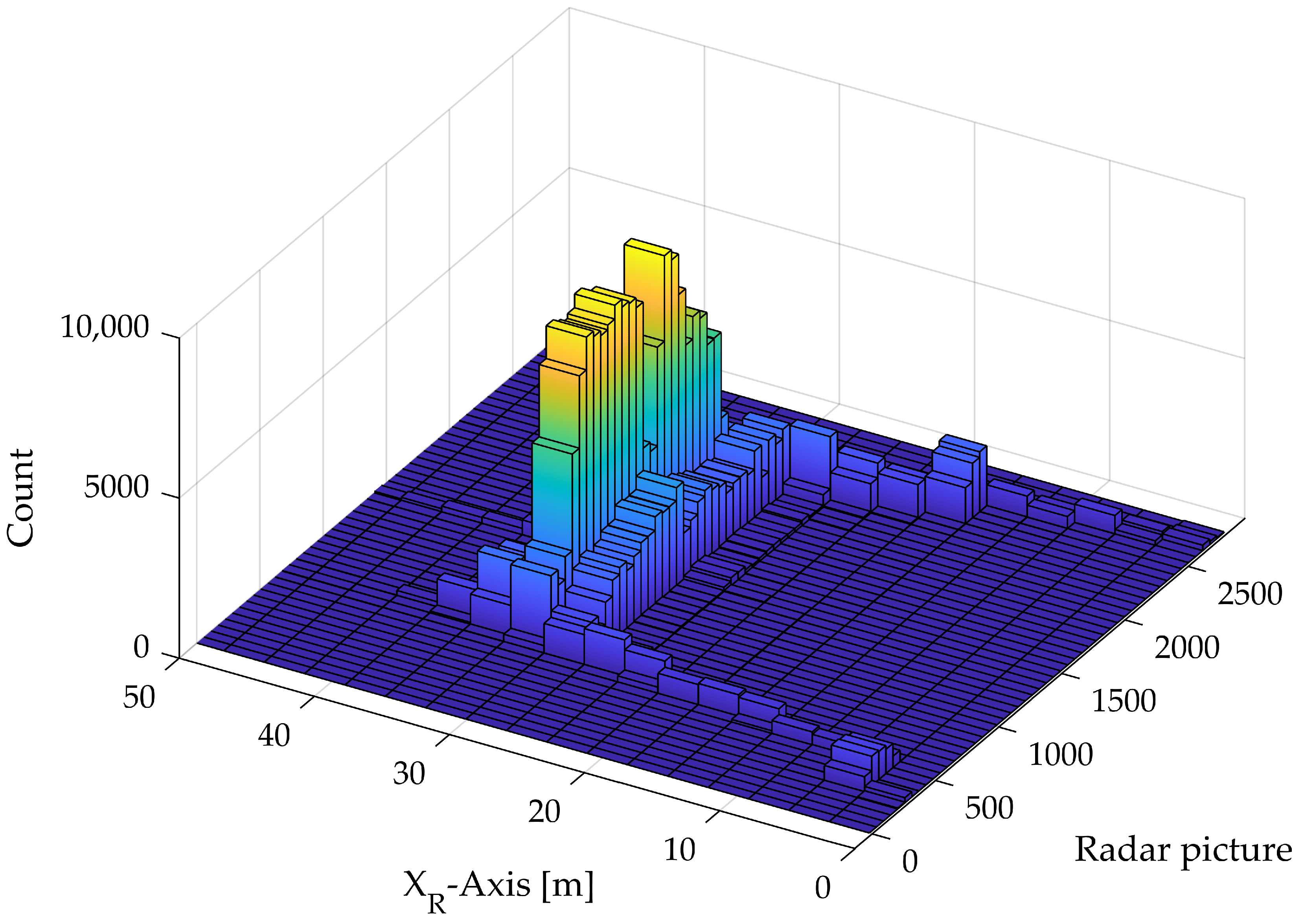

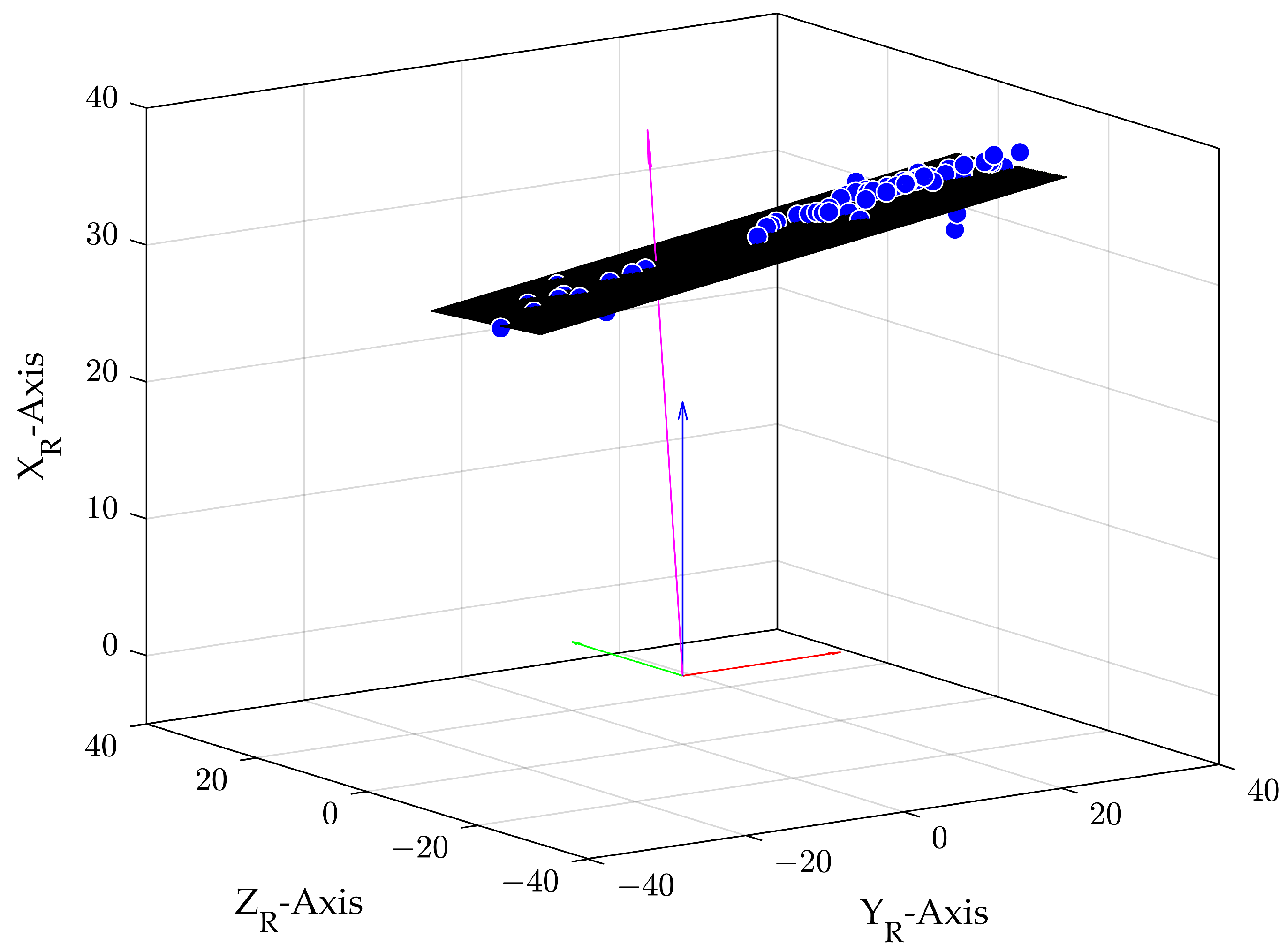

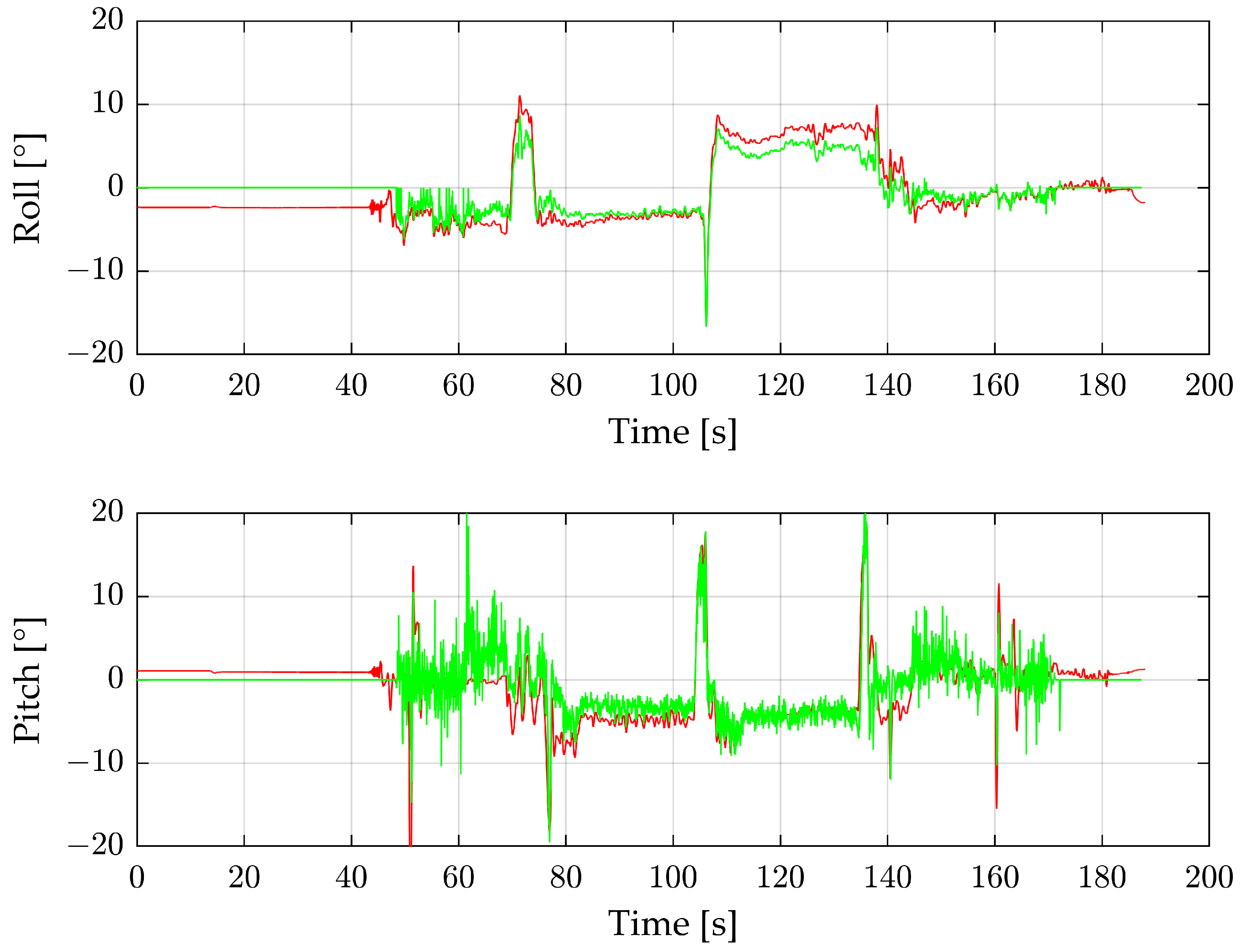

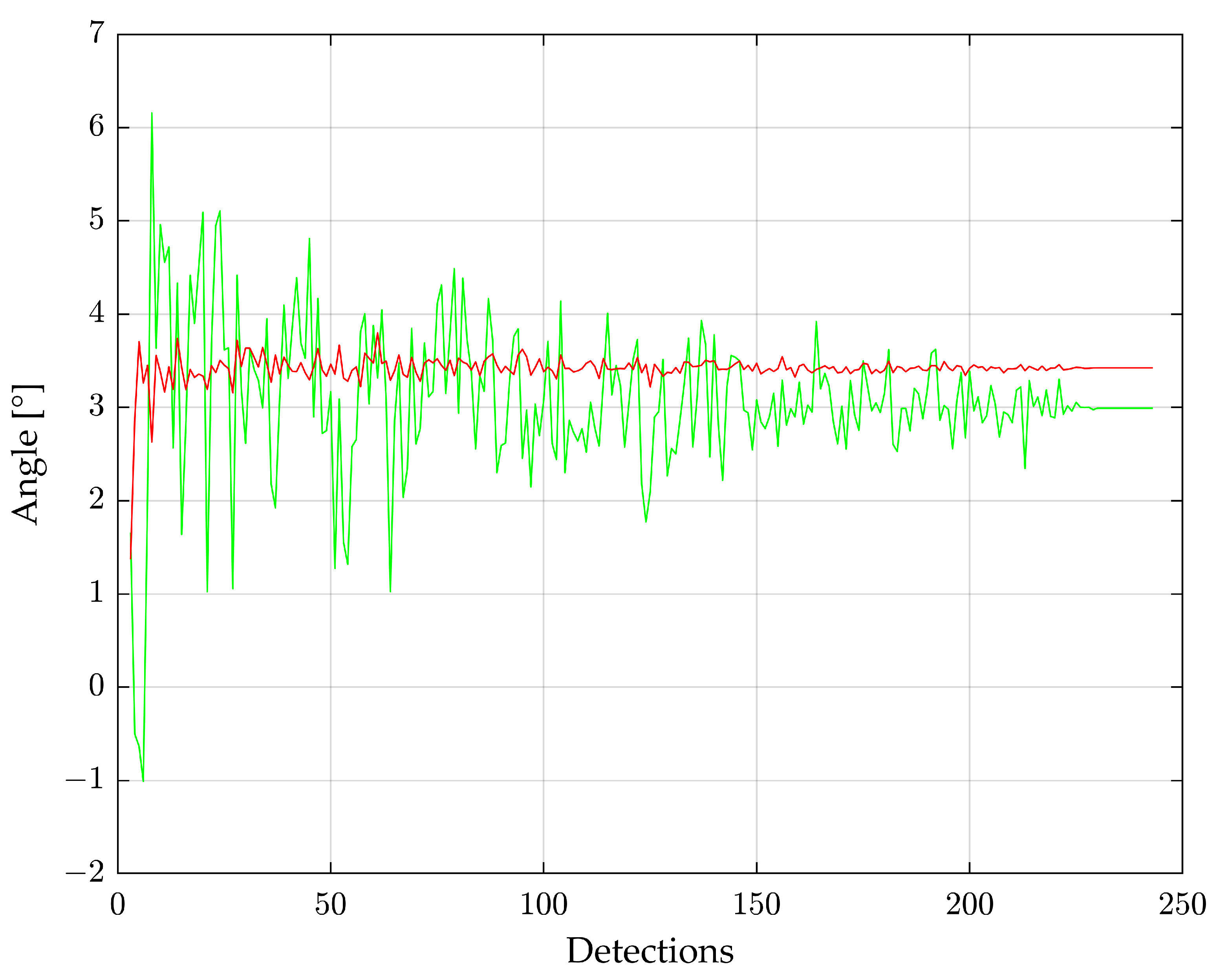

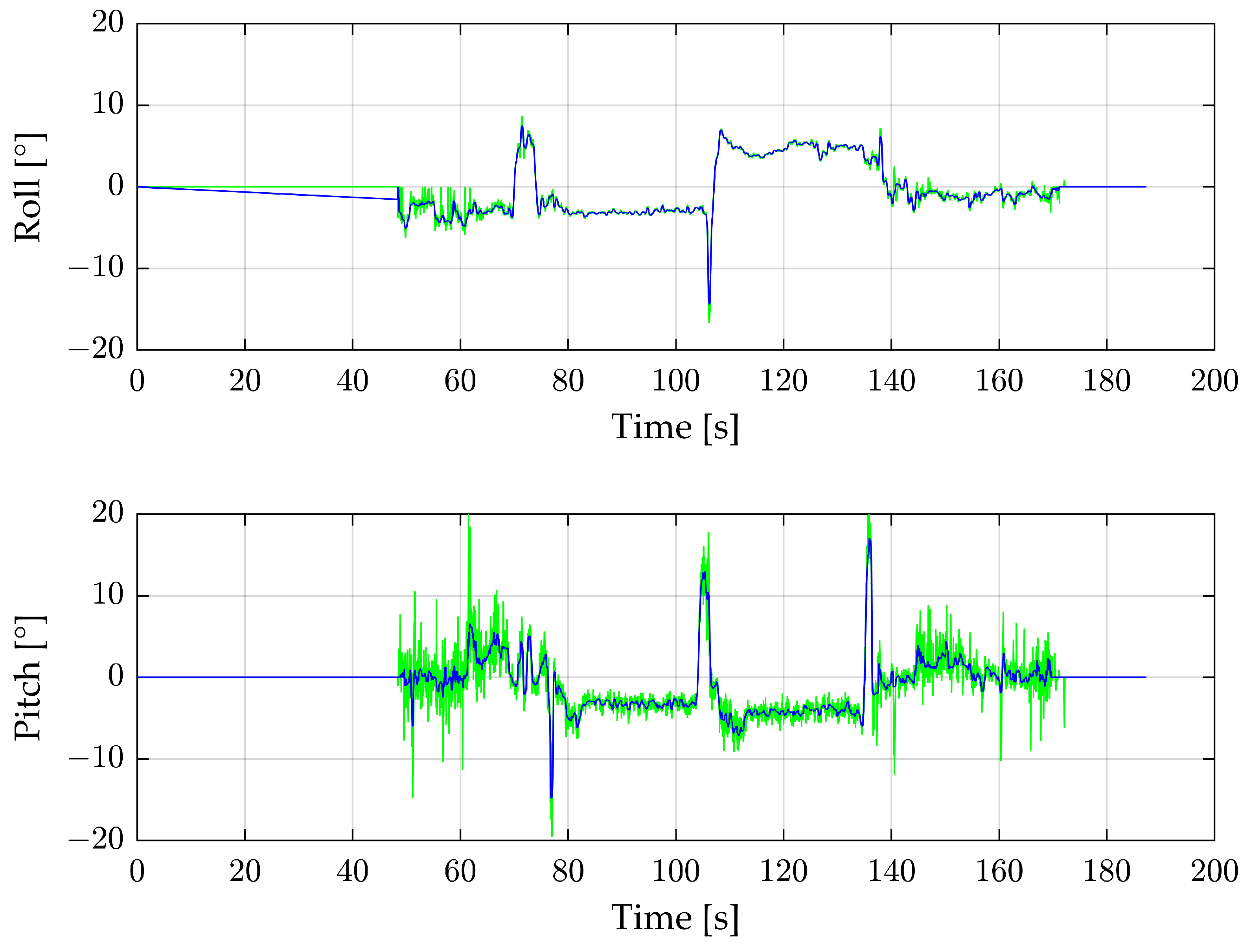

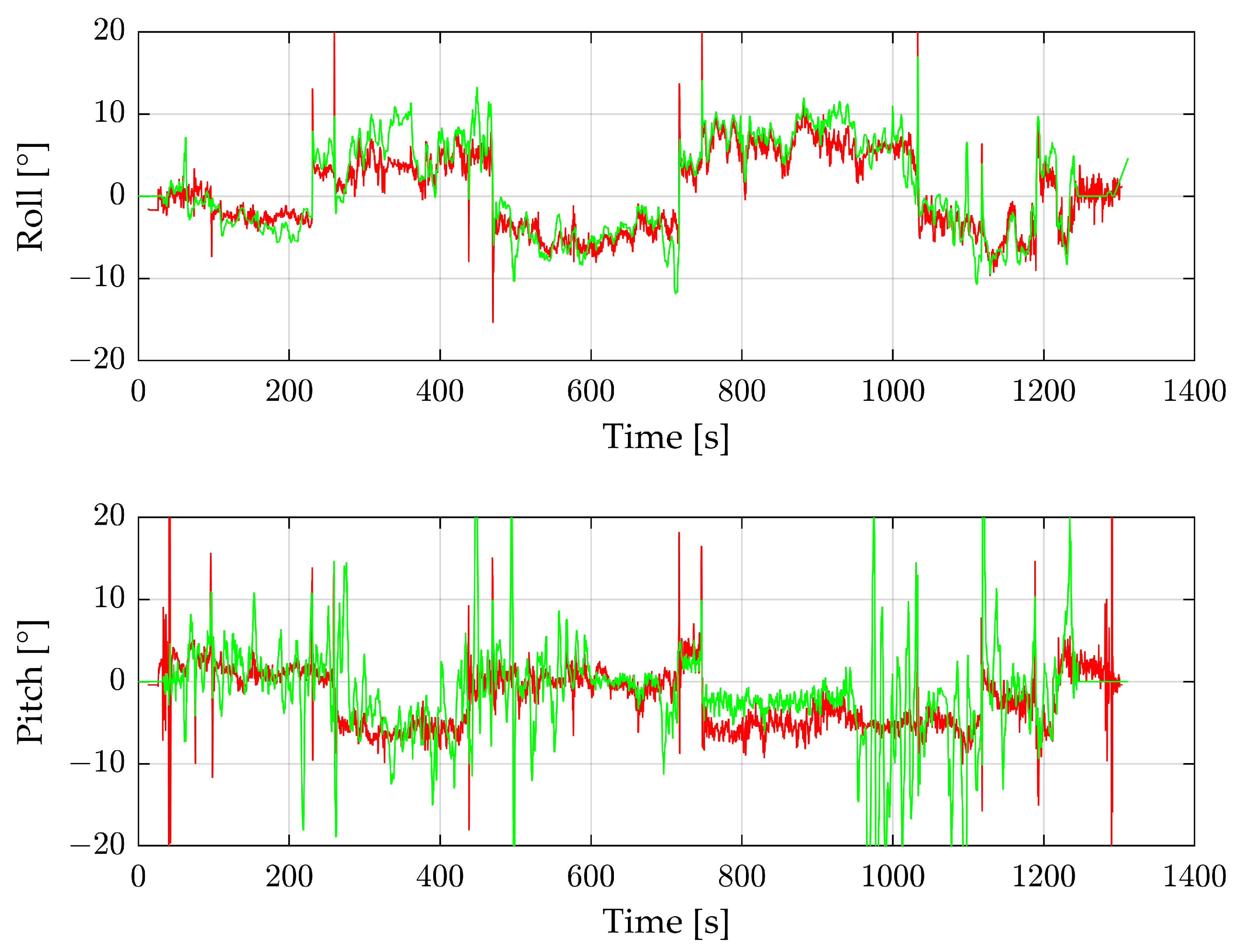

3.1. Open Field Flight

- IMU data vs. radar data;

- IMU data vs. filtered radar data;

- IMU data vs. filtered radar data, only for the main flight parts.
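
The three comparisons above are reported as root-mean-square errors (RMSE) between the IMU angles and the radar-derived angles. The Python sketch below shows that metric together with two stand-ins: the filter applied to the radar angles is not specified here, so a centred moving average serves as a placeholder, and the selection of the main flight parts is modelled as a hypothetical boolean mask. Both series are assumed to have been resampled onto common timestamps beforehand.

```python
import math
from typing import List, Optional, Sequence


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Centred moving average (placeholder filter); the window shrinks at the ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def rmse(reference: Sequence[float], estimate: Sequence[float],
         mask: Optional[Sequence[bool]] = None) -> float:
    """RMSE of estimate against reference, optionally restricted to masked samples."""
    if len(reference) != len(estimate):
        raise ValueError("series must have equal length")
    if mask is None:
        mask = [True] * len(reference)
    if len(mask) != len(reference):
        raise ValueError("mask must match the series length")
    errors = [e - r for r, e, keep in zip(reference, estimate, mask) if keep]
    if not errors:
        raise ValueError("no samples selected")
    return math.sqrt(sum(err * err for err in errors) / len(errors))


# Usage with made-up roll angles in degrees (illustrative only).
imu_roll = [0.0, 1.0, 2.0, 3.0, 2.0]
radar_roll = [0.5, 0.5, 2.5, 3.5, 1.0]
filtered = moving_average(radar_roll, 3)
main_part = [False, True, True, True, False]  # hypothetical: exclude take-off and landing
print(rmse(imu_roll, radar_roll), rmse(imu_roll, filtered), rmse(imu_roll, filtered, main_part))
```

Restricting the RMSE to a subset only changes which samples enter the mean, so the three columns of the result tables can be computed with one function.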

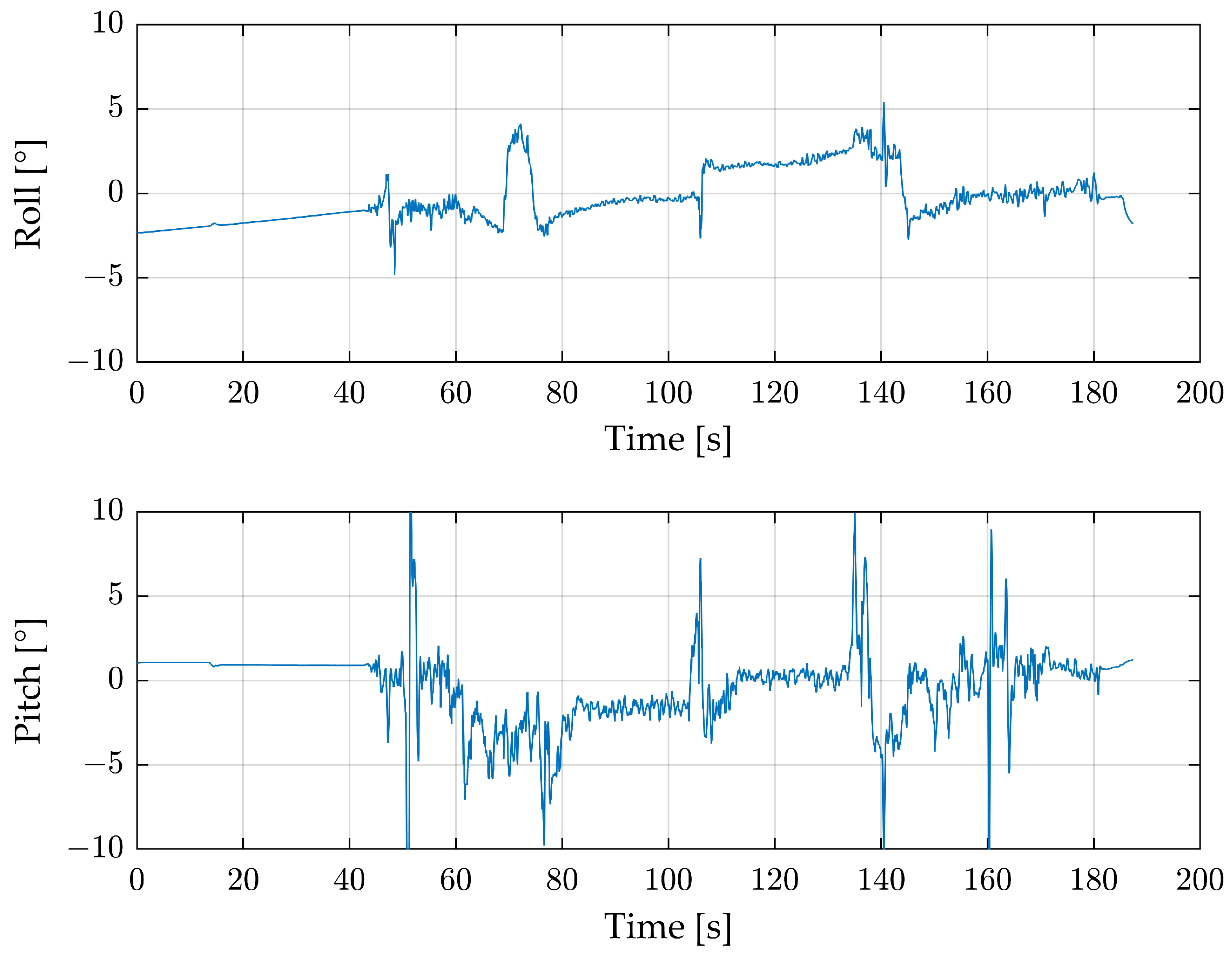

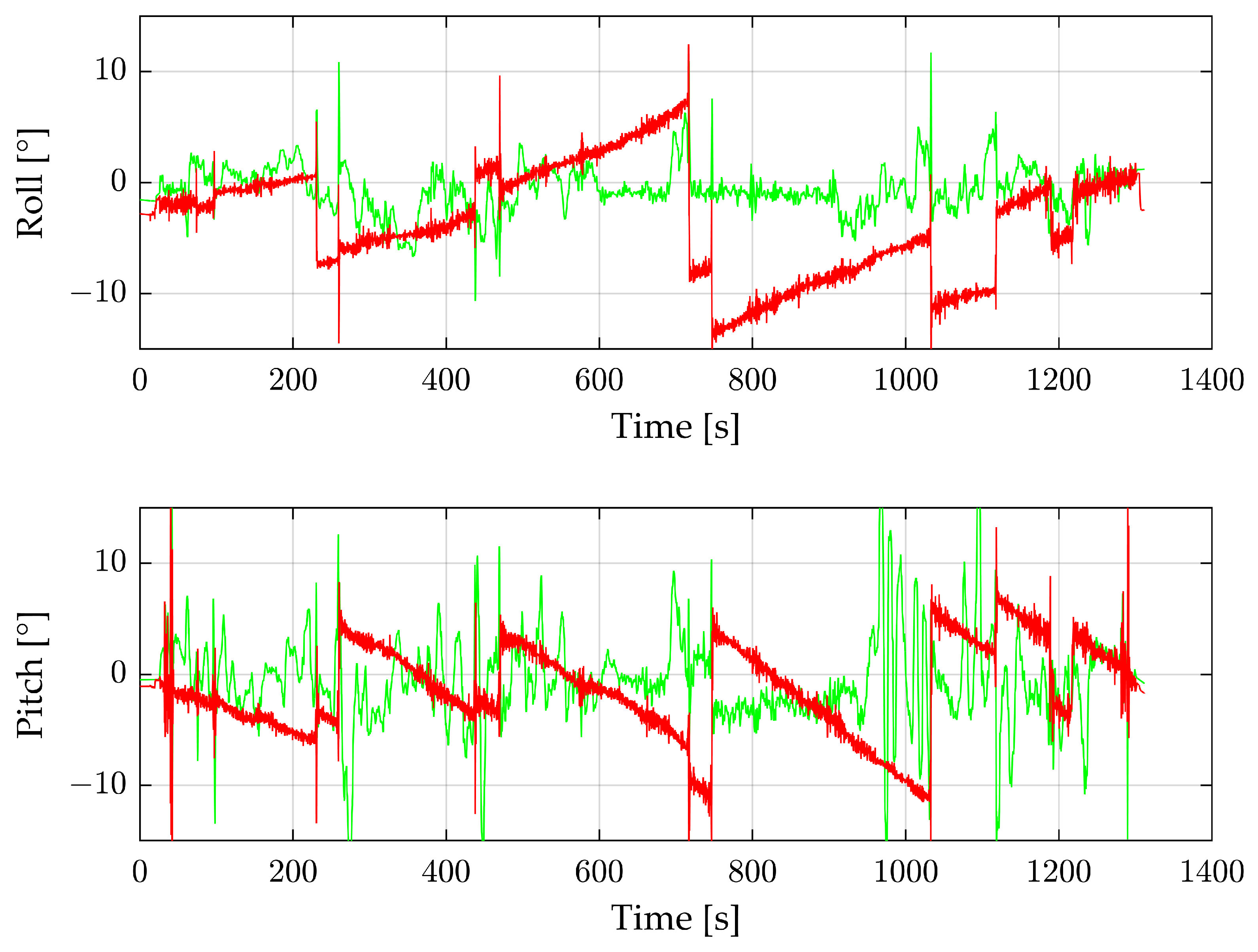

3.2. High-Voltage Pylon Flight

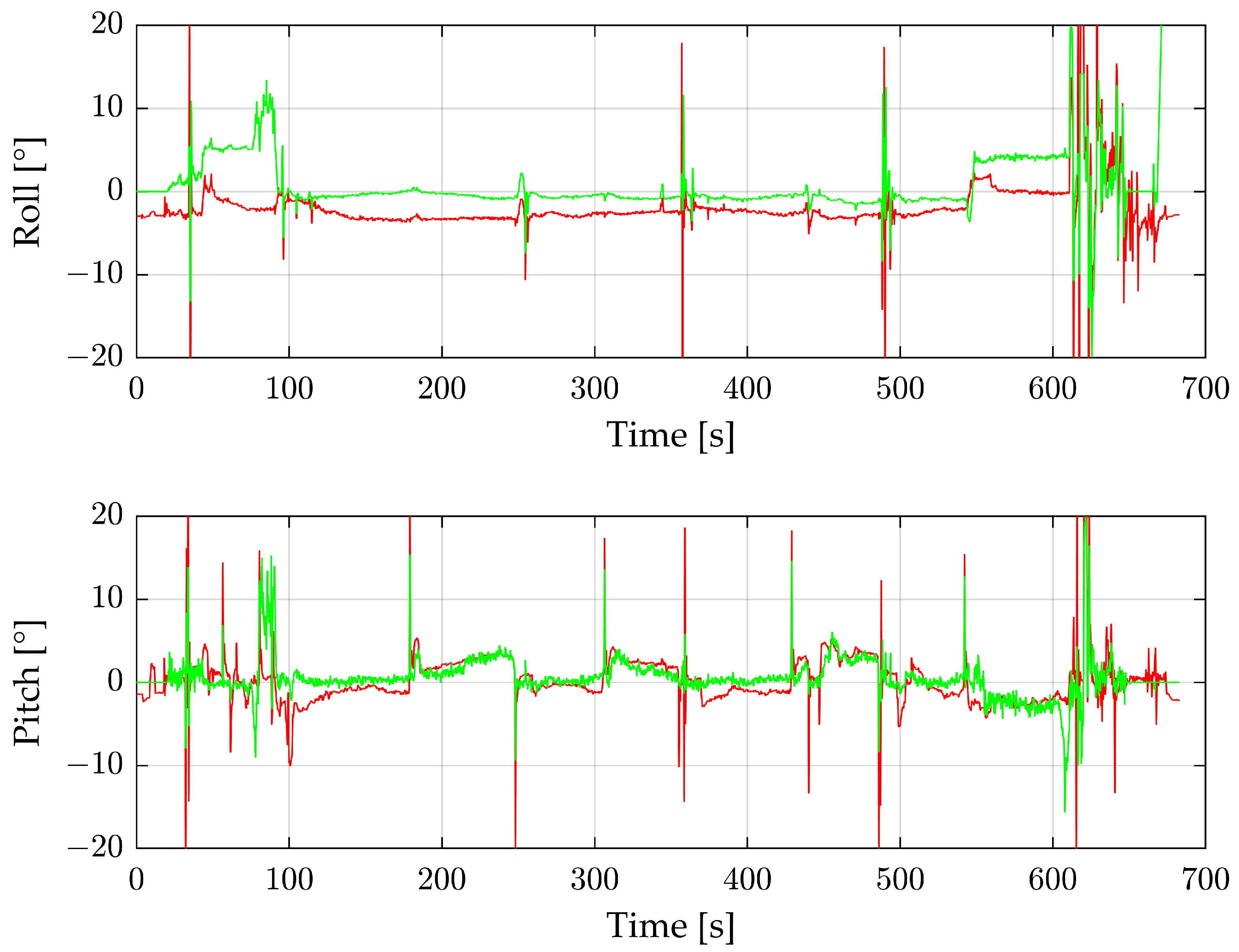

3.3. Industrial Plant Flight

4. Discussion and Further Challenges

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Data | Description | Unit |
|---|---|---|
| XR | Cartesian coordinate X | m |
| YR | Cartesian coordinate Y | m |
| ZR | Cartesian coordinate Z | m |
| Range | Spherical coordinate r | m |
| Azimuth | Spherical coordinate φ | rad |
| Elevation | Spherical coordinate θ | rad |
| Radar cross-section | Detection strength | dBm² |
| Range rate | Radial velocity of the detection | m/s |
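
The table lists each detection both in Cartesian (XR, YR, ZR) and spherical (range, azimuth, elevation) form. The Python sketch below shows how the two representations relate and how the radar cross-section converts from dBm² (decibels relative to 1 m²) to m². The angle convention used (azimuth measured from XR within the XR-YR plane, elevation measured from that plane towards ZR) is a common automotive radar convention assumed here; it is not confirmed for the radar used in this work. The function names are hypothetical.

```python
import math
from typing import Tuple


def spherical_to_cartesian(r: float, azimuth: float, elevation: float) -> Tuple[float, float, float]:
    """Convert range r (m), azimuth phi (rad) and elevation theta (rad) to (XR, YR, ZR) in m.

    Assumed convention: azimuth from the XR axis in the XR-YR plane,
    elevation from that plane towards ZR.
    """
    horizontal = r * math.cos(elevation)  # projection of the range onto the XR-YR plane
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            r * math.sin(elevation))


def rcs_dbsm_to_m2(rcs_dbsm: float) -> float:
    """Convert a radar cross-section from dBm^2 to m^2."""
    return 10.0 ** (rcs_dbsm / 10.0)


print(spherical_to_cartesian(10.0, 0.0, 0.0))  # (10.0, 0.0, 0.0)
print(rcs_dbsm_to_m2(10.0))                     # 10.0
```

Whatever the exact angle convention, the conversion must preserve the range, so the Euclidean norm of (XR, YR, ZR) equals r; that is a quick check when matching the formulas to a sensor's documentation.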

| Angle | 1. RMSE IMU vs. Radar | 2. RMSE IMU vs. Filtered Radar | 3. RMSE IMU vs. Filtered Radar, Main Flight Parts |
|---|---|---|---|
| Roll | 1.6° | 1.6° | 1.5° |
| Pitch | 2.5° | 2.4° | 1.4° |

| Angle | 1. RMSE IMU vs. Radar | 2. RMSE IMU vs. Filtered Radar | 3. RMSE IMU vs. Filtered Radar, Main Flight Parts |
|---|---|---|---|
| Roll | 3.3° | 3.7° | 2.5° |
| Pitch | 2.8° | 2.5° | 1.4° |

| Angle | 1. RMSE IMU vs. Radar | 2. RMSE IMU vs. Filtered Radar | 3. RMSE IMU vs. Filtered Radar, Main Flight Parts |
|---|---|---|---|
| Roll | 5.6° | 5.5° | 5.1° |
| Pitch | 8.7° | 8.0° | 7.8° |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weber, C.; Eggert, M.; Udelhoven, T. Flight Attitude Estimation with Radar for Remote Sensing Applications. Sensors 2024, 24, 4905. https://doi.org/10.3390/s24154905
