Virtual Inspection System for Pumping Stations with Multimodal Feedback

Abstract

1. Introduction

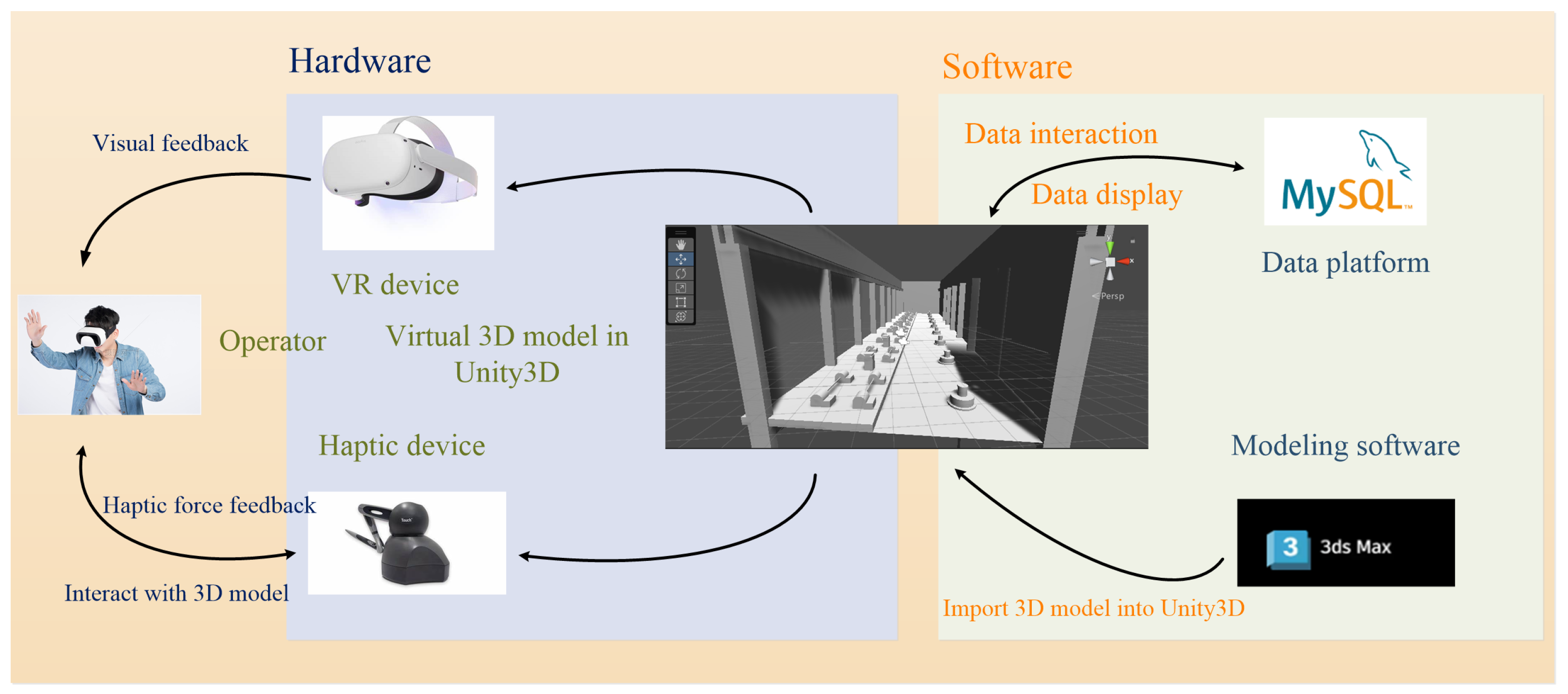

2. Methods and Materials

2.1. Visual Model

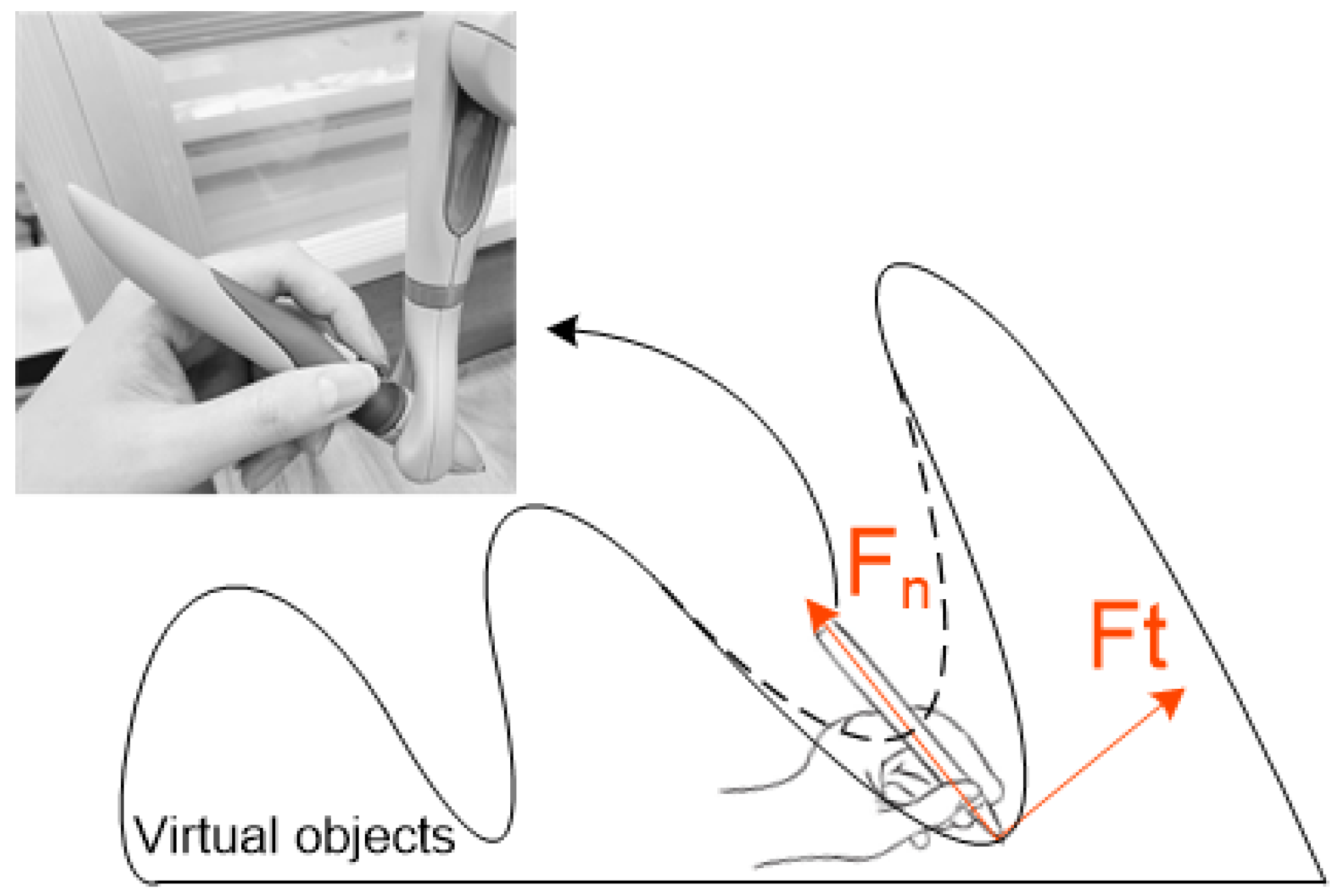

2.2. Haptic Model

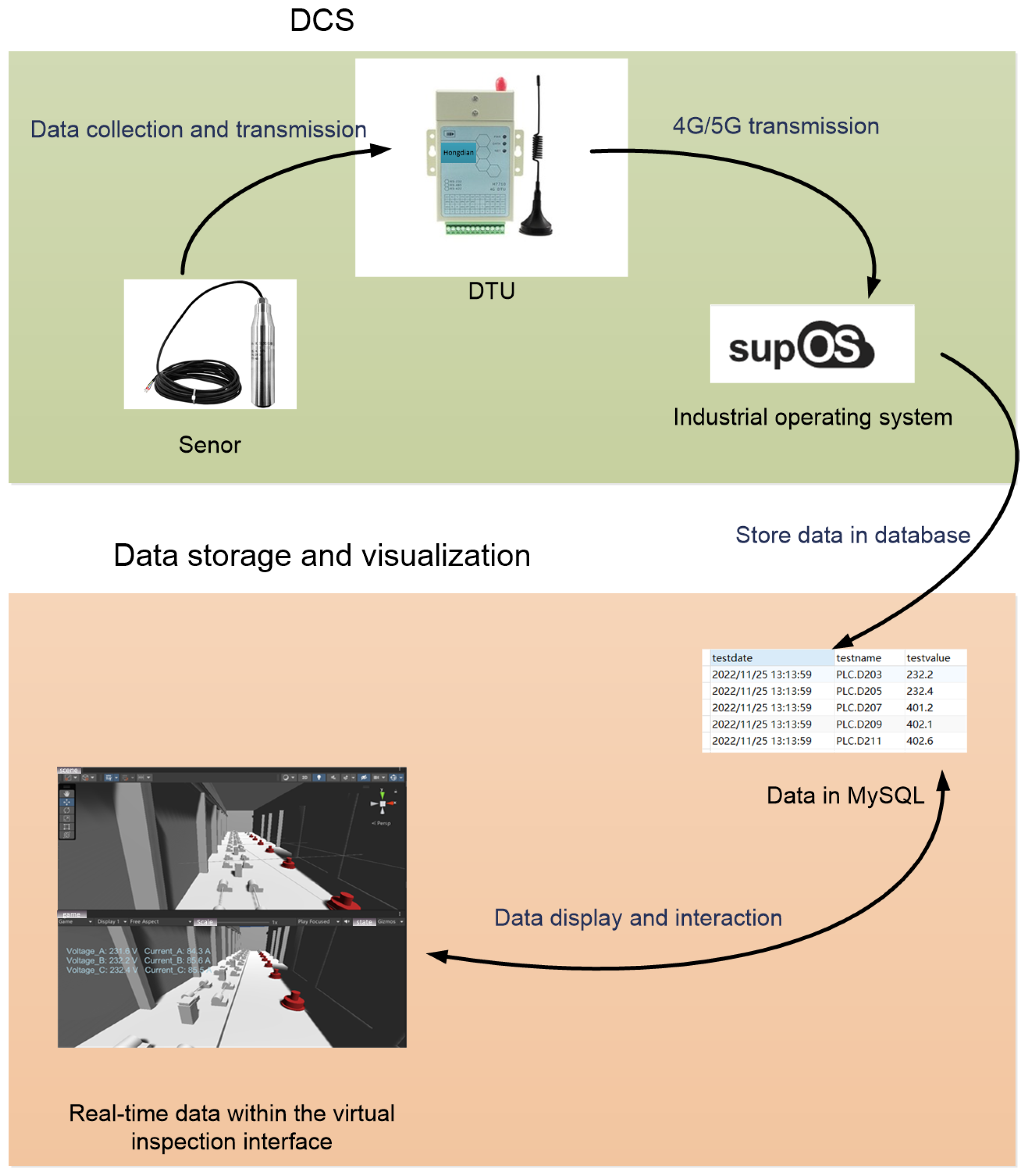

2.3. Data Platform

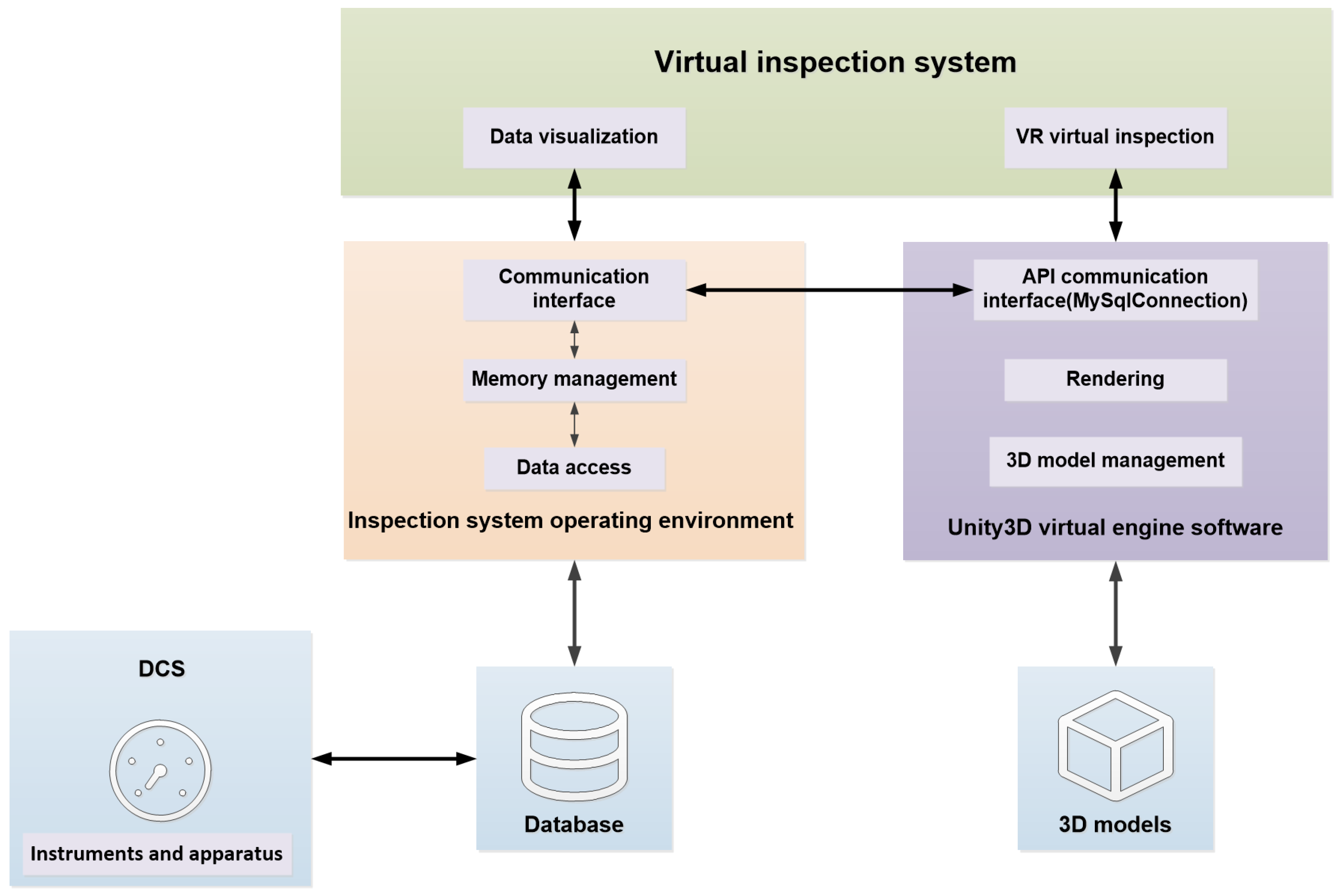

3. System Implementation

3.1. Building Visual Model

3.2. Building Haptic Model

3.2.1. Collision Detection

3.2.2. Building Haptic Force

3.3. Building Data Platform

3.3.1. Data Preparation

3.3.2. Building Data Model

3.3.3. Data Collection and Transmission

3.3.4. Data Management and Visualization

3.3.5. System Design and Implementation

4. Application and Testing

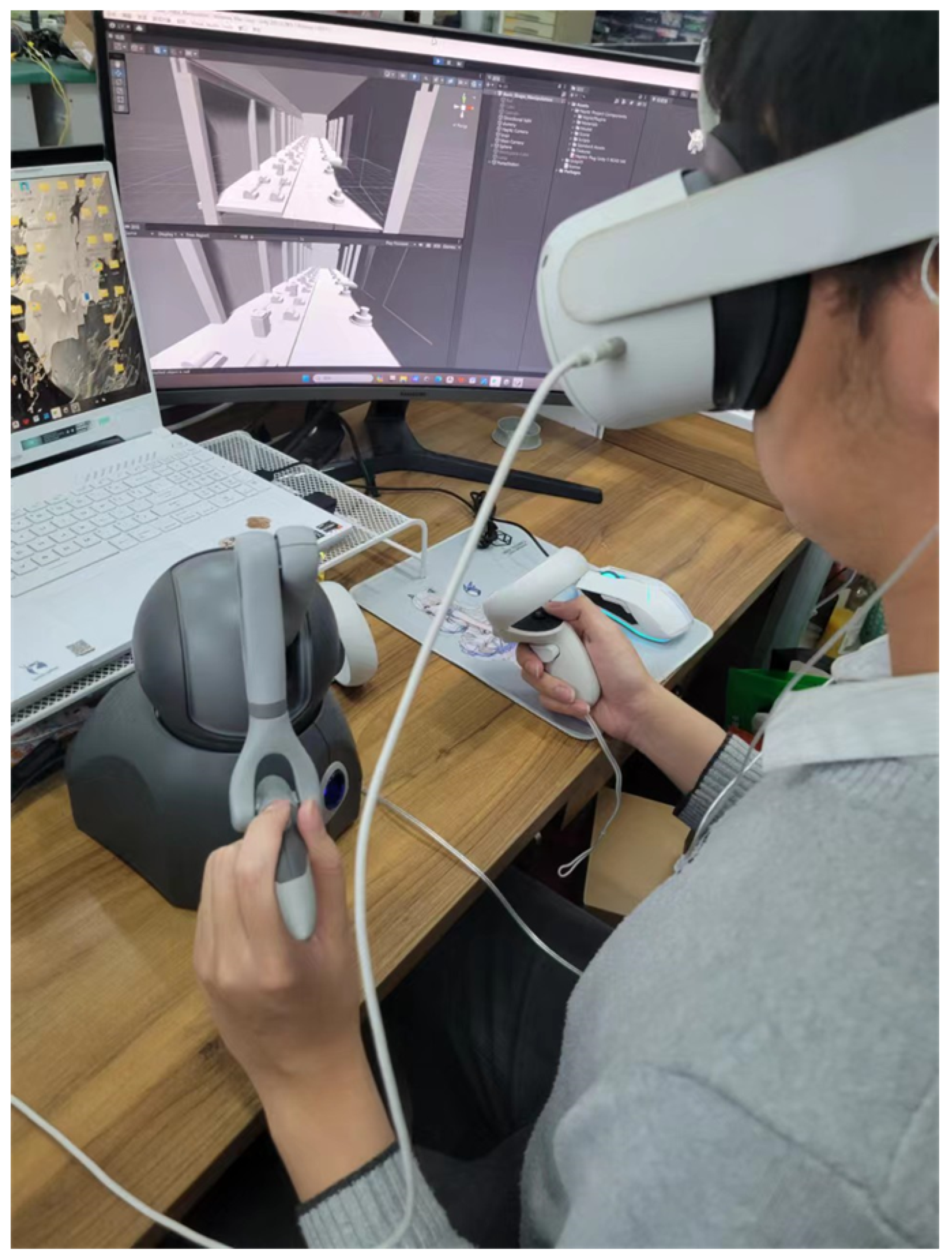

4.1. Establishment of Virtual Inspection System

4.2. Establishment of Data Platform

4.3. Validation

4.3.1. Experiment Aims

4.3.2. Experiment Subjects

4.3.3. Experiment Methods and Procedures

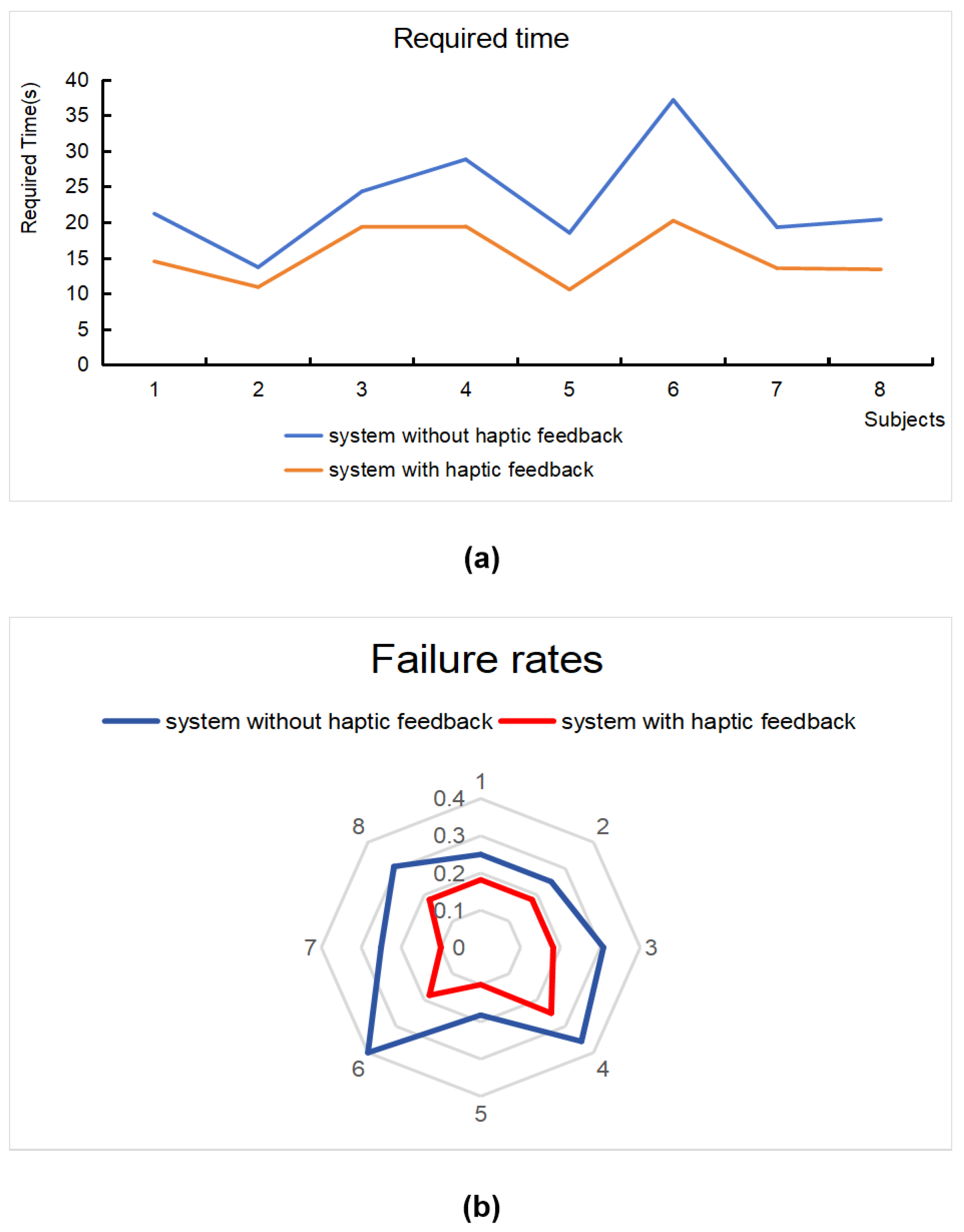

4.3.4. Experiment Results

5. Conclusions and Discussion

5.1. Discussion

- Improved Efficiency and Accuracy: The shorter task completion time and lower failure rate observed in this study suggest that the virtual inspection system with haptic feedback can improve both the efficiency and the accuracy of inspection.

- Enhanced Safety: VR-based inspection systems can provide a safe and controlled environment for training and practicing inspection procedures, reducing the risk of accidents and injuries associated with real-world inspections.

- Reduced Costs: Virtual inspection systems with multimodal feedback could reduce the need for costly and time-consuming on-site inspections, which may in turn lower inspection costs.

5.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| VR Headset | Haptic Device |
|---|---|
| Receive visual information | Receive haptic force feedback |
| Platform for 3D model and data visualization display | Generates different haptic force feedback depending on different physical factors |

| Device Specifications | Metric |
|---|---|
| Force Feedback Workspace | 431 W × 348 H × 165 D mm |
| Backdrive Friction | <0.26 N |
| Maximum Exertable Force (at nominal orthogonal arms position) | 3.3 N |
| Stiffness | X axis > 1.26 N/mm; Y axis > 2.31 N/mm; Z axis > 1.02 N/mm |
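
The device limits in the table above constrain any force a haptic model can render. The following is a minimal sketch, not the authors' force model: it assumes a simple penalty (spring) force proportional to penetration depth, treats the per-axis stiffness figures from the table as assumed upper bounds for the virtual spring constant, and rescales the result so that its magnitude never exceeds the 3.3 N maximum exertable force. The function name `penalty_force` and the default spring constant are hypothetical.

```python
import math

# Limits taken from the device specification table.
MAX_FORCE_N = 3.3
# Per-axis stiffness figures (N/mm); used here as assumed caps on the
# virtual spring constant, which is an illustrative choice.
AXIS_STIFFNESS_N_PER_MM = (1.26, 2.31, 1.02)


def penalty_force(penetration_mm, k_n_per_mm=0.5):
    """Return an (x, y, z) force in newtons for a penetration vector in mm.

    Hypothetical penalty model: F = -k * d on each axis, with k capped at the
    per-axis stiffness and the total magnitude capped at MAX_FORCE_N.
    """
    raw = []
    for depth, k_cap in zip(penetration_mm, AXIS_STIFFNESS_N_PER_MM):
        k = min(k_n_per_mm, k_cap)
        raw.append(-k * depth)

    magnitude = math.sqrt(sum(f * f for f in raw))
    if magnitude > MAX_FORCE_N:
        # Scale uniformly so the force direction is preserved.
        scale = MAX_FORCE_N / magnitude
        raw = [f * scale for f in raw]
    return tuple(raw)


if __name__ == "__main__":
    print(penalty_force((2.0, 0.0, 0.0)))
    print(penalty_force((10.0, 10.0, 10.0), k_n_per_mm=2.0))
```

Scaling the whole vector, rather than clipping each axis separately, keeps the rendered force pointing in the same direction when the limit is reached.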

| Data Name | Data Type |
|---|---|
| Running state | Boolean |
| Rack X-direction vibration amplitude | Float |
| Rack Y-direction vibration amplitude | Float |
| Rack Z-direction vibration amplitude | Float |
| Current in phase A | Float |
| Current in phase B | Float |
| Current in phase C | Float |
| Voltage in phase A | Float |
| Voltage in phase B | Float |
| Voltage in phase C | Float |
| Active Power | Float |
| Reactive Power | Float |
| Power Factor | Float |
| Stator Temperature | Float |
| Hydraulic Cylinder Temperature | Float |
| Last power-on time | String |
| Last power-off time | String |
| Power-on time | String |
| Water level height (D110) | Float |
| Water level height (D111) | Float |
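
The data items listed above can be expressed as a simple schema against which incoming records are checked. The sketch below is illustrative only and is not taken from the paper's data platform: the dictionary keys reuse the data names from the table, the mapping of Boolean, Float, and String to Python's `bool`, `float`, and `str` is an assumption, and the names `SCHEMA` and `validate` are hypothetical.

```python
# Schema mirroring the data table: data name -> assumed Python type.
SCHEMA = {
    "Running state": bool,
    "Rack X-direction vibration amplitude": float,
    "Rack Y-direction vibration amplitude": float,
    "Rack Z-direction vibration amplitude": float,
    "Current in phase A": float,
    "Current in phase B": float,
    "Current in phase C": float,
    "Voltage in phase A": float,
    "Voltage in phase B": float,
    "Voltage in phase C": float,
    "Active Power": float,
    "Reactive Power": float,
    "Power Factor": float,
    "Stator Temperature": float,
    "Hydraulic Cylinder Temperature": float,
    "Last power-on time": str,
    "Last power-off time": str,
    "Power-on time": str,
    "Water level height (D110)": float,
    "Water level height (D111)": float,
}


def validate(record):
    """Return a list of problems; an empty list means the record fits SCHEMA."""
    problems = []
    for name, expected in SCHEMA.items():
        if name not in record:
            problems.append(f"missing: {name}")
            continue
        value = record[name]
        if expected is float:
            # Accept ints as numeric readings, but reject booleans,
            # which Python would otherwise treat as integers.
            ok = isinstance(value, (int, float)) and not isinstance(value, bool)
        else:
            ok = isinstance(value, expected)
        if not ok:
            problems.append(f"wrong type: {name}")
    return problems


if __name__ == "__main__":
    sample = {name: (False if t is bool else 0.0 if t is float else "")
              for name, t in SCHEMA.items()}
    print(validate(sample))
```

Returning a list of problems instead of raising on the first error lets a caller log every malformed field in a single pass.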


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shao, Z.; Liu, T.; Li, J.; Tang, H. Virtual Inspection System for Pumping Stations with Multimodal Feedback. Sensors 2024, 24, 4932. https://doi.org/10.3390/s24154932
