ARM4CH: A Methodology for Autonomous Reality Modelling for Cultural Heritage

Abstract

1. Introduction

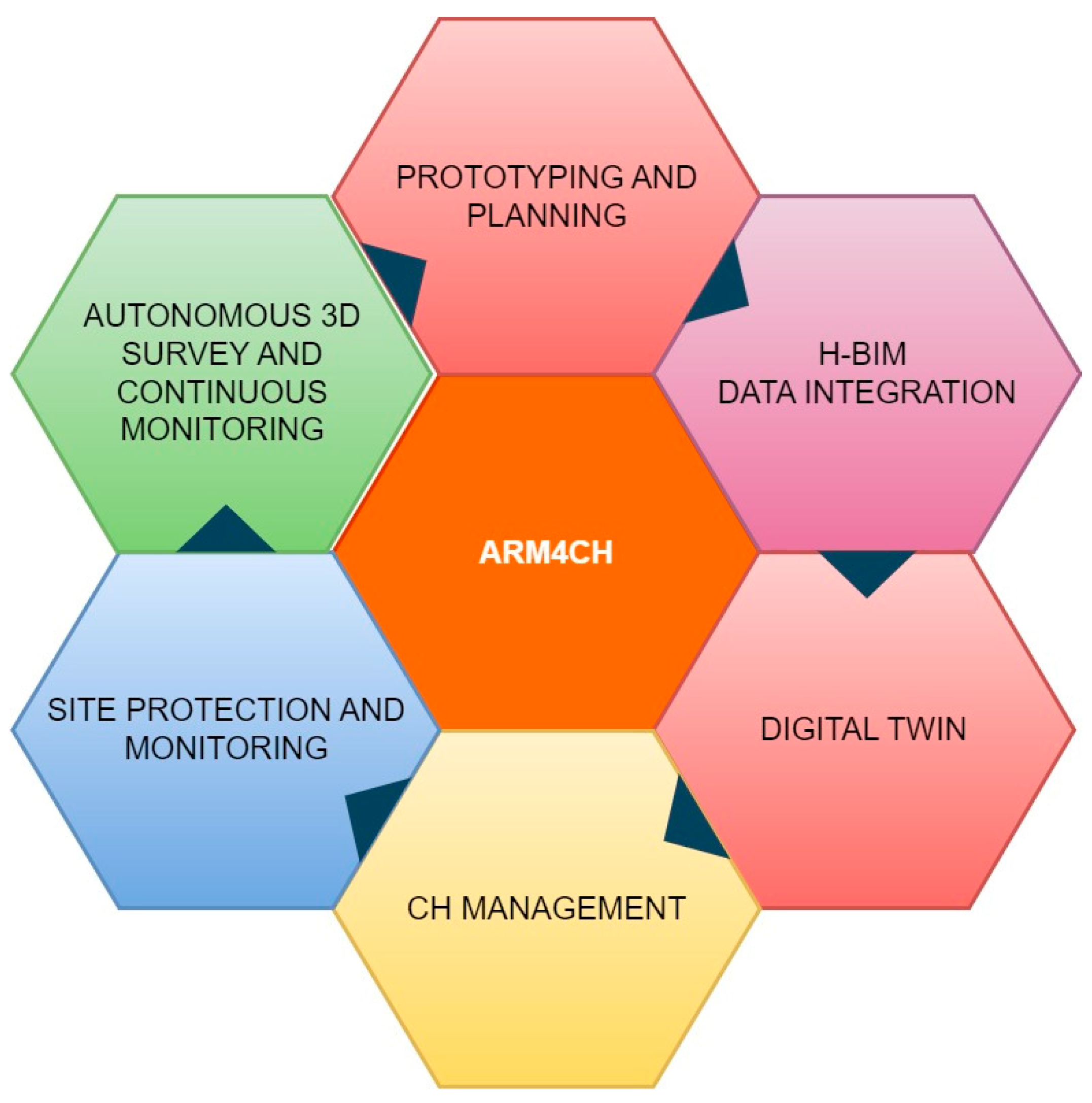

- Non-invasive and autonomous survey and inspection;

- Scanning operation for hard-to-reach, complex or dangerous areas;

- Reduction of labor costs and time-consuming scanning processes;

- Versatility and an increase in data precision;

- Consistency and optimization of measurements and data acquisition;

- Scanning and survey reproducibility;

- Regular monitoring of a CH site;

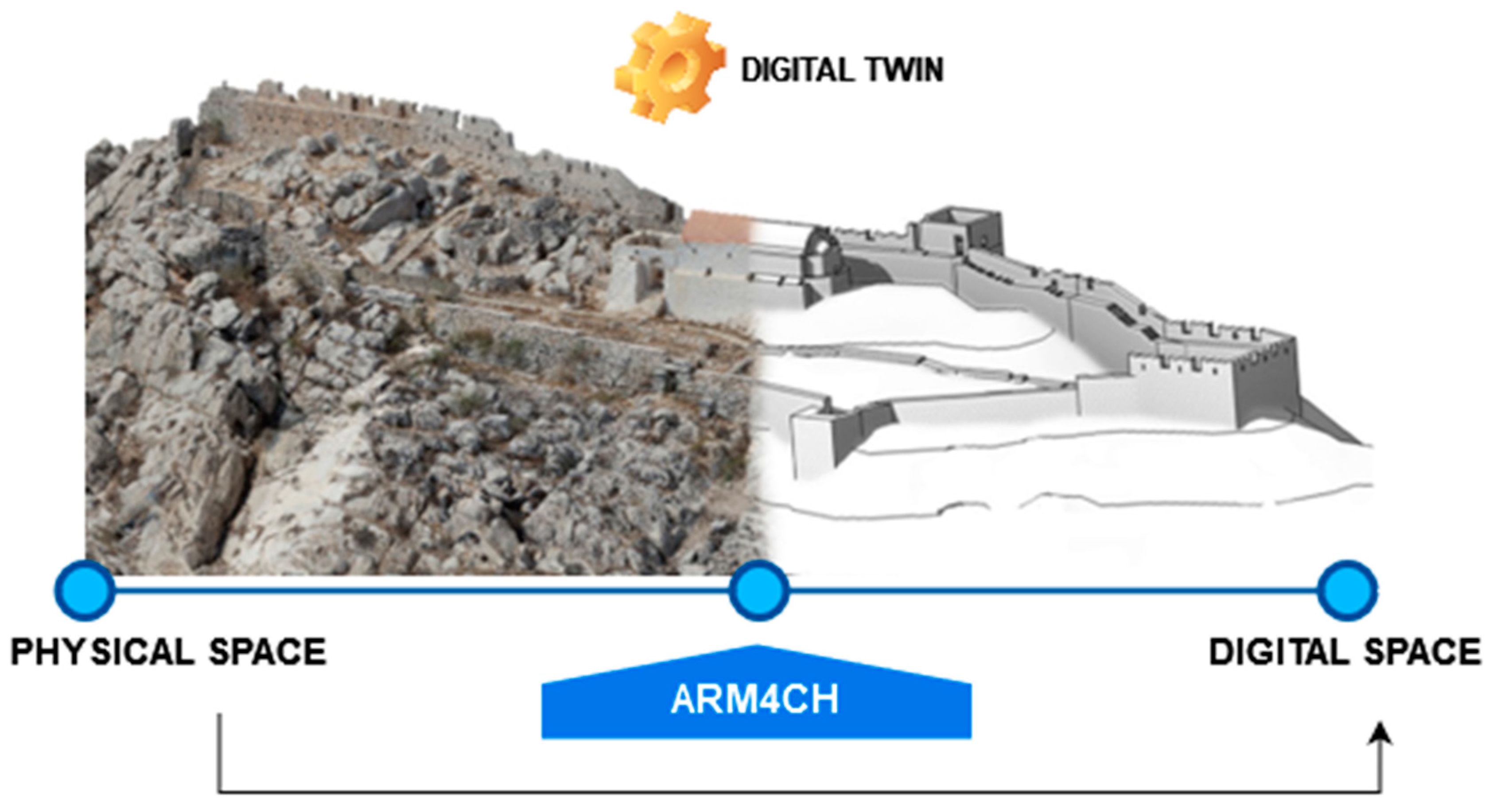

- Long-Term Monument Preservation and Management, a fostering of the Digital Twin concept.

2. Robotic Agents and 3D Scanning: A Brief Overview

3. Autonomous Reality Modeling for Cultural Heritage (ARM4CH)

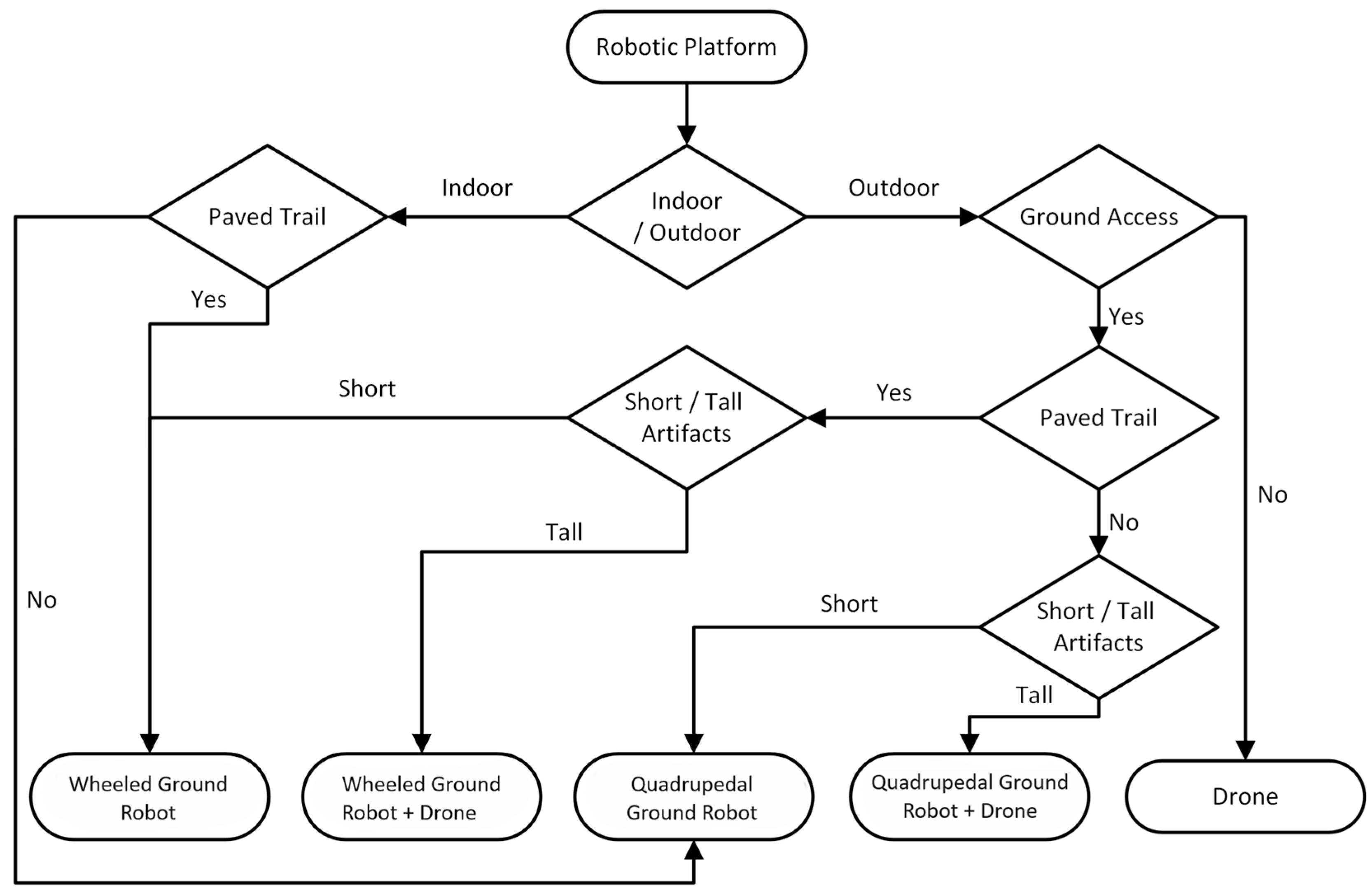

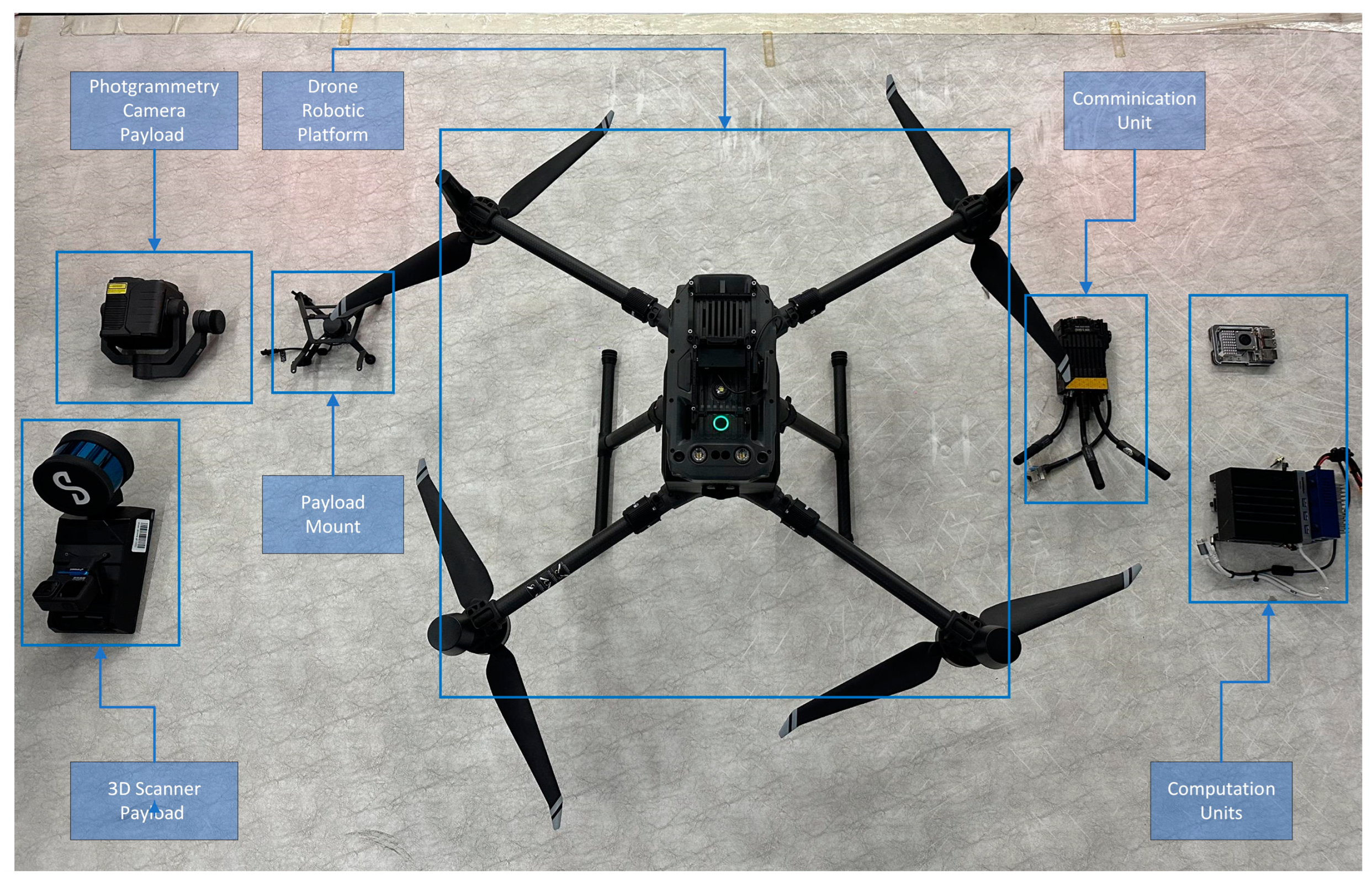

3.1. Robotic Agent (RA) Architecture

3.2. Methodology

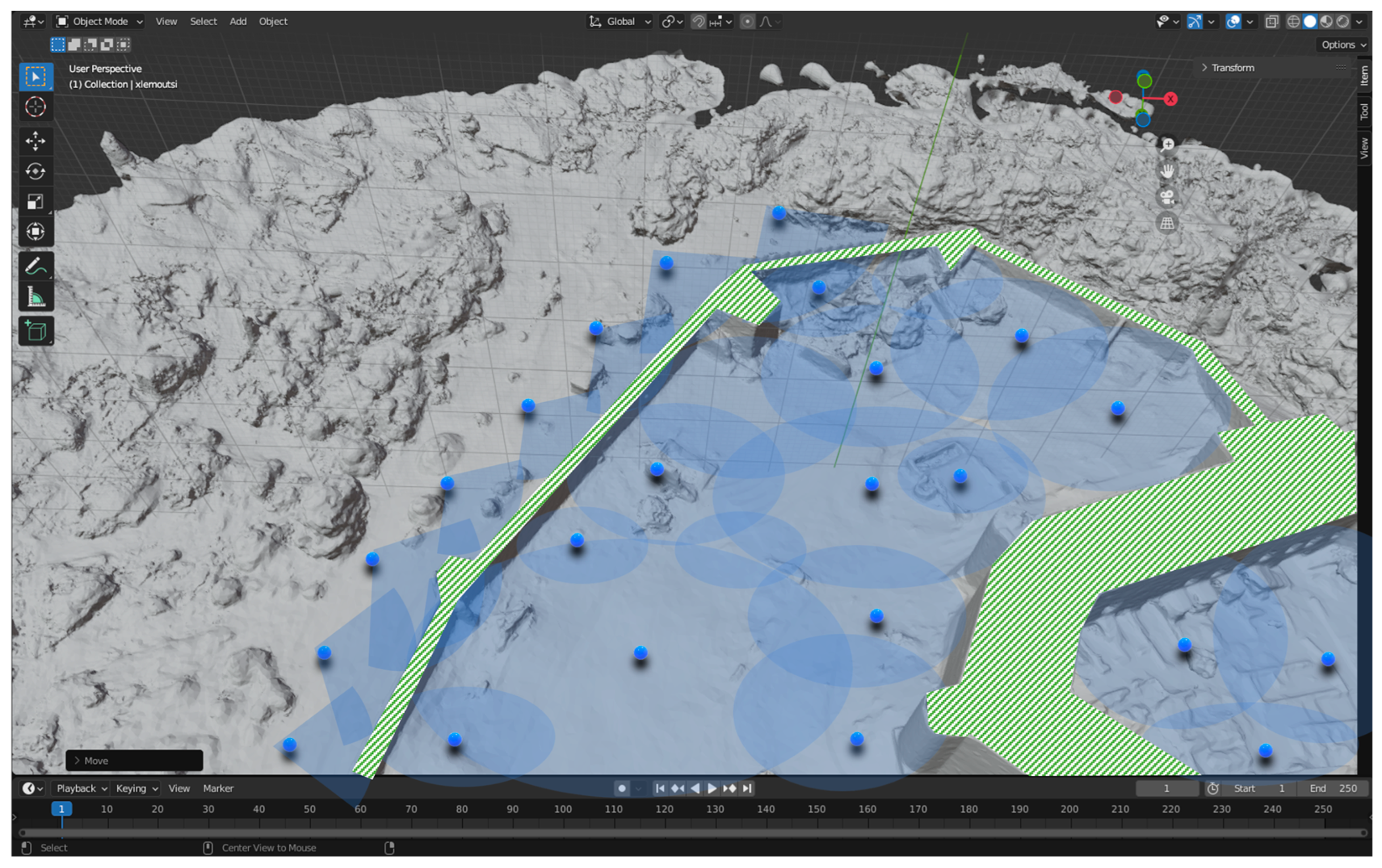
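The methodology is organized into five stages (Sections 3.2.1 to 3.2.5), each handing its output to the next. The minimal sketch below models that ordering as a simple state machine. The `Stage` names, the `run_mission` function, and the shared `context` dictionary are hypothetical illustrations, not part of the ARM4CH specification, and a real mission would likely loop over several POIs and viewpoints rather than run each stage exactly once.

```python
from enum import Enum, auto
from typing import Callable, Dict, List, Tuple


class Stage(Enum):
    """Hypothetical stage names mirroring Sections 3.2.1-3.2.5."""
    SCOUTING = auto()
    POI_IDENTIFICATION = auto()
    NBV_DETECTION = auto()
    PATH_PLANNING = auto()
    SCANNING = auto()
    DONE = auto()


# Each stage hands over to the next one; SCANNING ends the mission.
NEXT_STAGE: Dict[Stage, Stage] = {
    Stage.SCOUTING: Stage.POI_IDENTIFICATION,
    Stage.POI_IDENTIFICATION: Stage.NBV_DETECTION,
    Stage.NBV_DETECTION: Stage.PATH_PLANNING,
    Stage.PATH_PLANNING: Stage.SCANNING,
    Stage.SCANNING: Stage.DONE,
}

Handler = Callable[[dict], object]


def run_mission(handlers: Dict[Stage, Handler]) -> Tuple[List[Stage], dict]:
    """Run each stage's handler in order, passing one shared context dict along."""
    context: dict = {}
    visited: List[Stage] = []
    stage = Stage.SCOUTING
    while stage is not Stage.DONE:
        handlers[stage](context)  # e.g. scouting stores a map that later stages read
        visited.append(stage)
        stage = NEXT_STAGE[stage]
    return visited, context
```

Keeping the stage outputs in one context object makes it easy to swap the algorithm behind any single stage (for example, a different NBV method) without touching the others.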

3.2.1. Scouting
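Frontier-based exploration [50], one of the scouting approaches listed in the Task/Algorithm table, drives the robot toward the boundary between explored free space and unexplored space. A minimal sketch on a 2D occupancy grid, assuming the cell encoding `FREE = 0`, `OCCUPIED = 1`, `UNKNOWN = -1` (a hypothetical convention chosen for illustration) and 4-connectivity. It finds frontier cells and then picks the one closest to the robot by breadth-first search through free space. Unlike the original formulation, it does not group frontier cells into regions.

```python
from collections import deque
from typing import List, Optional, Set, Tuple

FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # hypothetical cell encoding
Cell = Tuple[int, int]
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def find_frontiers(grid: List[List[int]]) -> List[Cell]:
    """Free cells with at least one unknown 4-neighbour, in row-major order."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(grid: List[List[int]], start: Cell) -> Optional[Cell]:
    """BFS through free cells; the first frontier reached is closest by path length."""
    rows, cols = len(grid), len(grid[0])
    targets: Set[Cell] = set(find_frontiers(grid))
    queue = deque([start])
    seen = {start}
    while queue:
        cell = queue.popleft()
        if cell in targets:
            return cell
        for dr, dc in NEIGHBOURS:
            nr, nc = cell[0] + dr, cell[1] + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == FREE and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return None  # no reachable frontier: exploration is complete
```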

3.2.2. Point of Interest (POI) Identification
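Detectors such as YOLO [56], R-CNN [57], and SSD [58], listed for POI identification in the Task/Algorithm table, typically produce many overlapping candidate boxes. These are pruned with greedy non-maximum suppression (NMS) before the surviving detections can serve as candidate POIs. A minimal pure-Python sketch follows. The box format `(x1, y1, x2, y2)` and the default thresholds are illustrative assumptions, and how ARM4CH itself turns detections into POIs is not specified here.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        iou_threshold: float = 0.5, score_threshold: float = 0.3) -> List[int]:
    """Greedy NMS: keep the best box, drop boxes overlapping it too much, repeat."""
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # Keep box i only if it does not overlap any already-kept, higher-scoring box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```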

3.2.3. Next Best View Detection

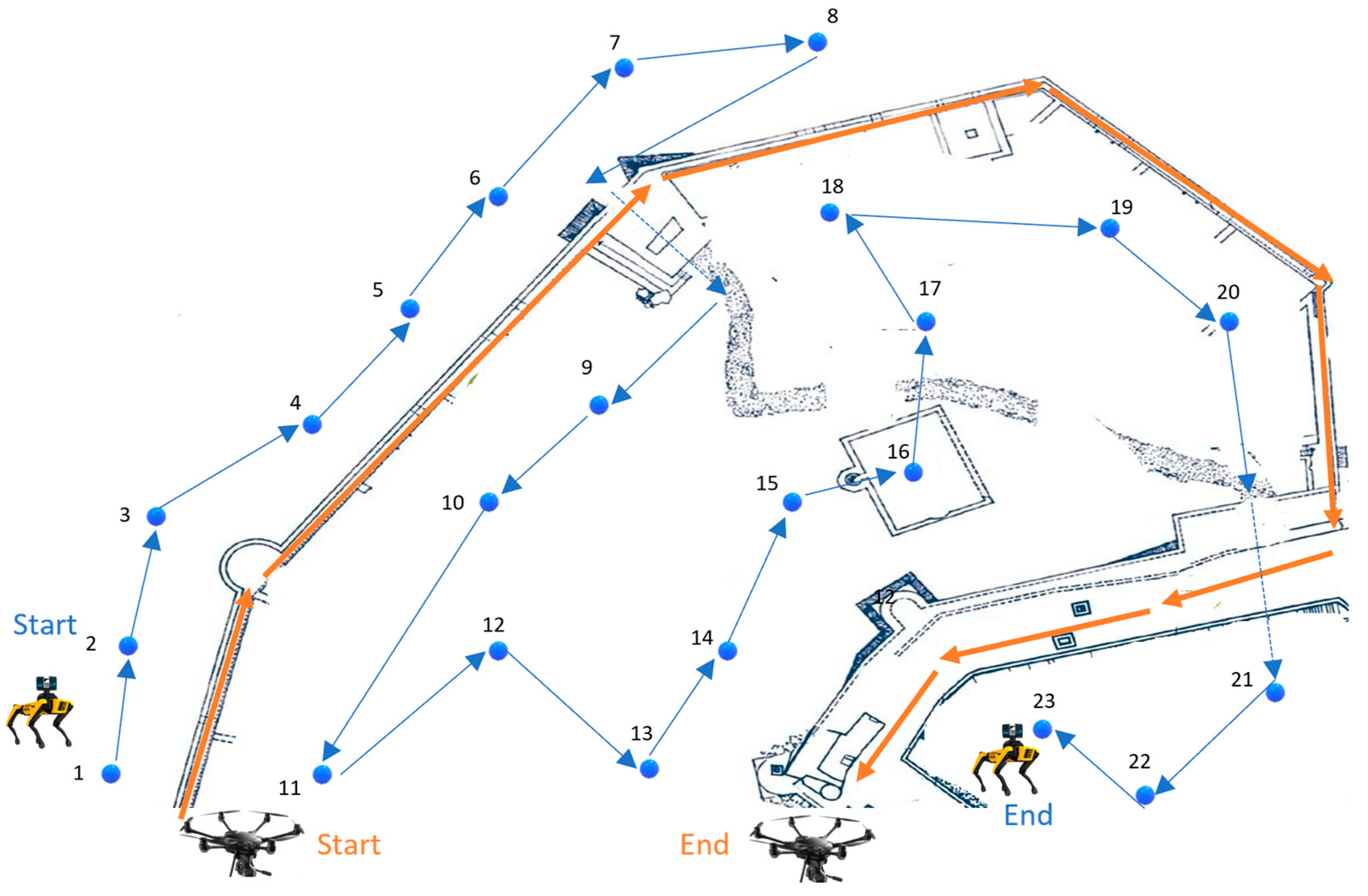
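Many NBV methods, including the volumetric approaches compared by Delmerico et al. [37], score candidate viewpoints by how much uncertainty they would remove, often approximated by the summed entropy of the cells a view would observe. A minimal sketch under simplifying assumptions:

- Occupancy probabilities are stored per cell, and unobserved cells default to 0.5.
- The set of cells visible from each candidate is precomputed; a real system would ray-cast through a map such as OctoMap [30].
- An optional `cost_weight` penalizes travel distance.

The names `view_gain` and `next_best_view` are hypothetical. The learning-based methods in the table (RL-NBV, PC-NBV, NeU-NBV) work differently.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
Candidate = Tuple[Tuple[float, float], List[Cell]]  # (viewpoint position, cells visible from it)


def entropy(p: float) -> float:
    """Binary entropy (bits) of a cell that is occupied with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def view_gain(occupancy: Dict[Cell, float], visible: List[Cell]) -> float:
    """Proxy for expected information: summed entropy of the cells a view would observe."""
    # Cells never observed default to p = 0.5, i.e. one bit of uncertainty each.
    return sum(entropy(occupancy.get(c, 0.5)) for c in visible)


def next_best_view(occupancy: Dict[Cell, float], candidates: Dict[str, Candidate],
                   robot_xy: Tuple[float, float] = (0.0, 0.0), cost_weight: float = 0.0) -> str:
    """Pick the candidate maximising gain minus a (hypothetical) linear travel-cost penalty."""
    def score(name: str) -> float:
        position, visible = candidates[name]
        return view_gain(occupancy, visible) - cost_weight * math.dist(robot_xy, position)
    return max(candidates, key=score)
```

With `cost_weight = 0`, the robot always goes where uncertainty is highest. A positive weight trades some information for shorter trips, which matters for battery-limited RAs.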

3.2.4. Path Planning
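The A* algorithm [63] is one of the path planners listed in the Task/Algorithm table. It expands cells in order of accumulated path cost plus an admissible heuristic estimate of the remaining cost. A minimal sketch on a 4-connected occupancy grid (0 = free, 1 = blocked) with unit step costs and a Manhattan-distance heuristic. The grid encoding is an assumption for illustration; a deployed RA would plan on its SLAM map and respect its own motion constraints.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance is admissible and consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f = g + h, g, cell)
    g: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}
    closed: Set[Cell] = set()
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parents back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```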

3.2.5. Scanning Process
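Each capture taken at a viewpoint is expressed in the sensor frame. Before the captures can be merged, each one has to be placed in a common map frame using the pose estimated during navigation (e.g., by SLAM). The sketch below shows this step in 2D, assuming a pose `(x, y, yaw)` per capture and a simple voxel-grid deduplication. The functions `to_map_frame` and `merge_scans` and the 5 cm default voxel size are hypothetical. Real 3D pipelines use full 6-DoF poses and usually refine the alignment with registration.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw [rad]) of the sensor in the map frame


def to_map_frame(scan: List[Point], pose: Pose) -> List[Point]:
    """Rotate each sensor-frame point by yaw, then translate it by (x, y)."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in scan]


def merge_scans(captures: List[Tuple[Pose, List[Point]]], voxel: float = 0.05) -> List[Point]:
    """Transform all captures into the map frame and keep one point per voxel cell."""
    cells: Dict[Tuple[int, int], Point] = {}
    for pose, scan in captures:
        for p in to_map_frame(scan, pose):
            key = (math.floor(p[0] / voxel), math.floor(p[1] / voxel))
            cells.setdefault(key, p)  # the first point seen in a cell represents it
    return list(cells.values())
```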

4. Benefits and Barriers of the ARM4CH Methodology

- The ability to schedule robots remotely for unsupervised data capture and monitoring missions, 24/7, with specific field coverage.

- Improved accuracy, since data are captured from the same locations (viewpoints) multiple times, making direct data comparison feasible.

- The ability to create specific schedules for reliably capturing up-to-date data.

- Reviewing, surveying, and inspecting spaces or places of critical or specific importance, or those that pose a danger to human surveyors.

- Combining the complementary advantages of various sensor technologies to boost performance.

- Continuous or periodic monitoring, so that a maintenance team can be dispatched once a problem is confirmed.
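Capturing data repeatedly from the same viewpoints makes epoch-to-epoch comparison possible. A common first check is a cloud-to-cloud distance: flag every point of the new scan whose nearest neighbour in the reference scan is farther away than a tolerance. The sketch assumes both epochs are already registered in the same frame and uses brute-force search for clarity. The function name and the tolerance are hypothetical, and large clouds would need a spatial index such as a k-d tree.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def changed_points(reference: List[Point], current: List[Point], tolerance: float) -> List[Point]:
    """Points of `current` farther than `tolerance` from every reference point.

    Brute-force nearest-neighbour search, O(len(current) * len(reference)).
    """
    if not reference:
        return list(current)  # nothing to compare against: every point counts as new
    flagged = []
    for p in current:
        nearest = min(math.dist(p, q) for q in reference)
        if nearest > tolerance:
            flagged.append(p)
    return flagged
```

The flagged points only indicate where something may have changed. Whether the change is real damage or noise would still need expert review before a maintenance team is sent.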

5. Discussion and Future Work

- Comparison, evaluation, and final selection of the algorithms: This will ensure the seamless operation of the equipment (RAs and payload), as well as of the algorithms responsible for scouting, POI identification, NBV detection, path planning, and scanning.

- Experimentation and training in a simulated environment: Following algorithm selection, this stage involves training the operational RAs using current robotics and simulation software platforms, such as the Robot Operating System (ROS) [69], NVIDIA Omniverse [70], and Gazebo [71]. These platforms provide core modules for designing, testing, and training the autonomous navigation of RAs (in some cases including pre-trained models augmented with synthetic data). They also deliver scalable, physically accurate virtual environments for high-fidelity simulations.

- Experimentation in a laboratory environment: This step involves gradually deploying the RAs in specific scenarios within a controlled environment. It will also verify that the RAs have sufficient control, communication, awareness, and perception, as well as the ability to operate and navigate in dynamic and unpredictable indoor/outdoor environments.

- Full deployment in a real heritage site: In this final step, ARM4CH will be deployed and evaluated in a large-scale Cultural Heritage park that includes various public-service infrastructure, protected monuments, and archaeological sites.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hu, D.; Minner, J. UAVs and 3D City Modeling to Aid Urban Planning and Historic Preservation: A Systematic Review. Remote Sens. 2023, 15, 5507.

2. Li, Y.; Zhao, L.; Chen, Y.; Zhang, N.; Fan, H.; Zhang, Z. 3D LiDAR and multi-technology collaboration for preservation of built heritage in China: A review. Int. J. Appl. Earth Obs. Geoinf. 2023, 116, 103156.

3. Mitric, J.; Radulovic, I.; Popovic, T.; Scekic, Z.; Tinaj, S. AI and Computer Vision in Cultural Heritage Preservation. In Proceedings of the 2024 28th International Conference on Information Technology (IT), Zabljak, Montenegro, 21–24 February 2024; pp. 1–4.

4. Caron, G.; Bellon, O.R.P.; Shimshoni, I. Computer Vision and Robotics for Cultural Heritage: Theory and Applications. J. Imaging 2023, 9, 9.

5. Aicardi, I.; Chiabrando, F.; Lingua, A.M.; Noardo, F. Recent trends in cultural heritage 3D survey: The photogrammetric computer vision approach. J. Cult. Herit. 2018, 32, 257–266.

6. Mahmood, S.; Majid, Z.; Idris, K.M. Terrestrial LiDAR sensor modeling towards optimal scan location and spatial density planning for 3D surveying. Appl. Geomat. 2020, 12, 467–480.

7. Prieto, S.A.; Giakoumidis, N.; García De Soto, B. Multiagent robotic systems and exploration algorithms: Applications for data collection in construction sites. J. Field Robot. 2024, 41, 1187–1203.

8. Fawcett, R.T.; Pandala, A.; Ames, A.D.; Hamed, K.A. Robust Stabilization of Periodic Gaits for Quadrupedal Locomotion via QP-Based Virtual Constraint Controllers. IEEE Control. Syst. Lett. 2022, 6, 1736–1741.

9. Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning quadrupedal locomotion over challenging terrain. Sci. Robot. 2020, 5, eabc5986.

10. Soori, M.; Arezoo, B.; Dastres, R. Artificial intelligence, machine learning and deep learning in advanced robotics, a review. Cogn. Robot. 2023, 3, 54–70.

11. Mikołajczyk, T.; Mikołajewski, D.; Kłodowski, A.; Łukaszewicz, A.; Mikołajewska, E.; Paczkowski, T.; Skornia, M. Energy Sources of Mobile Robot Power Systems: A Systematic Review and Comparison of Efficiency. Appl. Sci. 2023, 13, 7547.

12. Chen, L.; Hoang, D.; Lin, H.; Nguyen, T. Innovative methodology for multi-view point cloud registration in robotic 3d object scanning and reconstruction. Appl. Sci. 2016, 6, 132.

13. Park, S.; Yoon, S.; Ju, S.; Heo, J. BIM-based scan planning for scanning with a quadruped walking robot. Autom. Constr. 2023, 152, 104911.

14. Kim, P.; Park, J.; Cho, Y. As-is geometric data collection and 3D visualization through the collaboration between UAV and UGV. In Proceedings of the International Symposium on Automation and Robotics in Construction (ISARC), Banff, Canada, 21–24 May 2019; Volume 36, pp. 544–551.

15. Peers, C.; Motawei, M.; Richardson, R.; Zhou, C. Development of a teleoperative quadrupedal manipulator. In Proceedings of the UKRAS21 Conference: Robotics at Home Proceedings, Online, 2 June 2021; University of Hertfordshire: Hatfield, UK.

16. Ding, Y.; Pandala, A.; Li, C.; Shin, Y.; Park, H.W. Representation-free model predictive control for dynamic motions in quadrupeds. IEEE Trans. Robot. 2021, 37, 1154–1171.

17. Hutter, M.; Gehring, C.; Jud, D.; Lauber, A.; Bellicoso, C.D.; Tsounis, V.; Hwangbo, J.; Bodie, K.; Fankhauser, P.; Bloesch, M.; et al. ANYmal – A highly mobile and dynamic quadrupedal robot. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 38–44.

18. Borkar, K.K.; Aljrees, T.; Pandey, S.K.; Kumar, A.; Singh, M.K.; Sinha, A.; Sharma, V. Stability Analysis and Navigational Techniques of Wheeled Mobile Robot: A Review. Processes 2023, 11, 3302.

19. Rubio, F.; Valero, F.; Llopis-Albert, C. A review of mobile robots: Concepts, methods, theoretical framework, and applications. Int. J. Adv. Robot. Syst. 2019, 16, 172988141983959.

20. Camurri, M.; Ramezani, M.; Nobili, S.; Fallon, M. Pronto: A Multi-Sensor State Estimator for Legged Robots in Real-World Scenarios. Front. Robot. AI 2020, 7, 68.

21. Macario Barros, A.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A Comprehensive Survey of Visual SLAM Algorithms. Robotics 2022, 11, 24.

22. Mittal, S. A Survey on optimized implementation of deep learning models on the NVIDIA Jetson platform. J. Syst. Archit. 2019, 97, 428–442.

23. Li, Y.; Du, S.; Kim, Y. Robot swarm MANET cooperation based on mobile agent. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guilin, China, 19–23 December 2009.

24. Ivanov, M.; Sergiyenko, O.; Tyrsa, V.; Lindner, L.; Reyes-García, M.; Rodríguez-Quiñonez, J.C.; Hernández-Balbuena, D. Data Exchange and Task of Navigation for Robotic Group; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 389–430.

25. Kalvoda, P.; Nosek, J.; Kuruc, M.; Volarik, T.; Kalvodova, P. Accuracy Evaluation and Comparison of Mobile Laser Scanning and Mobile Photogrammetry Data. IOP Conf. Ser. Earth Environ. Sci. 2020, 609, 012091.

26. Dering, G.M.; Micklethwaite, S.; Thiele, S.T.; Vollgger, S.A.; Cruden, A.R. Review of drones, photogrammetry and emerging sensor technology for the study of dykes: Best practises and future potential. J. Volcanol. Geotherm. Res. 2019, 373, 148–166.

27. Daneshmand, M.; Helmi, A.; Avots, E.; Noroozi, F.; Alisinanoglu, F.; Arslan, H.S.; Gorbova, J.; Haamer, R.E.; Ozcinar, C.; Anbarjafari, G. 3D Scanning: A Comprehensive Survey. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

28. Chen, K.; Reichard, G.; Akanmu, A.; Xu, X. Geo-registering UAV-captured close-range images to GIS-based spatial model for building façade inspections. Autom. Constr. 2021, 122, 103503.

29. Kalaitzakis, M.; Cain, B.; Carroll, S.; Ambrosi, A.; Whitehead, C.; Vitzilaios, N. Fiducial Markers for Pose Estimation. J. Intell. Robot. Syst. 2021, 101, 71.

30. Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206.

31. Wallace, D.; He, Y.H.; Vaz, J.C.; Georgescu, L.; Oh, P.Y. Multimodal Teleoperation of Heterogeneous Robots within a Construction Environment. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021.

32. Pierdicca, R.; Paolanti, M.; Matrone, F.; Martini, M.; Morbidoni, C.; Malinverni, E.S.; Lingua, A.M. Point Cloud Semantic Segmentation Using a Deep Learning Framework for Cultural Heritage. Remote Sens. 2020, 12, 1005.

33. Câmara, A.; de Almeida, A.; Caçador, D.; Oliveira, J. Automated methods for image detection of cultural heritage: Overviews and perspectives. Archaeol. Prospect. 2023, 30, 153–169.

34. Fiorucci, M.; Verschoof-Van Der Vaart, W.B.; Soleni, P.; Le Saux, B.; Traviglia, A. Deep Learning for Archaeological Object Detection on LiDAR: New Evaluation Measures and Insights. Remote Sens. 2022, 14, 1694.

35. Potthast, C.; Sukhatme, G.S. A probabilistic framework for next best view estimation in a cluttered environment. J. Vis. Commun. Image Represent. 2014, 25, 148–164.

36. Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding horizon path planning for 3D exploration and surface inspection. Auton. Robot. 2018, 42, 291–306.

37. Delmerico, J.; Isler, S.; Sabzevari, R.; Scaramuzza, D. A comparison of volumetric information gain metrics for active 3D object reconstruction. Auton. Robot. 2018, 42, 197–208.

38. Almadhoun, R.; Abduldayem, A.; Taha, T.; Seneviratne, L.; Zweiri, Y. Guided Next Best View for 3D Reconstruction of Large Complex Structures. Remote Sens. 2019, 11, 2440.

39. Palazzolo, E.; Stachniss, C. Effective Exploration for MAVs Based on the Expected Information Gain. Drones 2018, 2, 9.

40. Kaba, M.D.; Uzunbas, M.G.; Lim, S.N. A Reinforcement Learning Approach to the View Planning Problem. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

41. Trummer, M.; Munkelt, C.; Denzler, J. Combined GKLT Feature Tracking and Reconstruction for Next Best View Planning; Springer: Berlin/Heidelberg, Germany, 2009; pp. 161–170.

42. Wang, Y.; Del Bue, A. Where to Explore Next? ExHistCNN for History-Aware Autonomous 3D Exploration; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 125–140.

43. Morreale, L.; Romanoni, A.; Matteucci, M. Predicting the Next Best View for 3D Mesh Refinement; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 760–772.

44. Jin, L.; Chen, X.; Rückin, J.; Popović, M. NeU-NBV: Next Best View Planning Using Uncertainty Estimation in Image-Based Neural Rendering. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 11305–11312.

45. Muhammad, A.; Abdullah, N.R.H.; Ali, M.A.; Shanono, I.H.; Samad, R. Simulation Performance Comparison of A*, GLS, RRT and PRM Path Planning Algorithms. In Proceedings of the 2022 IEEE 12th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 21–22 May 2022.

46. Bujanca, M.; Shi, X.; Spear, M.; Zhao, P.; Lennox, B.; Luján, M. Robust SLAM Systems: Are We There Yet? In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021.

47. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

48. Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 5135–5142.

49. Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

50. Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97. “Towards New Computational Principles for Robotics and Automation”, Monterey, CA, USA, 10–11 June 1997; pp. 146–151.

51. Dang, T.; Tranzatto, M.; Khattak, S.; Mascarich, F.; Alexis, K.; Hutter, M. Graph-based subterranean exploration path planning using aerial and legged robots, Special Issue on Field and Service Robotics (FSR). J. Field Robot. 2020, 37, 1363–1388.

52. Luo, F.; Zhou, Q.; Fuentes, J.; Ding, W.; Gu, C. A Soar-Based Space Exploration Algorithm for Mobile Robots. Entropy 2022, 24, 426.

53. Segment Anything. Available online: https://segment-anything.com/ (accessed on 27 July 2024).

54. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

55. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

56. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

57. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

58. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905.

59. Wang, T.; Xi, W.; Cheng, Y.; Han, H.; Yang, Y. RL-NBV: A deep reinforcement learning based next-best-view method for unknown object reconstruction. Pattern Recognit. Lett. 2024, 184, 1–6.

60. Zeng, R.; Zhao, W.; Liu, Y.-J. PC-NBV: A Point Cloud Based Deep Network for Efficient Next Best View Planning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hangzhou, China, 19–25 October 2020; pp. 7050–7057.

61. Moon, C.B.; Chung, W. Kinodynamic Planner Dual-Tree RRT (DT-RRT) for Two-Wheeled Mobile Robots Using the Rapidly Exploring Random Tree. IEEE Trans. Ind. Electron. 2015, 62, 1080–1090.

62. Kavraki, L.E.; Svestka, P.; Latombe, J.C.; Overmars, M.H. Probabilistic roadmaps for path planning in high-dimensional configuration spaces. IEEE Trans. Robot. Autom. 1996, 12, 566–580.

63. Hart, P.E.; Nilsson, N.J.; Raphael, B. A Formal Basis for the Heuristic Determination of Minimum Cost Paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.

64. Parrinello, S.; Picchio, F. Digital Strategies to Enhance Cultural Heritage Routes: From Integrated Survey to Digital Twins of Different European Architectural Scenarios. Drones 2023, 7, 576.

65. Cimino, C.; Ferretti, G.; Leva, A. Harmonising and integrating the digital twins multiverse: A paradigm and a toolset proposal. Comput. Ind. 2021, 132, 103501.

66. Osco, L.P.; Junior, J.M.; Ramos, A.P.M.; de Castro Jorge, L.A.; Fatholahi, S.N.; de Andrade Silva, J.; Matsubara, E.T.; Pistori, H.; Gonçalves, W.N.; Li, J. A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102456.

67. Qiu, Q.; Lau, D. Real-time detection of cracks in tiled sidewalks using YOLO-based method applied to unmanned aerial vehicle (UAV) images. Autom. Constr. 2023, 147, 104745.

68. Mittal, P.; Singh, R.; Sharma, A. Deep learning-based object detection in low-altitude UAV datasets: A survey. Image Vis. Comput. 2020, 104, 104046.

69. Robot Operating System (ROS). Available online: https://www.ros.org/ (accessed on 27 July 2024).

70. NVIDIA Omniverse. Available online: https://www.nvidia.com/en-eu/omniverse/ (accessed on 27 July 2024).

71. Gazebo. Available online: https://gazebosim.org (accessed on 27 July 2024).

| Criteria | Terrestrial Laser Scanners (TLS) | SLAM-Based/Mobile Scanners | Photogrammetry/Structure from Motion (SfM) |
|---|---|---|---|
| Accuracy and Precision | High (millimeter precision) | Moderate (depends on technology) | Variable (high under optimal conditions) |
| Data Collection Speed | Low (requires setup and multiple stations) | Fast (on-the-go collection) | Medium (depends on the required accuracy and complexity) |
| Cost | High (expensive hardware) | Moderate (less expensive than TLS) | Low to high (depends on camera equipment) |
| Operational Complexity | High (requires skilled operation) | Moderate (easier in complex environments) | Moderate (requires photographic expertise) |
| Environmental Constraints | Sensitive to reflective surfaces | Sensitive to reflective surfaces | Highly dependent on lighting and weather conditions |
| Post-Processing | Intensive (cleaning, registration, and merging) | Moderate (alignment aids, needs noise reduction) | Automated, but processing is lengthy and sensitive to image quality |
| Application Suitability | Ideal for detailed, static environments | Suitable for complex environments | Versatile across scales under good environmental conditions |
| Common Use Cases | Detailed architectural, archaeological, and engineering documentation | Extensive and complex environments such as urban areas or large buildings | Large or remote outdoor areas |
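Registration appears in the Post-Processing row above as a major effort in TLS workflows. When point correspondences between two scans are known (for example, from survey targets, or inside each iteration of ICP), the least-squares rigid transform has a closed form. A 2D sketch follows, assuming known one-to-one correspondences. In 3D, the same idea is usually solved with an SVD (the Kabsch/Umeyama method). This is not the registration procedure of any particular scanner software.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rigid_align_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation and translation with dst[i] ≈ R(theta) src[i] + t.

    Assumes src[i] and dst[i] are corresponding points. Returns (theta, tx, ty).
    """
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy  # centre both point sets
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # Maximising sum(b . R a) = cos(theta) * dot + sin(theta) * cross gives:
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)  # t = centroid(dst) - R * centroid(src)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty
```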

| Task | Algorithm | Indicative References |
|---|---|---|
| Scouting | SLAM-based: ORB-SLAM3, LIO-SAM, ROVIO | [47,48,49] |
| | Exploration-based: frontier-based, graph-based planners, SOAR-based space exploration | [50,51,52] |
| Point of Interest | Semantic segmentation: Segment Anything Model (SAM, Meta), graph-based, PSPNet | [53,54,55] |
| | Object detection: YOLO-based, R-CNN, SSD | [56,57,58] |
| Next Best View | Reinforcement Learning NBV (RL-NBV), Point Cloud NBV (PC-NBV), NeU-NBV | [44,59,60] |
| Path Planning | RRT, PRM, A* algorithm | [61,62,63] |
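RRT appears in the path-planning row of the table above. The cited work [61] is a kinodynamic dual-tree variant (DT-RRT). The sketch below is only the basic single-tree RRT, in 2D with circular obstacles, to show the core loop:

1. Sample a point, with a small bias toward the goal.
2. Extend the nearest tree node toward it by a bounded step.
3. Keep the extension if it is collision-free.

The workspace bounds, step size, obstacle model, and all function names are illustrative assumptions.

```python
import math
import random
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (cx, cy, radius): assumed obstacle model


def collision_free(a: Point, b: Point, obstacles: Sequence[Circle], resolution: float = 0.05) -> bool:
    """Check segment a-b by testing points spaced at most `resolution` apart."""
    n = max(1, math.ceil(math.dist(a, b) / resolution))
    for i in range(n + 1):
        t = i / n
        x, y = a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: Sequence[Circle],
        bounds: Tuple[float, float] = (0.0, 10.0), step_size: float = 0.5,
        goal_tolerance: float = 0.5, goal_bias: float = 0.1,
        max_iters: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Basic single-tree RRT in a square workspace; returns start..goal waypoints or None."""
    rng = random.Random(seed)
    nodes: List[Point] = [start]
    parent: Dict[int, Optional[int]] = {0: None}
    for _ in range(max_iters):
        # Occasionally sample the goal itself to pull the tree toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        scale = min(1.0, step_size / d)  # extend by at most step_size
        new = (near[0] + scale * (sample[0] - near[0]), near[1] + scale * (sample[1] - near[1]))
        if not collision_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tolerance and collision_free(new, goal, obstacles):
            path = [goal] if new != goal else []
            idx: Optional[int] = len(nodes) - 1
            while idx is not None:  # walk parents back to the root (start)
                path.append(nodes[idx])
                idx = parent[idx]
            return path[::-1]
    return None
```

RRT paths are feasible but not shortest. In practice they are usually smoothed or replaced by an asymptotically optimal variant, whereas A* on a grid returns the shortest grid path at the cost of discretizing the map.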

| Benefits | Detailed Analysis |
|---|---|
| Non-invasive survey and inspection | ARM4CH may carry out detailed Reality Modeling and monitoring without causing any physical disruption to the site, gathering high-resolution images and 3D scans, which are essential for a detailed analysis and documentation of the current state of the CH site. |
| Access to hard-to-reach and complex areas | Human operators are not exposed to missions and roles that might be challenging for them or for conventional surveying equipment. ARM4CH may be well suited to surveying or modeling the deteriorating structures of a monument. |
| Reduced labor costs and survey time | As CH sites often have complex architectures or difficult-to-access areas, ARM4CH may reduce the laborious and time-consuming process of 3D scanning by a human operator. The user takes on a supervisory role in extensive surveys or inspections, which would otherwise be both time-consuming and expensive. |
| Versatility and precision | ARM4CH can be equipped with different sensors and tools, such as cameras, thermal imagers, and LiDAR, allowing it to perform a wide range of monitoring and surveying tasks with high precision, reducing the chances of human error and ensuring high-quality data collection. |
| Consistency and optimization | ARM4CH may perform tasks autonomously and systematically, following predefined routes and schedules, ensuring optimized, consistent, and reliable data collection with high precision. |
| Survey replication | ARM4CH missions may be repeated systematically, as many times as necessary, enabling follow-up scans of the CH site and updates to its Digital Twin or Heritage Building Information Model (H-BIM). |
| Regular monitoring | ARM4CH may enable regular and consistent monitoring, providing up-to-date information on the CH site (e.g., detecting gradual changes or deterioration over time, damage, and structural weaknesses) and facilitating an immediate response to potential problems. |
| Long-term preservation and management | The detailed and systematic data collected by ARM4CH may assist curators and conservation experts in prototyping, planning, and executing restoration projects and in developing a holistic CH management strategy. |

| Barriers | Detailed Analysis |
|---|---|
| Cost | The initial cost of building the ARM4CH core platform, including RAs and sensors, is high, which might be a barrier for surveying companies or CH stakeholders wishing to adopt this methodology. |
| Training and expertise transfer | Executing ARM4CH and managing its data requires an expert in the survey team to supervise the process. This may necessitate additional resources for staff training or for hiring skilled personnel. |
| Data management | If regular surveys are needed, a robust data infrastructure (e.g., a complete DT platform) should be available, since the large volumes of collected data need to be stored, processed, and managed. |
| Ethical and cultural concerns | The use of Industry 4.0 equipment in CH sites might raise ethical or cultural concerns among stakeholders who prefer traditional methods or who have concerns about relying on the latest technology (e.g., malfunctions that may cause unintentional damage to the site). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Giakoumidis, N.; Anagnostopoulos, C.-N. ARM4CH: A Methodology for Autonomous Reality Modelling for Cultural Heritage. Sensors 2024, 24, 4950. https://doi.org/10.3390/s24154950

Giakoumidis N, Anagnostopoulos C-N. ARM4CH: A Methodology for Autonomous Reality Modelling for Cultural Heritage. Sensors. 2024; 24(15):4950. https://doi.org/10.3390/s24154950

Chicago/Turabian Style: Giakoumidis, Nikolaos, and Christos-Nikolaos Anagnostopoulos. 2024. "ARM4CH: A Methodology for Autonomous Reality Modelling for Cultural Heritage" Sensors 24, no. 15: 4950. https://doi.org/10.3390/s24154950