Enhancing Autonomous Truck Navigation with Ultra-Wideband Technology in Industrial Environments

Abstract

1. Introduction

- Initial Setup Cost: The initial cost of implementing UWB technology is high, which can be a barrier to widespread adoption, especially for smaller enterprises or those with limited budgets [19].

2. Traditional RTLS-Based AGV Truck Navigation Systems

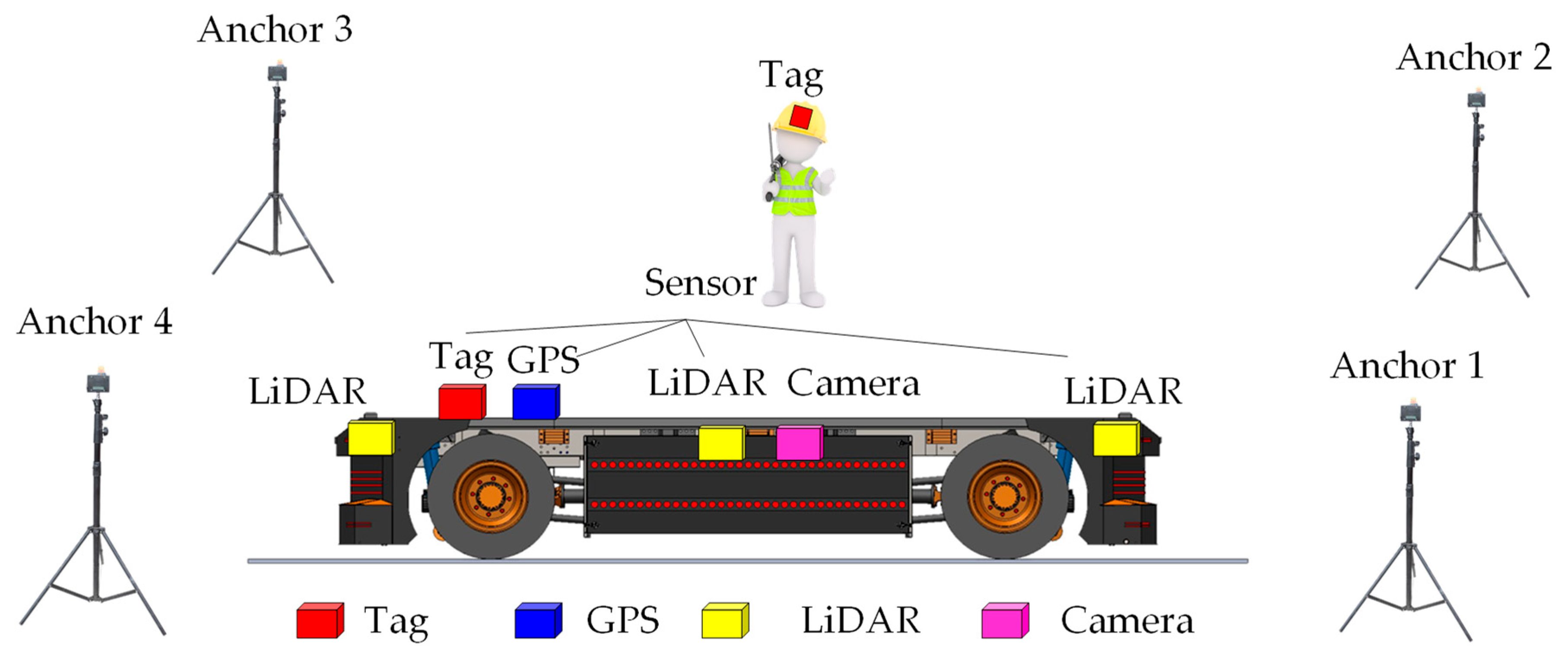

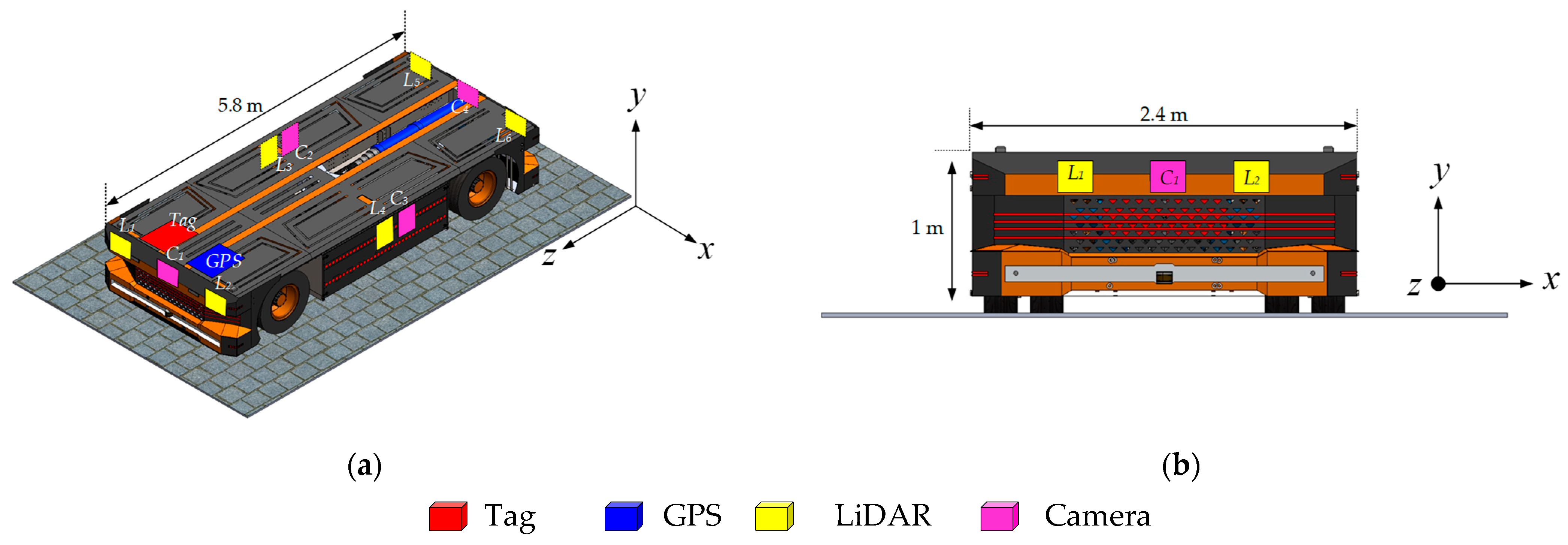

2.1. Sensor Fusion of LiDAR, GPS, Image-Based Positioning, and UWB

2.2. Basic Architecture of a Real-Time Location System

2.3. Navigation System for AGVs Using an FI-RTLS

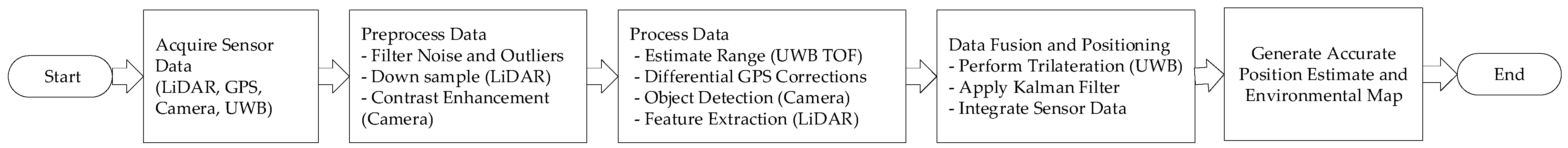

3. Overview of the Proposed FI-RTLS AGV System

3.1. Proposed Architecture

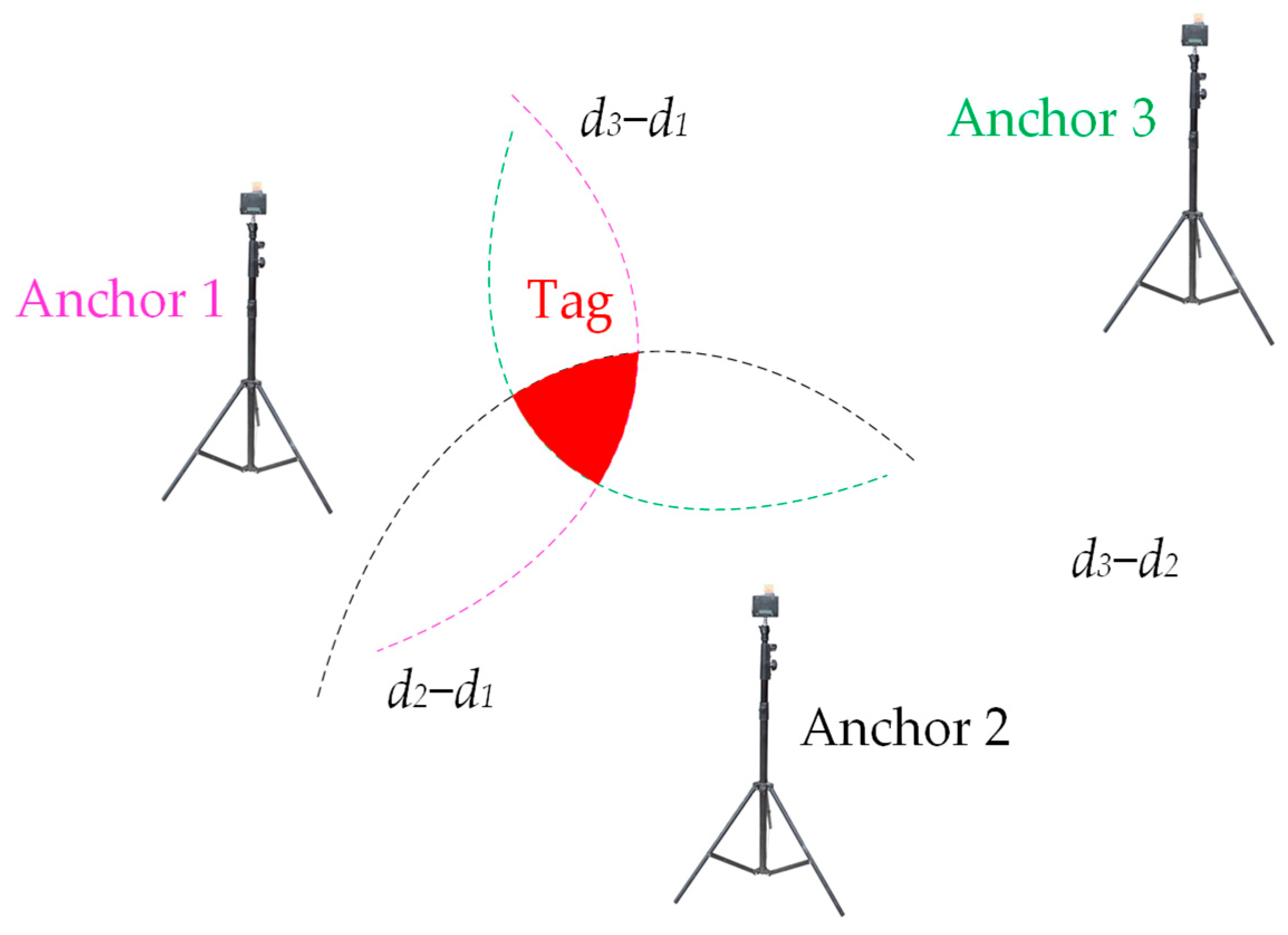

3.2. Implementation of UWB Positioning Using the TDoA Algorithm

| Algorithm 1. Function of the GD–Taylor method. | |
|---|---|
| Input | Locations of anchors (x1, y1), (x2, y2), …, (xn, yn); received time stamps t1, t2, …, tn; maximum number of iterations max_iter; initial location (xinit, yinit) |
| Output | Estimated location of tag (xt, yt) |
| 1 | Calculate d21, d31, …, dn1 by multiplying (t2 − t1), (t3 − t1), …, (tn − t1) by the speed of light and the time resolution; |
| 2 | Set weight to 10^(−10); |
| 3 | Set (x, y) to (xinit, yinit); |
| 4 | while times < max_iter do |
| 5 | d1, d2, …, dn are the distances from anchors to (x, y); |
| 6 | use (8) and (11) to calculate and ; |
| 7 | Set to (+); |
| 8 | Set weight to (weight+ + ); |
| 9 | Set x to (x+ /(weight)^(1/2)); |
| 10 | Set y to (y+ /(weight)^(1/2)); |
| 11 | times++; |
| 12 | if ((, x2+ , y2)/weight)^(1/2) < 0.001 then |
| 13 | break |
| 14 | end if |
| 15 | end while |
| 16 | return (x, y) |
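
The loop structure of Algorithm 1 can be illustrated with a minimal Python sketch. Equations (8) and (11) are not reproduced in this section, so the update below is an assumption: it uses the plain gradient of the least-squares TDoA residual, scaled by the square root of an accumulated squared-gradient weight (an AdaGrad-style step), and it does not include the Taylor-series term. The function names `range_differences` and `locate_tag` and the parameter `tick_seconds` are hypothetical and chosen for illustration only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_differences(timestamps, tick_seconds):
    """Step 1: convert receive time stamps (in ticks) to d_i1 = c * (t_i - t_1).

    tick_seconds is the duration of one time-stamp tick (hypothetical parameter).
    """
    t1 = timestamps[0]
    return [SPEED_OF_LIGHT * tick_seconds * (t - t1) for t in timestamps[1:]]


def locate_tag(anchors, d_i1, init, max_iter=20000, tol=1e-3):
    """Estimate (x, y) from range differences d_i1 (anchor i minus anchor 1).

    Assumption: gradient of 0.5 * sum(residual^2) with an accumulated-weight
    step size; this stands in for Eqs. (8) and (11), which are not shown here.
    """
    x, y = init
    weight = 1e-10  # step 2: small initial weight avoids division by zero
    ax1, ay1 = anchors[0]
    for _ in range(max_iter):
        # Step 5: distances from every anchor to the current estimate.
        d = [max(math.hypot(x - ax, y - ay), 1e-12) for ax, ay in anchors]
        gx = gy = 0.0
        for (ax, ay), di, meas in zip(anchors[1:], d[1:], d_i1):
            residual = (di - d[0]) - meas
            gx += residual * ((x - ax) / di - (x - ax1) / d[0])
            gy += residual * ((y - ay) / di - (y - ay1) / d[0])
        # Step 8: accumulate squared gradient components into the weight.
        weight += gx * gx + gy * gy
        scale = math.sqrt(weight)
        # Steps 9-10: move against the gradient, scaled by sqrt(weight).
        x -= gx / scale
        y -= gy / scale
        # Step 12: stop when the scaled update becomes negligible.
        if math.sqrt((gx * gx + gy * gy) / weight) < tol:
            break
    return x, y
```

With four anchors at the corners of a 10 m square and noise-free range differences, a call such as `locate_tag(anchors, d_i1, (5.0, 5.0))` should move the estimate from the center of the square toward the tag. The accumulated weight shrinks the step size automatically, which is the design choice the weight variable in Algorithm 1 appears to serve.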

3.3. Extended Kalman Filter (EKF) Node

- Empirical Analysis: For each sensor (LiDAR, GPS, camera, UWB), we collect extensive data under controlled conditions. This involves multiple testing scenarios to capture various operational states.

- Covariance Estimation: We estimate the noise covariance matrices (Q for process noise and R for measurement noise) based on the collected data. These matrices reflect the statistical properties of the sensor noise.

- Bias and Variance Identification: Calibration procedures are performed to identify and correct systematic biases and measure the variance in sensor outputs. This includes static and dynamic calibration techniques to ensure the sensors provide accurate readings.

- Dynamic Calibration: We conduct continuous monitoring and recalibration during operation to account for environmental changes and sensor aging.

- ROS robot_localization Package: We employ the ROS robot_localization package, which integrates data from multiple sensors using an EKF. The package supports per-sensor noise parameters, allowing the EKF to account for the statistical properties of each sensor's data.

- Parameter Specification: We specify the sensor noise parameters in the configuration files so that the EKF can account for the different noise characteristics of each sensor type.

- Cross-Validation with Ground Truth Data: We perform cross-validation by comparing the EKF outputs with ground truth data obtained from high-precision reference systems. This helps to validate the accuracy of the EKF implementation.

- Dynamic Adjustment: Based on validation results, we dynamically adjust the noise characteristics in the EKF to maintain optimal performance. This iterative process helps to refine the state estimates continuously.
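
As a rough illustration of the bias identification, covariance estimation, and ground-truth validation steps listed above, the following Python sketch computes a sensor's mean bias and 2×2 measurement noise covariance from static samples taken at a known reference position, plus an RMSE against ground truth. It is a minimal sketch under stated assumptions, not the procedure used in the study: the function names `bias_and_covariance` and `rmse` are hypothetical, positions are assumed to be 2D (x, y) tuples in meters, and the noise is assumed stationary during the recording.

```python
import math
from statistics import fmean


def bias_and_covariance(samples, reference):
    """Return ((bias_x, bias_y), 2x2 covariance) of static position samples.

    samples: list of (x, y) measurements taken while the sensor is stationary.
    reference: known (x, y) position from a high-precision reference system.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate a covariance")
    ex = [sx - reference[0] for sx, _ in samples]
    ey = [sy - reference[1] for _, sy in samples]
    bx, by = fmean(ex), fmean(ey)
    # Unbiased sample covariance of the measurement error (candidate for R).
    cxx = sum((e - bx) ** 2 for e in ex) / (n - 1)
    cyy = sum((e - by) ** 2 for e in ey) / (n - 1)
    cxy = sum((a - bx) * (b - by) for a, b in zip(ex, ey)) / (n - 1)
    return (bx, by), [[cxx, cxy], [cxy, cyy]]


def rmse(estimates, ground_truth):
    """Root-mean-square position error between paired 2D points."""
    sq = [(ex - gx) ** 2 + (ey - gy) ** 2
          for (ex, ey), (gx, gy) in zip(estimates, ground_truth)]
    return math.sqrt(fmean(sq))
```

The diagonal of the returned covariance gives per-axis variances that could be entered as measurement noise parameters for the corresponding sensor, and `rmse` gives a single figure for comparing filter output with the reference trajectory before and after the noise parameters are adjusted.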

4. Results and Discussion

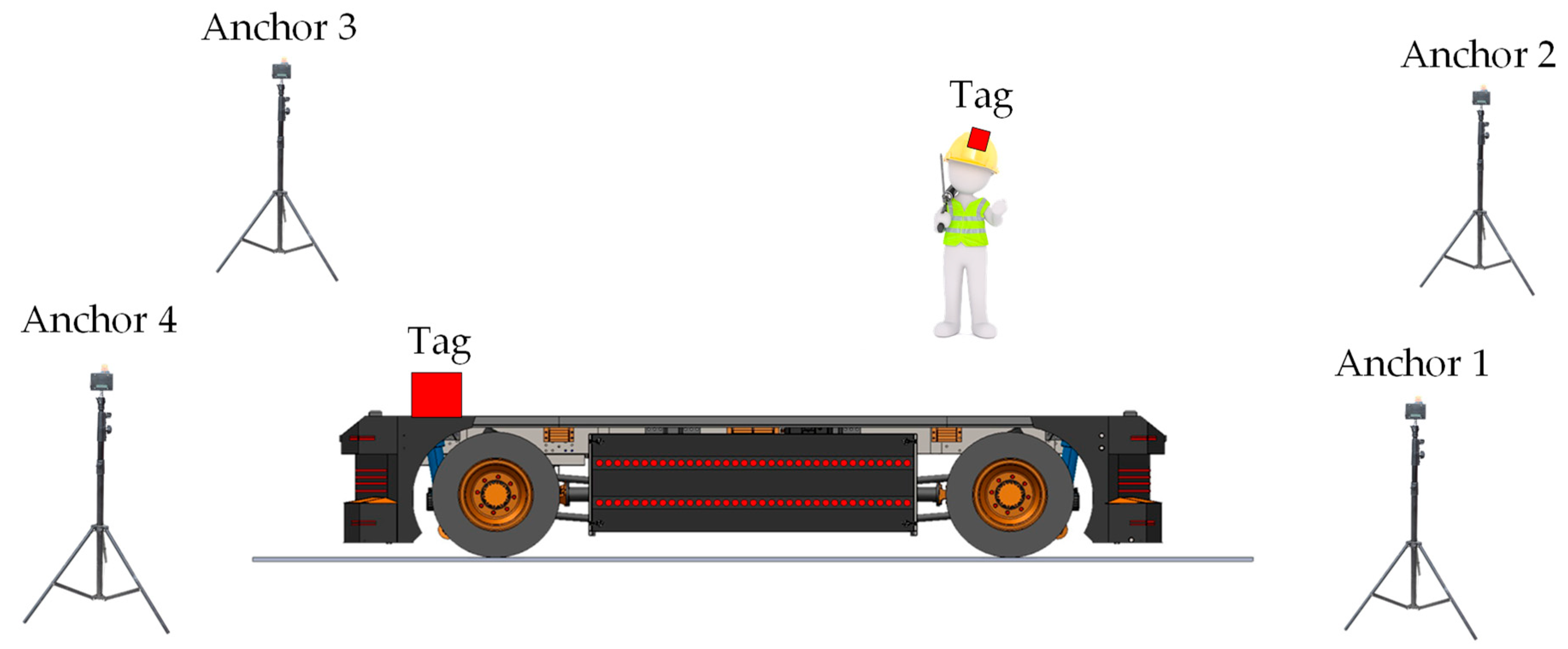

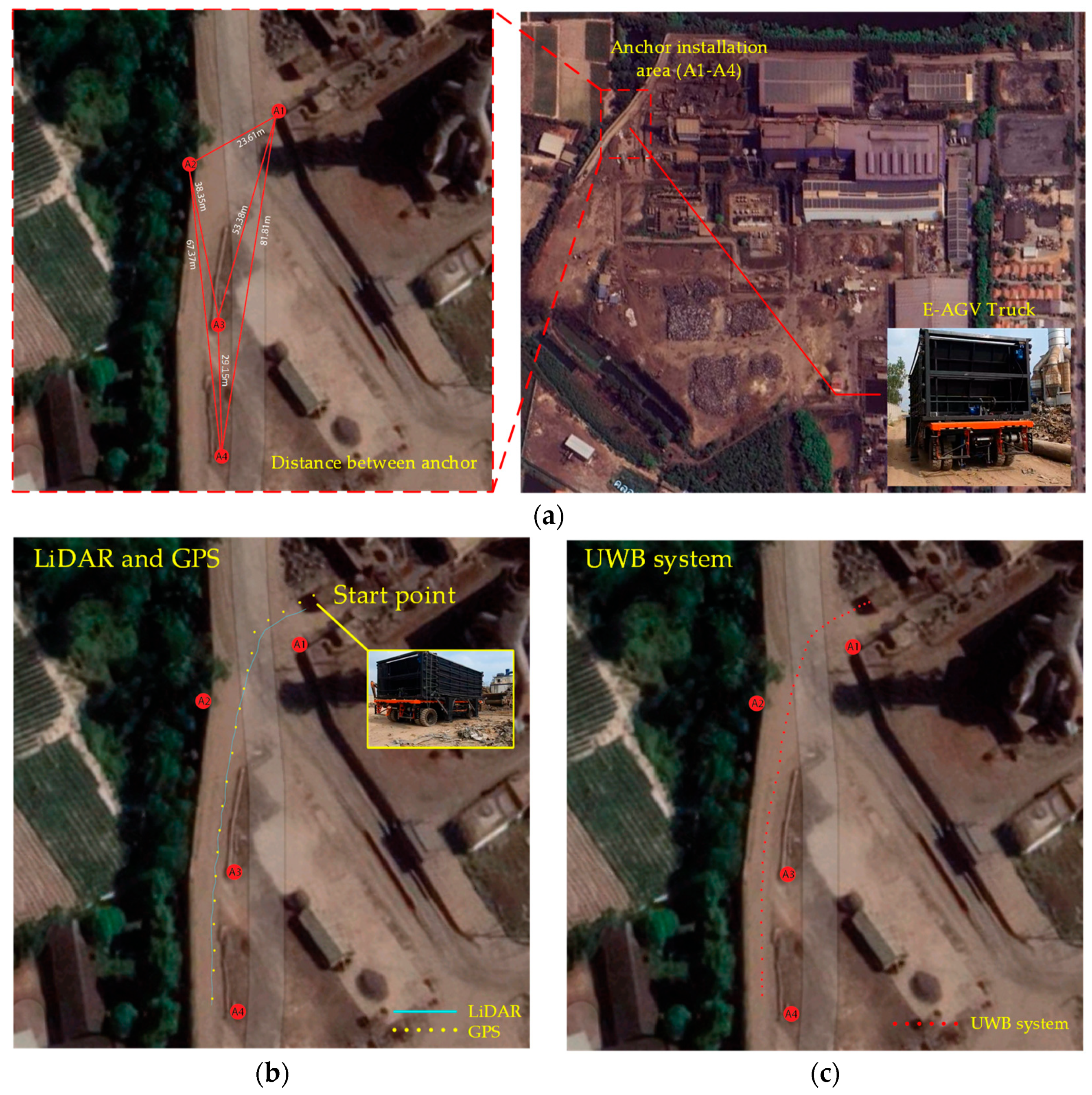

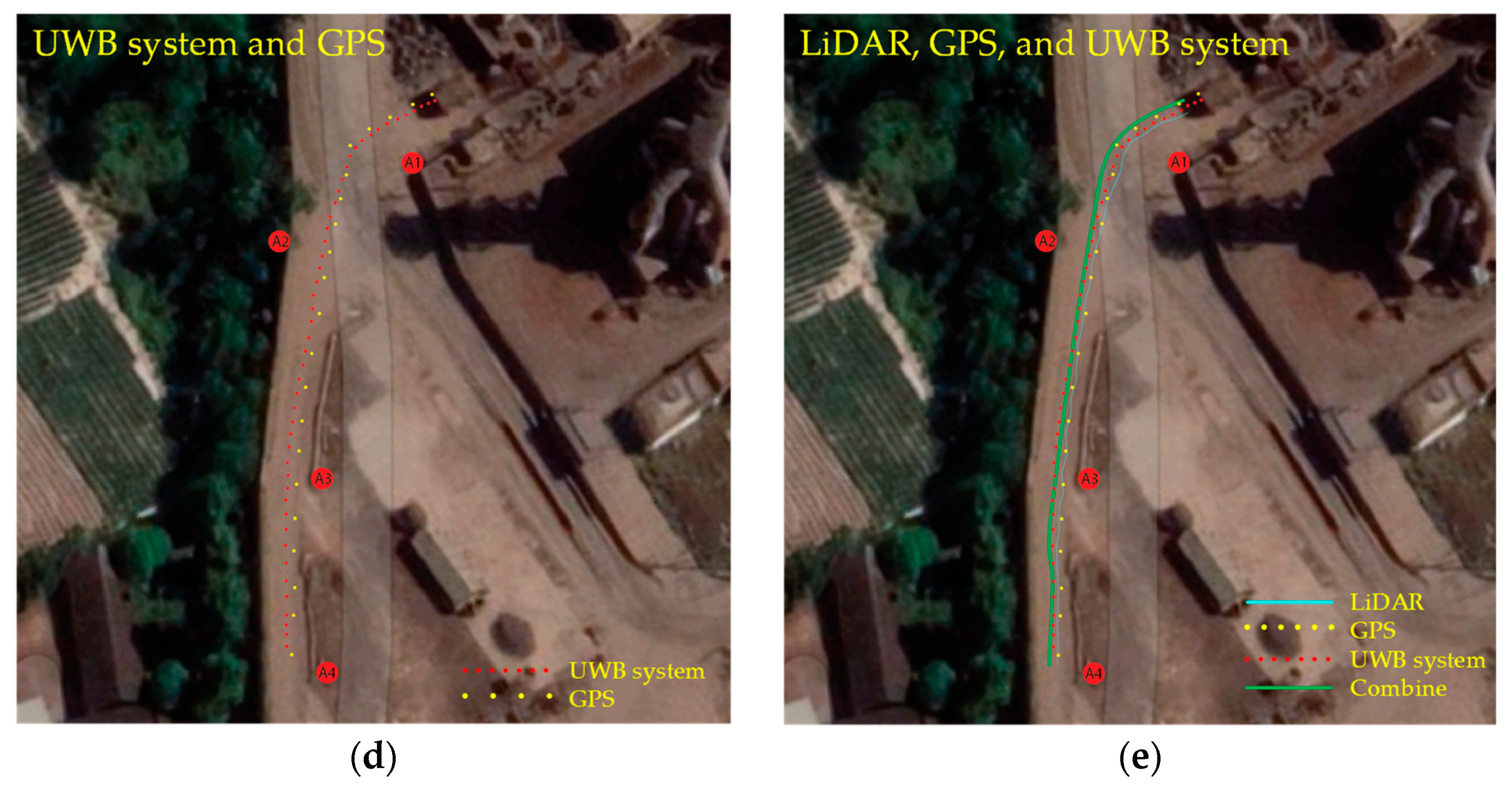

4.1. UWB System Configuration

- (1)

- (2) Preprocessing: The raw data are preprocessed to remove noise and outliers, using filtering techniques such as band-pass filtering to eliminate out-of-band noise components [39].

- (3) Range Estimation: The preprocessed signals are used to estimate the distance between the UWB transmitter and the tag receiver. The time of flight (TOF) is calculated and multiplied by the speed of light to obtain the range [45].

- (4)

- (5) Error Correction: To enhance accuracy, error correction algorithms such as the Kalman filter are applied to smooth the position estimates and reduce the impact of multipath effects and other inaccuracies. Together, these steps yield accurate and reliable UWB signal processing and precise position estimation in industrial environments [48,50].
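
The range estimation and error correction steps can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions rather than the filter used in the study: `tof_to_range` assumes a one-way time of flight in seconds, and `kalman_smooth` is a scalar Kalman filter with a constant-position model applied to one coordinate at a time. The function names and the default variances `process_var` and `meas_var` are hypothetical values chosen for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_to_range(tof_seconds):
    """Range in meters from a one-way time of flight in seconds."""
    return SPEED_OF_LIGHT * tof_seconds


def kalman_smooth(measurements, process_var=1e-3, meas_var=1e-2):
    """Smooth a sequence of scalar measurements with a 1D Kalman filter.

    Constant-position model: the state is predicted to stay where it is,
    with process_var added to the uncertainty at every step.
    """
    if not measurements:
        return []
    estimate = measurements[0]   # initialize from the first measurement
    variance = meas_var
    smoothed = []
    for z in measurements:
        variance += process_var                 # predict
        gain = variance / (variance + meas_var) # Kalman gain
        estimate += gain * (z - estimate)       # update with the innovation
        variance *= (1.0 - gain)
        smoothed.append(estimate)
    return smoothed
```

Applying `kalman_smooth` separately to the x and y coordinates of successive position fixes damps isolated jumps such as those caused by multipath. A larger `meas_var` relative to `process_var` gives heavier smoothing at the cost of slower response to real motion.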

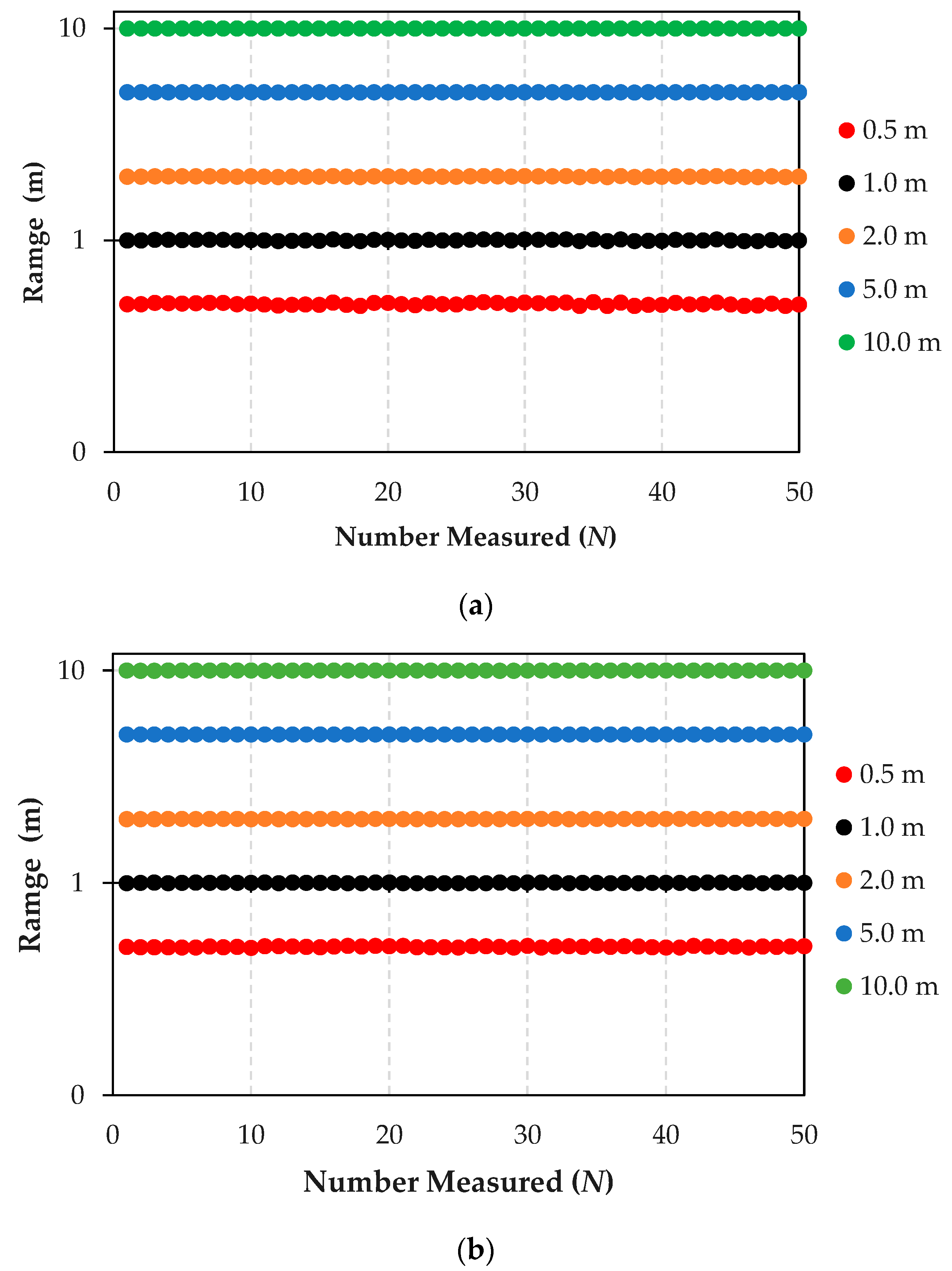

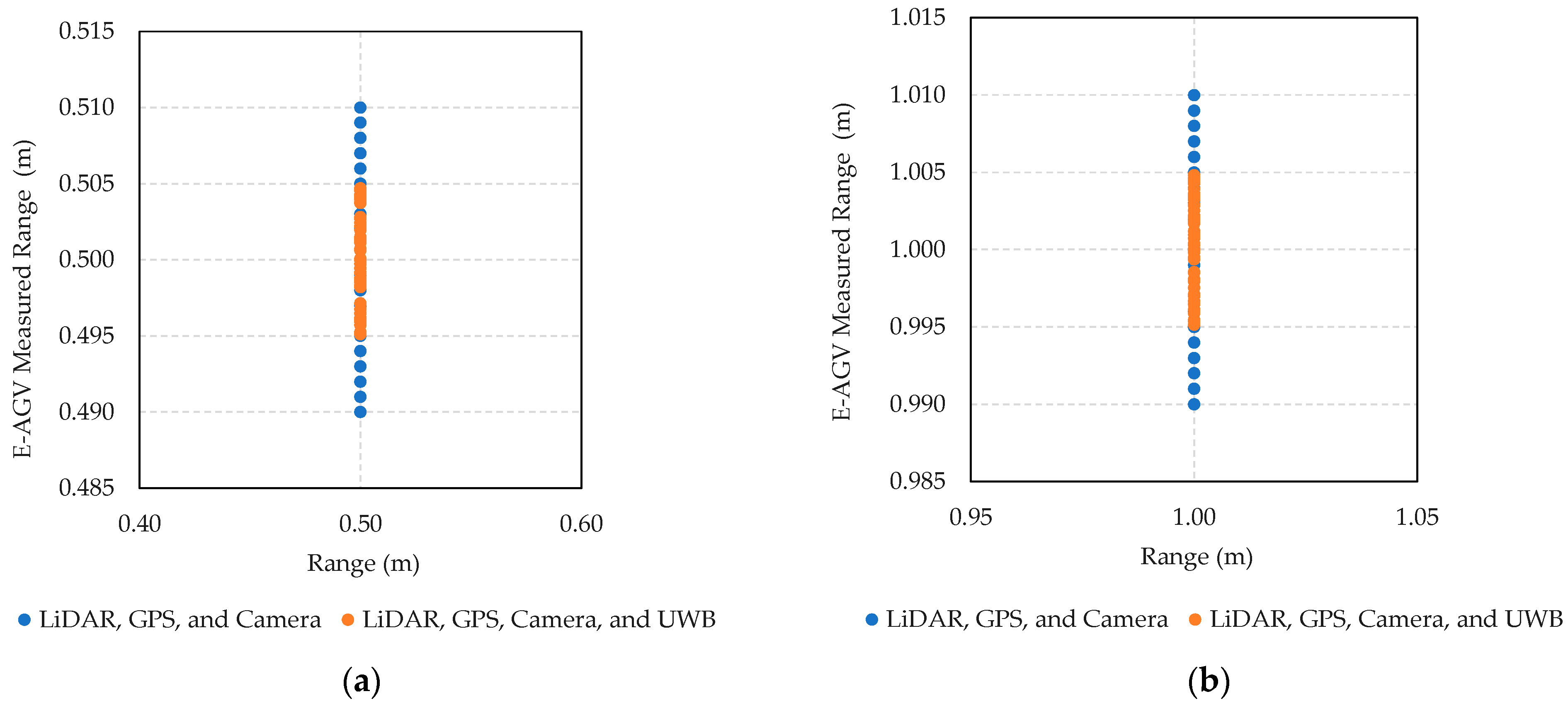

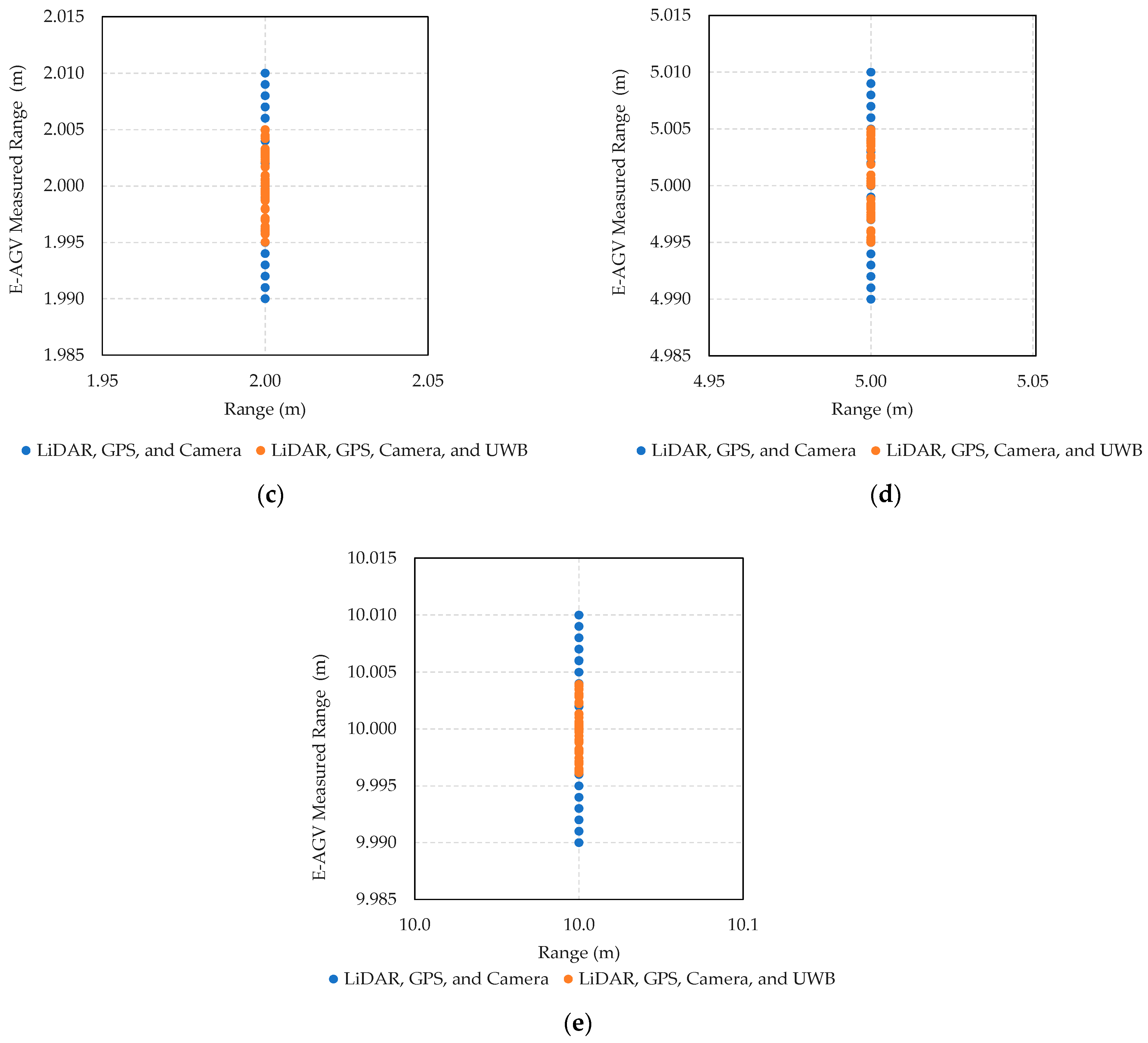

4.2. First Measurement Campaign: Static Measurements with an E-AGV Truck Prototype

4.3. Second Measurement Campaign: Static Measurements with an E-AGV Truck Prototype

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Smith, J.A.; Brown, B.C. Ultra-Wideband Positioning Systems for Industrial Environments. Ind. J. Nav. Tech. 2021, 15, 24–45.

- Johnson, L.; Lee, M.K. Challenges of GPS Navigation in Urban and Indoor Settings. J. Geo. Res. 2020, 112, 334–350.

- Shi, D.; Mi, H.; Collins, E.G.; Wu, J. An indoor low-cost and high-accuracy localization approach for AGVs. IEEE Access 2020, 8, 50085–50090.

- Jiang, J.; Guo, Y.; Liao, W. Research on AGV guided by real-time locating system (RTLS) for material distribution. Int. J. Control Autom. 2015, 8, 213–226.

- Thompson, H.; Walters, T.Y. An Overview of LiDAR Technology and Its Automotive Applications. Automot. Innov. Rev. 2019, 8, 77–89.

- Kirsch, C.; Röhrig, C. Global localization and position tracking of an automated guided vehicle. IFAC Proc. 2011, 44, 14036–14041.

- Wang, X.; Zhang, Y. Improving Accuracy in Positioning Systems Using Ultra-Wideband Technology. Sens. Tech. J. 2018, 14, 1012–1029.

- White, P.R.; Green, D.F. Integration Challenges of UWB in Autonomous Vehicles. J. Auto. Eng. 2022, 16, 230–245.

- Kirsch, C.; Künemund, F.; He, D.; Röhrig, C. Comparison of localization algorithms for AGVs in industrial environments. In Proceedings of the 7th German Conference on Robotics (ROBOTIK), Munich, Germany, 21–22 January 2012; pp. 183–188.

- Davis, K.; Murphy, S. UWB vs. Traditional Positioning Technologies in Industrial Autonomous Vehicles. Ind. Auto. J. 2019, 9, 88–107.

- Pérez-Rubio, M.C.; Losada-Gutiérrez, C.; Espinosa, F.; Macias-Guarasa, J.; Tiemann, J.; Eckermann, F.; Wietfeld, C.; Katkov, M.; Huba, S.; Ureña, J.M.; et al. A realistic evaluation of indoor robot position tracking systems: The IPIN 2016 competition experience. Measurement 2019, 135, 151–162.

- Santos, E.R.S.; Azpurua, H.; Rezeck, P.A.F.; Corrêa, M.F.S.; Vieira, M.A.M.; Freitas, G.M.; Macharet, D.G. Localization using ultra wideband and IEEE 802.15.4 radios with nonlinear Bayesian filters: A comparative study. J. Intell. Robotic Syst. 2020, 99, 571–587.

- Anderson, G.; Thompson, J. The Economic Implications of Implementing Ultra-Wideband Technology in Industrial Settings. Econ. Ind. Tech. Rev. 2020, 5, 59–73.

- Patel, A.; Singh, S. A Comparative Study of RF Interference Effects on UWB and GPS Technologies. J. Commun. Tech. 2021, 22, 196–213.

- Zhu, X.; Yi, J.; Cheng, J.; He, L. Adapted error map-based mobile robot UWB indoor positioning. IEEE Trans. Instrum. Meas. 2020, 69, 6336–6350.

- Luo, C.; Li, W.; Fan, X.; Yang, H.; Ni, J.; Zhang, X.; Xin, G.; Shi, P. Positioning technology of mobile vehicle using self-repairing heterogeneous sensor networks. J. Netw. Comput. Appl. 2017, 93, 110–122.

- Robertson, T.; Carter, H. Operational Efficiency Enhancements with UWB in Autonomous Industrial Trucks. Ind. Log. Rev. 2020, 12, 142–155.

- Vasilyev, P.; Pearson, S.; El-Gohary, M.; Aboy, M.; McNames, J. Inertial and time-of-arrival ranging sensor fusion. Gait Posture 2017, 54, 1–7.

- Ding, G.; Lu, H.; Bai, J.; Qin, X. Development of a high precision UWB/vision-based AGV and control system. In Proceedings of the 5th International Conference on Control and Robotics Engineering (ICCRE), Nanjing, China, 20–23 April 2020; pp. 99–103.

- Benini, A.; Mancini, A.; Longhi, S. An IMU/UWB/vision-based extended Kalman filter for mini-UAV localization in indoor environment using 802.15.4a wireless sensor network. J. Intell. Robotic Syst. 2013, 70, 461–476.

- An, X.; Zhao, S.; Cui, X.; Shi, Q.; Lu, M. Distributed multi-antenna positioning for automatic-guided vehicle. Sensors 2020, 20, 1155.

- Wiebking, L.; Vossiek, M.; Reindl, L.; Christmann, M.; Mastela, D. Precise local positioning radar with implemented extended Kalman filter. In Proceedings of the European Conference on Wireless Technology, Munich, Germany, 7–9 October 2003; pp. 459–462.

- Chu, Y.; Ganz, A. A UWB-based 3D location system for indoor environments. In Proceedings of the 2nd International Conference on Broadband Networks, Boston, MA, USA, 3–7 October 2005; Volume 2, pp. 1147–1155.

- Mastela, D.; Reindl, L.; Wiebking, L.; Kawalkiewicz, M.; Zander, T. Angle tracking using FMCW radar-based localization system. In Proceedings of the International Radar Symposium, Krakow, Poland, 24–26 May 2006; pp. 1–4.

- O’Neil, M.; Jacobs, L. System Integration Strategies for UWB in Industrial Autonomous Systems. Sys. Eng. J. 2019, 13, 21–37.

- Kim, Y.; Cho, J. The Role of UWB Technology in the Future of Industrial Automation. Future Ind. Tech. J. 2021, 7, 200–218.

- Lee, A.; Johnson, R. Testing and Analysis of UWB Systems Under Various Industrial Conditions. J. Ind. Tests. 2022, 10, 50–65.

- Morgan, C.; Patel, R. Safety Implications of Autonomous Vehicles: The Potential of UWB Technology. Saf. Sci. J. 2020, 18, 45–60.

- Nash, B.; Kramer, F. High Data Transmission Rates with UWB: Benefits for Industrial Applications. Comm. Tech. Mag. 2019, 11, 156–172.

- Edwards, S.; Lin, T. Power Management in UWB Systems for Efficient Industrial Applications. Energy Manag. J. 2018, 9, 98–111.

- Tragas, P.; Kalis, A.; Papadias, C.; Ellinger, F.; Eickhoff, R.; Ussmuller, T.; Mosshammer, M.; Huemer, A.; Dabek, D.; Doumenis, A.; et al. RESOLUTION: Reconfigurable systems for mobile local communication and positioning. In Proceedings of the 16th IST Mobile and Wireless Communications Summit, Budapest, Hungary, 1–5 July 2007; pp. 216–220.

- Ellinger, F.; Eickhoff, R.; Ziroff, A.; Hütner, J.; Gierlich, R.; Carls, J.; Böck, G. European project RESOLUTION-local positioning systems based on novel FMCW radar. In Proceedings of the IEEE MTT-S International Microwave Symposium Digest, Honolulu, HI, USA, 29 October–1 November 2007; pp. 499–502.

- Greene, J.H.; Matthews, P.L. Real-world Application of UWB in Industrial AV: A Case Study. Case Stud. Ind. App. 2022, 4, 134–145.

- Black, T.; White, S. Advances in UWB Technology for Precise Positioning in Industrial Environments. Adv. Tech. J. 2021, 19, 84–99.

- Röhrig, C.; Spieker, S. Tracking of transport vehicles for warehouse management using a wireless sensor network. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 22–26 October 2008; pp. 3260–3265.

- Adler, G.; Marks, R. Environmental Challenges in LIDAR and UWB Operations. Env. Res. J. 2019, 15, 27–42.

- Tate, K.; Lew, H. Cost Analysis of Deploying UWB Technologies in Existing Industrial Infrastructures. Fin. Rev. Ind. Tech. 2020, 6, 170–188.

- Liu, L.; Manli, E.; Wang, Z.; Zhou, M. A 3D self-positioning method for wireless sensor nodes based on linear FMCW and TFDA. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; pp. 2990–2995.

- Cho, H.; Lee, C.-W.; Ban, S.-J.; Kim, S.-W. An enhanced positioning scheme for chirp spread spectrum ranging. Expert Syst. Appl. 2010, 37, 5278–5735.

- Liu, L.; Manli, E. Improve the positioning accuracy for wireless sensor nodes based on TFDA and TFOA using data fusion. In Proceedings of the International Conference on Network, Sensor and Control (ICNSC), Chicago, IL, USA, 10–12 April 2010; pp. 32–37.

- Zhou, Y.; Law, C.L.; Chin, F. Construction of local anchor map for indoor position measurement system. IEEE Trans. Instrum. Meas. 2010, 59, 1986–1988.

- Zamora-Cadenas, L.; Velez, I.; Sierra-Garcia, J.E. UWB-Based Safety System for Autonomous Guided Vehicles Without Hardware on the Infrastructure. IEEE Access 2021, 21, 3485.

- Kang, D.; Namgoong, Y.; Yang, S.; Choi, S.; Shin, Y. A simple asynchronous UWB position location algorithm based on single round-trip transmission. In Proceedings of the 8th International Conference on Advanced Communication Technology, Phoenix Park, Republic of Korea, 20–22 February 2006; Volume 3, pp. 1458–1461.

- Nam, Y.; Lee, H.; Kim, J.; Park, K. Two-way ranging algorithms using estimated frequency offsets in WPAN and WBAN. In Proceedings of the 3rd International Conference on Convergence and Hybrid Information Technology, Busan, Republic of Korea, 11–13 November 2008; Volume 1, pp. 842–847.

- Arrue, N.; Losada, M.; Zamora-Cadenas, L.; Jimenez-Irastorza, A.; Velez, I. Design of an IR-UWB indoor localization system based on a novel RTT ranging estimator. In Proceedings of the 1st International Conference on Sensor Device Technologies and Applications, Venice, Italy, 18–25 July 2010; pp. 52–57.

- D’Amico, A.A.; Taponecco, L.; Mengali, U. Ultra-wideband TOA estimation in the presence of clock frequency offset. IEEE Trans. Wirel. Commun. 2013, 12, 1606–1616.

- Sharma, S.; Bhatia, V.; Gupta, A. Joint symbol and ToA estimation for iterative transmitted reference pulse cluster UWB system. IEEE Syst. J. 2019, 13, 2629–2640.

- Joung, J.; Jung, S.; Chung, S.; Jeong, E. CNN-based TxRx distance estimation for UWB system localization. Electron. Lett. 2019, 55, 938–940.

- Karapistoli, E.; Pavlidou, F.; Gragopoulos, I.; Tsetsinas, I. An overview of the IEEE 802.15.4a Standard. IEEE Commun. Mag. 2010, 48, 47–53.

- Alarifi, A.; Al-Salman, A.; Alsaleh, M.; Alnafessah, A.; Al-Hadhrami, S.; Al-Ammar, M.; Al-Khalifa, H. Ultra wideband indoor positioning technologies: Analysis and recent advances. Sensors 2016, 16, 707.

- Muthukrishnan, K.; Hazas, M. Position estimation from UWB pseudorange and angle-of-arrival: A comparison of non-linear regression and Kalman filtering. In Proceedings of the 4th International Symposium on Location and Context Awareness (LoCA), Berlin, Germany, 7–8 May 2009; pp. 222–239.

- Chen, Y.-Y.; Huang, S.-P.; Wu, T.-W.; Tsai, W.-T.; Liou, C.-Y.; Mao, S.-G. UWB System for Indoor Positioning and Tracking with Arbitrary Target Orientation, Optimal Anchor Location, and Adaptive NLOS Mitigation. IEEE Trans. Veh. Technol. 2020, 69, 9304–9314.

- Basnayake, C.; Haas, C.; Ridenour, J.; Young, M.; Zemp, R.; Jayakody, J.; Samarakoon, S. Ultra-Wideband Positioning Sensor with Application to an Autonomous Ultraviolet-C Disinfection Vehicle. Sensors 2020, 20, 6837.

- Yang, K.; An, J.; Bu, X.; Sun, G. Constrained Total Least-Squares Location Algorithm Using Time-Difference-of-Arrival Measurements. IEEE Trans. Veh. Technol. 2010, 59, 1558–1562.

- Li, A.; Luan, F. An Improved Localization Algorithm Based on CHAN with High Positioning Accuracy in NLOS-WGN Environment. In Proceedings of the 2018 10th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 25–26 August 2018; Volume 1, pp. 332–335.

- Cheng, Y.; Zhou, T. UWB Indoor Positioning Algorithm Based on TDOA Technology. In Proceedings of the 2019 10th International Conference on Information Technology in Medicine and Education (ITME), Qingdao, China, 23–25 August 2019; pp. 777–782.

- Li, L.; Liu, Z. Analysis of TDOA Algorithm about Rapid Moving Target with UWB Tag. In Proceedings of the 2017 9th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 26–27 August 2017; Volume 1, pp. 406–409.

- Baidoo-Williams, H.E.; Dasgupta, S.; Mudumbai, R.; Bai, E. On the Gradient Descent Localization of Radioactive Sources. IEEE Signal Process. Lett. 2013, 20, 1046–1049.

- Yağmur, N.; Alagöz, B.B. Comparison of Solutions of Numerical Gradient Descent Method and Continuous Time Gradient Descent Dynamics and Lyapunov Stability. In Proceedings of the 2019 27th Signal Processing and Communications Applications Conference (SIU), Sivas, Turkey, 24–26 April 2019; pp. 1–4.

- Zhang, A.; Lipton, Z.; Li, M.; Smola, A. Dive Into Deep Learning. Available online: http://www.d2l.ai (accessed on 31 July 2021).

- Smith, G.L.; Schmidt, S.F.; McGee, L.A. Application of Statistical Filter Theory to the Optimal Estimation of Position and Velocity on Board a Circumlunar Vehicle; National Aeronautics and Space Administration: Washington, DC, USA, 1962.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; University of North Carolina: Chapel Hill, NC, USA, 1995.

- Odelson, B.J.; Rajamani, M.R.; Rawlings, J.B. A new autocovariance least-squares method for estimating noise covariances. Automatica 2006, 42, 303–308.

- Decawave. DW1000 User Manual Version 2.18. Available online: https://www.decawave.com/dw1000/usermanual/ (accessed on 30 October 2023).

- Ridolfi, M.; Van De Velde, S.; Steendam, H.; De Poorter, E. Analysis of the scalability of UWB indoor localization solutions for high user densities. Sensors 2018, 18, 1875. Available online: https://www.mdpi.com/1424-8220/18/6/1875 (accessed on 30 October 2023).

| Parameter | Value |
|---|---|
| Carrier frequency | 3.9936 GHz |
| Bandwidth | 500 MHz |
| Channel | 5 |
| Bitrate | 6.8 Mbps |
| PRF (pulse repetition frequency) | 16 MHz |
| Preamble length | 1024 symbols |
| Preamble code | 3 |
| SFD (start of frame delimiter) | 8 symbols |
| Latency | 200 ms |
| Positioning rate | 5 Hz |
| Tx power | −14 dBm |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Waiwanijchakij, P.; Chotsiri, T.; Janpangngern, P.; Thongsopa, C.; Thosdeekoraphat, T.; Santalunai, N.; Santalunai, S. Enhancing Autonomous Truck Navigation with Ultra-Wideband Technology in Industrial Environments. Sensors 2024, 24, 4988. https://doi.org/10.3390/s24154988