Nonintrusive and Effective Volume Reconstruction Model of Swimming Sturgeon Based on RGB-D Sensor

Abstract

1. Introduction

2. Materials and Methods

2.1. Overall Framework

2.2. Calibration

2.2.1. Calibration Devices

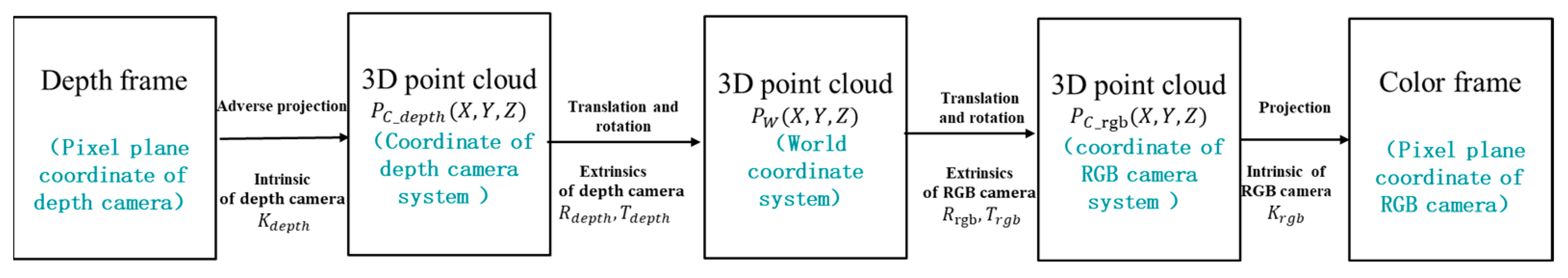

2.2.2. Aligning the Depth Frame to the Color Frame

2.3. Fish Segmentation

2.3.1. Image Acquisition System and Dataset Creation

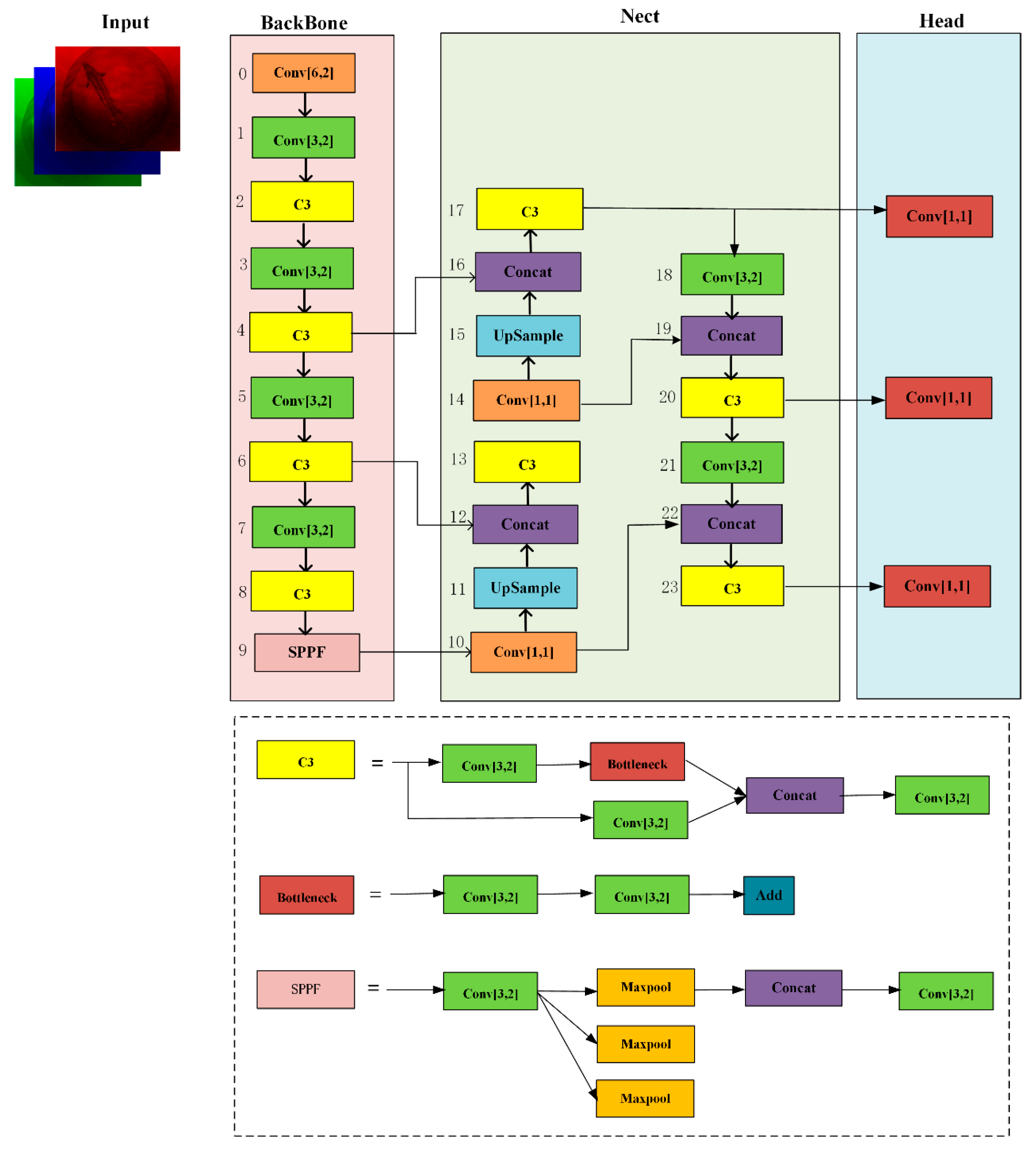

2.3.2. YOLOv5s Sturgeon Segmentation Algorithm

2.4. Estimation of Sturgeon Mass

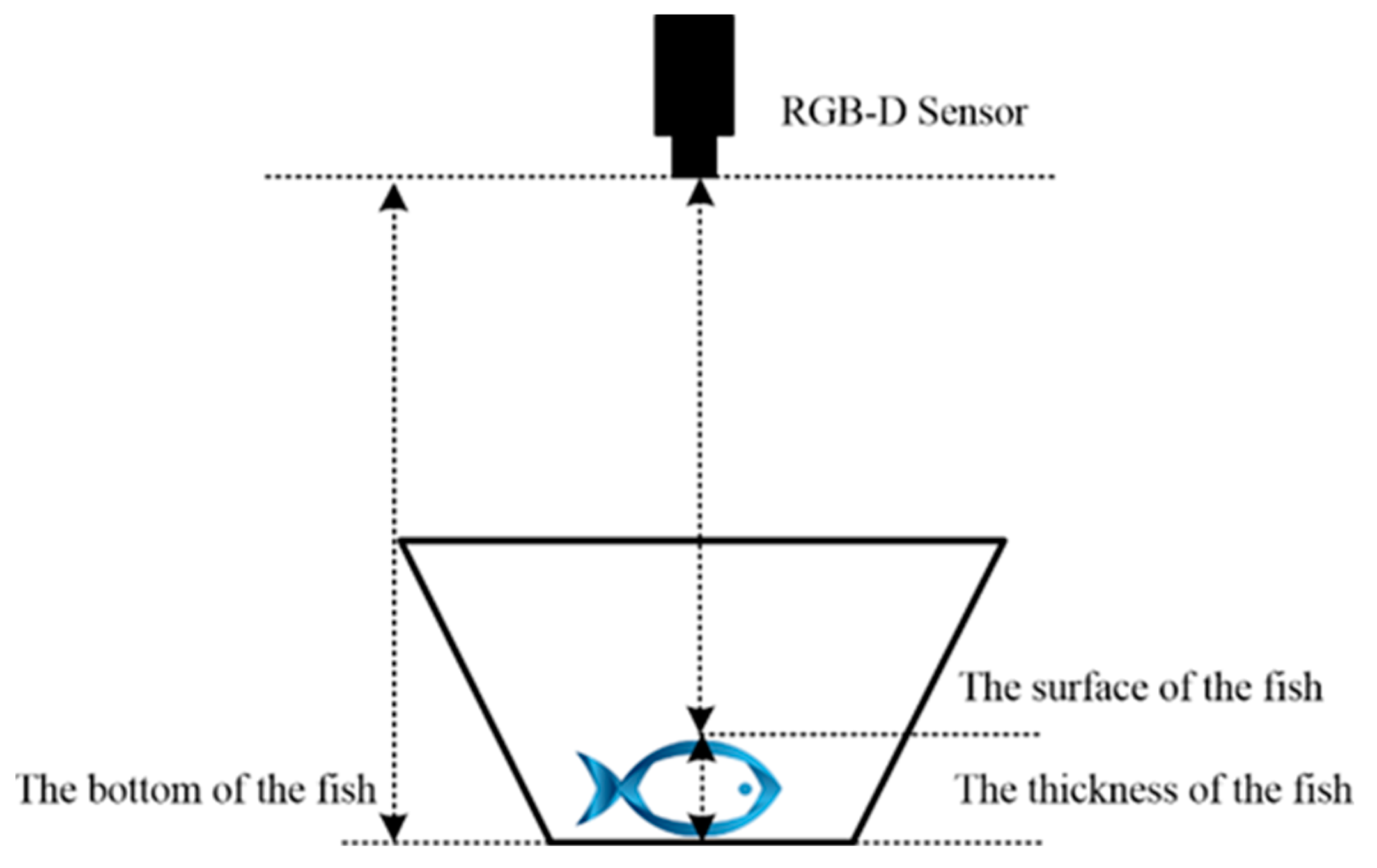

2.4.1. Reconstruction of Sturgeon Volume

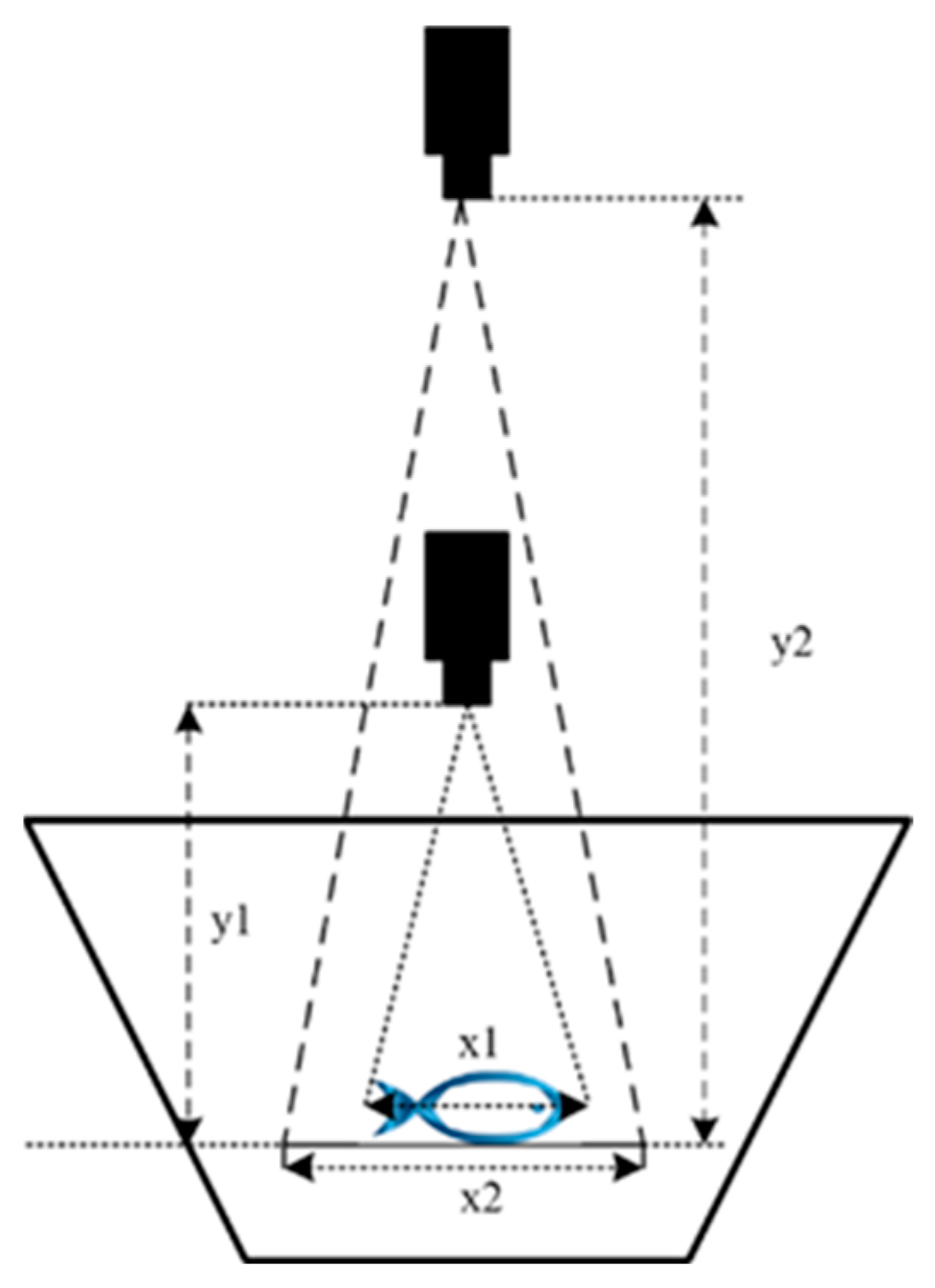

2.4.2. Correction of Volume Ratio Changes Caused by Lens Distance
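As a hedged illustration of why distance from the lens changes the apparent size of a fish, the sketch below assumes an ideal pinhole camera with hypothetical intrinsics `FX` and `FY` (in pixels). Under that assumption, one pixel at depth Z images a patch of about (Z/fx)(Z/fy) square metres, so the same fish covers four times fewer pixels when it swims twice as far away, while summing per-pixel footprints over the segmentation mask gives a distance-independent area. The per-pixel thickness map in `masked_volume` is a hypothetical input; this is a geometric sketch, not the correction procedure used by the authors.

```python
import numpy as np

# Hypothetical pinhole intrinsics in pixels; substitute calibrated values.
FX, FY = 600.0, 600.0


def pixel_footprint(depth_m, fx=FX, fy=FY):
    """Metric area (m^2) imaged by one pixel at depth Z: (Z/fx) * (Z/fy)."""
    z = np.asarray(depth_m, dtype=float)
    return (z / fx) * (z / fy)


def expected_pixel_count(area_m2, depth_m, fx=FX, fy=FY):
    """Pixels covered by a fronto-parallel patch at depth Z (scales as 1/Z^2)."""
    return area_m2 * fx * fy / depth_m ** 2


def masked_area(mask, depth_m, fx=FX, fy=FY):
    """Sum per-pixel footprints inside a boolean mask; independent of distance."""
    return float(pixel_footprint(depth_m[mask], fx, fy).sum())


def masked_volume(mask, depth_m, thickness_m, fx=FX, fy=FY):
    """Hypothetical volume: per-pixel footprint times a per-pixel thickness map."""
    return float((pixel_footprint(depth_m[mask], fx, fy) * thickness_m[mask]).sum())


def synthetic_mask(n_pixels, shape=(480, 640)):
    """Boolean mask with exactly n_pixels True values, filled row by row."""
    mask = np.zeros(shape, dtype=bool)
    mask.flat[:n_pixels] = True
    return mask


if __name__ == "__main__":
    area = 0.2 * 0.1  # a 20 cm x 10 cm patch, in m^2
    for z in (0.5, 1.0):
        n = int(round(expected_pixel_count(area, z)))
        mask = synthetic_mask(n)
        depth = np.full(mask.shape, z)
        print(f"Z = {z} m: {n} pixels, recovered area = {masked_area(mask, depth):.4f} m^2")
```

The pixel count drops by a factor of four between 0.5 m and 1.0 m, whereas the footprint-weighted area stays at 0.02 m^2, which is the kind of distance dependence a ratio correction has to remove.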

3. Results

3.1. Calibration Results and Verification

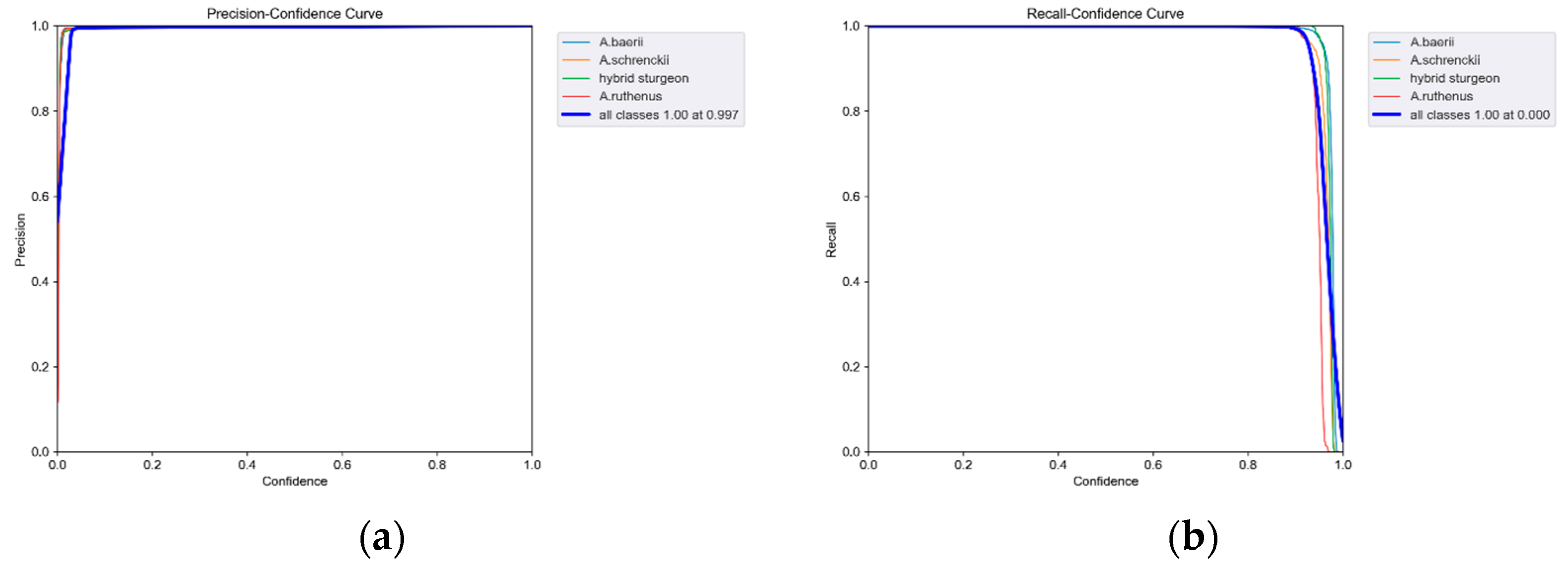

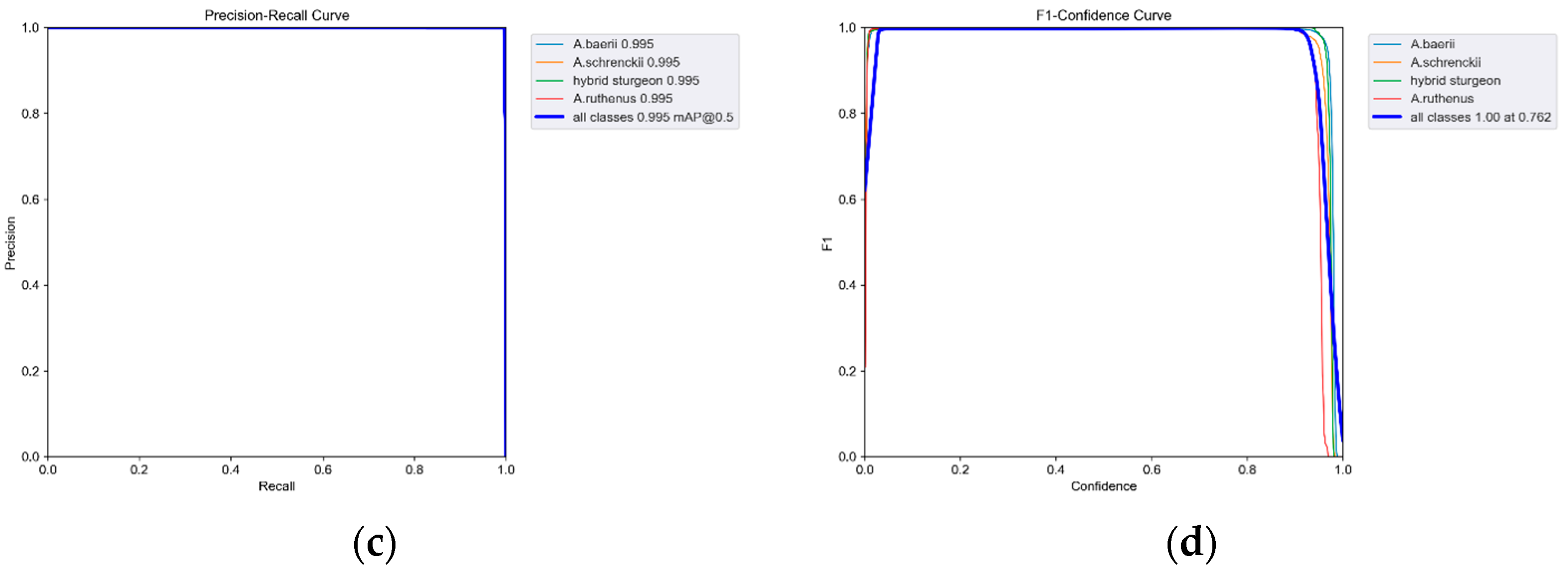

3.2. Analysis of Model Training Results and Segmentation Test Results

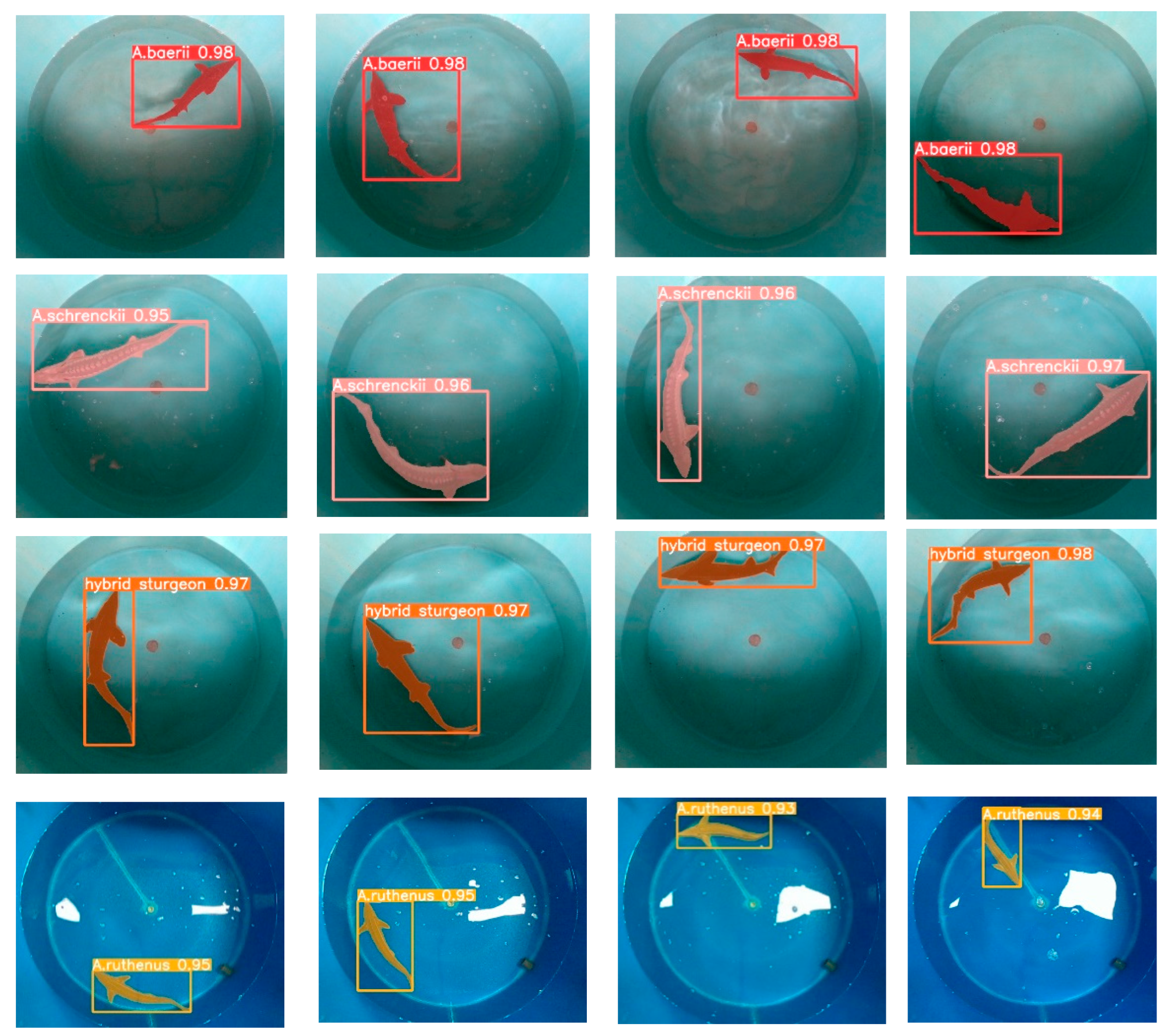

3.3. Mass Estimation Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Image Data Set | Sturgeon Species | Number of Fish | Original Images | Augmented Images | Total |
|---|---|---|---|---|---|
| Training set | Acipenser baerii, Acipenser schrenckii, hybrid, Acipenser ruthenus | 123 | 2,040 | 2,798 | 4,838 |
| Validation set | Acipenser baerii, Acipenser schrenckii, hybrid, Acipenser ruthenus | 123 | 254 | 350 | 604 |
| Testing set | Acipenser baerii, Acipenser schrenckii, hybrid, Acipenser ruthenus | 123 | 254 | 350 | 604 |
| Detection set | Acipenser ruthenus | 81 | 14,580 | - | 14,580 |

| Class | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| All | 0.88 | 0.606 | 0.669 | 0.521 |
| A. baerii | 0.974 | 0.604 | 0.716 | 0.574 |
| A. schrenckii | 0.983 | 0.522 | 0.646 | 0.499 |
| hybrid | 0.834 | 0.583 | 0.634 | 0.531 |
| A. ruthenus | 0.728 | 0.714 | 0.679 | 0.478 |
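Precision and recall for a segmentation model are counted by matching predicted masks to ground-truth masks at an IoU threshold, which is what the 0.5 in mAP@0.5 refers to. The sketch below is a simplified, assumed version of that matching (greedy by descending confidence, one ground truth per prediction) on NumPy boolean masks; it yields a single precision/recall operating point, whereas mAP additionally sweeps the confidence threshold and integrates the precision-recall curve, and mAP@0.5:0.95 averages over IoU thresholds from 0.5 to 0.95. It is not the YOLOv5 evaluation code itself.

```python
import numpy as np


def mask_iou(a, b):
    """Intersection over union of two boolean masks of equal shape."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union else 0.0


def precision_recall_at_iou(preds, gts, iou_thr=0.5):
    """preds: list of (confidence, mask); gts: list of masks.

    Each prediction, in descending confidence order, is matched to the
    unmatched ground truth with the highest IoU >= iou_thr (a true positive);
    unmatched predictions are false positives, unmatched ground truths are
    false negatives.
    """
    order = sorted(range(len(preds)), key=lambda i: preds[i][0], reverse=True)
    matched = set()
    tp = 0
    for i in order:
        _, pmask = preds[i]
        best_j, best_iou = -1, iou_thr
        for j, gmask in enumerate(gts):
            if j in matched:
                continue
            iou = mask_iou(pmask, gmask)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp
    fn = len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```

A prediction that overlaps its fish by only a third of the union counts as a false positive at the 0.5 threshold, which is one way a model can show high precision but modest recall, as several rows of the table do.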

| Group | Predicted Mass (g) ± Standard Deviation (SD) | Coefficient of Determination (R²) |
|---|---|---|
| Group 1 | 477.23 ± 211.31 | 0.897 |
| Group 2 | 485.35 ± 221.14 | 0.861 |
| Group 3 | 507.41 ± 223.34 | 0.883 |
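The table summarizes each group by the mean ± SD of predicted mass and an R² against measured mass. The sketch below shows one way to compute these from paired predicted and weighed masses; it assumes the sample standard deviation (`ddof=1`) and the coefficient of determination R² = 1 - SS_res/SS_tot. The paper does not state which R² definition was used, so the squared Pearson correlation is also returned; the two coincide only for a least-squares linear fit with an intercept. The data in the test are hypothetical, not the study's measurements.

```python
import numpy as np


def summarize_predictions(predicted_g, measured_g):
    """Return (mean, sample SD) of predictions, R^2 = 1 - SS_res/SS_tot, and Pearson r^2."""
    pred = np.asarray(predicted_g, dtype=float)
    meas = np.asarray(measured_g, dtype=float)
    mean = float(pred.mean())
    sd = float(pred.std(ddof=1))  # sample standard deviation (assumed)
    ss_res = float(np.sum((meas - pred) ** 2))
    ss_tot = float(np.sum((meas - meas.mean()) ** 2))
    r2 = 1.0 - ss_res / ss_tot
    pearson_r2 = float(np.corrcoef(pred, meas)[0, 1] ** 2)
    return mean, sd, r2, pearson_r2
```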

| Set | No. of Fish | RMSE (g) | NRMSE |
|---|---|---|---|
| Set 1 (<200 g) | 5 | 42.67 | 0.41 |
| Set 2 (200 g–500 g) | 43 | 115.12 | 0.30 |
| Set 3 (>500 g) | 33 | 195.84 | 0.27 |
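In this table the absolute error (RMSE) grows with fish size while the normalized error (NRMSE) falls. The sketch below computes both, assuming NRMSE is RMSE divided by the mean measured mass of the set; that normalization is an assumption, since range- or SD-based normalizations are also common. Dividing each reported RMSE by its NRMSE gives implied normalizers of roughly 104 g, 384 g and 725 g, each inside its set's mass range, which is consistent with (though does not prove) a mean-based normalization. Because NRMSE is rounded to two decimals, these back-calculated values are approximate.

```python
import numpy as np


def rmse(pred, meas):
    """Root-mean-square error between predicted and measured mass (g)."""
    pred = np.asarray(pred, dtype=float)
    meas = np.asarray(meas, dtype=float)
    return float(np.sqrt(np.mean((pred - meas) ** 2)))


def nrmse(pred, meas):
    """RMSE divided by the mean measured mass (assumed normalization)."""
    return rmse(pred, meas) / float(np.mean(meas))


def implied_normalizer(rmse_g, nrmse_value):
    """Back-calculate the normalizing mass implied by a reported RMSE/NRMSE pair."""
    return rmse_g / nrmse_value


# Reported values from the table: (RMSE in g, NRMSE).
REPORTED = {
    "Set 1 (<200 g)": (42.67, 0.41),
    "Set 2 (200-500 g)": (115.12, 0.30),
    "Set 3 (>500 g)": (195.84, 0.27),
}

if __name__ == "__main__":
    for name, (r, n) in REPORTED.items():
        print(f"{name}: implied normalizer ~ {implied_normalizer(r, n):.0f} g")
```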

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, K.; Zhang, S.; Hu, J.; Li, H.; Guo, W.; Hu, H. Nonintrusive and Effective Volume Reconstruction Model of Swimming Sturgeon Based on RGB-D Sensor. Sensors 2024, 24, 5037. https://doi.org/10.3390/s24155037

Lin K, Zhang S, Hu J, Li H, Guo W, Hu H. Nonintrusive and Effective Volume Reconstruction Model of Swimming Sturgeon Based on RGB-D Sensor. Sensors. 2024; 24(15):5037. https://doi.org/10.3390/s24155037

Chicago/Turabian Style: Lin, Kai, Shiyu Zhang, Junjie Hu, Hongsong Li, Wenzhong Guo, and Hongxia Hu. 2024. "Nonintrusive and Effective Volume Reconstruction Model of Swimming Sturgeon Based on RGB-D Sensor." Sensors 24, no. 15: 5037. https://doi.org/10.3390/s24155037