Comparative Analysis of TLS and UAV Sensors for Estimation of Grapevine Geometric Parameters

Abstract

1. Introduction

2. Materials and Methods

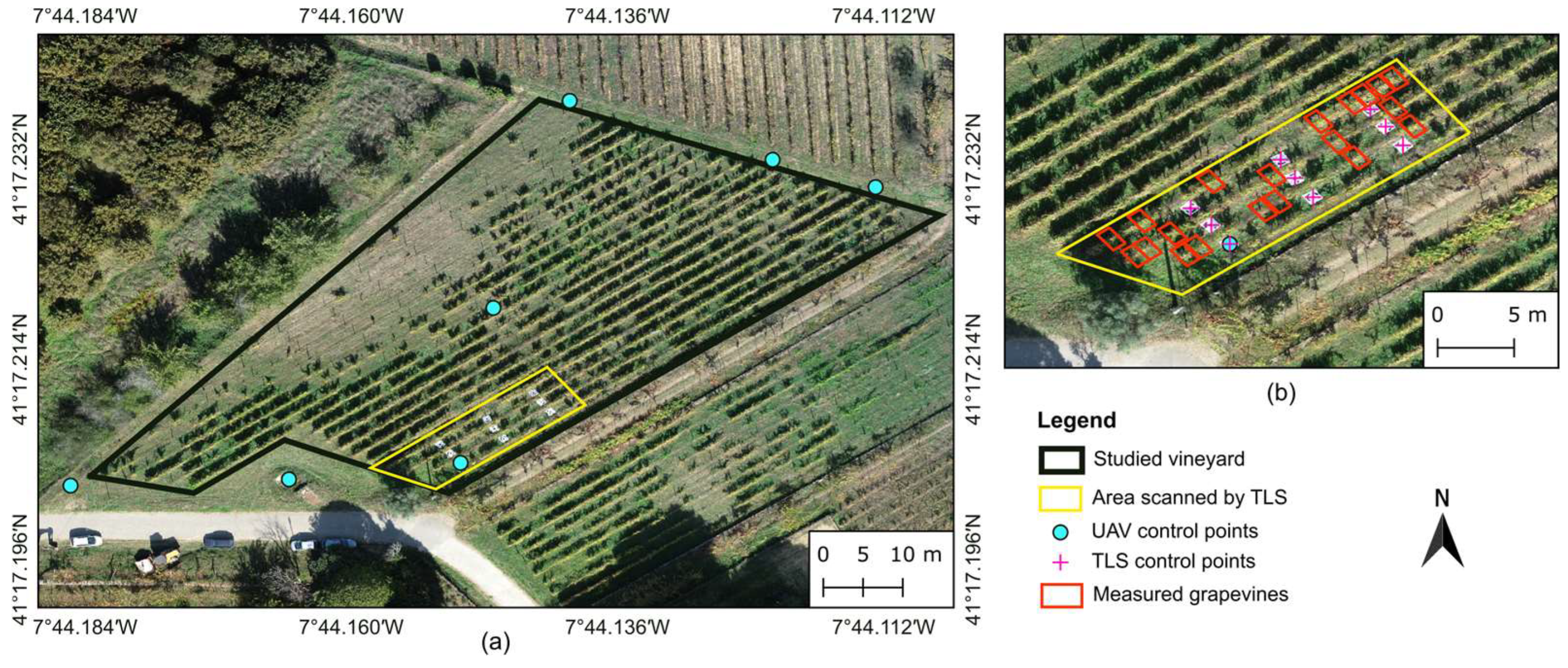

2.1. Study Area

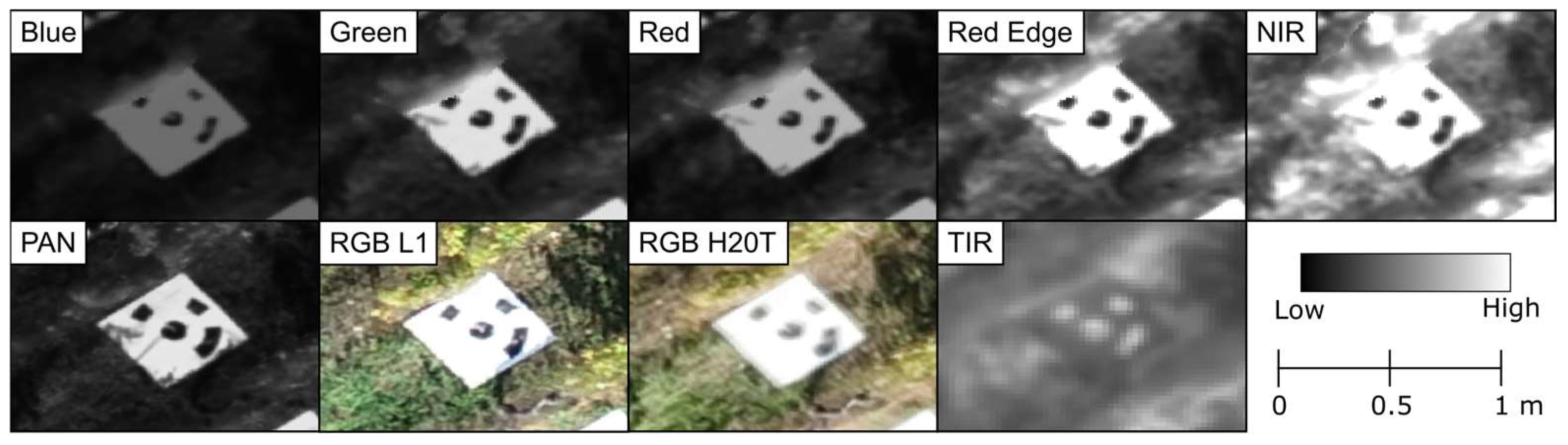

2.2. Data Acquisition

2.2.1. UAV Data Acquisition

2.2.2. TLS Data Acquisition

2.3. Data Processing

2.3.1. UAV Data Processing

2.3.2. TLS Data Processing

2.3.3. Grapevine Geometrical Parameters Extraction from Point Clouds

2.4. Data Analysis

3. Results

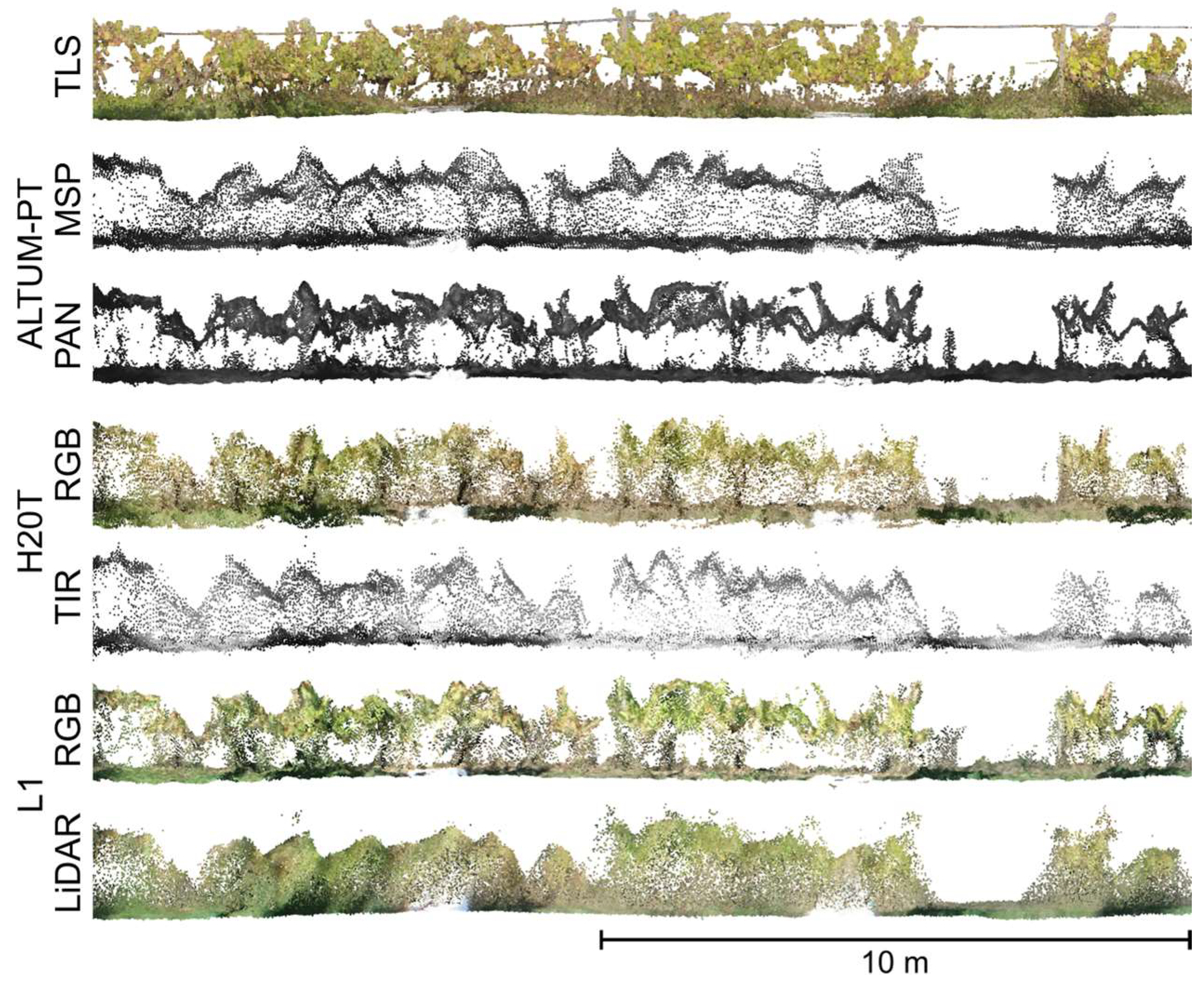

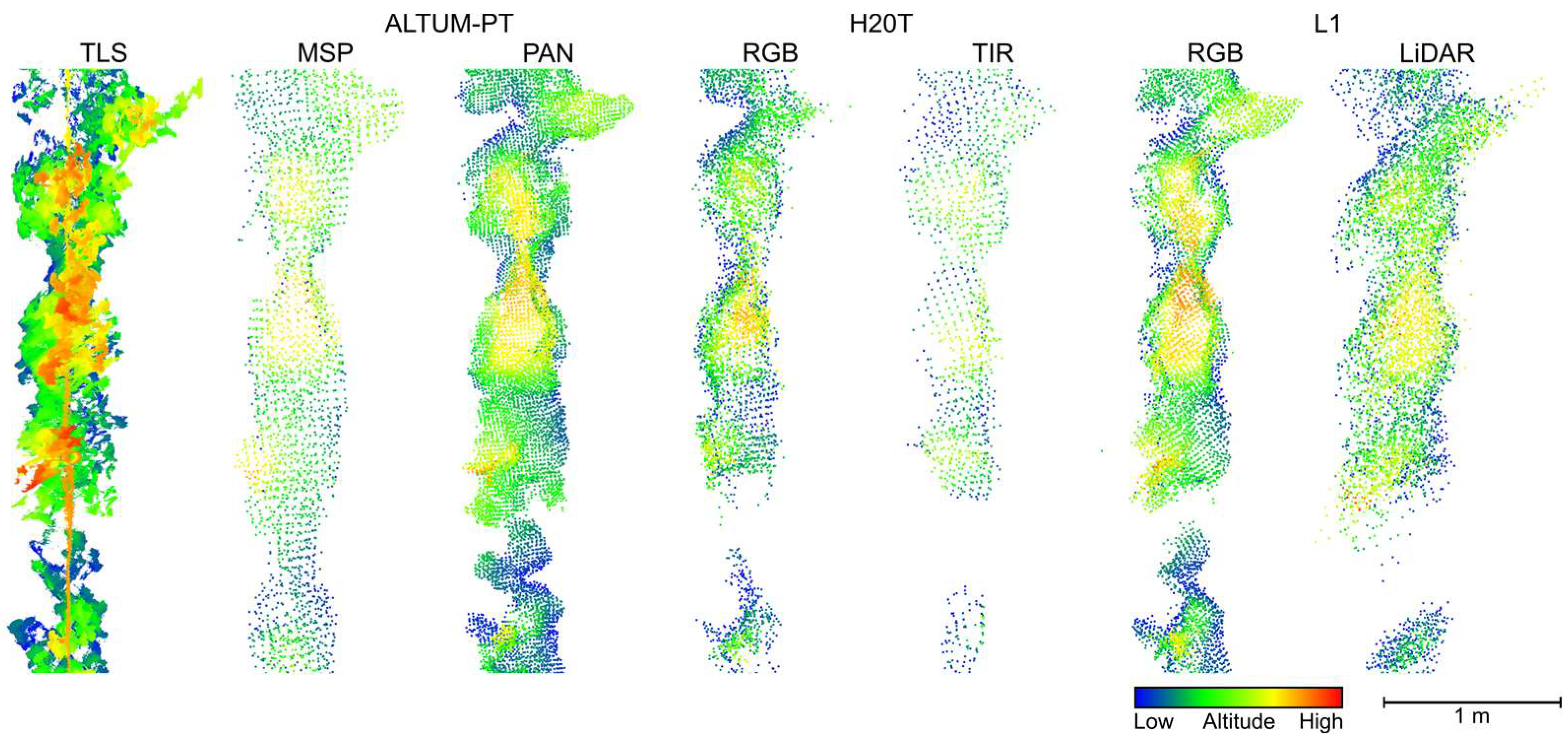

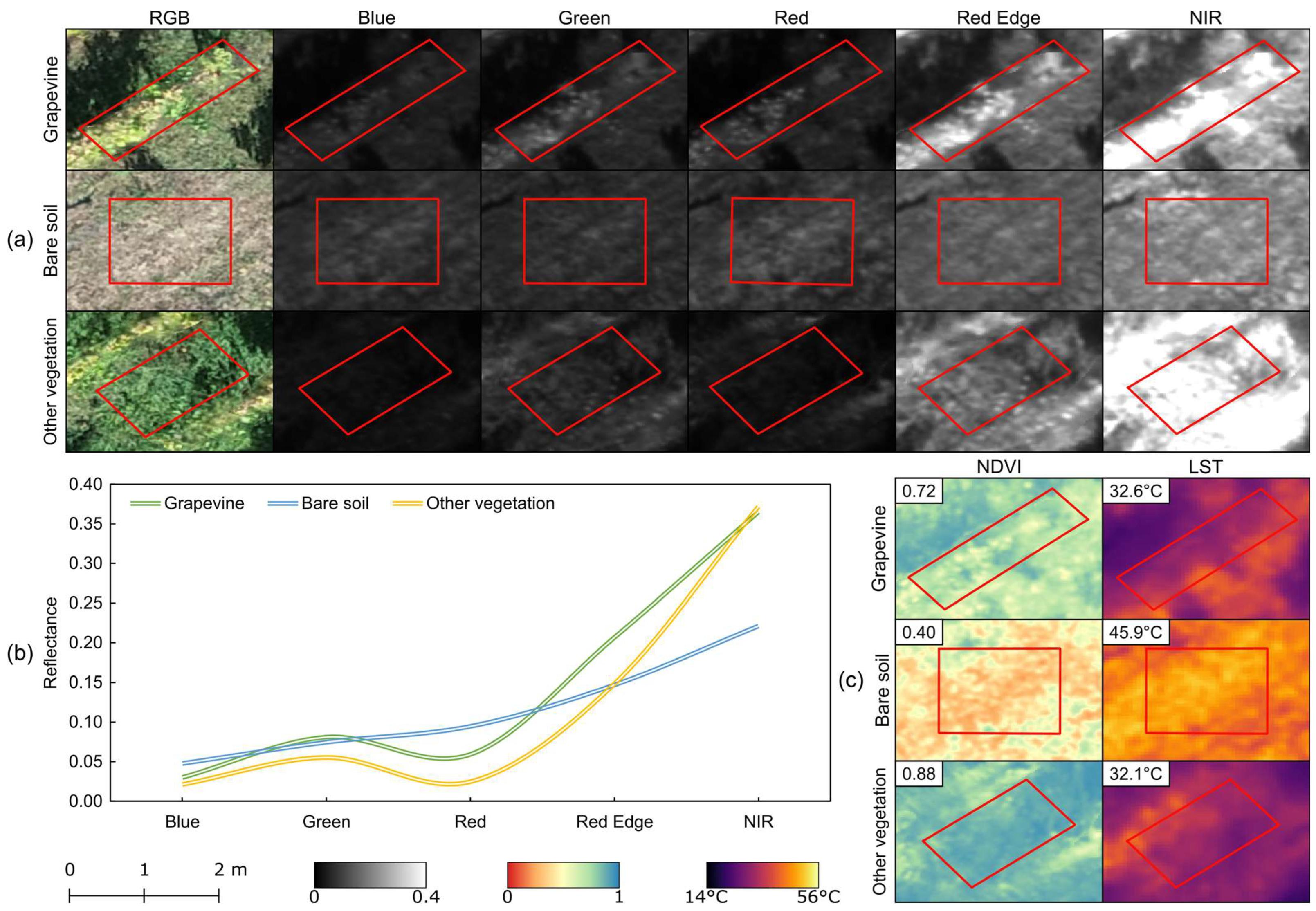

3.1. Data Characterization

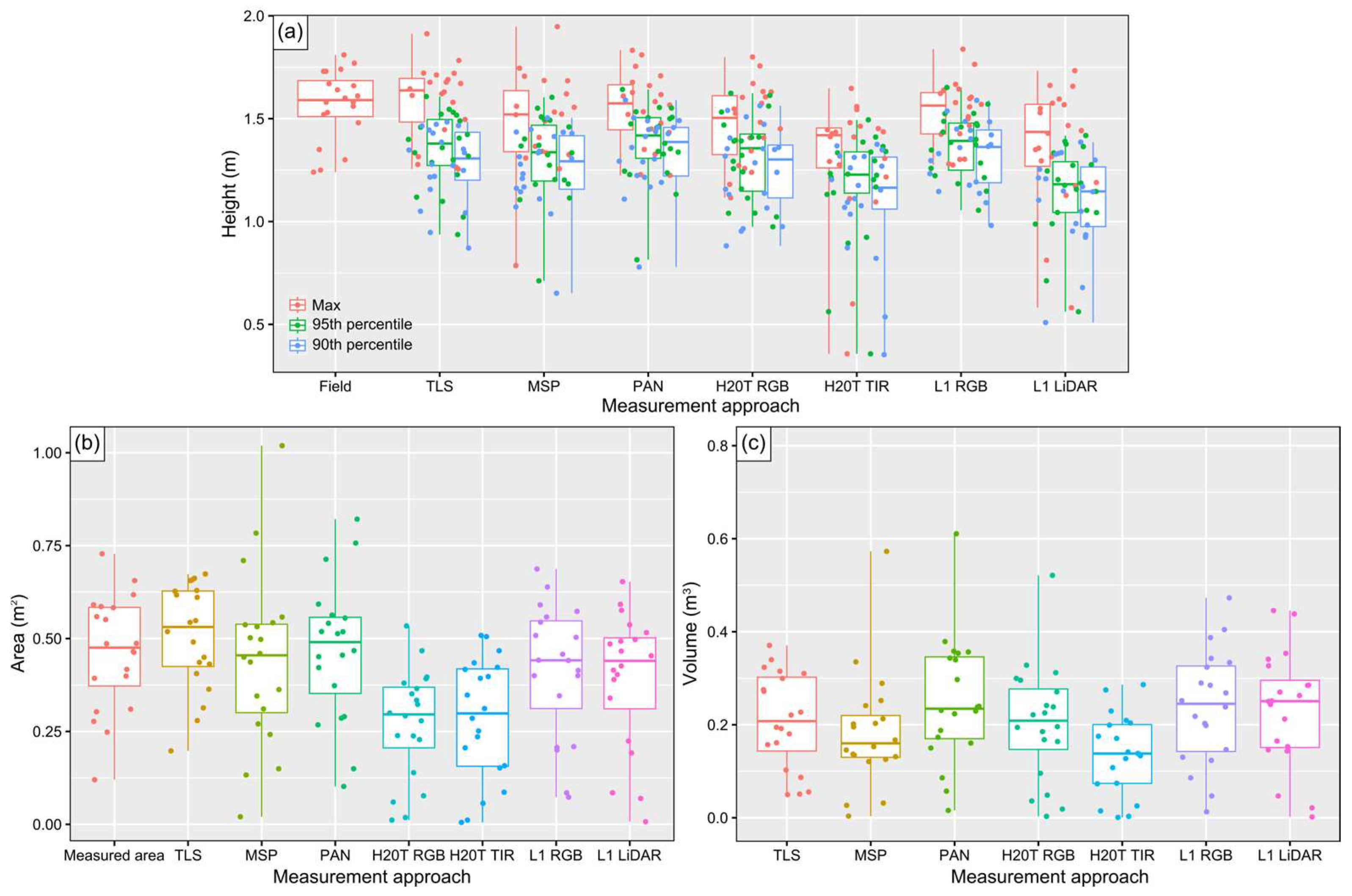

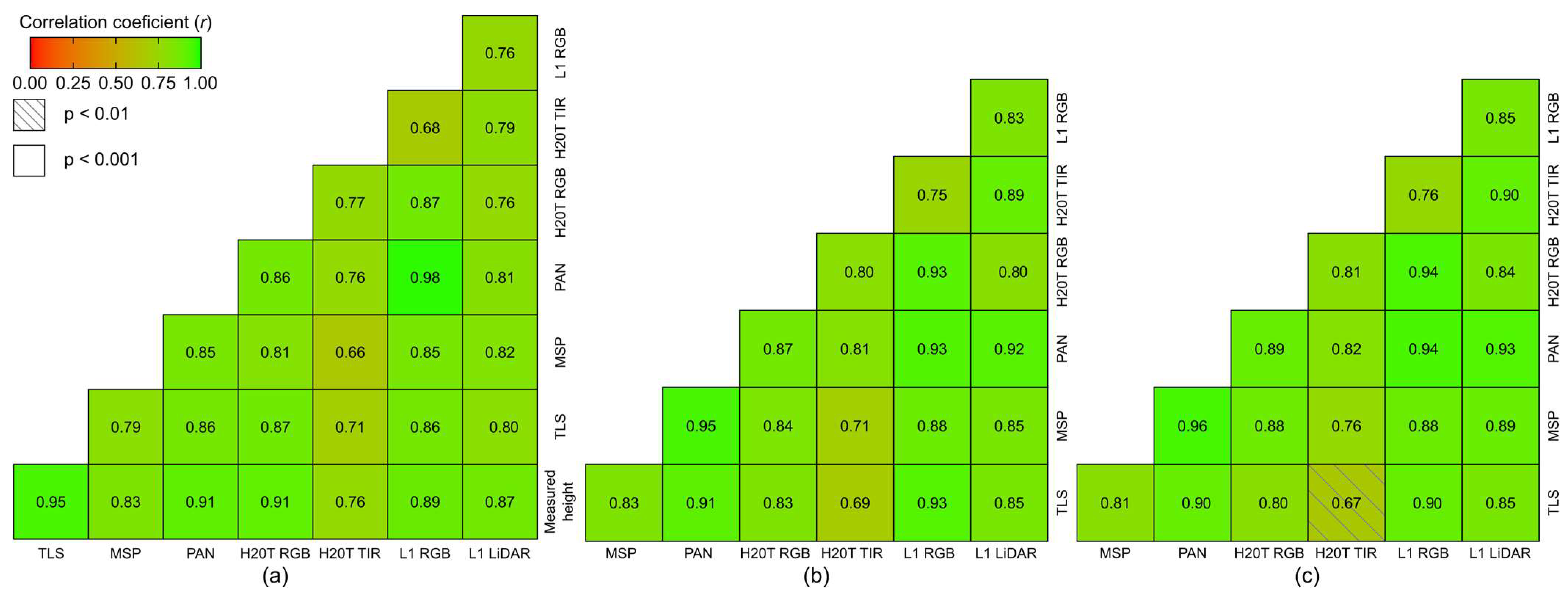

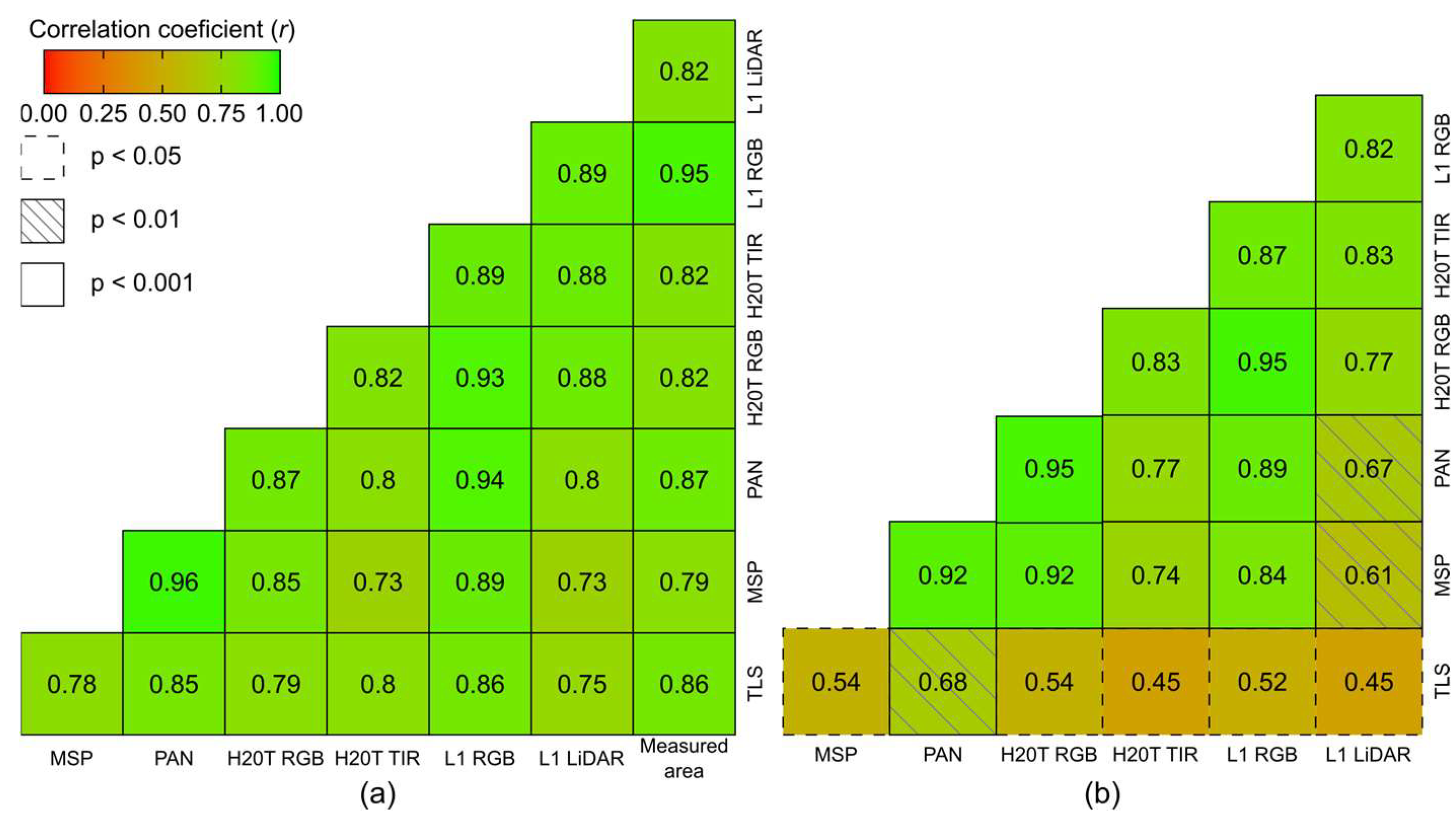

3.2. Grapevine Geometric Parameters

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gebbers, R.; Adamchuk, V.I. Precision Agriculture and Food Security. Science 2010, 327, 828–831.

2. Sassu, A.; Gambella, F.; Ghiani, L.; Mercenaro, L.; Caria, M.; Pazzona, A.L. Advances in Unmanned Aerial System Remote Sensing for Precision Viticulture. Sensors 2021, 21, 956.

3. Santos, J.A.; Fraga, H.; Malheiro, A.C.; Moutinho-Pereira, J.; Dinis, L.-T.; Correia, C.; Moriondo, M.; Leolini, L.; Dibari, C.; Costafreda-Aumedes, S.; et al. A Review of the Potential Climate Change Impacts and Adaptation Options for European Viticulture. Appl. Sci. 2020, 10, 3092.

4. Moreno, H.; Andújar, D. Proximal Sensing for Geometric Characterization of Vines: A Review of the Latest Advances. Comput. Electron. Agric. 2023, 210, 107901.

5. Morais, R.; Fernandes, M.A.; Matos, S.G.; Serôdio, C.; Ferreira, P.J.S.G.; Reis, M.J.C.S. A ZigBee Multi-Powered Wireless Acquisition Device for Remote Sensing Applications in Precision Viticulture. Comput. Electron. Agric. 2008, 62, 94–106.

6. Matese, A.; Di Gennaro, S.F. Technology in Precision Viticulture: A State of the Art Review. IJWR 2015, 7, 69–81.

7. Rosell Polo, J.R.; Sanz, R.; Llorens, J.; Arnó, J.; Escolà, A.; Ribes-Dasi, M.; Masip, J.; Camp, F.; Gràcia, F.; Solanelles, F.; et al. A Tractor-Mounted Scanning LIDAR for the Non-Destructive Measurement of Vegetative Volume and Surface Area of Tree-Row Plantations: A Comparison with Conventional Destructive Measurements. Biosyst. Eng. 2009, 102, 128–134.

8. Matese, A.; Di Gennaro, S.F.; Orlandi, G.; Gatti, M.; Poni, S. Assessing Grapevine Biophysical Parameters from Unmanned Aerial Vehicles Hyperspectral Imagery. Front. Plant Sci. 2022, 13, 898722.

9. Cataldo, E.; Fucile, M.; Mattii, G.B. A Review: Soil Management, Sustainable Strategies and Approaches to Improve the Quality of Modern Viticulture. Agronomy 2021, 11, 2359.

10. Zhou, L.; Xue, X.; Zhou, L.; Zhang, L.; Ding, S.; Chang, C.; Zhang, X.; Chen, C. Research Situation and Progress Analysis on Orchard Variable Rate Spraying Technology. Trans. Chin. Soc. Agric. Eng. 2017, 33, 80–92.

11. Sommer, K.J.; Islam, M.T.; Clingeleffer, P.R. Light and Temperature Effects on Shoot Fruitfulness in Vitis vinifera L. Cv. Sultana: Influence of Trellis Type and Grafting. Aust. J. Grape Wine Res. 2000, 6, 99–108.

12. Petrie, P.R.; Trought, M.C.T.; Howell, G.S.; Buchan, G.D.; Palmer, J.W. Whole-Canopy Gas Exchange and Light Interception of Vertically Trained Vitis vinifera L. under Direct and Diffuse Light. Am. J. Enol. Vitic. 2009, 60, 173–182.

13. Haselgrove, L.; Botting, D.; van Heeswijck, R.; Høj, P.B.; Dry, P.R.; Ford, C.; Land, P.G.I. Canopy Microclimate and Berry Composition: The Effect of Bunch Exposure on the Phenolic Composition of Vitis vinifera L Cv. Shiraz Grape Berries. Aust. J. Grape Wine Res. 2000, 6, 141–149.

14. Ehlert, D.; Horn, H.-J.; Adamek, R. Measuring Crop Biomass Density by Laser Triangulation. Comput. Electron. Agric. 2008, 61, 117–125.

15. Ding Weimin, Z.S. Measurement Methods of Fruit Tree Canopy Volume Based on Machine Vision. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2016, 47, 1–10.

16. Qi, Y.; Dong, X.; Chen, P.; Lee, K.-H.; Lan, Y.; Lu, X.; Jia, R.; Deng, J.; Zhang, Y. Canopy Volume Extraction of Citrus Reticulate Blanco Cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sens. 2021, 13, 3437.

17. Fernández-Sarría, A.; López-Cortés, I.; Estornell, J.; Velázquez-Martí, B.; Salazar, D. Estimating Residual Biomass of Olive Tree Crops Using Terrestrial Laser Scanning. Int. J. Appl. Earth Obs. Geoinf. 2019, 75, 163–170.

18. Verma, N.K.; Lamb, D.W.; Reid, N.; Wilson, B. Comparison of Canopy Volume Measurements of Scattered Eucalypt Farm Trees Derived from High Spatial Resolution Imagery and LiDAR. Remote Sens. 2016, 8, 388.

19. Chiappini, S.; Giorgi, V.; Neri, D.; Galli, A.; Marcheggiani, E.; Savina Malinverni, E.; Pierdicca, R.; Balestra, M. Innovation in Olive-Growing by Proximal Sensing LiDAR for Tree Volume Estimation. In Proceedings of the 2022 IEEE Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Perugia, Italy, 3–5 November 2022; pp. 213–217.

20. Hawryło, P.; Socha, J.; Wężyk, P.; Ochał, W.; Krawczyk, W.; Miszczyszyn, J.; Tymińska-Czabańska, L. How to Adequately Determine the Top Height of Forest Stands Based on Airborne Laser Scanning Point Clouds? For. Ecol. Manag. 2024, 551, 121528.

21. Matese, A.; Di Gennaro, S.F.; Berton, A. Assessment of a Canopy Height Model (CHM) in a Vineyard Using UAV-Based Multispectral Imaging. Int. J. Remote Sens. 2017, 38, 2150–2160.

22. Wang, Y.; Wang, J.; Chang, S.; Sun, L.; An, L.; Chen, Y.; Xu, J. Classification of Street Tree Species Using UAV Tilt Photogrammetry. Remote Sens. 2021, 13, 216.

23. Balestra, M.; Tonelli, E.; Vitali, A.; Urbinati, C.; Frontoni, E.; Pierdicca, R. Geomatic Data Fusion for 3D Tree Modeling: The Case Study of Monumental Chestnut Trees. Remote Sens. 2023, 15, 2197.

24. Wężyk, P.; Hawryło, P.; Szostak, M.; Zięba-Kulawik, K.; Winczek, M.; Siedlarczyk, E.; Kurzawiński, A.; Rydzyk, J.; Kmiecik, J.; Gilewski, W.; et al. Using LiDAR Point Clouds in Determination of the Scots Pine Stands Spatial Structure Meaning in the Conservation of Lichen Communities in “Bory Tucholskie” National Park. Arch. Photogramm. Cartogr. Remote Sens. 2019, 31, 85–103.

25. Li, X.; Wang, L.; Guan, H.; Chen, K.; Zang, Y.; Yu, Y. Urban Tree Species Classification Using UAV-Based Multispectral Images and LiDAR Point Clouds. J. Geovis Spat. Anal. 2023, 8, 5.

26. Nistor-Lopatenco, L.; Tiganu, E.; Vlasenco, A.; Iacovlev, A.; Grama, V. Creation of the Point Cloud and the 3D Model for the Above-Ground Infrastructure in the City of Chisinau by Modern Geodetic Methods. Bulletin of the Transilvania University of Brasov. Ser. I—Eng. Sci. 2022, 15, 9–18.

27. Bieda, A.; Balawejder, M.; Warchoł, A.; Bydłosz, J.; Kolodiy, P.; Pukanská, K. Use of 3D Technology in Underground Tourism: Example of Rzeszow (Poland) and Lviv (Ukraine). Acta Montan. Slovaca 2021, 26, 205–221.

28. Ahmed, R.; Mahmud, K.H.; Tuya, J.H. A GIS-Based Mathematical Approach for Generating 3D Terrain Model from High-Resolution UAV Imageries. J. Geovis Spat. Anal. 2021, 5, 24.

29. da Silva, D.Q.; Aguiar, A.S.; dos Santos, F.N.; Sousa, A.J.; Rabino, D.; Biddoccu, M.; Bagagiolo, G.; Delmastro, M. Measuring Canopy Geometric Structure Using Optical Sensors Mounted on Terrestrial Vehicles: A Case Study in Vineyards. Agriculture 2021, 11, 208.

30. Njoroge, B.M.; Fei, T.K.; Thiruchelvam, V. A Research Review of Precision Farming Techniques and Technology. J. Appl. Technol. Innov. 2018, 2, 22–30.

31. Torres-Sánchez, J.; Escolà, A.; Isabel de Castro, A.; López-Granados, F.; Rosell-Polo, J.R.; Sebé, F.; Manuel Jiménez-Brenes, F.; Sanz, R.; Gregorio, E.; Peña, J.M. Mobile Terrestrial Laser Scanner vs. UAV Photogrammetry to Estimate Woody Crop Canopy Parameters—Part 2: Comparison for Different Crops and Training Systems. Comput. Electron. Agric. 2023, 212, 108083.

32. Dassot, M.; Constant, T.; Fournier, M. The Use of Terrestrial LiDAR Technology in Forest Science: Application Fields, Benefits and Challenges. Ann. For. Sci. 2011, 68, 959–974.

33. Jung, J.; Kim, T.; Min, H.; Kim, S.; Jung, Y.-H. Intricacies of Opening Geometry Detection in Terrestrial Laser Scanning: An Analysis Using Point Cloud Data from BLK360. Remote Sens. 2024, 16, 759.

34. Pagliai, A.; Ammoniaci, M.; Sarri, D.; Lisci, R.; Perria, R.; Vieri, M.; D’Arcangelo, M.E.M.; Storchi, P.; Kartsiotis, S.-P. Comparison of Aerial and Ground 3D Point Clouds for Canopy Size Assessment in Precision Viticulture. Remote Sens. 2022, 14, 1145.

35. Jiang, Y.; Li, C.; Takeda, F.; Kramer, E.A.; Ashrafi, H.; Hunter, J. 3D Point Cloud Data to Quantitatively Characterize Size and Shape of Shrub Crops. Hortic. Res. 2019, 6, 43.

36. Colaço, A.F.; Trevisan, R.G.; Molin, J.P.; Rosell-Polo, J.R.; Escolà, A. A Method to Obtain Orange Crop Geometry Information Using a Mobile Terrestrial Laser Scanner and 3D Modeling. Remote Sens. 2017, 9, 763.

37. Moorthy, I.; Miller, J.R.; Berni, J.A.J.; Zarco-Tejada, P.; Hu, B.; Chen, J. Field Characterization of Olive (Olea europaea L.) Tree Crown Architecture Using Terrestrial Laser Scanning Data. Agric. For. Meteorol. 2011, 151, 204–214.

38. Murray, J.; Fennell, J.T.; Blackburn, G.A.; Whyatt, J.D.; Li, B. The Novel Use of Proximal Photogrammetry and Terrestrial LiDAR to Quantify the Structural Complexity of Orchard Trees. Precis. Agric. 2020, 21, 473–483.

39. Tsoulias, N.; Paraforos, D.S.; Fountas, S.; Zude-Sasse, M. Calculating the Water Deficit Spatially Using LiDAR Laser Scanner in an Apple Orchard. In Precision Agriculture ’19; Wageningen Academic Publishers: Wageningen, The Netherlands, 2019; pp. 115–121. ISBN 978-90-8686-337-2.

40. Andújar, D.; Moreno, H.; Bengochea-Guevara, J.M.; de Castro, A.; Ribeiro, A. Aerial Imagery or On-Ground Detection? An Economic Analysis for Vineyard Crops. Comput. Electron. Agric. 2019, 157, 351–358.

41. del-Campo-Sanchez, A.; Moreno, M.; Ballesteros, R.; Hernandez-Lopez, D. Geometric Characterization of Vines from 3D Point Clouds Obtained with Laser Scanner Systems. Remote Sens. 2019, 11, 2365.

42. Lowe, T.; Moghadam, P.; Edwards, E.; Williams, J. Canopy Density Estimation in Perennial Horticulture Crops Using 3D Spinning Lidar SLAM. J. Field Robot. 2021, 38, 598–618.

43. Moreno, H.; Valero, C.; Bengochea-Guevara, J.M.; Ribeiro, Á.; Garrido-Izard, M.; Andújar, D. On-Ground Vineyard Reconstruction Using a LiDAR-Based Automated System. Sensors 2020, 20, 1102.

44. Petrović, I.; Sečnik, M.; Hočevar, M.; Berk, P. Vine Canopy Reconstruction and Assessment with Terrestrial Lidar and Aerial Imaging. Remote Sens. 2022, 14, 5894.

45. Sanz, R.; Llorens, J.; Escolà, A.; Arnó, J.; Planas, S.; Román, C.; Rosell-Polo, J.R. LIDAR and Non-LIDAR-Based Canopy Parameters to Estimate the Leaf Area in Fruit Trees and Vineyard. Agric. For. Meteorol. 2018, 260–261, 229–239.

46. Tagarakis, A.C.; Koundouras, S.; Fountas, S.; Gemtos, T. Evaluation of the Use of LIDAR Laser Scanner to Map Pruning Wood in Vineyards and Its Potential for Management Zones Delineation. Precis. Agric. 2018, 19, 334–347.

47. Keightley, K.E.; Bawden, G.W. 3D Volumetric Modeling of Grapevine Biomass Using Tripod LiDAR. Comput. Electron. Agric. 2010, 74, 305–312.

48. Rinaldi, M.F.; Llorens Calveras, J.; Gil Moya, E. Electronic Characterization of the Phenological Stages of Grapevine Using a LIDAR Sensor. In Precision Agriculture’13; Wageningen Academic Publishers: Wageningen, The Netherlands, 2013.

49. Bei, R.; Fuentes, S.; Gilliham, M.; Tyerman, S.; Edwards, E.; Bianchini, N.; Smith, J.; Collins, C. VitiCanopy: A Free Computer App to Estimate Canopy Vigor and Porosity for Grapevine. Sensors 2016, 16, 585.

50. Llorens, J.; Gil, E.; Llop, J.; Escolà, A. Ultrasonic and LIDAR Sensors for Electronic Canopy Characterization in Vineyards: Advances to Improve Pesticide Application Methods. Sensors 2011, 11, 2177–2194.

51. Roy, P.S.; Behera, M.D.; Srivastav, S.K. Satellite Remote Sensing: Sensors, Applications and Techniques. Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2017, 87, 465–472.

52. Caruso, G.; Tozzini, L.; Rallo, G.; Primicerio, J.; Moriondo, M.; Palai, G.; Gucci, R. Estimating Biophysical and Geometrical Parameters of Grapevine Canopies (‘Sangiovese’) by an Unmanned Aerial Vehicle (UAV) and VIS-NIR Cameras. VITIS—J. Grapevine Res. 2017, 56, 63–70.

53. Weiss, M.; Baret, F. Using 3D Point Clouds Derived from UAV RGB Imagery to Describe Vineyard 3D Macro-Structure. Remote Sens. 2017, 9, 111.

54. Nex, F.; Remondino, F. UAV for 3D Mapping Applications: A Review. Appl. Geomat. 2014, 6, 1–15.

55. Ferro, M.V.; Catania, P.; Miccichè, D.; Pisciotta, A.; Vallone, M.; Orlando, S. Assessment of Vineyard Vigour and Yield Spatio-Temporal Variability Based on UAV High Resolution Multispectral Images. Biosyst. Eng. 2023, 231, 36–56.

56. Ouyang, J.; Bei, R.D.; Collins, C. Assessment of Canopy Size Using UAV-Based Point Cloud Analysis to Detect the Severity and Spatial Distribution of Canopy Decline. OENO One 2021, 55, 253–266.

57. Jurado, J.M.; Pádua, L.; Feito, F.R.; Sousa, J.J. Automatic Grapevine Trunk Detection on UAV-Based Point Cloud. Remote Sens. 2020, 12, 3043.

58. Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Gay, P. Unsupervised Detection of Vineyards by 3D Point-Cloud UAV Photogrammetry for Precision Agriculture. Comput. Electron. Agric. 2018, 155, 84–95.

59. Comba, L.; Zaman, S.; Biglia, A.; Ricauda Aimonino, D.; Dabbene, F.; Gay, P. Semantic Interpretation and Complexity Reduction of 3D Point Clouds of Vineyards. Biosyst. Eng. 2020, 197, 216–230.

60. López-Granados, F.; Torres-Sánchez, J.; Jiménez-Brenes, F.M.; Oneka, O.; Marín, D.; Loidi, M.; de Castro, A.I.; Santesteban, L.G. Monitoring Vineyard Canopy Management Operations Using UAV-Acquired Photogrammetric Point Clouds. Remote Sens. 2020, 12, 2331.

61. Torres-Sánchez, J.; Mesas-Carrascosa, F.J.; Santesteban, L.-G.; Jiménez-Brenes, F.M.; Oneka, O.; Villa-Llop, A.; Loidi, M.; López-Granados, F. Grape Cluster Detection Using UAV Photogrammetric Point Clouds as a Low-Cost Tool for Yield Forecasting in Vineyards. Sensors 2021, 21, 3083.

62. García-Fernández, M.; Sanz-Ablanedo, E.; Pereira-Obaya, D.; Rodríguez-Pérez, J.R. Vineyard Pruning Weight Prediction Using 3D Point Clouds Generated from UAV Imagery and Structure from Motion Photogrammetry. Agronomy 2021, 11, 2489.

63. Mathews, A.J.; Jensen, J.L.R. Visualizing and Quantifying Vineyard Canopy LAI Using an Unmanned Aerial Vehicle (UAV) Collected High Density Structure from Motion Point Cloud. Remote Sens. 2013, 5, 2164–2183.

64. Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Tortia, C.; Mania, E.; Guidoni, S.; Gay, P. Leaf Area Index Evaluation in Vineyards Using 3D Point Clouds from UAV Imagery. Precis. Agric. 2020, 21, 881–896.

65. Campos, J.; Llop, J.; Gallart, M.; García-Ruiz, F.; Gras, A.; Salcedo, R.; Gil, E. Development of Canopy Vigour Maps Using UAV for Site-Specific Management during Vineyard Spraying Process. Precis. Agric. 2019, 20, 1136–1156.

66. Cantürk, M.; Zabawa, L.; Pavlic, D.; Dreier, A.; Klingbeil, L.; Kuhlmann, H. UAV-Based Individual Plant Detection and Geometric Parameter Extraction in Vineyards. Front. Plant Sci. 2023, 14, 1244384.

67. Escolà, A.; Peña, J.M.; López-Granados, F.; Rosell-Polo, J.R.; de Castro, A.I.; Gregorio, E.; Jiménez-Brenes, F.M.; Sanz, R.; Sebé, F.; Llorens, J.; et al. Mobile Terrestrial Laser Scanner vs. UAV Photogrammetry to Estimate Woody Crop Canopy Parameters—Part 1: Methodology and Comparison in Vineyards. Comput. Electron. Agric. 2023, 212, 108109.

68. Hobart, M.; Pflanz, M.; Weltzien, C.; Schirrmann, M. Growth Height Determination of Tree Walls for Precise Monitoring in Apple Fruit Production Using UAV Photogrammetry. Remote Sens. 2020, 12, 1656.

69. Lorenz, D.H.; Eichhorn, K.W.; Bleiholder, H.; Klose, R.; Meier, U.; Weber, E. Growth Stages of the Grapevine: Phenological Growth Stages of the Grapevine (Vitis vinifera L. ssp. Vinifera)—Codes and Descriptions According to the Extended BBCH Scale. Aust. J. Grape Wine Res. 1995, 1, 100–103.

70. Sohl, M.A.; Mahmood, S.A. Low-Cost UAV in Photogrammetric Engineering and Remote Sensing: Georeferencing, DEM Accuracy, and Geospatial Analysis. J. Geovis Spat. Anal. 2024, 8, 14.

71. Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in The Great Plains with ERTS. In Goddard Space Flight Center 3d ERTS-1 Symposium; NASA: Washington, DC, USA, 1974; Volume 1, pp. 309–317.

72. Pingel, T.J.; Clarke, K.C.; McBride, W.A. An Improved Simple Morphological Filter for the Terrain Classification of Airborne LIDAR Data. ISPRS J. Photogramm. Remote Sens. 2013, 77, 21–30.

73. Edelsbrunner, H.; Mücke, E.P. Three-Dimensional Alpha Shapes. ACM Trans. Graph. 1994, 13, 43–72.

74. Di Gennaro, S.F.; Matese, A. Evaluation of Novel Precision Viticulture Tool for Canopy Biomass Estimation and Missing Plant Detection Based on 2.5D and 3D Approaches Using RGB Images Acquired by UAV Platform. Plant Methods 2020, 16, 91.

75. Liu, X.; Wang, Y.; Kang, F.; Yue, Y.; Zheng, Y. Canopy Parameter Estimation of Citrus Grandis Var. Longanyou Based on LiDAR 3D Point Clouds. Remote Sens. 2021, 13, 1859.

76. R Core Team. R: A Language and Environment for Statistical Computing; R Core Team: Vienna, Austria, 2013.

77. Legendre, P. lmodel2: Model II Regression 2018. Available online: https://cran.r-project.org/web/packages/lmodel2/index.html (accessed on 2 August 2024).

78. Wickham, H.; Chang, W.; Henry, L.; Pedersen, T.L.; Takahashi, K.; Wilke, C.; Woo, K.; Yutani, H.; Dunnington, D.; van den Brand, T.; et al. ggplot2: Create Elegant Data Visualisations Using the Grammar of Graphics 2024. R Package Version 2022, 3.

79. Mukaka, M.M. A Guide to Appropriate Use of Correlation Coefficient in Medical Research. Malawi Med. J. 2012, 24, 69–71.

80. Haukoos, J.S.; Lewis, R.J. Advanced Statistics: Bootstrapping Confidence Intervals for Statistics with “Difficult” Distributions. Acad. Emerg. Med. 2005, 12, 360–365.

81. Field, A. Discovering Statistics Using IBM SPSS Statistics; SAGE: Newcastle upon Tyne, UK, 2013; ISBN 978-1-4462-7458-3.

82. Mazzetto, F.; Calcante, A.; Mena, A.; Vercesi, A. Integration of Optical and Analogue Sensors for Monitoring Canopy Health and Vigour in Precision Viticulture. Precis. Agric. 2010, 11, 636–649.

83. Cabrera-Pérez, C.; Llorens, J.; Escolà, A.; Royo-Esnal, A.; Recasens, J. Organic Mulches as an Alternative for Under-Vine Weed Management in Mediterranean Irrigated Vineyards: Impact on Agronomic Performance. Eur. J. Agron. 2023, 145, 126798.

84. Matese, A.; Di Gennaro, S.F. Practical Applications of a Multisensor UAV Platform Based on Multispectral, Thermal and RGB High Resolution Images in Precision Viticulture. Agriculture 2018, 8, 116.

85. Pádua, L.; Adão, T.; Sousa, A.; Peres, E.; Sousa, J.J. Individual Grapevine Analysis in a Multi-Temporal Context Using UAV-Based Multi-Sensor Imagery. Remote Sens. 2020, 12, 139.

86. Pádua, L.; Marques, P.; Adão, T.; Guimarães, N.; Sousa, A.; Peres, E.; Sousa, J.J. Vineyard Variability Analysis through UAV-Based Vigour Maps to Assess Climate Change Impacts. Agronomy 2019, 9, 581.

87. Cao, L.; Liu, H.; Fu, X.; Zhang, Z.; Shen, X.; Ruan, H. Comparison of UAV LiDAR and Digital Aerial Photogrammetry Point Clouds for Estimating Forest Structural Attributes in Subtropical Planted Forests. Forests 2019, 10, 145.

88. Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

89. Jurado, J.M.; López, A.; Pádua, L.; Sousa, J.J. Remote Sensing Image Fusion on 3D Scenarios: A Review of Applications for Agriculture and Forestry. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102856.

90. Chakraborty, M.; Khot, L.R.; Sankaran, S.; Jacoby, P.W. Evaluation of Mobile 3D Light Detection and Ranging Based Canopy Mapping System for Tree Fruit Crops. Comput. Electron. Agric. 2019, 158, 284–293.

91. Buunk, T.; Vélez, S.; Ariza-Sentís, M.; Valente, J. Comparing Nadir and Oblique Thermal Imagery in UAV-Based 3D Crop Water Stress Index Applications for Precision Viticulture with LiDAR Validation. Sensors 2023, 23, 8625.

92. Tumbo, S.D.; Salyani, M.; Whitney, J.D.; Wheaton, T.A.; Miller, W.M. Investigation of Laser and Ultrasonic Ranging Sensors for Measurements of Citrus Canopy Volume. Appl. Eng. Agric. 2002, 18, 367.

93. Johansen, K.; Raharjo, T.; McCabe, M.F. Using Multi-Spectral UAV Imagery to Extract Tree Crop Structural Properties and Assess Pruning Effects. Remote Sens. 2018, 10, 854.

94. Li, M.; Shamshiri, R.R.; Schirrmann, M.; Weltzien, C. Impact of Camera Viewing Angle for Estimating Leaf Parameters of Wheat Plants from 3D Point Clouds. Agriculture 2021, 11, 563.

95. Che, Y.; Wang, Q.; Xie, Z.; Zhou, L.; Li, S.; Hui, F.; Wang, X.; Li, B.; Ma, Y. Estimation of Maize Plant Height and Leaf Area Index Dynamics Using an Unmanned Aerial Vehicle with Oblique and Nadir Photography. Ann. Bot. 2020, 126, 765–773.

96. Mesas-Carrascosa, F.-J.; Torres-Sánchez, J.; Clavero-Rumbao, I.; García-Ferrer, A.; Peña, J.-M.; Borra-Serrano, I.; López-Granados, F. Assessing Optimal Flight Parameters for Generating Accurate Multispectral Orthomosaicks by UAV to Support Site-Specific Crop Management. Remote Sens. 2015, 7, 12793–12814.

97. Ottoy, S.; Tziolas, N.; Van Meerbeek, K.; Aravidis, I.; Tilkin, S.; Sismanis, M.; Stavrakoudis, D.; Gitas, I.Z.; Zalidis, G.; De Vocht, A. Effects of Flight and Smoothing Parameters on the Detection of Taxus and Olive Trees with UAV-Borne Imagery. Drones 2022, 6, 197.

98. Zhu, W.; Rezaei, E.E.; Nouri, H.; Sun, Z.; Li, J.; Yu, D.; Siebert, S. UAV Flight Height Impacts on Wheat Biomass Estimation via Machine and Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 7471–7485.

99. Catania, P.; Ferro, M.V.; Roma, E.; Orlando, S.; Vallone, M. Evaluation of Different Flight Courses with UAV in Vineyard. In Proceedings of the AIIA 2022: Biosystems Engineering Towards the Green Deal, Palermo, Italy, 19–22 September 2022; Ferro, V., Giordano, G., Orlando, S., Vallone, M., Cascone, G., Porto, S.M.C., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 457–467.

100. Leica BLK360 Imaging Laser Scanner. Available online: https://leica-geosystems.com/en-US/products/laser-scanners/scanners/blk360 (accessed on 24 February 2024).

101. Bauwens, S.; Bartholomeus, H.; Calders, K.; Lejeune, P. Forest Inventory with Terrestrial LiDAR: A Comparison of Static and Hand-Held Mobile Laser Scanning. Forests 2016, 7, 127.

102. Cabo, C.; Del Pozo, S.; Rodríguez-Gonzálvez, P.; Ordóñez, C.; González-Aguilera, D. Comparing Terrestrial Laser Scanning (TLS) and Wearable Laser Scanning (WLS) for Individual Tree Modeling at Plot Level. Remote Sens. 2018, 10, 540.

103. Tarolli, P.; Sofia, G.; Calligaro, S.; Prosdocimi, M.; Preti, F.; Fontana, G.D. Vineyards in Terraced Landscapes: New Opportunities from Lidar Data. Land Degrad. Dev. 2015, 26, 92–102.

104. Jurado, J.M.; Ortega, L.; Cubillas, J.J.; Feito, F.R. Multispectral Mapping on 3D Models and Multi-Temporal Monitoring for Individual Characterization of Olive Trees. Remote Sens. 2020, 12, 1106.

105. López, A.; Jurado, J.M.; Ogayar, C.J.; Feito, F.R. An Optimized Approach for Generating Dense Thermal Point Clouds from UAV-Imagery. ISPRS J. Photogramm. Remote Sens. 2021, 182, 78–95.

106. Comba, L.; Biglia, A.; Aimonino, D.R.; Barge, P.; Tortia, C.; Gay, P. 2D and 3D Data Fusion for Crop Monitoring in Precision Agriculture. In Proceedings of the 2019 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Portici, Italy, 24–26 October 2019; pp. 62–67.

107. Cucchiaro, S.; Fallu, D.J.; Zhang, H.; Walsh, K.; Van Oost, K.; Brown, A.G.; Tarolli, P. Multiplatform-SfM and TLS Data Fusion for Monitoring Agricultural Terraces in Complex Topographic and Landcover Conditions. Remote Sens. 2020, 12, 1946.

| Sensor | Imagery Type | RMSE XY (m) | RMSE Z (m) | RMSE XYZ (m) | Spatial Resolution (m) |
|---|---|---|---|---|---|
| ALTUM-PT | Multispectral | 0.005 | 0.021 | 0.013 | 0.0262 |
| ALTUM-PT | Panchromatic | 0.006 | 0.017 | 0.011 | 0.0124 |
| Zenmuse L1 | RGB | 0.008 | 0.018 | 0.012 | 0.0158 |
| Zenmuse H20T | RGB | 0.008 | 0.022 | 0.014 | 0.0197 |
| Zenmuse H20T | Thermal infrared | 0.016 | 0.072 | 0.044 | 0.0527 |
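The table does not state how RMSE XYZ is obtained from RMSE XY and RMSE Z. A quick arithmetic check shows that every row is reproduced, to the three reported decimals, if RMSE XY is read as a per-axis error shared by X and Y and the three axes are combined as a quadratic mean: RMSE XYZ = sqrt((2 · RMSE XY² + RMSE Z²) / 3). For the multispectral row, sqrt((2 · 0.005² + 0.021²) / 3) ≈ 0.0128 ≈ 0.013 m. The sketch below is a minimal check under that assumption; it is not a formula given by the authors, and the names `ROWS` and `rmse_xyz` are hypothetical.

```python
import math

# (sensor, imagery type, RMSE XY, RMSE Z, reported RMSE XYZ), all in metres,
# transcribed from the table above.
ROWS = [
    ("ALTUM-PT", "Multispectral", 0.005, 0.021, 0.013),
    ("ALTUM-PT", "Panchromatic", 0.006, 0.017, 0.011),
    ("Zenmuse L1", "RGB", 0.008, 0.018, 0.012),
    ("Zenmuse H20T", "RGB", 0.008, 0.022, 0.014),
    ("Zenmuse H20T", "Thermal infrared", 0.016, 0.072, 0.044),
]


def rmse_xyz(rmse_xy: float, rmse_z: float) -> float:
    """Quadratic mean of the errors over three axes.

    Assumption (not stated in the source): RMSE XY is a per-axis value
    that applies to both X and Y.
    """
    return math.sqrt((2 * rmse_xy**2 + rmse_z**2) / 3)


if __name__ == "__main__":
    for sensor, kind, xy, z, reported in ROWS:
        print(f"{sensor} / {kind}: computed {rmse_xyz(xy, z):.3f} m, "
              f"reported {reported:.3f} m")
```

Reading RMSE XY instead as the combined horizontal error and adding it in quadrature to RMSE Z gives sqrt(0.005² + 0.021²) ≈ 0.022 m for the first row, which does not match the reported 0.013 m; that is why the per-axis reading is used in this sketch.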

| Sensor | Data Type | Min. | Max. | Mean | SD | CV (%) |
|---|---|---|---|---|---|---|
| **Maximum height (m)** | | | | | | |
| Measured | | 1.24 | 1.81 | 1.57 a | 0.17 | 10.84 |
| BLK360 | TLS | 1.26 | 1.91 | 1.59 a | 0.18 | 11.67 |
| ALTUM-PT | MSP | 0.79 | 1.95 | 1.48 a,b | 0.24 | 16.25 |
| ALTUM-PT | PAN | 1.22 | 1.83 | 1.55 a | 0.18 | 11.35 |
| Zenmuse H20T | RGB | 1.12 | 1.80 | 1.48 a,b | 0.19 | 13.11 |
| Zenmuse H20T | TIR | 0.36 | 1.65 | 1.30 c | 0.32 | 24.32 |
| Zenmuse L1 | RGB | 1.28 | 1.84 | 1.53 a | 0.16 | 10.34 |
| Zenmuse L1 | LiDAR | 0.58 | 1.73 | 1.37 b,c | 0.29 | 21.05 |
| **90th percentile of height (m)** | | | | | | |
| BLK360 | TLS | 0.87 | 1.48 | 1.27 a | 0.18 | 14.32 |
| ALTUM-PT | MSP | 0.65 | 1.50 | 1.25 a,b | 0.20 | 15.74 |
| ALTUM-PT | PAN | 0.78 | 1.59 | 1.33 a | 0.19 | 13.89 |
| Zenmuse H20T | RGB | 0.88 | 1.56 | 1.25 a,b | 0.21 | 16.64 |
| Zenmuse H20T | TIR | 0.35 | 1.38 | 1.10 b,c | 0.27 | 24.89 |
| Zenmuse L1 | RGB | 0.98 | 1.59 | 1.32 a | 0.16 | 12.31 |
| Zenmuse L1 | LiDAR | 0.51 | 1.39 | 1.10 c | 0.23 | 20.63 |
| **95th percentile of height (m)** | | | | | | |
| BLK360 | TLS | 0.94 | 1.61 | 1.34 a | 0.19 | 13.8 |
| ALTUM-PT | MSP | 0.71 | 1.60 | 1.31 a | 0.20 | 15.52 |
| ALTUM-PT | PAN | 0.81 | 1.64 | 1.38 a | 0.19 | 13.61 |
| Zenmuse H20T | RGB | 0.97 | 1.62 | 1.31 a | 0.20 | 15.41 |
| Zenmuse H20T | TIR | 0.36 | 1.49 | 1.16 b | 0.28 | 24.42 |
| Zenmuse L1 | RGB | 1.05 | 1.65 | 1.37 a | 0.16 | 11.4 |
| Zenmuse L1 | LiDAR | 0.56 | 1.42 | 1.15 b | 0.22 | 19.26 |
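The height metrics in the table above (maximum, 90th and 95th percentile of height) and its CV column follow standard definitions. As a consistency check on the CV column, for the measured maximum heights 0.17 / 1.57 × 100 ≈ 10.8%, in line with the reported 10.84% given that SD and mean are rounded. The sketch below shows one way to compute these metrics per plant. It assumes each plant's points are already segmented and their z values normalized to height above ground (for example after a ground filter such as the simple morphological filter of Pingel et al., cited in the reference list); the function names are hypothetical, the percentile uses linear interpolation between closest ranks (the same rule as NumPy's default), and CV uses the sample standard deviation, which is an assumption.

```python
import statistics


def percentile(values, q):
    """Percentile with linear interpolation between closest ranks, q in [0, 100]."""
    s = sorted(values)
    if not s:
        raise ValueError("empty input")
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def height_metrics(z_above_ground):
    """Maximum, 90th and 95th percentile heights (m) of one plant's points.

    Hypothetical input: z values already normalized to height above ground.
    """
    return {
        "max": max(z_above_ground),
        "p90": percentile(z_above_ground, 90),
        "p95": percentile(z_above_ground, 95),
    }


def cv_percent(values):
    """Coefficient of variation (%) using the sample standard deviation (assumed)."""
    return statistics.stdev(values) / statistics.mean(values) * 100.0


if __name__ == "__main__":
    z = [0.2, 0.5, 0.9, 1.2, 1.5]  # toy heights for a single plant
    print(height_metrics(z))
    print(cv_percent([1.0, 2.0, 3.0]))
```

Percentile heights are less sensitive than the maximum to isolated high points (noise or a single protruding shoot), which is one reason canopy studies often report them alongside maximum height.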

| Sensor | Data Type | Min. | Max. | Mean | SD | CV (%) |
|---|---|---|---|---|---|---|
| **Projected area (m²)** | | | | | | |
| Measured | | 0.12 | 0.73 | 0.46 a,b | 0.15 | 33.46 |
| BLK360 | TLS | 0.20 | 0.67 | 0.51 a | 0.14 | 28.07 |
| ALTUM-PT | MSP | 0.02 | 1.02 | 0.44 a,b | 0.23 | 52.57 |
| ALTUM-PT | PAN | 0.10 | 0.82 | 0.47 a,b | 0.19 | 39.99 |
| Zenmuse H20T | RGB | 0.01 | 0.53 | 0.27 c | 0.15 | 54.04 |
| Zenmuse H20T | TIR | 0.01 | 0.51 | 0.28 c | 0.16 | 57.83 |
| Zenmuse L1 | RGB | 0.07 | 0.69 | 0.41 a,b | 0.18 | 42.93 |
| Zenmuse L1 | LiDAR | 0.01 | 0.65 | 0.39 b | 0.18 | 46.82 |
| **Canopy volume (m³)** | | | | | | |
| BLK360 | TLS | 0.055 | 0.372 | 0.232 a | 0.097 | 41.66 |
| ALTUM-PT | MSP | 0.004 | 0.573 | 0.184 a,b | 0.124 | 67.45 |
| ALTUM-PT | PAN | 0.016 | 0.611 | 0.251 a | 0.135 | 53.8 |
| Zenmuse H20T | RGB | 0.003 | 0.521 | 0.203 a,b | 0.125 | 61.34 |
| Zenmuse H20T | TIR | 0.001 | 0.286 | 0.136 b | 0.085 | 62.73 |
| Zenmuse L1 | RGB | 0.013 | 0.473 | 0.238 a | 0.123 | 51.46 |
| Zenmuse L1 | LiDAR | 0.002 | 0.445 | 0.232 a | 0.124 | 53.28 |
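The projected area and canopy volume in the table above depend on how each plant's point cloud is turned into a footprint and a solid. The reference list cites three-dimensional alpha shapes (Edelsbrunner and Mücke), and the literature comparison table below reports both convex-hull and 2.5D volumes. The sketch below uses two simpler stand-ins and is not necessarily the authors' method: the area of the 2D convex hull of the points projected onto the XY plane, and a grid-based 2.5D volume that sums, over occupied XY cells, the height of each cell's highest point above a base level times the cell area. The cell size, base level, and all function names are hypothetical. For a true 3D convex-hull volume, `scipy.spatial.ConvexHull(points).volume` is a library alternative; convex hulls overestimate concave canopies, which is the gap alpha shapes are meant to close.

```python
import math


def _cross(o, a, b):
    """Z component of (a - o) x (b - o); > 0 means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull_2d(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(vertices):
    """Shoelace formula for a simple polygon."""
    n = len(vertices)
    s = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def projected_area(points_xyz):
    """Area (m2) of the convex hull of the points projected on the XY plane."""
    return polygon_area(convex_hull_2d([(x, y) for x, y, _ in points_xyz]))


def grid_volume_2_5d(points_xyz, cell=0.05, base=0.0):
    """2.5D volume (m3): per occupied XY cell, (highest z - base) * cell area."""
    tops = {}
    for x, y, z in points_xyz:
        key = (math.floor(x / cell), math.floor(y / cell))
        tops[key] = max(tops.get(key, z), z)
    return sum(max(t - base, 0.0) for t in tops.values()) * cell * cell
```

The grid approach keeps only the top surface of each cell, so it treats the space under the canopy as filled; choosing `base` at the height of the lowest foliage rather than at ground level is one way to avoid counting the trunk zone.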

| Sensor | Data Type | Max. Height r | Max. Height R² | Max. Height RMSE (m) | Area r | Area R² | Area RMSE (m²) |
|---|---|---|---|---|---|---|---|
| BLK360 | TLS | 0.95 | 0.90 | 0.027 | 0.86 | 0.74 | 0.042 |
| ALTUM-PT | Multispectral | 0.83 | 0.70 | 0.084 | 0.79 | 0.63 | 0.072 |
| ALTUM-PT | Panchromatic | 0.91 | 0.83 | 0.025 | 0.87 | 0.76 | 0.042 |
| Zenmuse H20T | RGB | 0.91 | 0.83 | 0.081 | 0.82 | 0.66 | 0.165 |
| Zenmuse H20T | TIR | 0.76 | 0.58 | 0.147 | 0.82 | 0.67 | 0.143 |
| Zenmuse L1 | RGB | 0.89 | 0.79 | 0.038 | 0.95 | 0.89 | 0.048 |
| Zenmuse L1 | LiDAR | 0.87 | 0.76 | 0.129 | 0.82 | 0.67 | 0.068 |
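The agreement statistics in the table above are computed from paired per-plant values. Within rounding, each R² equals r² (for example 0.95² ≈ 0.90 and 0.76² ≈ 0.58), consistent with R² from a simple linear fit. The reference list cites the R package lmodel2 (Model II regression) and a guide to bootstrapped confidence intervals, so the sketch below adds a reduced major axis (RMA) line, which is one Model II method, and a percentile bootstrap interval for r. These choices, the RMSE definition (estimate minus measurement, i.e. deviation from the 1:1 line), and the function names `agreement` and `bootstrap_r_ci` are assumptions, not the authors' documented procedure.

```python
import math
import random


def agreement(measured, estimated):
    """Pearson r, R2 (= r**2), RMSE about the 1:1 line, and an RMA line
    estimated = rma_intercept + rma_slope * measured."""
    n = len(measured)
    if n != len(estimated) or n < 3:
        raise ValueError("need at least 3 paired values")
    mx = sum(measured) / n
    my = sum(estimated) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    syy = sum((y - my) ** 2 for y in estimated)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined for constant input")
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, estimated))
    r = sxy / math.sqrt(sxx * syy)
    rmse = math.sqrt(sum((y - x) ** 2 for x, y in zip(measured, estimated)) / n)
    # RMA slope: ratio of the standard deviations, carrying the sign of r.
    slope = math.copysign(math.sqrt(syy / sxx), r)
    return {"r": r, "R2": r * r, "RMSE": rmse,
            "rma_slope": slope, "rma_intercept": my - slope * mx}


def bootstrap_r_ci(measured, estimated, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for r, resampling plants with replacement."""
    agreement(measured, estimated)  # validate input; raises on constant data
    rng = random.Random(seed)
    n = len(measured)
    rs = []
    while len(rs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [measured[i] for i in idx]
        ys = [estimated[i] for i in idx]
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue  # degenerate resample: r undefined, draw again
        rs.append(agreement(xs, ys)["r"])
    rs.sort()
    return rs[int(n_boot * alpha / 2)], rs[int(n_boot * (1 - alpha / 2)) - 1]
```

RMA treats both variables as measured with error, which suits comparisons where neither the field measurement nor the sensor estimate is error-free; an ordinary least-squares fit would attribute all error to the estimate.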

| Study | Sensors Used (TLS, MTLS, UAV) | Parameter | Reference Measurement | Results |
|---|---|---|---|---|
| Escolà et al. [67] | • • | Height | MTLS | r: 0.79; R²: 0.62 |
| | | H90 | | r: 0.83; R²: 0.69 |
| Llorens et al. [50] | • | Height | Field measurements | R²: 0.23 |
| | | Volume | Ultrasonic sensor | R²: 0.56 |
| | | LAI | | R²: 0.22 |
| Chakraborty et al. [90] | • | Height | Field measurements | r: 0.59 |
| | | Volume | UAV canopy surface area | r: 0.82 (convex hull); r: 0.75 (voxel grid) |
| Rinaldi et al. [48] | • | Height | Field measurements | R²: 0.98 |
| Torres-Sánchez et al. [31] | • • | Height | MTLS | R²: 0.76 |
| | | Area | | R²: 0.78 |
| | | Volume | | R²: 0.73 (convex hull); R²: 0.85 (2.5D volume) |
| Pagliai et al. [34] | • • | Height | MTLS | R²: 0.80; RMSE: 0.124 m |
| | | Volume | | R²: 0.78; RMSE: 0.057 m³ |
| Buunk et al. [91] | • | Volume | UAV LiDAR | R²: 0.70 |
| Petrović et al. [44] | • • | Volume | MTLS and UAV | R²: 0.92 |
| Caruso et al. [52] | • | Height | Field measurements | R²: 0.61 |
| | | Volume | | R²: 0.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ferreira, L.; Sousa, J.J.; Lourenço, J.M.; Peres, E.; Morais, R.; Pádua, L. Comparative Analysis of TLS and UAV Sensors for Estimation of Grapevine Geometric Parameters. Sensors 2024, 24, 5183. https://doi.org/10.3390/s24165183