Mix-VIO: A Visual Inertial Odometry Based on a Hybrid Tracking Strategy

Abstract

1. Introduction

- We propose a VIO system that is robust to illumination changes and accurate in tracking. To handle dynamic lighting and high-speed motion, we combine deep learning with traditional optical flow for feature extraction and matching, and present a hybrid feature point dispersion strategy for more robust and accurate results. Accelerating the feature extraction and matching networks in parallel with TensorRT lets the entire system run in real time on edge devices.

- Unlike the aforementioned approaches [16,17], which accelerate optical flow tracking using direct methods, our approach combines optical flow with parallel deep feature extraction and feature matching. We employ a hybrid of optical flow tracking and feature point matching as our front-end matching scheme, achieving robustness against image blur and lighting changes.

- We have open-sourced our code at https://github.com/luobodan/Mix-vio (accessed on 12 June 2024) for community enhancement and development.

2. Materials and Methods

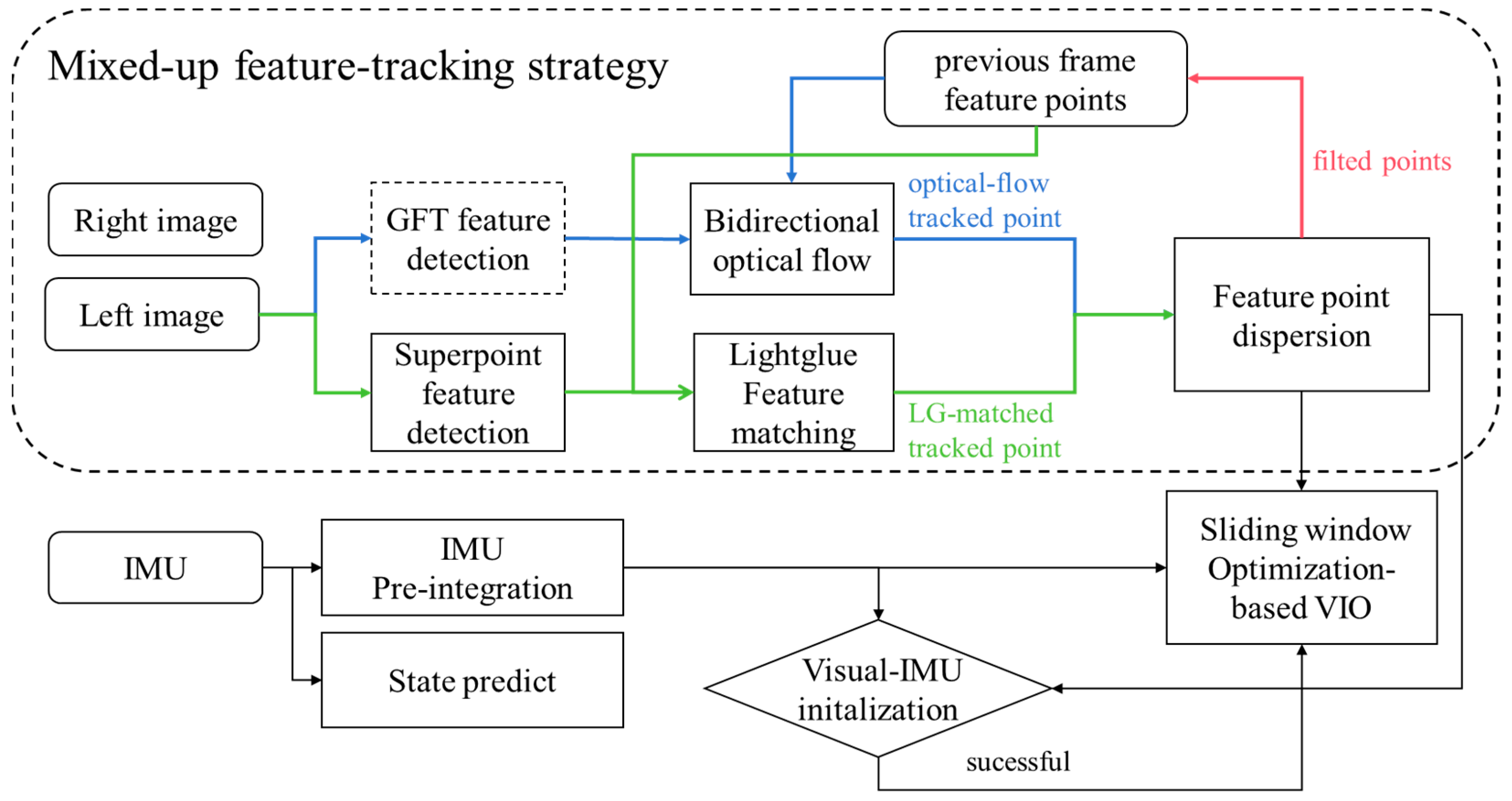

2.1. System Overview

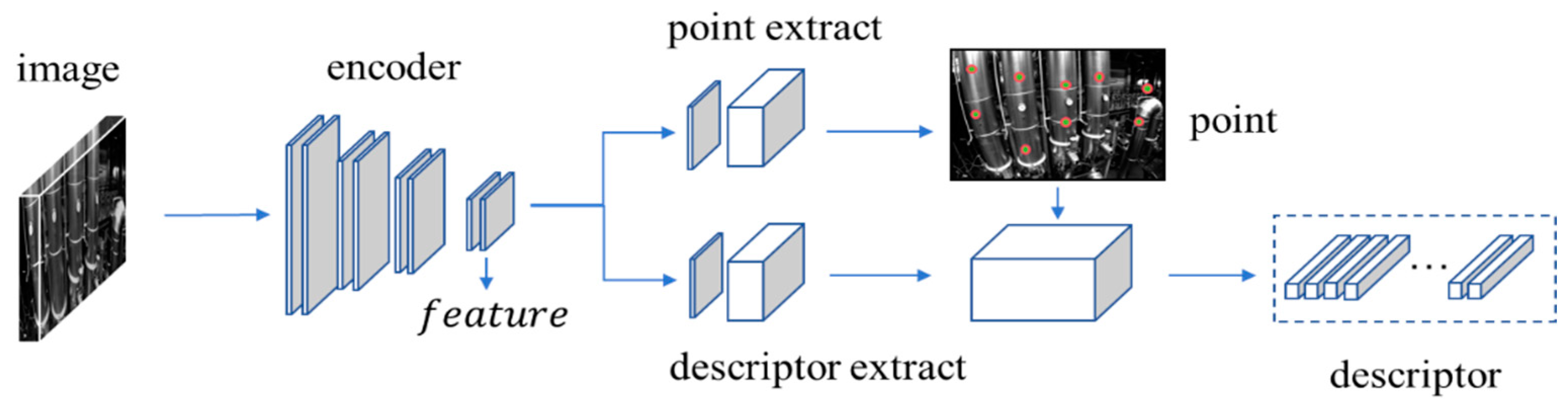

2.2. DNN-Based Feature Extraction and Matching Pipeline Based on SuperPoint and LightGlue

2.2.1. Deep Feature Detection and Description Based on SuperPoint

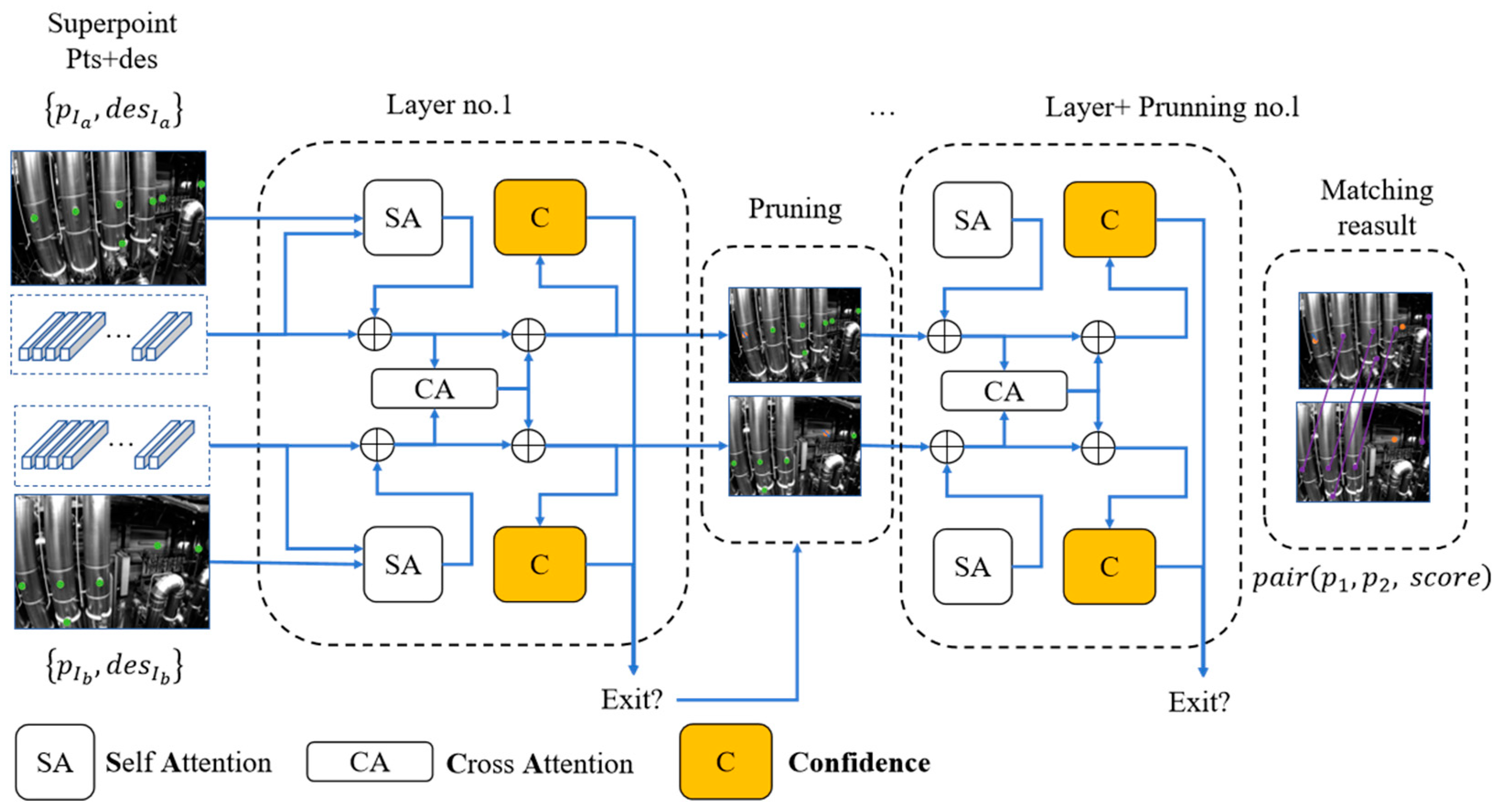

2.2.2. Deep Feature Matching Based on LightGlue

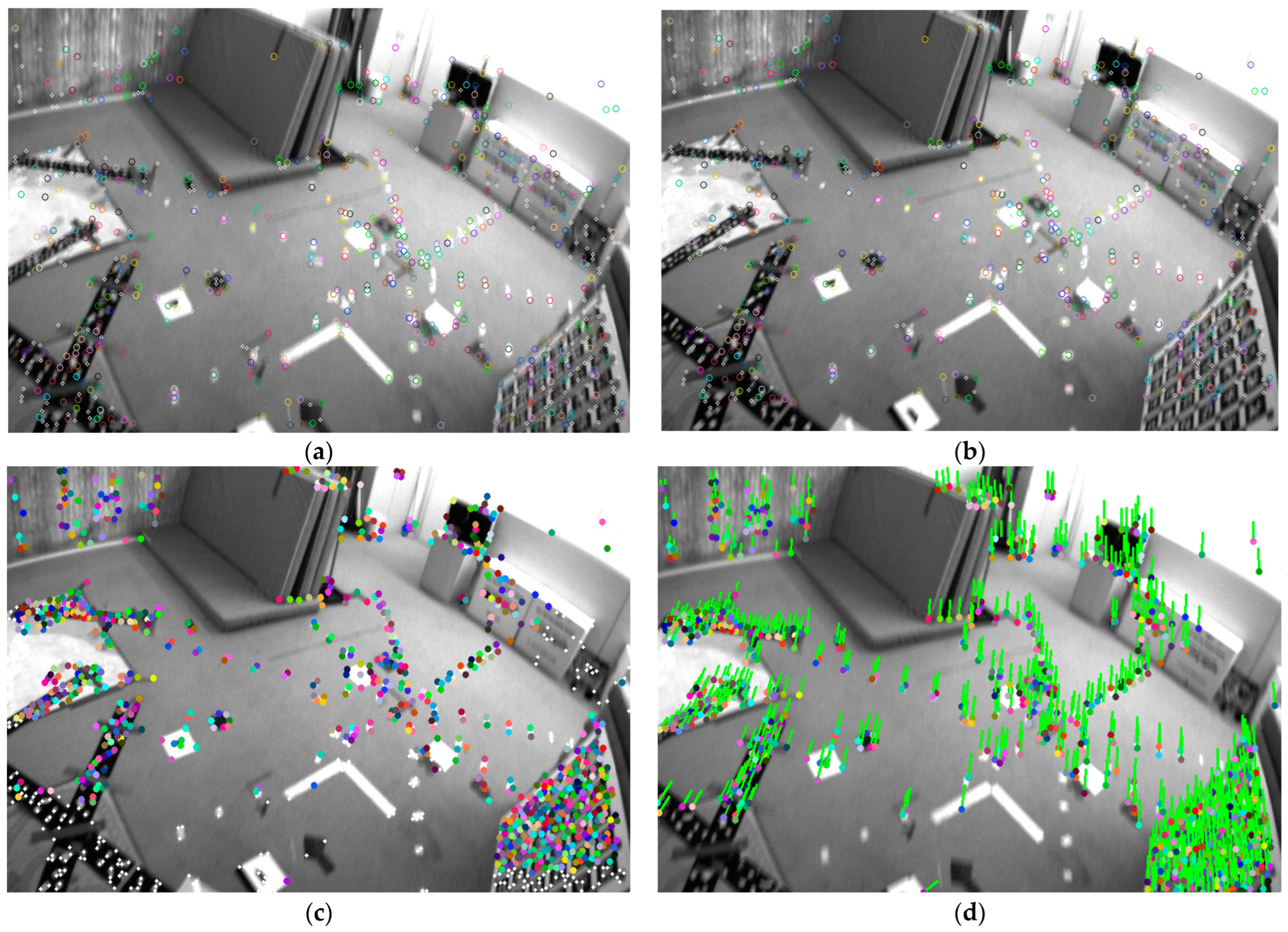

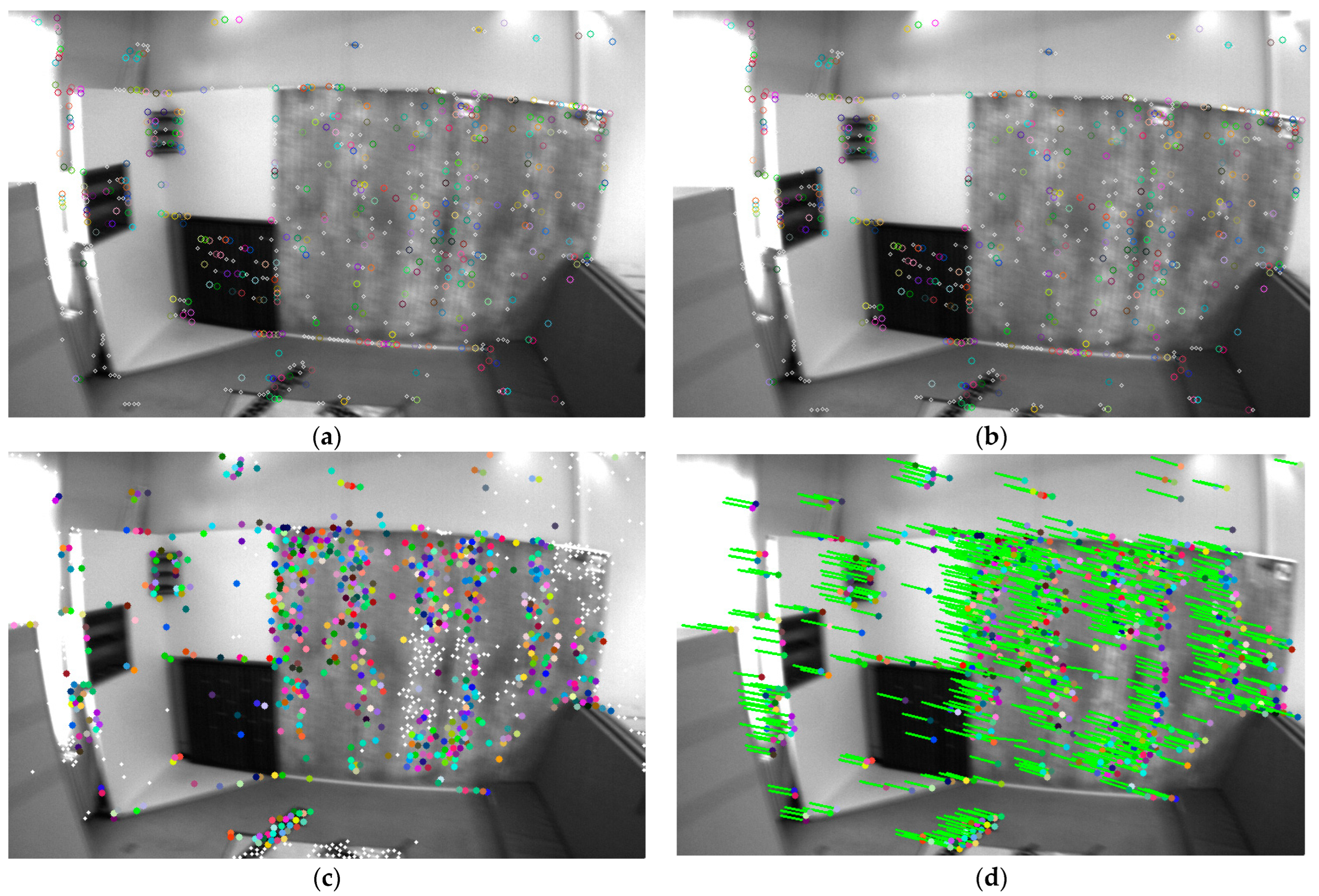

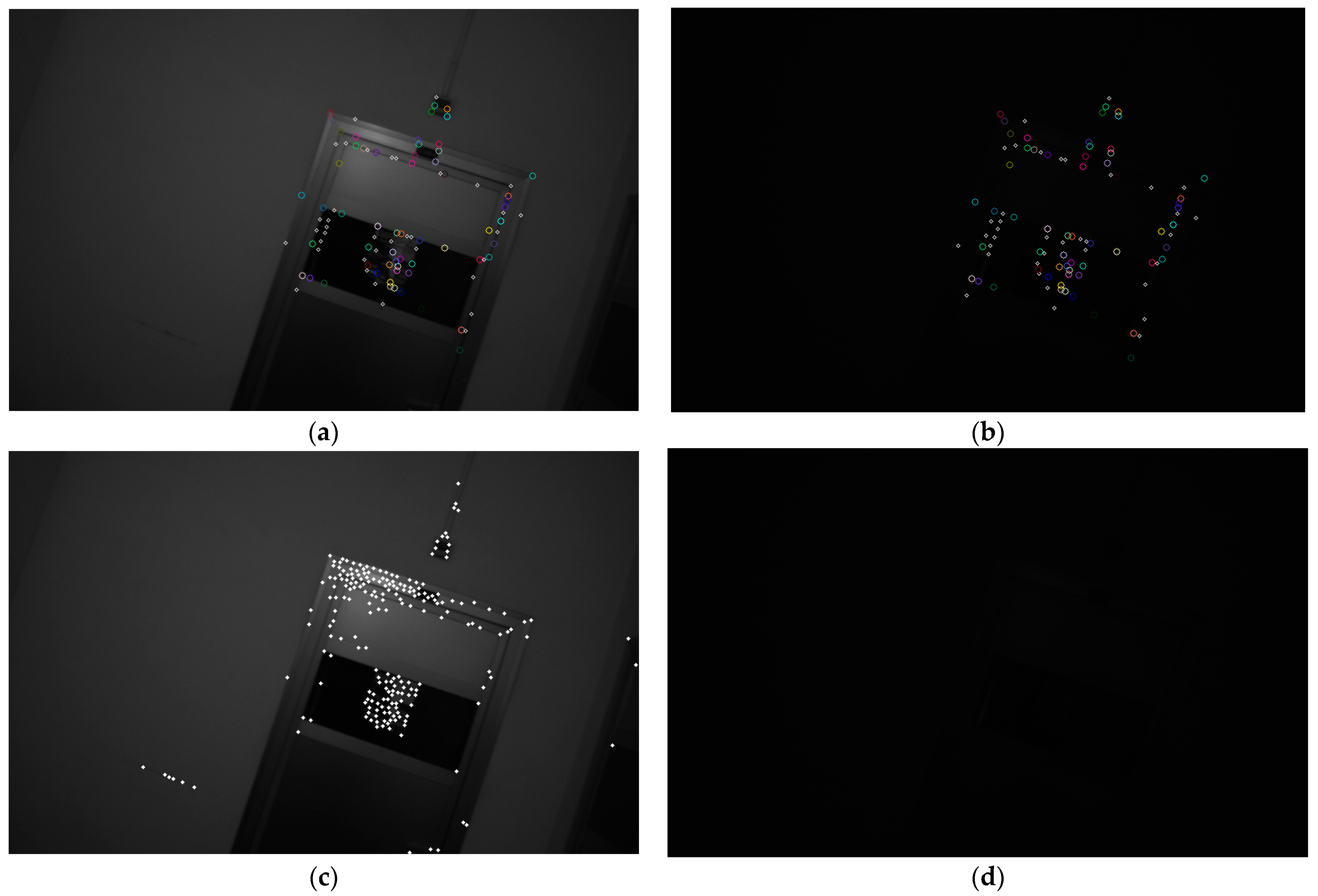

2.3. Hybrid Feature-Tracking Strategy

| Algorithm 1 | Feature point dispersion |
|---|---|
| Input | The vector of successfully tracked optical flow points and the vector of successfully matched SP (deep feature) points, both sorted by the number of times tracked; the minimum distance between points, with one value for optical flow points and one for SP points. |
| Output | The point set to add to the optimization. |
| 1 | cv::Mat mask1, mask2; mask1.fillin(255); mask2.fillin(255); //Step 1. Construct the initial masks for the optical flow points and the SP points, and construct the output point set |
| 2 | for p in the optical flow point vector: |
| 3 | if(mask1.at(p) == 255): |
| 4 | Circle(mask1, −1, , ); |
| 5 | Circle(mask2, −1, , ); |
| 6 | = true; |
| 7 | ; |
| 8 | else: |
| 9 | = false; |
| 10 | end if |
| 11 | end for |
| 12 | for p in the SP point vector: |
| 13 | if(mask2.at(p) == 255): |
| 14 | = true; |
| 15 | Circle(mask2, −1, , ); |
| 16 | ; |
| 17 | else: |
| 18 | = false; |
| 19 | end if |
| 20 | end for |
| 21 | return the point set |
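One possible reading of Algorithm 1 is sketched below in plain Python, without OpenCV. The names `disperse_points`, `fill_circle`, `r_flow`, and `r_sp` are hypothetical and do not come from the paper or its code. Several symbols in the listing above were lost, so the sketch assumes that an accepted optical flow point blocks a disc of radius `r_flow` in the first mask and a disc of radius `r_sp` in the second, and that points are integer pixel coordinates inside the image.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]  # (x, y) integer pixel coordinates inside the image


def fill_circle(mask: List[bytearray], center: Point, radius: int) -> None:
    """Set every pixel within `radius` of `center` to 0 (a filled disc)."""
    height, width = len(mask), len(mask[0])
    cx, cy = center
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                mask[y][x] = 0


def disperse_points(
    flow_pts: Sequence[Point],  # optical flow points, longest-tracked first
    sp_pts: Sequence[Point],    # SP points, longest-tracked first
    width: int,
    height: int,
    r_flow: int,                # assumed: min distance for optical flow points
    r_sp: int,                  # assumed: min distance for SP points
) -> Tuple[List[Point], List[bool], List[bool]]:
    """Return the kept points plus a keep/reject flag for every input point."""
    mask_flow = [bytearray([255]) * width for _ in range(height)]
    mask_sp = [bytearray([255]) * width for _ in range(height)]
    selected: List[Point] = []
    flow_kept: List[bool] = []
    sp_kept: List[bool] = []

    # Pass 1: optical flow points claim space in both masks.
    for x, y in flow_pts:
        keep = mask_flow[y][x] == 255
        if keep:
            fill_circle(mask_flow, (x, y), r_flow)
            fill_circle(mask_sp, (x, y), r_sp)  # assumed radius, see lead-in
            selected.append((x, y))
        flow_kept.append(keep)

    # Pass 2: SP points only fill the space that is still free in mask_sp.
    for x, y in sp_pts:
        keep = mask_sp[y][x] == 255
        if keep:
            fill_circle(mask_sp, (x, y), r_sp)
            selected.append((x, y))
        sp_kept.append(keep)

    return selected, flow_kept, sp_kept


# Small usage example on a 100 x 100 image.
demo_selected, demo_flow_kept, demo_sp_kept = disperse_points(
    flow_pts=[(20, 20), (25, 20), (60, 60)],
    sp_pts=[(22, 20), (40, 40), (62, 60), (43, 40)],
    width=100, height=100, r_flow=10, r_sp=5,
)
```

Because both inputs are sorted by track count, longer-lived points are visited first and therefore win any conflict over the same image region. Optical flow points are processed before SP points, so under these assumptions an SP point is only added where no accepted optical flow point already covers the neighbourhood.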

2.3.1. IMU State Estimation and Error Propagation

2.3.2. Backend Optimization

3. Results

3.1. EuRoC Dataset

3.2. TUM-VI Dataset

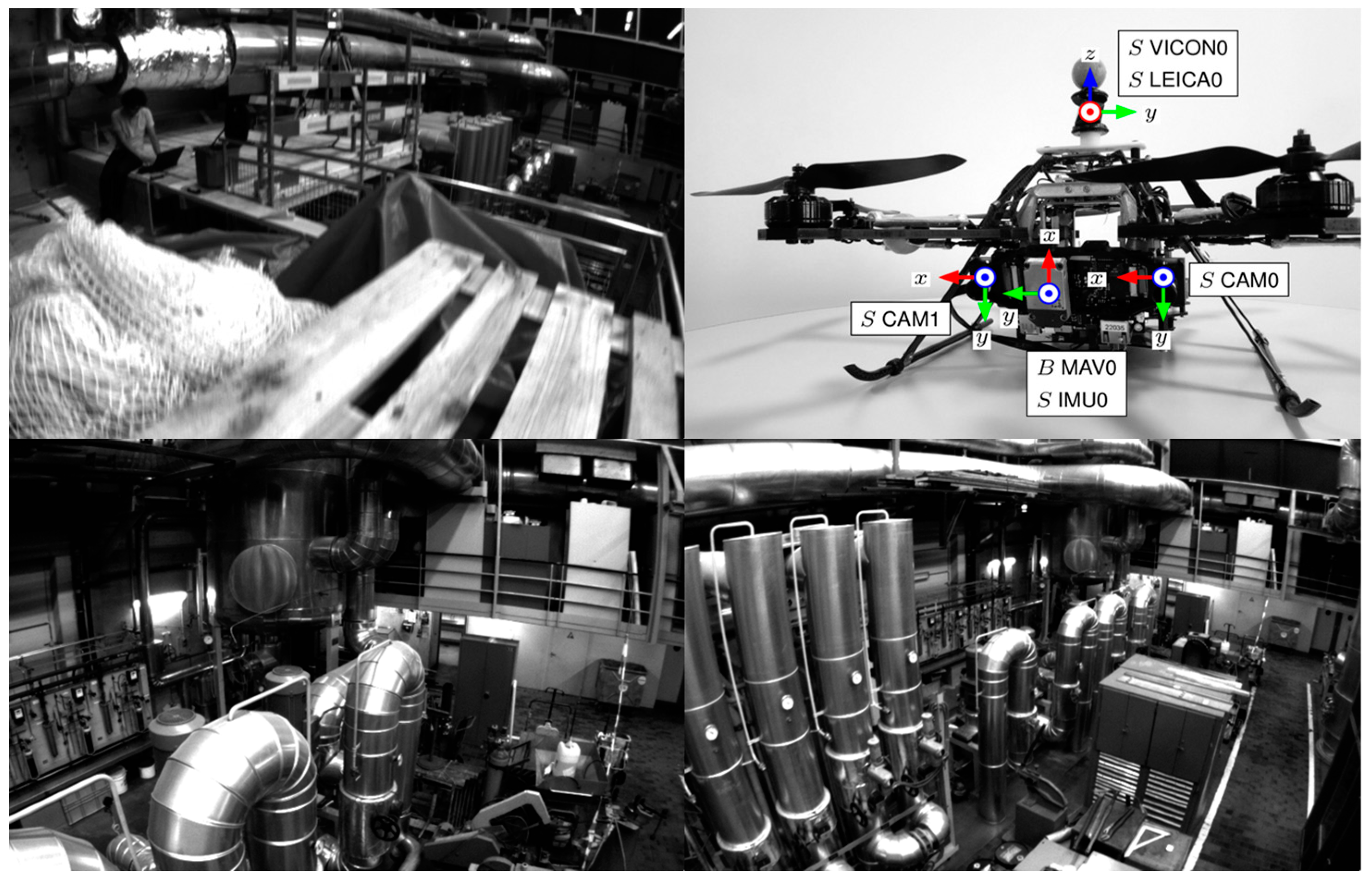

3.3. UMA-VI Dataset

4. Discussion

4.1. EuRoC Dataset Result

4.2. TUM-VI Dataset Result

4.3. UMA-VI Dataset Result

4.4. Time Consumption

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

2. Cheng, J.; Zhang, L.; Chen, Q.; Hu, X.; Cai, J. A review of visual SLAM methods for autonomous driving vehicles. Eng. Appl. Artif. Intell. 2022, 114, 104992.

3. Yuan, H.; Wu, C.; Deng, Z.; Yin, J. Robust Visual Odometry Leveraging Mixture of Manhattan Frames in Indoor Environments. Sensors 2022, 22, 8644.

4. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

5. Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

6. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

7. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam. IEEE Trans. Robot. 2021, 37, 1874–1890.

8. Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

9. Von Stumberg, L.; Cremers, D. DM-VIO: Delayed marginalization visual-inertial odometry. IEEE Robot. Autom. Lett. 2022, 7, 1408–1415.

10. Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

11. Baker, S.; Matthews, I. Lucas-kanade 20 years on: A unifying framework. Int. J. Comput. Vis. 2004, 56, 221–255.

12. Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

13. Zuñiga-Noël, D.; Jaenal, A.; Gomez-Ojeda, R.; Gonzalez-Jimenez, J. The UMA-VI dataset: Visual–inertial odometry in low-textured and dynamic illumination environments. Int. J. Robot. Res. 2020, 39, 1052–1060.

14. Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

15. Viswanathan, D.G. Features from accelerated segment test (fast). In Proceedings of the 10th Workshop on Image Analysis for Multimedia Interactive Services, London, UK, 6–8 May 2009; pp. 6–8.

16. Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265.

17. Xu, H.; Yang, C.; Li, Z. OD-SLAM: Real-time localization and mapping in dynamic environment through multi-sensor fusion. In Proceedings of the 2020 5th International Conference on Advanced Robotics and Mechatronics (ICARM), Shenzhen, China, 18–21 December 2020; pp. 172–177.

18. Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 3565–3572.

19. Leutenegger, S.; Furgale, P.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R. Keyframe-based visual-inertial slam using nonlinear optimization. In Robotics: Science and Systems (RSS) 2013; MIT Press: Cambridge, MA, USA, 2013.

20. Clark, R.; Wang, S.; Wen, H.; Markham, A.; Trigoni, N. Vinet: Visual-inertial odometry as a sequence-to-sequence learning problem. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

21. Teed, Z.; Lipson, L.; Deng, J. Deep patch visual odometry. Adv. Neural Inf. Process. Syst. 2024, 36.

22. Tang, C.; Tan, P. Ba-net: Dense bundle adjustment network. arXiv 2018, arXiv:1806.04807.

23. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

24. Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5173–5182.

25. Verdie, Y.; Yi, K.; Fua, P.; Lepetit, V. Tilde: A temporally invariant learned detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5279–5288.

26. Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. Lift: Learned invariant feature transform. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 467–483.

27. Ono, Y.; Trulls, E.; Fua, P.; Yi, K.M. LF-Net: Learning local features from images. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 6237–6247.

28. Lowe, G. Sift-the scale invariant feature transform. Int. J 2004, 2, 2.

29. DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 224–236.

30. Li, G.; Yu, L.; Fei, S. A deep-learning real-time visual SLAM system based on multi-task feature extraction network and self-supervised feature points. Measurement 2021, 168, 108403.

31. Sarlin, P.-E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superglue: Learning feature matching with graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4938–4947.

32. Lindenberger, P.; Sarlin, P.-E.; Pollefeys, M. Lightglue: Local feature matching at light speed. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 17627–17638.

33. Xu, H.; Liu, P.; Chen, X.; Shen, S. D2SLAM: Decentralized and Distributed Collaborative Visual-inertial SLAM System for Aerial Swarm. arXiv 2022, arXiv:2211.01538.

34. Shi, J. Good features to track. In Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

35. Xu, K.; Hao, Y.; Yuan, S.; Wang, C.; Xie, L. Airvo: An illumination-robust point-line visual odometry. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3429–3436.

36. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.-M.; Randall, G. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 32, 722–732.

37. Liu, P.; Feng, C.; Xu, Y.; Ning, Y.; Xu, H.; Shen, S. OmniNxt: A Fully Open-source and Compact Aerial Robot with Omnidirectional Visual Perception. arXiv 2024, arXiv:2403.20085.

38. Yang, S.; Scherer, S.A.; Yi, X.; Zell, A. Multi-camera visual SLAM for autonomous navigation of micro aerial vehicles. Robot. Auton. Syst. 2017, 93, 116–134.

39. Liu, Z.; Shi, D.; Li, R.; Yang, S. ESVIO: Event-based stereo visual-inertial odometry. Sensors 2023, 23, 1998.

40. Chen, P.; Guan, W.; Lu, P. Esvio: Event-based stereo visual inertial odometry. IEEE Robot. Autom. Lett. 2023, 8, 3661–3668.

41. Schubert, D.; Goll, T.; Demmel, N.; Usenko, V.; Stückler, J.; Cremers, D. The TUM VI benchmark for evaluating visual-inertial odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1680–1687.

42. Bloesch, M.; Burri, M.; Omari, S.; Hutter, M.; Siegwart, R. Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback. Int. J. Robot. Res. 2017, 36, 1053–1072.

43. Gomez-Ojeda, R.; Moreno, F.-A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746.

| EuRoC | MH01 | MH02 | MH03 | MH04 | MH05 | V101 | V102 | V103 | V201 | V202 | V203 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OKVIS | 0.33 | 0.37 | 0.25 | 0.27 | 0.39 | 0.094 | 0.14 | 0.21 | 0.090 | 0.17 | 0.23 | 0.231 |
| MSCKF | 0.42 | 0.45 | 0.23 | 0.37 | 0.48 | 0.34 | 0.2 | 0.67 | 0.1 | 0.16 | 0.34 | 0.341 |
| ROVIO | 0.21 | 0.25 | 0.25 | 0.49 | 0.52 | 0.10 | 0.10 | 0.14 | 0.12 | 0.14 | 0.23 | 0.231 |
| Vins-m-150 | 0.15 | 0.15 | 0.22 | 0.32 | 0.30 | 0.079 | 0.11 | 0.18 | 0.08 | 0.16 | 0.27 | 0.183 |
| Vins-m-300 | 0.16 | 0.13 | 0.14 | 0.18 | 0.33 | 0.069 | 0.12 | 0.16 | 0.24 | 0.13 | 0.16 | 0.165 |
| Vins-m-400 | 0.14 | 0.10 | 0.08 | 0.17 | 0.22 | 0.066 | 0.096 | failed | 0.11 | 0.11 | 0.20 | - |
| Mix-VIO (200 + 1024) | 0.17 | 0.12 | 0.07 | 0.30 | 0.25 | 0.070 | 0.096 | 0.13 | 0.10 | 0.070 | 0.13 | 0.137 |
| Mix-VIO (50 + 512) | 0.10 | 0.13 | 0.14 | 0.22 | 0.35 | 0.063 | 0.097 | 0.15 | 0.063 | 0.070 | 0.12 | 0.136 |
| Mix-VIO (0 + 512) | 0.23 | 0.18 | 0.2 | 0.32 | 0.36 | 0.090 | 0.12 | 0.15 | 0.074 | 0.10 | 0.49 | 0.210 |
| Mix-VIO (0 + 1024) | 0.22 | 0.16 | 0.16 | 0.27 | 0.26 | 0.073 | 0.11 | 0.21 | 0.10 | 0.10 | 0.43 | 0.190 |
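The Average column appears to be the arithmetic mean over the eleven sequences, with "-" reported when a method fails on any sequence. A minimal sketch under that assumption follows; `average_error` is a hypothetical helper, not part of the Mix-VIO code.

```python
from statistics import mean
from typing import Optional, Sequence, Union

Entry = Union[float, str]  # a numeric error, or a label such as "failed"


def average_error(row: Sequence[Entry]) -> Optional[float]:
    """Mean over all sequences, or None when any run has no numeric result."""
    if any(isinstance(value, str) for value in row):
        return None
    return round(mean(row), 3)


# Rows copied from the EuRoC table above (MH01 ... V203).
okvis = [0.33, 0.37, 0.25, 0.27, 0.39, 0.094, 0.14, 0.21, 0.090, 0.17, 0.23]
vins_m_400 = [0.14, 0.10, 0.08, 0.17, 0.22, 0.066, 0.096, "failed",
              0.11, 0.11, 0.20]
mix_vio_200_1024 = [0.17, 0.12, 0.07, 0.30, 0.25, 0.070, 0.096, 0.13,
                    0.10, 0.070, 0.13]

okvis_avg = average_error(okvis)               # matches the table: 0.231
vins_avg = average_error(vins_m_400)           # None, shown as "-" in the table
mix_avg = average_error(mix_vio_200_1024)      # matches the table: 0.137
```

Leaving the average undefined for a failed run avoids rewarding a method for skipping its hardest sequence.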

| | Number | Proportion |
|---|---|---|
| SP + LG | 415 | 415/1024 ≈ 41% |
| GFT + opt-flow | 743 | 743/1024 ≈ 73% |

| TUMVI | Corridor4 | Corridor5 | Room1 | Room2 | Room5 | Average |
|---|---|---|---|---|---|---|
| Vins-m | 0.25 | 0.77 | 0.07 | 0.07 | 0.20 | 0.272 |
| VF-m | 0.26 | 0.80 | 0.10 | 0.07 | 0.21 | 0.288 |
| VF-s | 0.20 | 0.88 | 0.09 | 0.19 | 0.14 | 0.300 |
| Mix-VIO-m (40 + 1024) | 0.31 | 0.80 | 0.10 | 0.07 | 0.18 | 0.292 |
| Mix-VIO-m (100 + 1024) | 0.28 | 0.67 | 0.07 | 0.06 | 0.15 | 0.246 |
| Mix-VIO-s (80 + 1024) | 0.13 | 0.66 | 0.10 | 0.22 | 0.29 | 0.280 |
| Mix-VIO-s (100 + 1024) | 0.16 | 0.55 | 0.11 | 0.19 | 0.31 | 0.264 |
| Mix-VIO-s (150 + 1024) | 0.08 | 0.68 | 0.11 | 0.17 | 0.22 | 0.252 |

| UMA (Indoor) | Class-En | Hall1-En | Hall1-Rev-En | Hall23-En | Third-Floor-En | Average |
|---|---|---|---|---|---|---|
| mono | | | | | | |
| Vins-m (150) | 0.11 | 0.35 | failed | failed | 0.36 | - |
| Mix-VIO-m (75 + 1024) | 0.20 | 0.31 | 0.25 | 0.29 | 0.32 | 0.274 |
| Mix-VIO-m (50 + 1024) | 0.11 | 0.22 | 0.24 | 0.23 | 0.30 | 0.22 |
| Mix-VIO-m (0 + 1024) | 0.31 | 0.22 | 0.25 | 0.29 | 0.32 | 0.26 |
| stereo | | | | | | |
| Vins-s (50) | 0.12 | 0.14 | 0.16 | drift | 0.31 | - |
| Mix-VIO-s (50 + 1024) | 0.14 | 0.26 | 0.17 | 0.32 | 0.26 | 0.23 |
| Mix-VIO-s (30 + 1024) | 0.14 | 0.18 | 0.17 | 0.32 | 0.30 | 0.222 |

| UMA (Illumination-Change) | Class-Eng | Conf-Csc2 | Conf-Csc3 | Third-Floor-Csc2 | Average |
|---|---|---|---|---|---|
| mono | | | | | |
| Vins-m-150 | failed | failed | failed | failed | - |
| Vins-m-150 (ours) | 0.11 | 0.26 | failed | 0.18 | - |
| Mix-VIO-m (75 + 1024) | 0.26 | 0.26 | 0.28 | 0.18 | 0.245 |
| Mix-VIO-m (50 + 1024) | 0.11 | 0.27 | 0.28 | 0.18 | 0.21 |
| Mix-VIO-m (0 + 1024) | 0.31 | 0.26 | 0.29 | 0.17 | 0.2575 |
| stereo | | | | | |
| Airvo [35] | 0.52 | 0.16 | - | 0.13 | - |
| PL-slam [43] | 2.69 | 1.59 | - | 6.06 | - |
| Vins-s (50 ours) | drift | 0.23 | 0.099 | 0.14 | - |
| Mix-VIO-s (50 + 1024) | 0.17 | 0.16 | 0.095 | 0.094 | 0.15225 |
| Mix-VIO-s (30 + 1024) | 0.14 | 0.20 | 0.098 | 0.091 | 0.1318 |

| | Number | Proportion |
|---|---|---|
| SP + LG | 133 | 133/1024 ≈ 13% |
| GFT + opt-flow | 0 | 0/1024 ≈ 0% |

| Time | Point-Detection | Point-Matching |
|---|---|---|
| SP + LG | 9.2 ms | 16.9 ms |
| SP + LG (TRT acc) | 2.7 ms | 3.5 ms |
| SP + SG | 9.2 ms | 42.3 ms |
| SP + SG (TRT acc) | 2.7 ms | 12.8 ms |
| GFT + opt-flow | 3.1 ms | 7.0 ms |
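To put the timing table in context, the sketch below adds detection and matching time per frame and compares the plain and TensorRT-accelerated SP + LG pipelines. It assumes the two stages run one after the other, so their times simply add; if the stages overlap in practice, the true per-frame cost would be lower. The name `front_end_ms` is hypothetical.

```python
# (detection ms, matching ms) copied from the timing table above.
timings_ms = {
    "SP + LG": (9.2, 16.9),
    "SP + LG (TRT acc)": (2.7, 3.5),
    "GFT + opt-flow": (3.1, 7.0),
}


def front_end_ms(name: str) -> float:
    """Per-frame cost if detection and matching run back to back (assumed)."""
    detection, matching = timings_ms[name]
    return detection + matching


# Ratio of the unaccelerated to the TensorRT-accelerated SP + LG cost.
trt_speedup = front_end_ms("SP + LG") / front_end_ms("SP + LG (TRT acc)")

for name in timings_ms:
    print(f"{name}: {front_end_ms(name):.1f} ms per frame")
print(f"TensorRT speedup for SP + LG: {trt_speedup:.1f}x")
```

Under this additive assumption, the accelerated deep pipeline costs less per frame than the GFT and optical flow path, which is consistent with the claim that the system runs in real time.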

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuan, H.; Han, K.; Lou, B. Mix-VIO: A Visual Inertial Odometry Based on a Hybrid Tracking Strategy. Sensors 2024, 24, 5218. https://doi.org/10.3390/s24165218
