Evaluating UAV-Based Remote Sensing for Hay Yield Estimation

Abstract

1. Introduction

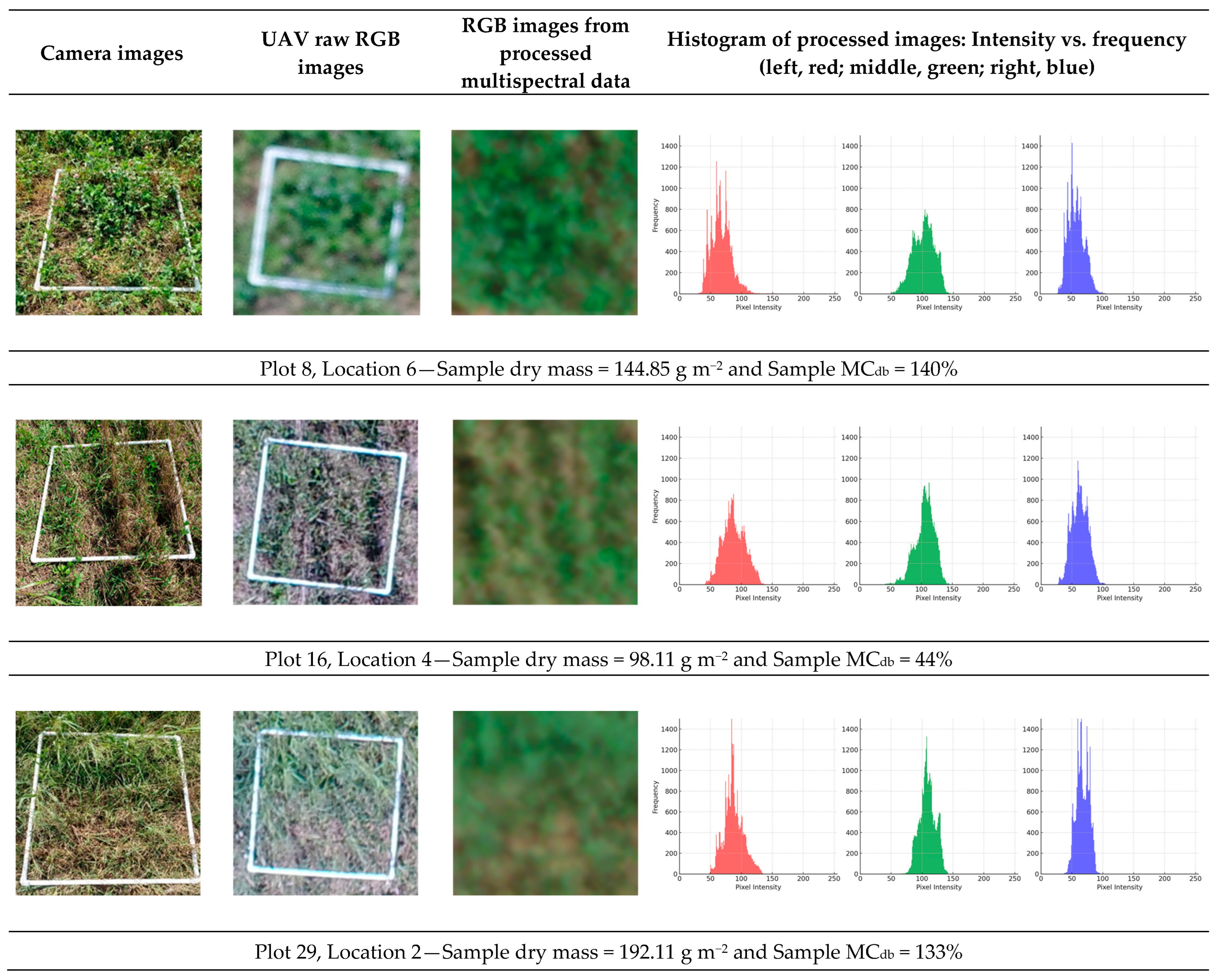

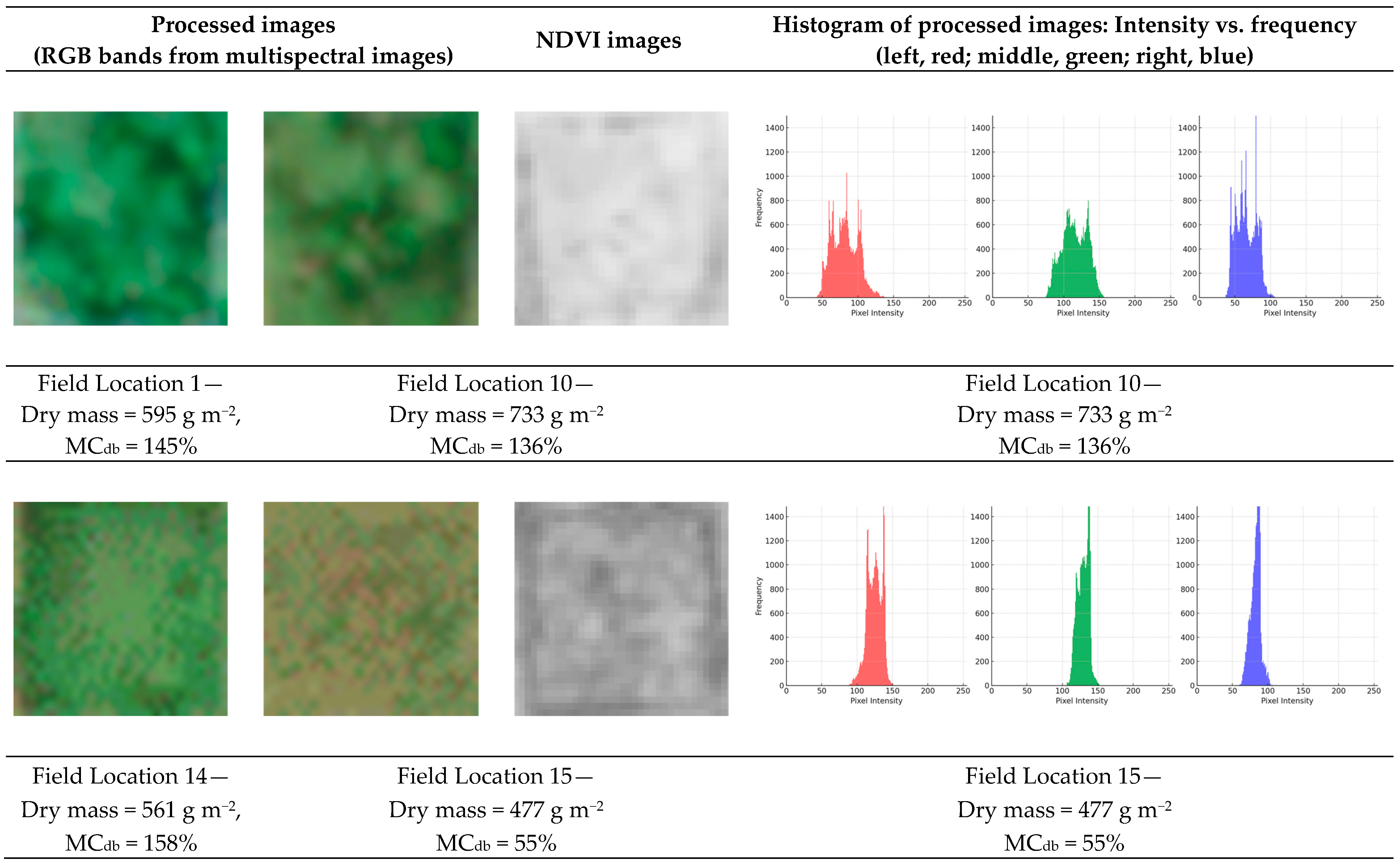

2. Materials and Methods

2.1. Field Information

2.2. Ground-Truth Data Collection

2.3. UAV System and Remote Sensing Data Collection

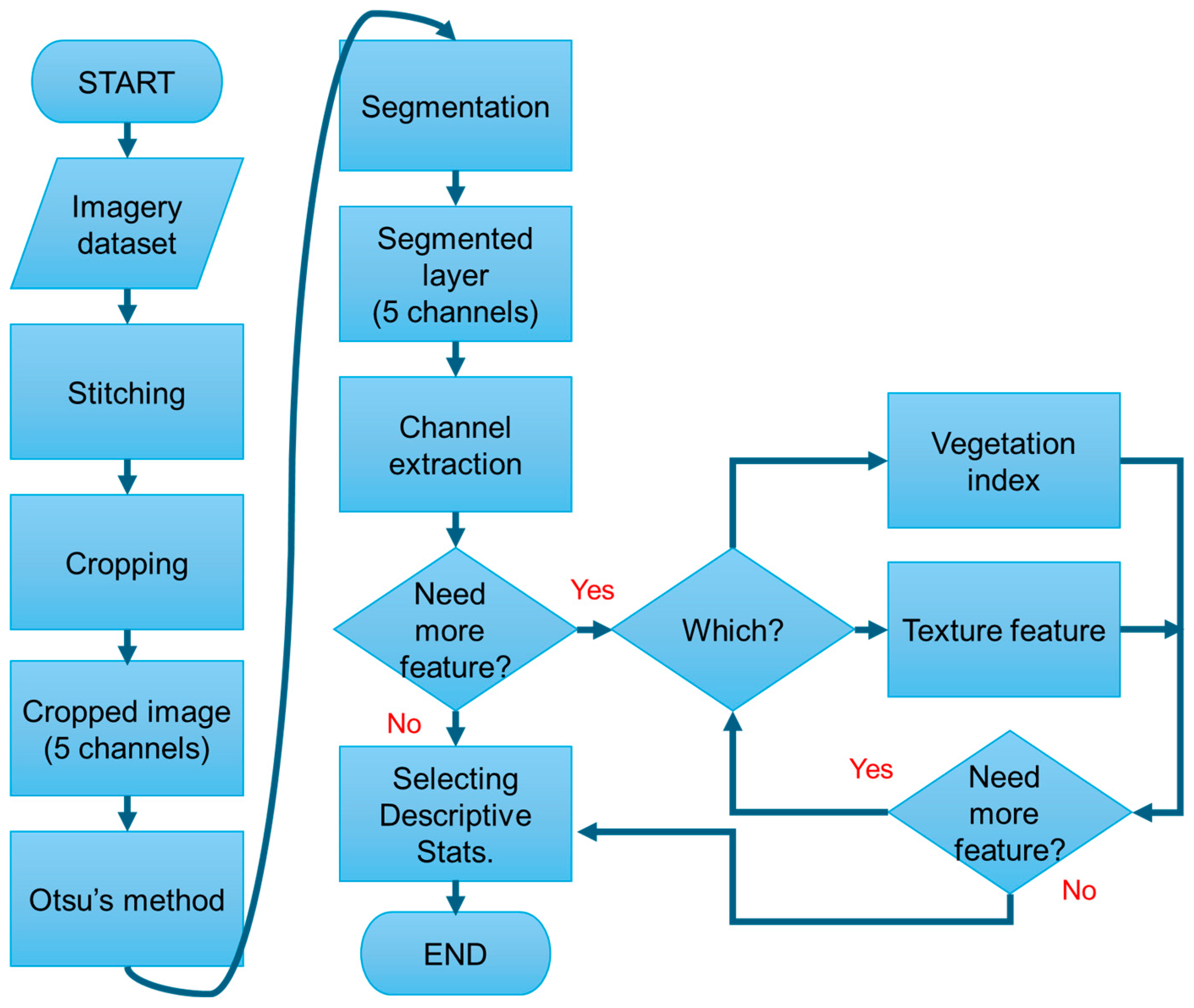

2.4. Data Processing and Analysis

3. Results

3.1. Ground-Truth Data: Comparison and Mass

3.2. Model Performance

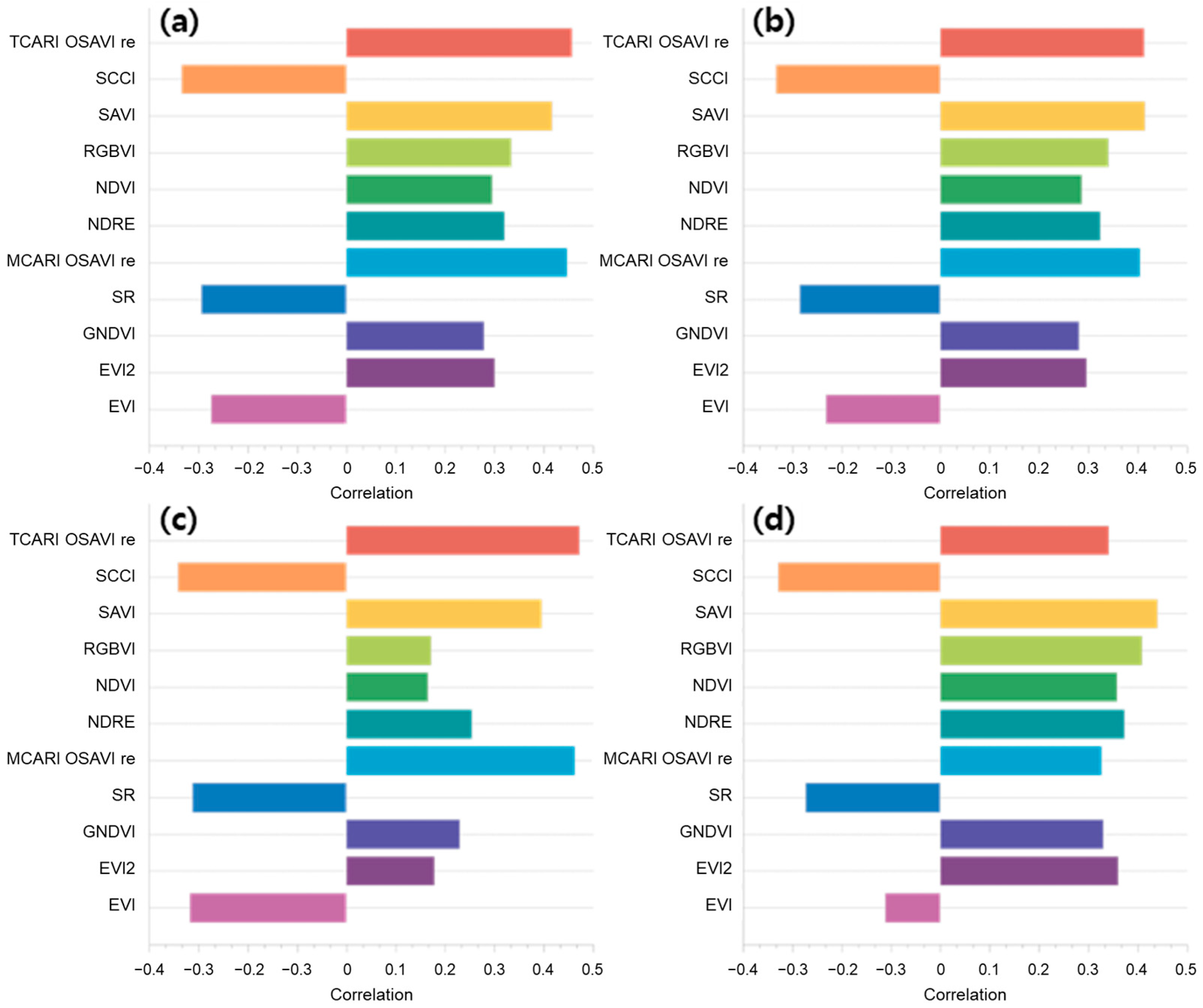

3.2.1. VI Correlation

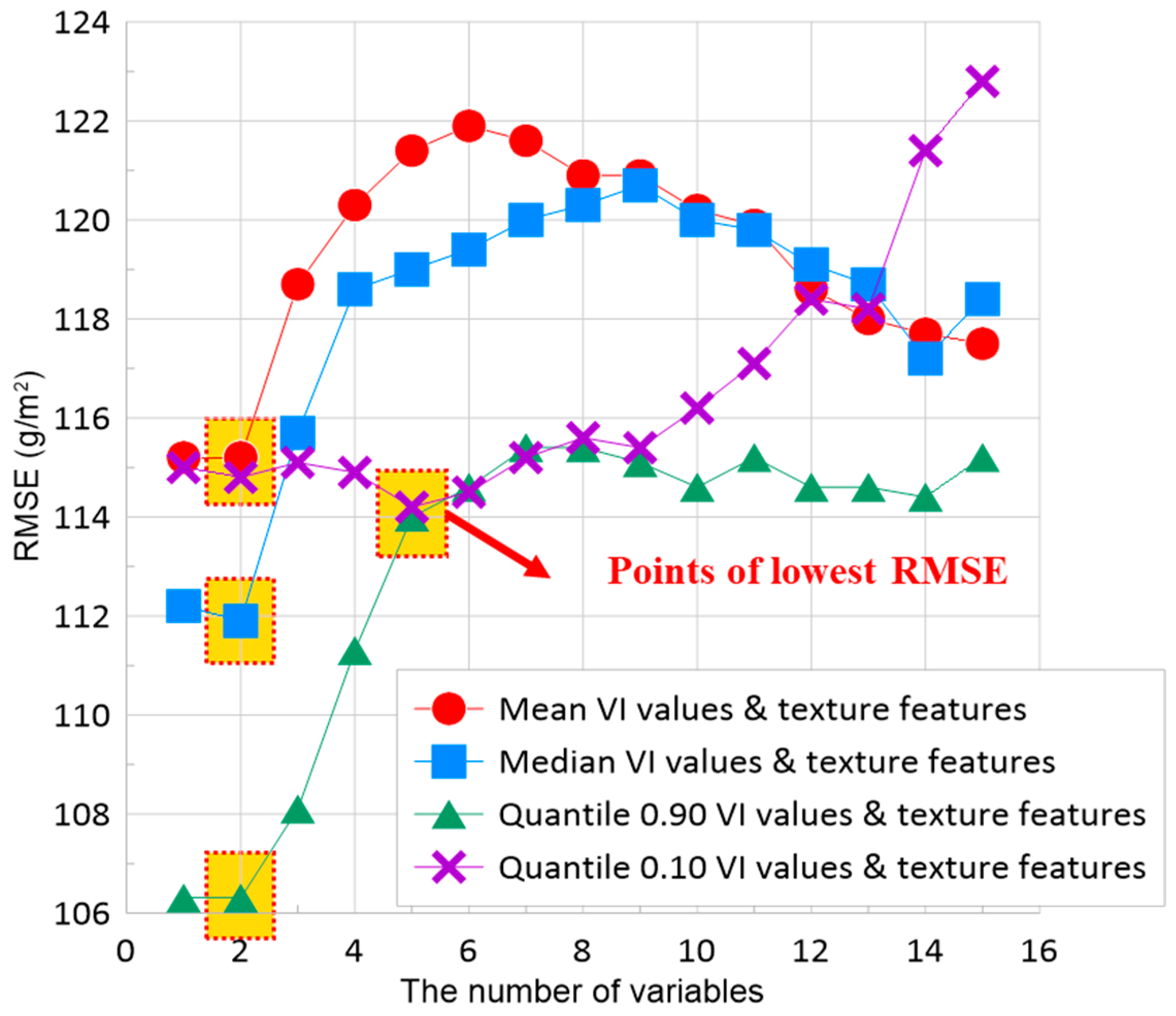

3.2.2. RFE Results

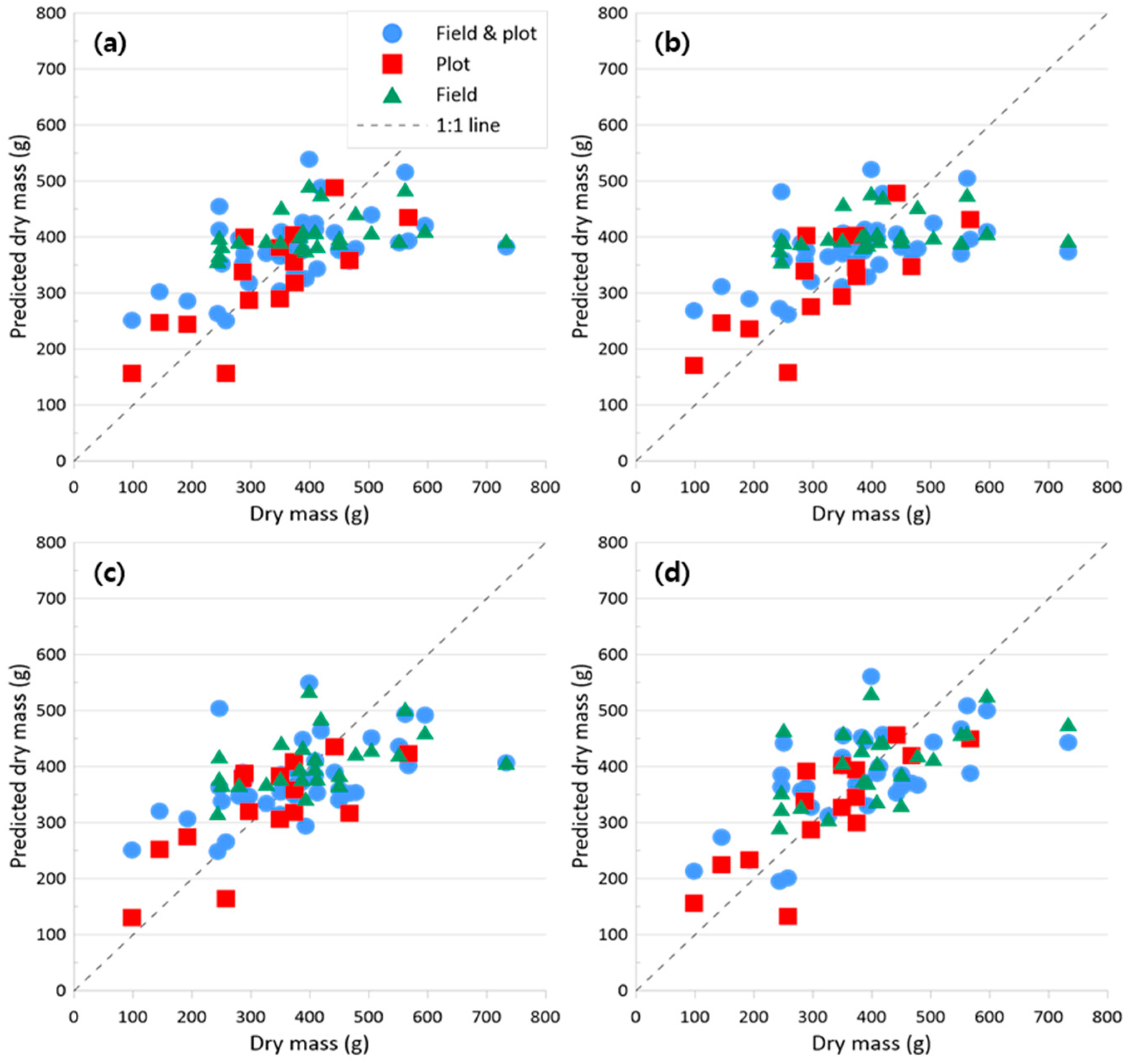

3.2.3. Model Results Based on RFE

4. Discussion

4.1. Ground-Truth Data

4.2. Remote-Sensing Data

4.3. Biomass Estimation in a Mixed Hay Crop

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Index | Equation * | Features | Reference |
|---|---|---|---|
| Enhanced Vegetation Index (EVI) | | Corrects NDVI results for air and soil influence; appropriate for dense canopy areas | [32] |
| Enhanced Vegetation Index 2 (EVI2) | | Variant of EVI calculated from only the red and NIR bands | [33] |
| Green Normalized Difference Vegetation Index (GNDVI) | | More sensitive to chlorophyll concentration than NDVI | [34] |
| Simple Ratio (SR) | | Simple index to distinguish vegetation from soil | [35] |
| Modified Chlorophyll Absorption Ratio Index/Optimized Soil-Adjusted Vegetation Index red edge (MCARI/OSAVI red edge) ** | | Minimizes the effect of non-photosynthetic materials on spectral estimates of absorbed photosynthetically active radiation; enhanced SAVI to minimize soil reflectance | [36] |
| Normalized Difference Red Edge Index (NDRE) | | Sensitive to chlorophyll; improves detection of variation | [37] |
| Normalized Difference Vegetation Index (NDVI) | | Widely used index related to chlorophyll | [38] |
| Red–Green–Blue Vegetation Index (RGBVI) | | Related to chlorophyll; enhanced index for green reflectance | [39] |
| Soil Adjusted Vegetation Index (SAVI) | | Minimizes soil brightness influence; appropriate for arid regions | [35] |
| Simplified Canopy Chlorophyll Content Index (SCCCI) | | Sensitive to N uptake and total plant N%; appropriate for recognizing growth stage | [40] |
| Transformed Chlorophyll Absorption in the Reflectance Index/Optimized Soil-Adjusted Vegetation Index red edge (TCARI/OSAVI red edge) *** | | Indicates the relative abundance of chlorophyll; affected by underlying soil reflectance and leaf area index; enhanced SAVI to minimize soil reflectance | [36] |
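As an illustration of how several of the indices listed above are typically computed from band reflectances, the following is a minimal sketch. It uses the commonly published forms of NDVI, GNDVI, NDRE, SR, SAVI, EVI2, and RGBVI; these are assumptions about the general definitions and are not necessarily identical to the equations used in this study. The function names, the example pixel values, and the SAVI soil factor of 0.5 are illustrative.

```python
# Commonly published forms of several vegetation indices.
# Inputs are surface reflectances in the range 0-1; all names are illustrative.

def ndvi(nir, red):
    return (nir - red) / (nir + red)

def gndvi(nir, green):
    return (nir - green) / (nir + green)

def ndre(nir, red_edge):
    return (nir - red_edge) / (nir + red_edge)

def simple_ratio(nir, red):
    return nir / red

def savi(nir, red, soil_factor=0.5):
    # soil_factor (often written L) = 0.5 is the conventional default, assumed here.
    return (1 + soil_factor) * (nir - red) / (nir + red + soil_factor)

def evi2(nir, red):
    # Two-band EVI (no blue band).
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1)

def rgbvi(red, green, blue):
    return (green ** 2 - red * blue) / (green ** 2 + red * blue)

# Hypothetical reflectances for a single vegetated pixel.
pixel = {"blue": 0.04, "green": 0.08, "red": 0.10, "red_edge": 0.30, "nir": 0.50}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "GNDVI": gndvi(pixel["nir"], pixel["green"]),
    "NDRE": ndre(pixel["nir"], pixel["red_edge"]),
    "SR": simple_ratio(pixel["nir"], pixel["red"]),
    "SAVI": savi(pixel["nir"], pixel["red"]),
    "EVI2": evi2(pixel["nir"], pixel["red"]),
    "RGBVI": rgbvi(pixel["red"], pixel["green"], pixel["blue"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In practice the same expressions would be applied per pixel to whole band rasters, and a summary statistic of each index would then be taken over the sampled area.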

| Index | Equation * | Features |
|---|---|---|
| Contrast | | Measures the drastic change in gray level between contiguous pixels; high-contrast images feature high spatial frequencies |
| Correlation | | Measures the linear dependency in the image; high correlation values imply a linear relationship between the gray levels of adjacent pixel pairs |
| Energy | | Measures texture uniformity or pixel pair repetitions; high energy occurs when the distribution of gray level values is constant or periodic |
| Entropy | | Measures the disorder of an image and is negatively correlated with Energy; entropy is high when the image is texturally complex or includes much noise |
| Homogeneity | | Measures image homogeneity; sensitive to the presence of near-diagonal elements in a GLCM, representing the similarity in gray level between adjacent pixels |
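To make the five texture features above concrete, the sketch below builds a gray-level co-occurrence matrix (GLCM) for a single pixel offset and derives the features from it. It follows widely used Haralick-style definitions, which is an assumption: energy is taken as the sum of squared GLCM entries and homogeneity uses a squared-difference weight, and other conventions exist. The function names, the offset, and the tiny example image are illustrative.

```python
import math

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one pixel offset (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[a][b] += 1
                counts[b][a] += 1  # count each pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]

def texture_features(p):
    """Contrast, correlation, energy, entropy, and homogeneity of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, j, v in cells)
    mu_j = sum(j * v for i, j, v in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, j, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for i, j, v in cells))
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        # Correlation is undefined for a perfectly uniform image.
        "correlation": cov / (sd_i * sd_j) if sd_i > 0 and sd_j > 0 else float("nan"),
        "energy": sum(v ** 2 for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for i, j, v in cells if v > 0),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }

# A 2 x 2 checkerboard with two gray levels: every horizontal neighbor differs.
checkerboard = [[0, 1], [1, 0]]
features = texture_features(glcm(checkerboard, levels=2))
for name, value in features.items():
    print(f"{name}: {value:.3f}")
```

The checkerboard is the extreme case of a texturally "busy" image: contrast is at its maximum for two gray levels and neighboring pixels are perfectly anti-correlated.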

References

1. International Society of Precision Agriculture. Available online: https://www.ispag.org (accessed on 1 January 2024).

2. Pedersen, S.M.; Lind, K.M. Precision agriculture–from mapping to site-specific application. In Precision Agriculture: Technology and Economic Perspectives; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–20.

3. Gordon, C.H.; Derbyshire, J.C.; Wiseman, H.G.; Kane, E.A.; Melin, C.G. Preservation and feeding value of alfalfa stored as hay, haylage, and direct-cut silage. J. Dairy Sci. 1961, 44, 1299–1311.

4. Coffey, L.; Baier, A.H. Guide for Organic Livestock Producers. Available online: https://www.ams.usda.gov/sites/default/files/media/GuideForOrganicLivestockProducers.pdf (accessed on 1 January 2024).

5. Çakmakçı, R.; Salık, M.A.; Çakmakçı, S. Assessment and principles of environmentally sustainable food and agriculture systems. Agriculture 2023, 13, 1073.

6. Dhakal, D.; Islam, M.A. Grass-legume mixtures for improved soil health in cultivated agroecosystem. Sustainability 2018, 10, 2718.

7. Carlson, A.; Greene, C.; Raszap Skorbiansky, S.R.; Hitaj, C.; Ha, K.A.; Cavigelli, M.; Ferrier, P.; McBride, W.D. US Organic Production, Markets, Consumers, and Policy, 2000–21. Available online: https://www.ers.usda.gov/publications/pub-details/?pubid=106015 (accessed on 1 January 2024).

8. Hatzenbuehler, P.L.; Tejeda, H.; Hines, S.; Packham, J. Change in hay-to-milk price responsiveness with dairy industry expansion. J. Agric. Appl. Econ. 2021, 53, 246–258.

9. Sarkar, S.; Jha, P.K. Is precision agriculture worth it? Yes, maybe. J. Biotechnol. Crop Sci. 2020, 9, 4–9.

10. Maguire, S.M.; Godwin, R.J.; O’Dogherty, M.J.; Blackburn, K. A dynamic weighing system for determining individual square bale weights during harvesting. Biosyst. Eng. 2007, 98, 138–145.

11. Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.; Schellberg, J.; Bareth, G. Multi-temporal crop surface models combined with the RGB vegetation index from UAV-based images for forage monitoring in grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2016, XLI-B1, 991–998.

12. Lussem, U.; Bolten, A.; Gnyp, M.L.; Jasper, J.; Bareth, G. Evaluation of RGB-based vegetation indices from UAV imagery to estimate forage yield in grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2018, XLII-3, 1215–1219.

13. Michez, A.; Philippe, L.; David, K.; Sébastien, C.; Christian, D.; Bindelle, J. Can low-cost unmanned aerial systems describe the forage quality heterogeneity? Insight from a timothy pasture case study in southern Belgium. Remote Sens. 2020, 12, 1650.

14. Dvorak, J.S.; Pampolini, L.F.; Jackson, J.J.; Seyyedhasani, H.; Sama, M.P.; Goff, B. Predicting quality and yield of growing alfalfa from a UAV. Trans. ASABE 2021, 64, 63–72.

15. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on convolutional neural networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

16. Sadler, E.J.; Baffaut, C.; Sudduth, K.A.; Lerch, R.N.; Kitchen, N.R.; Vorles, E.D.; Veum, K.S.; Yost, M.A. Central Mississippi River Basin LTAR site overview. In Headwaters to Estuaries: Advances in Watershed Science and Management—Proceedings of the Fifth Interagency Conference on Research in the Watersheds, North Charleston, SC, USA, 2–5 March 2015; Stringer, C.E., Krauss, K.W., Latimer, J.S., Eds.; e-Gen. Tech. Rep. SRS-211; U.S. Department of Agriculture Forest Service, Southern Research Station: Asheville, NC, USA, 2016; pp. 61–67. Available online: https://www.srs.fs.usda.gov/pubs/gtr/gtr_srs211.pdf?#page=79 (accessed on 1 January 2024).

17. Blaser, R.E.; Hamme, R.C., Jr.; Fontenot, J.P.; Bryant, H.T.; Polan, C.E.; Wolf, D.D.; McClaugherty, F.S.; Kline, R.G.; Moore, J.S. Forage-animal management systems. In Forage-Animal Management Systems; Virginia Agricultural Experiment Station: Virginia Beach, VA, USA, 1986; pp. 86–87. Available online: http://hdl.handle.net/10919/56312 (accessed on 1 January 2024).

18. ASAE Standard No. S358.2; Moisture Measurement—Forages. American Society of Agricultural and Biological Engineers (ASABE): St. Joseph, MI, USA, 2012.

19. Zhou, J.; Yungbluth, D.; Vong, C.N.; Scaboo, A.; Zhou, J. Estimation of the Maturity Date of Soybean Breeding Lines Using UAV-Based Multispectral Imagery. Remote Sens. 2019, 11, 2075.

20. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

21. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

22. Yin, Y.; Jang-Jaccard, J.; Xu, W.; Singh, A.; Zhu, J.; Sabrina, F.; Kwak, J. IGRF-RFE: A hybrid feature selection method for MLP-based network intrusion detection on UNSW-NB15 dataset. J. Big Data 2023, 10, 15.

23. Safari, H.; Fricke, T.; Reddersen, B.; Möckel, T.; Wachendorf, M. Comparing mobile and static assessment of biomass in heterogeneous grassland with a multi-sensor system. J. Sens. Sens. Syst. 2016, 5, 301–312.

24. Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.

25. Ramsey, H. Development and Implementation of Hay Yield Monitoring Technology. Master’s Thesis, Clemson University, Clemson, SC, USA, 2015. Available online: https://tigerprints.clemson.edu/cgi/viewcontent.cgi?article=3248&context=all_theses (accessed on 1 January 2024).

26. Moeckel, T.; Safari, H.; Reddersen, B.; Fricke, T.; Wachendorf, M. Fusion of ultrasonic and spectral sensor data for improving the estimation of biomass in grasslands with heterogeneous sward structure. Remote Sens. 2017, 9, 98.

27. Legg, M.; Bradley, S. Ultrasonic arrays for remote sensing of pasture biomass. Remote Sens. 2019, 12, 111.

28. Kharel, T.P.; Bhandari, A.B.; Mubvumba, P.; Tyler, H.L.; Fletcher, R.S.; Reddy, K.N. Mixed-species cover crop biomass estimation using planet imagery. Sensors 2023, 23, 1541.

29. Sun, S.; Zuo, Z.; Yue, W.; Morel, J.; Parsons, D.; Liu, J.; Zhou, Z. Estimation of biomass and nutritive value of grass and clover mixtures by analyzing spectral and crop height data using chemometric methods. Comput. Electron. Agric. 2022, 192, 106571.

30. Bazzo, C.O.G.; Kamali, B.; Hütt, C.; Bareth, G.; Gaiser, T. A review of estimation methods for aboveground biomass in grasslands using UAV. Remote Sens. 2023, 15, 639.

31. Geipel, J.; Bakken, A.K.; Jørgensen, M.; Korsaeth, A. Forage yield and quality estimation by means of UAV and hyperspectral imaging. Precis. Agric. 2021, 22, 1437–1463.

32. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

33. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

34. Modi, A.K.; Das, P. Multispectral imaging camera sensing to evaluate vegetation index from UAV. Methodology 2019, 16, 12.

35. Ren, H.; Zhou, G.; Zhang, F. Using negative soil adjustment factor in soil-adjusted vegetation index (SAVI) for aboveground living biomass estimation in arid grasslands. Remote Sens. Environ. 2018, 209, 439–445.

36. Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crops Res. 2014, 157, 111–123.

37. Morlin Carneiro, F.; Angeli Furlani, C.E.; Zerbato, C.; Candida de Menezes, P.; da Silva Gírio, L.A.; Freire de Oliveira, M. Comparison between vegetation indices for detecting spatial and temporal variabilities in soybean crop using canopy sensors. Precis. Agric. 2020, 21, 979–1007.

38. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309. Available online: https://books.google.co.kr/books?hl=en&lr=&id=ACO-9ZDF_foC&oi=fnd&pg=PA309&dq=Rouse,+J.+W.,+Haas,+R.+H.,+Schell,+J.+A.,+%26+Deering,+D.+W.+(1973).+Monitoring+vegetation+systems+in+the+Great+Plains+with+ERTS.+Proceedings+of+the+3rd+ERTS+Symposium,+1,+309%E2%80%93317.+Scopus.&ots=k9h4JuHDYM&sig=FkZ5kjx4Bydt-R8E8YAJLnPlXTI#v=onepage&q&f=false (accessed on 1 January 2024).

39. Barbosa, B.D.S.; Ferraz, G.A.S.; Gonçalves, L.M.; Marin, D.B.; Maciel, D.T.; Ferraz, P.F.P.; Rossi, G. RGB vegetation indices applied to grass monitoring: A qualitative analysis. Agronomy Res. 2019, 17, 349–357.

40. Perry, E.M.; Goodwin, I.; Cornwall, D. Remote Sensing Using Canopy and Leaf Reflectance for Estimating Nitrogen Status in Red-blush Pears. HortScience 2018, 53, 78–83.

| Rating | Proportion of Timothy Grass and Red Clover |
|---|---|
| 1 | Mostly timothy grass |
| 2 | Approximately 2:1 timothy to red clover |
| 3 | Approximately equal |
| 4 | Approximately 1:2 timothy to red clover |
| 5 | Mostly red clover |

| Group | Location | Date (2020) | Ground Sample Distance (m) | Expected Ground Resolution (mm pixel⁻¹) | Image Resolution (pixels × pixels) | Flight Speed (km h⁻¹) | Frames per Second (fps) |
|---|---|---|---|---|---|---|---|
| Plot | Plot 8 | 14 Sept. | 30 | 20 | 1280 × 960 | 7 | 1 |
| Plot | Plot 16 | 14 Sept. | 30 | 20 | 1280 × 960 | 7 | 1 |
| Plot | Plot 29 | 14 Sept. | 30 | 20 | 1280 × 960 | 7 | 1 |
| Field | North | 14 Sept. | 50 | 34 | 1280 × 960 | 7 | 1 |
| Field | Middle | 15 Sept. | 50 | 34 | 1280 × 960 | 7 | 1 |
| Field | South | 15 Sept. | 50 | 34 | 1280 × 960 | 7 | 1 |
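The flight parameters in the table above imply an image footprint on the ground and a spacing between successive frames. The sketch below works through that arithmetic for the plot and field flights. The function names are hypothetical, and the overlap figure assumes that the short side of the image (960 pixels) is aligned with the flight direction, which is not stated in the table.

```python
def footprint_m(width_px, height_px, resolution_mm_per_px):
    """Ground footprint (width, height) in meters of a single image."""
    return (width_px * resolution_mm_per_px / 1000.0,
            height_px * resolution_mm_per_px / 1000.0)

def frame_spacing_m(speed_km_h, frames_per_second):
    """Distance travelled between two successive frames, in meters."""
    return speed_km_h * 1000.0 / 3600.0 / frames_per_second

def forward_overlap(spacing_m, footprint_along_track_m):
    """Fraction of one image that is also covered by the next image."""
    return 1.0 - spacing_m / footprint_along_track_m

spacing = frame_spacing_m(speed_km_h=7, frames_per_second=1)

for label, resolution in (("Plot", 20), ("Field", 34)):
    width_m, height_m = footprint_m(1280, 960, resolution)
    # Assumption: the 960-pixel side lies along the flight direction.
    overlap = forward_overlap(spacing, height_m)
    print(f"{label}: footprint {width_m:.2f} m x {height_m:.2f} m, "
          f"frame spacing {spacing:.2f} m, forward overlap {overlap:.1%}")
```

Under these assumptions, both flight groups would have forward overlap well above the levels usually needed for orthomosaic stitching, because the aircraft moves less than 2 m between frames.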

| Group for ANOVA Test | | Composition | Sample Proportion (%) | Number of Sampling Locations in Each Composition * | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | | | Plot 8 | Plot 16 | Plot 29 | N | M | S |
| All | Grass and clover | Mostly timothy grass | 20.0 | 3 | 2 | 2 | 1 | - | - |
| All | Grass and clover | Approximately 2:1 timothy to red clover | 17.5 | - | 1 | - | 2 | 2 | 2 |
| All | Grass and clover | Equal | 5.0 | 1 | - | - | 1 | - | - |
| All | Grass and clover | Approximately 1:2 timothy to red clover | 7.5 | - | - | - | 3 | - | - |
| All | Grass and clover | Mostly red clover | 20.0 | 3 | - | 1 | 3 | 1 | - |
| All | Including other biomasses ** | Mostly grass and 2:1 grass to clover | 25.0 | - | 1 | 1 | - | 7 | 1 |
| All | Including other biomasses ** | Equal | 2.5 | - | - | - | - | 1 | - |
| All | Including other biomasses ** | Mostly clover and 1:2 grass to clover | 2.5 | - | - | - | - | 1 | - |

| Dry Mass (kg) | Number of Quadrat Locations |
|---|---|
| Over 0.5 | 5 |
| 0.4–0.5 | 7 |
| 0.3–0.4 | 16 |
| 0.2–0.3 | 9 |
| 0.1–0.2 | 2 |
| Under 0.1 | 1 |

| Number of Variables | RMSE (g m⁻²) | | | | | | | | Recommended Significant Variables * |
|---|---|---|---|---|---|---|---|---|---|
| | Mean | | Median | | Quantile 90% | | Quantile 10% | | |
| | All | Only VI | All | Only VI | All | Only VI | All | Only VI | |
| 1 | 115.2 | 116.1 | 112.2 | 112.4 | 106.3 | 106 | 115 | 121.6 | - |
| 2 | 115.2 | 114.8 | 111.9 | 112 | 106.3 | 106 | 114.8 | 118.6 | TCARI/OSAVI RE |
| 3 | 118.7 | 115.9 | 115.7 | 112.8 | 108.1 | 108 | 115.1 | 117.5 | MCARI/OSAVI RE |
| 4 | 120.3 | 117.9 | 118.6 | 114.1 | 111.3 | 109.9 | 114.9 | 117.8 | - |
| 5 | 121.4 | 119.6 | 119 | 116.5 | 114 | 111 | 114.2 | 116.7 | - |
| 6 | 121.9 | 121 | 119.4 | 118.7 | 114.6 | 112.2 | 114.5 | 116.8 | TCARI/OSAVI RE |
| 7 | 121.6 | 122.5 | 120 | 119.8 | 115.4 | 113.4 | 115.2 | 117.7 | MCARI/OSAVI RE |
| 8 | 120.9 | 122.2 | 120.3 | 120.9 | 115.4 | 113.1 | 115.6 | 117.2 | SAVI |
| 9 | 120.9 | 121.9 | 120.7 | 119.9 | 115.1 | 113.1 | 115.4 | 124.8 | RGBVI |
| 10 | 120.2 | 122.9 | 120 | 122.7 | 114.6 | 114.8 | 116.2 | 124.7 | EVI2 |
| Optimum number of variables | 2 | 2 | 2 | 2 | 2 | 2 | 5 | 5 | |
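The last row of the table above is consistent with choosing, for each column, the number of variables that gives the lowest RMSE. The sketch below applies that rule to two of the columns, using the values as printed in the table. The selection rule itself is an assumption about how the optimum was determined, and the function name is hypothetical.

```python
# RMSE values (g m^-2) for 1 to 10 variables, copied from the
# "Median, All" and "Quantile 10%, All" columns of the table above.
rmse_median_all = [112.2, 111.9, 115.7, 118.6, 119.0, 119.4, 120.0, 120.3, 120.7, 120.0]
rmse_q10_all = [115.0, 114.8, 115.1, 114.9, 114.2, 114.5, 115.2, 115.6, 115.4, 116.2]

def optimum_variable_count(rmse_by_count):
    """Return the 1-based number of variables with the lowest RMSE.

    On ties, the smallest number of variables is returned.
    """
    best_index = min(range(len(rmse_by_count)), key=lambda k: rmse_by_count[k])
    return best_index + 1

print("Median, All:", optimum_variable_count(rmse_median_all))
print("Quantile 10%, All:", optimum_variable_count(rmse_q10_all))
```

Some columns show identical rounded RMSE values for one and two variables (for example, 115.2 for "Mean, All"), so this rule applied to the printed values would return one variable there, whereas the table reports two. The reported optimum was presumably determined from unrounded values.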

| Number of Variables | R² | RMSE (g m⁻²) | MAE (g m⁻²) | Optimum Number of Variables | Significant Variables |
|---|---|---|---|---|---|
| 1 | 0.33 | 142.5 | 117.67 | Five | Contrast, Correlation, Energy, Entropy, Homogeneity |
| 2 | 0.34 | 137.1 | 113.43 | | |
| 3 | 0.29 | 134.5 | 110.61 | | |
| 4 | 0.31 | 128.5 | 104.78 | | |
| 5 | 0.38 | 117.9 | 96.18 | | |

| Group | Statistics (Variables from RFE) | R² | | RMSE (%) | | MAE (g m⁻²) | |
|---|---|---|---|---|---|---|---|
| | | VIs | VIs and Texture Feature | VIs | VIs and Texture Feature | VIs | VIs and Texture Feature |
| Plot | Quantile 90% * | 0.52 | 0.53 | 21.79 | 21.46 | 71.26 | 67.68 |
| Plot | Quantile 10% ** | 0.68 | 0.68 | 17.84 | 17.72 | 54.49 | 56.44 |
| Plot | Mean * | 0.61 | 0.61 | 19.76 | 19.66 | 64.80 | 64.65 |
| Plot | Median * | 0.58 | 0.59 | 20.42 | 20.25 | 67.23 | 67.18 |
| Field | Quantile 90% * | 0.05 | 0.18 | 30.06 | 28.01 | 84.43 | 81.71 |
| Field | Quantile 10% ** | 0.12 | 0.31 | 28.90 | 25.71 | 84.35 | 77.53 |
| Field | Mean * | 0.01 | 0.09 | 30.79 | 29.50 | 85.67 | 83.61 |
| Field | Median * | 0.00 | 0.07 | 30.88 | 29.71 | 85.54 | 83.07 |
| Plot and field | Quantile 90% * | 0.21 | 0.28 | 29.20 | 27.97 | 82.02 | 79.31 |
| Plot and field | Quantile 10% ** | 0.32 | 0.41 | 27.08 | 25.34 | 77.73 | 76.68 |
| Plot and field | Mean * | 0.20 | 0.25 | 29.46 | 28.45 | 82.76 | 80.93 |
| Plot and field | Median * | 0.16 | 0.21 | 30.12 | 29.29 | 83.37 | 82.19 |
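The three metrics reported in the table above can be computed from paired observed and predicted dry-mass values, as in the following sketch. Two points are assumptions rather than details taken from the study: R² is computed here as the coefficient of determination (one minus the ratio of residual to total sum of squares), and RMSE (%) is taken as RMSE divided by the mean observed value. The function name and the example numbers are hypothetical.

```python
import math

def regression_metrics(observed, predicted):
    """R2, RMSE, relative RMSE (%), and MAE for paired observations and predictions."""
    n = len(observed)
    mean_obs = sum(observed) / n
    residuals = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(e ** 2 for e in residuals)
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = math.sqrt(ss_res / n)
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": rmse,
        # Assumption: relative RMSE is normalized by the mean observed value.
        "rmse_percent": 100.0 * rmse / mean_obs,
        "mae": sum(abs(e) for e in residuals) / n,
    }

# Hypothetical dry-mass values in g m^-2 (not data from the study).
observed = [100.0, 200.0, 300.0]
predicted = [100.0, 200.0, 400.0]

for name, value in regression_metrics(observed, predicted).items():
    print(f"{name}: {value:.3f}")
```

Reporting RMSE as a percentage makes plot-level and field-level results comparable even when their mean yields differ, while MAE in g m⁻² keeps the error in physical units.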

| Sensing Type | Crops and Purpose | Measurements and Accuracy | Limitations | Reference |
|---|---|---|---|---|
| Proximal and remote (ultrasonic sensors and hyperspectral sensors on UAV) | Grass (Lolio-Cynosuretum): data fusion and estimation of yield | Plant height and hyperspectral reflectance: R² = 0.8 | Lower accuracy caused by too-long or too-short sward heights; extremely heterogeneous grasslands such as are typical for very leniently stocked pastures | [23] |
| Proximal and remote (ultrasonic sensors and hyperspectral sensors on UAV) | Grass (Lolio-Cynosuretum): data fusion and estimation of yield | Plant height and hyperspectral reflectance: R² = 0.5 | Highly complex variation in pasture or sward structure | [26] |
| Proximal (infrared and ultrasonic distance sensors) | Mixed grass, bermudagrass, hybrid pearl millet, and oats: estimating plant height and yield | Plant height: 15 to 20% yield prediction error | Tolerance to withstand dust and dirt | [25] |
| Proximal (ultrasonic sensors) | Grass: improving the accuracy of measuring plant height | Plant height: R² = 0.8 | Tilting or bouncing of sensor array | [27] |
| Remote (RGB camera on UAV) | Barley: simple estimation of yield | Plant height (crop surface model) and RGB reflectance: R² = 0.8 | Inability to collect data at specific growth stages due to weather (e.g., clouds and rain) | [24] |

| Ground Sample Distance (m) | Expected Ground Resolution (mm pixel⁻¹) | Image Resolution (pixels × pixels) | Flight Speed (km h⁻¹) | Frames per Second (fps) | Control Application |
|---|---|---|---|---|---|
| 20 | 5 | 4864 × 3648 | 7 | 0.5 | Litchi |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, K.; Sudduth, K.A.; Zhou, J. Evaluating UAV-Based Remote Sensing for Hay Yield Estimation. Sensors 2024, 24, 5326. https://doi.org/10.3390/s24165326
