Prediction of Vascular Access Stenosis by Lightweight Convolutional Neural Network Using Blood Flow Sound Signals

Abstract

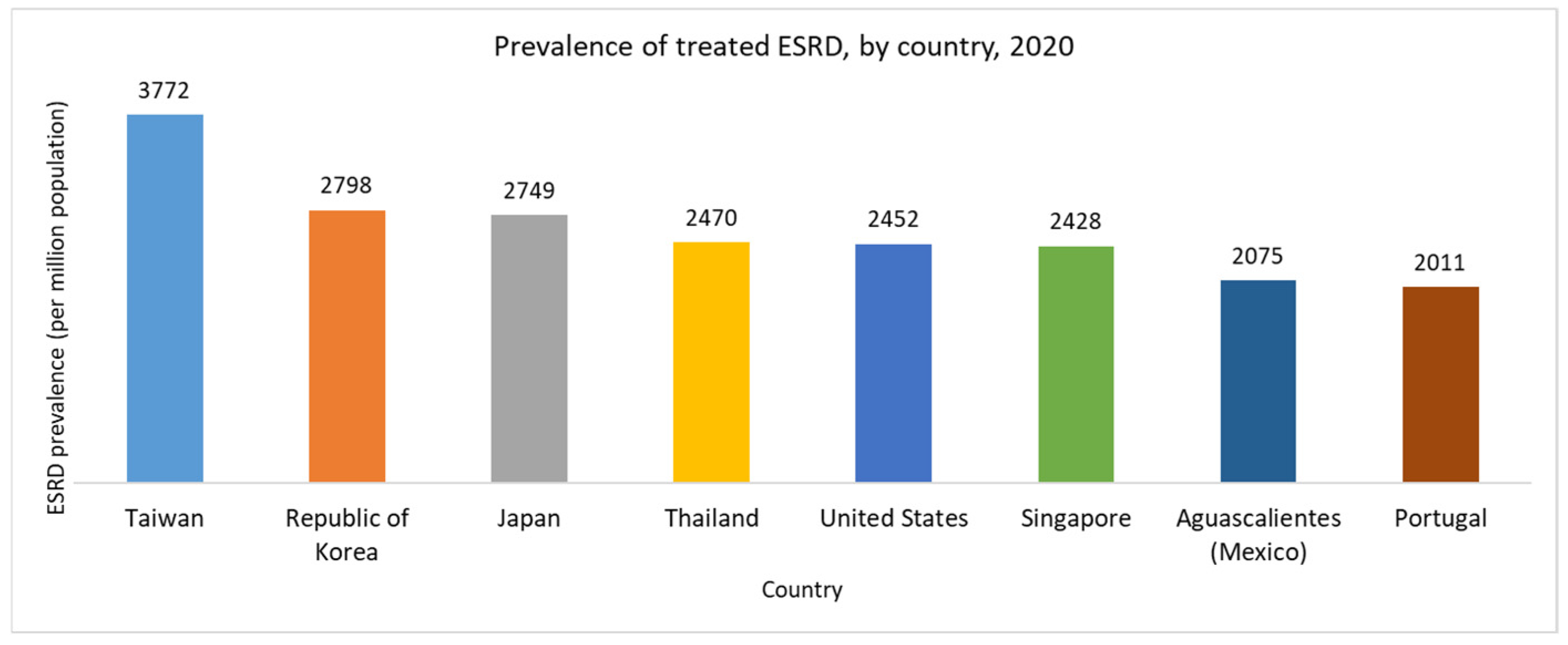

1. Introduction

2. Materials and Methods

2.1. Data Source

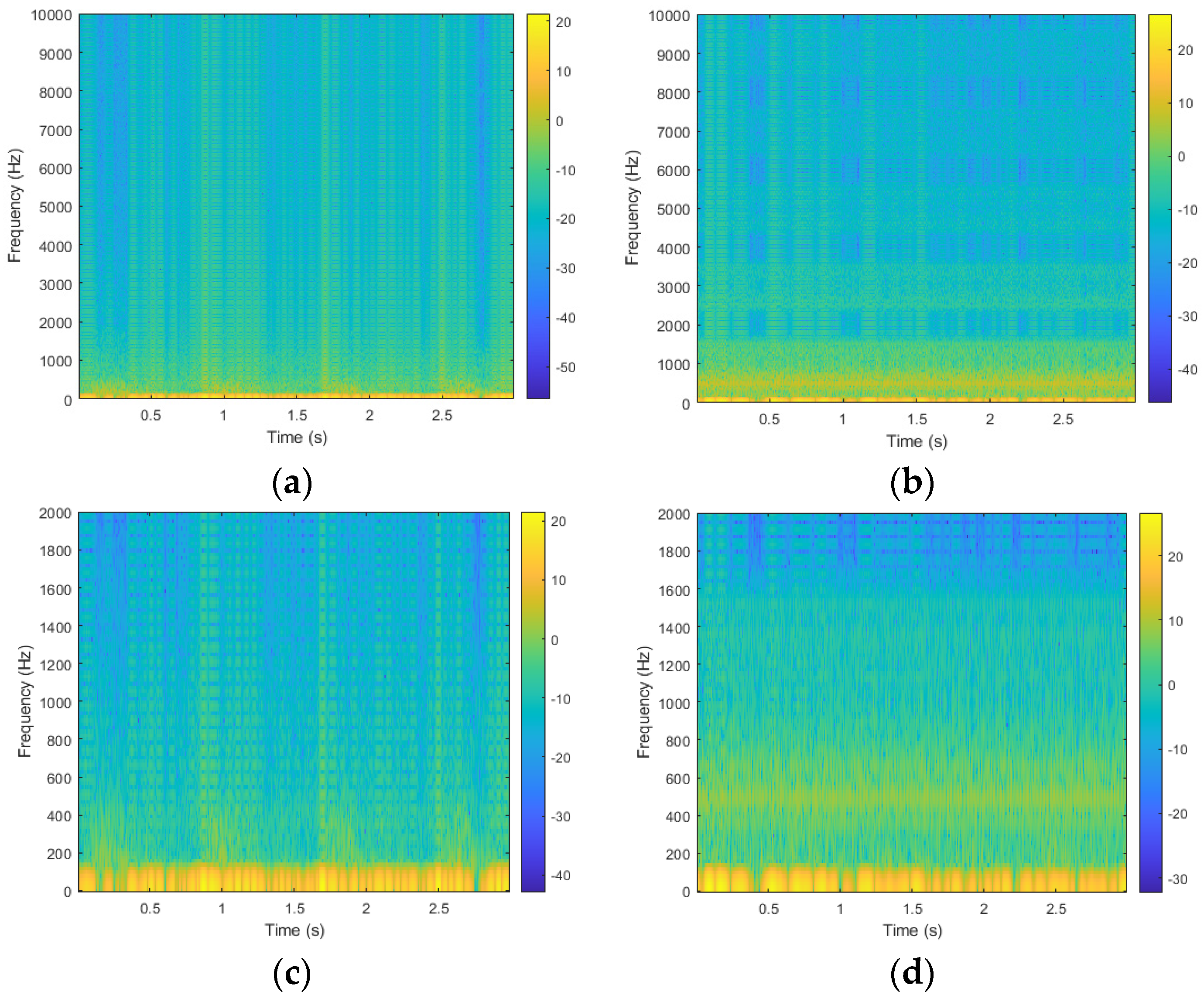

2.2. Data Preprocessing

2.3. Dataset Partitions

2.4. Model Architecture

2.5. Loss Function

2.6. Evaluation Metrics

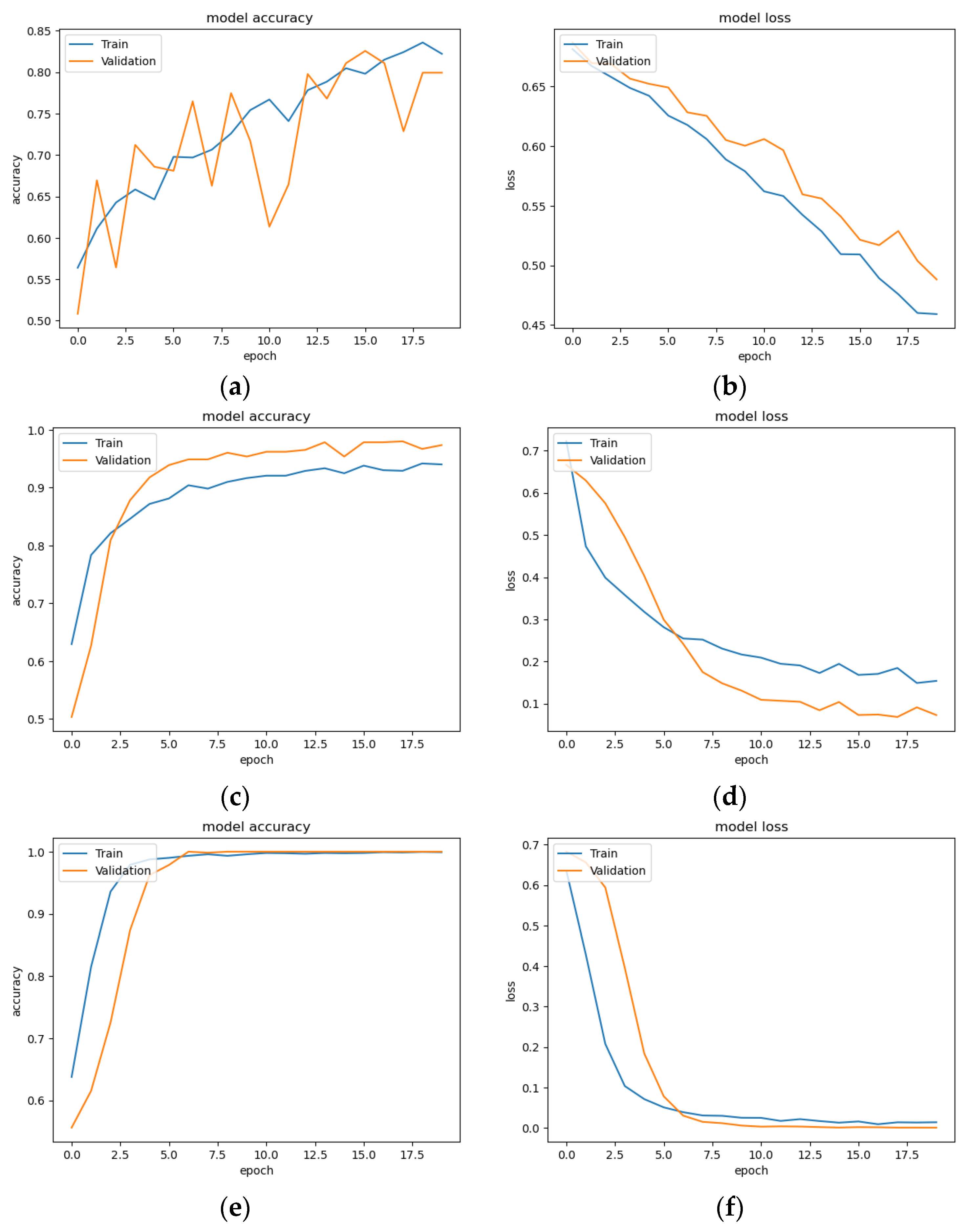

3. Experimental Results

3.1. Classification Result of CNN Models

3.2. Five-Fold Validation Classification Result

3.3. Model Size

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Van Tricht, I.; De Wachter, D.; Tordoir, J.; Verdonck, P. Hemodynamics and Complications Encountered with Arteriovenous Fistulas and Grafts as Vascular Access for Hemodialysis: A Review. Ann. Biomed. Eng. 2005, 33, 1142–1157.

2. Neyra, N.R.; Wazir, S. The Evolving Panorama of Vascular Access in the 21st Century. Front. Nephrol. 2022, 2, 917265.

3. Driessen, W.; van der Meijden, W.; Wanten, G.; van Hoek, F. Long-Term Patency Rate of the Translocated Autologous Saphenous Vein versus Prosthetic Material in Vascular Access Surgery for Haemodialysis and Parenteral Nutrition. J. Vasc. Access 2023, 24, 972–979.

4. Sahasrabudhe, P.; Bindu, A. Nuances of Arteriovenous Fistula Creation for Vascular Access in Hemodialysis. Indian J. Plast. Surg. 2021, 54, 257–263.

5. United States Renal Data System. Available online: https://usrds-adr.niddk.nih.gov/2022/end-stage-renal-disease/11-international-comparisons (accessed on 2 April 2024).

6. Tsai, S.-Y.; Tseng, H.-F.; Tan, H.-F.; Chien, Y.-S.; Chang, C.-C. End-Stage Renal Disease in Taiwan: A Case–Control Study. J. Epidemiol. 2009, 19, 169–176.

7. Hwang, S.; Tsai, J.; Chen, H. Epidemiology, Impact and Preventive Care of Chronic Kidney Disease in Taiwan. Nephrology 2010, 15, 3–9.

8. Hsu, H.; Chiang, Y.; Lai, Y.; Lin, L.; Hsieh, H.; Chen, J. Effectiveness of Multidisciplinary Care for Chronic Kidney Disease: A Systematic Review. Worldviews Evid.-Based Nurs. 2021, 18, 33–41.

9. Chang, J.C.-J.; Yang, H.-Y. Epidemiology of Chronic Kidney Disease of Undetermined Aetiology in Taiwanese Farmers: A Cross-Sectional Study from Changhua Community-Based Integrated Screening Programme. Occup. Environ. Med. 2021, 78, 849–858.

10. Wu, V.; Wu, P.; Wu, C.; Huang, T.; Chang, C.; Tsai, P.; Ko, W.; Chen, L.; Wang, C.; Chu, T.; et al. The Impact of Acute Kidney Injury on the Long-term Risk of Stroke. J. Am. Heart Assoc. 2014, 3, e000933.

11. Wang, H.-Y.; Wu, C.-H.; Chen, C.-Y.; Lin, B.-S. Novel Noninvasive Approach for Detecting Arteriovenous Fistula Stenosis. IEEE Trans. Biomed. Eng. 2014, 61, 1851–1857.

12. Sung, P.-H.; Kan, C.-D.; Chen, W.-L.; Jang, L.-S.; Wang, J.-F. Hemodialysis Vascular Access Stenosis Detection Using Auditory Spectro-Temporal Features of Phonoangiography. Med. Biol. Eng. Comput. 2015, 53, 393–403.

13. Masengu, A.; Maxwell, A.P.; Hanko, J.B. Investigating Clinical Predictors of Arteriovenous Fistula Functional Patency in a European Cohort. Clin. Kidney J. 2016, 9, 142–147.

14. Zomer, B.; Ruiter, M.S.; Dekker, F.W.; Goertz, E.G.; de Haan, M.W.; Hemmelder, M.H.; Hiligsmann, M.J.; Konijn, W.S.; van Loon, M.M.; Maessen, J.M.; et al. FLOW: Flow Dysfunction of Hemodialysis Vascular Access: A Randomized Controlled Trial on the Effectiveness of Surveillance of Arteriovenous Fistulas and Grafts. J. Vasc. Access 2024.

15. Tian, Y.; Shi, K.; Zhang, Y.; Cao, Y.; Zhou, Y. Logistic Regression Analysis of Risk Factors for Hematoma after Autologous Arteriovenous Fistula in Hemodialysis Patients. Medicine 2024, 103, e36890.

16. Sadasivan, K.; Kunjuraman, U.; Murali, B.; Yadev, I.; Kochunarayanan, A. Factors Affecting the Patency of Radiocephalic Arteriovenous Fistulas Based on Clinico-Radiological Parameters. Cureus 2021, 13, 1–6.

17. Ota, K.; Nishiura, Y.; Ishihara, S.; Adachi, H.; Yamamoto, T.; Hamano, T. Evaluation of Hemodialysis Arteriovenous Bruit by Deep Learning. Sensors 2020, 20, 4852.

18. Julkaew, S.; Wongsirichot, T.; Damkliang, K.; Sangthawan, P. DeepVAQ: An Adaptive Deep Learning for Prediction of Vascular Access Quality in Hemodialysis Patients. BMC Med. Inform. Decis. Mak. 2024, 24, 45.

19. Peralta, R.; Garbelli, M.; Bellocchio, F.; Ponce, P.; Stuard, S.; Lodigiani, M.; Fazendeiro Matos, J.; Ribeiro, R.; Nikam, M.; Botler, M.; et al. Development and Validation of a Machine Learning Model Predicting Arteriovenous Fistula Failure in a Large Network of Dialysis Clinics. Int. J. Environ. Res. Public Health 2021, 18, 12355.

20. Wang, X.; Cai, S.; Wang, H.; Li, J.; Yang, Y. Deep-learning-based Renal Artery Stenosis Diagnosis via Multimodal Fusion. J. Appl. Clin. Med. Phys. 2024, 25, e14298.

21. Li, Z.; He, W. Stenosis Diagnosis Based on Peripheral Arterial and Artificial Neural Network. Netw. Model. Anal. Heal. Inform. Bioinform. 2021, 10, 13.

22. Nguyen, D.H.; Chao, P.C.-P.; Shuai, H.-H.; Fang, Y.-W.; Lin, B.S. Achieving High Accuracy in Predicting Blood Flow Volume at the Arteriovenous Fistulas of Hemodialysis Patients by Intelligent Quality Assessment on PPGs. IEEE Sens. J. 2022, 22, 5844–5856.

23. Chung, T.-L.; Liu, Y.-H.; Wu, P.-Y.; Huang, J.-C.; Tsai, Y.-C.; Wang, Y.-C.; Pan, S.-P.; Hsu, Y.-L.; Chen, S.-C. Prediction of Arteriovenous Access Dysfunction by Mel Spectrogram-Based Deep Learning Model. Int. J. Med. Sci. 2024, 21, 2252–2260.

24. Bai, Q.; Su, C.; Tang, W.; Li, Y. Machine Learning to Predict End Stage Kidney Disease in Chronic Kidney Disease. Sci. Rep. 2022, 12, 8377.

25. Debal, D.A.; Sitote, T.M. Chronic Kidney Disease Prediction Using Machine Learning Techniques. J. Big Data 2022, 9, 109.

26. Islam, M.A.; Majumder, M.Z.H.; Hussein, M.A. Chronic Kidney Disease Prediction Based on Machine Learning Algorithms. J. Pathol. Inform. 2023, 14, 100189.

27. Kaur, C.; Kumar, M.S.; Anjum, A.; Binda, M.B.; Mallu, M.R.; Al Ansari, M.S. Chronic Kidney Disease Prediction Using Machine Learning. J. Adv. Inf. Technol. 2023, 14, 384–391.

28. Hakim, R.; Himmelfarb, J. Hemodialysis Access Failure: A Call to Action. Kidney Int. 1998, 54, 1029–1040.

29. Hoeben, H.; Abu-Alfa, A.K.; Reilly, R.F.; Aruny, J.E.; Bouman, K.; Perazella, M.A. Vascular Access Surveillance: Evaluation of Combining Dynamic Venous Pressure and Vascular Access Blood Flow Measurements. Am. J. Nephrol. 2003, 23, 403–408.

30. Jeon, H.; Jung, Y.; Lee, S.; Jung, Y. Area-Efficient Short-Time Fourier Transform Processor for Time–Frequency Analysis of Non-Stationary Signals. Appl. Sci. 2020, 10, 7208.

31. Mateo, C.; Talavera, J.A. Short-Time Fourier Transform with the Window Size Fixed in the Frequency Domain. Digit. Signal Process. 2018, 77, 13–21.

32. Han, X.; Zhang, Z.; Ding, N.; Gu, Y.; Liu, X.; Huo, Y.; Qiu, J.; Yao, Y.; Zhang, A.; Zhang, L.; et al. Pre-Trained Models: Past, Present and Future. AI Open 2021, 2, 225–250.

33. Yu, R.; Wang, Y.; Zou, Z.; Wang, L. Convolutional Neural Networks with Refined Loss Functions for the Real-Time Crash Risk Analysis. Transp. Res. Part C Emerg. Technol. 2020, 119, 102740.

34. Dj Novaković, J.; Veljović, A.; Ilić, S.S.; Papić, Ž.; Tomović, M. Evaluation of Classification Models in Machine Learning. Theory Appl. Math. Comput. Sci. 2017, 7, 39–46.

35. Kurokawa, Y.; Abiko, S.; Watanabe, K. Noninvasive Detection of Intracranial Vascular Lesions by Recording Blood Flow Sounds. Stroke 1994, 25, 397–402.

36. Mansy, H.A.; Hoxie, S.J.; Patel, N.H.; Sandler, R.H. Computerised Analysis of Auscultatory Sounds Associated with Vascular Patency of Haemodialysis Access. Med. Biol. Eng. Comput. 2005, 43, 56–62.

37. Han, Y.; Han, P.; Yuan, B.; Zhang, Z.; Liu, L.; Panneerselvam, J. Novel Transformation Deep Learning Model for Electrocardiogram Classification and Arrhythmia Detection Using Edge Computing. J. Grid Comput. 2024, 22, 7.

38. Zhou, G.; Chen, Y.; Chien, C.; Revatta, L.; Ferdous, J.; Chen, M.; Deb, S.; De Leon Cruz, S.; Wang, A.; Lee, B.; et al. Deep Learning Analysis of Blood Flow Sounds to Detect Arteriovenous Fistula Stenosis. npj Digit. Med. 2023, 6, 163.

39. Park, J.H.; Park, I.; Han, K.; Yoon, J.; Sim, Y.; Kim, S.J.; Won, J.Y.; Lee, S.; Kwon, J.H.; Moon, S.; et al. Feasibility of Deep Learning-Based Analysis of Auscultation for Screening Significant Stenosis of Native Arteriovenous Fistula for Hemodialysis Requiring Angioplasty. Korean J. Radiol. 2022, 23, 949.

| Dataset | Label | Train | Test | Total |
|---|---|---|---|---|
| Raw signal | Obstructed class | 1538 | 385 | 1923 |
| | Unobstructed class | 1516 | 380 | 1896 |
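The partition counts are consistent with holding out 20% of each class for testing and rounding the test count up: ceil(0.2 × 1923) = 385 and ceil(0.2 × 1896) = 380. The source does not state how the split was made, so the following is a hypothetical per-class (stratified) hold-out sketch that reproduces these counts; the helper `stratified_split`, the fixed seed, and the ceiling rule are assumptions, not the authors' code.

```python
import math
import random


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Hypothetical per-class hold-out split.

    Each class is shuffled and split separately, with
    ceil(test_fraction * class_size) samples assigned to the test set,
    so both sets keep roughly the original class balance.
    """
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = math.ceil(test_fraction * len(indices))
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


if __name__ == "__main__":
    # Class sizes from the partition table; the label strings are placeholders.
    labels = ["obstructed"] * 1923 + ["unobstructed"] * 1896
    train_idx, test_idx = stratified_split(labels)
    for cls in ("obstructed", "unobstructed"):
        n_train = sum(labels[i] == cls for i in train_idx)
        n_test = sum(labels[i] == cls for i in test_idx)
        print(cls, n_train, n_test)
    # obstructed 1538 385
    # unobstructed 1516 380
```

Splitting per class keeps the class balance of the test set close to that of the training set. If several segments come from the same patient or recording, splitting by patient instead prevents segments of one recording from appearing in both sets; the tables do not specify the sampling unit.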

| Models | Accuracy | Obstructed Precision | Obstructed Recall | Obstructed F1-Score | Unobstructed Precision | Unobstructed Recall | Unobstructed F1-Score |
|---|---|---|---|---|---|---|---|
| VGG16 | 0.53 | 0.52 | 0.88 | 0.65 | 0.58 | 0.17 | 0.26 |
| ResNet50 | 0.59 | 0.59 | 0.62 | 0.60 | 0.59 | 0.56 | 0.57 |
| Lightweight CNN | 0.55 | 0.58 | 0.38 | 0.46 | 0.53 | 0.72 | 0.61 |

| Models | Accuracy | Obstructed Precision | Obstructed Recall | Obstructed F1-Score | Unobstructed Precision | Unobstructed Recall | Unobstructed F1-Score |
|---|---|---|---|---|---|---|---|
| VGG16 | 0.95 | 0.96 | 0.95 | 0.95 | 0.95 | 0.96 | 0.95 |
| ResNet50 | 0.99 | 1.00 | 0.97 | 0.99 | 0.97 | 1.00 | 0.99 |
| Lightweight CNN | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
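The F1-scores in the classification tables can be cross-checked against the reported precision and recall, because F1 is their harmonic mean, F1 = 2PR/(P + R). The following is a minimal consistency check, not the authors' evaluation code; the helpers `f1_score` and `check_table` and the 0.011 tolerance are illustrative assumptions.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (model, class) -> (precision, recall, reported F1), from the first results table.
reported = {
    ("VGG16", "obstructed"): (0.52, 0.88, 0.65),
    ("VGG16", "unobstructed"): (0.58, 0.17, 0.26),
    ("ResNet50", "obstructed"): (0.59, 0.62, 0.60),
    ("ResNet50", "unobstructed"): (0.59, 0.56, 0.57),
    ("Lightweight CNN", "obstructed"): (0.58, 0.38, 0.46),
    ("Lightweight CNN", "unobstructed"): (0.53, 0.72, 0.61),
}


def check_table(rows: dict, tol: float = 0.011) -> dict:
    """Recompute F1 (rounded to two decimals) for each row and flag whether
    it matches the reported value within `tol`, allowing for the fact that
    the table's precision and recall are themselves rounded."""
    results = {}
    for key, (p, r, f1_reported) in rows.items():
        f1 = round(f1_score(p, r), 2)
        results[key] = (f1, abs(f1 - f1_reported) <= tol)
    return results


if __name__ == "__main__":
    for (model, cls), (f1, ok) in check_table(reported).items():
        print(f"{model:16s} {cls:12s} F1={f1:.2f} consistent={ok}")
```

All six rows of the first table agree to two decimals. Because the printed inputs are already rounded, small differences can appear elsewhere: for the obstructed class of ResNet50 in the second table, 2(1.00)(0.97)/1.97 ≈ 0.98, whereas 0.99 is reported, which is consistent with unrounded precision and recall slightly different from the printed values.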

| Fold | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 1 | 0.99 | 0.99 | 0.99 | 0.99 |
| 2 | 0.99 | 0.99 | 0.99 | 0.99 |
| 3 | 0.96 | 0.96 | 0.96 | 0.96 |
| 4 | 0.97 | 0.97 | 0.97 | 0.97 |
| 5 | 0.99 | 0.99 | 0.99 | 0.99 |
| Average | 0.98 | 0.98 | 0.98 | 0.98 |

| Fold | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 1 | 1.00 | 1.00 | 1.00 | 1.00 |
| 2 | 1.00 | 1.00 | 1.00 | 1.00 |
| 3 | 1.00 | 1.00 | 1.00 | 1.00 |
| 4 | 1.00 | 1.00 | 1.00 | 1.00 |
| 5 | 1.00 | 1.00 | 1.00 | 1.00 |
| Average | 1.00 | 1.00 | 1.00 | 1.00 |

| Fold | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 1 | 1.00 | 1.00 | 1.00 | 1.00 |
| 2 | 1.00 | 1.00 | 1.00 | 1.00 |
| 3 | 1.00 | 1.00 | 1.00 | 1.00 |
| 4 | 1.00 | 1.00 | 1.00 | 1.00 |
| 5 | 1.00 | 1.00 | 1.00 | 1.00 |
| Average | 1.00 | 1.00 | 1.00 | 1.00 |
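The five-fold tables report one score per fold and their average, but the source does not say how the folds were formed (stratified or not, or from which pool of samples). A minimal sketch of plain k-fold index generation and fold averaging follows; `k_fold_indices`, `average_over_folds`, the single shuffle, and the fixed seed are assumptions for illustration.

```python
import random
from statistics import mean


def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0):
    """Yield (train_idx, val_idx) pairs for plain (unstratified) k-fold CV.

    Indices are shuffled once with a fixed seed; the first n_samples % k
    folds receive one extra sample, so every sample is validated exactly once.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = idx[start:start + size]
        train_idx = idx[:start] + idx[start + size:]
        yield train_idx, val_idx
        start += size


def average_over_folds(fold_scores):
    """Mean of a per-fold metric, rounded to two decimals as in the tables."""
    return round(mean(fold_scores), 2)


if __name__ == "__main__":
    # Per-fold accuracy from the first five-fold table.
    accuracy_per_fold = [0.99, 0.99, 0.96, 0.97, 0.99]
    print(average_over_folds(accuracy_per_fold))  # 0.98

    # Hypothetical pool of 100 samples, for illustration only.
    for fold, (train_idx, val_idx) in enumerate(k_fold_indices(100, k=5), start=1):
        print(fold, len(train_idx), len(val_idx))
```

Averaging the per-fold accuracies of the first table reproduces its reported average of 0.98. A stratified variant would apply the same fold assignment within each class separately.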

| Models | Parameters | Memory Size |
|---|---|---|
| VGG16 | 14,862,242 | 57.9 MB |
| ResNet50 | 23,661,538 | 91.3 MB |
| Lightweight CNN | 201,378 | 2.37 MB |
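The parameter counts can be turned into a nominal weight size by assuming 32-bit floating-point weights (4 bytes per parameter), which also gives the relative model sizes directly. The sketch below is illustrative arithmetic; `PARAMS`, `float32_weight_mb`, and `param_ratio` are hypothetical names, and float32 storage is an assumption the source does not state.

```python
# Parameter counts from the model-size table.
PARAMS = {
    "VGG16": 14_862_242,
    "ResNet50": 23_661_538,
    "Lightweight CNN": 201_378,
}
BYTES_PER_FLOAT32 = 4  # assumption: weights stored as 32-bit floats


def float32_weight_mb(n_params: int) -> float:
    """Raw weight payload in megabytes (10**6 bytes), assuming float32."""
    return n_params * BYTES_PER_FLOAT32 / 1e6


def param_ratio(model: str, reference: str = "Lightweight CNN") -> float:
    """How many times more parameters `model` has than `reference`."""
    return PARAMS[model] / PARAMS[reference]


if __name__ == "__main__":
    for name, n in PARAMS.items():
        print(f"{name:16s} {n:>11,d} params  "
              f"~{float32_weight_mb(n):6.2f} MB float32  x{param_ratio(name):6.1f}")
```

Under this assumption the Lightweight CNN needs about 0.81 MB of raw weights, and VGG16 and ResNet50 have roughly 74 and 117 times as many parameters. The reported memory sizes (57.9, 91.3, and 2.37 MB) differ from these nominal figures, presumably because saved model files also carry architecture metadata and, depending on the format, other stored state; the source does not specify the file format.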

| Author | Dataset | Model | Method | Outcome |
|---|---|---|---|---|
| Ota et al. [17] | 1-min AVF sound recordings from 20 patients | CNN + BiLSTM (bidirectional long short-term memory) | Extracted heartbeat-specific arteriovenous fistula sounds | Accuracy 0.70 to 0.93; AUC 0.75 to 0.92 |
| Julkaew et al. [18] | Clinical data from 398 HD patients | DeepVAQ-CNN | Used photoplethysmogram (PPG) signals to predict VA quality; trained and fine-tuned a CNN | Accuracy 0.92; specificity 0.96; precision 0.88; F1-score 0.84; AUC 0.86 |
| Peralta et al. [19] | 13,369 dialysis patients (EuCliD® database) | AVF-FM (XGBoost) | Predicted AVF failure within 3 months | AUC 0.80 (95% CI 0.79–0.81) |
| Nguyen et al. [22] | 300 qualified and 202 unqualified PPG waveforms | 1D-CNN + FCNN | Developed an ML algorithm that assesses PPG signal quality against waveform quality criteria for predicting blood flow volume | Transformed NN: accuracy 0.94; 1D-CNN: accuracy 0.95 |
| Zhou et al. [38] | 2565 AVF blood flow sounds from 433 patients | Vision Transformer (ViT) | AVF sounds recorded at 6 locations and preprocessed into Mel spectrograms and recurrence plots | ViT: sensitivity 0.92; specificity 0.79; F1-score 0.91 |
| Chung et al. [23] | 437 audio recordings from 84 HD patients | CNN, ViT-GRU | AV access bruit recordings converted to Mel spectrograms; models trained to predict dysfunction | CNN: F1-score 0.70, AUC 0.71; ViT-GRU: F1-score 0.52, AUC 0.60 |
| Park et al. [39] | 80 audio files from 40 HD patients | ResNet50, EfficientNetB5, DenseNet201 | Digital AVF sounds recorded, converted to Mel spectrograms, and classified with DCNN models | ResNet50: AUC 0.99; EfficientNetB5: AUC 0.98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.-J.; Sharma, A.K.; Liu, S.-H.; Zhang, H.; Chen, W.; Lee, T.-L. Prediction of Vascular Access Stenosis by Lightweight Convolutional Neural Network Using Blood Flow Sound Signals. Sensors 2024, 24, 5922. https://doi.org/10.3390/s24185922
