The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Dogs (Canis familiaris): A Validation Study

Abstract

1. Introduction

2. Materials and Methods

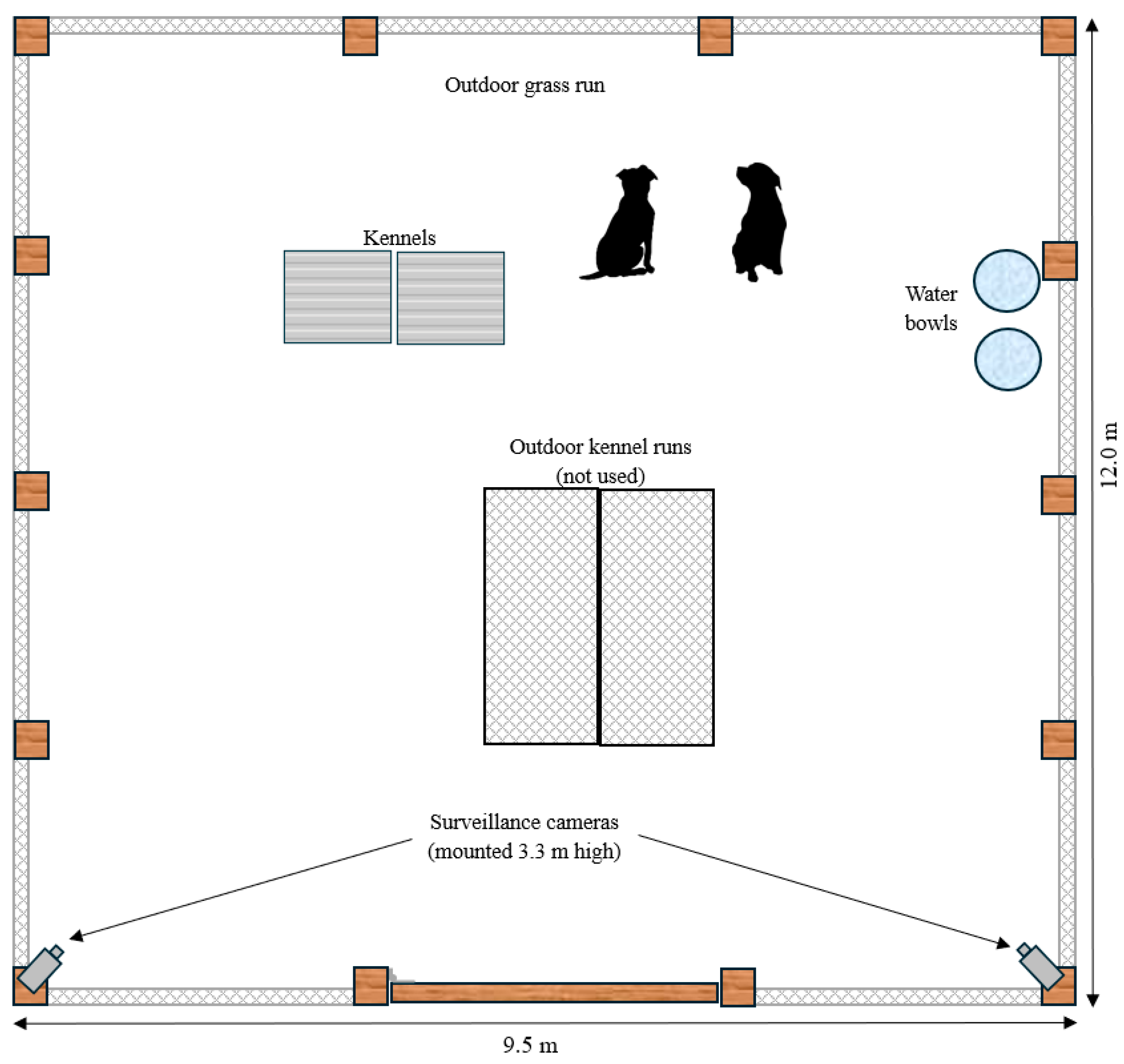

2.1. Animal Husbandry

2.2. Experimental Design

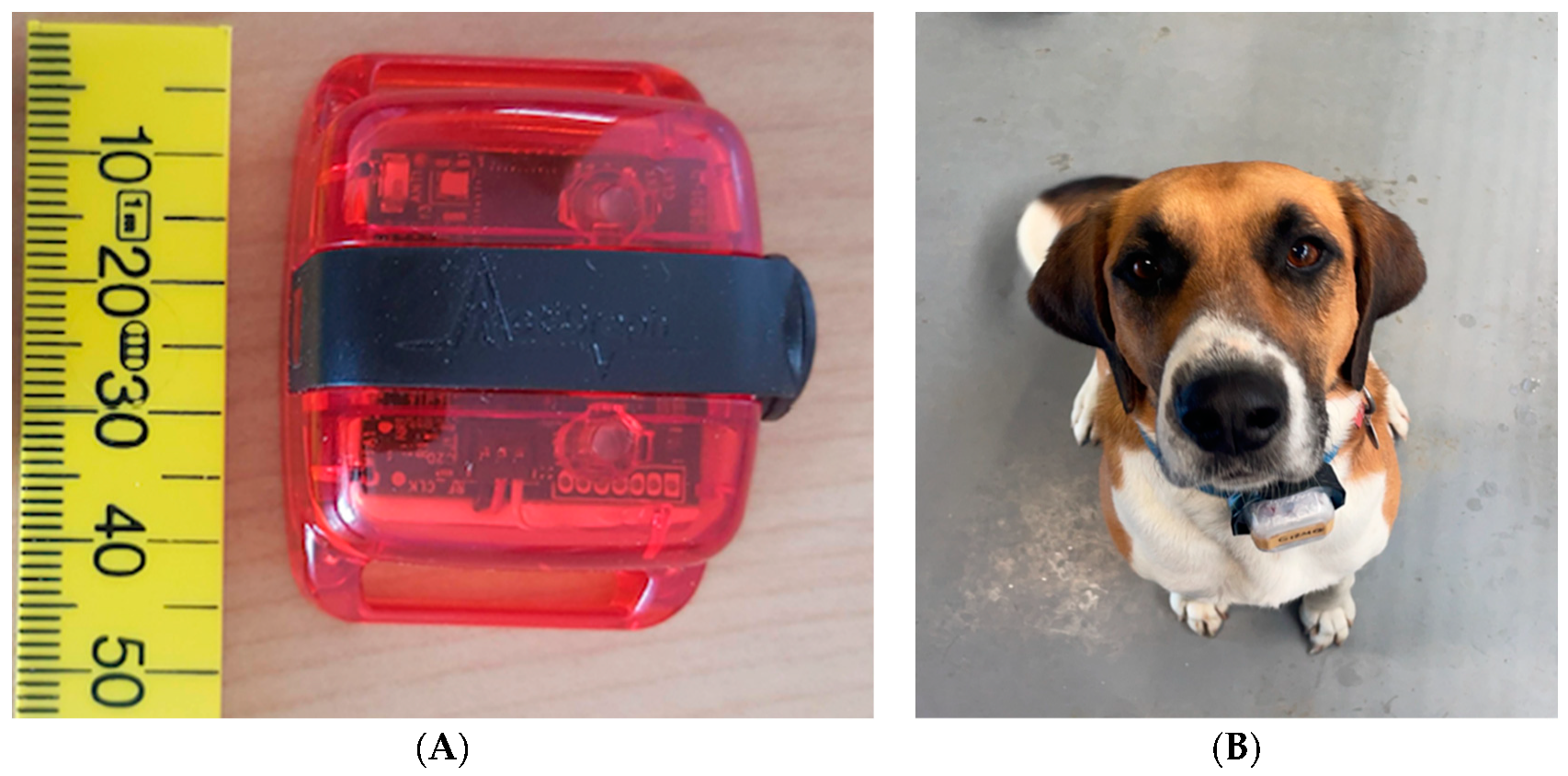

2.3. Accelerometry (ActiGraph® wGT3X-BT)

2.4. Collection and Assessment of the Video Footage

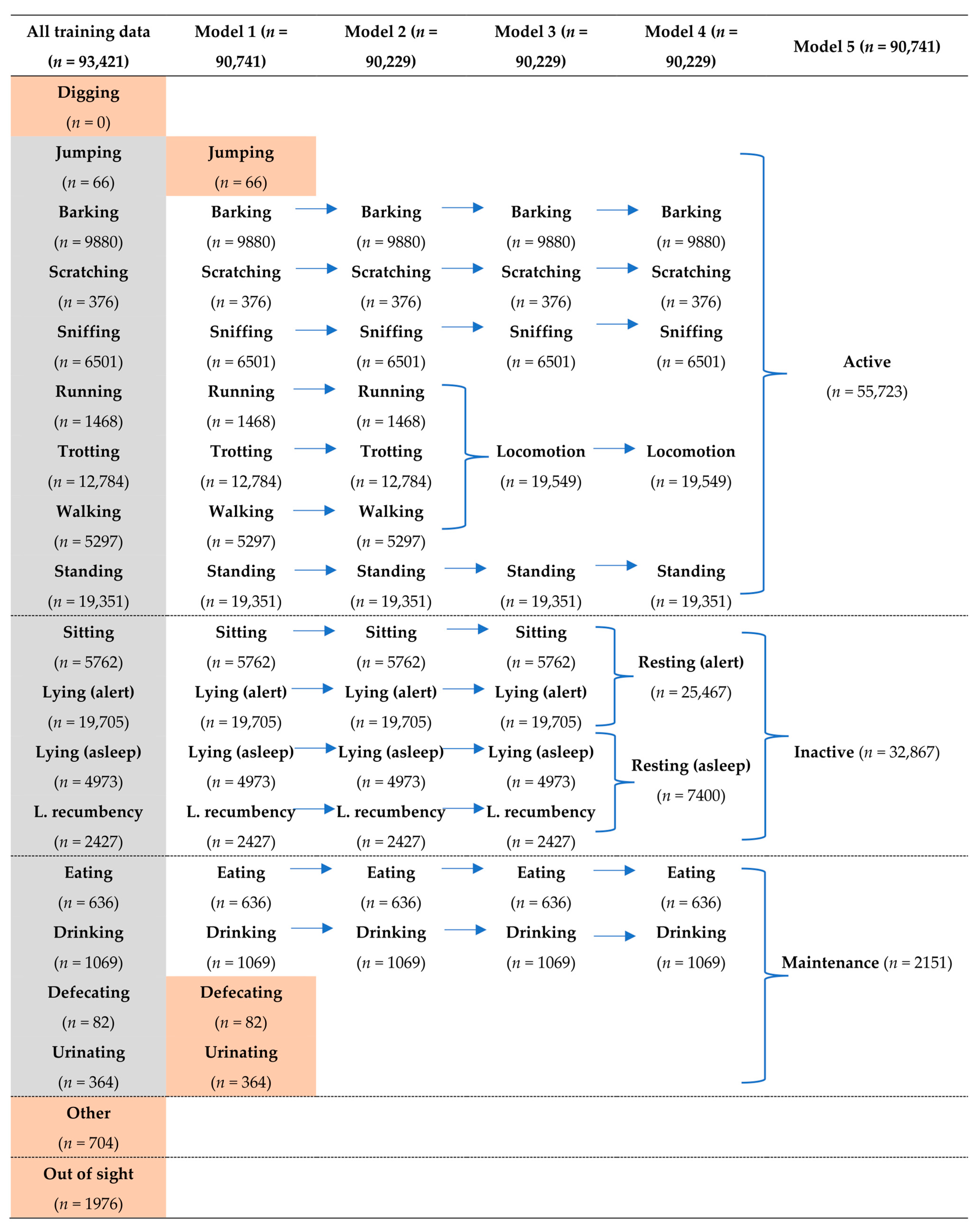

2.5. Building the Behavioural Models

2.6. Model Evaluation

3. Results

3.1. Model Performance

3.1.1. Model 1 (16 Behavioural Categories)

3.1.2. Model 2 (13 Behavioural Categories)

3.1.3. Model 3 (11 Behavioural Categories)

3.1.4. Model 4 (Nine Behavioural Categories)

3.1.5. Model 5 (Three Behavioural Categories)

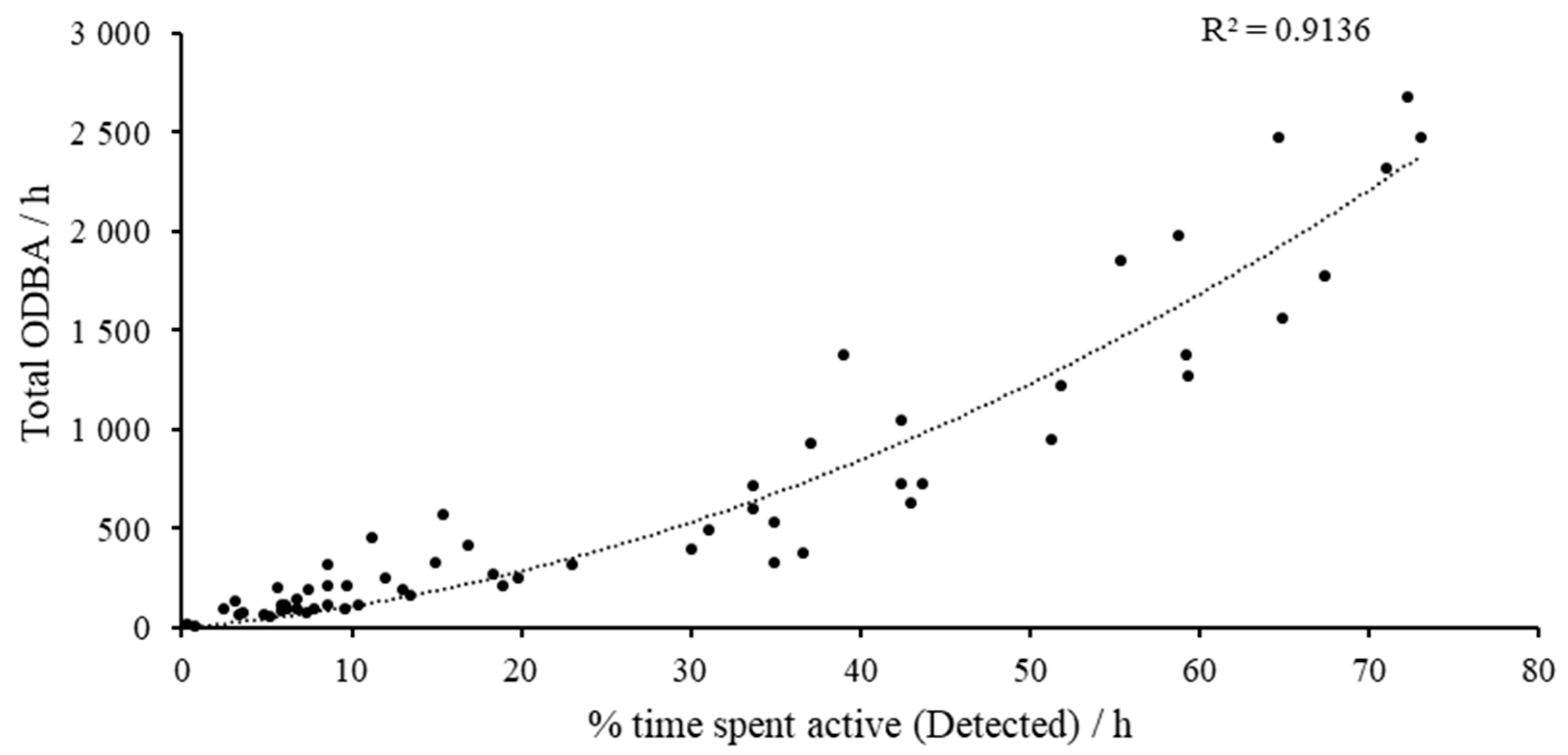

3.2. Overall Physical Activity/Overall Dynamic Body Acceleration

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Menache, S. Dogs and human beings: A story of friendship. Soc. Anim. 1998, 6, 67–86.
- Svartberg, K.; Forkman, B. Personality traits in the domestic dog (Canis familiaris). Appl. Anim. Behav. Sci. 2002, 79, 133–155.
- King, T.; Marston, L.C.; Bennett, P.C. Breeding dogs for beauty and behaviour: Why scientists need to do more to develop valid and reliable behaviour assessments for dogs kept as companions. Appl. Anim. Behav. Sci. 2012, 137, 1–12.
- Diederich, C.; Giffroy, J.-M. Behavioural testing in dogs: A review of methodology in search for standardisation. Appl. Anim. Behav. Sci. 2006, 97, 51–72.
- Dare, P.; Strasser, R. Ruff Morning? The Use of Environmental Enrichment during an Acute Stressor in Kenneled Shelter Dogs. Animals 2023, 13, 1506.
- Protopopova, A. Effects of sheltering on physiology, immune function, behavior, and the welfare of dogs. Physiol. Behav. 2016, 159, 95–103.
- Jones, A.C.; Gosling, S.D. Temperament and personality in dogs (Canis familiaris): A review and evaluation of past research. Appl. Anim. Behav. Sci. 2005, 95, 1–53.
- Brady, K.; Cracknell, N.; Zulch, H.; Mills, D.S. A systematic review of the reliability and validity of behavioural tests used to assess behavioural characteristics important in working dogs. Front. Vet. Sci. 2018, 5, 103.
- Duffy, D.L.; Kruger, K.A.; Serpell, J.A. Evaluation of a behavioral assessment tool for dogs relinquished to shelters. Prev. Vet. Med. 2014, 117, 601–609.
- Rayment, D.J.; De Groef, B.; Peters, R.A.; Marston, L.C. Applied personality assessment in domestic dogs: Limitations and caveats. Appl. Anim. Behav. Sci. 2015, 163, 1–18.
- Donát, P. Measuring behaviour: The tools and the strategies. Neurosci. Biobehav. Rev. 1991, 15, 447–454.
- Wiener, P.; Haskell, M.J. Use of questionnaire-based data to assess dog personality. J. Vet. Behav. 2016, 16, 81–85.
- Lascelles, B.D.X.; Hansen, B.D.; Thomson, A.; Pierce, C.C.; Boland, E.; Smith, E.S. Evaluation of a digitally integrated accelerometer-based activity monitor for the measurement of activity in cats. Vet. Anaesth. Analg. 2008, 35, 173–183.
- Dow, C.; Michel, K.E.; Love, M.; Brown, D.C. Evaluation of optimal sampling interval for activity monitoring in companion dogs. Am. J. Vet. Res. 2009, 70, 444–448.
- Morrison, R.; Penpraze, V.; Beber, A.; Reilly, J.; Yam, P. Associations between obesity and physical activity in dogs: A preliminary investigation. J. Small Anim. Pract. 2013, 54, 570–574.
- Jones, S.; Dowling-Guyer, S.; Patronek, G.J.; Marder, A.R.; Segurson D’Arpino, S.; McCobb, E. Use of accelerometers to measure stress levels in shelter dogs. J. Appl. Anim. Welf. Sci. 2014, 17, 18–28.
- Chan, C.B.; Spierenburg, M.; Ihle, S.L.; Tudor-Locke, C. Use of pedometers to measure physical activity in dogs. J. Am. Vet. Med. Assoc. 2005, 226, 2010–2015.
- Tudor-Locke, C.; Williams, J.E.; Reis, J.P.; Pluto, D. Utility of pedometers for assessing physical activity: Convergent validity. Sports Med. 2002, 32, 795–808.
- Hansen, B.D.; Lascelles, B.D.X.; Keene, B.W.; Adams, A.K.; Thomson, A.E. Evaluation of an accelerometer for at-home monitoring of spontaneous activity in dogs. Am. J. Vet. Res. 2007, 68, 468–475.
- Yashari, J.M.; Duncan, C.G.; Duerr, F.M. Evaluation of a novel canine activity monitor for at-home physical activity analysis. BMC Vet. Res. 2015, 11, 146.
- Smit, M.; Ikurior, S.J.; Corner-Thomas, R.A.; Andrews, C.J.; Draganova, I.; Thomas, D.G. The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Cats (Felis catus): A Validation Study. Sensors 2023, 23, 7165.
- Barthélémy, I.; Barrey, E.; Thibaud, J.-L.; Uriarte, A.; Voit, T.; Blot, S.; Hogrel, J.-Y. Gait analysis using accelerometry in dystrophin-deficient dogs. Neuromuscul. Disord. 2009, 19, 788–796.
- Moreau, M.; Siebert, S.; Buerkert, A.; Schlecht, E. Use of a tri-axial accelerometer for automated recording and classification of goats’ grazing behaviour. Appl. Anim. Behav. Sci. 2009, 119, 158–170.
- Brown, D.C.; Boston, R.C.; Farrar, J.T. Use of an activity monitor to detect response to treatment in dogs with osteoarthritis. J. Am. Vet. Med. Assoc. 2010, 237, 66–70.
- Yam, P.; Penpraze, V.; Young, D.; Todd, M.; Cloney, A.; Houston-Callaghan, K.; Reilly, J.J. Validity, practical utility and reliability of Actigraph accelerometry for the measurement of habitual physical activity in dogs. J. Small Anim. Pract. 2011, 52, 86–91.
- Preston, T.; Baltzer, W.; Trost, S. Accelerometer validity and placement for detection of changes in physical activity in dogs under controlled conditions on a treadmill. Res. Vet. Sci. 2012, 93, 412–416.
- Aich, S.; Chakraborty, S.; Sim, J.S.; Jang, D.J.; Kim, H.C. The design of an automated system for the analysis of the activity and emotional patterns of dogs with wearable sensors using machine learning. Appl. Sci. 2019, 9, 4938.
- Ladha, C.; Hoffman, C.L. A combined approach to predicting rest in dogs using accelerometers. Sensors 2018, 18, 2649.
- Kumpulainen, P.; Cardó, A.V.; Somppi, S.; Törnqvist, H.; Väätäjä, H.; Majaranta, P.; Gizatdinova, Y.; Antink, C.H.; Surakka, V.; Kujala, M.V. Dog behaviour classification with movement sensors placed on the harness and the collar. Appl. Anim. Behav. Sci. 2021, 241, 105393.
- Hoffman, C.L.; Ladha, C.; Wilcox, S. An actigraphy-based comparison of shelter dog and owned dog activity patterns. J. Vet. Behav. 2019, 34, 30–36.
- Valletta, J.J.; Torney, C.; Kings, M.; Thornton, A.; Madden, J. Applications of machine learning in animal behaviour studies. Anim. Behav. 2017, 124, 203–220.
- Ortmeyer, H.K.; Robey, L.; McDonald, T. Combining actigraph link and PetPace collar data to measure activity, proximity, and physiological responses in freely moving dogs in a natural environment. Animals 2018, 8, 230.
- Gerencsér, L.; Vásárhelyi, G.; Nagy, M.; Vicsek, T.; Miklósi, A. Identification of behaviour in freely moving dogs (Canis familiaris) using inertial sensors. PLoS ONE 2013, 8, e77814.
- Nurwulan, N.R.; Selamaj, G. Random forest for human daily activity recognition. J. Phys. Conf. Ser. 2020, 1655, 012087.
- Nathan, R.; Spiegel, O.; Fortmann-Roe, S.; Harel, R.; Wikelski, M.; Getz, W.M. Using tri-axial acceleration data to identify behavioral modes of free-ranging animals: General concepts and tools illustrated for griffon vultures. J. Exp. Biol. 2012, 215, 986–996.
- Pillard, P.; Gibert, S.; Viguier, E. Development of a 3D accelerometric device for gait analysis in dogs. Comput. Methods Biomech. Biomed. Eng. 2012, 15, 246–249.
- Bolton, S.; Cave, N.; Cogger, N.; Colborne, G. Use of a collar-mounted triaxial accelerometer to predict speed and gait in dogs. Animals 2021, 11, 1262.
- Muñana, K.R.; Nettifee, J.A.; Griffith, E.H.; Early, P.J.; Yoder, N.C. Evaluation of a collar-mounted accelerometer for detecting seizure activity in dogs. J. Vet. Intern. Med. 2020, 34, 1239–1247.
- Michel, K.E.; Brown, D.C. Determination and application of cut points for accelerometer-based activity counts of activities with differing intensity in pet dogs. Am. J. Vet. Res. 2011, 72, 866–870.
- Cheung, K.W.; Starling, M.J.; McGreevy, P.D. A comparison of uniaxial and triaxial accelerometers for the assessment of physical activity in dogs. J. Vet. Behav. 2014, 9, 66–71.
- Clark, K.; Caraguel, C.; Leahey, L.; Béraud, R. Evaluation of a novel accelerometer for kinetic gait analysis in dogs. Can. J. Vet. Res. 2014, 78, 226–232.
- Clarke, N.; Fraser, D. Automated monitoring of resting in dogs. Appl. Anim. Behav. Sci. 2016, 174, 99–102.
- Ministry for Primary Industries. Code of Welfare: Dogs; Ministry for Primary Industries: Wellington, New Zealand, 2008.
- Laflamme, D.P. Understanding and managing obesity in dogs and cats. Vet. Clin. Small Anim. Pract. 2006, 36, 1283–1295.
- Riaboff, L.; Shalloo, L.; Smeaton, A.F.; Couvreur, S.; Madouasse, A.; Keane, M.T. Predicting livestock behaviour using accelerometers: A systematic review of processing techniques for ruminant behaviour prediction from raw accelerometer data. Comput. Electron. Agric. 2022, 192, 106610.
- Brown, D.D.; Kays, R.; Wikelski, M.; Wilson, R.; Klimley, A.P. Observing the unwatchable through acceleration logging of animal behavior. Anim. Biotelemetry 2013, 1, 20.
- Friard, O.; Gamba, M.; Fitzjohn, R. BORIS: A free, versatile open-source event-logging software for video/audio coding and live observations. Methods Ecol. Evol. 2016, 7, 1325–1330.
- Koler-Matznick, J.; Brisbin, I.; Feinstein, M. An Ethogram for the New Guinea Singing (Wild) Dog (Canis hallstromi); The New Guinea Singing Dog Conservation Society: Salem, OR, USA, 2005.
- Walker, J.K.; Dale, A.R.; D’Eath, R.B.; Wemelsfelder, F. Qualitative Behaviour Assessment of dogs in the shelter and home environment and relationship with quantitative behaviour assessment and physiological responses. Appl. Anim. Behav. Sci. 2016, 184, 97–108.
- Lee, C.Y.; Ngai, J.T.K.; Chau, K.K.Y.; Yu, R.W.M.; Wong, P.W.C. Development of a pilot human-canine ethogram for an animal-assisted education programme in primary schools–A case study. Appl. Anim. Behav. Sci. 2022, 255, 105725.
- Fukuzawa, M.; Nakazato, I. Influence of changes in luminous emittance before bedtime on sleep in companion dogs. J. Vet. Behav. 2015, 10, 12–16.
- Eyre, A.W.; Zapata, I.; Hare, E.; Serpell, J.A.; Otto, C.M.; Alvarez, C.E. Machine learning prediction and classification of behavioral selection in a canine olfactory detection program. Sci. Rep. 2023, 13, 12489.
- Kleanthous, N.; Hussain, A.; Khan, W.; Sneddon, J.; Mason, A. Feature extraction and random forest to identify sheep behavior from accelerometer data. In Proceedings of the Intelligent Computing Methodologies: 16th International Conference, ICIC 2020, Bari, Italy, 2–5 October 2020; Proceedings, Part III 16. Springer: Cham, Switzerland, 2020; pp. 408–419.
- Ikurior, S.J.; Marquetoux, N.; Leu, S.T.; Corner-Thomas, R.A.; Scott, I.; Pomroy, W.E. What Are Sheep Doing? Tri-Axial Accelerometer Sensor Data Identify the Diel Activity Pattern of Ewe Lambs on Pasture. Sensors 2021, 21, 6816.
- Shaik, A.B.; Srinivasan, S.A. A Brief survey on random forest ensembles in classification model. In Proceedings of the International Conference on Innovative Computing and Communications: Proceedings of ICICC; Springer: Singapore, 2018; Volume 2, pp. 253–260.
- Fernández, A.; Garcia, S.; Herrera, F.; Chawla, N.V. SMOTE for learning from imbalanced data: Progress and challenges, marking the 15-year anniversary. J. Artif. Intell. Res. 2018, 61, 863–905.
- Bardini, R.; Politano, G.; Benso, A.; Di Carlo, S. Multi-level and hybrid modelling approaches for systems biology. Comput. Struct. Biotech. J. 2017, 15, 396–402.
- Helm, J.; McBrearty, A.; Fontaine, S.; Morrison, R.; Yam, P. Use of accelerometry to investigate physical activity in dogs receiving chemotherapy. J. Small Anim. Pract. 2016, 57, 600–609.
- Van der Laan, J.E.; Vinke, C.M.; Arndt, S.S. Sensor-Supported Measurement of Adaptability of Dogs (Canis Familiaris) to a Shelter Environment: Nocturnal Activity and Behavior. PLoS ONE 2023, 18, e0286429.
- Hounslow, J.; Brewster, L.; Lear, K.; Guttridge, T.; Daly, R.; Whitney, N.; Gleiss, A. Assessing the effects of sampling frequency on behavioural classification of accelerometer data. J. Exp. Mar. Biol. Ecol. 2019, 512, 22–30.
- Wasikowski, M.; Chen, X.W. Combating the small sample class imbalance problem using feature selection. IEEE Trans. Knowl. Data Eng. 2010, 22, 1388.
- Dal Pozzolo, A.; Caelen, O.; Bontempi, G. When is undersampling effective in unbalanced classification tasks? In Machine Learning and Knowledge Discovery in Databases, Proceedings of the European Conference, ECML PKDD 2015; Porto, Portugal, 7–11 September 2015, Proceedings, Part I 15; Springer: Cham, Switzerland, 2015; pp. 200–215.
- Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432.
- Martiskainen, P.; Järvinen, M.; Skön, J.-P.; Tiirikainen, J.; Kolehmainen, M.; Mononen, J. Cow behaviour pattern recognition using a three-dimensional accelerometer and support vector machines. Appl. Anim. Behav. Sci. 2009, 119, 32–38.
- Den Uijl, I.; Gómez Álvarez, C.B.; Bartram, D.; Dror, Y.; Holland, R.; Cook, A. External validation of a collar-mounted triaxial accelerometer for second-by-second monitoring of eight behavioural states in dogs. PLoS ONE 2017, 12, e0188481.
- Tatler, J.; Cassey, P.; Prowse, T.A. High accuracy at low frequency: Detailed behavioural classification from accelerometer data. J. Exp. Biol. 2018, 221, jeb184085.
- Martin, K.W.; Olsen, A.M.; Duncan, C.G.; Duerr, F.M. The method of attachment influences accelerometer-based activity data in dogs. BMC Vet. Res. 2016, 13, 48.
- Westgarth, C.; Ladha, C. Evaluation of an open source method for calculating physical activity in dogs from harness and collar based sensors. BMC Vet. Res. 2017, 13, 322.
- Smit, M.; Corner-Thomas, R.A.; Draganova, I.; Andrews, C.J.; Thomas, D.G. How Lazy Are Pet Cats Really? Using Machine Learning and Accelerometry to Get a Glimpse into the Behaviour of Privately Owned Cats in Different Households. Sensors 2024, 24, 2623.
- Liu, Y.; Smit, M.; Andrews, C.; Corner-Thomas, R.; Draganova, I.; Thomas, D. Use of triaxial accelerometers and a machine learning algorithm for behavioural identification to assess the efficacy of a joint supplement in old domestic cats (Felis catus). In Proceedings of the American Society of Animal Science, Calgary, AB, Canada, 21 July 2024.

| Name | Sex | Pair | Age (years) | Breed | Desexed | Weight (kg) |
|---|---|---|---|---|---|---|
| Belvedere | Female | 3 | 7.5 | Huntaway | Yes | 22.4 |
| Blacky | Male | 3 | 3.9 | Huntaway/Heading | Yes | 23.9 |
| Chevelle | Female | 2 | 7.5 | Huntaway | Yes | 23.0 |
| Gizmo | Male | 1 | 5.7 | Harrier Hound | Yes | 31.1 |
| Gus | Male | 2 | 4.0 | Huntaway/Smithfield Terrier | Yes | 22.7 |
| Monaro | Male | 1 | 7.5 | Huntaway | Yes | 32.8 |

| Category | Behaviour | Description |
|---|---|---|
| Active | Walking | The slowest upright gait, in which the body moves forward and each paw is lifted from the ground one at a time in a regular sequence [48]. |
| | Trotting | A rhythmic two-beat gait in which diagonally opposite paws strike the ground at the same time as the subject moves forward. This gait is faster than walking [48]. |
| | Running | Also described as a ‘canter’. A three-beat gait in which two legs move separately and two move as a diagonal pair. This gait is faster than a walk or trot [48]. |
| | Jumping | The subject has both hind legs on the floor and rears so that both forelegs are in contact with the paddock or kennel fencing, or with a person [49]. |
| | Barking | The mouth is opened and closed quickly in a snapping motion, releasing a low-frequency vocalization [49]. |
| | Sniffing | The nose is directed to a point of interest and the subject sniffs [50]. |
| | Digging | The dog uses its forepaws to repeatedly scratch the ground surface [49]. |
| | Scratching | Grooming behaviour directed towards the subject’s own body using the paws [49]. |
| Inactive | Resting-alert | Lying on the stomach with the forelegs extended to the front and the hind legs bent and resting close to the body on each side, or with the body twisted and both hind legs on one side. The head is held up off the ground or surface [47]. |
| | Resting-asleep | Lying on the stomach with the forelegs extended to the front and the hind legs bent and resting close to the body on each side, or with the body twisted and both hind legs on one side. The head is lowered to rest on the forelegs or on the ground between them [47]. |
| | L. recumbency | Lateral recumbency: lying flat on one side with the head resting sideways on the surface [51]. |
| | Sitting | Hindquarters on the ground, with the front legs straight and used for support [49]. |
| | Standing | All four paws planted on the ground, with the legs extended upright in a stationary position [49]. |
| Maintenance | Defecating | Excretion of faeces from the subject’s body [49]. |
| | Urinating | Excretion of urine from the subject’s body [49]. |
| | Eating | The subject chews and ingests food from a bowl provided by a human [49]. |
| | Drinking | The subject drinks from the water bowl in the paddock by lapping up the water with its tongue [48,49]. |
| | Auto grooming | Grooming behaviour directed towards the subject’s own body, including licking, self-biting, and scratching [49]. |
| Other | Other | Any behaviour that does not fit into one of the behaviours included in this ethogram. |
| | Out of sight | The subject is out of view and its behaviour cannot be observed. |

| Identifier Variable | Description |
|---|---|
| Mean acceleration | Mean of the raw acceleration values recorded in each second (30 measures per second) |
| Sum acceleration | $\mathrm{Sum}_{axis} = \sum_{i} \mathrm{Axis}_i$, summed over the measures recorded in each second |
| Minimum (min) | Minimum of the 30 measures recorded in each second |
| Maximum (max) | Maximum of the 30 measures recorded in each second |
| Standard deviation (SD) | Quantifies the amount of variability within a dataset |
| Skewness | Measures the asymmetry of the probability distribution of a dataset |
| Kurtosis | Measures the weight of the tails relative to a normal distribution |
| Vector magnitude (VM) | $\mathrm{VM} = \sqrt{X^2 + Y^2 + Z^2}$ |
| Overall dynamic body acceleration (ODBA) | $\mathrm{ODBA} = \lvert \mathrm{DBA}_X \rvert + \lvert \mathrm{DBA}_Y \rvert + \lvert \mathrm{DBA}_Z \rvert$ |
| Dynamic body acceleration (DBA) | $\mathrm{DBA}_{axis} = \mathrm{Sum}_{axis} - \text{moving average}$ |
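The per-second features listed above can be sketched in Python as follows. This is a minimal illustration, not the study's pipeline: it assumes raw single-axis samples at 30 Hz grouped into non-overlapping 1-s epochs, the sample (n − 1) standard deviation, and moment-based skewness and excess kurtosis. The exact estimators used in the study are not given in this section, and the function names are hypothetical.

```python
import math
import statistics


def split_epochs(signal, hz=30):
    """Split a raw single-axis signal into consecutive 1-s epochs of `hz` samples.

    An incomplete final epoch is dropped (an assumption; it could also be padded).
    """
    return [signal[i:i + hz] for i in range(0, len(signal) - hz + 1, hz)]


def epoch_features(samples):
    """Summary features for one axis over one epoch (hypothetical helper)."""
    n = len(samples)
    mean = statistics.fmean(samples)
    # Population central moments, used for moment-based skewness and kurtosis.
    m2 = sum((v - mean) ** 2 for v in samples) / n
    m3 = sum((v - mean) ** 3 for v in samples) / n
    m4 = sum((v - mean) ** 4 for v in samples) / n
    return {
        "mean": mean,
        "sum": math.fsum(samples),
        "min": min(samples),
        "max": max(samples),
        "sd": statistics.stdev(samples),  # sample SD (n - 1 denominator)
        "skewness": m3 / m2 ** 1.5 if m2 > 0 else 0.0,
        # Excess kurtosis: 0 for a normal distribution, negative for light tails.
        "kurtosis": m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0,
    }


def vector_magnitude(x, y, z):
    """Per-sample vector magnitude, VM = sqrt(X^2 + Y^2 + Z^2)."""
    return math.sqrt(x * x + y * y + z * z)
```

For example, `epoch_features([1.0, 2.0, 3.0, 4.0, 5.0])` yields a mean of 3.0, a skewness of 0 (the values are symmetric) and an excess kurtosis of -1.3, and `vector_magnitude(3.0, 4.0, 0.0)` is 5.0.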

| Parameter | Calculation |
|---|---|
| Sensitivity/recall | $\frac{TP}{TP + FN}$ |
| Specificity | $\frac{TN}{TN + FP}$ |
| Balanced accuracy | $\frac{\text{sensitivity} + \text{specificity}}{2}$ |
| Precision | $\frac{TP}{TP + FP}$ |
| Precision-recall (F1 score) | $F_1 = \frac{2 \times \text{precision} \times \text{recall}}{\text{precision} + \text{recall}}$ |
| Observed prevalence | $\frac{TP + FN}{TP + TN + FP + FN}$ |
| Detected prevalence | $\frac{TP + FP}{TP + TN + FP + FN}$ |
| Overall accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Kappa coefficient (κ) | $\kappa = \frac{N \sum_{i=1}^{k} x_{ii} - \sum_{i=1}^{k} x_{i+} x_{+i}}{N^2 - \sum_{i=1}^{k} x_{i+} x_{+i}}$, where $N$ = total number of observations (all behaviours); $k$ = number of behaviour categories; $i$ = behaviour category $i$; $x_{ii}$ = number of observations that both visual observation and the predictor model classified into the $i$-th category; $x_{i+}$ = number of observations that were visually classified into the $i$-th category; $x_{+i}$ = number of observations that the predictor model classified into the $i$-th category |
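The per-behaviour metrics above are computed one-vs-rest: for each behaviour, every other behaviour counts as "negative". A minimal sketch, assuming a confusion matrix of counts with model predictions in rows and observed behaviours in columns (the layout of the confusion-matrix tables in this article); the helper names are hypothetical. A zero denominator returns NaN, which would appear as "-" in the results tables (as for Jumping in Model 1, whose detection prevalence is 0.000).

```python
import math


def safe_div(num, den):
    """num / den, or NaN when the denominator is zero."""
    return num / den if den else math.nan


def one_vs_rest_counts(matrix, i):
    """TP, FP, FN, TN for class i.

    Assumes matrix[p][o] = count predicted as class p and observed as class o.
    """
    total = sum(sum(row) for row in matrix)
    tp = matrix[i][i]
    fp = sum(matrix[i]) - tp                 # predicted i, observed as another class
    fn = sum(row[i] for row in matrix) - tp  # observed i, predicted as another class
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def class_metrics(tp, fp, fn, tn):
    """Metrics from the table above for a single behaviour."""
    total = tp + fp + fn + tn
    sensitivity = safe_div(tp, tp + fn)
    specificity = safe_div(tn, tn + fp)
    precision = safe_div(tp, tp + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "precision": precision,
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
        "observed_prevalence": (tp + fn) / total,
        "detected_prevalence": (tp + fp) / total,
        "accuracy": (tp + tn) / total,
    }
```

With the toy matrix `[[50, 10], [5, 35]]`, class 0 has TP = 50, FP = 10, FN = 5 and TN = 35, giving a sensitivity of 50/55, a precision of 50/60 and an overall accuracy of 0.85.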

| Behaviour | Sensitivity | Specificity | Balanced Accuracy | Precision | Precision-Recall (F1) | Prevalence (Observed) | Detection Prevalence | CV (%) |
|---|---|---|---|---|---|---|---|---|
| Model 1 | | | | | | | | |
| Barking | 0.84 | 0.97 | 0.91 | 0.80 | 0.82 | 0.109 | 0.115 | 3.77 |
| Defecating | 0.71 | 1.00 | 0.85 | 0.96 | 0.81 | 0.001 | 0.001 | 21.57 |
| Drinking | 0.71 | 1.00 | 0.85 | 0.92 | 0.80 | 0.012 | 0.009 | 18.75 |
| Eating | 0.78 | 1.00 | 0.89 | 0.96 | 0.86 | 0.007 | 0.006 | 14.95 |
| Jumping | 0.00 | 1.00 | 0.50 | - | - | 0.001 | 0.000 | 141.42 |
| L. recumbency | 0.94 | 1.00 | 0.97 | 0.98 | 0.96 | 0.027 | 0.026 | 2.83 |
| Lying-asleep | 0.85 | 1.00 | 0.92 | 0.91 | 0.88 | 0.055 | 0.051 | 4.67 |
| Lying-alert | 0.85 | 0.94 | 0.89 | 0.79 | 0.82 | 0.217 | 0.234 | 5.11 |
| Running | 0.12 | 1.00 | 0.56 | 0.54 | 0.20 | 0.016 | 0.004 | 88.67 |
| Scratching | 0.61 | 1.00 | 0.80 | 0.97 | 0.75 | 0.004 | 0.003 | 32.65 |
| Sitting | 0.37 | 0.99 | 0.68 | 0.63 | 0.47 | 0.064 | 0.037 | 36.54 |
| Sniffing | 0.93 | 0.98 | 0.95 | 0.75 | 0.83 | 0.072 | 0.089 | 15.06 |
| Standing | 0.64 | 0.86 | 0.75 | 0.55 | 0.59 | 0.213 | 0.251 | 11.57 |
| Trotting | 0.58 | 0.92 | 0.75 | 0.56 | 0.57 | 0.141 | 0.147 | 3.04 |
| Urinating | 0.48 | 1.00 | 0.74 | 1.00 | 0.65 | 0.004 | 0.002 | 49.20 |
| Walking | 0.25 | 0.99 | 0.62 | 0.54 | 0.34 | 0.058 | 0.027 | 52.88 |
| Average | 0.60 ± 0.07 | 0.97 ± 0.01 | 0.79 ± 0.04 | 0.34 ± 0.08 | 0.79 ± 0.05 | Σ = 1.000 | Σ = 1.000 | 31.4 ± 9.4 |
| Model 2 | | | | | | | | |
| Barking | 0.84 | 0.97 | 0.90 | 0.80 | 0.82 | 0.110 | 0.115 | 3.25 |
| Drinking | 0.71 | 1.00 | 0.86 | 0.91 | 0.80 | 0.012 | 0.009 | 17.18 |
| Eating | 0.79 | 1.00 | 0.90 | 0.93 | 0.86 | 0.007 | 0.006 | 11.23 |
| L. recumbency | 0.94 | 1.00 | 0.97 | 0.98 | 0.96 | 0.027 | 0.026 | 3.12 |
| Lying-asleep | 0.85 | 1.00 | 0.92 | 0.92 | 0.88 | 0.055 | 0.051 | 5.27 |
| Lying-alert | 0.85 | 0.93 | 0.89 | 0.78 | 0.81 | 0.218 | 0.238 | 6.07 |
| Running | 0.12 | 1.00 | 0.56 | 0.56 | 0.20 | 0.016 | 0.003 | 91.69 |
| Scratching | 0.72 | 1.00 | 0.86 | 0.95 | 0.82 | 0.004 | 0.003 | 19.63 |
| Sitting | 0.33 | 0.98 | 0.66 | 0.58 | 0.42 | 0.064 | 0.037 | 37.87 |
| Sniffing | 0.94 | 0.98 | 0.96 | 0.77 | 0.85 | 0.072 | 0.087 | 13.42 |
| Standing | 0.64 | 0.86 | 0.75 | 0.55 | 0.59 | 0.215 | 0.248 | 10.13 |
| Trotting | 0.59 | 0.92 | 0.76 | 0.55 | 0.57 | 0.142 | 0.152 | 5.02 |
| Walking | 0.24 | 0.99 | 0.61 | 0.56 | 0.34 | 0.059 | 0.025 | 57.21 |
| Average | 0.66 ± 0.07 | 0.97 ± 0.01 | 0.81 ± 0.04 | 0.76 ± 0.05 | 0.69 ± 0.07 | Σ = 1.000 | Σ = 1.000 | 21.6 ± 7.3 |
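The CV% column has no stated formula in this section. The tabulated values are consistent with the coefficient of variation between the observed and detected prevalence of each behaviour (sample SD of the two values divided by their mean, × 100): Jumping in Model 1 (0.001 observed, 0.000 detected) gives √2 × 100 ≈ 141.42, matching the table. The sketch below assumes that interpretation, and the function name is hypothetical. Small differences from other rows (e.g., 3.79 computed versus 3.77 for Barking in Model 1) are expected because the prevalences shown are rounded.

```python
import statistics


def cv_percent(observed, detected):
    """Coefficient of variation (%) between an observed and a detected value.

    Assumed definition: sample standard deviation (n - 1) of the two values
    divided by their mean, multiplied by 100. Undefined if both values are 0.
    """
    values = [observed, detected]
    return statistics.stdev(values) / statistics.fmean(values) * 100
```

The same calculation also appears to reproduce the CV% column of the ODBA table, e.g. `cv_percent(0.819, 0.996)` rounds to 13.8 for Locomotion.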

| Behaviour | Sensitivity | Specificity | Balanced Accuracy | Precision | Precision-Recall (F1) | Prevalence (Observed) | Detection Prevalence | CV (%) |
|---|---|---|---|---|---|---|---|---|
| Model 3 | | | | | | | | |
| Barking | 0.82 | 0.98 | 0.90 | 0.83 | 0.83 | 0.110 | 0.109 | 0.49 |
| Drinking | 0.71 | 1.00 | 0.86 | 0.93 | 0.81 | 0.012 | 0.009 | 18.95 |
| Eating | 0.79 | 1.00 | 0.90 | 0.97 | 0.87 | 0.007 | 0.006 | 14.32 |
| L. recumbency | 0.94 | 1.00 | 0.97 | 0.99 | 0.96 | 0.027 | 0.025 | 4.11 |
| Locomotion | 0.67 | 0.88 | 0.77 | 0.61 | 0.64 | 0.217 | 0.236 | 6.09 |
| Lying-asleep | 0.87 | 1.00 | 0.93 | 0.92 | 0.89 | 0.055 | 0.052 | 4.13 |
| Lying-alert | 0.84 | 0.94 | 0.89 | 0.79 | 0.81 | 0.218 | 0.233 | 4.57 |
| Scratching | 0.64 | 1.00 | 0.82 | 0.94 | 0.77 | 0.004 | 0.003 | 26.83 |
| Sitting | 0.35 | 0.98 | 0.67 | 0.60 | 0.44 | 0.064 | 0.037 | 37.22 |
| Sniffing | 0.93 | 0.98 | 0.95 | 0.79 | 0.85 | 0.072 | 0.084 | 11.20 |
| Standing | 0.57 | 0.89 | 0.73 | 0.59 | 0.58 | 0.215 | 0.205 | 3.06 |
| Average | 0.74 ± 0.05 | 0.96 ± 0.01 | 0.85 ± 0.03 | 0.82 ± 0.05 | 0.77 ± 0.05 | Σ = 1.000 | Σ = 1.000 | 11.9 ± 3.5 |
| Model 4 | | | | | | | | |
| Barking | 0.82 | 0.98 | 0.90 | 0.83 | 0.82 | 0.110 | 0.108 | 0.70 |
| Drinking | 0.73 | 1.00 | 0.86 | 0.93 | 0.82 | 0.012 | 0.009 | 17.37 |
| Eating | 0.72 | 1.00 | 0.86 | 0.96 | 0.82 | 0.007 | 0.005 | 19.87 |
| Locomotion | 0.66 | 0.88 | 0.77 | 0.61 | 0.64 | 0.217 | 0.234 | 5.37 |
| Rest-asleep | 0.89 | 1.00 | 0.94 | 0.94 | 0.92 | 0.082 | 0.077 | 4.11 |
| Rest-alert | 0.85 | 0.94 | 0.89 | 0.85 | 0.85 | 0.282 | 0.283 | 0.08 |
| Scratching | 0.66 | 1.00 | 0.83 | 0.96 | 0.79 | 0.004 | 0.003 | 26.19 |
| Sniffing | 0.93 | 0.98 | 0.95 | 0.79 | 0.85 | 0.072 | 0.085 | 11.51 |
| Standing | 0.54 | 0.90 | 0.72 | 0.59 | 0.57 | 0.215 | 0.196 | 6.52 |
| Average | 0.76 ± 0.04 | 0.96 ± 0.02 | 0.86 ± 0.03 | 0.83 ± 0.05 | 0.78 ± 0.04 | Σ = 1.000 | Σ = 1.000 | 10.2 ± 3.0 |
| Model 5 | | | | | | | | |
| Active | 0.95 | 0.86 | 0.91 | 0.91 | 0.93 | 0.613 | 0.638 | 2.9 |
| Inactive | 0.87 | 0.95 | 0.91 | 0.91 | 0.89 | 0.364 | 0.345 | 3.8 |
| Maintenance | 0.71 | 1.00 | 0.86 | 0.98 | 0.83 | 0.023 | 0.017 | 22.4 |
| Average | 0.84 ± 0.07 | 0.93 ± 0.04 | 0.86 ± 0.04 | 0.98 ± 0.02 | 0.88 ± 0.03 | Σ = 1.000 | Σ = 1.000 | 9.7 ± 6.4 |
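The observed prevalences above suggest how the smaller models relate to Model 2: Walking + Trotting + Running (0.059 + 0.142 + 0.016) gives the Locomotion prevalence of 0.217, Lying-asleep + L. recumbency (0.055 + 0.027) gives Rest-asleep (0.082), and Lying-alert + Sitting (0.218 + 0.064) gives Rest-alert (0.282) in Model 4. The mapping below is inferred from those sums rather than stated in this section, so treat it as an assumption; it only reproduces the label bookkeeping and does not retrain a classifier. The names `MODEL4_MERGE` and `merge_prevalence` are hypothetical.

```python
# Hypothetical label mapping, inferred from the Model 2 and Model 4 prevalences.
MODEL4_MERGE = {
    "Walking": "Locomotion",
    "Trotting": "Locomotion",
    "Running": "Locomotion",
    "Lying-asleep": "Rest-asleep",
    "L. recumbency": "Rest-asleep",
    "Lying-alert": "Rest-alert",
    "Sitting": "Rest-alert",
}


def merge_prevalence(prevalence, mapping):
    """Sum per-behaviour prevalences into merged categories; unmapped labels are kept."""
    merged = {}
    for label, p in prevalence.items():
        target = mapping.get(label, label)
        merged[target] = merged.get(target, 0.0) + p
    return merged


# Observed prevalences for Model 2, copied from the results table.
model2 = {
    "Barking": 0.110, "Drinking": 0.012, "Eating": 0.007, "L. recumbency": 0.027,
    "Lying-asleep": 0.055, "Lying-alert": 0.218, "Running": 0.016, "Scratching": 0.004,
    "Sitting": 0.064, "Sniffing": 0.072, "Standing": 0.215, "Trotting": 0.142,
    "Walking": 0.059,
}
model4 = merge_prevalence(model2, MODEL4_MERGE)
```

The merge leaves nine categories, matching the number of behaviours in Model 4.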

| Model prediction / Observed behaviour | Barking | Drinking | Eating | Locomotion | Resting-asleep | Resting-alert | Scratching | Sniffing | Standing |
|---|---|---|---|---|---|---|---|---|---|
| Barking | 81.77 | 0.44 | 2.94 | 4.20 | 0.19 | 1.24 | 0.00 | 0.07 | 2.71 |
| Drinking | 0.00 | 72.65 | 0.00 | 0.18 | 0.00 | 0.00 | 0.00 | 0.00 | 0.12 |
| Eating | 0.00 | 0.00 | 72.06 | 0.02 | 0.00 | 0.01 | 0.00 | 0.14 | 0.02 |
| Locomotion | 9.59 | 16.85 | 5.88 | 66.22 | 0.41 | 2.59 | 11.88 | 3.59 | 31.08 |
| Resting-asleep | 0.09 | 0.00 | 0.00 | 0.20 | 89.06 | 1.11 | 1.88 | 0.22 | 0.21 |
| Resting-alert | 4.53 | 0.44 | 2.21 | 4.81 | 8.01 | 84.89 | 8.75 | 0.72 | 9.29 |
| Scratching | 0.00 | 0.00 | 0.00 | 0.01 | 0.03 | 0.02 | 66.25 | 0.00 | 0.00 |
| Sniffing | 0.09 | 4.81 | 12.50 | 3.69 | 0.57 | 0.82 | 2.50 | 92.89 | 2.54 |
| Standing | 3.92 | 4.81 | 4.41 | 20.66 | 1.73 | 9.33 | 8.75 | 2.37 | 54.03 |
| n | 4234 | 457 | 272 | 8377 | 3171 | 10,914 | 160 | 2785 | 8292 |
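Each column of the confusion matrix above sums to approximately 100 (e.g., the Barking column: 81.77 + 9.59 + 0.09 + 4.53 + 0.09 + 3.92 = 99.99), so each entry is a percentage of the seconds observed for that behaviour, and the n row gives those per-behaviour totals. A minimal sketch of that normalisation, assuming a count matrix with model predictions in rows and observed behaviours in columns; the function name and the toy counts are hypothetical.

```python
def column_percentages(counts):
    """Convert a count confusion matrix to percentages of each observed class.

    Assumes counts[p][o] = seconds predicted as p and observed as o, so each
    column (one observed behaviour) sums to 100 and the column totals give n.
    """
    n_observed = [sum(row[o] for row in counts) for o in range(len(counts[0]))]
    percentages = [
        [100.0 * row[o] / n_observed[o] if n_observed[o] else 0.0 for o in range(len(row))]
        for row in counts
    ]
    return percentages, n_observed
```

For example, `column_percentages([[8, 1], [2, 9]])` returns `([[80.0, 10.0], [20.0, 90.0]], [10, 10])`: the diagonal entries are the per-behaviour sensitivities expressed as percentages.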

| Model prediction / Observed behaviour | Active | Inactive | Maintenance |
|---|---|---|---|
| Active | 95.22 | 13.40 | 26.26 |
| Inactive | 4.75 | 86.57 | 2.47 |
| Maintenance | 0.03 | 0.04 | 71.27 |
| Total observations (s) | 23,690 | 14,085 | 891 |
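Cohen's kappa, defined in the metrics table, is computed from a matrix of counts rather than the percentages shown above. A minimal sketch using the same notation ($N$, $x_{ii}$, $x_{i+}$, $x_{+i}$); the function name and the toy matrix are hypothetical.

```python
def cohens_kappa(m):
    """Cohen's kappa from a k x k confusion matrix of counts.

    m[i][j] = observations visually classified as i and predicted as j, so row
    totals are x_{i+} and column totals are x_{+i}. Kappa does not change if the
    matrix is transposed, so a predicted-by-observed layout gives the same value.
    """
    k = len(m)
    n = sum(sum(row) for row in m)                 # N
    agree = sum(m[i][i] for i in range(k))         # sum of x_ii
    chance = sum(sum(m[i]) * sum(row[i] for row in m) for i in range(k))  # sum of x_i+ * x_+i
    return (n * agree - chance) / (n * n - chance)
```

For `[[50, 5], [10, 35]]`, N = 100, the diagonal sum is 85 and the chance term is 55 × 60 + 45 × 40 = 5100, so κ = (8500 − 5100)/(10,000 − 5100) = 34/49 ≈ 0.694.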

| Behaviour | Average ODBA/s (Observed) | Average ODBA/s (Detected) | CV (%) |
|---|---|---|---|
| Locomotion | 0.819 ± 0.007 | 0.996 ± 0.006 | 13.8 |
| Barking | 0.726 ± 0.015 | 0.707 ± 0.014 | 1.9 |
| Drinking | 0.573 ± 0.032 | 0.659 ± 0.047 | 9.9 |
| Eating | 0.514 ± 0.031 | 0.427 ± 0.031 | 13.1 |
| Standing | 0.454 ± 0.006 | 0.277 ± 0.004 | 34.2 |
| Scratching | 0.372 ± 0.057 | 0.465 ± 0.075 | 15.7 |
| Sniffing | 0.364 ± 0.008 | 0.357 ± 0.006 | 1.4 |
| Resting-alert | 0.069 ± 0.001 | 0.047 ± 0.001 | 26.8 |
| Resting-asleep | 0.020 ± 0.001 | 0.014 ± 0.000 | 25.3 |
| Average | 0.435 ± 0.089 | 0.439 ± 0.105 | 15.7 ± 3.7 |
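ODBA is the sum of the absolute dynamic acceleration on the three axes, where the dynamic component of each axis is its acceleration minus a moving average (the static, gravity-related part). The sketch below computes per-sample ODBA and then averages it over each second. The centred 60-sample window (2 s at 30 Hz), the truncated edges and the use of raw per-sample values are assumptions, since the smoothing window is not given in this section; the function names are hypothetical.

```python
def moving_average(values, window):
    """Centred running mean; the window is truncated at the start and end of the record."""
    half = window // 2
    out = []
    for i in range(len(values)):
        segment = values[max(0, i - half): i + half + 1]
        out.append(sum(segment) / len(segment))
    return out


def odba(x, y, z, window=60):
    """Per-sample ODBA = |DBA_X| + |DBA_Y| + |DBA_Z|.

    DBA on each axis is the raw value minus its running mean (static component).
    window=60 samples (2 s at 30 Hz) is an assumed value, not one from the study.
    """
    dynamic = []
    for axis in (x, y, z):
        static = moving_average(axis, window)
        dynamic.append([a - s for a, s in zip(axis, static)])
    return [abs(dx) + abs(dy) + abs(dz) for dx, dy, dz in zip(*dynamic)]


def mean_per_second(values, hz=30):
    """Average a per-sample series over each complete 1-s epoch."""
    return [sum(values[i:i + hz]) / hz for i in range(0, len(values) - hz + 1, hz)]
```

A motionless collar (constant readings on every axis) gives an ODBA of zero, whereas a rapidly alternating signal gives values close to its amplitude, which mirrors the ordering in the table from resting behaviours up to locomotion.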

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Redmond, C.; Smit, M.; Draganova, I.; Corner-Thomas, R.; Thomas, D.; Andrews, C. The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Dogs (Canis familiaris): A Validation Study. Sensors 2024, 24, 5955. https://doi.org/10.3390/s24185955

Redmond C, Smit M, Draganova I, Corner-Thomas R, Thomas D, Andrews C. The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Dogs (Canis familiaris): A Validation Study. Sensors. 2024; 24(18):5955. https://doi.org/10.3390/s24185955

Chicago/Turabian Style: Redmond, Cushla, Michelle Smit, Ina Draganova, Rene Corner-Thomas, David Thomas, and Christopher Andrews. 2024. "The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Dogs (Canis familiaris): A Validation Study" Sensors 24, no. 18: 5955. https://doi.org/10.3390/s24185955

APA Style: Redmond, C., Smit, M., Draganova, I., Corner-Thomas, R., Thomas, D., & Andrews, C. (2024). The Use of Triaxial Accelerometers and Machine Learning Algorithms for Behavioural Identification in Domestic Dogs (Canis familiaris): A Validation Study. Sensors, 24(18), 5955. https://doi.org/10.3390/s24185955