Inertial Motion Capture-Driven Digital Human for Ergonomic Validation: A Case Study of Core Drilling

Abstract

1. Introduction

2. Digital Human Model Generation

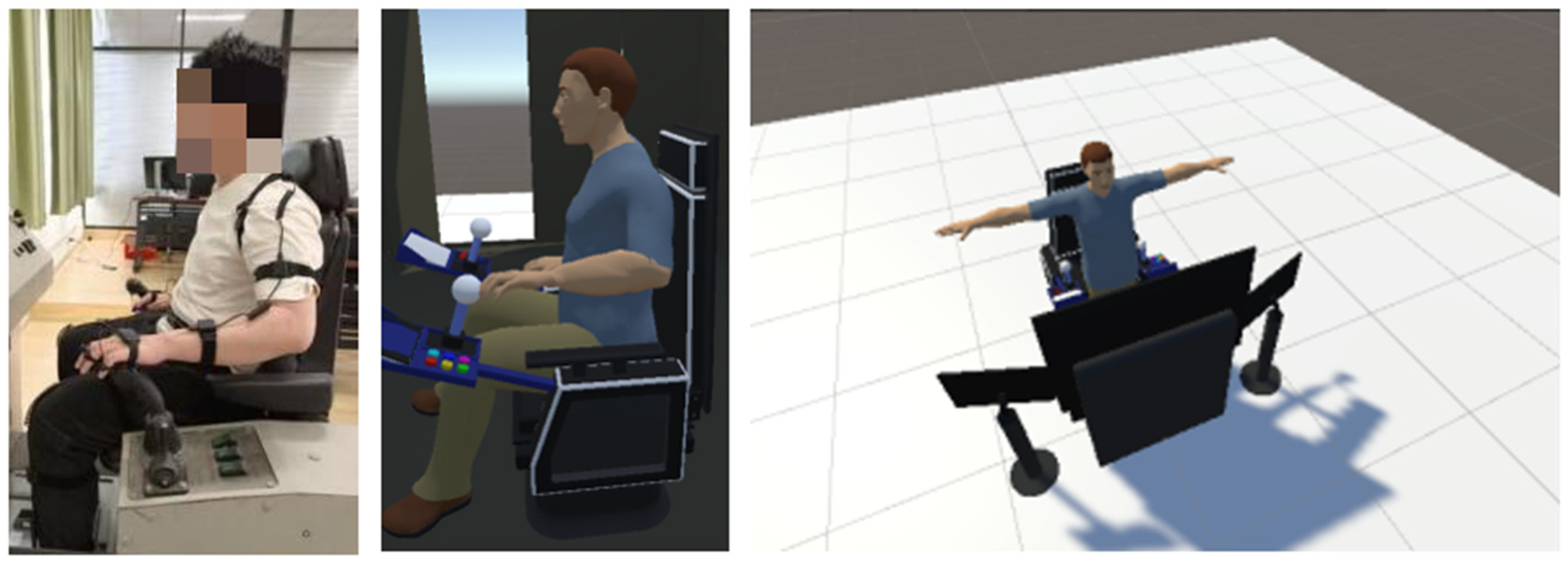

2.1. Establishment of Three-Dimensional Virtual Human Model

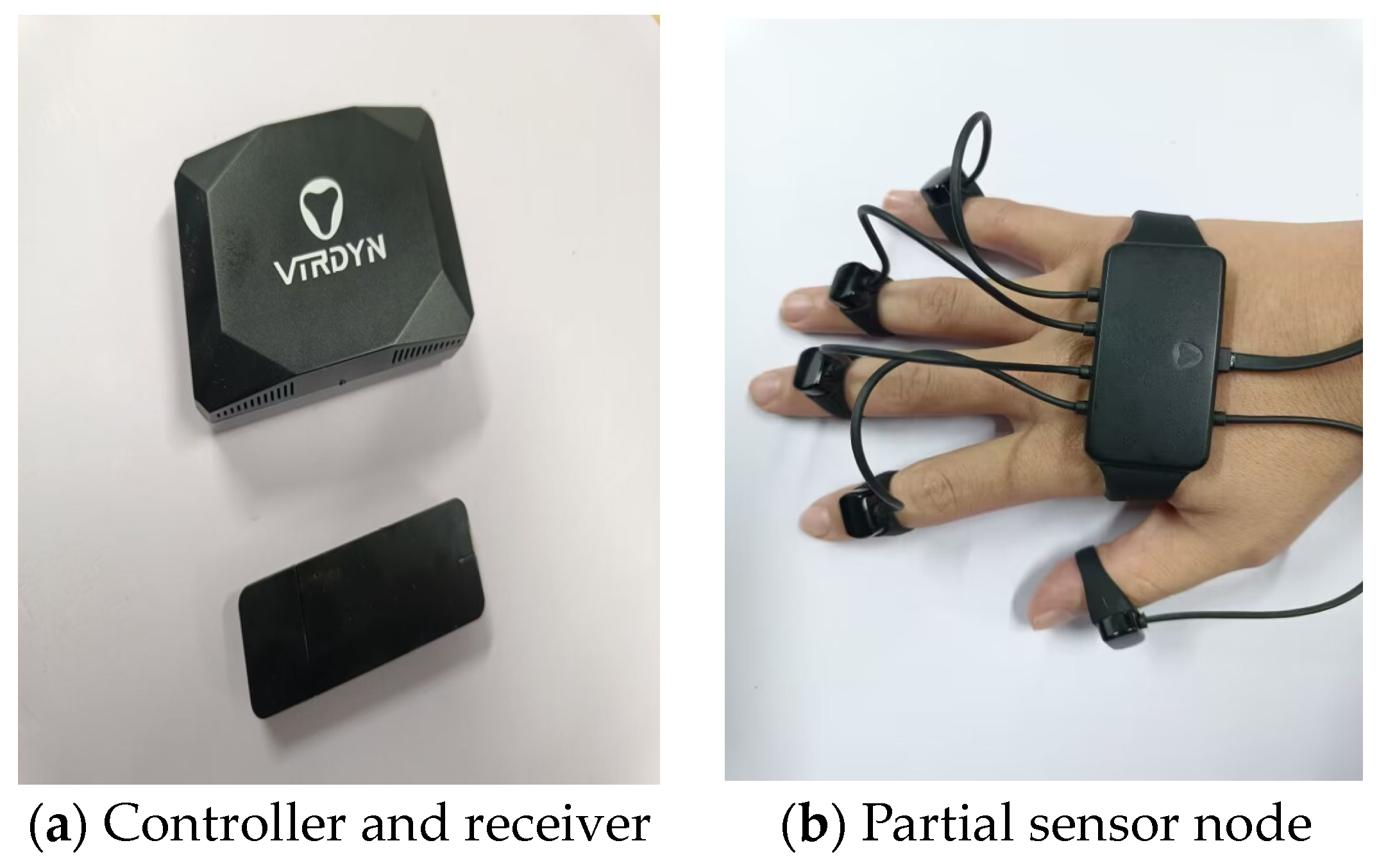

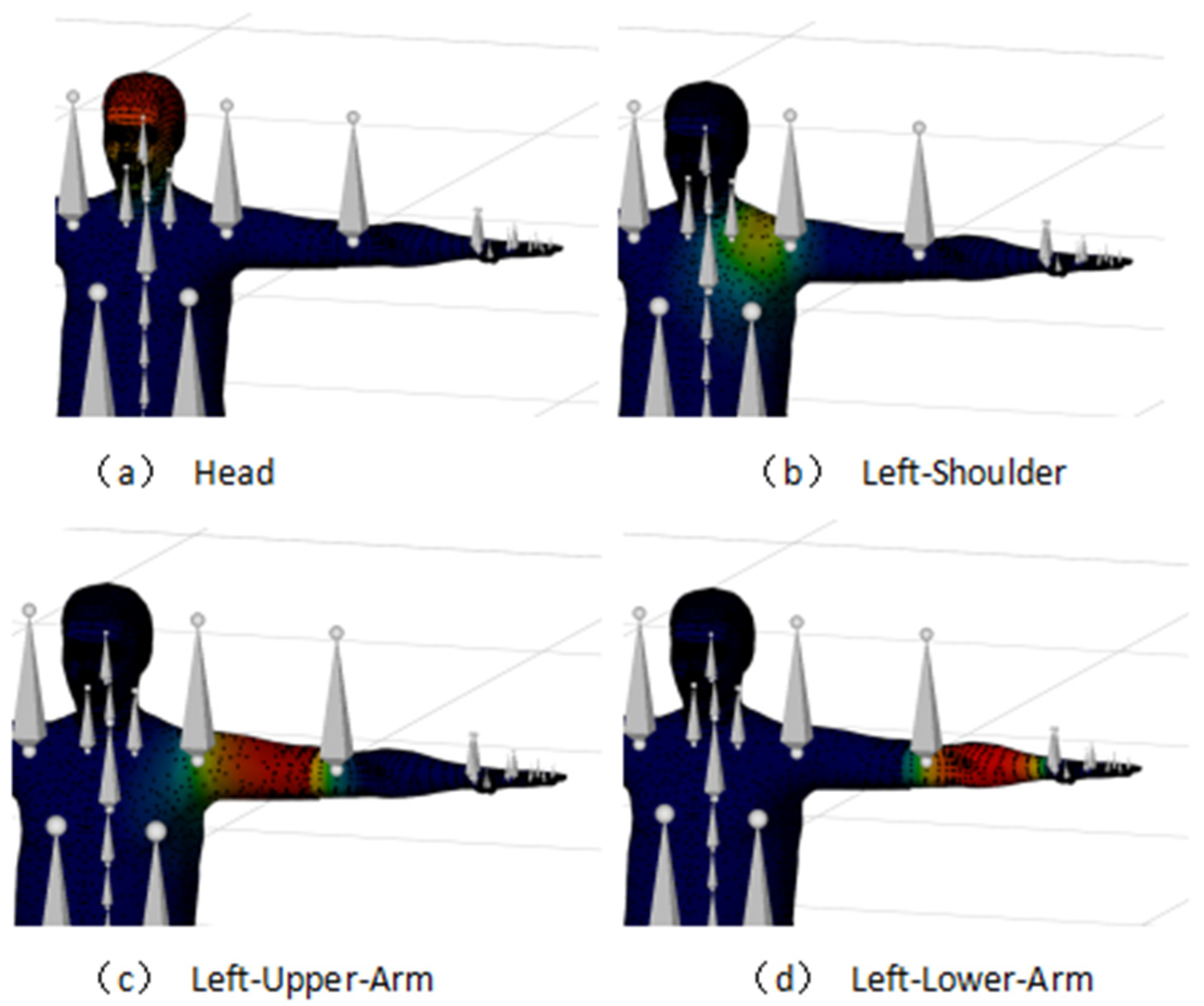

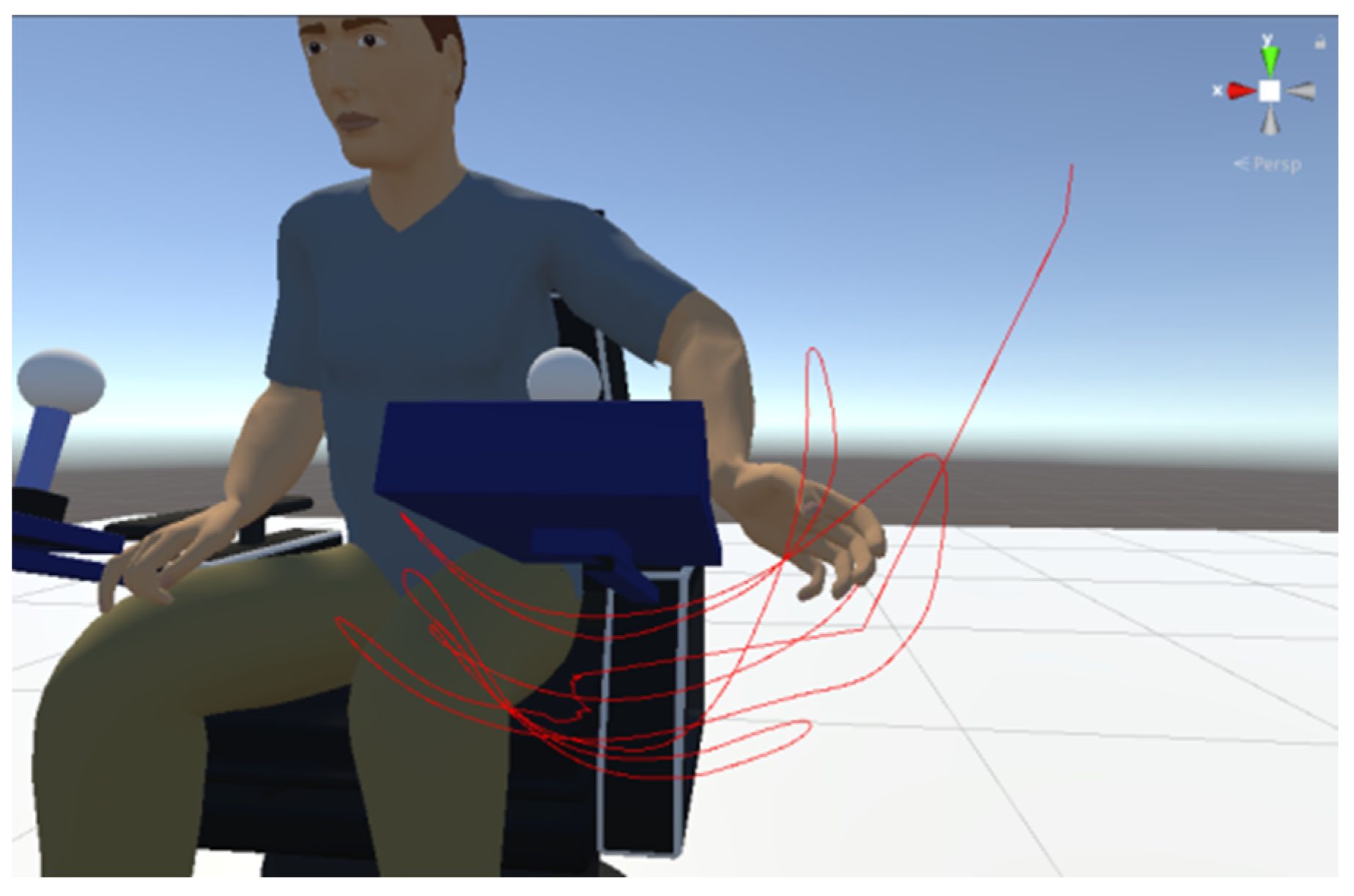

2.2. Motion Capture Device-Driven Generation of a Digital Human

3. Evaluation of Human Comfortability

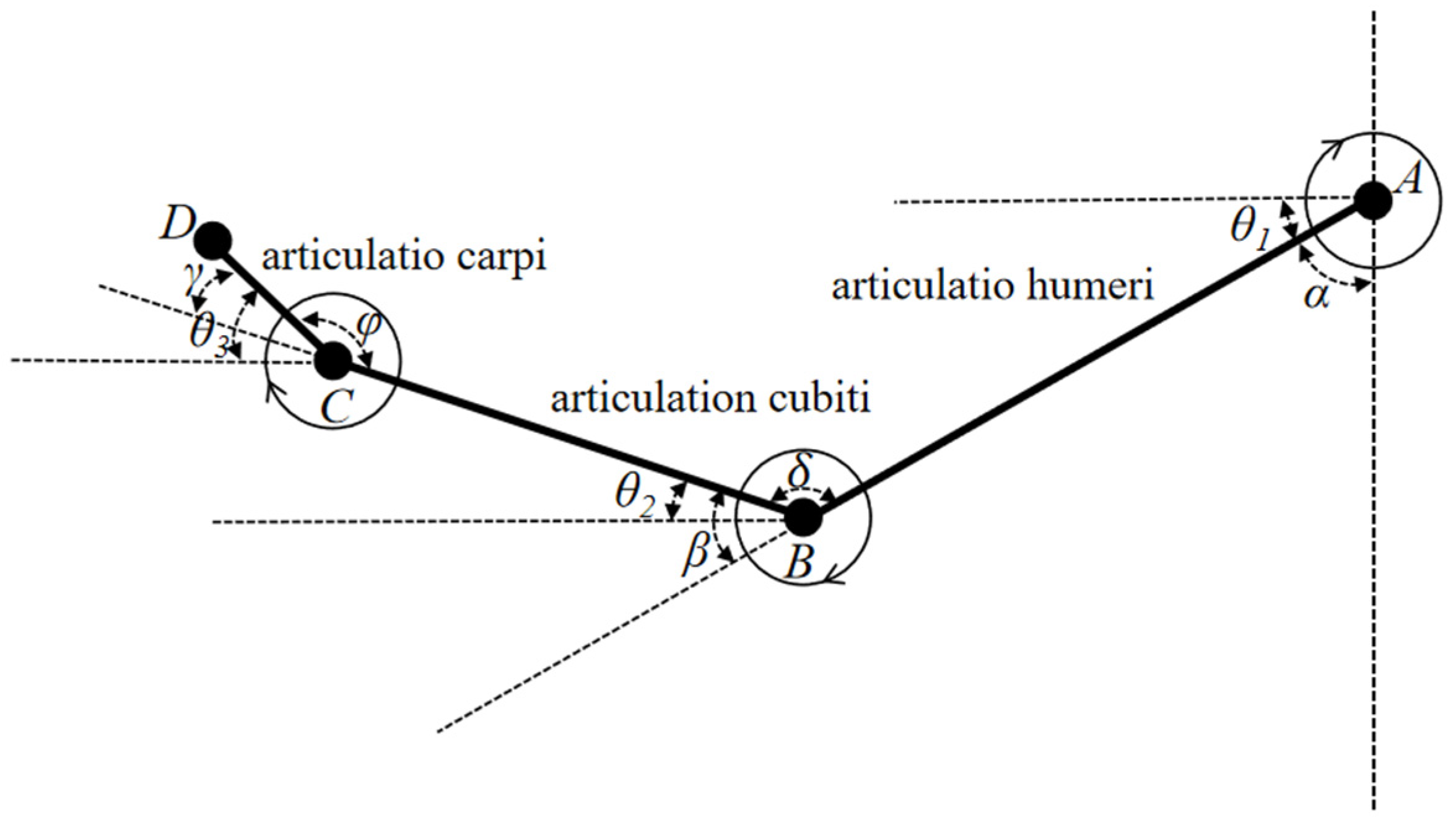

3.1. Calculation of Joint Angle in the Human Upper Limb

3.2. Calculation of Upper Extremity Joint Moments

4. Experiment

4.1. Data Acquisition

4.2. Calculation of Upper Extremity Joint Angles and Torques

4.3. Assessment Criteria

4.4. Analysis of Visible and Reachable Domains

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Anthropometric parameters of the 95th-percentile human (lengths in cm, weight in kg)

| Stature | Weight (kg) | Head Length | Acromion Height | Biacromial Breadth | Arm Length | Elbow Span | Buttock-Popliteal Length | Thigh Clearance |
|---|---|---|---|---|---|---|---|---|
| 177.5 | 75.0 | 19.8 | 147.1 | 39.7 | 79.9 | 135.0 | 49.0 | 16.2 |

Position data of each node at the same time, by random sampling node number

| Movement | Node | Axis | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Operating rocker | Left Hand | x | −0.124 | −0.122 | −0.118 | −0.112 | −0.108 | −0.103 | −0.098 | −0.093 | −0.090 | −0.089 |
| Operating rocker | Left Hand | y | 0.843 | 0.847 | 0.854 | 0.864 | 0.873 | 0.884 | 0.896 | 0.910 | 0.925 | 0.938 |
| Operating rocker | Left Hand | z | 0.194 | 0.192 | 0.191 | 0.189 | 0.188 | 0.186 | 0.187 | 0.189 | 0.193 | 0.199 |
| Operating rocker | Left Lower Arm | x | −0.126 | −0.123 | −0.119 | −0.114 | −0.110 | −0.105 | −0.100 | −0.095 | −0.092 | −0.091 |
| Operating rocker | Left Lower Arm | y | 0.839 | 0.843 | 0.849 | 0.859 | 0.867 | 0.878 | 0.890 | 0.904 | 0.919 | 0.931 |
| Operating rocker | Left Lower Arm | z | 0.188 | 0.186 | 0.184 | 0.183 | 0.182 | 0.181 | 0.181 | 0.184 | 0.189 | 0.194 |
| Operating rocker | Left Upper Arm | x | −0.292 | −0.292 | −0.292 | −0.293 | −0.294 | −0.295 | −0.297 | −0.299 | −0.301 | −0.303 |
| Operating rocker | Left Upper Arm | y | 0.931 | 0.930 | 0.930 | 0.929 | 0.930 | 0.930 | 0.931 | 0.933 | 0.935 | 0.938 |
| Operating rocker | Left Upper Arm | z | 0.011 | 0.010 | 0.009 | 0.009 | 0.010 | 0.013 | 0.018 | 0.026 | 0.035 | 0.044 |
| Operating rocker | Left Shoulder | x | −0.183 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 |
| Operating rocker | Left Shoulder | y | 1.175 | 1.174 | 1.173 | 1.173 | 1.173 | 1.172 | 1.172 | 1.172 | 1.172 | 1.172 |
| Operating rocker | Left Shoulder | z | −0.001 | −0.002 | −0.002 | −0.003 | −0.004 | −0.004 | −0.005 | −0.005 | −0.005 | −0.005 |
| Twist knob | Left Hand | x | −0.092 | −0.092 | −0.091 | −0.091 | −0.091 | −0.092 | −0.092 | −0.093 | −0.093 | −0.093 |
| Twist knob | Left Hand | y | 0.836 | 0.836 | 0.837 | 0.837 | 0.838 | 0.836 | 0.839 | 0.839 | 0.839 | 0.840 |
| Twist knob | Left Hand | z | 0.034 | 0.034 | 0.038 | 0.039 | 0.041 | 0.042 | 0.043 | 0.044 | 0.045 | 0.045 |
| Twist knob | Left Lower Arm | x | −0.092 | −0.092 | −0.092 | −0.092 | −0.092 | −0.092 | −0.092 | −0.092 | −0.092 | −0.093 |
| Twist knob | Left Lower Arm | y | 0.831 | 0.831 | 0.831 | 0.832 | 0.832 | 0.833 | 0.833 | 0.833 | 0.834 | 0.834 |
| Twist knob | Left Lower Arm | z | 0.028 | 0.028 | 0.032 | 0.033 | 0.035 | 0.036 | 0.037 | 0.038 | 0.039 | 0.040 |
| Twist knob | Left Upper Arm | x | −0.231 | −0.231 | −0.230 | −0.230 | −0.229 | −0.229 | −0.228 | −0.228 | −0.228 | −0.229 |
| Twist knob | Left Upper Arm | y | 0.929 | 0.929 | 0.928 | 0.927 | 0.927 | 0.927 | 0.927 | 0.927 | 0.926 | 0.926 |
| Twist knob | Left Upper Arm | z | −0.166 | −0.166 | −0.164 | −0.163 | −0.163 | −0.162 | −0.162 | −0.161 | −0.161 | −0.160 |
| Twist knob | Left Shoulder | x | −0.136 | −0.136 | −0.136 | −0.135 | −0.135 | −0.135 | −0.135 | −0.134 | −0.134 | −0.134 |
| Twist knob | Left Shoulder | y | 1.166 | 1.166 | 1.166 | 1.166 | 1.166 | 1.165 | 1.165 | 1.165 | 1.165 | 1.165 |
| Twist knob | Left Shoulder | z | −0.090 | −0.090 | −0.091 | −0.091 | −0.090 | −0.090 | −0.090 | −0.090 | −0.090 | −0.090 |
| Push button | Left Hand | x | −0.359 | −0.360 | −0.360 | −0.359 | −0.359 | −0.358 | −0.356 | −0.354 | −0.352 | −0.349 |
| Push button | Left Hand | y | 0.868 | 0.868 | 0.869 | 0.869 | 0.869 | 0.869 | 0.869 | 0.870 | 0.870 | 0.871 |
| Push button | Left Hand | z | 0.198 | 0.201 | 0.202 | 0.204 | 0.204 | 0.204 | 0.205 | 0.205 | 0.205 | 0.206 |
| Push button | Left Lower Arm | x | −0.357 | −0.357 | −0.358 | −0.357 | −0.356 | −0.355 | −0.353 | −0.351 | −0.349 | −0.347 |
| Push button | Left Lower Arm | y | 0.863 | 0.863 | 0.864 | 0.864 | 0.864 | 0.865 | 0.865 | 0.866 | 0.866 | 0.866 |
| Push button | Left Lower Arm | z | 0.192 | 0.194 | 0.196 | 0.197 | 0.198 | 0.198 | 0.198 | 0.198 | 0.198 | 0.199 |
| Push button | Left Upper Arm | x | −0.296 | −0.297 | −0.297 | −0.298 | −0.298 | −0.298 | −0.299 | −0.299 | −0.299 | −0.301 |
| Push button | Left Upper Arm | y | 0.974 | 0.975 | 0.976 | 0.976 | 0.977 | 0.977 | 0.976 | 0.975 | 0.975 | 0.975 |
| Push button | Left Upper Arm | z | 0.011 | 0.010 | 0.009 | 0.009 | 0.010 | 0.013 | 0.018 | 0.026 | 0.035 | 0.044 |
| Push button | Left Shoulder | x | −0.128 | −0.128 | −0.128 | −0.129 | −0.129 | −0.129 | −0.130 | −0.130 | −0.130 | −0.131 |
| Push button | Left Shoulder | y | 1.171 | 1.171 | 1.172 | 1.173 | 1.173 | 1.172 | 1.172 | 1.172 | 1.172 | 1.172 |
| Push button | Left Shoulder | z | −0.097 | −0.097 | −0.097 | −0.096 | −0.096 | −0.095 | −0.095 | −0.096 | −0.094 | −0.094 |
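
As a rough illustration of how node positions like those tabulated above can be turned into an angle, the sketch below computes the included angle at a middle node from three 3D points using the dot product. It is not the paper's own procedure. The function name `joint_angle_deg` is hypothetical, and the choice of the Left Shoulder, Left Upper Arm and Left Lower Arm nodes as the three points is an assumption made only for this example. The mapping from capture nodes to anatomical joint centers is not stated in this excerpt.

```python
import math


def joint_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between joint->proximal and joint->distal."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("coincident points: a segment has zero length")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


# Sample 1 of the "Operating rocker" rows (x, y, z), copied from the table.
# Assumption: these three nodes are treated as consecutive points along the arm.
left_shoulder = (-0.183, 1.175, -0.001)
left_upper_arm = (-0.292, 0.931, 0.011)
left_lower_arm = (-0.126, 0.839, 0.188)

angle = joint_angle_deg(left_shoulder, left_upper_arm, left_lower_arm)
print(f"included angle at the Left Upper Arm node: {angle:.1f} deg")
```

Applying the same function to each of the ten sampled columns would give an angle series for one movement. Whether that series matches the reported joint angles depends on the angle convention the authors used, which this sketch does not attempt to reproduce.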

Experimental data statistics

| Upper Limb Movement | Joint Parameter | Average Value | Minimum Value | Maximum Value |
|---|---|---|---|---|
| Operating rocker | Shoulder angle (°) | 66.04 | 48.01 | 98.91 |
| Operating rocker | Elbow angle (°) | 86.32 | 67.98 | 113.62 |
| Operating rocker | Wrist angle (°) | 31.02 | 22.99 | 43.47 |
| Operating rocker | Shoulder torques (N·cm) | 253.39 | 0 | 857.79 |
| Operating rocker | Elbow torques (N·cm) | 119.66 | 0 | 483.62 |
| Twist knob | Shoulder angle (°) | 95.46 | 65.79 | 130.06 |
| Twist knob | Elbow angle (°) | 108.65 | 82.81 | 133.29 |
| Twist knob | Wrist angle (°) | 78.82 | 58.12 | 104.85 |
| Twist knob | Shoulder torques (N·cm) | 296.78 | 0 | 1108.45 |
| Twist knob | Elbow torques (N·cm) | 189.35 | 0 | 746.83 |
| Push button | Shoulder angle (°) | 66.04 | 48.01 | 98.91 |
| Push button | Elbow angle (°) | 82.61 | 50.83 | 117.43 |
| Push button | Wrist angle (°) | 36.30 | 14.32 | 63.18 |
| Push button | Shoulder torques (N·cm) | 213.80 | 0 | 738.64 |
| Push button | Elbow torques (N·cm) | 101.33 | 0 | 250.21 |

| Joint | Mode of Motion | Limiting Angle | Comfort Zone |
|---|---|---|---|
| Shoulder joint | Forward and backward swing | 140°–40° | 40°–90° |
| Elbow joint | Flexion and extension | 140°–40° | 80°–110° |
| Wrist joint | Flexion and extension | 80°–70° | 10°–30° |
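
To make the comfort-zone criterion concrete, the sketch below checks the average joint angles from the experimental statistics table against the comfort zones listed above. It is a plain range check written for illustration, not the comfort index used in the paper. It assumes the angles in both tables follow the same convention, and the names `COMFORT_ZONES`, `AVERAGE_ANGLES`, `in_comfort_zone` and `outside_comfort_zone` are hypothetical.

```python
# Comfort zones in degrees (lower bound, upper bound), copied from the table above.
COMFORT_ZONES = {
    "shoulder": (40.0, 90.0),
    "elbow": (80.0, 110.0),
    "wrist": (10.0, 30.0),
}

# Average joint angles in degrees, copied from the experimental statistics table.
AVERAGE_ANGLES = {
    "Operating rocker": {"shoulder": 66.04, "elbow": 86.32, "wrist": 31.02},
    "Twist knob": {"shoulder": 95.46, "elbow": 108.65, "wrist": 78.82},
    "Push button": {"shoulder": 66.04, "elbow": 82.61, "wrist": 36.30},
}


def in_comfort_zone(joint, angle_deg):
    """Return True if the angle lies inside the joint's comfort zone (inclusive)."""
    low, high = COMFORT_ZONES[joint]
    return low <= angle_deg <= high


def outside_comfort_zone(angles_by_joint):
    """List the joints whose angle falls outside the comfort zone."""
    return [
        joint
        for joint, angle in angles_by_joint.items()
        if not in_comfort_zone(joint, angle)
    ]


for movement, angles in AVERAGE_ANGLES.items():
    flagged = outside_comfort_zone(angles)
    print(f"{movement}: outside comfort zone -> {flagged or 'none'}")
```

A check on averages hides how long a joint spends outside its zone during a movement. Running the same test on every sampled frame would give a fuller picture, provided the per-frame angles are available.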

| Joint / Upper Limb Movement | Operating Rocker | Twist Knob | Push Button |
|---|---|---|---|
| Shoulder joint | 0.782 | 0.758 | 0.800 |
| Elbow joint | 0.833 | 0.797 | 0.815 |

| Comfort Level | I | II | III | IV | V |
|---|---|---|---|---|---|
| Comfort index | | | | | |
| Comfort description | Very uncomfortable | Not comfortable | Generally comfortable | More comfortable | Very comfortable |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Q.; Lu, T.; Tao, M.; Cheng, S.; Wen, G. Inertial Motion Capture-Driven Digital Human for Ergonomic Validation: A Case Study of Core Drilling. Sensors 2024, 24, 5962. https://doi.org/10.3390/s24185962
