Integrated Approach for Human Wellbeing and Environmental Assessment Based on a Wearable IoT System: A Pilot Case Study in Singapore

Abstract

1. Introduction

2. Materials and Methods

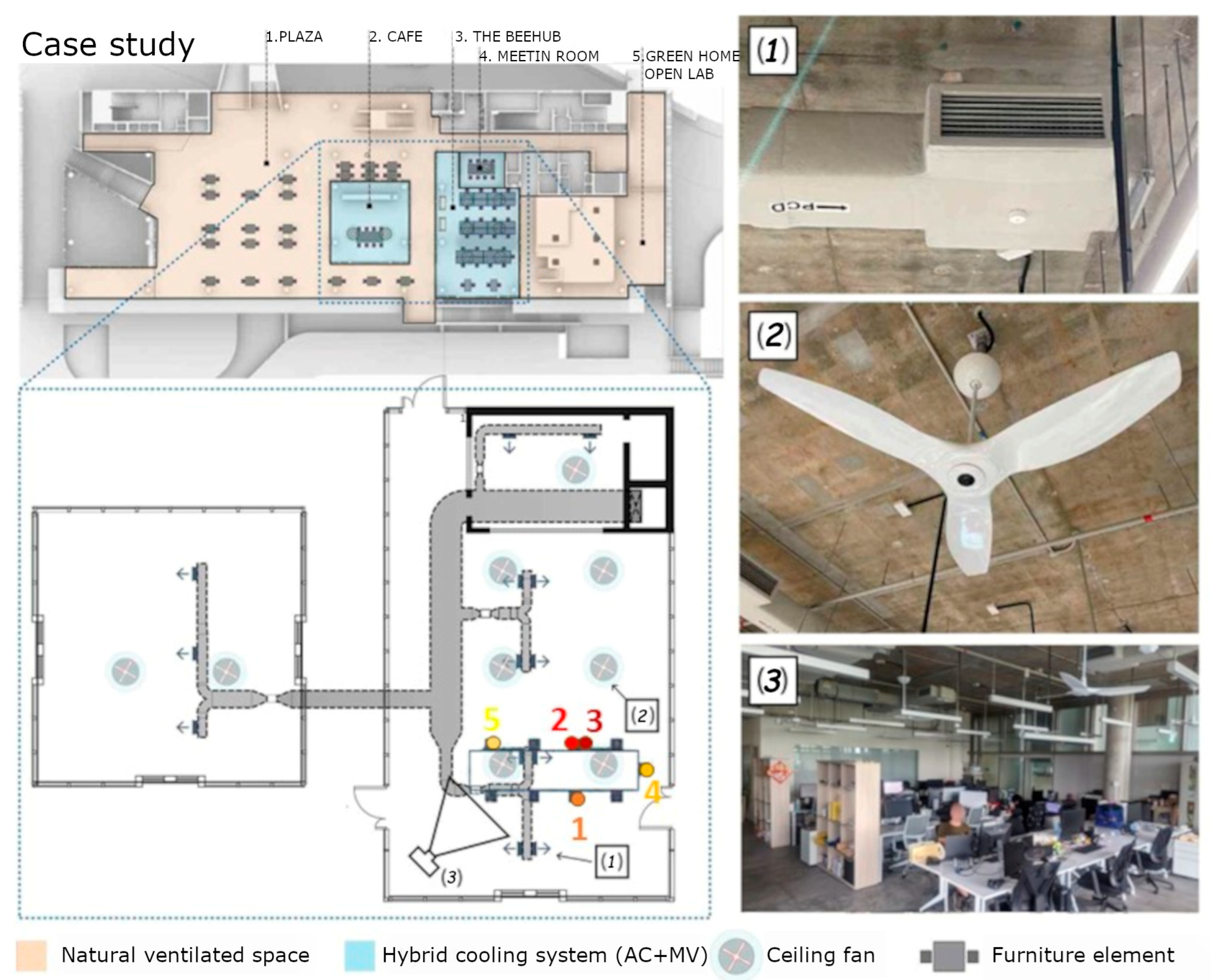

2.1. Case Study and Testers

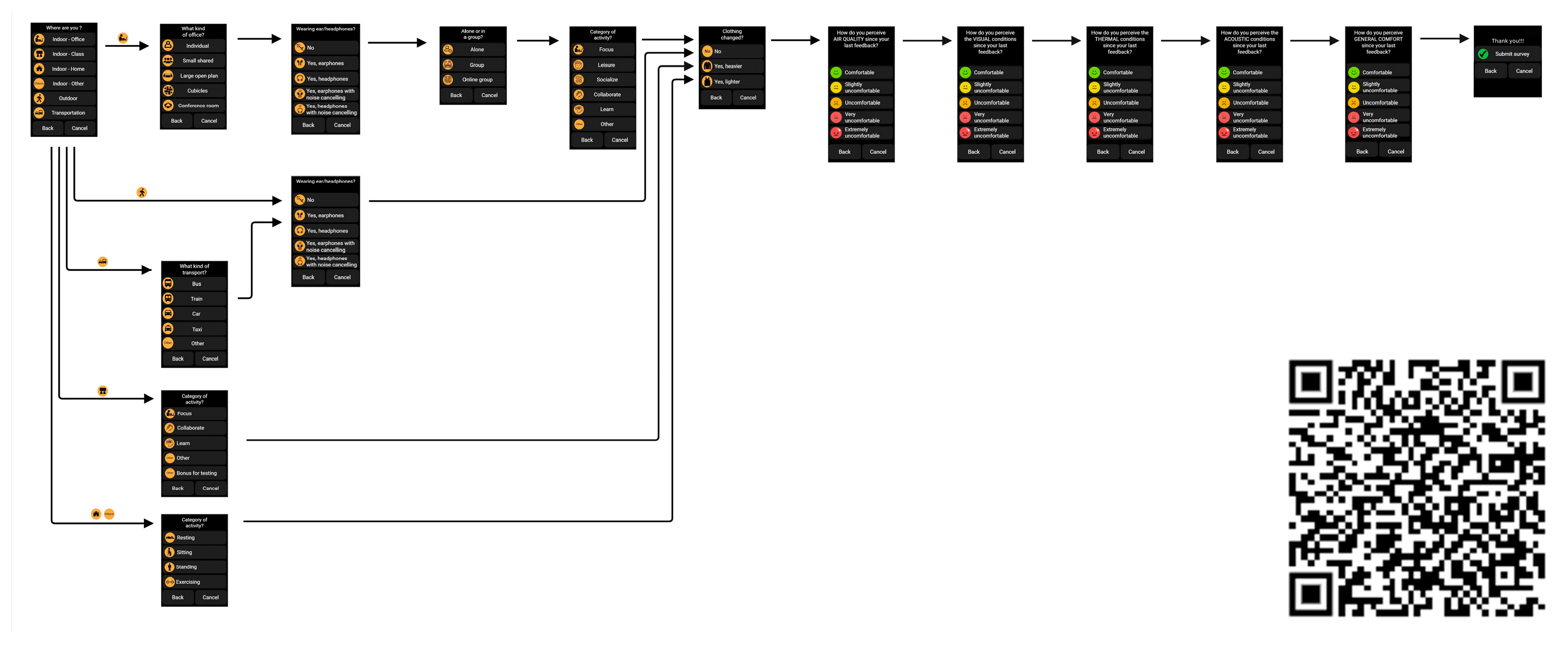

2.2. Smartwatch with Cozie App

2.3. Physiological Monitoring Device

- a photoplethysmography (PPG) sensor for the detection of the heart rate (HR);

- an electrodermal activity (EDA) sensor;

- an infrared thermopile;

- a 3-axis accelerometer.

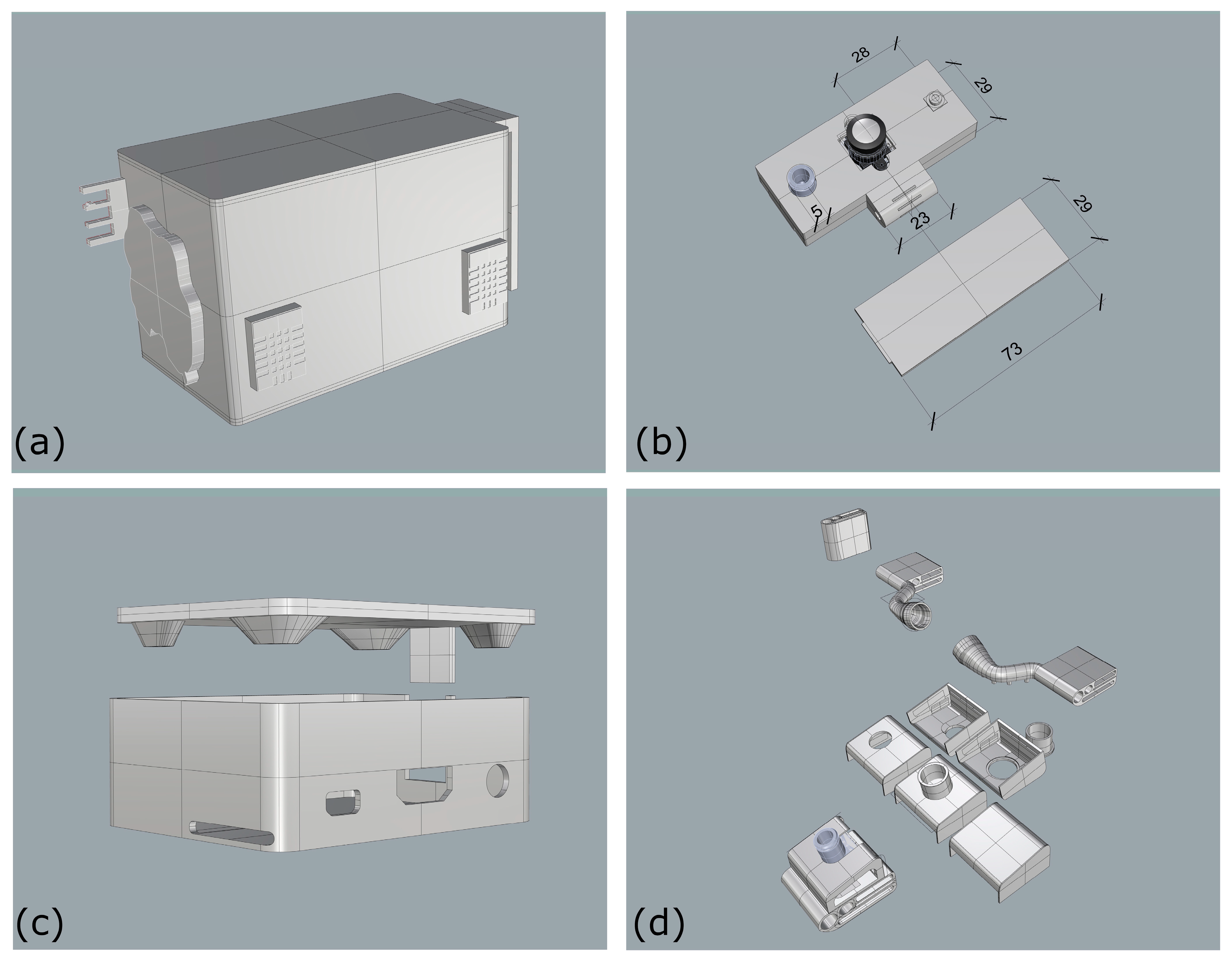

2.4. WEMoS Prototype

2.5. Data Preparation

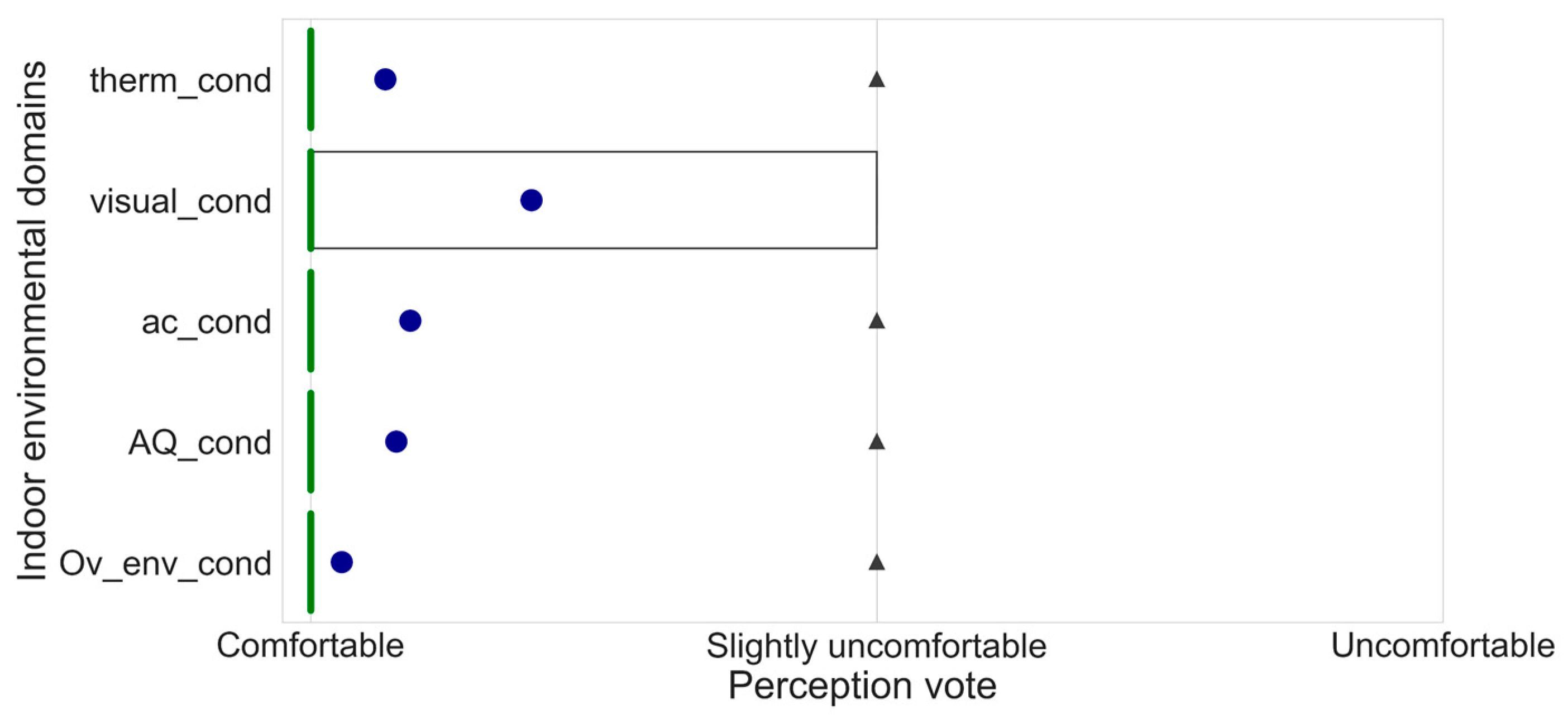

3. Results

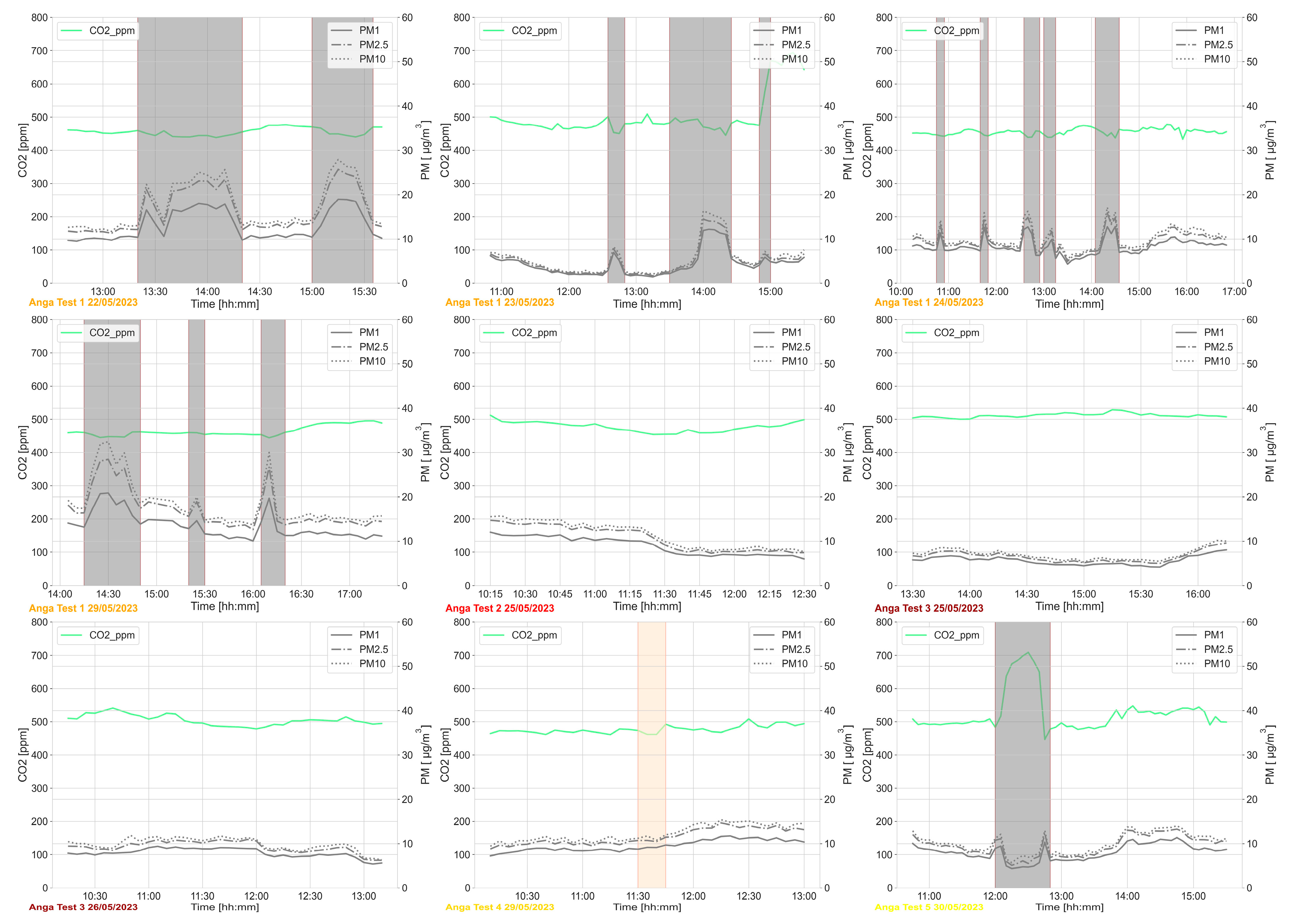

3.1. Descriptive Analysis on the Monitored Environmental Variables

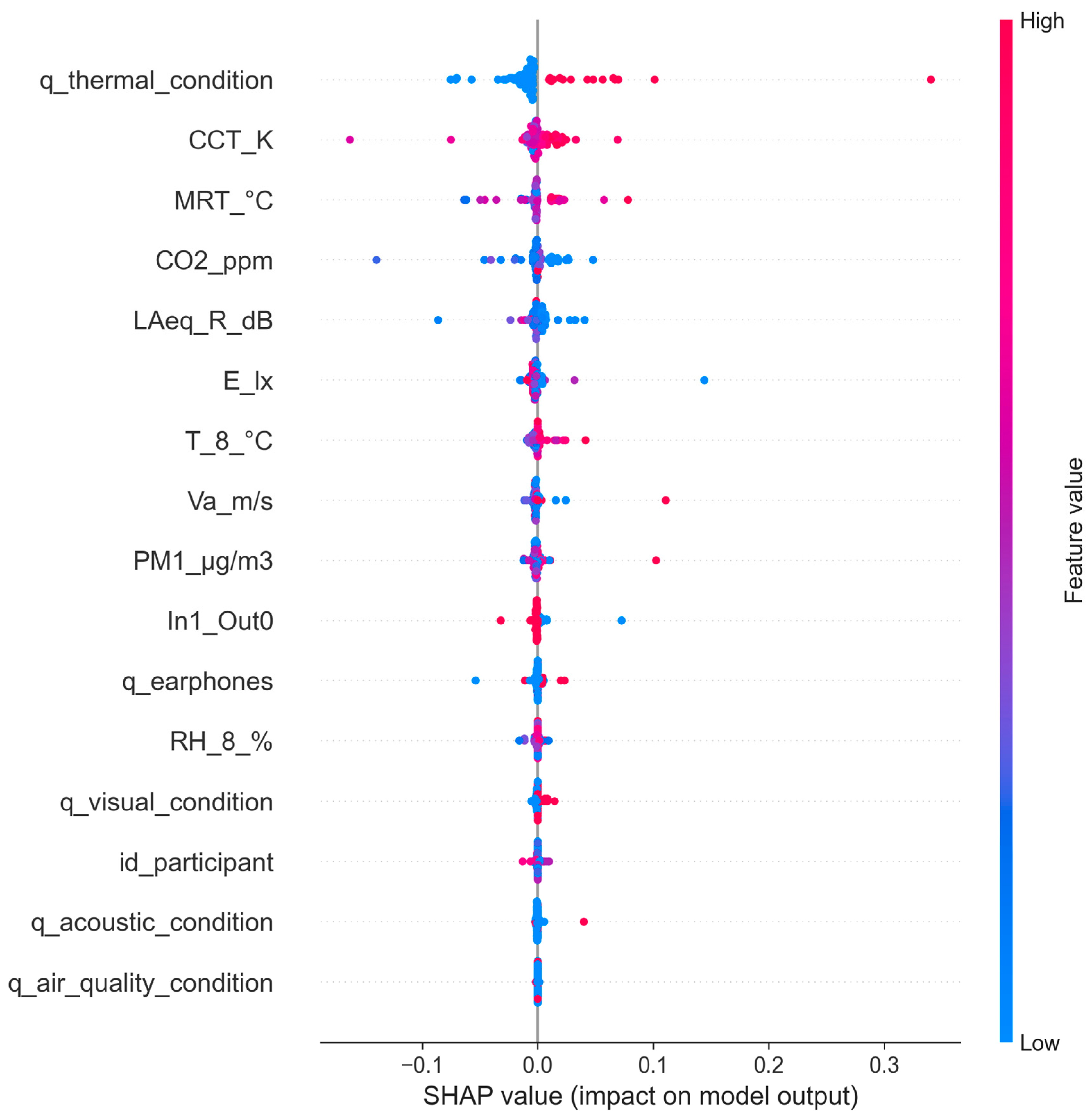

3.2. Predictive Data Analysis

- A Boolean value defining the position of the user (‘In1_Out0’);

- The CO2 concentration in ppm (‘CO2_ppm’);

- The PM1 concentration in μg/m3 (‘PM1’);

- The relative humidity in % at 8 cm from the body (‘RH_8_%’);

- The air temperature in °C at 8 cm from the human body (‘T_8_°C’);

- The air velocity in m/s (‘Va_m/s’);

- The Mean Radiant Temperature in °C derived as an average value from 4 IR modules (‘MRT_°C’);

- The illuminance in lx (‘E_lx’);

- The Correlated Colour Temperature in K (‘CCT’);

- The equivalent continuous sound level (A-weighted) of R channel in dB (‘LAeq_R’);

- Heart rate variability in ms (‘HRV’);

- Electrodermal Activity in μSiemens (‘EDA’);

- Blood Volume Pulse in μV (‘BVP’);

- Skin temperature at wrist level in °C (‘TEMP’);

- Data of x acceleration in the range [−2 g, 2 g] (‘ACC_X’);

- Data of y acceleration in the range [−2 g, 2 g] (‘ACC_Y’);

- Data of z acceleration in the range [−2 g, 2 g] (‘ACC_Z’);

- Data of overall acceleration in the range [−2 g, 2 g] (‘ACC_Overall’);

- Identification string assigned to each participant (‘id_participant’);

- Answer related to the question “wearing ear/headphones?” (‘q_earphones’);

- Answer related to the question “how do you perceive the VISUAL conditions since your last feedback?” (‘q_visual_condition’);

- Answer related to the question “how do you perceive the THERMAL conditions since your last feedback?” (‘q_thermal_condition’);

- Answer related to the question “how do you perceive the AIR QUALITY since your last feedback?” (‘q_air_quality_condition’);

- Answer related to the question “how do you perceive the ACOUSTIC conditions since your last feedback?” (‘q_acoustic_condition’).

4. Discussion

4.1. Limitations of the Proposed Study

4.2. Further Implications Regarding the Application of the Wearable-Based Framework in the Real World

- provide continuous, individualized monitoring of physiological, environmental, and subjective comfort parameters, enabling personalized interventions in healthcare, workplace ergonomics, and daily well-being by allowing real-time adjustments based on personal comfort or health metrics.

- enable a more holistic understanding of a person’s comfort or health status, thanks to the possibility of using multiple data sources (environmental, physiological, and subjective data). This can be used in various areas such as urban planning, building design, and occupational health, where data-driven insights can help optimize the environment for human comfort and performance.

- provide a wealth of data that, if used on a larger scale, can help researchers and policymakers better understand population trends in comfort and health. This could serve as a basis for public health strategies, workplace regulations, and even product design to improve human wellbeing in different areas.

5. Conclusions

- The DIY approach enables the construction of a wearable device for the descriptive assessment of environmental conditions.

- It is applicable in real-world contexts for longer test periods than those carried out in the laboratory.

- The environmental data collected with the WEMoS can be merged with physiological information and user feedback, helping to identify key features that are important for defining the overall perception of comfort.

- The potential of this wearable-based framework in the real world is enormous, ranging from improving personal health and comfort to influencing environmental and health policy on a large scale.

- Each element of the wearable system for monitoring environmental variables should undergo instrumental verification before it can be used.

- An initial training phase related to the use of the devices is required.

- The architecture of the wearable-based framework as used in the test performed in Singapore could be more integrated, so that all information could converge to a single database.

- Predictive models offer limited interpretability of results, so they should always be accompanied by a descriptive analysis of the data monitored during the test.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| User | Gender | Day(s) of Test (m = Morning, a = Afternoon) | Empatica E4 Wristband Data |
|---|---|---|---|
| Anga Test 1 | M | May 22 (m and a); May 23 (m and a); May 24 (m and a); May 29 (a) | No; Yes; Yes; Yes |
| Anga Test 2 | M | May 25 (m) | No |
| Anga Test 3 | M | May 25 (a); May 26 (m) | No; No |
| Anga Test 4 | F | May 29 (m) | Yes |
| Anga Test 5 | F | May 30 (m and a) | Yes |

| Sensor | Typical Range | Sampling Frequency |
|---|---|---|
| PPG sensor | - | 64 Hz |
| EDA sensor | 0.01 to 100 µS | 4 Hz |
| Skin temperature sensor | −40 to +85 °C | 4 Hz |
| 3-axis accelerometer | ±2 g | 32 Hz |
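
Because the four sensors report at different rates, their streams need a shared time base before they can be joined with the other data. The sketch below shows one common option, block averaging over fixed, non-overlapping windows. The aggregation actually used in the study is not stated in this section, so the window length, the helper names, and the choice of the mean are all assumptions made for illustration.

```python
from statistics import fmean

# Sampling frequencies from the table above, in Hz.
SAMPLING_HZ = {"PPG": 64, "EDA": 4, "TEMP": 4, "ACC": 32}


def samples_per_window(rate_hz, window_s):
    """Number of raw samples that fall into one window of window_s seconds."""
    return int(rate_hz * window_s)


def block_average(samples, rate_hz, window_s):
    """Average consecutive, non-overlapping windows (assumed method).

    A trailing partial window is dropped rather than averaged.
    """
    n = samples_per_window(rate_hz, window_s)
    return [fmean(samples[i:i + n]) for i in range(0, len(samples) - n + 1, n)]


if __name__ == "__main__":
    # Raw samples per one-minute window for each sensor.
    for sensor, rate in SAMPLING_HZ.items():
        print(sensor, samples_per_window(rate, 60))
```

With a one-minute window, each PPG value would summarize 3840 raw samples and each EDA value 240, which is why the choice of window and statistic should be reported alongside any derived feature.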

| # | Feature | List1 (267 Available Data for Each Feature) | List2 (403 Available Data for Each Feature) | List3 (403 Available Data for Each Feature) |
|---|---|---|---|---|
| 1 | In1_Out0 | • | • | |
| 2 | CO2_ppm | • | • | • |
| 3 | PM1 | • | • | |
| 8 | RH_8_% | • | • | |
| 9 | T_8_°C | • | • | |
| 10 | Va_m/s | • | • | |
| 15 | MRT [°C] | • | • | • |
| 39 | E_lx | • | • | |
| 43 | CCT | • | • | • |
| 47 | LAeq_R | • | • | • |
| 59 | HRV | • | | |
| 60 | EDA | • | | |
| 61 | BVP | • | | |
| 62 | TEMP | • | | |
| 63 | ACC_X | • | | |
| 64 | ACC_Y | • | | |
| 65 | ACC_Z | • | | |
| 66 | ACC_Overall | • | | |
| 67 | id_participant | • | • | |
| 73 | q_earphones | • | • | |
| 74 | q_visual_condition | • | • | |
| 75 | q_thermal_condition | • | • | • |
| 76 | q_air_quality_condition | • | • | |
| 77 | q_acoustic_condition | • | • | |
| 78 | q_general_comfort_condition | (target) | (target) | (target) |
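
The three feature lists in the table can be written as plain Python lists, which makes their nesting explicit: List2 is List1 without the wristband signals, and List3 is a five-feature subset of List2. This is a minimal sketch. The group names (`ENVIRONMENTAL`, `PHYSIOLOGICAL`, `FEEDBACK`) and the helper `select_features` are illustrative and not taken from the study, and the column name `MRT_°C` follows the feature list in Section 3.2, whereas the table labels the same feature "MRT [°C]".

```python
# Feature names as listed in Section 3.2; the grouping is an assumption.
ENVIRONMENTAL = [
    "In1_Out0", "CO2_ppm", "PM1", "RH_8_%", "T_8_°C",
    "Va_m/s", "MRT_°C", "E_lx", "CCT", "LAeq_R",
]
PHYSIOLOGICAL = [
    "HRV", "EDA", "BVP", "TEMP",
    "ACC_X", "ACC_Y", "ACC_Z", "ACC_Overall",
]
FEEDBACK = [
    "q_earphones", "q_visual_condition", "q_thermal_condition",
    "q_air_quality_condition", "q_acoustic_condition",
]
TARGET = "q_general_comfort_condition"

# List1: every feature (rows 1 to 77 of the table).
LIST1 = ENVIRONMENTAL + PHYSIOLOGICAL + ["id_participant"] + FEEDBACK
# List2: List1 without the wristband-derived signals.
LIST2 = [name for name in LIST1 if name not in PHYSIOLOGICAL]
# List3: the reduced set marked in the last column of the table.
LIST3 = ["CO2_ppm", "MRT_°C", "CCT", "LAeq_R", "q_thermal_condition"]


def select_features(row, features):
    """Split one record (a dict) into the chosen inputs and the target."""
    return {name: row[name] for name in features}, row[TARGET]


if __name__ == "__main__":
    for label, names in (("List1", LIST1), ("List2", LIST2), ("List3", LIST3)):
        print(label, len(names))
```

The table reports fewer available samples for List1 (267) than for List2 and List3 (403), and List1 is the only list that includes the wristband signals.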

| Algorithm | Hyperparameter | Range | Selected |
|---|---|---|---|
| RF | n_estimators | range(1, 22, 2) | 21 |
| GBC | max_depth | range(5, 16, 2) | 7 |
| | min_samples_split | range(200, 1001, 200) | 220 |
| ETC | max_depth | range(1, 50, 4) | 29 |
| | min_samples_leaf | [i/10.0 for i in range(1, 6)] | 0.1 |
| | max_features | [i/10.0 for i in range(1, 11)] | 0.6 |
| LSVC | penalty | [‘l1’, ‘l2’] | l2 |
| | C | [100, 10, 1.0, 0.1, 0.01] | 100 |
| KNN | leaf_size | list(range(1, 50)) | 1 |
| | n_neighbors | list(range(1, 30)) | 3 |
| | p | [1, 2] | 1 |
| SVC | kernel | [‘poly’, ‘rbf’, ‘sigmoid’] | rbf |
| | C | [50, 10, 1.0, 0.1, 0.01] | 50 |
| | gamma | [‘auto’, ‘scale’, 1, 0.1, 0.01] | 0.01 |
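
To make the size of each search space concrete, the ranges in the table can be expanded into explicit lists and enumerated. The sketch below uses only the standard library. The dictionary layout (parameter name mapped to a list of candidate values) is the form that scikit-learn's `GridSearchCV` accepts as `param_grid`, but no scikit-learn call is made here, and the helper names `candidates` and `grid_size` are illustrative.

```python
from itertools import product

# Search spaces transcribed from the table above, one grid per algorithm.
PARAM_GRIDS = {
    "RF": {"n_estimators": list(range(1, 22, 2))},
    "GBC": {
        "max_depth": list(range(5, 16, 2)),
        "min_samples_split": list(range(200, 1001, 200)),
    },
    "ETC": {
        "max_depth": list(range(1, 50, 4)),
        "min_samples_leaf": [i / 10.0 for i in range(1, 6)],
        "max_features": [i / 10.0 for i in range(1, 11)],
    },
    "LSVC": {"penalty": ["l1", "l2"], "C": [100, 10, 1.0, 0.1, 0.01]},
    "KNN": {
        "leaf_size": list(range(1, 50)),
        "n_neighbors": list(range(1, 30)),
        "p": [1, 2],
    },
    "SVC": {
        "kernel": ["poly", "rbf", "sigmoid"],
        "C": [50, 10, 1.0, 0.1, 0.01],
        "gamma": ["auto", "scale", 1, 0.1, 0.01],
    },
}


def candidates(grid):
    """Yield every hyperparameter combination of one grid as a dict."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


def grid_size(grid):
    """Number of combinations an exhaustive search would evaluate."""
    size = 1
    for values in grid.values():
        size *= len(values)
    return size


if __name__ == "__main__":
    for algorithm, grid in PARAM_GRIDS.items():
        print(algorithm, grid_size(grid))
```

The KNN grid is by far the largest, with 49 × 29 × 2 = 2842 combinations, while the RF grid has only 11. Expanding the ranges also shows that the selected `min_samples_split` of 220 reported for GBC is not one of the values produced by `range(200, 1001, 200)`, so the table entry may need checking against the original run.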


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Salamone, F.; Sibilio, S.; Masullo, M. Integrated Approach for Human Wellbeing and Environmental Assessment Based on a Wearable IoT System: A Pilot Case Study in Singapore. Sensors 2024, 24, 6126. https://doi.org/10.3390/s24186126
