Abstract

Anomaly detection in industrial control system (ICS) data is one of the key technologies for ensuring the security monitoring of ICSs. ICS data are characterized as complex, multi-dimensional, and long-sequence time-series data that embody ICS business logic. Due to its complex and varying periodic characteristics, as well as the presence of long-distance and misaligned temporal associations among features, current anomaly detection methods in ICS are insufficient for feature extraction. This paper proposes an anomaly detection method named TFANet, based on time–frequency fusion feature attention encoding. Considering that periodic variations are more concentrated in the frequency domain, this method first transforms the time-domain data into the frequency domain, obtaining both amplitude and phase data. Then, these data, together with the original time-series data, are used to extract features from two perspectives: long-term temporal changes and long-distance associations. Finally, the six features learned from both the time and frequency domains are fused, and the feature weights are calculated using an attention mechanism to complete the anomaly classification. In multi-classification tasks on three ICS datasets, the proposed method outperforms three popular time-series models—iTransformer, Crossformer, and TimesNet—across five metrics: accuracy, precision, recall, F1 score, and AUC-ROC, with average improvements of approximately 19%, 37%, 31%, 35%, and 22%, respectively.

1. Introduction

As industrial control systems are connected to the Internet, the security of industrial control systems faces severe challenges. Traditional industrial control security research focuses on network protection, which is difficult to deal with once the system is invaded. Regardless of the invasion method, many attacks will cause system failures by tampering with the key data of the industrial control system. Therefore, the anomaly detection of industrial control system operation data is the last line of defense for industrial control security []. The anomaly detection of industrial control system operation data refers to judging the security status of the system by calculating whether the operation data of the industrial control system conforms to the normal business logic, so as to promptly alarm dangerous conditions.

ICS data are complex, high-dimensional, long-sequence time-series data that encapsulate the business logic of system operation. ICS data exhibit two distinct characteristics: (1) inconsistent feature periodicity—different features of ICS data are derived from various sensors, each with a unique data variation cycle; and (2) long-distance and misaligned temporal associations—for instance, a change in a flow sensor may lead to a subsequent change in a level sensor. The long-term temporal variation of features is referred to as one perspective, while the associations between features represent another perspective. Due to these characteristics, directly applying popular anomaly detection algorithms in the time-series domain, such as iTransformer, Crossformer, and TimesNet, has not achieved satisfactory results. This is because these methods only extract features based on temporal dependencies among features or use attention mechanisms in isolation, resulting in incomplete feature information extraction from ICS data.

In order to solve the problem of data feature extraction difficulties in industrial control system anomaly detection, this paper proposes a method for industrial control system operation data anomaly detection based on time–frequency fusion feature attention encoding, TFANet. First, the industrial control data are Fourier-transformed to obtain the frequency domain amplitude and phase data of the corresponding data to capture periodic features, thereby improving the problem of feature extraction difficulties caused by period inconsistency; in order to fully learn the long-term changes of features and the long-distance correlation between features, the original time domain data and frequency domain data are extracted separately to obtain six feature maps; in order to mine the deep information of these feature maps, the time domain and frequency domain information are fused, and these features are encoded based on self-attention; finally, a classification module is constructed.

At present, the anomaly detection methods for industrial control system operation data are developed around three types of methods, namely the representation learning of time series data, the feature representation of attention mechanism modeling, and graph neural networks.

Representation learning for time series can be divided into two categories: optimizing the network structure for extracting time series features and optimizing the network loss function. Among the methods for optimizing the feature extraction structure, Sanchez et al. [] proposed a network structure based on the fusion of variational autoencoders and generative adversarial networks to decouple the temporal relationship of satellite images. The core point is to separate the public information and unique information in the satellite image time series data. Chen et al. [] proposed a multi-task time series data representation learning method MTRL, which combines a deep wavelet decomposition network and a 1D-CNN residual network to extract multi-scale subsequence features and time domain features, respectively. Franceshi et al. [] proposed a time series data representation learning framework based on a 1D-CNN encoder network with extended causality to improve the efficiency and scalability of long time series representation learning. Yang and Hong et al. [] proposed a time series unsupervised representation learning framework BTSF, which is achieved by integrating temporal and spectral information and constructing more reasonable sample pairs. In terms of the integration of temporal and spectral information, an aggregation module is designed to interact with temporal information and spectral information across domains, thereby iteratively extracting features at both temporal and spectral scales. Frankin et al. [] improved the network based on the TCN architecture [] and proposed a time embedding module that focuses on capturing time-related features, such as periodicity, trends, and distribution drift. In terms of optimizing network learning methods, Tonekaboni et al. [] proposed a generative method to decouple global and local feature representations in time series data and use counterfactual regularization to minimize the mutual information between global and local variables. There are two studies that use masked autoencoder architectures, namely TiMAE [] and SimMTM []. Among them, the goal of TiMAE is to solve the problem of distribution drift. Its core is to learn strong representations with less inductive bias. In terms of masking strategy, it creates different views for the encoder at each iteration to make better use of the entire time series input. SimMTM reconstructs the original time series from multiple masked time series through sequence similarity learning and point-level aggregation to implicitly reveal the local structure of the manifold. To this end, it introduces a neighborhood aggregation design for reconstruction, aggregating the point-level representations of the time series by the similarity learned in the series representation space. On top of the reconstruction loss, a constraint loss based on the neighborhood assumption of the time series manifold is proposed to guide sequence representation learning. Among the methods for optimizing network loss functions, contrastive learning has been a relatively popular self-supervised learning method in recent years. The following studies have applied this method to design loss functions: Eldele et al. [] proposed self-supervised representation learning framework TS-TCC through time series and context contrast. By designing a cross-view prediction task, the enhanced past latent features are used to predict the future of another enhancement at a certain time step, and a new time contrast module is used to learn time dependencies. This module further learns discriminative representations by maximizing the similarity between different contexts of the same sample and minimizing the similarity between different sample contexts. The TNC method [] applies contrastive learning to focus on complex multivariate non-stationary time series. This method uses debiased contrast loss to ensure that the distribution of signals from the neighborhood and the distribution of non-neighboring signals in the latent space are distinguishable, thereby learning feature representations.

The overall idea of learning methods for time series representation is to model the time-varying features of the original data and to better represent the time-varying features, which effectively improves the representation of feature changes in the depth of time series data feature extraction. However, the value of other features is also ignored. For example, frequency domain data also contain a large number of effective representations of features, and these features can be used to capture the correlation between periodic changes between features.

Related research on feature representation modeling using attention mechanisms includes the iTransformer method proposed by Liu et al. [], which analyzes the reasons why models based on the Transformer architecture perform poorly on prediction tasks. An important point is that models using this architecture will encode multiple features at the same time as time series tokens at the input stage and then use the attention mechanism to capture the correlation between the moments. However, for all feature points at the same time, the physical meanings they represent are different, and there are large differences in scale. Direct encoding may cause the correlation between features to be eliminated, making it impossible for the model to learn a more efficient representation. To this end, this method encodes a single feature into an independent token without changing the Transformer architecture, so the attention mechanism models the correlation between different features, while the temporal correlation is learned by the feedforward network layer. The TimesNet method proposed by Wu et al. [] is inspired by the multi-periodicity of time series data. It uses Fourier transform to split the more complex time series feature changes into changes within a single cycle and between multiple cycles. Then, the original one-dimensional time series data are converted into a two-dimensional tensor represented by multiple cycles. Finally, a two-dimensional convolution kernel is used to model and extract the time series changes. The study pointed out that it is complex and tedious to extract periodic changes from multivariate time series data in the time domain alone, but if we focus on the frequency domain, we can use the Fourier transform to obtain periodic frequency changes between different features. Therefore, we first use the Fourier transform to obtain the more obvious periods in the original data and then use these periods to represent the original time domain data, convert the data from one dimension to two-dimensional tensor representation, and finally use two-dimensional convolution to extract the correlation between features. The Crossformer method proposed by Zhang et al. [] uses the Transformer architecture. Its core point is to model cross-feature dependencies, that is, the correlation between features at different times. The original Transformer’s attention weight distribution focuses on the feature and its adjacent data points and cannot model the attention representation of cross-feature dependencies. On this basis, this method proposes Dimension Segment Wise (DSW) to split the original input into different data segments and then designs a two-stage attention layer to model the attention representation between different data segments. Specifically, the first stage learns the attention representation of different data segments of a single feature to model the dependency relationship across features; the second stage learns the correlation relationship between multiple features. Since the direct application of the attention mechanism will lead to excessive network calculation, this method applies a routing mechanism. First, the input of the first stage is mapped to the routing vector, and then the routing vector distributes the dependency information of the first stage input according to the features so as to construct the correlation relationship across features.

For the method of modeling feature representation using the attention mechanism, industrial control data belong to time series data, which have other characteristics such as high dimensions, long-distance correlation of features, and misaligned time series changes. Although the attention mechanism is good at capturing long-distance correlation, it does not make sufficient use of the temporal position information of the data from the perspective of long time series; from the perspective of long-distance correlation between features, although it can learn the correlation between changes in features, it does not learn enough about the differences in the periodic changes of each feature, resulting in the inability of the anomaly detection model to model a relatively complete feature representation during feature extraction. Therefore, when designing the method, it is necessary to improve the problem of incomplete feature extraction of the anomaly detection model.

The following are some methods for applying graph neural networks: Zhang Zhen et al. [] proposed the GSC-MAD method, which obtains a stable graph structure under normal conditions and uses the high-dimensional time series embedding representation learned from normal data to achieve the single-step prediction of all variables. Then, the variable behavior deviation reflected by the prediction error is combined with the information propagation deviation between variables reflected by GSC to achieve anomaly detection. Chen et al. [] proposed the DyGraphAD method. The core idea of this method is to detect outliers based on the relationship between sequences and the deviation of the change of the time pattern within the sequence from the normal state to the abnormal state. At the same time, the evolutionary properties of the graph are used to assist the graph prediction task and the time series prediction task. Zambon et al. [] proposed a graph data anomaly detection method, GIF. This method considers that some incremental partitions of the data space are easier to isolate anomalies and outliers. Based on the isolation forest method, a new incremental partition of the graph space is introduced, making the isolation forest method applicable to general attribute graphs, that is, graphs where both nodes and edges can be associated with attributes. Zhou LiWen et al. [] proposed the HAD-MDGAT method, which simultaneously learns the dependencies between sensors in the temporal and spatial dimensions. At the same time, to solve the overfitting problem in the autoencoder-based method, a hybrid GAN and MLP anomaly detection method was proposed. Srinivas et al. [] proposed the HgAD method, which is used to learn discrete hypergraph structures. In addition, a hierarchical encoder–decoder architecture is used to model the temporal trends and spatial relationships between interdependent sensors to overcome the challenges of high-order dependencies in sensor networks.

The method using graph neural network has shown excellent results in capturing long-distance dependencies between features. By building a graph structure, the model can clearly learn the correlation between features. However, the amount of industrial control time series data is large, and the graph neural network structure is often shallow. Stacking too many layers will cause all vertices to converge. On the other hand, the changes in industrial control time series data are complex, and the graph constructed by the graph neural network cannot change adaptively.

Therefore, considering the advantages and disadvantages of the above three methods, we should start from the characteristics of industrial control data: on the one hand, for the long time series changes and long-distance associations of industrial control data, the time domain features are extracted using the horizontal and vertical dual perspectives; on the other hand, compared with the real domain, signals with similar changes will be expressed with higher concentration in the frequency domain, ignoring the time differences, thereby improving the robustness of the algorithm to the extraction of misaligned time series associations. The above time domain and frequency domain data are brought together to extract data features more completely.

In order to learn the deep information of the time domain and frequency domain data, a time–frequency feature fusion module is proposed. To ensure that the model can fully capture data changes, this module constructs six feature maps from three data samples and the transposition of data samples and uses convolution and other methods to extract features, so as to learn features from two perspectives: the long time series of features and the long-distance association between features. At the same time, to ensure that the model can fully understand the correlation changes between features of different scales, the different scale features output by the six sub-networks are fused into an overall feature map. Finally, a fused feature attention encoding module is proposed. This module uses self-attention encoding to fuse features, calculates attention weights for features of six scales, and uses weights as the basis for anomaly detection, thereby improving the performance of the industrial control data anomaly detection model. The main contributions of this paper are as follows:

- A method TFANet suitable for the task of anomaly detection of industrial control operation data is proposed. The time–frequency feature fusion module is used to improve the problem of incomplete anomaly detection feature extraction caused by inconsistent industrial control data feature cycles. Compared with the popular time series data anomaly detection method, the performance of the task of anomaly detection of industrial control data is greatly improved.

- A new perspective for understanding the structure and feature changes of industrial control operation data is proposed, that is, long-distance and dislocated time series association. Through this perspective, the connection between the time series data structure and the business logic of the industrial control system is connected in series, and a new feature extraction method for industrial control time series data is proposed.

- Experimental validation has shown that when using convolution as the feature extraction method for anomaly detection in ICS data, the start–stop operations of ICSs result in step changes in feature values. Smaller convolutional kernels are more effective in enhancing the overall performance of the model in anomaly detection tasks. The results of relevant ablation experiments further demonstrate that this method can effectively extract features from ICS data.

2. Time–Frequency Fusion Feature Attention Encoding

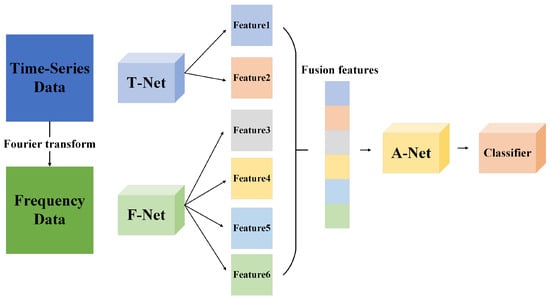

In order to extract feature information from both intra-feature and inter-feature perspectives, a model architecture is designed, as shown in Figure 1. The model as a whole can be divided into three parts, namely T-Net for feature extraction of time domain data, F-Net for the feature extraction of frequency domain data, and A-Net for the attention encoding of time–frequency fusion features.

Figure 1.

Overall model architecture.

In the data preprocessing stage, the corresponding frequency domain data can be obtained by Fourier transforming the original industrial control data (time domain data), which contain amplitude and phase.

In the model training phase, the time domain data are input into the T-Net network, and the amplitude data and phase data in the frequency domain are input into the F-Net network. The three types of data are used for feature extraction from two perspectives: long-term time series changes and long-distance associations between features. Finally, six features of different scales are output. The six features are connected and input into the A-Net for attention encoding, that is, the weights of features of different scales are calculated. Finally, the weight calculation results are input into the classifier for anomaly detection tasks.

2.1. Time–Frequency Feature Fusion Module

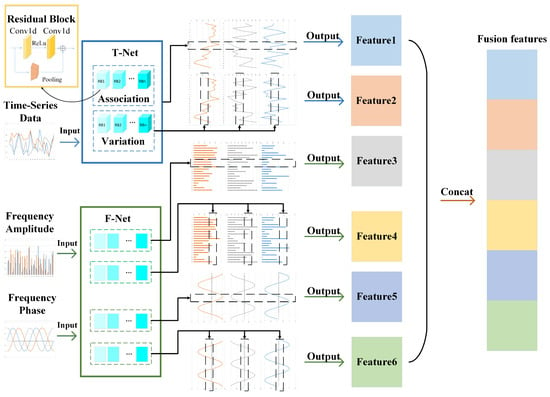

To more closely integrate time-domain and frequency-domain information, a time–frequency feature fusion module was designed based on dual-perspective feature extraction, as shown in Figure 2. The input to this module consists of three different types: time-domain data, frequency-domain amplitude data, and frequency-domain phase data. The two sets of frequency-domain data are obtained by applying a Fourier transform to the time-domain data. This module addresses a series of challenges posed by the characteristics of ICS data by extracting features from both time-domain and frequency-domain data simultaneously. Business logic may be more intuitively represented in the time domain, while certain anomaly patterns might exhibit a more concentrated expression in the frequency domain. By synchronously extracting features from both domains, the model can more accurately capture these potential differences, thereby extracting more comprehensive features from the ICS data.

Figure 2.

Dual-view time–frequency feature fusion module.

For time domain data, it is input into T-Net for feature extraction. T-Net consists of two sub-networks, which extract features from two perspectives: long-term temporal changes within features and long-distance correlations between features. Compared with general network structures for time series data such as RNN, LSTM, GRU, etc., which can only extract features from long-term temporal changes of features, this method captures feature changes at another scale, namely, long-distance correlations between features, which enables the model to avoid overfitting caused by a single feature scale.

Some features in industrial control data change relatively slowly, which may cause the gradient vanishing problem in the deep learning model training process. Residual network ResNet is often used to solve the gradient vanishing problem in model training. The method is to pool the original data before and after convolution and add the output after pooling and convolution to retain the gradient. On this basis, the residual block structure shown in the upper left corner of Figure 2 is designed, which consists of two one-dimensional convolutional layers and a pooling layer. The ReLu activation function is added in the middle of the convolutional layer. Multiple residual blocks are superimposed to extract deeper features. If the dual perspective represents feature extraction from breadth, then the stacking of residual blocks is feature extraction from depth. Finally, T-Net outputs the feature extraction results of two different scales. The feature extraction process of T-Net can be expressed as follows. The one-dimensional convolution formula is shown in Equation (1), where represents the input data of i columns and j rows, represents the weight matrix, and c represents the number of convolution kernels.

Pooling is divided into average pooling and maximum pooling, where p represents the padding value on both sides of the input data, k represents the number of elements selected for pooling at a time, and s represents the step size of pooling. The average pooling calculation formula is shown in Equation (2), and the maximum pooling calculation formula is shown in Equation (3).

The ReLu activation function is shown below Equation (4), where represents the larger value between 0 and j.

Then, the residual block can be expressed as shown in Equation (5).

The calculation of the final correlation perspective is shown in Equation (6).

The calculation formula for the time series perspective is as shown in Equation (7).

Through Equations (6) and (7), we can obtain the feature matrix output of T-Net. For frequency domain data, similarly, applying the above sub-network structure to amplitude data and phase data is F-Net. Since two scales of feature extraction are performed for amplitude and phase, respectively, there are four final feature outputs, which respectively represent the amplitude change correlation matrix between multiple features, the amplitude change matrix of a single feature, the correlation matrix of multiple feature periodic changes, and the periodic change matrix of a single feature.

After feature extraction by T-Net and F-Net, six features of different scales are obtained. After concatenating the six features, the final fusion feature matrix is obtained. The process of fusion features can be shown in Equation (8).

2.2. Fusion Feature Attention Encoding Module

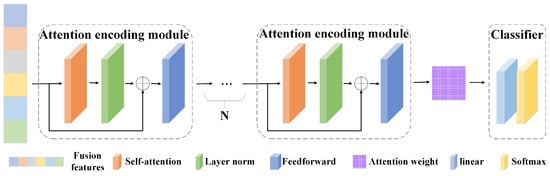

In order to learn the extracted features more accurately, a fusion feature attention encoding module is designed. Based on the six concatenated features, this module trains the weights of each feature map according to attention. Figure 3 shows the architecture details of A-Net. A-Net consists of multiple attention encoding modules. This module performs attention calculation on the fusion features output by the time–frequency feature fusion module and outputs the weight values of different features in the fusion features as the basis for downstream anomaly detection tasks.

Figure 3.

Fusion feature attention encoding module.

This module consists of a self-attention layer, layer normalization, a residual structure, and a feedforward layer. The depth can be increased by stacking multiple modules. The calculation of the self-attention layer is mainly performed by Equation (9), where represents the input data of i columns and j rows; represent the transformation matrices of query, key, and value, respectively; and represents the dimension of the input data.

The residual structure first normalizes the output of the self-attention layer and then adds the original self-attention to the normalized result. The calculation is shown in Equation (10). represents layer normalization of .

The feed-forward layer maps the output of the residual layer to features and then restores the mapping to the original dimension. This design helps the model learn richer feature representations. Its calculation is shown in Equation (11), where and represent the transformation matrices of j columns and k rows and k columns and j rows, respectively.

During the training phase, the cross entropy is used to calculate the training loss. The training loss L is calculated as shown in Equation (13), where represents the input classifier.

The above is the calculation process after the data are input into the fusion feature attention encoding module.

3. Experiment and Analysis

3.1. Introduction to Dataset

The experiment used two public datasets and one self-built dataset to train and test the model, respectively. The downstream task was set to classify the anomaly and normal data labeled in the dataset.

The SWaT (Secure Water Treatment) dataset [] is a public industrial control system security research dataset designed to simulate a real water treatment system. The dataset contains data on various types of attacks and normal operations, which are of great significance for evaluating and improving the security of industrial control systems. The dataset contains two categories.

The WADI (Water Distribution Network Dataset) dataset [] is another public industrial control system security research dataset that simulates a real water supply system. It aims to help researchers and security experts evaluate and improve the security of water supply systems. It contains a large amount of sensor data and network traffic data, which can be used to analyze abnormal behavior and attacks in water supply systems. The dataset contains 14 categories.

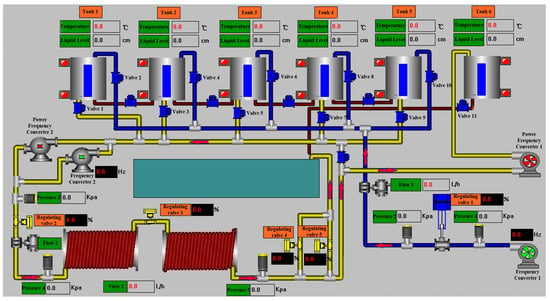

The OTS (Oil Transportation Simulation) dataset is a self-built dataset. It was created by simulating a real oil storage business process using a simulation platform equipped with various sensors, in conjunction with a SCADA system model, to construct the business dataset. The simulation platform is shown in Figure 4. The data collection process involved initially operating the simulation system manually under normal conditions and executing business processes to collect normal data. Simultaneously, abnormal data were collected by launching attacks on the system according to its status. Each attack lasted between 1 min and 2 h. During data collection, the following principles were followed: at the start of each collection period, the system was allowed to operate normally for 10 to 15 min. After an attack on the system, the recovery process was delayed by 2 min to 2 h, depending on the impact of the attack. The data collection included attack types that could simulate major accidents in industrial control environments, making such data more prevalent in the dataset. Data from the simulation platform were recorded every 0.5 s. After each round of operation, system data and the timeline were extracted from the system at specified intervals. These principles were implemented to closely replicate the data variation curves of real business scenarios. The final dataset contains 119 features, including sensor values, valve switch states, and certain internal registers of the PLC. A total of 13 types of attacks were simulated, with specific information for each type detailed in Table 1.

Figure 4.

Simulation platform.

Table 1.

Attack type details.

When conducting experiments, the data set is divided into different segments and input into the network, and Fourier transform is used to obtain frequency domain data. The following Table 2 shows some data segments, and Table 3 is the result of the Fourier transform of the data in Table 2. For Table 3, the real part of each column of data constitutes the amplitude data, and the imaginary part constitutes the phase data. The final network input is the time domain original data, the amplitude data, and the phase data.

Table 2.

Time domain data fragment.

Table 3.

Frequency domain data fragment.

In the data preprocessing stage, the data set is divided into a training set and a test set, and the data in each set are divided into data blocks of fixed length. The data blocks are then Fourier-transformed to obtain amplitude and phase data. In the training stage, the three data blocks are input into TFANet for training. Finally, in the testing stage, the same method is used to perform the anomaly classification task.

3.2. Introduction to Evaluation Indicator

Three comparison methods are used, namely iTransformer, TimesNet, and Crossformer. The following five evaluation indicators are used: Accuracy, Precision, Recall, F1-Score, and Area Under the Curve of ROC (ROC-AUC). The values of these five indicators are all between 0 and 1. The higher the score, the better the performance represented by the indicator.

The accuracy rate is used to calculate the ratio of the number of correctly judged samples to the total number of samples. The calculation Equation is shown in the following Equation (14).

Among them, the true positive (TP) when the positive sample is predicted as a positive sample, the false negative (FN) when the positive sample is predicted as a negative sample, the false positive class (FP) when the negative sample is predicted as a positive sample, and the true negative class (TN) when the negative sample is predicted as a negative sample.

The precision rate is used to evaluate the proportion of examples that are actually positive samples among those that are classified as positive samples. Its calculation is shown in Equation (15).

The recall rate evaluates the classifier’s ability to identify positive samples, and its calculation is shown in Equation (16).

Generally speaking, the precision and recall cannot be high at the same time. The F1 score is used to comprehensively consider the two. Its calculation is shown in Equation (17).

The AUC of the multi-classification problem is calculated in the following way: first, for each category, the remaining categories are regarded as negative samples, and the category is regarded as a positive sample. The two indicators of the positive prediction accuracy rate (True Positive Rate, TPR) and the positive prediction error rate (False Positive Rate, FPR) are calculated as follows: Equations (18) and (19).

For each category, the ROC curve of the category is drawn, with the horizontal axis being FPR and the vertical axis being TPR. Then, the area under the ROC curve of each category is calculated, that is, the AUC value of a single category. Finally, the AUC value of each category is averaged as the final AUC score.

The above is the calculation formula for the indicators used in the experiment.

3.3. Experiment Result

Relevant experiments were carried out according to the above experimental steps, and the results are shown in Table 4 below. From the data in Table 4, we can see that in terms of accuracy performance, the rankings from high to low are as follows: this method, Crossformer, iTransformer, and TimesNet, which are improved by about 9%, 10%, and 15%, respectively, for the three comparison methods. In terms of precision performance, the rankings from high to low are as follows: this method, iTransformer, TimesNet, and Crossformer, and the maximum improvement of this method in terms of precision improvement is about 41%. In terms of recall performance, the rankings from high to low are as follows: this method, Crossformer, iTransformer, and TimesNet, and the average improvement of this method for the three comparison methods is about 10%. In terms of F1 score performance, the rankings from high to low are as follows: this method, TimesNet, iTransformer, and Crossformer, which are improved by 9%, 11%, and 22%, respectively, for the three methods. In terms of the last item ROC-AUC performance, the rankings from high to low are: this method, iTransformer, Crossformer, and TimesNet, and the average improvement of the three comparison methods is about 10%.

Table 4.

SWaT dataset experimental results.

Table 5 below shows the results of experiments on the WADI dataset. From the data in Table 5, we can see that in terms of accuracy performance, the rankings from high to low are as follows: this method, TimesNet, iTransformer, and Crossformer, with the highest improvement reaching 31%. In terms of precision performance, the rankings from high to low are as follows: this method, TimesNet, iTransformer, and Crossformer, and the maximum improvement of this method in terms of precision improvement is about 31%. In terms of recall performance, the rankings from high to low are as follows: this method, TimesNet, iTransformer, and Crossformer, and this method improves by about 14%, 18%, and 31%, respectively, for the three comparison methods, which is similar to the performance in terms of accuracy. On terms of F1 score performance, the rankings from high to low are as follows: this method, TimesNet, iTransformer, and Crossformer, and the maximum improvement of this method in terms of F1 score improvement is about 39%. In terms of ROC-AUC performance, the rankings from high to low are: this method, TimesNet, iTransformer, and Crossformer, and the improvements of the three comparison methods are about 14%, 18%, and 31%, respectively.

Table 5.

WADI dataset experimental results.

Table 6 below shows the results of the experiment on the OTS dataset. From the data in Table 6, we can see that in terms of accuracy, the ranking from high to low is as follows this method, Crossformer, TimesNet, and iTransformer, and the average improvement for the three methods is about 26%. In terms of accuracy, recall, and F1 score, this method has a greater improvement than the three methods. In terms of ROC-AUC performance, the ranking from high to low is: this method, TimesNet, Crossformer, and iTransformer, and the average improvement for the three methods is about 23%. According to the above experimental results, TFANet has achieved different degrees of improvement in indicators compared with the other three methods.

Table 6.

OTS dataset experimental results.

The experimental results of the three comparison methods are analyzed below.

(1) For the iTransformer method, compared with the original Transformer model, this method uses the feature column as the query object and compares the correlation between the feature columns. The advantage of this method is that the optimized model can more fully learn the correlation representation of different variables of the input data for the attention layer of the original Transformer model, that is, learn the long-distance correlation between features. However, each column feature of industrial control data has its own unique change process, reflecting the characteristics of different sensor data. The use of a single embedding layer may not be able to encode each change process, and the above-mentioned misaligned time series phenomenon is also reflected in the change process of the feature, resulting in information loss in the time domain, affecting the classification results.

(2) For the Crossformer method, this method proposes the concept of cross-dimensional dependency based on the Transformer model to better capture the correlation between different dimensions in multivariate time series data. Specifically, unlike iTransformer, which embeds each feature column, Crossformer splits the time domain data of each feature column into different data segments for embedding and then calculates attention for different data segments of different feature columns. This attention is named cross attention. This method solves the impact of misaligned time series to a certain extent and highlights the importance of local features. However, for industrial control operation data anomaly detection, the global features of a single variable also contain certain business logic. Splitting data segments on different data sets may destroy the overall business logic and reduce the accuracy.

(3) For the TimesNet method, the core of this method is to capture the correlation changes between different periods of time series data and use this as the basis for downstream tasks. In terms of specific implementation, TimesNet obtains period information through Fourier transform, extracts the correlation of time domain data represented by different periods through convolution, and then fuses it with the original data for downstream tasks. For the anomaly detection of industrial control operation data, this method can capture the cross-period correlation of sensor data in different periods and has better downstream task generalization than iTransformer and Crossformer. However, the selection of the maximum period in this method will be affected by different data sets. For those with more obvious period changes, relatively good results can be achieved.

The two modules of this method are designed according to the characteristics of industrial control data. In the feature fusion module, features are extracted from the perspectives of time series and association, as well as the time domain and frequency domain, to obtain the correlation between variables and time series changes. At the same time, in the frequency domain, the period of each feature column is characterized by the data changes of the two attributes of amplitude and frequency, which improves the problem of period differences between features and has better robustness to misaligned time series. The above experimental results also illustrate the effectiveness of this method to a certain extent.

4. Ablation Study

To further explore the impact of the size of the convolution kernel of the one-dimensional convolution layer in T-Net and F-Net, the number of attention layers and the dimension of the feedforward layer in A-Net on the model detection results, the following ablation experiments were conducted using the OTS dataset: the convolution kernel size was set to 1 × 3 and 1 × 11; the number of attention layers in A-Net was set from no attention layer (zero layers) to four attention layers, and two setting strategies were adopted. One was the strategy of increasing the dimension of the feedforward layer: when the number of attention layers was set to 0, 1, 2, 3, and 4, the dimensions of the feedforward layer were 32, 64, 128, and 256, respectively; the other was the strategy of reducing the dimension of the feedforward layer: when the number of attention layers was set from 0 to 4, the dimensions of the feedforward layer were 1024, 512, 256, and 128, respectively. The experimental results of the attention layer increase and dimension increase strategies are shown in Table 7 below.

Table 7.

Experimental results under the strategy of increasing the attention layer and increasing the dimensions.

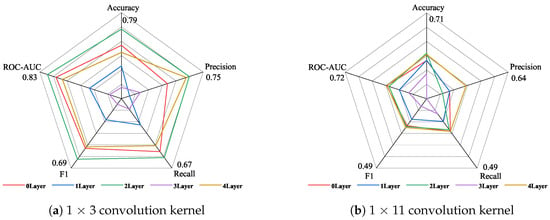

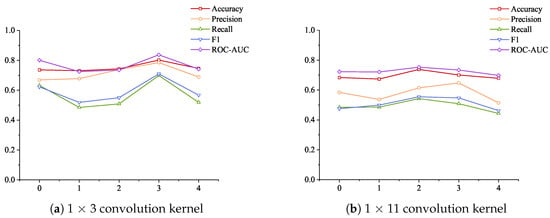

The data in Table 7 are plotted as a radar chart in Figure 5 below to show the different performances of the 1 × 3 small convolution kernel and the 1 × 11 large convolution kernel. Figure 5a shows the specific indicators of using the 1 × 3 convolution kernel, and Figure 5b shows the indicators of using the 1 × 11 large convolution kernel. As can be seen from Figure 5a, when using the 1 × 3 convolution kernel and the dimension increase strategy, setting two attention layers achieves the best results, and the highest values are achieved in all five indicators; setting three attention layers achieves the worst results, and the lowest values are achieved in four indicators. As can be seen from Figure 5b: when using the 1 × 11 convolution kernel, setting four attention layers achieves the best results, and setting three attention layers achieves the worst results.

Figure 5.

The performance of different convolution kernels under the strategy of increasing the attention layer and increasing the dimensions.

Comparing the experimental results shown in Figure 5a,b, it is found that setting the one-dimensional convolution layer as a 1 × 3 convolution kernel can achieve better results than the 1 × 11 convolution kernel under the dimension increase strategy. Figure 5 is drawn according to the data in Table 7 to observe the improvement of the five indicators using the 1 × 3 convolution kernel compared with the 1 × 11 convolution kernel under the dimension increase strategy.

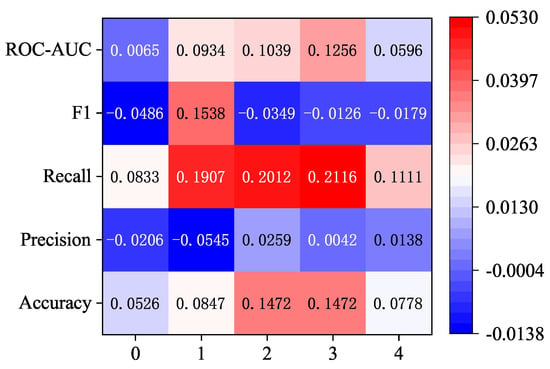

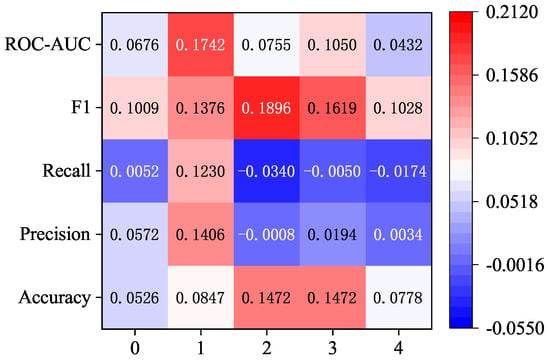

As can be seen from Figure 6, under the same attention layer, the use of the 1 × 3 convolution kernel has different degrees of improvement on the five indicators, among which the recall rate is more obviously improved, and it has a certain degree of improvement compared with other indicators when one, two, and three layers of attention are compared. At the same time, there are also some indicators that are reduced, such as F1 scores at 0, 2, 3, and 4 layers of attention and precision at 0 and 1 layers of attention.

Figure 6.

Improvement results of 1 × 3 convolution kernel compared with 1 × 11 convolution kernel under the strategy of increasing the attention layer and increasing the dimension.

In summary, overall, using the 1 × 3 convolution kernel can achieve better results than the 1 × 11 convolution kernel under the dimension increase strategy.

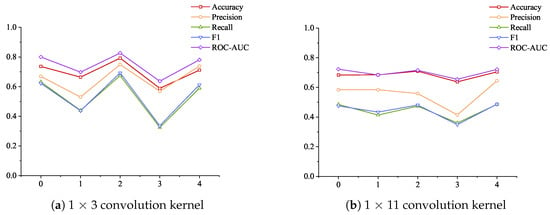

Considering the impact of increasing the number of attention layers on the model detection results, the data in Table 7 plotted the changes in the performance of different convolution kernels under the strategy of increasing the number of attention layers and increasing the dimension, as shown in Figure 7. As the number of attention layers increases and the dimension continues to increase, the use of 1 × 3 convolution kernels shows tortuous changes in the five indicators, and the use of 1 × 11 convolution kernels also shows tortuous changes in the five indicators. From the overall trend, the changes in the three indicators of recall rate, F1 score, and ROC-AOC are similar to those of 1 × 3 convolution kernels but more gentle. The change in precision rate shows a decrease first and then an increase, and the maximum value is achieved at four attention layers. The change in accuracy rate gradually increases from no attention layer to 2 attention layers to reach the maximum value and then begins to decrease and then increase.

Figure 7.

The indicators of different convolution kernels change with the number of layers and dimensions under the strategy of increasing the number of attention layers and increasing the dimensions.

There are many start–stop operations in industrial control systems, which reflects the “instantaneous jump” in data changes. The results shown in Figure 7 show that the use of a 1 × 3 small convolution kernel is better at extracting jump features, so the overall accuracy is better. The increase in the number of attention layers has little effect on the accuracy of large convolution kernels, indicating that large convolution kernels are not very sensitive to features. Although the change in the number of attention layers has a large impact on the final index when a small convolution kernel is selected, the average value of all evaluation results exceeds the above three comparison algorithms, which also shows the stability of the method proposed in the paper.

The experimental results under the strategy of increasing the attention layer and reducing the dimension are shown in Table 8.

Table 8.

Experimental results under the strategy of increasing the attention layer and decreasing the dimensions.

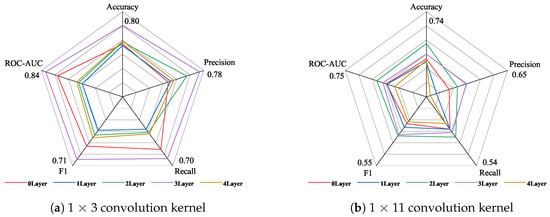

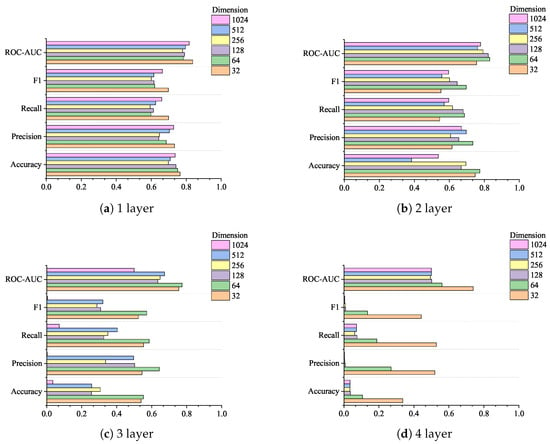

Similarly, the data in Table 8 are plotted as a radar chart, as shown in Figure 8, to observe the performance of different convolution kernels under the dimensionality-reduction strategy. As shown in Figure 8a, the best performance is achieved when three attention layers are set when using the 1 × 3 convolution kernel, and the worst performance is achieved when one attention layer is set. As shown in Figure 8b, when using the 1 × 11 convolution kernel, the best comprehensive effect is achieved when two attention layers are set, and the lowest indicator evaluation is achieved when four attention layers are set. Comparing the performance of the five indicators of the 1 × 3 convolution kernel and the 1 × 11 convolution kernel, the highest indicator result of the 1 × 3 convolution kernel is higher than the highest indicator result of the 1 × 11 convolution kernel.

Figure 8.

The performance of different convolution kernels under the strategy of increasing the attention layer and decreasing the dimensions.

Figure 9 shows the improvement in the indicators from using 1 × 3 convolution kernels over 1 × 11 convolution kernels under the dimension reduction strategy. As shown in Figure 8, the recall rate indicator of using 1 × 3 convolution kernels is reduced compared with that of using 1 × 11 convolution kernels, which is mainly reflected in the setting of two, three, and four attention layers. The precision indicator of setting two attention layers also shows a decline. In addition to the above two indicators, the other three indicators have increased to varying degrees. Among them, the most obvious improvement is the ROC-AUC score under setting one attention layer and the F1 score under setting two attention layers, which are increased by about 0.1742 and 0.1896, respectively.

Figure 9.

Improvement results of 1 × 3 convolution kernel compared with 1 × 11 convolution kernel under the strategy of increasing the attention layer and decreasing the dimensionality.

Figure 10 is drawn from the data in Table 8 to more intuitively observe the changes in indicators of different convolution kernels under the strategy of increasing the attention layers and reducing the dimensions. By comparing Figure 10a,b, it can be seen that the increase in the number of layers has a greater impact on the indicator results under the 1 × 3 small convolution kernel.

Figure 10.

The indicators of different convolution kernels change with the number of layers and dimensions under the strategy of increasing the number of attention layers and decreasing the dimension.

The above ablation experiments show the influence of three parameters, namely the size of the convolution kernel, the number of attention layers, and the dimension of the feedforward layer, on the model detection results. Combined with the results of the above ablation experiments, the following conclusions can be drawn for the method proposed in this paper: (1) Using a 1 × 3 convolution kernel in the industrial control data anomaly detection task can improve the detection effect of the model to a certain extent compared with using a 1 × 11 convolution kernel. (2) Changes in the number of attention layers and the dimension of the feedforward layer have little effect on the detection results of the model using a large 1 × 11 convolution kernel.

As for conclusion (1), it can be seen from the results of Figure 4 and Figure 7 that, regardless of whether the dimension is increased or decreased, the model using the 1 × 3 convolution kernel achieves the highest values of the five indicators compared with the 1 × 11 convolution kernel. First, from the perspective of the size of the convolution kernel, the 1 × 3 convolution kernel may be more sensitive to the local feature changes in the input data. The misaligned timing phenomenon in the operation of the industrial control system is reflected in the industrial control data as the jump of the data at the next moment. Therefore, using a small convolution kernel can better capture the sharp changes in different feature columns of the two samples. Secondly, from the perspective of the function of the convolution kernel, using a smaller convolution kernel can provide more complex nonlinear transformations, which is conducive to building a deeper network and enhancing the network’s ability to express features. Finally, from the perspective of the network structure, according to the method in this paper, the features extracted by the small convolution kernel will be fused and input into A-Net for attention encoding. The use of a small convolution kernel can more effectively retain the local feature changes in the time domain and frequency domain. In the calculation of attention weights, the differences in feature changes in different domains can be highlighted, and the calculation accuracy of weights can be improved.

As for conclusion (2), also from the perspective of convolution kernel size, as the convolution kernel increases, the receptive field also increases. Therefore, a large convolution kernel is better at extracting global features. When processing data jumps are caused by system start–stop operations, an overly large receptive field may focus on capturing the overall feature changes in the data and cause information loss of feature changes between samples. The main task of the T-Net and F-Net sub-networks of the model is to capture the jumps in feature values. If the lost information contains this important feature, then in the subsequent attention weight calculation, the A-Net sub-network’s weight calculations for the time domain and frequency domain may tend to be averaged. At this time, even changing the number of attention layers cannot make up for the impact of information loss.

In summary, for the method proposed in this article, the use of a small 1 × 3 convolution kernel is more sensitive to the feature changes caused by the misaligned timing phenomenon and can improve the model detection accuracy compared to a large 1 × 11 convolution kernel. Therefore, when constructing the model proposed by the method in this article, it is better to consider using a small convolution kernel similar to 1 × 3.

Table 9 shows the results of the ablation experiment using a 1 × 3 convolution kernel to fix the dimension size of the feedforward layer. The purpose of this experiment is to explore the impact of the dimension increase or dimension reduction strategy. The experimental settings are as follows: T-Net and F-Net use 1 × 3 convolution kernels. When adding attention layers to A-Net, the dimension of the feedforward layer is fixed in size and no longer increases or decreases with the number of layers. The values are set to 32, 64, 128, 256, 512, and 1024. The number of attention layers is set to 0, 1, 2, 3, and 4.

Table 9.

1 × 3 convolution kernel fixed dimension layer experimental result data.

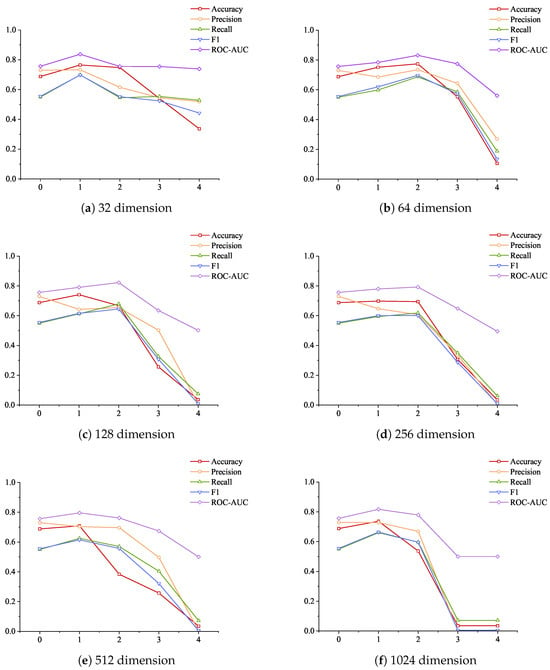

Figure 11 shows a comparison of model detection indicators under different numbers of attention layers. (a), (b), (c), and (d) represent the performance of the five indicators at different feedforward layer dimensions when the attention layers are 1, 2, 3, and 4, respectively. From the information in the figure, we can see that the indicator results are the worst when the attention layers are 4, and the indicator results are higher than 4 when the attention layers are 3. At the same time, by comparing (c) and (d), we can see that the remaining dimensions of the feedforward layer with dimensions higher than 32 have all experienced a significant decline in indicator performance. This may be due to information dilution. By adding attention layers, the model’s ability to perform complex features can be improved, but a network that is too deep may dilute the feature information, causing the model to experience gradient disappearance during training.

Figure 11.

Comparison of model detection index results under different attention layers.

In order to more intuitively reflect the changes in the indicators of the model with the increase in the number of layers under different feedforward layer dimension parameters, Figure 12 is drawn, where (a), (b), (c), (d), (e), and (f) represent the indicator changes under 32, 64, 128, 256, 512, and 1024 dimensions, respectively. As can be seen from the figure, most of the indicator change trends of (b), (c), (d), and (f) are consistent with the trend of decreasing from the non-attention layer to the two-layer attention layer, among which the decreasing trend of (f) is the most dramatic. The feedforward layer is usually used to map features to higher dimensions for learning more complex feature relationships. However, this is also accompanied by a series of problems caused by the increase in dimensions. The process of mapping the original features to high-dimensional space and then restoring them to the original dimensions may discard part of the original features. The industrial control data reflect the changes in different sensors of the industrial control system over time, and there is a correlation between each sensor. If this part of the feature changes is discarded during the mapping process, the model will not be able to effectively identify the anomaly, resulting in a decrease in the indicator results.

Figure 12.

Changes in model detection indicators under different feedforward layer dimensions as the number of layers increases.

From the above experiments, we can see that when determining the number of attention layers and the dimension of the feedforward layer in the A-Net sub-network of this method, it is necessary to consider the negative impact of the increase in the number of layers and the increase in dimension. As shown by the experimental results, choosing three attention layers and a dimensionality reduction strategy can achieve the best results.

5. Discussion and Conclusions

This paper proposes a method for detecting anomalies in industrial control operation data based on time–frequency fusion feature attention coding, TFANet. This method integrates original time domain data, frequency domain amplitude, and phase data and extracts features from two perspectives: long-term temporal changes within the sample features of industrial control data and long-distance correlations between features. The weights of different output features are calculated through attention coding, which is used as the basis for the task of detecting anomalies in industrial control operation data. After being verified on two public datasets and one self-built dataset, this method outperforms three other popular time series data anomaly detection methods, iTransformer, TimesNet, and Crossformer, in three datasets, proving the effectiveness of this method.

Additionally, ablation experiments on the convolution kernel size in the T-Net and F-Net sub-networks, as well as the number of attention layers and the feedforward layer dimensions in the A-Net sub-network, demonstrated that using smaller convolution kernels can better capture feature variations in ICS anomaly detection tasks, further validating the effectiveness of the proposed method. Specifically, the dataset used in this study consists of ICS sensor operation data, which encapsulates the business logic of ICS operation. For anomaly detection tasks, capturing these patterns is beneficial for extracting more in-depth feature information. Based on all ablation experiment results, an important conclusion is that both local and global feature information play a simultaneous role in anomaly detection. In the OTS dataset constructed in this paper, certain start–stop operations are part of the business logic in ICS data. This “pattern” manifests as instantaneous jumps in the data. To capture such abrupt changes, increasing the weight of local information, i.e., using a smaller convolution kernel (a 1 × 3 kernel was used in this study), may be more advantageous. On the other hand, global information might be more effective in capturing other “patterns” that represent the remaining business logic. Therefore, when applying this method to other datasets, factors such as anomaly types, system business logic, and data dimensions need to be considered to define the specific size of the convolution kernel.

Author Contributions

Conceptualization, J.L. and Y.S.; methodology, J.L.; software, J.L. and W.Z.; validation, J.L.; formal analysis, J.L.; investigation, J.L. and W.Z.; resources, Y.S., Y.Y. and X.L.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, Y.S.; visualization, J.L.; supervision, Y.S.; project administration, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The WADI dataset analyzed during the current study is available at https://itrust.sutd.edu.sg/, accessed on 1 November 2023. The SWaT dataset analyzed during the current study is available at https://itrust.sutd.edu.sg/itrust-labs_datasets/dataset_info/, accessed on 1 November 2023. Other datasets are available from the corresponding author, Yun Sha, upon reasonable request.

Conflicts of Interest

The authors declare no competing interests.

References

- Whitehead, D.E.; Owens, K.; Gammel, D.; Smith, J. Ukraine cyber-induced power outage: Analysis and practical mitigation strategies. In Proceedings of the 2017 70th Annual Conference for Protective Relay Engineers (CPRE), College Station, TX, USA, 3–6 April 2017. [Google Scholar]

- Sanchez, E.H.; Serrurier, M.; Ortner, M. Learning disentangled representations of satellite image time series. In Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECMLPKDD), Würzburg, Germany, 16–20 September 2019. [Google Scholar]

- Chen, L.; Chen, D.; Yang, F.; Sun, J. A deep multi-task representation learning method for time series classification and retrieval. Inf. Sci. 2021, 555, 17–32. [Google Scholar] [CrossRef]

- Franceschi, J.Y.; Dieuleveut, A.; Jaggi, M. Unsupervised scalable representation learning for multivariate time series. Adv. Neural Inf. Process. Syst. 2019, 32. [Google Scholar] [CrossRef]

- Yang, L.; Hong, S. Unsupervised time-series representation learning with iterative bilinear temporal-spectral fusion. In Proceedings of the International Conference on Machine Learning (ICML), Baltimore, MD, USA, 17–23 July 2022. [Google Scholar]

- Fraikin, A.; Bennetot, A.; Allassonnière, S. T-Rep: Representation Learning for Time Series using Time-Embeddings. arXiv 2023, arXiv:2310.04486. [Google Scholar]

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal convolutional networks for action segmentation and detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Tonekaboni, S.; Li, C.L.; Arik, S.O.; Goldenberg, A.; Pfister, T. Decoupling local and global representations of time series. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS), Virtual Conference, 28–30 March 2022. [Google Scholar]

- Li, Z.; Rao, Z.; Pan, L.; Wang, P.; Xu, Z. Ti-mae: Self-supervised masked time series autoencoders. arXiv 2023, arXiv:2301.08871. [Google Scholar]

- Dong, J.; Wu, H.; Zhang, H.; Zhang, L.; Wang, J.; Long, M. Simmtm: A simple pre-training framework for masked time-series modeling. Adv. Neural Inf. Process. Syst. 2024, 36. [Google Scholar] [CrossRef]

- Eldele, E.; Ragab, M.; Chen, Z.; Wu, M.; Kwoh, C.K.; Li, X.; Guan, C. Time-series representation learning via temporal and contextual contrasting. arXiv 2021, arXiv:2106.14112. [Google Scholar]

- Tonekaboni, S.; Eytan, D.; Goldenberg, A. Unsupervised representation learning for time series with temporal neighborhood coding. arXiv 2021, arXiv:2106.00750. [Google Scholar]

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. itransformer: Inverted transformers are effective for time series forecasting. arXiv 2023, arXiv:2310.06625. [Google Scholar]

- Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. Timesnet: Temporal 2d-variation modeling for general time series analysis. arXiv 2022, arXiv:2210.02186. [Google Scholar]

- Zhang, Y.; Yan, J. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In Proceedings of the 11th International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Zhang, Z.; Geng, Z.; Han, Y. Graph Structure Change-Based Anomaly Detection in Multivariate Time Series of Industrial Processes. IEEE Trans. Ind. Inform. 2024, 20, 6457–6466. [Google Scholar] [CrossRef]

- Chen, K.; Feng, M.; Wirjanto, T.S. Multivariate time series anomaly detection via dynamic graph forecasting. arXiv 2023, arXiv:2302.02051. [Google Scholar]

- Zambon, D.; Livi, L.; Alippi, C. Graph iForest: Isolation of anomalous and outlier graphs. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022. [Google Scholar]

- Zhou, L.; Zeng, Q.; Li, B. Hybrid anomaly detection via multihead dynamic graph attention networks for multivariate time series. IEEE Access 2022, 10, 40967–40978. [Google Scholar] [CrossRef]

- Srinivas, S.S.; Sarkar, R.K.; Runkana, V. Hypergraph Learning based Recommender System for Anomaly Detection, Control and Optimization. In Proceedings of the IEEE International Conference on Big Data (Big Data), Kyoto, Japan, 13–16 December 2022. [Google Scholar]

- Goh, J.; Adepu, S.; Junejo, K.N.; Mathur, A. A dataset to support research in the design of secure water treatment systems. In Proceedings of the Critical Information Infrastructures Security (CRITIS), Paris, France, 10–12 October 2016. [Google Scholar]

- Charilaou, C.; Ioannou, C.I.C.; Vassiliou, V. System for operational technology attack detection in industrial IoT. In Proceedings of the Mediterranean Communication and Computer Networking Conference (MedComNet), Paphos, Cyprus, 1–3 June 2022. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).