A Transformer-Based Image-Guided Depth-Completion Model with Dual-Attention Fusion Module

Abstract

1. Introduction

2. Related Work

2.1. Vision Transformer

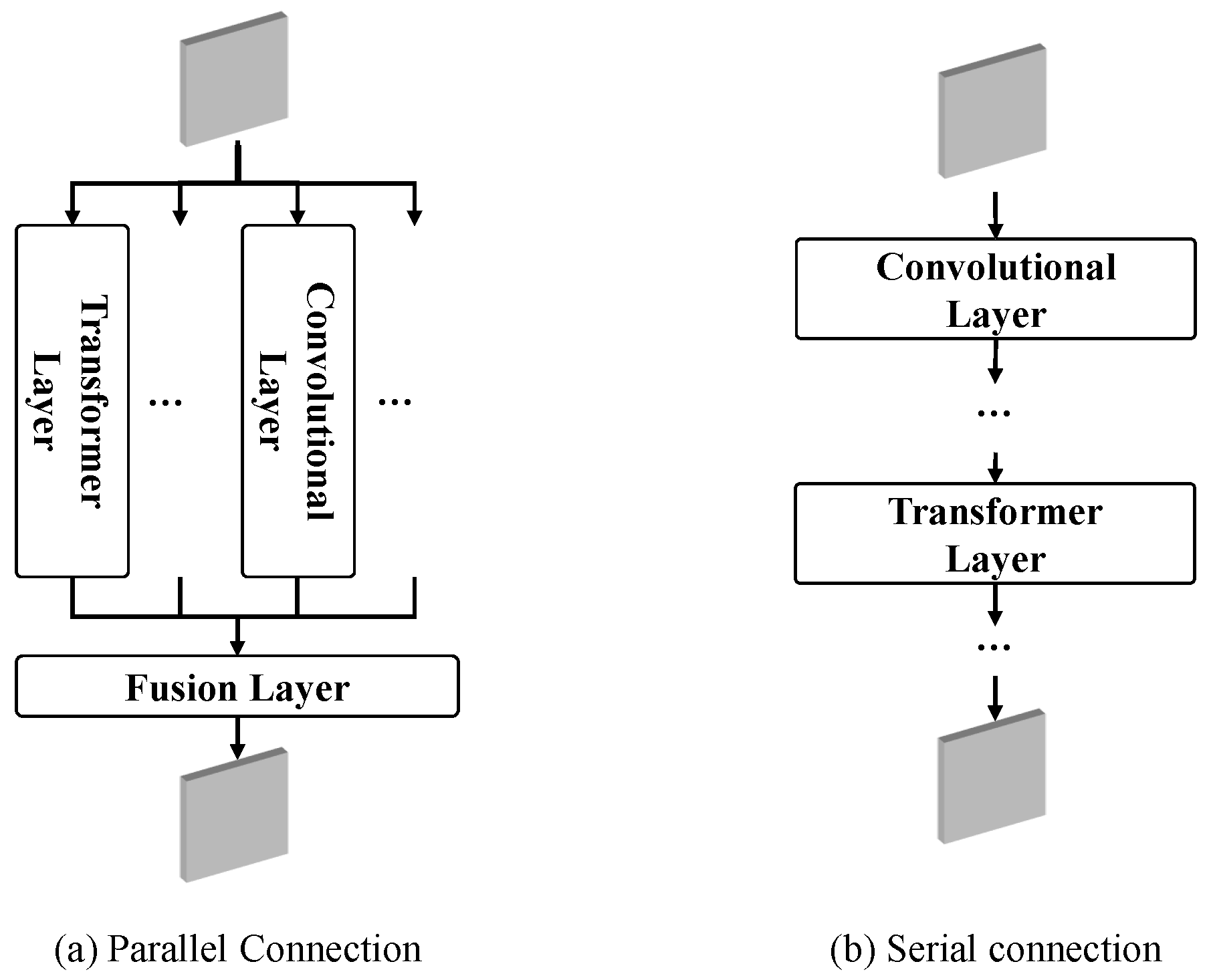

2.2. CNN and Transformer

2.3. Depth-Completion Network Architecture Design

Depth Completion Based on Transformer

3. Method

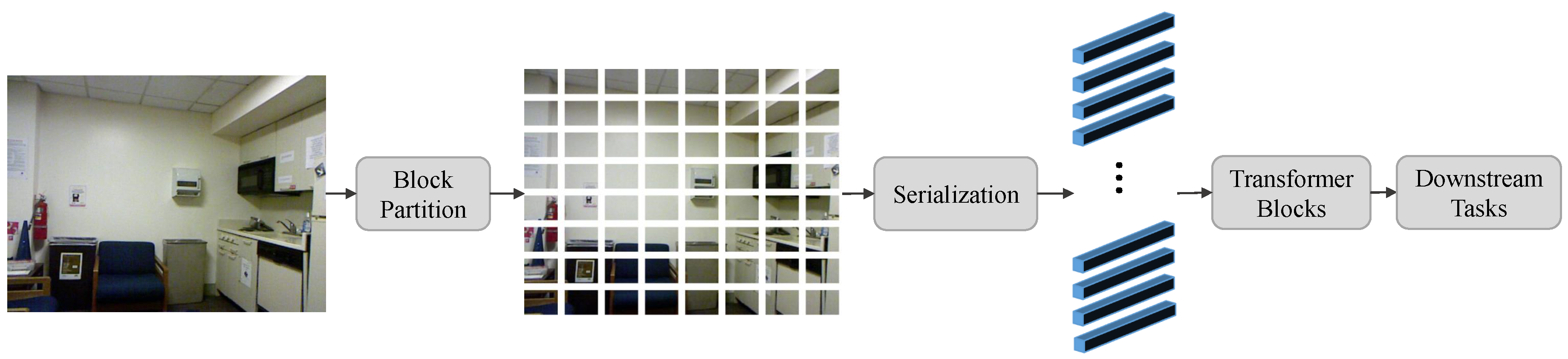

3.1. Transformer-Based Pyramid Dual-Branch Backbone

3.1.1. Image-Dominant Branch

3.1.2. Depth-Dominant Branch

3.1.3. Dual-Branch Fusion

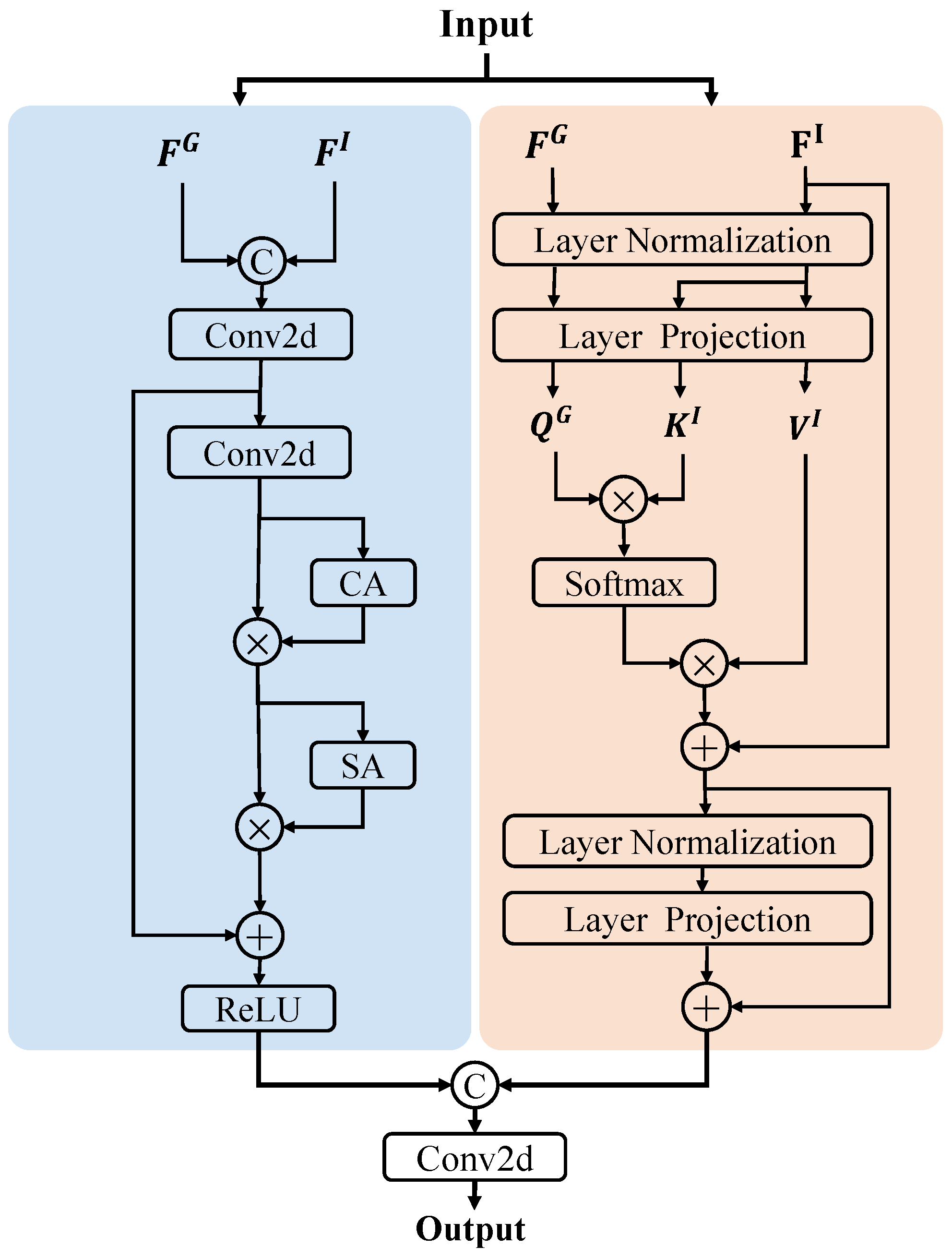

3.2. Dual-Attention Fusion Module

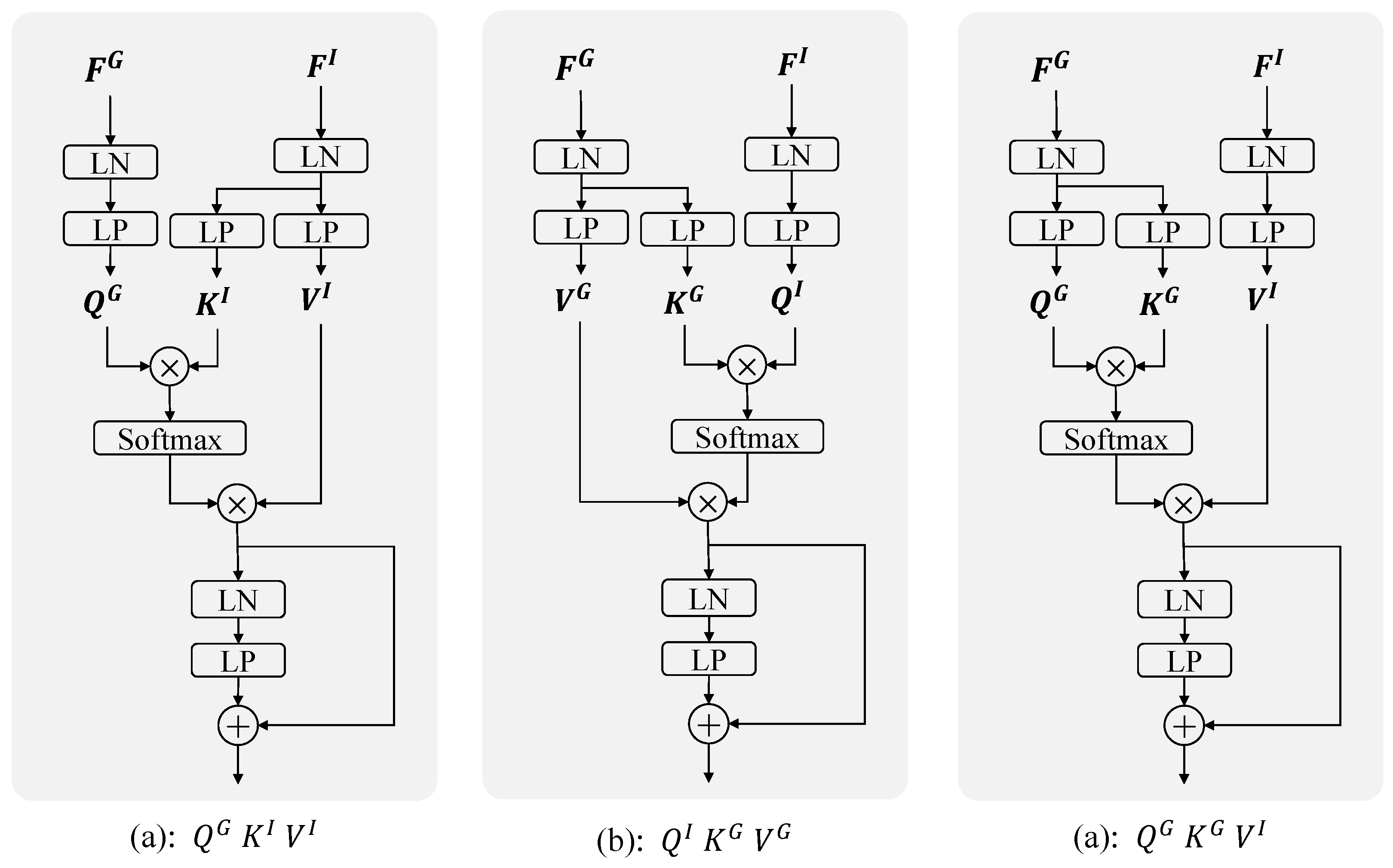

3.2.1. Transformer-Based Cross-Attention

3.2.2. Convolution-Based Attention

3.3. Post Refinement Module

3.4. Loss Function

4. Experiments

4.1. Experimental Settings

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Implementation Details

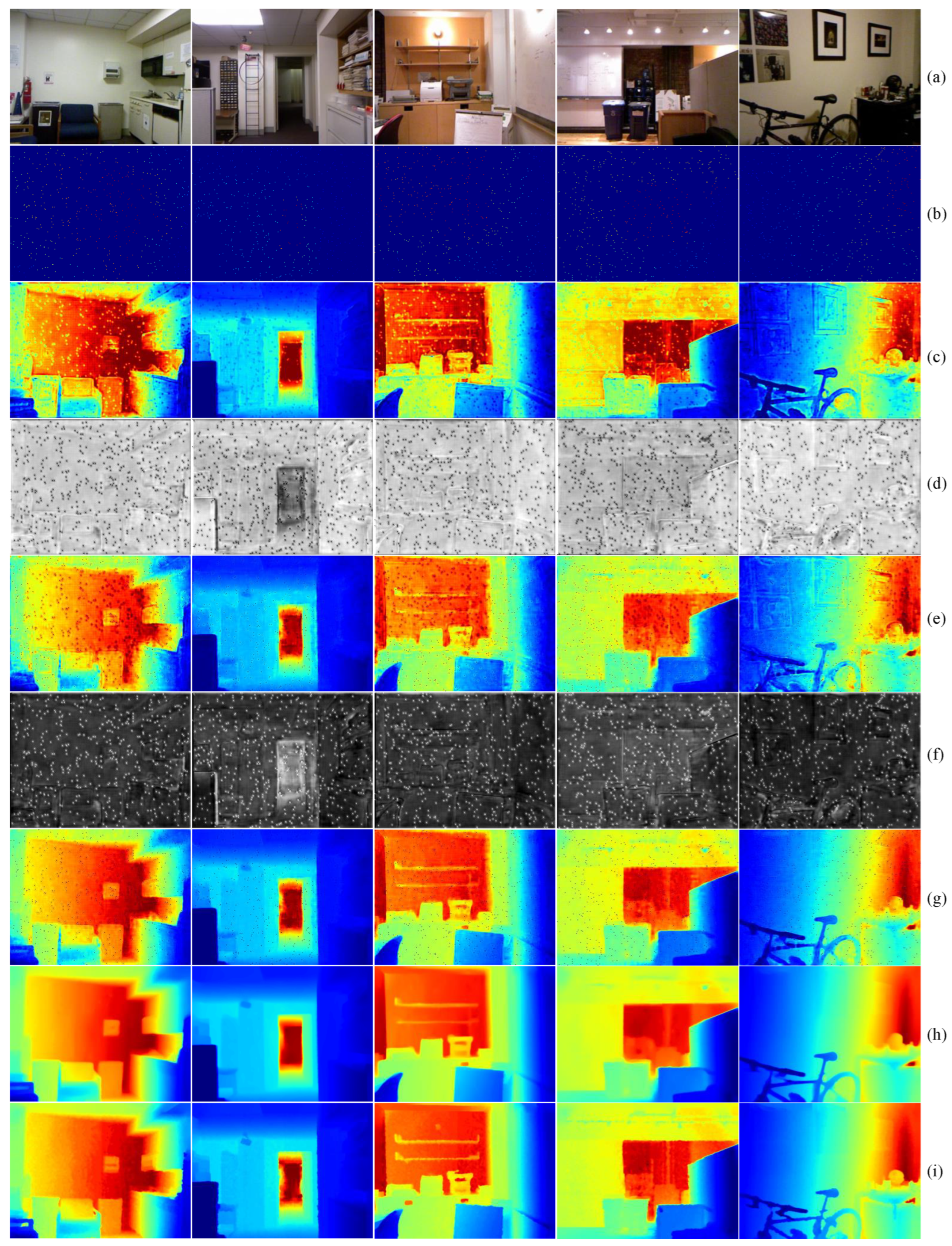

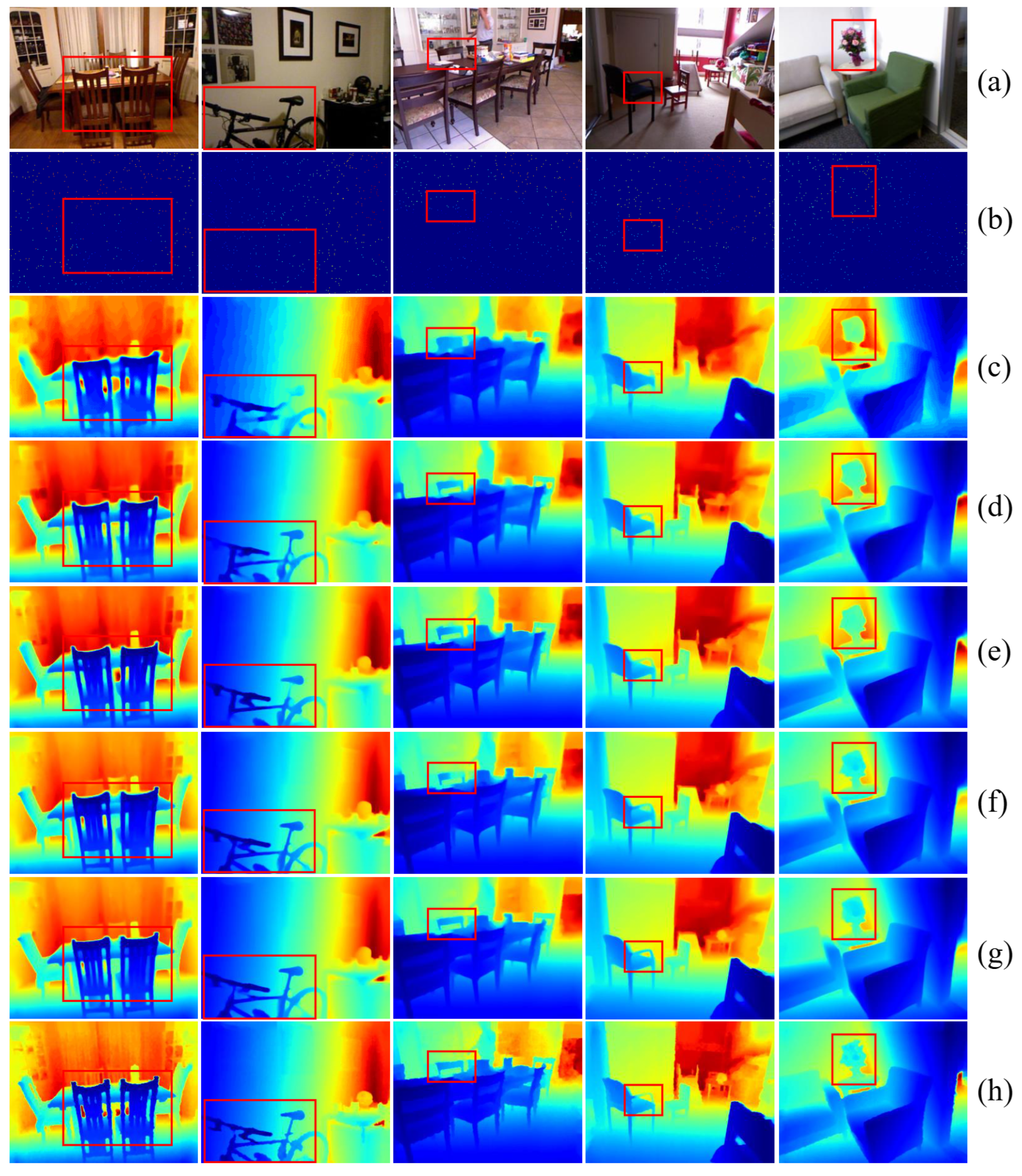

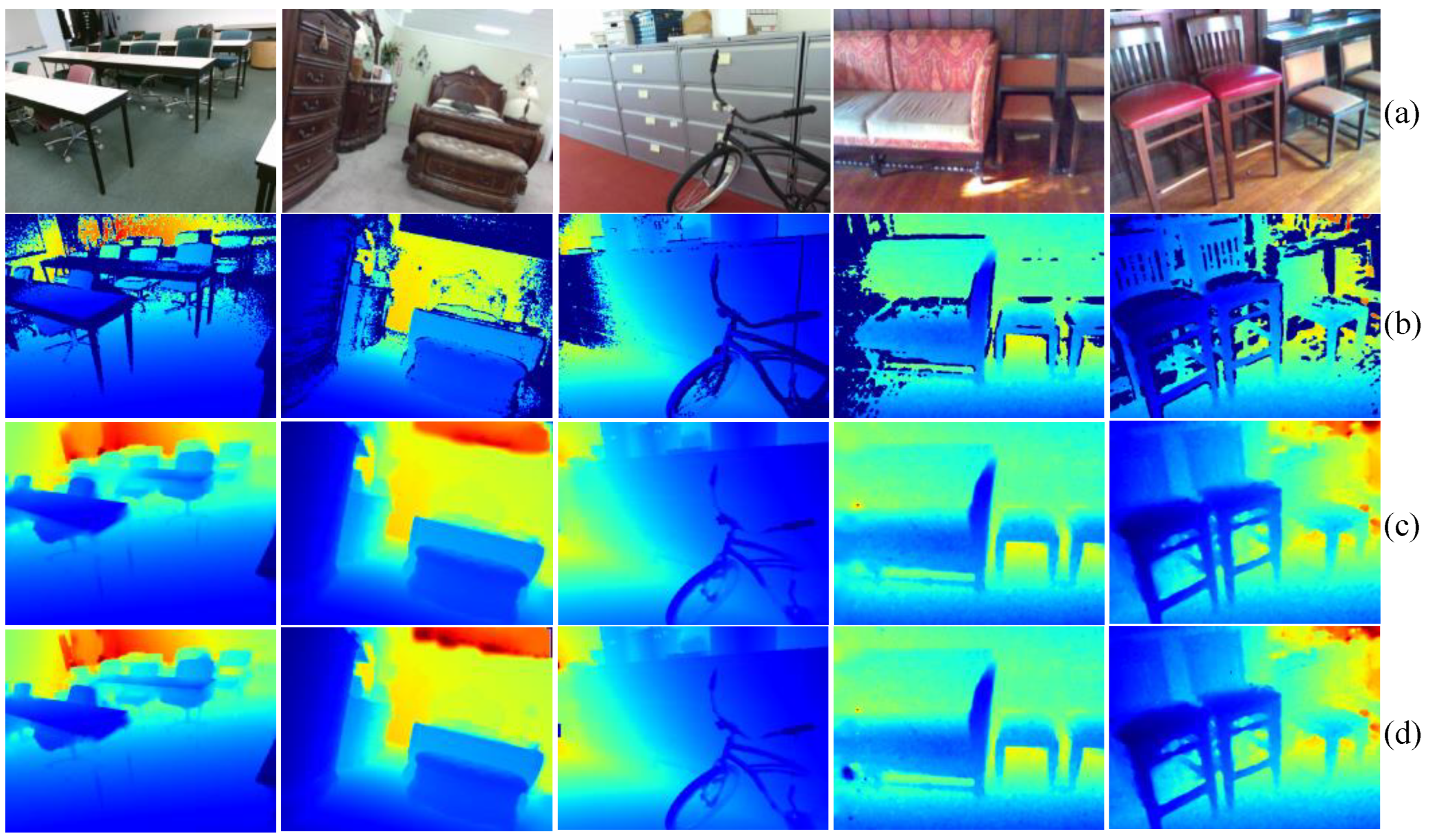

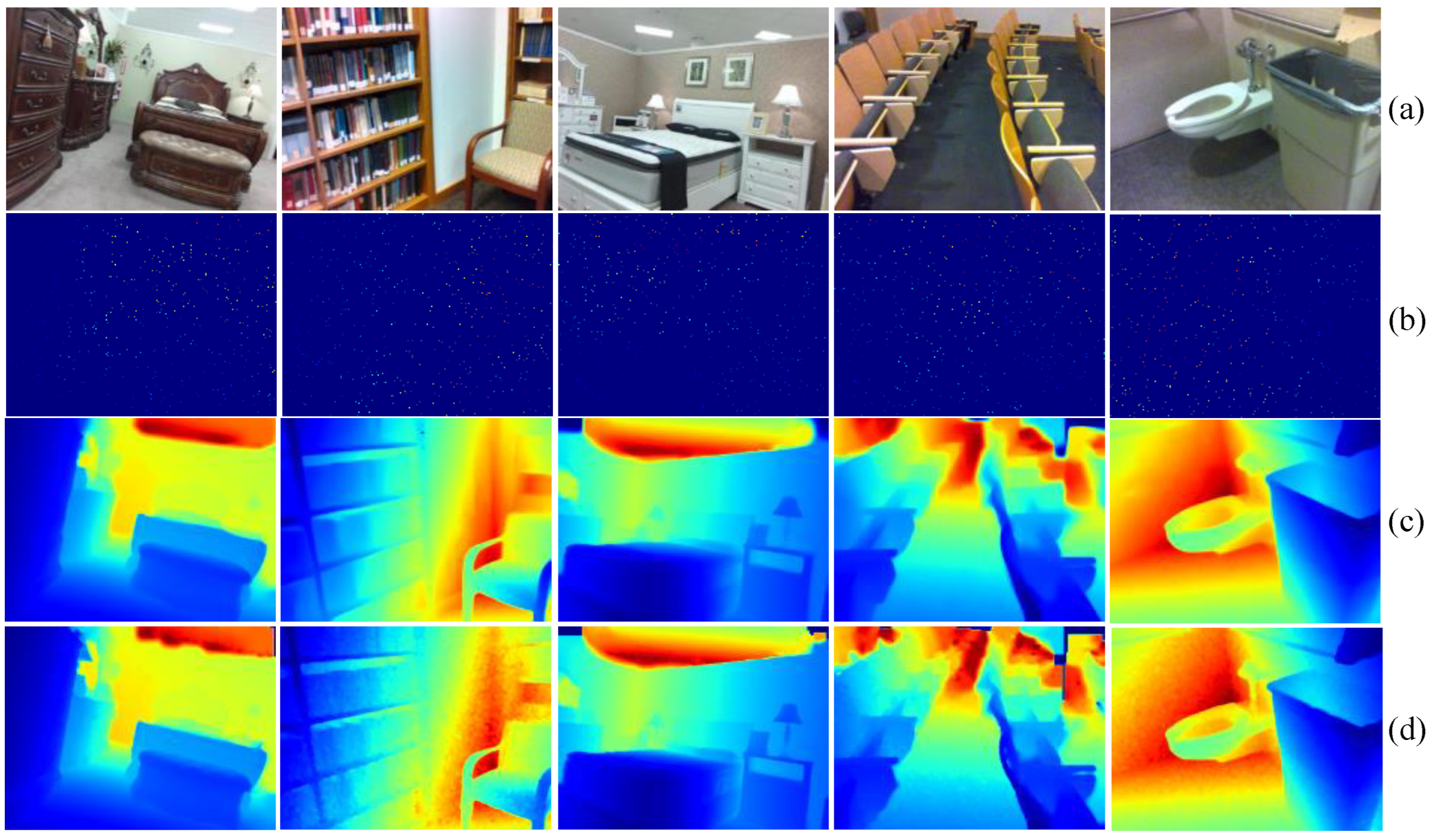

4.2. Visualization

4.3. Ablation Study

4.3.1. Multi-Scale Dual-Branch Framework

4.3.2. Loss Function

4.3.3. Dual-Attention Fusion Module

- : Use the guided feature and input feature as and , respectively, to generate the correlation matrix, aggregating the information of input feature .

- : Use the guided feature and input feature as and , respectively, to generate the correlation matrix, aggregating the information of feature .

- : Use the guided feature simultaneously as and to generate the correlation matrix, aggregating the information of input feature .
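
The variants above differ only in which feature plays which role in scaled dot-product attention. The following minimal pure-Python sketch illustrates this and is not the authors' implementation. It makes several assumptions. Features are treated as token matrices (rows are flattened pixels, columns are channels). The correlation matrix is taken to be softmax(QKᵀ/√d), as in standard Transformer attention. Because the role symbols were lost from the list, the first variant's assignment (guided feature as Q, input feature as K) is an assumption. All function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = tokens (flattened pixels), columns = channels


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, shifted by each row's maximum for numerical stability."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, k_t)]
    corr = softmax_rows(scores)  # correlation matrix: one row of weights per query token
    return matmul(corr, v)


def guided_q_input_kv(guided: Matrix, inp: Matrix) -> Matrix:
    """First variant (assumed roles): guided -> Q, input -> K, input aggregated as V."""
    return attention(guided, inp, inp)


def guided_qk_input_v(guided: Matrix, inp: Matrix) -> Matrix:
    """Third variant: guided feature as both Q and K, input feature aggregated as V."""
    return attention(guided, guided, inp)


if __name__ == "__main__":
    guided = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    inp = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
    print(guided_qk_input_v(guided, inp))
```

The second variant would differ from the first only in the matrix passed as V. Its identity was lost from the list, so it is not sketched here.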

4.4. Comparison with SOTA

4.5. Model Inference Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SPN | Spatial Propagation Network |
| CNN | Convolutional Neural Networks |
| NLSPN | Non-Local Spatial Propagation Network |
| BN | Batch Normalization |
| ViT | Vision Transformer |
| JCAT | Joint Convolutional Attention and Transformer |
| DAFM | Dual-Attention Fusion Module |
| LN | Layer Normalization |
| LP | Layer Projection |
| CA | Channel Attention |
| SA | Spatial Attention |
| RMSE | Root Mean Square Error |
| MAE | Mean Absolute Error |
| iRMSE | Inverse Root Mean Square Error |
| iMAE | Inverse Mean Absolute Error |
| REL | Absolute Relative Error |
| SSIM | Structural Similarity Index Measure |
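
The error metrics listed above can be computed as in the following sketch. It assumes the common depth-completion conventions: only pixels with positive ground truth are evaluated, iRMSE and iMAE are computed on inverse depth, and REL is the mean of |pred − gt|/gt. The function name and the metre-based units are assumptions. This section does not state the units or scaling behind the values reported in the tables.

```python
import math
from typing import Dict, Sequence


def depth_error_metrics(pred: Sequence[float], gt: Sequence[float],
                        eps: float = 1e-6) -> Dict[str, float]:
    """RMSE, MAE, iRMSE, iMAE and REL over pixels with valid ground truth (gt > 0).

    Inputs are flat sequences of depths in one unit (metres assumed here), so
    iRMSE/iMAE come out in 1/metre. Predictions are clamped to eps before inversion.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    n = len(pairs)
    err = [p - g for p, g in pairs]
    inv_err = [1.0 / max(p, eps) - 1.0 / g for p, g in pairs]
    return {
        "RMSE": math.sqrt(sum(e * e for e in err) / n),
        "MAE": sum(abs(e) for e in err) / n,
        "iRMSE": math.sqrt(sum(e * e for e in inv_err) / n),
        "iMAE": sum(abs(e) for e in inv_err) / n,
        "REL": sum(abs(e) / g for e, (_, g) in zip(err, pairs)) / n,
    }
```

In the usage `depth_error_metrics([2.0, 4.0, 5.0], [2.0, 5.0, 0.0])`, the third pixel has no ground truth and is ignored, so the metrics are averaged over two pixels.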

References

1. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4338–4364.

2. Liu, L.; Wang, X.; Yang, X.; Liu, H.; Li, J.; Wang, P. Path planning techniques for mobile robots: Review and prospect. Expert Syst. Appl. 2023, 227, 120254.

3. Zhang, Q.; Yan, F.; Song, W.; Wang, R.; Li, G. Automatic obstacle detection method for the train based on deep learning. Sustainability 2023, 15, 1184.

4. Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity invariant cnns. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

5. Huang, Z.; Fan, J.; Cheng, S.; Yi, S.; Wang, X.; Li, H. Hms-net: Hierarchical multi-scale sparsity-invariant network for sparse depth completion. IEEE Trans. Image Process. 2019, 29, 3429–3441.

6. Lu, K.; Barnes, N.; Anwar, S.; Zheng, L. From depth what can you see? Depth completion via auxiliary image reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11306–11315.

7. Yu, Q.; Chu, L.; Wu, Q.; Pei, L. Grayscale and normal guided depth completion with a low-cost lidar. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 979–983.

8. Lee, J.H.; Kim, C.S. Multi-loss rebalancing algorithm for monocular depth estimation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 785–801.

9. Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep ordinal regression network for monocular depth estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2002–2011.

10. Bhat, S.F.; Alhashim, I.; Wonka, P. Adabins: Depth estimation using adaptive bins. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 4009–4018.

11. Yang, F.; Zhou, Z. Recovering 3d planes from a single image via convolutional neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 85–100.

12. Li, B.; Huang, Y.; Liu, Z.; Zou, D.; Yu, W. StructDepth: Leveraging the structural regularities for self-supervised indoor depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12663–12673.

13. Long, X.; Lin, C.; Liu, L.; Li, W.; Theobalt, C.; Yang, R.; Wang, W. Adaptive surface normal constraint for depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12849–12858.

14. Yin, W.; Liu, Y.; Shen, C.; Yan, Y. Enforcing geometric constraints of virtual normal for depth prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5684–5693.

15. Lee, J.H.; Han, M.K.; Ko, D.W.; Suh, I.H. From big to small: Multi-scale local planar guidance for monocular depth estimation. arXiv 2019, arXiv:1907.10326.

16. Ma, F.; Karaman, S. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4796–4803.

17. Hua, J.; Gong, X. A normalized convolutional neural network for guided sparse depth upsampling. In Proceedings of the IJCAI, Stockholm, Sweden, 13–19 July 2018; pp. 2283–2290.

18. Wang, B.; Feng, Y.; Liu, H. Multi-scale features fusion from sparse LiDAR data and single image for depth completion. Electron. Lett. 2018, 54, 1375–1377.

19. Zhang, Y.; Wei, P.; Li, H.; Zheng, N. Multiscale adaptation fusion networks for depth completion. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

20. Qiu, J.; Cui, Z.; Zhang, Y.; Zhang, X.; Liu, S.; Zeng, B.; Pollefeys, M. Deeplidar: Deep surface normal guided depth prediction for outdoor scene from sparse lidar data and single color image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3313–3322.

21. Hu, M.; Wang, S.; Li, B.; Ning, S.; Fan, L.; Gong, X. Penet: Towards precise and efficient image guided depth completion. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13656–13662.

22. Rho, K.; Ha, J.; Kim, Y. Guideformer: Transformers for image guided depth completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6250–6259.

23. Cheng, X.; Wang, P.; Yang, R. Depth estimation via affinity learned with convolutional spatial propagation network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 103–119.

24. Cheng, X.; Wang, P.; Guan, C.; Yang, R. Cspn++: Learning context and resource aware convolutional spatial propagation networks for depth completion. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10615–10622.

25. Park, J.; Joo, K.; Hu, Z.; Liu, C.K.; So Kweon, I. Non-local spatial propagation network for depth completion. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 120–136.

26. Lin, Y.; Cheng, T.; Zhong, Q.; Zhou, W.; Yang, H. Dynamic spatial propagation network for depth completion. In Proceedings of the AAAI, Online, 22 February–1 March 2022; pp. 1638–1646.

27. Liu, X.; Shao, X.; Wang, B.; Li, Y.; Wang, S. Graphcspn: Geometry-aware depth completion via dynamic gcns. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 90–107.

28. Wang, Y.; Li, B.; Zhang, G.; Liu, Q.; Gao, T.; Dai, Y. LRRU: Long-short Range Recurrent Updating Networks for Depth Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 9422–9432.

29. Imran, S.; Liu, X.; Morris, D. Depth completion with twin surface extrapolation at occlusion boundaries. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2583–2592.

30. Xu, Y.; Zhu, X.; Shi, J.; Zhang, G.; Bao, H.; Li, H. Depth completion from sparse lidar data with depth-normal constraints. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2811–2820.

31. Chen, Y.; Yang, B.; Liang, M.; Urtasun, R. Learning joint 2d-3d representations for depth completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10023–10032.

32. Yu, Z.; Sheng, Z.; Zhou, Z.; Luo, L.; Cao, S.Y.; Gu, H.; Zhang, H.; Shen, H.L. Aggregating Feature Point Cloud for Depth Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 8732–8743.

33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

34. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

35. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

36. Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 568–578.

37. Lee, Y.; Kim, J.; Willette, J.; Hwang, S.J. Mpvit: Multi-path vision transformer for dense prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7287–7296.

38. Xu, W.; Xu, Y.; Chang, T.; Tu, Z. Co-scale conv-attentional image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 9981–9990.

39. Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision transformers for dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12179–12188.

40. Zhang, Y.; Guo, X.; Poggi, M.; Zhu, Z.; Huang, G.; Mattoccia, S. Completionformer: Depth completion with convolutions and vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 18527–18536.

41. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part V 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760.

42. Song, S.; Lichtenberg, S.P.; Xiao, J. Sun rgb-d: A rgb-d scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 567–576.

43. Zhao, C.; Zhang, Y.; Poggi, M.; Tosi, F.; Guo, X.; Zhu, Z.; Huang, G.; Tang, Y.; Mattoccia, S. Monovit: Self-supervised monocular depth estimation with a vision transformer. In Proceedings of the 2022 International Conference on 3D Vision (3DV), Prague, Czech Republic, 12–15 September 2022; pp. 668–678.

44. Guo, J.; Han, K.; Wu, H.; Tang, Y.; Chen, X.; Wang, Y.; Xu, C. Cmt: Convolutional neural networks meet vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12175–12185.

45. Tang, J.; Tian, F.P.; Feng, W.; Li, J.; Tan, P. Learning guided convolutional network for depth completion. IEEE Trans. Image Process. 2020, 30, 1116–1129.

46. Schuster, R.; Wasenmuller, O.; Unger, C.; Stricker, D. Ssgp: Sparse spatial guided propagation for robust and generic interpolation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 197–206.

47. Yan, Z.; Wang, K.; Li, X.; Zhang, Z.; Li, J.; Yang, J. RigNet: Repetitive image guided network for depth completion. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 214–230.

48. Van Gansbeke, W.; Neven, D.; De Brabandere, B.; Van Gool, L. Sparse and noisy lidar completion with rgb guidance and uncertainty. In Proceedings of the 2019 16th International Conference on Machine Vision Applications (MVA), Tokyo, Japan, 27–31 May 2019; pp. 1–6.

49. Lee, S.; Lee, J.; Kim, D.; Kim, J. Deep architecture with cross guidance between single image and sparse lidar data for depth completion. IEEE Access 2020, 8, 79801–79810.

50. Feng, C.; Wang, X.; Zhang, Y.; Zhao, C.; Song, M. CASwin Transformer: A Hierarchical Cross Attention Transformer for Depth Completion. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 2836–2841.

51. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 84–90.

52. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

53. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

54. Liu, L.; Song, X.; Lyu, X.; Diao, J.; Wang, M.; Liu, Y.; Zhang, L. Fcfr-net: Feature fusion based coarse-to-fine residual learning for depth completion. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 2136–2144.

55. Zhao, S.; Gong, M.; Fu, H.; Tao, D. Adaptive context-aware multi-modal network for depth completion. IEEE Trans. Image Process. 2021, 30, 5264–5276.

56. Wang, H.; Wang, M.; Che, Z.; Xu, Z.; Qiao, X.; Qi, M.; Feng, F.; Tang, J. Rgb-depth fusion gan for indoor depth completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6209–6218.

57. Kam, J.; Kim, J.; Kim, S.; Park, J.; Lee, S. Costdcnet: Cost volume based depth completion for a single rgb-d image. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 257–274.

58. Zhou, W.; Yan, X.; Liao, Y.; Lin, Y.; Huang, J.; Zhao, G.; Cui, S.; Li, Z. BEV@DC: Bird’s-Eye View Assisted Training for Depth Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 9233–9242.

59. Senushkin, D.; Romanov, M.; Belikov, I.; Patakin, N.; Konushin, A. Decoder modulation for indoor depth completion. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 2181–2188.

60. Deng, Y.; Deng, X.; Xu, M. A Two-stage hybrid CNN-Transformer Network for RGB Guided Indoor Depth Completion. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 1127–1132.

| Model | Pyramid | NLSPN | RMSE | MAE | iRMSE | iMAE | REL |
|---|---|---|---|---|---|---|---|
| Single-B | ✓ |  | 0.108 | 49.94 | 17.77 | 7.82 | 0.017 |
| Dual-B |  |  | 0.094 | 38.79 | 14.83 | 5.81 | 0.013 |
| Dual-B | ✓ |  | 0.090 | 37.09 | 14.11 | 5.52 | 0.012 |
| Dual-B | ✓ | ✓ | 0.089 | 34.06 | 13.82 | 5.00 | 0.011 |

| Epoch | 1∼25 | 25∼50 | 50∼100 | 1∼25 | 25∼50 | 50∼100 | 1∼25 | 25∼50 | 50∼100 |
|---|---|---|---|---|---|---|---|---|---|
|  | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
|  | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0.5 | 0 |
|  | 0 | 0 | 0 | 0.2 | 0.2 | 0.2 | 0.2 | 0 | 0 |
|  | 0 | 0 | 0 | 0.2 | 0.2 | 0.2 | 0.2 | 0 | 0 |
| RMSE | 0.096 |  |  | 0.095 |  |  | 0.089 |  |  |
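
Read column-wise, the third group of the loss-weight table describes a schedule in which some loss terms are down-weighted and then switched off as training proceeds. The following is a minimal sketch of such an epoch-dependent weighting. The loss-term names were lost in extraction, so `term_1` to `term_4` are hypothetical placeholders for the four table rows, in order. Assigning the shared range endpoints (epochs 25 and 50) to the later range is also an assumption.

```python
from typing import Dict, List, Tuple

# (exclusive end epoch, weights) transcribed from the third column group of the table.
# "term_1".."term_4" are hypothetical names for the four table rows, whose labels were lost.
SCHEDULE: List[Tuple[int, Dict[str, float]]] = [
    (25, {"term_1": 1.0, "term_2": 1.0, "term_3": 0.2, "term_4": 0.2}),
    (50, {"term_1": 1.0, "term_2": 0.5, "term_3": 0.0, "term_4": 0.0}),
    (101, {"term_1": 1.0, "term_2": 0.0, "term_3": 0.0, "term_4": 0.0}),
]


def loss_weights(epoch: int) -> Dict[str, float]:
    """Weights for a 1-based epoch; epochs 25 and 50 fall into the later range (assumption)."""
    for end, weights in SCHEDULE:
        if 1 <= epoch < end:
            return dict(weights)
    raise ValueError(f"epoch {epoch} is outside the 1-100 schedule")


def total_loss(terms: Dict[str, float], epoch: int) -> float:
    """Weighted sum of per-term loss values at the given epoch."""
    weights = loss_weights(epoch)
    return sum(weights[name] * value for name, value in terms.items())
```

The first column group (only `term_1` active) and the second (constant weights) correspond to `SCHEDULE` entries with the same weights in every range.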

| Conv | Trans | RMSE | MAE | iRMSE | iMAE | REL |
|---|---|---|---|---|---|---|
| ✓ |  | 0.095 | 38.71 | 14.92 | 5.73 | 0.013 |
|  | ✓ | 0.094 | 38.80 | 14.57 | 5.73 | 0.013 |
| ✓ | ✓ | 0.090 | 37.09 | 14.11 | 5.52 | 0.012 |
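
This section does not specify the internals of the convolution-based ("Conv") attention branch in this ablation. However, the abbreviation list (CA, SA) and the CBAM reference [53] suggest channel and spatial attention. The following is a minimal pure-Python sketch that assumes a CBAM-style design: channel attention is applied first, followed by spatial attention. Weights are passed in explicitly, and no biases are used. The kernel size is left to the caller (CBAM itself uses 7 × 7). All function names are hypothetical.

```python
import math
from typing import List

Tensor3 = List[List[List[float]]]  # indexed [channel][row][col]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(x: Tensor3, w1: List[List[float]], w2: List[List[float]]) -> List[float]:
    """CBAM-style CA: sigmoid(MLP(avg-pool) + MLP(max-pool)), one weight per channel."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(r) for r in ch) for ch in x]

    def mlp(v: List[float]) -> List[float]:
        hidden = [max(0.0, sum(w * a for w, a in zip(row, v))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(x: Tensor3, kernel: Tensor3) -> List[List[float]]:
    """CBAM-style SA: sigmoid(conv([channel-mean; channel-max])) with zero padding."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    mean_map = [[sum(x[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    max_map = [[max(x[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    maps = [mean_map, max_map]
    ks = len(kernel[0])
    pad = ks // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for m in range(2):
                for di in range(ks):
                    for dj in range(ks):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            acc += kernel[m][di][dj] * maps[m][ii][jj]
            row.append(sigmoid(acc))
        out.append(row)
    return out


def cbam(x: Tensor3, w1: List[List[float]], w2: List[List[float]], kernel: Tensor3) -> Tensor3:
    """Channel attention followed by spatial attention, as in CBAM."""
    ca = channel_attention(x, w1, w2)
    x = [[[v * ca[k] for v in r] for r in x[k]] for k in range(len(x))]
    sa = spatial_attention(x, kernel)
    return [[[v * sa[i][j] for j, v in enumerate(r)] for i, r in enumerate(ch)] for ch in x]
```

With all-zero weights, both attention maps equal sigmoid(0) = 0.5, so the output is the input scaled by 0.25. This is a convenient sanity check.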

| Q | K | V | RMSE | MAE | iRMSE | iMAE | REL |
|---|---|---|---|---|---|---|---|
|  |  |  | 0.090 | 37.09 | 14.11 | 5.52 | 0.012 |
|  |  |  | 0.092 | 37.15 | 14.25 | 5.37 | 0.012 |
|  |  |  | 0.092 | 36.71 | 14.29 | 5.37 | 0.012 |

| Methods | RMSE ↓ | REL ↓ | δ < 1.25 ↑ | δ < 1.25² ↑ | δ < 1.25³ ↑ |
|---|---|---|---|---|---|
| CSPN [23] | 0.115 | 0.022 | 99.2 | 99.9 | 100.0 |
| Deeplidar [20] | 0.115 | 0.022 | 99.3 | 99.9 | 100.0 |
| DepthNormal [30] | 0.112 | 0.018 | 99.5 | 99.9 | 100.0 |
| FCFR-net [54] | 0.106 | 0.015 | 99.5 | 99.9 | 100.0 |
| ACMNet [55] | 0.105 | 0.015 | 99.4 | 99.9 | 100.0 |
| RGBD-FusionGAN [56] | 0.103 | 0.016 | 99.4 | 99.9 | 100.0 |
| GuideNet [45] | 0.101 | 0.015 | 99.5 | 99.9 | 100.0 |
| CostDCNet [57] | 0.096 | 0.013 | 99.5 | 99.9 | 100.0 |
| NLSPN [25] | 0.092 | 0.012 | 99.6 | 99.9 | 100.0 |
| TWISE [29] | 0.092 | 0.012 | 99.6 | 99.9 | 100.0 |
| LRRU [28] | 0.091 | 0.011 | 99.6 | 99.9 | 100.0 |
| RigNet [47] | 0.090 | 0.013 | 99.6 | 99.9 | 100.0 |
| DySPN [26] | 0.090 | 0.012 | 99.6 | 99.9 | 100.0 |
| GraphCSPN [27] | 0.090 | 0.012 | 99.6 | 99.9 | - |
| CompletionFormer [40] | 0.090 | 0.012 | - | - | - |
| BEVDC [58] | 0.089 | 0.012 | 99.6 | 99.9 | 100.0 |
| PointDC [32] | 0.089 | 0.012 | 99.6 | 99.9 | 100.0 |
| Ours* | 0.090 | 0.012 | 99.6 | 99.9 | 100.0 |
| Ours | 0.089 | 0.011 | 99.6 | 99.9 | 100.0 |
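
The three accuracy columns of the NYUv2 comparison follow the usual threshold-accuracy convention for depth benchmarks. Each column is the percentage of valid pixels whose ratio max(pred/gt, gt/pred) is below 1.25, 1.25², and 1.25³, respectively. The following small sketch assumes that positive ground truth marks a valid pixel. It clamps non-positive predictions to a small epsilon so that such pixels count as failures. The function name is hypothetical.

```python
from typing import Dict, Sequence


def threshold_accuracy(pred: Sequence[float], gt: Sequence[float],
                       base: float = 1.25, eps: float = 1e-6) -> Dict[str, float]:
    """Percentage of valid pixels (gt > 0) with max(pred/gt, gt/pred) < base**k, k = 1, 2, 3."""
    ratios = []
    for p, g in zip(pred, gt):
        if g > 0:
            p = max(p, eps)  # a non-positive prediction yields a huge ratio, i.e. a failure
            ratios.append(max(p / g, g / p))
    if not ratios:
        raise ValueError("no valid ground-truth pixels")
    n = len(ratios)
    return {f"delta{k}": 100.0 * sum(r < base ** k for r in ratios) / n for k in (1, 2, 3)}
```

For example, with ratios 1.0, 1.2, 1.5 and 2.0, two pixels pass the 1.25 threshold and three pass 1.25² ≈ 1.56. Only those same three pass 1.25³ ≈ 1.95.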

| Methods | RMSE ↓ | MAE ↓ | REL ↓ | SSIM ↑ | ↑ |
|---|---|---|---|---|---|
| CSPN [23] | 0.116 | 0.039 | 0.027 | 97.9 | 91.4 |
| Sparse2Dense [16] | 0.118 | 0.040 | 0.027 | 98.0 | 90.7 |
| FusionNet [48] | 0.129 | 0.025 | 0.020 | 78.9 | 94.7 |
| GuideNet [45] | 0.088 | 0.027 | 0.018 | 75.3 | 93.9 |
| DM-LRN [59] | 0.098 | 0.027 | 0.017 | 98.8 | 94.8 |
| FCFR-Net [54] | 0.088 | 0.027 | 0.020 | 98.9 | 95.0 |
| TS-Net [60] | 0.086 | 0.024 | 0.019 | 99.1 | 95.8 |
| Ours | 0.082 | 0.020 | 0.016 | 98.0 | 96.4 |

| Models | Inference Time | Inference Memory | Model Size | RMSE |
|---|---|---|---|---|
| Single-B | 0.073 s | 2444 M | 177 M | 0.108 |
| Dual-B | 0.166 s | 2830 M | 468 M | 0.090 |
| Dual-B + NLSPN | 0.191 s | 3138 M | 469 M | 0.089 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Jiang, F.; Gong, X. A Transformer-Based Image-Guided Depth-Completion Model with Dual-Attention Fusion Module. Sensors 2024, 24, 6270. https://doi.org/10.3390/s24196270


Chicago/Turabian Style: Wang, Shuling, Fengze Jiang, and Xiaojin Gong. 2024. "A Transformer-Based Image-Guided Depth-Completion Model with Dual-Attention Fusion Module" Sensors 24, no. 19: 6270. https://doi.org/10.3390/s24196270
