Experiment Study on Rock Mass Classification Based on RCM-Equipped Sensors and Diversified Ensemble-Learning Model

Abstract

1. Introduction

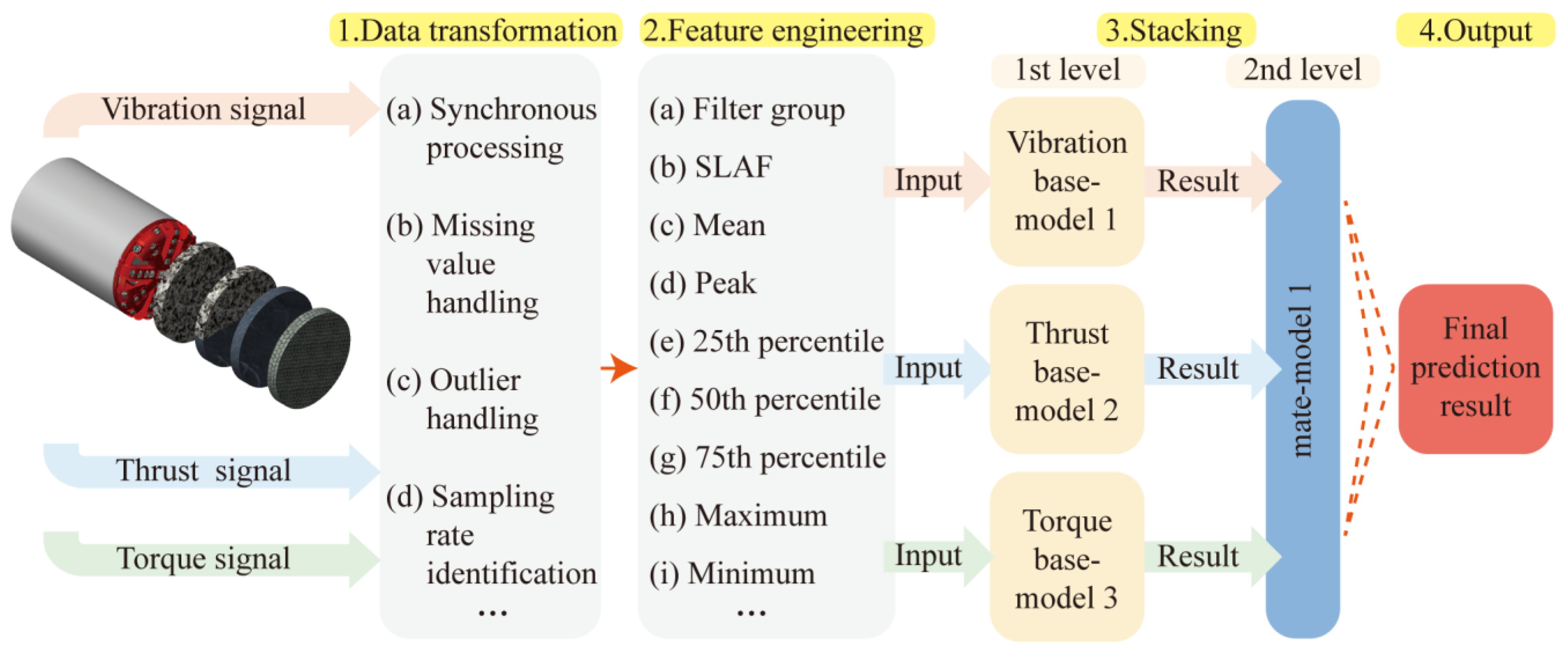

2. Materials and Methods

2.1. Data Transformation

2.2. Feature Engineering Based on TBM Parameters

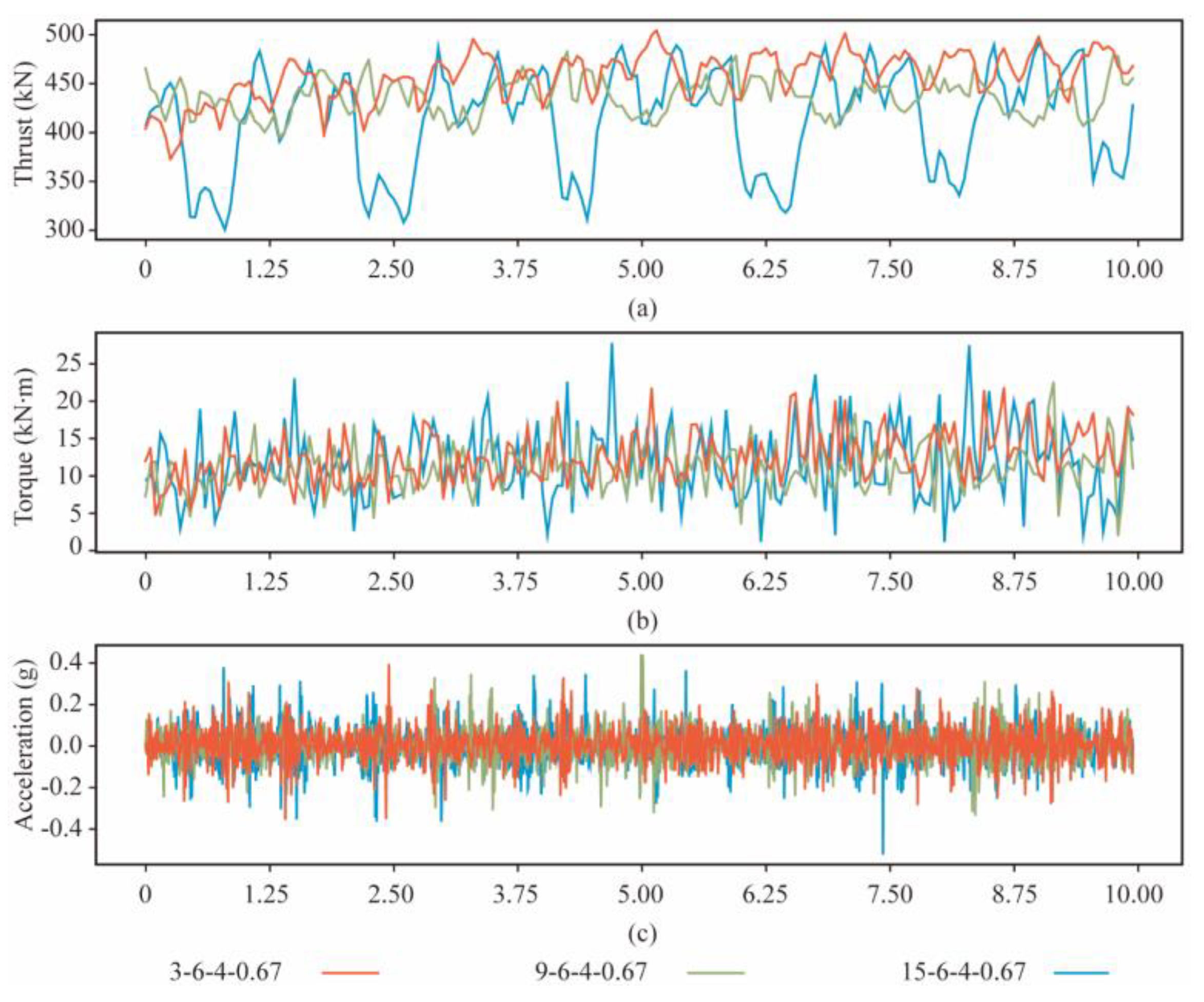

2.2.1. Torque and Thrust

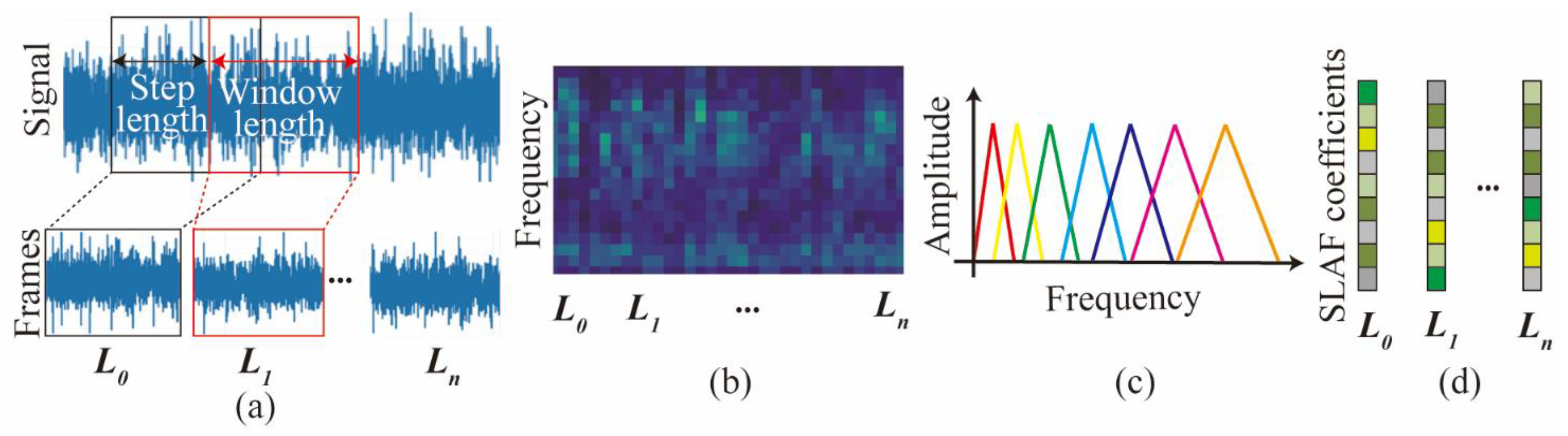

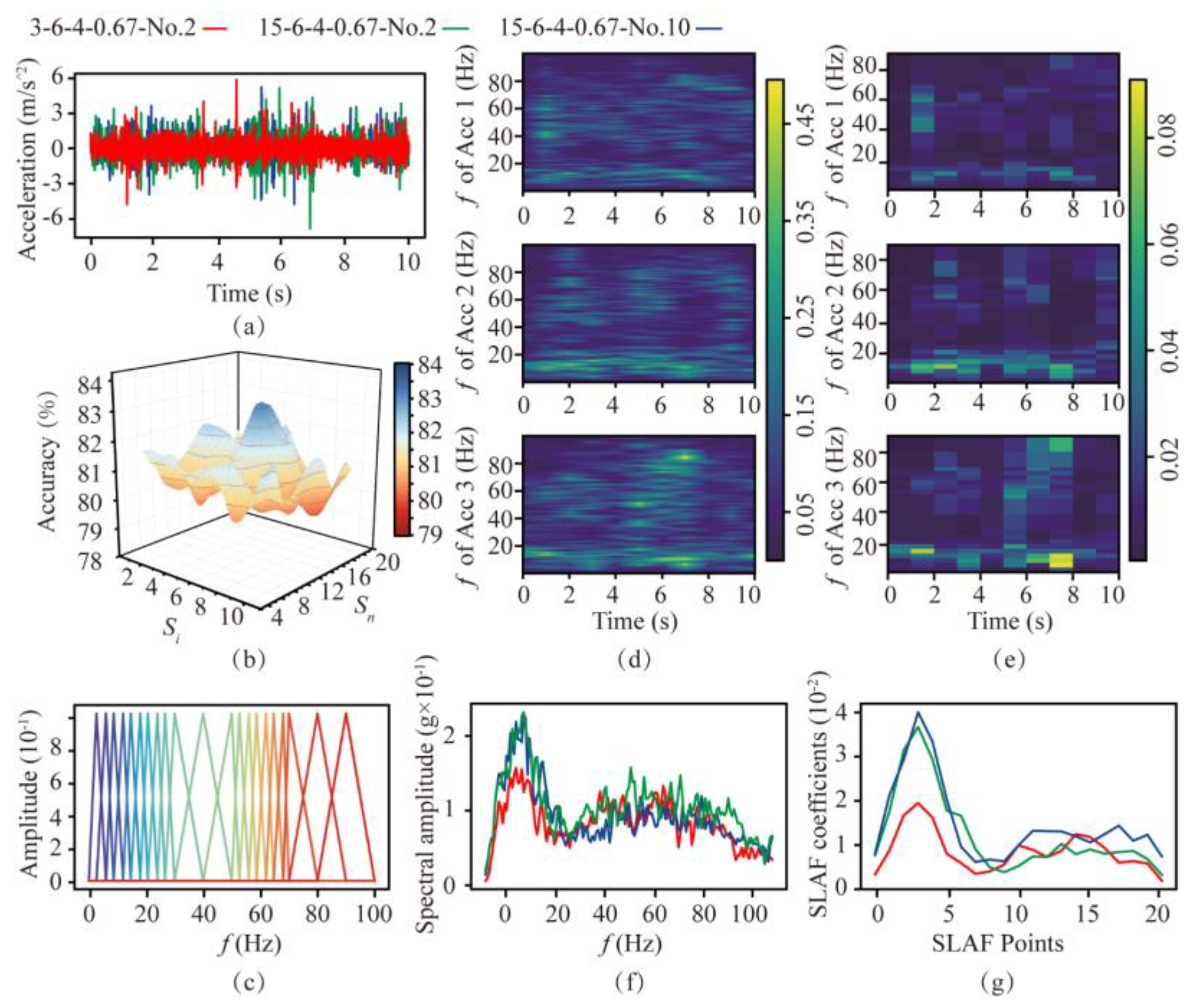

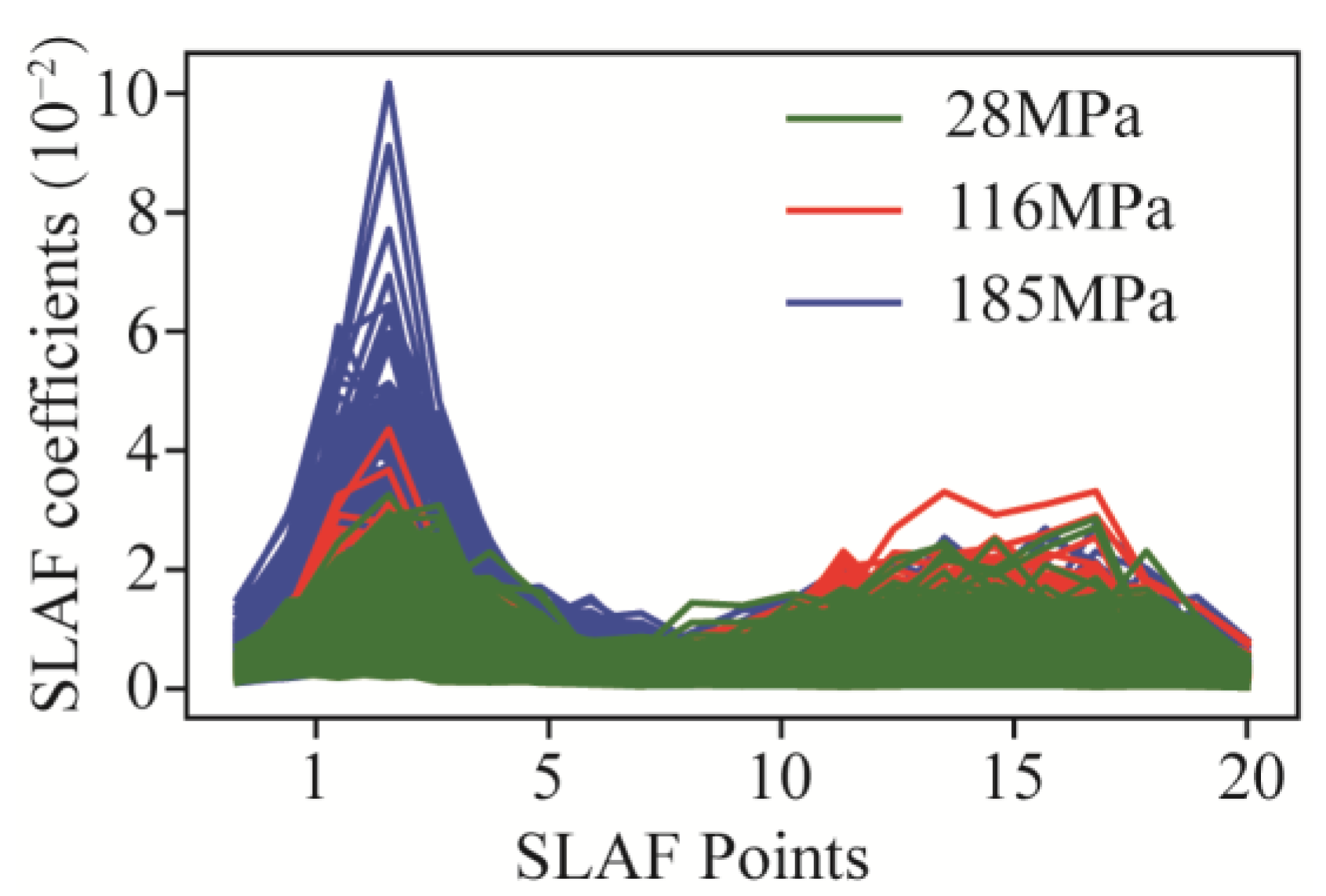

2.2.2. Vibration

2.2.3. TBM Performance

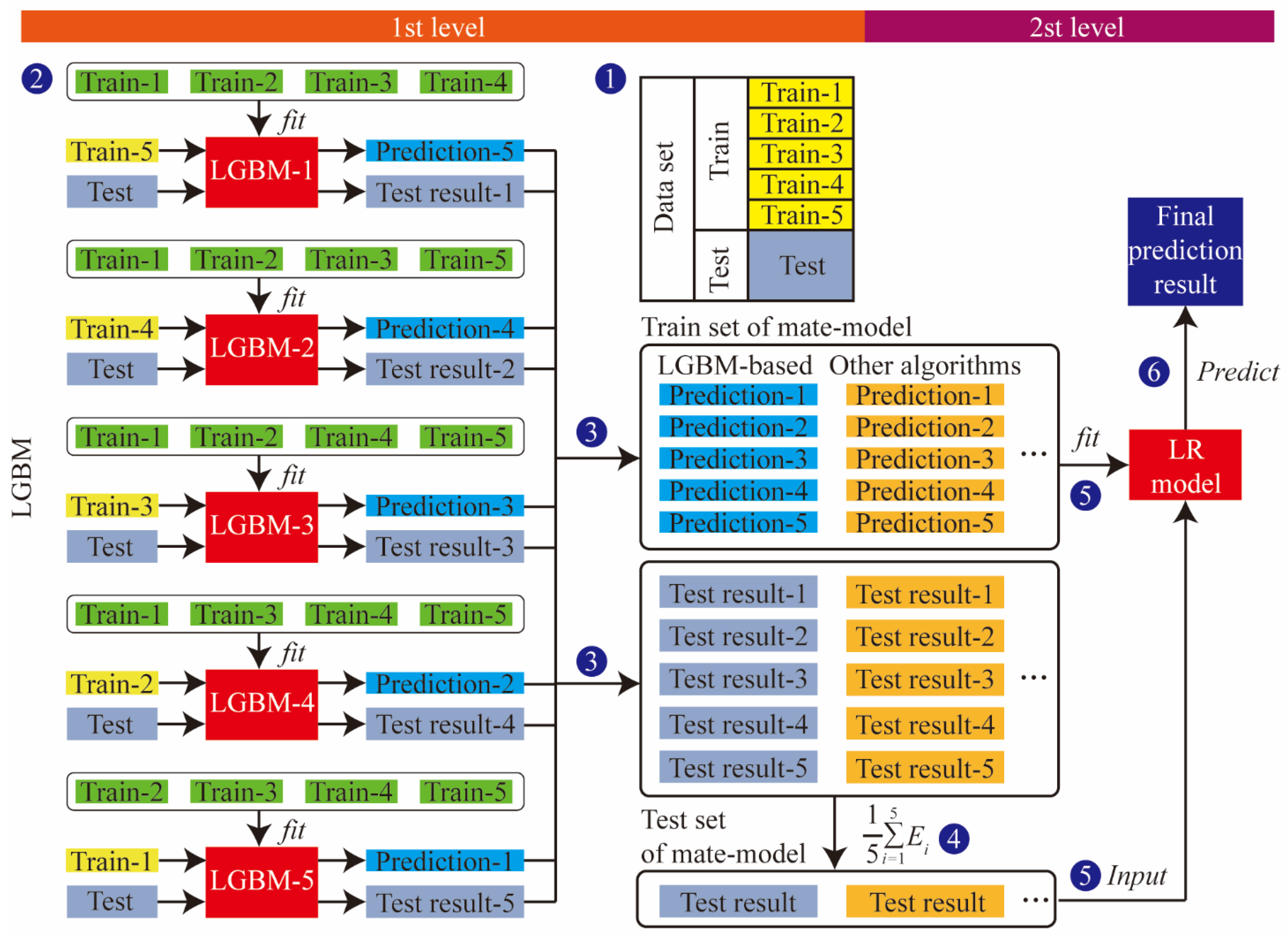

2.3. Stacking Ensemble-Learning Model and the Workflow

2.4. Performance Metrics Introduction

3. Experiment and Datasets

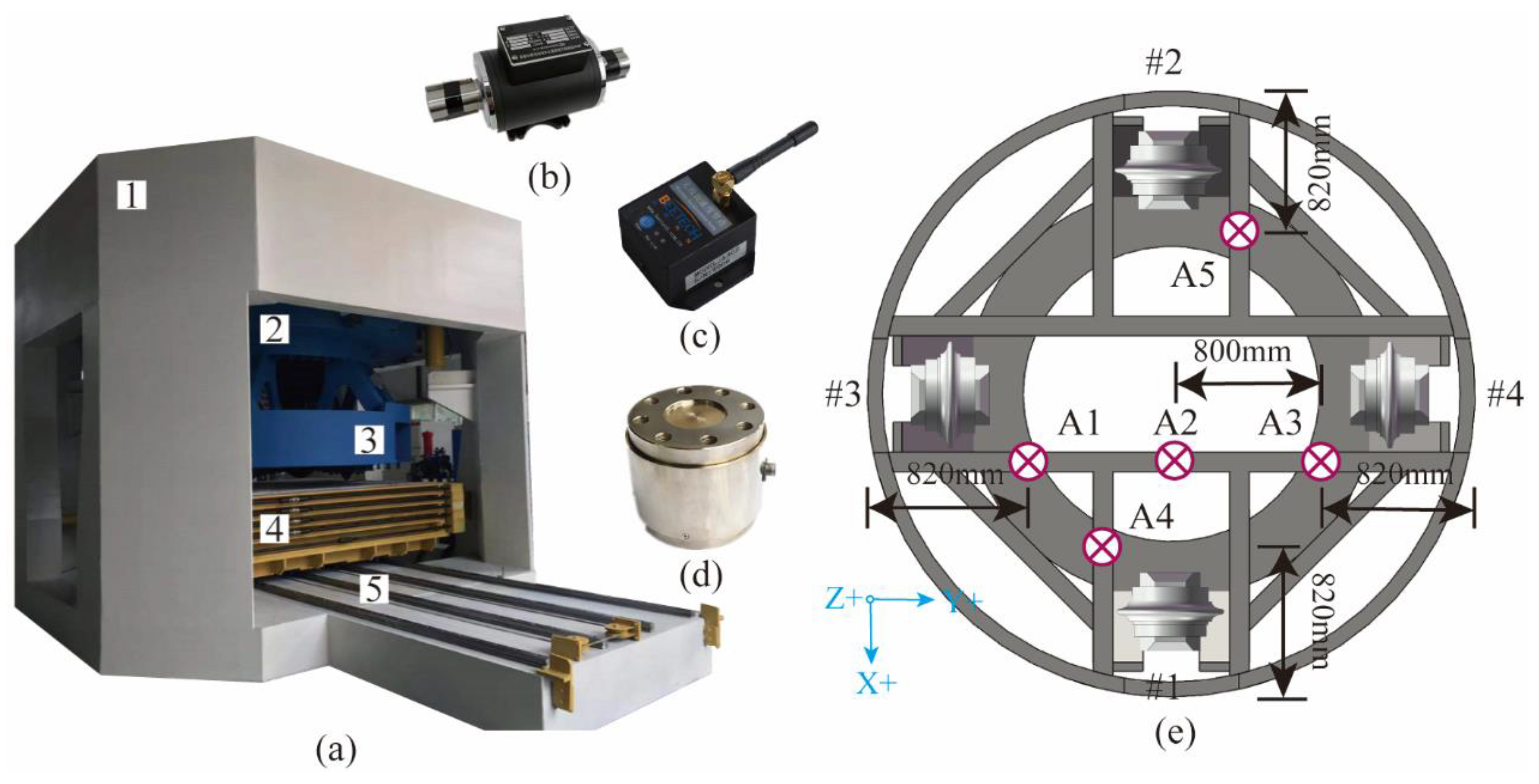

3.1. Experiment Setup

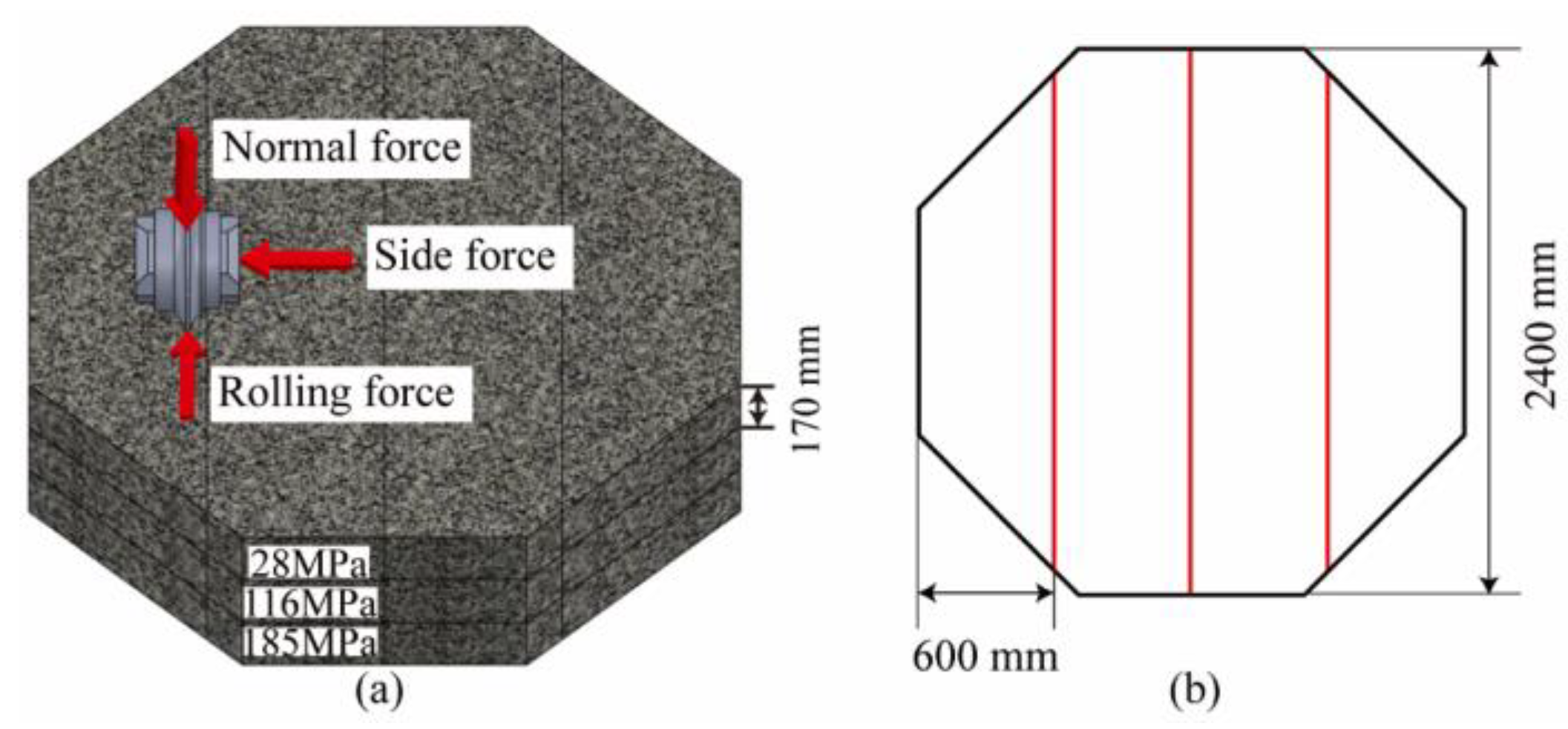

3.1.1. Multi-Mode Boring Test System

3.1.2. Preparation of Rock Specimens

3.1.3. Testing Procedure

3.2. Statistics and Analysis of Datasets

3.2.1. Datasets Division

3.2.2. Discussion on Distribution of the Filter Group

3.2.3. Statistical Features of Data

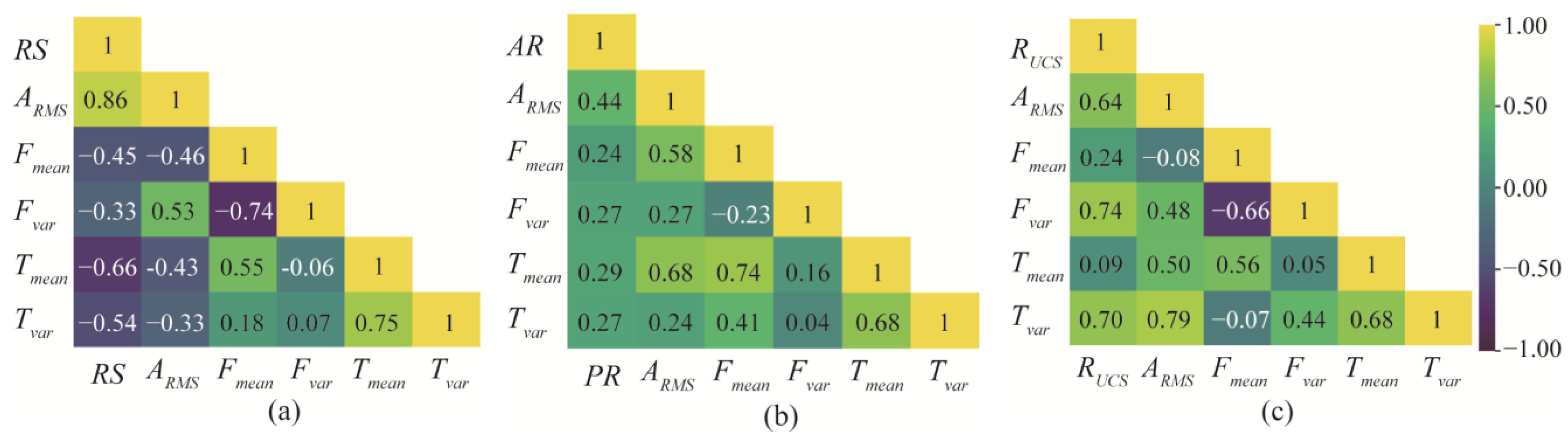

3.2.4. Pearson Correlation Coefficient
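This section is headed by the Pearson correlation coefficient, r = Σ(xᵢ − x̄)(yᵢ − ȳ) / √(Σ(xᵢ − x̄)² · Σ(yᵢ − ȳ)²). The dependency-free sketch below implements that standard formula and applies it pairwise to feature columns. The function names and the dict-of-columns input layout are illustrative assumptions, not the authors' code.

```python
import math
from typing import Dict, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variance sums; the common 1/n factors cancel in the ratio
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant input")
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(
    features: Dict[str, Sequence[float]]
) -> Dict[Tuple[str, str], float]:
    """Pairwise Pearson r for every (feature, feature) pair of named columns."""
    names = list(features)
    return {(a, b): pearson_r(features[a], features[b]) for a in names for b in names}
```

Feeding in columns such as FPeak, Fvar or AQ2 (one value per sample) would give a symmetric matrix with ones on the diagonal, which is the usual input to a correlation heat map.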

4. Model

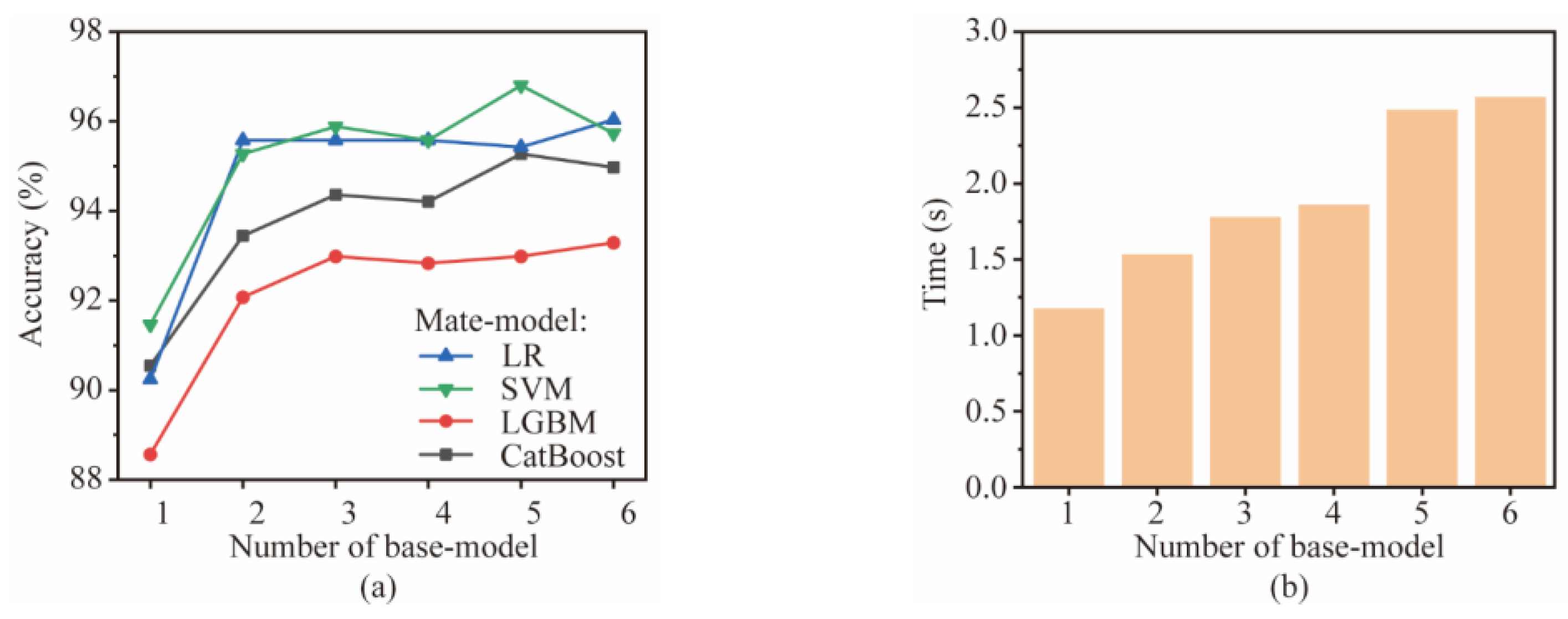

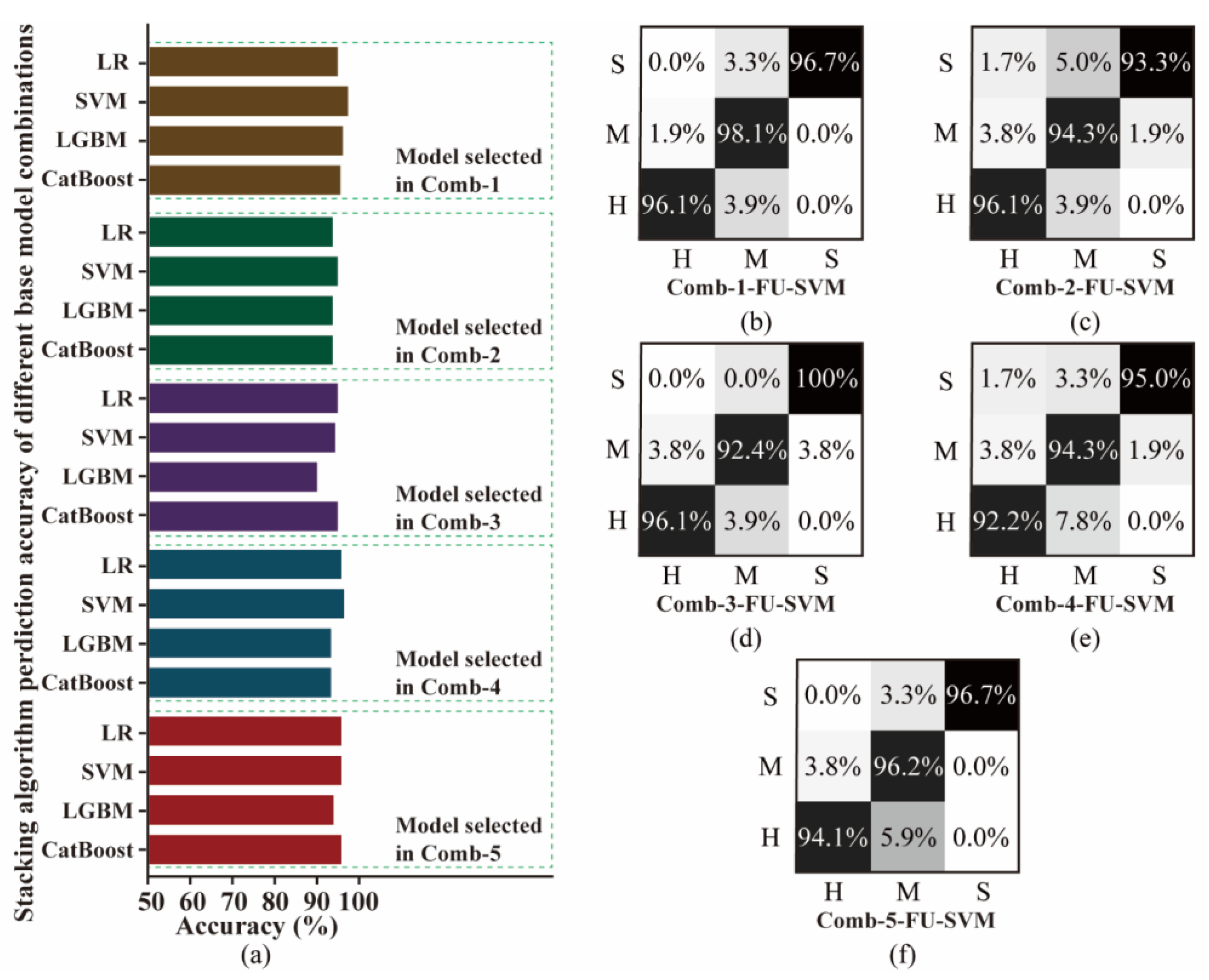

4.1. Model Selection and Combination

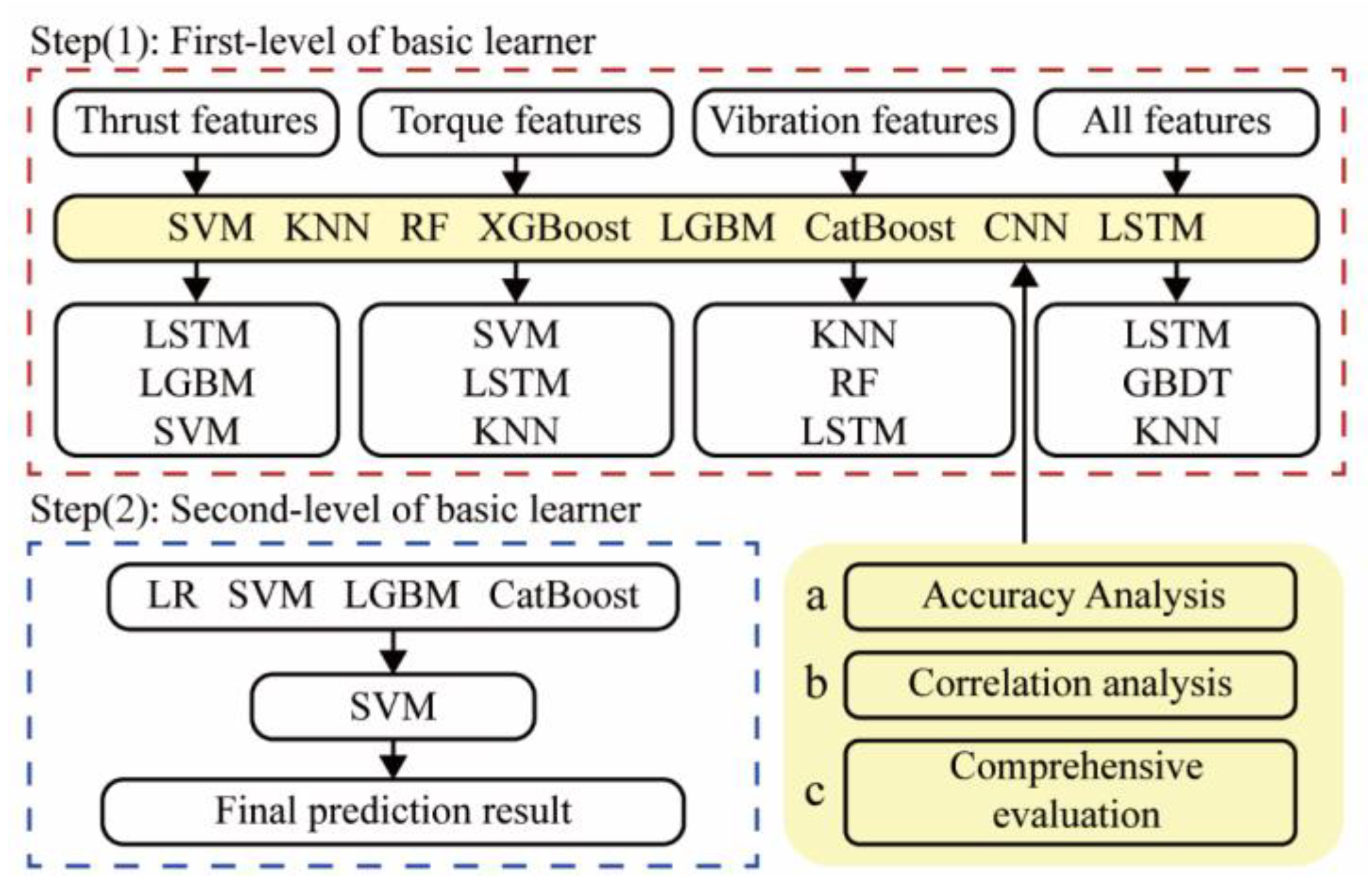

4.2. Feature Importance Identification

4.3. Establishment of Stacking Ensemble-Learning Model

5. Results and Discussion

5.1. Accuracy

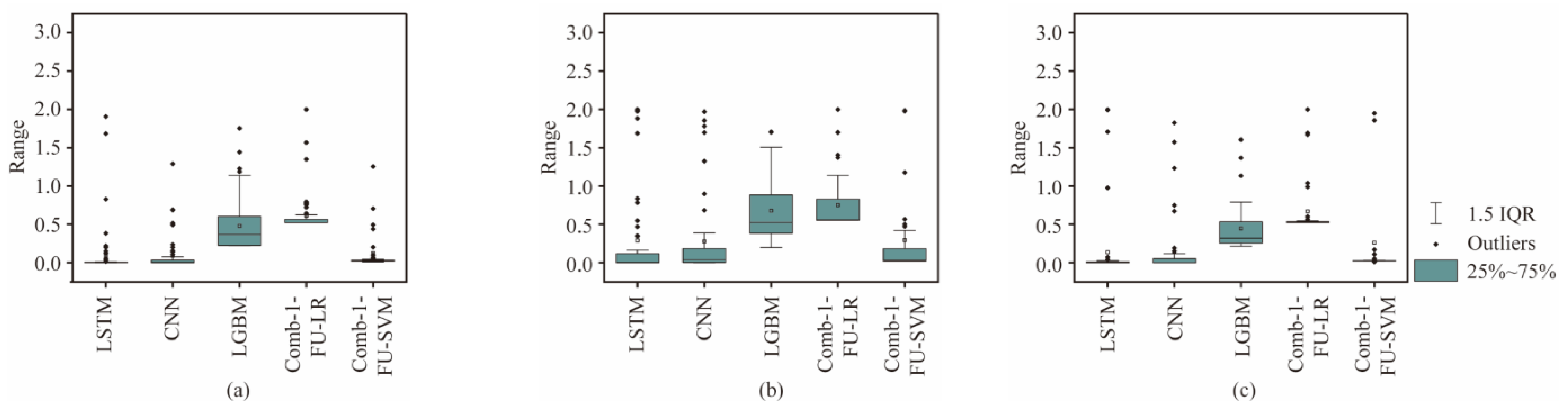

5.2. Stability

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


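| Feature | Formula | Feature | Formula |
|---|---|---|---|
| Signal | $x_1, x_2, \ldots, x_n$ | PQ1 | $1 + (n - 1) \times 0.25$ |
| Max | $\max(\lvert x_1\rvert, \lvert x_2\rvert, \ldots, \lvert x_n\rvert)$ | PQ2 | $1 + (n - 1) \times 0.50$ |
| Min | $\min(\lvert x_1\rvert, \lvert x_2\rvert, \ldots, \lvert x_n\rvert)$ | PQ3 | $1 + (n - 1) \times 0.75$ |
| Peak | $\max(\lvert x_1\rvert, \ldots, \lvert x_n\rvert) - \min(\lvert x_1\rvert, \ldots, \lvert x_n\rvert)$ | Mean | |
| Var | | RMS | |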
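The feature-formula table above defines per-window statistics of a sensor signal. The plain-Python sketch below computes them for one window under stated assumptions: the quartiles are read from the signed samples sorted in ascending order at the 1-based positions PQ1 to PQ3, with linear interpolation when a position is fractional, and Mean, Var and RMS take their usual definitions (population variance), because the table does not print those three formulas. The function names are hypothetical.

```python
import math
from typing import Dict, Sequence


def quantile_at_position(sorted_x: Sequence[float], p: float) -> float:
    """Value at the 1-based position 1 + (n - 1) * p, linearly interpolated."""
    n = len(sorted_x)
    pos = 1 + (n - 1) * p          # PQ position as defined in the table
    lo = math.floor(pos)
    frac = pos - lo
    if lo >= n:                    # position at (or past) the last sample
        return sorted_x[-1]
    # Convert to 0-based indices and interpolate between the two neighbours
    return sorted_x[lo - 1] + frac * (sorted_x[lo] - sorted_x[lo - 1])


def window_features(x: Sequence[float]) -> Dict[str, float]:
    """Statistical features of one signal window x_1..x_n (illustrative sketch)."""
    if not x:
        raise ValueError("empty window")
    n = len(x)
    abs_x = [abs(v) for v in x]
    mean = sum(x) / n
    s = sorted(x)
    feats = {
        "Max": max(abs_x),          # max |x_i|
        "Min": min(abs_x),          # min |x_i|
        "Mean": mean,               # assumed arithmetic mean of signed samples
        "Var": sum((v - mean) ** 2 for v in x) / n,   # assumed population variance
        "RMS": math.sqrt(sum(v * v for v in x) / n),  # assumed root mean square
        "Q1": quantile_at_position(s, 0.25),
        "Q2": quantile_at_position(s, 0.50),
        "Q3": quantile_at_position(s, 0.75),
    }
    feats["Peak"] = feats["Max"] - feats["Min"]
    return feats
```

Applied to each thrust, torque and acceleration window, this yields the kind of F*, T* and A* feature columns summarised in the statistics table later in the document.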

| Types | TBM General Properties | Value |
|---|---|---|
| Cutterhead operating parameters | Number of cutters | 4 (single-disc) |
| | Rotational speed | 0–8 rev/min |
| | Advance rate | 0.05–50 mm/min |
| | Displacement | 900 mm |
| Cutter sensor | Normal (thrust) force | 0–500 kN |
| | Cutting force | 0–200 kN |
| | Side force | 0–200 kN |
| Vibration sensor | Range | 0–10 g |
| | Frequency | 0–2000 Hz |

| Test Code | UCS (MPa) | VPstart (mm) | VPend (mm) | AR (mm/min) | RS (rev/min) | PR (mm/rev) |
|---|---|---|---|---|---|---|
| 1-4-4 | 28 | 20 | 40 | 4 | 4 | 1.00 |
| 2-5-4 | 28 | 40 | 60 | 4 | 5 | 0.80 |
| 3-6-4 | 28 | 60 | 80 | 4 | 6 | 0.67 |
| 4-4-6 | 28 | 80 | 100 | 6 | 4 | 1.50 |
| 5-4-5 | 28 | 100 | 120 | 5 | 4 | 1.25 |
| 6-6-8 | 28 | 120 | 140 | 8 | 6 | 1.33 |
| 7-4-4 | 117 | 190 | 210 | 4 | 4 | 1.00 |
| 8-5-4 | 117 | 210 | 230 | 4 | 5 | 0.80 |
| 9-6-4 | 117 | 230 | 250 | 4 | 6 | 0.67 |
| 10-4-3 | 117 | 250 | 270 | 3 | 4 | 0.75 |
| 11-4-2 | 117 | 270 | 290 | 2 | 4 | 0.50 |
| 12-4-5 | 117 | 290 | 310 | 5 | 4 | 1.25 |
| 13-4-4 | 185 | 360 | 380 | 4 | 4 | 1.00 |
| 14-5-4 | 185 | 380 | 400 | 4 | 5 | 0.80 |
| 15-6-4 | 185 | 400 | 420 | 4 | 6 | 0.67 |
| 16-4-3 | 185 | 420 | 440 | 3 | 4 | 0.75 |
| 17-4-2 | 185 | 440 | 460 | 2 | 4 | 0.50 |
| 18-4-5 | 185 | 460 | 480 | 5 | 4 | 1.25 |
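In the test-parameter table above, every PR value equals AR divided by RS to two decimals (mm/min ÷ rev/min = mm/rev), and each test code appears to follow the pattern No.-RS-AR (for example, 4-4-6 has RS = 4 rev/min and AR = 6 mm/min). The small sketch below decodes a code under that inferred convention and recomputes PR. The class and function names are hypothetical.

```python
from typing import NamedTuple


class BoringTest(NamedTuple):
    """One row of the test-parameter table (illustrative field names)."""
    number: int
    rs: float  # rotational speed, rev/min
    ar: float  # advance rate, mm/min


def parse_test_code(code: str) -> BoringTest:
    """Split a code such as '4-4-6' into (test no., RS, AR).

    Assumption: codes read No.-RS-AR, which is consistent with every row.
    """
    number, rs, ar = (int(part) for part in code.split("-"))
    return BoringTest(number, float(rs), float(ar))


def penetration_rate(ar_mm_per_min: float, rs_rev_per_min: float) -> float:
    """Penetration per revolution, PR = AR / RS, in mm/rev."""
    if rs_rev_per_min <= 0:
        raise ValueError("rotational speed must be positive")
    return ar_mm_per_min / rs_rev_per_min
```

For example, code 6-6-8 decodes to RS = 6 rev/min and AR = 8 mm/min, giving PR ≈ 1.33 mm/rev, the value printed for that test.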

| Feature | Mean | Stdev | Min | Q1 | Q2 | Q3 | Max |
|---|---|---|---|---|---|---|---|
| FPI | 157.2 | 42.1 | 68.7 | 136.6 | 154.3 | 170.8 | 249.1 |
| TPI | 4.5 | 1.1 | 1.6 | 3.9 | 4.5 | 5.4 | 7.4 |
| Apeak | 8.1 | 2.5 | 3.0 | 6.2 | 7.8 | 9.9 | 14.4 |
| Amean | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.1 |
| Avar | 0.5 | 0.3 | 0.1 | 0.2 | 0.4 | 0.7 | 1.1 |
| ARMS | 0.6 | 0.2 | 0.3 | 0.5 | 0.7 | 0.8 | 1.1 |
| AQ1 | −0.3 | 0.1 | −0.6 | −0.4 | −0.3 | −0.2 | −0.1 |
| AQ2 | 0.0 | 0.0 | −0.1 | 0.0 | 0.0 | 0.0 | 0.1 |
| AQ3 | 0.3 | 0.1 | 0.1 | 0.2 | 0.3 | 0.5 | 0.6 |
| FPeak | 94.0 | 29.1 | 46.3 | 72.2 | 87.4 | 110.4 | 194.9 |
| Fmean | 457.4 | 24.2 | 343.4 | 440.8 | 460.0 | 473.9 | 514.4 |
| Fvar | 721.5 | 529.3 | 151.7 | 359.6 | 554.0 | 901.4 | 3032.0 |
| FRMS | 458.2 | 24.0 | 345.0 | 441.3 | 460.6 | 474.6 | 515.1 |
| FQ1 | 440.0 | 28.2 | 321.2 | 423.4 | 444.4 | 459.4 | 499.7 |
| FQ2 | 459.3 | 24.1 | 338.3 | 442.0 | 462.5 | 477.0 | 517.5 |
| FQ3 | 475.2 | 22.7 | 366.4 | 461.9 | 476.8 | 491.3 | 537.2 |
| TPeak | 25.5 | 11.6 | 9.3 | 17.7 | 22.7 | 32.2 | 62.6 |
| Tmean | 13.3 | 2.3 | 7.8 | 11.5 | 12.9 | 14.8 | 19.6 |
| Tvar | 51.9 | 47.6 | 4.8 | 17.9 | 35.2 | 73.4 | 264.5 |
| TRMS | 14.9 | 3.1 | 8.6 | 12.4 | 14.4 | 17.1 | 23.2 |
| TQ1 | 8.6 | 2.5 | 1.1 | 7.0 | 8.5 | 10.0 | 15.6 |
| TQ2 | 12.8 | 2.4 | 7.2 | 11.1 | 12.3 | 14.4 | 22.2 |
| TQ3 | 17.1 | 3.5 | 10.0 | 14.3 | 16.5 | 19.5 | 28.1 |
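The statistics table above summarises each feature across all samples with seven numbers. If its quartiles use the same positions as PQ1 to PQ3 in the feature-formula table, Python's `statistics.quantiles(..., method="inclusive")` reproduces them, because that method interpolates at the 1-based position 1 + (n − 1)p. Whether Stdev is the sample or the population value is not stated, so the sample version below is an assumption, as is the function name.

```python
import statistics
from typing import Dict, Sequence


def summarize(values: Sequence[float]) -> Dict[str, float]:
    """Seven-number summary in the column order of the statistics table."""
    if len(values) < 2:
        raise ValueError("need at least two samples")
    # 'inclusive' interpolates at 1 + (n - 1) * p, matching PQ1..PQ3
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "Mean": statistics.fmean(values),
        "Stdev": statistics.stdev(values),  # sample standard deviation (assumed)
        "Min": min(values),
        "Q1": q1,
        "Q2": q2,
        "Q3": q3,
        "Max": max(values),
    }
```

Swapping `statistics.stdev` for `statistics.pstdev` gives the population variant if that turns out to be the convention used.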

| Model | No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| RF | Feature | Fpeak | AQ2 | Fvar | AQ3 | Tvar | Tpeak | A65 | TQ1 |
| | Importance | 0.15 | 0.12 | 0.12 | 0.06 | 0.06 | 0.05 | 0.05 | 0.05 |
| GBDT | Feature | Fpeak | AQ2 | Tvar | TQ1 | FPI | Tpeak | Fvar | AQ3 |
| | Importance | 0.26 | 0.21 | 0.08 | 0.05 | 0.05 | 0.05 | 0.04 | 0.03 |
| XGBoost | Feature | Fpeak | AQ2 | A68 | Tpeak | TPI | TQ1 | FPI | Tvar |
| | Importance | 0.29 | 0.28 | 0.16 | 0.07 | 0.07 | 0.05 | 0.05 | 0.05 |
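One quick way to read the feature-importance table is to look for features that all three tree models place in their top eight. The sketch below transcribes the printed values (A65 and A68 kept verbatim) and orders the shared features by their mean importance. Averaging importances across models is an illustrative choice made here, not the authors' procedure, and the names are hypothetical.

```python
from typing import Dict, List

# Top-8 importances transcribed from the feature-importance table.
TOP8: Dict[str, Dict[str, float]] = {
    "RF": {"Fpeak": 0.15, "AQ2": 0.12, "Fvar": 0.12, "AQ3": 0.06,
           "Tvar": 0.06, "Tpeak": 0.05, "A65": 0.05, "TQ1": 0.05},
    "GBDT": {"Fpeak": 0.26, "AQ2": 0.21, "Tvar": 0.08, "TQ1": 0.05,
             "FPI": 0.05, "Tpeak": 0.05, "Fvar": 0.04, "AQ3": 0.03},
    "XGBoost": {"Fpeak": 0.29, "AQ2": 0.28, "A68": 0.16, "Tpeak": 0.07,
                "TPI": 0.07, "TQ1": 0.05, "FPI": 0.05, "Tvar": 0.05},
}


def consensus_features(top: Dict[str, Dict[str, float]]) -> List[str]:
    """Features present in every model's top list, by descending mean importance."""
    common = set.intersection(*(set(scores) for scores in top.values()))
    mean_importance = {f: sum(s[f] for s in top.values()) / len(top) for f in common}
    return sorted(common, key=lambda f: mean_importance[f], reverse=True)
```

For the transcribed values it returns Fpeak, AQ2, Tvar, Tpeak and TQ1, in that order: one thrust feature, one vibration feature and three torque features.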

| Name | Selection Type | Thrust | Torque | Vibration | All |
|---|---|---|---|---|---|
| Comb-1 | Diverse | LSTM | SVM | KNN | LSTM |
| | | LGBM | LSTM | RF | GBDT |
| | | SVM | KNN | CNN | KNN |
| Comb-2 | Random | LR | GBDT | KNN | KNN |
| | | XGB | XGB | RF | LGBM |
| | | LGBM | LGBM | GBDT | CatBoost |
| Comb-3 | Random | LGBM | XGB | LR | LGBM |
| | | GBDT | LR | SVM | SVM |
| | | LSTM | CatBoost | LSTM | RF |
| Comb-4 | Random | XGB | RF | CNN | LGBM |
| | | CatBoost | CNN | GBDT | CNN |
| | | GBDT | KNN | LGBM | CatBoost |
| Comb-5 | Random | LGBM | LR | LSTM | KNN |
| | | LSTM | RF | SVM | SVM |
| | | CatBoost | XGB | CNN | GBDT |
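The model-combination table contrasts one combination labelled Diverse with four labelled Random. To make "diverse" concrete, the sketch below counts, for each feature type, how many distinct model families its three base learners span, and sums over the four feature types. The family grouping (GBDT, XGB, LGBM and CatBoost as boosting; LSTM and CNN as neural networks; and so on) is an assumption made here for illustration; the source does not define its diversity criterion this way.

```python
from typing import Dict, List

# Assumed grouping of base learners into model families (illustrative only).
FAMILY = {
    "GBDT": "boosting", "XGB": "boosting", "LGBM": "boosting", "CatBoost": "boosting",
    "RF": "bagging", "LSTM": "neural", "CNN": "neural",
    "SVM": "kernel", "KNN": "instance", "LR": "linear",
}

# Base learners per feature type, transcribed from the combination table.
COMBOS: Dict[str, Dict[str, List[str]]] = {
    "Comb-1": {"Thrust": ["LSTM", "LGBM", "SVM"], "Torque": ["SVM", "LSTM", "KNN"],
               "Vibration": ["KNN", "RF", "CNN"], "All": ["LSTM", "GBDT", "KNN"]},
    "Comb-2": {"Thrust": ["LR", "XGB", "LGBM"], "Torque": ["GBDT", "XGB", "LGBM"],
               "Vibration": ["KNN", "RF", "GBDT"], "All": ["KNN", "LGBM", "CatBoost"]},
    "Comb-3": {"Thrust": ["LGBM", "GBDT", "LSTM"], "Torque": ["XGB", "LR", "CatBoost"],
               "Vibration": ["LR", "SVM", "LSTM"], "All": ["LGBM", "SVM", "RF"]},
    "Comb-4": {"Thrust": ["XGB", "CatBoost", "GBDT"], "Torque": ["RF", "CNN", "KNN"],
               "Vibration": ["CNN", "GBDT", "LGBM"], "All": ["LGBM", "CNN", "CatBoost"]},
    "Comb-5": {"Thrust": ["LGBM", "LSTM", "CatBoost"], "Torque": ["LR", "RF", "XGB"],
               "Vibration": ["LSTM", "SVM", "CNN"], "All": ["KNN", "SVM", "GBDT"]},
}


def family_diversity(combo: Dict[str, List[str]]) -> int:
    """Sum over feature types of the number of distinct model families used."""
    return sum(len({FAMILY[m] for m in models}) for models in combo.values())
```

Under this grouping only Comb-1 uses three different families for every feature type (a score of 12 out of a possible 12), while the random combinations score between 8 and 10.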

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, F.; Zeng, H.; Xu, H.; Sun, H. Experiment Study on Rock Mass Classification Based on RCM-Equipped Sensors and Diversified Ensemble-Learning Model. Sensors 2024, 24, 6320. https://doi.org/10.3390/s24196320