A Dataset of Visible Light and Thermal Infrared Images for Health Monitoring of Caged Laying Hens in Large-Scale Farming

Abstract

1. Introduction

- (1) Dataset Introduction: A comprehensive description of the background, data content, collection process, data processing, and annotation methods involved in constructing the BClayinghens dataset.

- (2) Dataset Applications: An exploration of the practical value of the BClayinghens dataset for chicken head detection.

- (3) Deep Learning Model Training: An explanation of how to use this dataset to train deep learning models and how data augmentation can improve model robustness across different environments.

- (4) Providing Data Resources: Offering high-quality image data of caged laying hens to researchers and practitioners to support the research and development of poultry health monitoring and intelligent farm management systems.

2. Materials and Methods

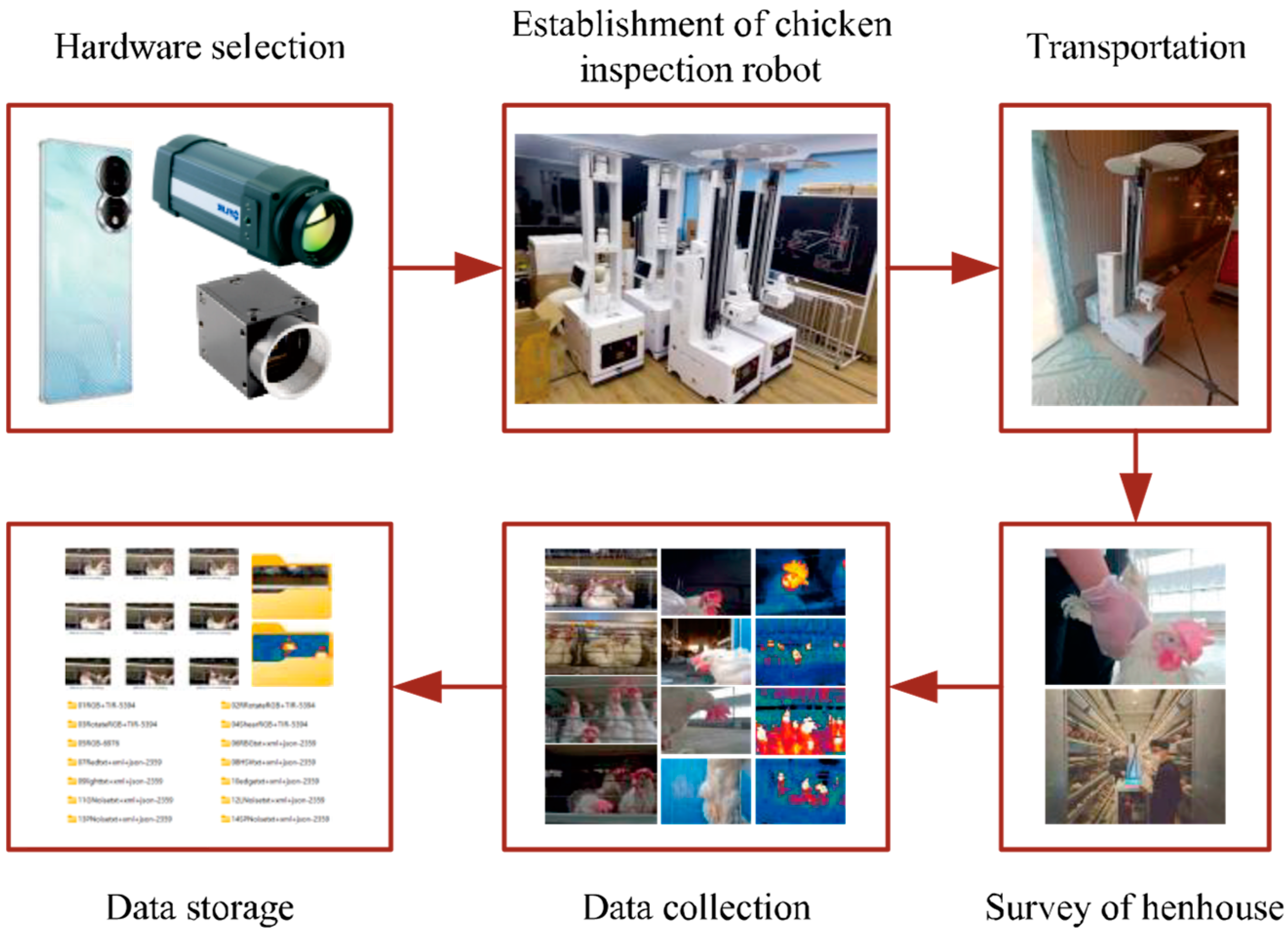

2.1. Data Collection

2.1.1. Experimental Location

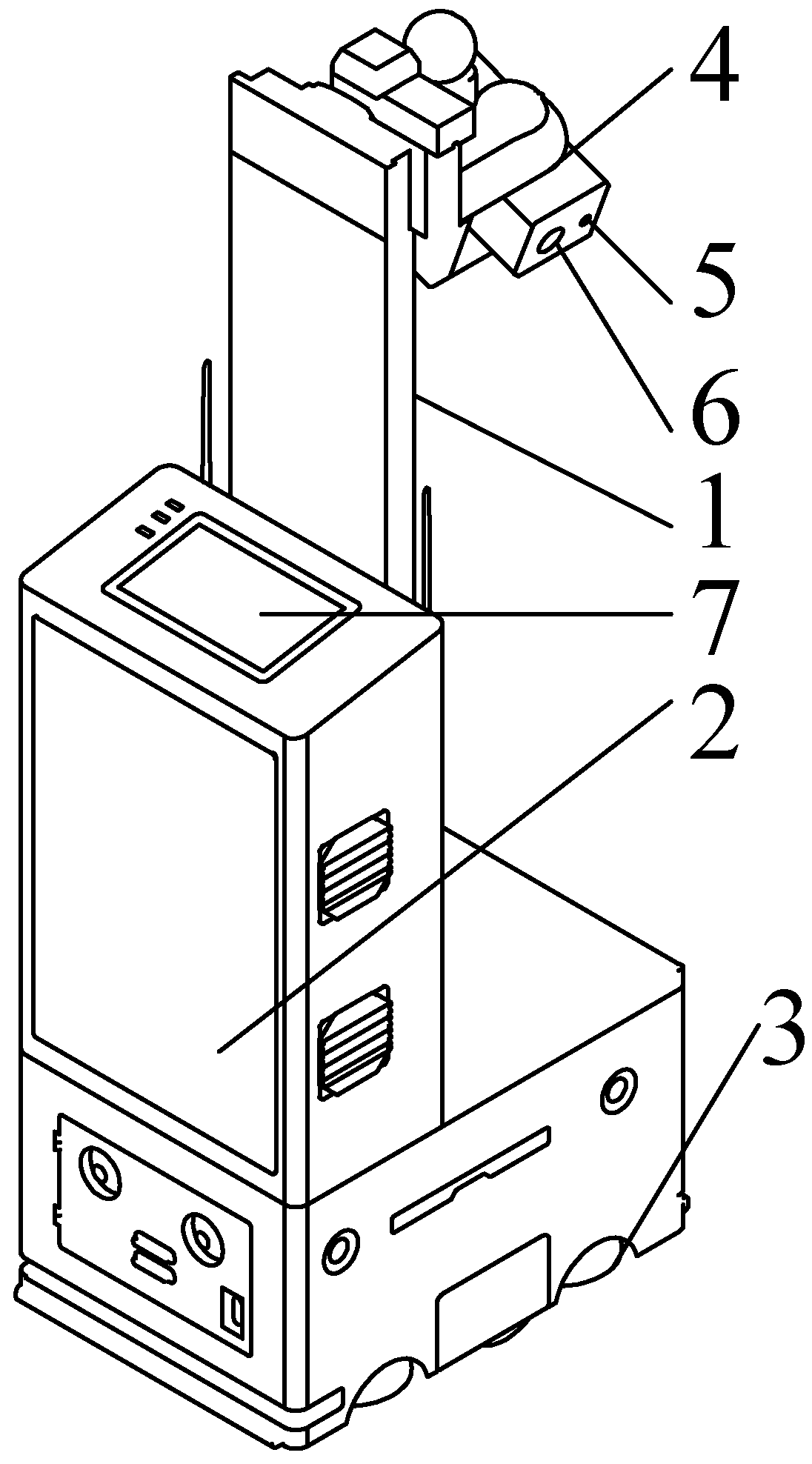

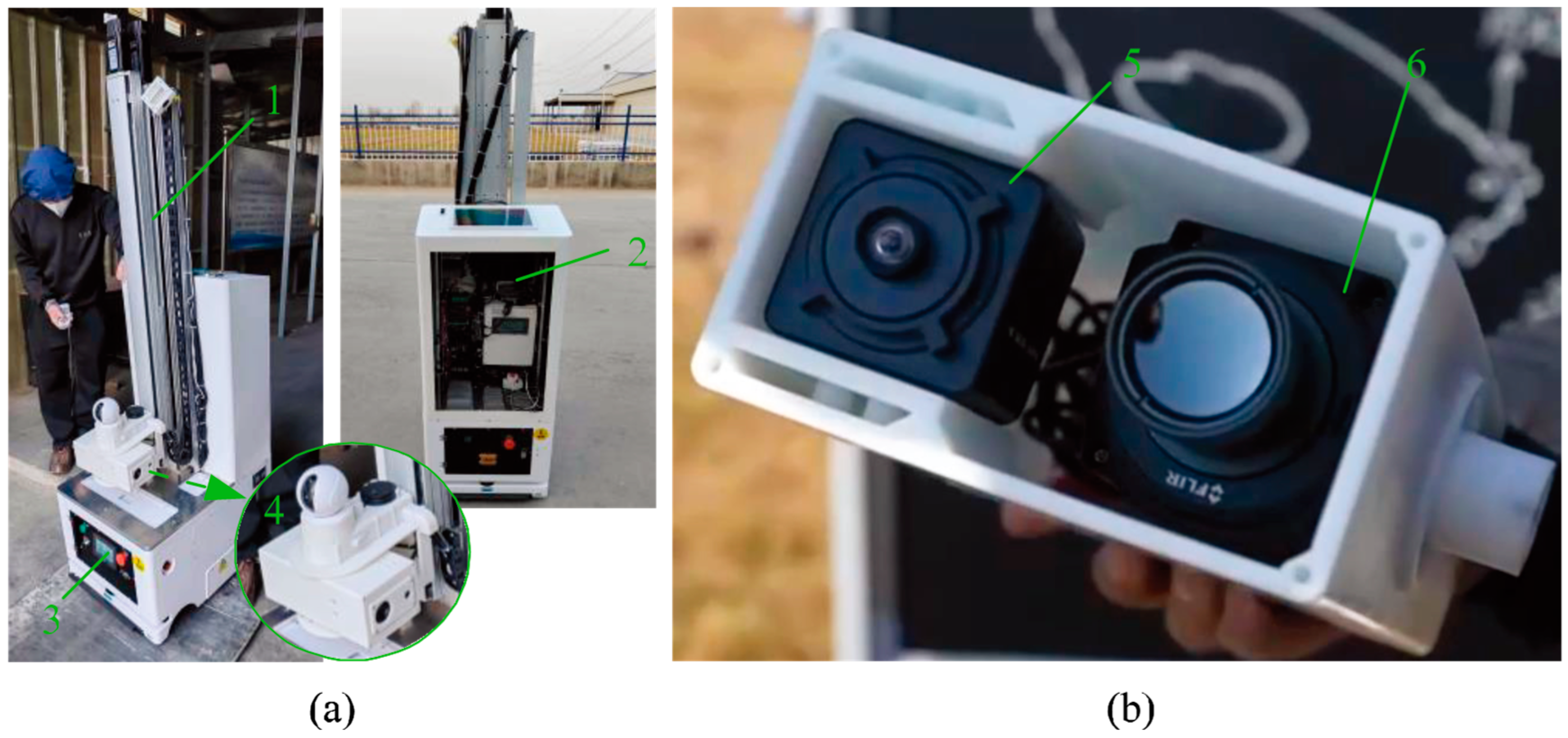

2.1.2. Experimental Equipment

2.1.3. Experimental Methods

2.1.4. Data Preprocessing

2.2. Data Augmentations
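The geometric and color operations named in Sections 2.2.1–2.2.5 can be illustrated with a short NumPy sketch. It is not the authors' implementation, and several choices are assumptions: images are `uint8` arrays in RGB channel order, rotation is restricted to multiples of 90° (the angles actually used are not stated here), the scaling factors and hue shift are placeholder values, and edge enhancement is implemented as Laplacian sharpening, which is only one of several common choices. The function names are hypothetical.

```python
import colorsys

import numpy as np


def rotate(img: np.ndarray, quarter_turns: int = 1) -> np.ndarray:
    """Rotate an H x W x C image counterclockwise by quarter_turns * 90 degrees."""
    return np.rot90(img, k=quarter_turns, axes=(0, 1)).copy()


def enhance_red(img: np.ndarray, factor: float = 1.2) -> np.ndarray:
    """Scale the R channel only (channel 0 in RGB order), clipping to [0, 255]."""
    out = img.astype(np.float32)
    out[..., 0] *= factor
    return np.clip(out, 0, 255).astype(np.uint8)


def adjust_brightness(img: np.ndarray, factor: float = 1.2) -> np.ndarray:
    """Scale all channels by the same factor, clipping to [0, 255]."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def shift_hue(img: np.ndarray, shift: float = 0.05) -> np.ndarray:
    """Rotate hue by `shift` (fraction of a full turn) via per-pixel HSV conversion.

    np.vectorize is slow, but it keeps the example free of dependencies beyond NumPy.
    """
    rgb = img.astype(np.float64) / 255.0
    h, s, v = np.vectorize(colorsys.rgb_to_hsv)(rgb[..., 0], rgb[..., 1], rgb[..., 2])
    r, g, b = np.vectorize(colorsys.hsv_to_rgb)((h + shift) % 1.0, s, v)
    out = np.stack([r, g, b], axis=-1) * 255.0 + 0.5  # +0.5 so truncation rounds
    return np.clip(out, 0, 255).astype(np.uint8)


def enhance_edges(img: np.ndarray, strength: float = 1.0) -> np.ndarray:
    """Laplacian sharpening: add `strength` times the 4-neighbour high-pass response."""
    f = img.astype(np.float32)
    p = np.pad(f, ((1, 1), (1, 1), (0, 0)), mode="edge")
    high_pass = 4 * f - p[:-2, 1:-1] - p[2:, 1:-1] - p[1:-1, :-2] - p[1:-1, 2:]
    return np.clip(f + strength * high_pass, 0, 255).astype(np.uint8)
```

Each function returns a new array, so several augmented copies can be generated from one source image without modifying it.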

2.2.1. Rotation

2.2.2. R-Value (Redness) Enhancement

2.2.3. Hue Enhancement

2.2.4. Brightness Enhancement

2.2.5. Edge Enhancement

2.2.6. Gaussian Noise

2.2.7. Laplacian Noise

2.2.8. Poisson Noise

2.2.9. Salt-and-Pepper Noise
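The four noise types of Sections 2.2.6–2.2.9 can be sketched as additive Gaussian and Laplacian noise, signal-dependent Poisson noise, and impulse (salt-and-pepper) noise. This is an illustrative sketch, not the procedure used to build the dataset: the parameter values (`sigma`, `scale`, `peak`, `amount`) are assumptions rather than values reported for BClayinghens, and images are assumed to be `uint8` arrays of shape H x W x 3.

```python
import numpy as np


def _to_uint8(x: np.ndarray) -> np.ndarray:
    """Clip to the valid 8-bit range and convert back to uint8."""
    return np.clip(x, 0, 255).astype(np.uint8)


def gaussian_noise(img, rng, sigma=10.0):
    """Additive zero-mean Gaussian noise with standard deviation `sigma`."""
    return _to_uint8(img.astype(np.float64) + rng.normal(0.0, sigma, img.shape))


def laplacian_noise(img, rng, scale=10.0):
    """Additive zero-mean Laplacian noise (heavier tails than Gaussian)."""
    return _to_uint8(img.astype(np.float64) + rng.laplace(0.0, scale, img.shape))


def poisson_noise(img, rng, peak=1.0):
    """Signal-dependent noise: each pixel is drawn from Poisson(value * peak) / peak.

    A smaller `peak` simulates fewer photons and therefore stronger noise.
    """
    lam = img.astype(np.float64) * peak
    return _to_uint8(rng.poisson(lam) / peak)


def salt_and_pepper(img, rng, amount=0.02, salt_ratio=0.5):
    """Set roughly a fraction `amount` of pixels to white (salt) or black (pepper)."""
    out = img.copy()
    u = rng.random(img.shape[:2])  # one draw per pixel, shared across channels
    salt = u < amount * salt_ratio
    pepper = (u >= amount * salt_ratio) & (u < amount)
    out[salt] = 255
    out[pepper] = 0
    return out
```

Passing an explicit `numpy.random.Generator` (for example `np.random.default_rng(0)`) makes each augmented copy reproducible.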

3. Results and Analysis

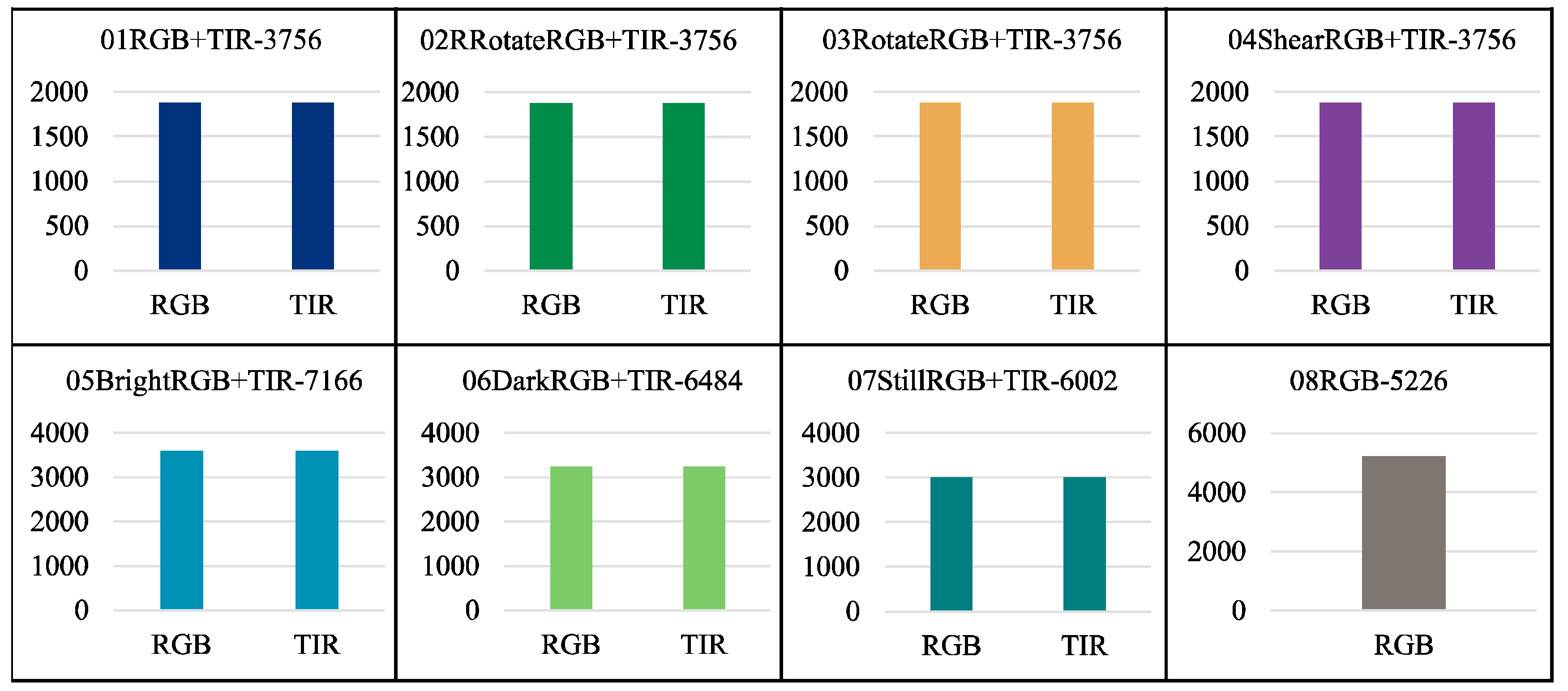

3.1. Data Description

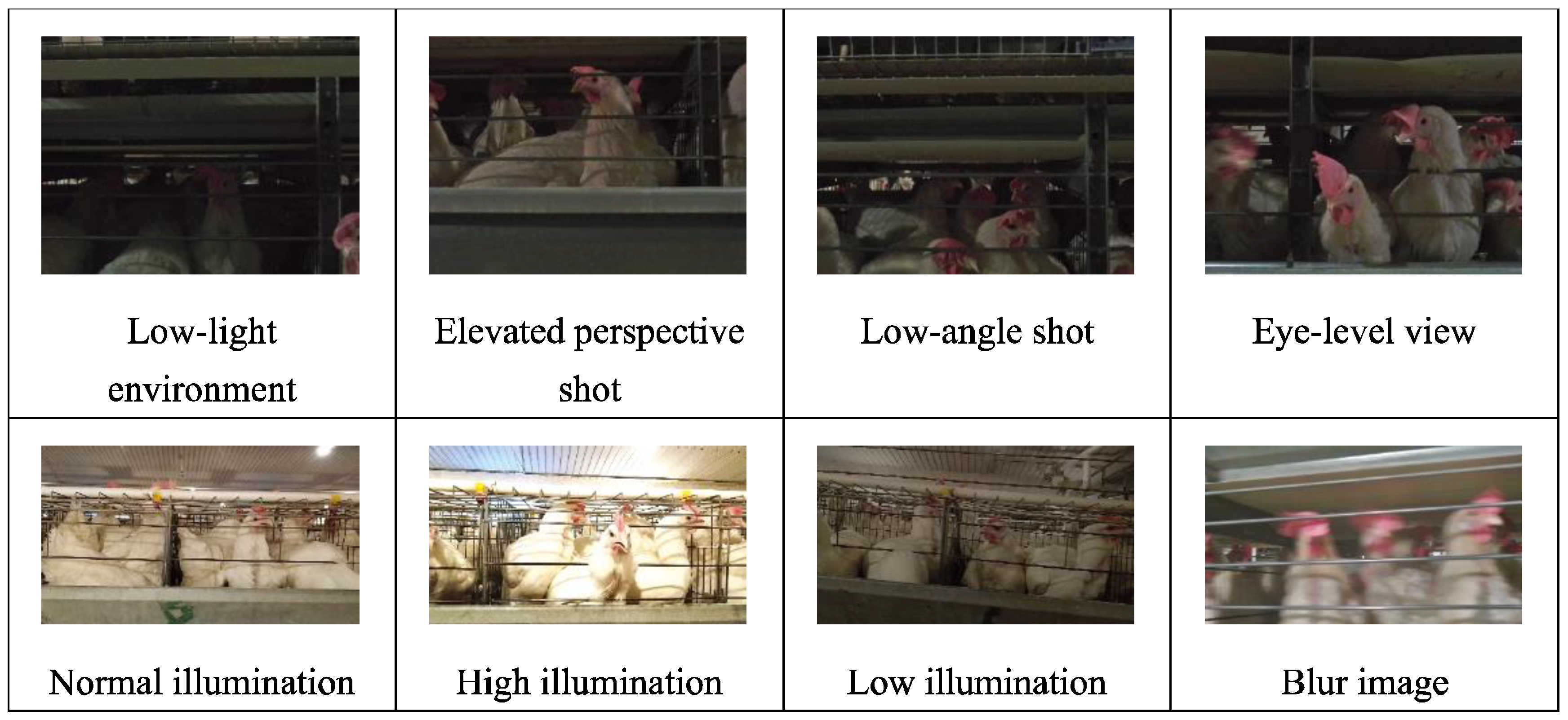

3.1.1. Data Environment

- (1)

- We collected data on individual laying hens in a natural environment, as illustrated in Figure 6. During photography, complete and partial images of the hens were captured under various natural lighting and background conditions. The data obtained from the natural setting are conducive to analyzing the morphology of free-range laying hens [16] and assessing their health state.

- (2)

- The activity states of caged laying hens were photographed, considering the variations in cage lighting and camera angles, as depicted in Figure 7. The dataset of caged laying hens can be utilized to analyze flock activity levels, detect deceased hens within cages, and calculate hen population numbers.

- (3)

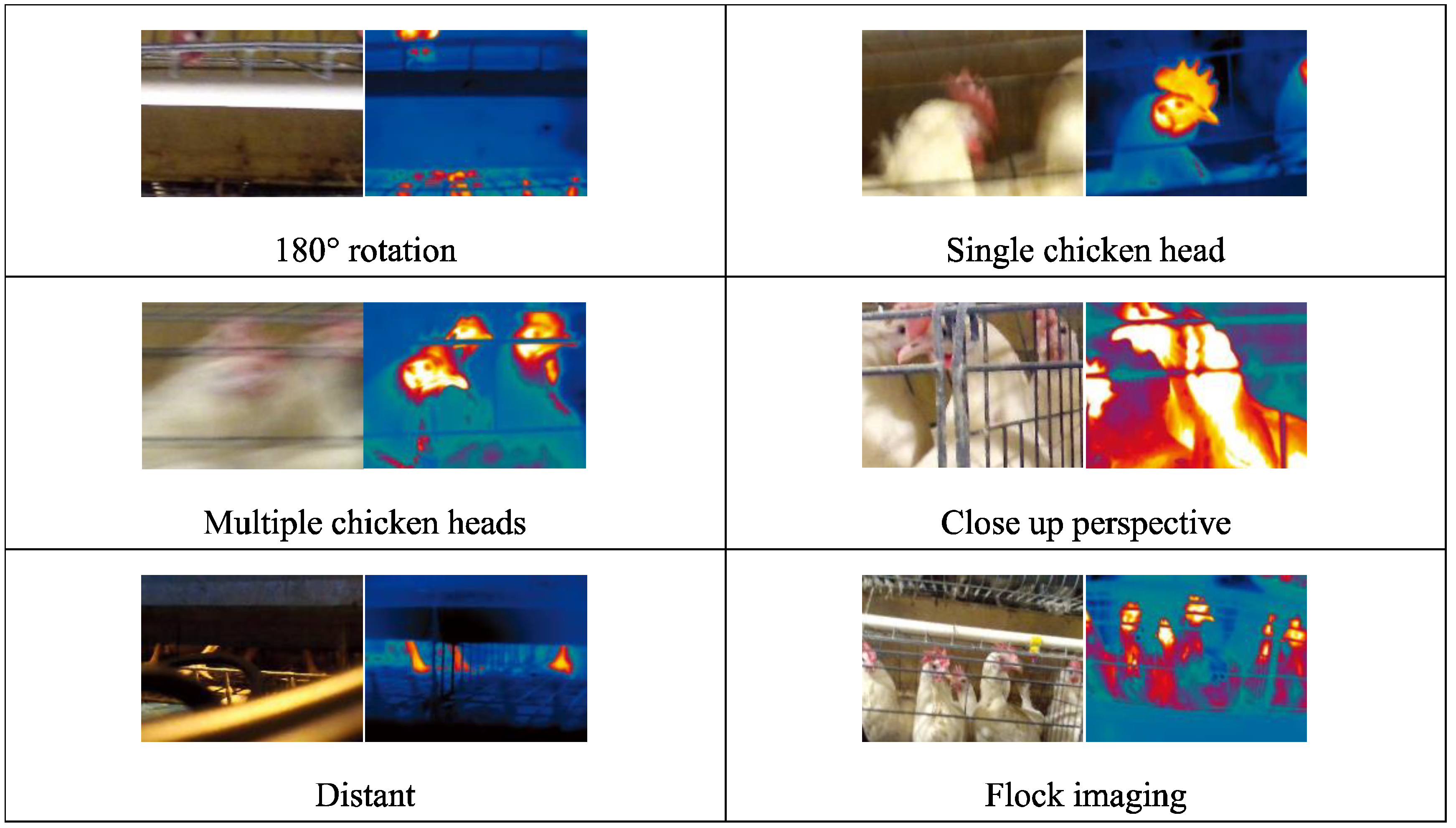

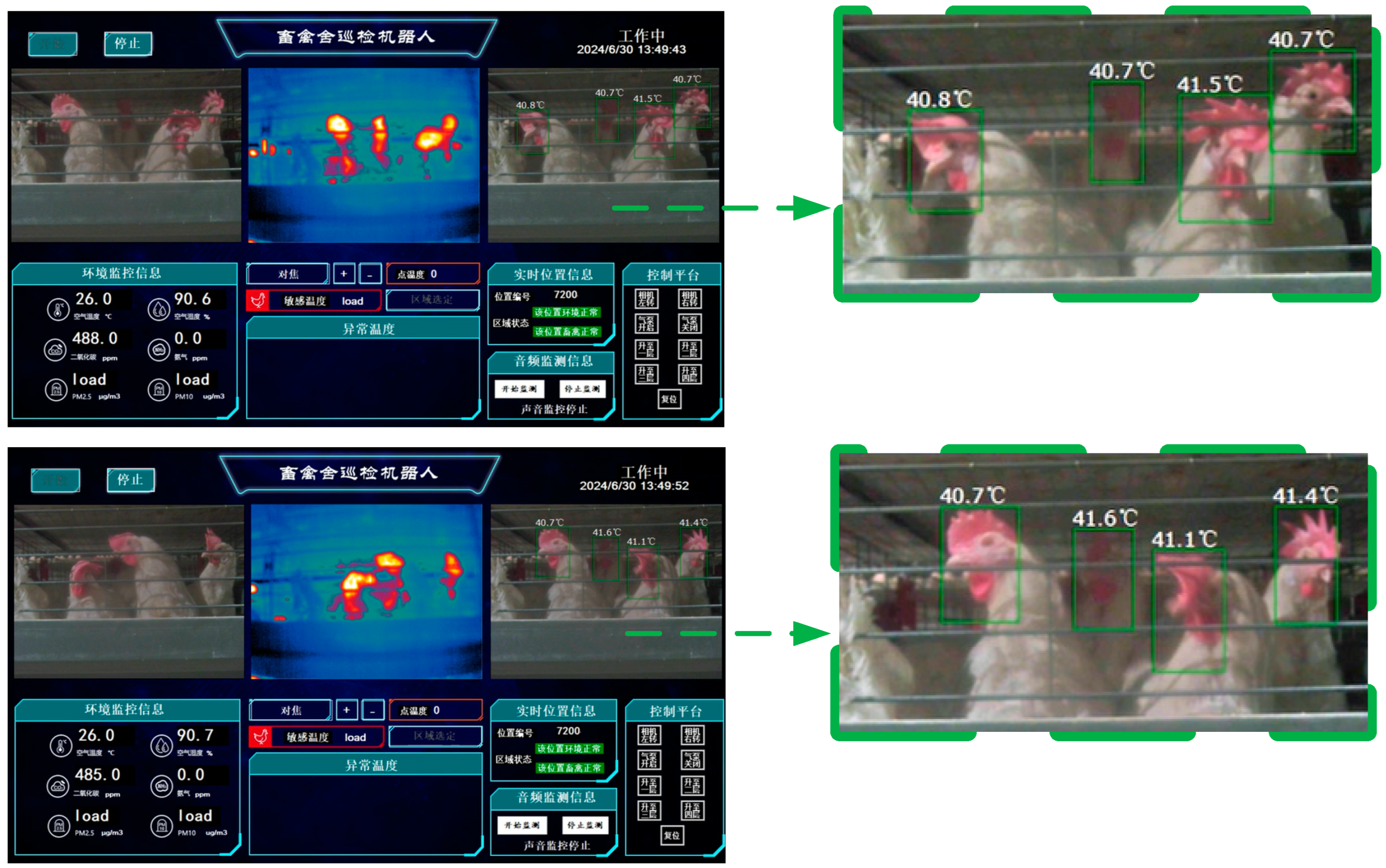

- An innovative image acquisition technology that fuses RGB images with TIR images was introduced [17], as shown in Figure 8. Corresponding RGB and TIR images were taken at different distances, angles, and with varying numbers of hens, capturing multimodal image data. The image fusion technique overcomes interference caused by camera shake in RGB images and provides data support for studying infectious diseases among hen flocks.

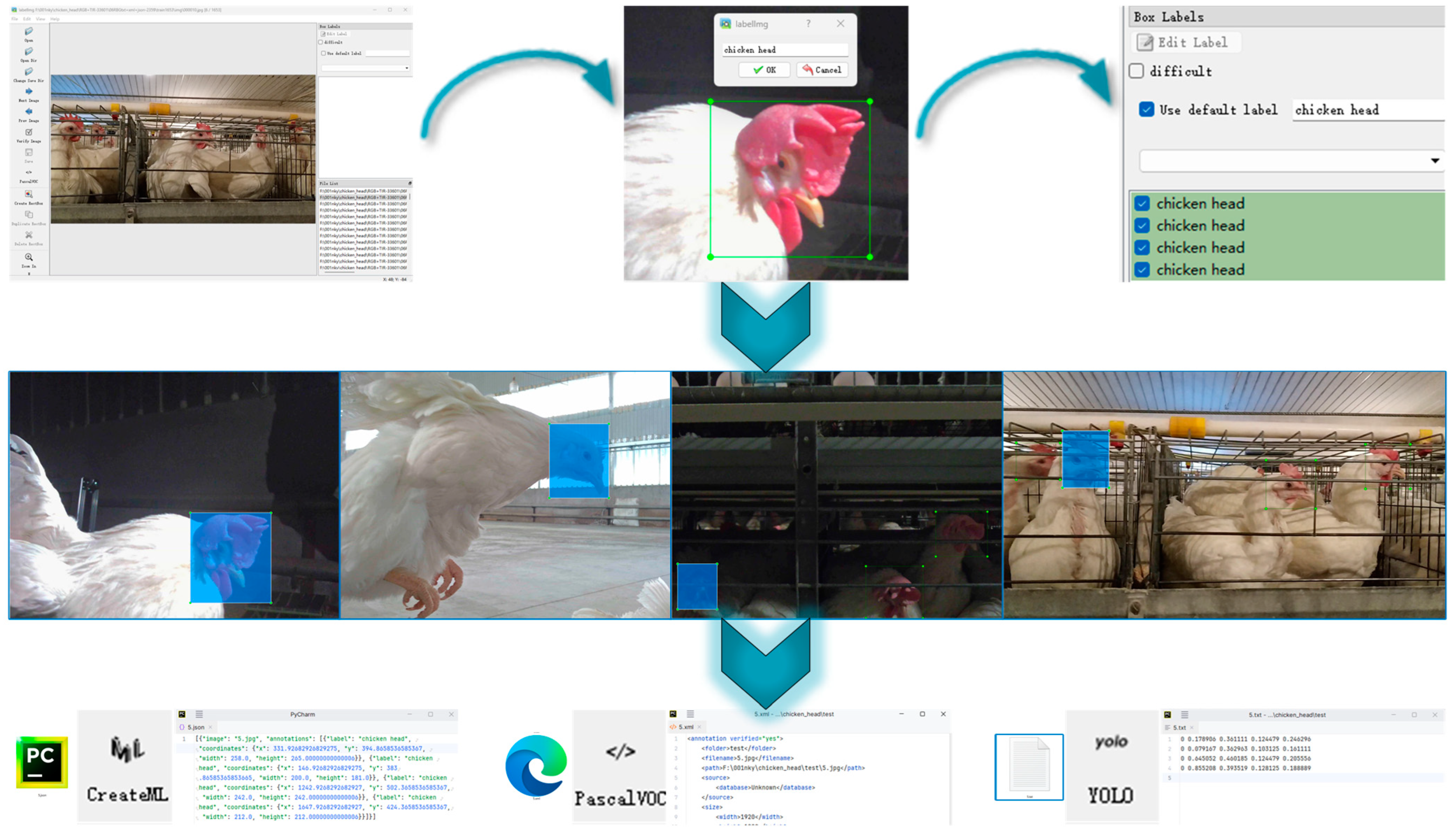
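As a generic illustration of RGB and TIR fusion (not necessarily the technique used to produce Figure 8), the simplest pixel-level scheme is a weighted average of the visible image and a normalised thermal image. The sketch assumes the two images are already registered to the same field of view; in practice the 320 × 240 TIR frames and 1920 × 1080 RGB frames differ in field of view and aspect ratio, so registration is a separate step. Nearest-neighbour upsampling, the weight `alpha`, and the helper names `resize_nearest` and `fuse_weighted` are all assumptions.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize using integer index maps (works for 2-D or 3-D arrays)."""
    rows = (np.arange(out_h) * img.shape[0]) // out_h
    cols = (np.arange(out_w) * img.shape[1]) // out_w
    return img[rows[:, None], cols[None, :]]


def fuse_weighted(rgb: np.ndarray, tir: np.ndarray, alpha: float = 0.6) -> np.ndarray:
    """Pixel-level weighted-average fusion of an RGB image and a 1-channel TIR image.

    Assumes the two images are already registered (same scene and field of view).
    The TIR image is min-max normalised to [0, 255] and upsampled to the RGB size.
    """
    h, w = rgb.shape[:2]
    t = resize_nearest(tir.astype(np.float64), h, w)
    t = (t - t.min()) / max(t.max() - t.min(), 1e-9) * 255.0
    fused = alpha * rgb.astype(np.float64) + (1.0 - alpha) * t[..., None]
    return np.clip(fused, 0, 255).astype(np.uint8)
```

Setting `alpha` closer to 1 preserves more visible-light texture, while values closer to 0 emphasise the thermal contrast; more elaborate multi-scale or learned fusion methods replace this single global weight.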

3.1.2. Annotations and Labels

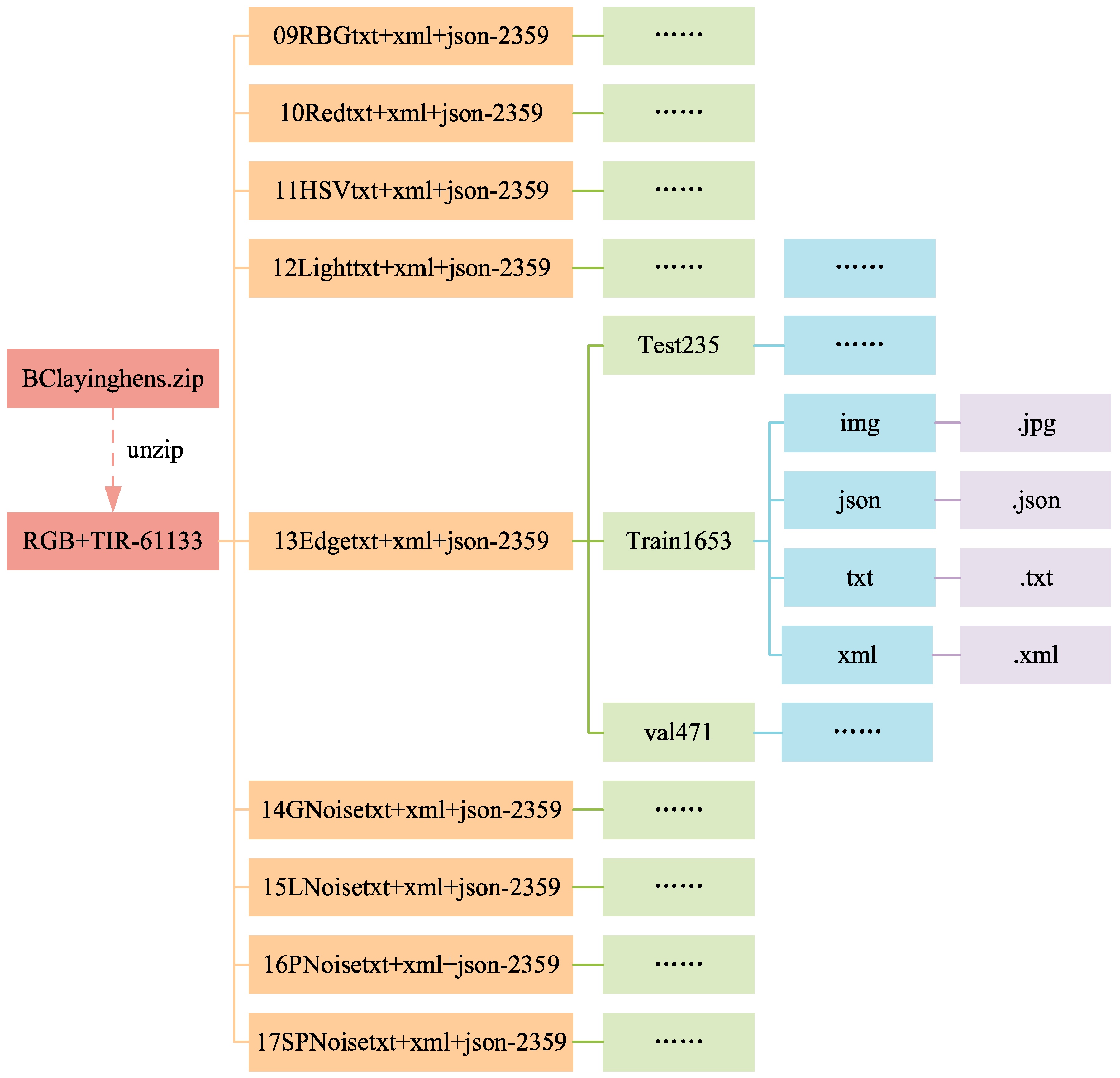

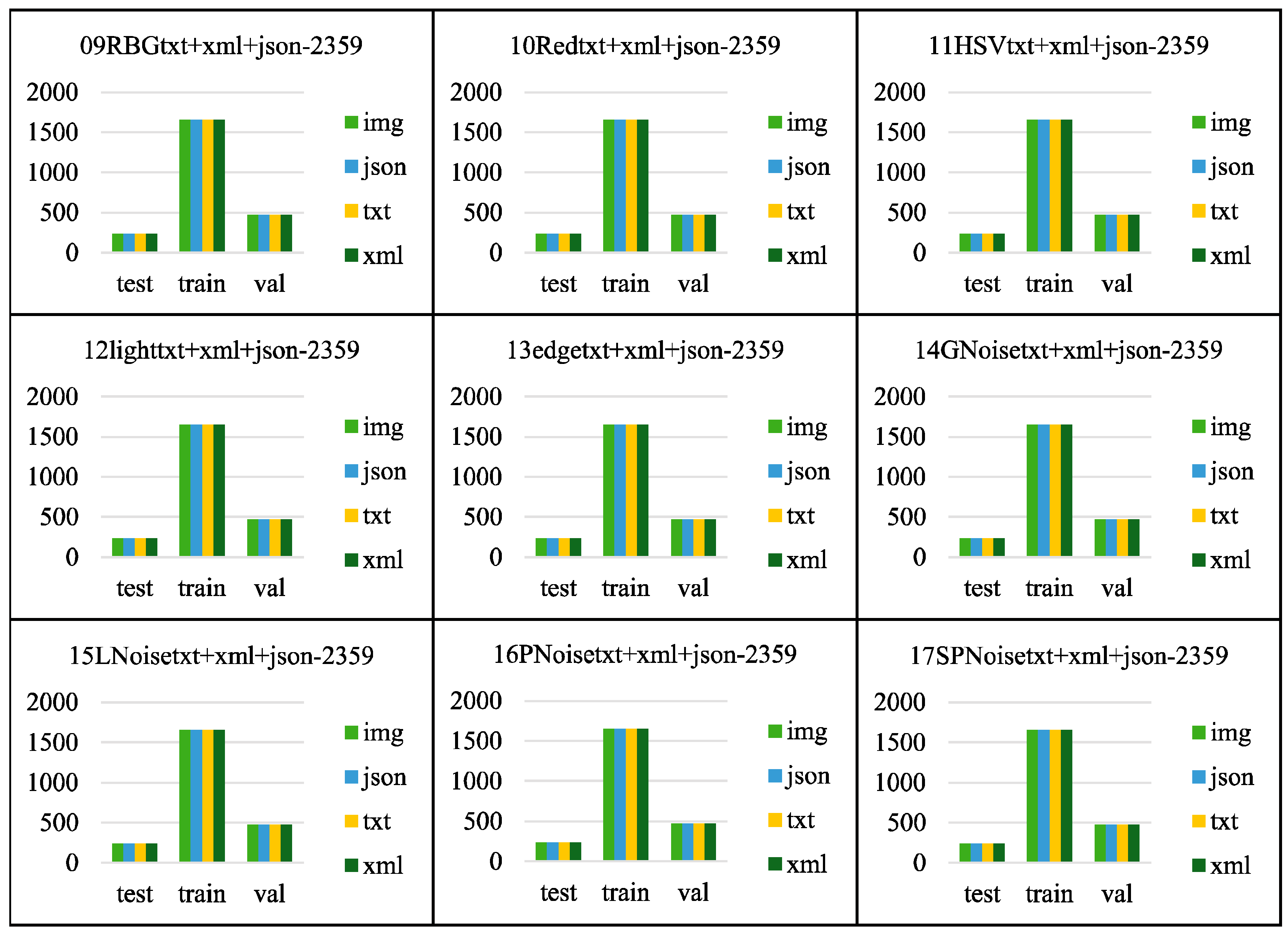

3.1.3. Data Folders

3.1.4. Value of the Data

- The dataset is applicable to health monitoring and behavioral analysis of caged laying hens, as well as to research on chicken head detection and hen counting. It is particularly suitable for hen classification studies based on deep convolutional neural networks [19].

- Multimodal visible light and thermal infrared image data are provided, supporting research on hen health monitoring and behavior recognition through image fusion. The fused images are especially useful for body temperature detection, aiding disease prevention and control in commercial poultry farming.

- Images of individual hens showing different morphologies are supplied and can be used to assess the health of laying hens.

- Using the BClayinghens dataset for automatic monitoring and management [20] of laying hen farms enables disease risks to be detected under non-contact, stress-free conditions, improves the efficiency of routine inspections and the accuracy of disease detection [21], and reduces the labor cost of manual inspection. By contrast, current health management of caged laying hens on commercial farms relies on workers wearing headlamps and masks to inspect hens row by row. The intense lighting and human contact involved not only harm hen welfare but also pose potential health risks to on-site personnel.

3.2. Chicken Head and Head Temperature Detection

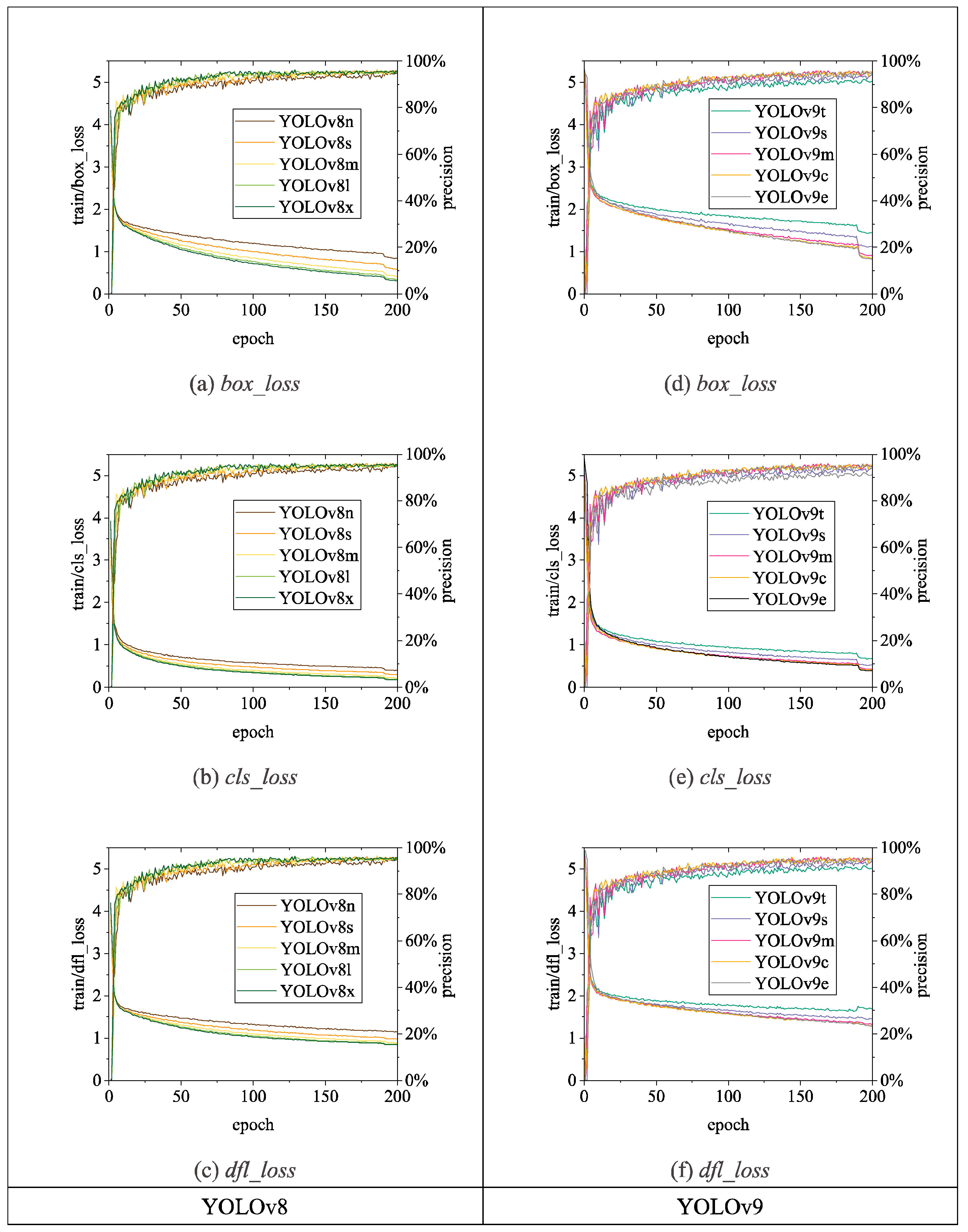

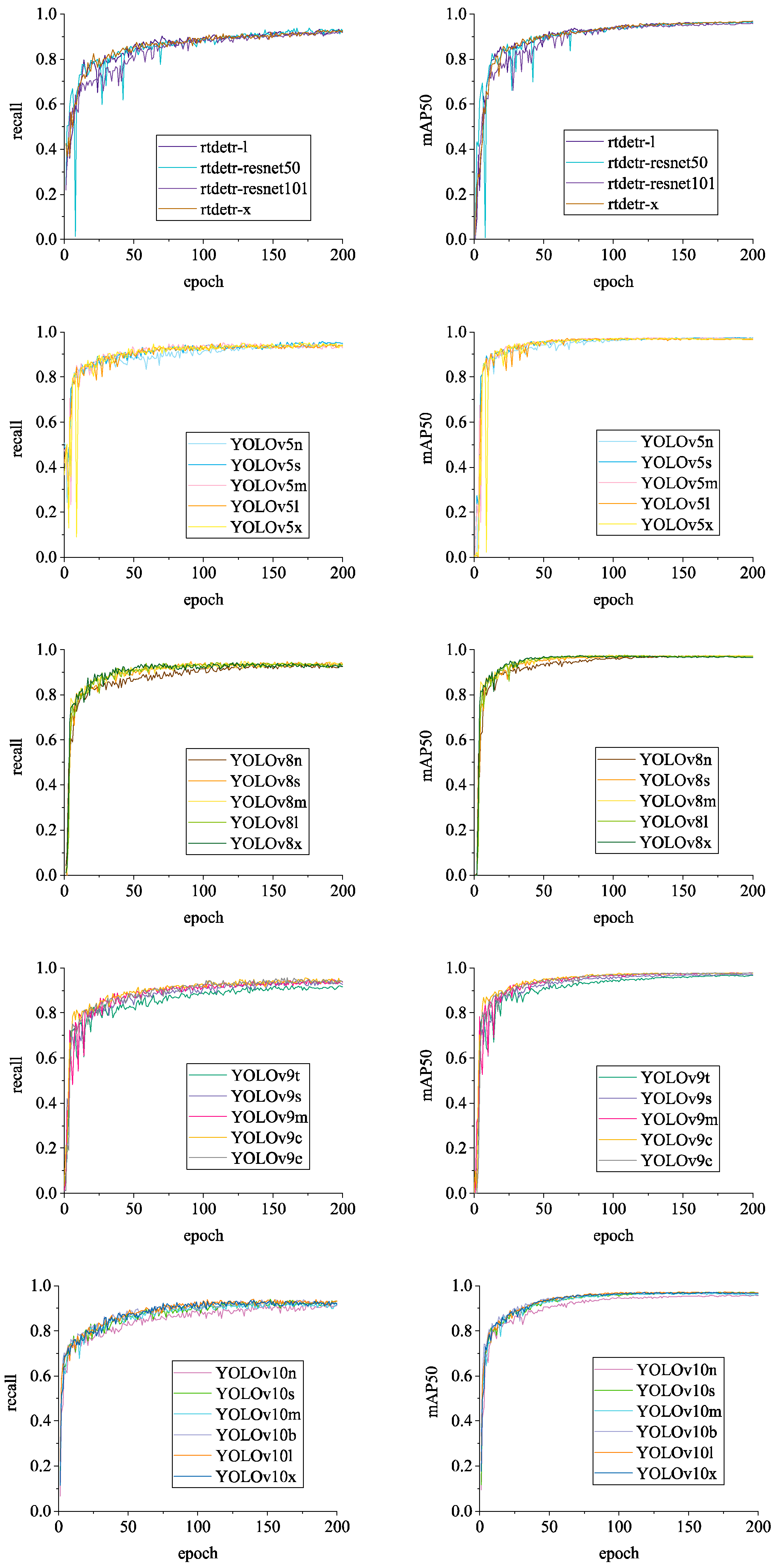

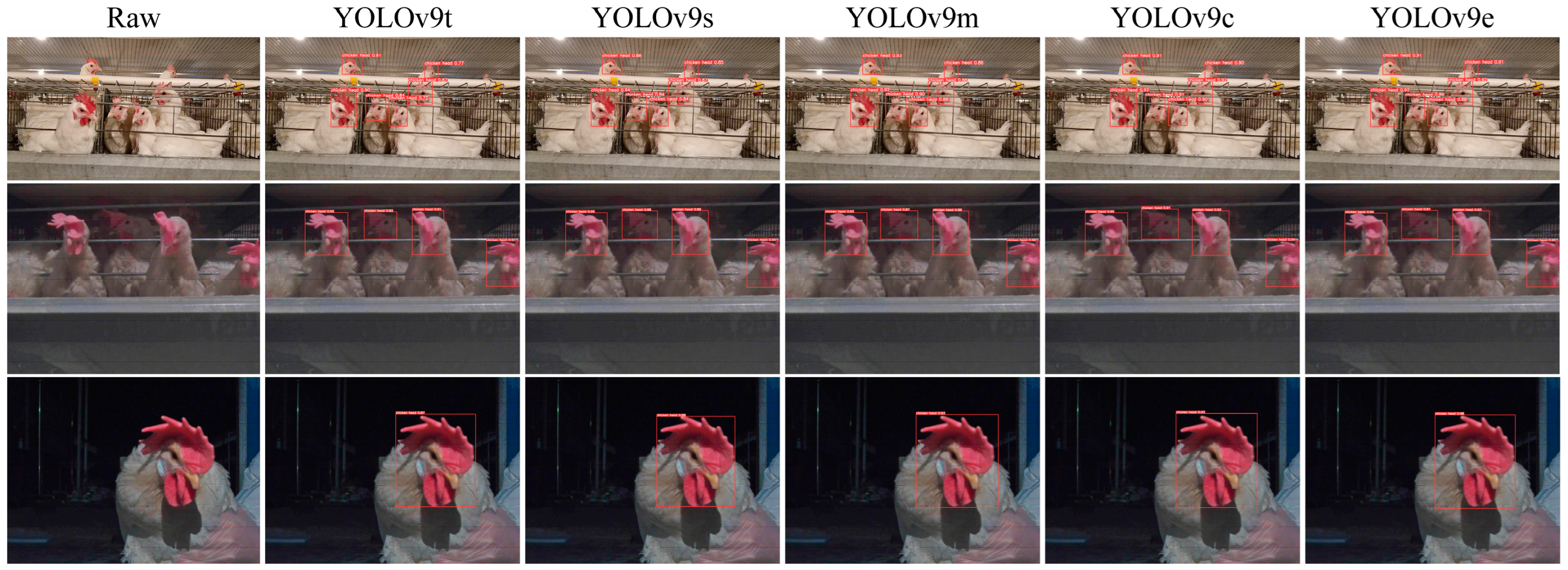

3.2.1. Comparison of Detection Performance of Different Algorithms

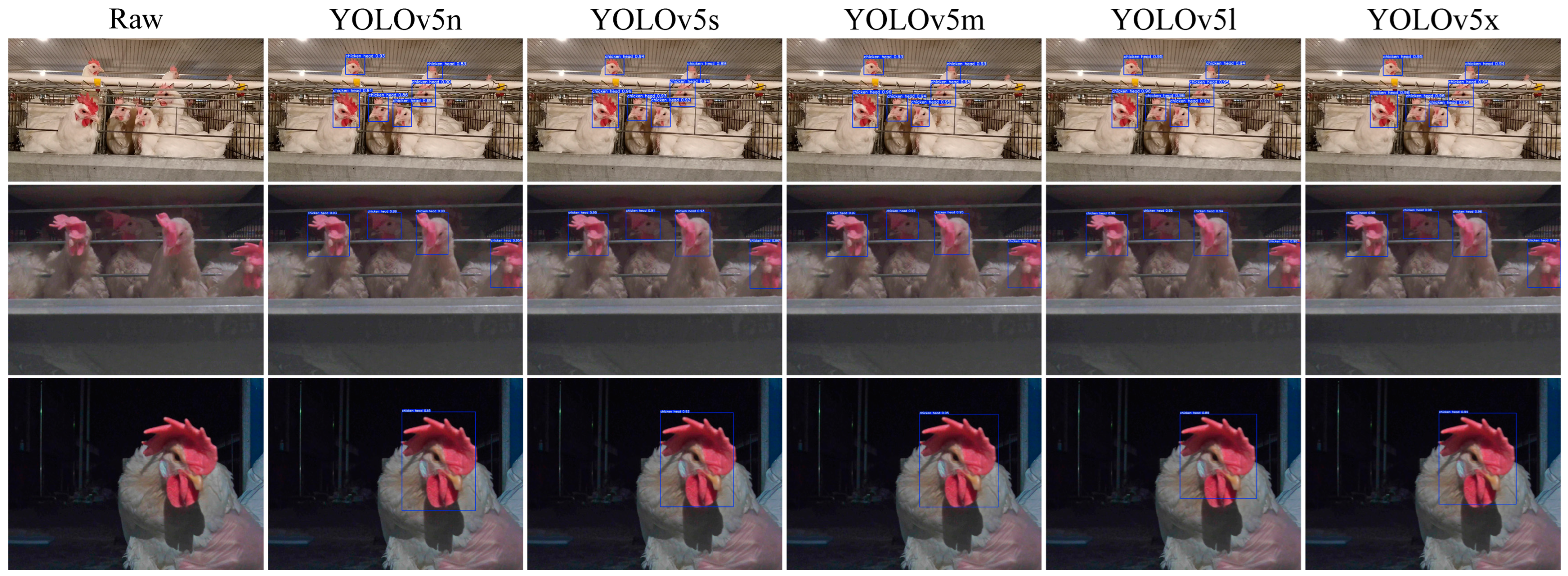

3.2.2. Analysis of Chicken Head Detection

3.2.3. Analysis of Chicken Head Temperature Identification
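One way to turn a head detection into a head temperature reading is to map the detected box from RGB coordinates into the thermal frame and summarise the temperatures inside it. The sketch below assumes a per-pixel temperature map in °C from the FLIR A300 (320 × 240) and a box from the robot's 1920 × 1080 visible light camera, and it assumes the two frames are registered so that independent linear scaling of each axis is sufficient. That is a simplification, since the cameras differ in aspect ratio and field of view. Taking the maximum over the box is often used for surface temperature, with the mean as an alternative; the function names are hypothetical.

```python
import math

import numpy as np

RGB_SIZE = (1920, 1080)  # (width, height) of the robot's visible light camera, Table 2
TIR_SIZE = (320, 240)    # (width, height) of the FLIR A300 thermal camera, Table 2


def rgb_box_to_tir(box, rgb_size=RGB_SIZE, tir_size=TIR_SIZE):
    """Map an (x1, y1, x2, y2) box from RGB pixels to TIR pixels.

    Hypothetical simplification: assumes the frames are registered so that
    independent linear scaling of each axis is sufficient.
    """
    (rw, rh), (tw, th) = rgb_size, tir_size
    x1, y1, x2, y2 = box
    # Multiply before dividing so integer-aligned coordinates map exactly.
    return (math.floor(x1 * tw / rw), math.floor(y1 * th / rh),
            math.ceil(x2 * tw / rw), math.ceil(y2 * th / rh))


def head_temperature(temps: np.ndarray, box, stat: str = "max") -> float:
    """Summarise a per-pixel temperature map (deg C, shape 240 x 320) inside an RGB box."""
    x1, y1, x2, y2 = rgb_box_to_tir(box)
    h, w = temps.shape
    x1, x2 = max(0, x1), min(w, x2)
    y1, y2 = max(0, y1), min(h, y2)
    roi = temps[y1:y2, x1:x2]
    if roi.size == 0:
        raise ValueError("box lies outside the thermal frame")
    return float(roi.max() if stat == "max" else roi.mean())
```

With a real registration step, `rgb_box_to_tir` would be replaced by the estimated RGB-to-TIR transform while `head_temperature` stays the same.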

3.2.4. Comparison with Existing Research

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Khanal, R.; Choi, Y.; Lee, J. Transforming Poultry Farming: A Pyramid Vision Transformer Approach for Accurate Chicken Counting in Smart Farm Environments. Sensors 2024, 24, 2977.

2. Geffen, O.; Yitzhaky, Y.; Barchilon, N.; Druyan, S.; Halachmi, I. A machine vision system to detect and count laying hens in battery cages. Animal 2020, 14, 2628–2634.

3. Bakar, M.; Ker, P.J.; Tang, S.G.H.; Baharuddin, M.Z.; Lee, H.J.; Omar, A.R. Translating conventional wisdom on chicken comb color into automated monitoring of disease-infected chicken using chromaticity-based machine learning models. Front. Vet. Sci. 2023, 10, 1174700.

4. Yu, Z.W.; Wan, L.Q.; Yousaf, K.; Lin, H.; Zhang, J.; Jiao, H.C.; Yan, G.Q.; Song, Z.H.; Tian, F.Y. An enhancement algorithm for head characteristics of caged chickens detection based on cyclic consistent migration neural network. Poult. Sci. 2024, 103, 103663.

5. Wu, Z.L.; Zhang, T.M.; Fang, C.; Yang, J.K.; Ma, C.; Zheng, H.K.; Zhao, H.Z. Super-resolution fusion optimization for poultry detection: A multi-object chicken detection method. J. Anim. Sci. 2023, 101, skad249.

6. Campbell, M.; Miller, P.; Díaz-Chito, K.; Hong, X.; McLaughlin, N.; Parvinzamir, F.; Del Rincón, J.M.; O’Connell, N. A computer vision approach to monitor activity in commercial broiler chickens using trajectory-based clustering analysis. Comput. Electron. Agric. 2024, 217, 108591.

7. Nasiri, A.; Yoder, J.; Zhao, Y.; Hawkins, S.; Prado, M.; Gan, H. Pose estimation-based lameness recognition in broiler using CNN-LSTM network. Comput. Electron. Agric. 2022, 197, 106931.

8. Yao, Y.Z.; Yu, H.Y.; Mu, J.; Li, J.; Pu, H.B. Estimation of the Gender Ratio of Chickens Based on Computer Vision: Dataset and Exploration. Entropy 2020, 22, 719.

9. Adebayo, S.; Aworinde, H.O.; Akinwunmi, A.O.; Alabi, O.M.; Ayandiji, A.; Sakpere, A.B.; Adeyemo, A.; Oyebamiji, A.K.; Olaide, O.; Kizito, E. Enhancing poultry health management through machine learning-based analysis of vocalization signals dataset. Data Brief 2023, 50, 109528.

10. Aworinde, H.O.; Adebayo, S.; Akinwunmi, A.O.; Alabi, O.M.; Ayandiji, A.; Sakpere, A.B.; Oyebamiji, A.K.; Olaide, O.; Kizito, E.; Olawuyi, A.J. Poultry fecal imagery dataset for health status prediction: A case of South-West Nigeria. Data Brief 2023, 50, 109517.

11. Han, H.; Xue, X.; Li, Q.; Gao, H.; Wang, R.; Jiang, R.; Ren, Z.; Meng, R.; Li, M.; Guo, Y.; et al. Pig-ear detection from the thermal infrared image based on improved YOLOv8n. Intell. Robot. 2024, 4, 20–38.

12. Hongwei, L.; Yang, J.; Manlu, L.; Xinbin, Z.; Jianwen, H.; Haoxiang, S. Path planning with obstacle avoidance for soft robots based on improved particle swarm optimization algorithm. Intell. Robot. 2023, 3, 565–580.

13. Dlesk, A.; Vach, K.; Pavelka, K. Photogrammetric Co-Processing of Thermal Infrared Images and RGB Images. Sensors 2022, 22, 1655.

14. Lin, F.; Hu, W.; Wang, Y.; Tian, Y.; Lu, G.; Chen, F.; Xu, Y.; Wang, X. Universal Object Detection with Large Vision Model. Int. J. Comput. Vis. 2024, 132, 1258–1276.

15. Chen, X.; Wang, Y.; Chen, C.; Leng, Y.; Fu, C. Towards environment perception for walking-aid robots: An improved staircase shape feature extraction method. Intell. Robot. 2024, 4, 179–195.

16. Tan, X.J.; Yin, C.C.; Li, X.X.; Cai, M.R.; Chen, W.H.; Liu, Z.; Wang, J.S.; Han, Y.X. SY-Track: A tracking tool for measuring chicken flock activity level. Comput. Electron. Agric. 2024, 217, 108603.

17. Ma, W.H.; Wang, K.; Li, J.W.; Yang, S.X.; Li, J.F.; Song, L.P.; Li, Q.F. Infrared and Visible Image Fusion Technology and Application: A Review. Sensors 2023, 23, 599.

18. Fan, J.; Zheng, E.; He, Y.; Yang, J. A Cross-View Geo-Localization Algorithm Using UAV Image and Satellite Image. Sensors 2024, 24, 3719.

19. Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

20. Melak, A.; Aseged, T.; Shitaw, T. The Influence of Artificial Intelligence Technology on the Management of Livestock Farms. Int. J. Distrib. Sens. Netw. 2024, 2024, 8929748.

21. Li, X.; Cai, M.; Tan, X.; Yin, C.; Chen, W.; Liu, Z.; Wen, J.; Han, Y. An efficient transformer network for detecting multi-scale chicken in complex free-range farming environments via improved RT-DETR. Comput. Electron. Agric. 2024, 224, 109160.

22. Tong, Q.; Zhang, E.; Wu, S.; Xu, K.; Sun, C. A Real-Time Detector of Chicken Healthy Status Based on Modified YOLO. SIViP; Research Square: London, UK, 2023; pp. 4199–4207.

23. Lv, W.; Xu, S.; Zhao, Y.; Wang, G.; Wei, J.; Cui, C.; Du, Y.; Dang, Q.; Liu, Y. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069.

24. Terven, J.R.; Esparza, D.M.C. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. arXiv 2023, arXiv:2304.00501.

25. Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

26. Wang, C.-Y.; Yeh, I.-H.; Liao, H. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

27. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

28. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

29. Chen, J.; Ding, Q.A.; Yao, W.; Shen, M.X.; Liu, L.S. Fine-grained detection of caged-hen head states using adaptive Brightness Adjustment in combination with Convolutional Neural Networks. Int. J. Agric. Biol. Eng. 2023, 16, 208–216.

30. Cai, Z.; Cui, J.; Yuan, H.; Cheng, M. Application and research progress of infrared thermography in temperature measurement of livestock and poultry animals: A review. Comput. Electron. Agric. 2023, 205, 107586.

31. Souza-Junior, J.B.F.; El-Sabrout, K.; de Arruda, A.M.V.; Costa, L.L.d.M. Estimating sensible heat loss in laying hens through thermal imaging. Comput. Electron. Agric. 2019, 166, 105038.

32. Zhang, B.; Xiao, D.; Liu, J.; Huang, S.; Huang, Y.; Lin, T. Pig eye area temperature extraction algorithm based on registered images. Comput. Electron. Agric. 2024, 217, 108549.

| Module | Category | Parameter |
|---|---|---|
| Walking Device | Navigation Method | Manual/Automatic Magnetic Navigation |
| | Charging Method | Self-charging |
| | Cruise Speed | Ten-speed adjustment: 3–30 m/min |
| | Turning Radius | Zero-radius turning |
| Control Device | Industrial PC | K345; dual network ports and six serial ports (6 × RS485); 8th-generation i7; 16 GB RAM + 256 GB; Windows 10 64-bit |
| | Touchscreen | 11.6-inch (16:9), 1920 × 1080 |
| | System Information | Windows 10 x64; Intel(R) Core(TM) i7; 16 GB RAM; 256 GB SSD + 1 TB HDD |
| | Communication Method | Wi-Fi + 4G |
| Sensing Device | Visible Light Camera | Details are provided in Table 2 |
| | Thermal Infrared Camera | Details are provided in Table 2 |
| | Gas Sensor | Pump-operated; CO2 measuring range 0–5000 ppm (±5%); NH3 measuring range 0–50 ppm (±0.5 ppm) |
| | Temperature and Humidity Sensor | Temperature range 0–50 °C (±0.2 °C); humidity range 0–100% RH (±0.3% RH) |
| Lifting Device | Dimensions | 820 mm × 600 mm × height adjustable from 514 mm to 2519 mm |
| | Lifting Speed | 50 mm/s |

| Equipment | Category | Specifications |
|---|---|---|
| Smartphone | Camera manufacturer | HONOR (Shenzhen, China) |
| | Camera model | ANN-AN00 |
| | Resolution | 2592 × 1944 |
| Visible light camera on the robot | Camera model | LRCP20680_1080P |
| | Resolution | 1920 × 1080 |
| Infrared thermal camera on the robot | Camera manufacturer | FLIR Systems |
| | Camera model | FLIR A300 9 Hz |
| | Resolution | 320 × 240 |

| No. | Time | General State of Hens | Capturing Tool | Image Type |
|---|---|---|---|---|
| 1 | Day 1, 7 a.m. | Looking around | Smartphone | RGB |
| 2 | Day 2, 1 p.m. | Looking around | Robot | RGB + TIR |
| 3 | Day 3, 7 p.m. | Resting | Robot | RGB |
| 4 | Day 4, 9 p.m. | Resting | Robot | RGB + TIR |
| 5 | Day 5, 11 a.m. | Feeding | Smartphone | RGB |
| 6 | Day 6, 5 p.m. | Feeding | Robot | RGB + TIR |
| 7 | Day 7, 2 p.m. | Manual handling | Smartphone | RGB |
| 8 | Day 8, 11:30 a.m. | Large-scale movement of feed cart | Robot | RGB |
| 9 | Day 9, 11 a.m. | Pecking | Smartphone | RGB |
| 10 | Day 10, 4:30 p.m. | Egg collection device movement | Smartphone | RGB |
| 11 | Day 11, 9 a.m. | Excited | Robot | RGB + TIR |
| 12 | Day 12, 3 p.m. | Excited | Robot | RGB |

| Serial Number | Criteria | Content |
|---|---|---|
| 1 | Resolution | 2592 × 1944, 1920 × 1080, 320 × 240. |
| 2 | Clarity | Delete blurry or out-of-focus images. |
| 3 | Environmental Variation | The henhouse has 4 levels, with samples from each level accounting for 25%. |
| 4 | Sample Quantity | More than 6 images per cage. |
| 5 | Image Format | .jpg |
| 6 | Number of Hens per Image | More than 1 hen |
| 7 | Action Variation | Looking around, resting, eating, pecking, excitement; each accounting for 20%. |
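Criterion 2 (deleting blurry or out-of-focus images) can be partly automated. A widely used sharpness measure is the variance of the image Laplacian: blurred images have weak second derivatives and therefore a low variance. The sketch below is an assumption about how such screening might be done, not the procedure used for this dataset; the BT.601 grayscale weights are a common convention, and the threshold of 100 is a placeholder that would need calibrating for each camera and resolution.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB image to float grayscale with ITU-R BT.601 luma weights."""
    return rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian response over the interior pixels."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def is_blurry(rgb: np.ndarray, threshold: float = 100.0) -> bool:
    """Flag an image for deletion when its Laplacian variance falls below `threshold`."""
    return laplacian_variance(to_gray(rgb)) < threshold
```

Because the score depends on image content as well as focus, flagged images would still benefit from a quick manual check before deletion.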

| Subject | Dataset of Visible Light and Thermal Infrared Images for Caged Laying Hens, Precision Laying Hen Farming. |
|---|---|
| Specific Academic Field | Deep learning-based image recognition, counting, health monitoring, and behavioral analysis of caged laying hens. |
| Data Formats | Raw images; XML, TXT, and JSON annotations. |
| Data Types | Visible light (RGB) images, thermal infrared (TIR) images. |
| Data Acquisition | A chicken inspection robot equipped with a visible light camera and an infrared thermal imager, together with a smartphone, was used to collect images of laying hens in various poses on a large-scale poultry farm. The dataset comprises 61,133 RGB and TIR images with resolutions of 2592 × 1944, 1920 × 1080, and 320 × 240. The compressed dataset is 76.1 GB and can be downloaded in ZIP format. |
| Data Source Location | Country: China; City: Shengzhou, Hebei; Institution: Shengzhou Xinghuo Livestock and Poultry Professional Cooperative. |
| Data Accessibility | Repository name: BClayinghens; direct URL to the data: https://github.com/maweihong/BClayinghens.git (accessed on 1 September 2024) |
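The annotations are distributed in XML, TXT, and JSON formats. Assuming, which the table above does not state, that the XML files follow the Pascal VOC layout and the TXT files follow the YOLO convention (one line per box with the class index, normalised centre coordinates, and normalised box size), a conversion between the two could look like the sketch below. The class name `head` and the mapping `{"head": 0}` are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def voc_xml_to_yolo(xml_text: str, class_ids: dict[str, int]) -> list[str]:
    """Convert one Pascal VOC-style annotation string to YOLO-format text lines.

    Each output line is "class_id x_center y_center width height", with all
    coordinates normalised by the image width and height.
    """
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.findtext("width"))
    img_h = float(size.findtext("height"))
    lines = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        box = obj.find("bndbox")
        x1, y1, x2, y2 = (float(box.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        xc = (x1 + x2) / 2.0 / img_w
        yc = (y1 + y2) / 2.0 / img_h
        bw = (x2 - x1) / img_w
        bh = (y2 - y1) / img_h
        lines.append(f"{class_ids[name]} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines
```

For a 1920 × 1080 image with one box from (960, 540) to (1152, 756), the function returns `["0 0.550000 0.600000 0.100000 0.200000"]`.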

| Model | Model Size (MB) | Parameters (M) | Computation (GFLOPs) | Precision (%) | Recall (%) | mAP50 (%) |
|---|---|---|---|---|---|---|
| RT-DETR-l | 66.2 | 32.0 | 103.4 | 93.69 | 93.35 | 96.78 |
| RT-DETR-ResNet50 | 86.0 | 41.9 | 125.6 | 94.42 | 93.84 | 96.54 |
| RT-DETR-ResNet101 | 124.2 | 60.9 | 186.2 | 92.41 | 92.18 | 95.94 |
| RT-DETR-x | 135.4 | 65.5 | 222.5 | 93.29 | 92.88 | 96.93 |
| YOLOv5n | 3.9 | 1.9 | 4.5 | 94.52 | 94.92 | 97.52 |
| YOLOv5s | 14.4 | 7.2 | 16.5 | 95.98 | 95.82 | 97.54 |
| YOLOv5m | 42.2 | 21.2 | 49.0 | 96.27 | 95.13 | 97.59 |
| YOLOv5l | 92.8 | 46.5 | 109.1 | 95.92 | 94.87 | 97.27 |
| YOLOv5x | 173.1 | 86.7 | 205.7 | 95.59 | 95.23 | 97.35 |
| YOLOv8n | 6.3 | 3.2 | 8.7 | 95.06 | 94.16 | 97.51 |
| YOLOv8s | 22.5 | 11.2 | 28.6 | 95.97 | 94.83 | 97.38 |
| YOLOv8m | 52.1 | 25.9 | 78.9 | 96.48 | 94.89 | 97.71 |
| YOLOv8l | 88.7 | 43.7 | 165.2 | 95.98 | 94.42 | 97.55 |
| YOLOv8x | 136.7 | 68.2 | 257.8 | 96.28 | 94.29 | 97.43 |
| YOLOv9t | 6.4 | 2.0 | 7.7 | 92.24 | 92.12 | 96.68 |
| YOLOv9s | 20.3 | 7.1 | 26.4 | 94.07 | 94.69 | 97.21 |
| YOLOv9m | 66.2 | 20.0 | 76.3 | 95.97 | 95.09 | 97.92 |
| YOLOv9c | 102.8 | 25.3 | 102.1 | 95.96 | 95.54 | 97.81 |
| YOLOv9e | 140.0 | 57.3 | 189.0 | 95.85 | 95.73 | 97.87 |
| YOLOv10n | 5.8 | 2.3 | 6.7 | 91.87 | 92.54 | 95.94 |
| YOLOv10s | 16.6 | 7.2 | 21.6 | 95.18 | 94.02 | 97.24 |
| YOLOv10m | 33.5 | 15.4 | 59.1 | 94.62 | 92.54 | 96.81 |
| YOLOv10b | 41.5 | 19.1 | 92.0 | 95.62 | 93.84 | 97.13 |
| YOLOv10l | 52.2 | 24.4 | 120.3 | 95.94 | 94.04 | 97.32 |
| YOLOv10x | 64.1 | 29.5 | 160.4 | 95.74 | 93.67 | 97.17 |
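For reference, Precision = TP/(TP + FP) and Recall = TP/(TP + FN), where a prediction counts as a true positive when its best-overlapping ground-truth box has an IoU of at least 0.5 and has not already been claimed by a higher-scoring prediction; mAP50 averages, over classes, the area under the precision–recall curve at that IoU threshold. The sketch below implements these definitions for a single class (assumed here to be the chicken head) with PASCAL VOC-style matching and all-point interpolation. It is not the evaluation code behind the table above; detection toolkits differ in interpolation and matching details, so values computed this way may differ slightly.

```python
from __future__ import annotations


def iou(a, b) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds, gts, thr=0.5) -> list[int]:
    """VOC-style matching; returns 1 (TP) or 0 (FP) per prediction, by descending score."""
    flags, matched = [], set()
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        ious = [iou(box, g) for g in gts]
        j = max(range(len(gts)), key=ious.__getitem__) if gts else -1
        if j >= 0 and ious[j] >= thr and j not in matched:
            matched.add(j)
            flags.append(1)
        else:
            flags.append(0)  # overlap below thr, or best ground truth already taken
    return flags


def precision_recall(preds, gts, thr=0.5) -> tuple[float, float]:
    """Precision = TP / (TP + FP) and Recall = TP / (TP + FN) at one IoU threshold."""
    tp = sum(match(preds, gts, thr))
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


def ap50(preds, gts) -> float:
    """Average precision at IoU 0.5 with all-point interpolation (single class)."""
    if not gts:
        return 0.0
    tp = fp = 0
    rec, prec = [], []
    for f in match(preds, gts, 0.5):
        tp += f
        fp += 1 - f
        rec.append(tp / len(gts))
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing in recall
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```

Predictions are `((x1, y1, x2, y2), confidence)` pairs and ground truths are plain boxes. In a real evaluation, the TP/FP flags from all test images would be pooled and sorted by confidence before computing AP.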

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, W.; Wang, X.; Xue, X.; Li, M.; Yang, S.X.; Guo, Y.; Gao, R.; Song, L.; Li, Q. A Dataset of Visible Light and Thermal Infrared Images for Health Monitoring of Caged Laying Hens in Large-Scale Farming. Sensors 2024, 24, 6385. https://doi.org/10.3390/s24196385
