An Enhanced Transportation System for People of Determination

Abstract

1. Introduction

Problem Statement

- Most prevailing works do not consider bus stations served by more than one bus line, which makes it difficult for VIPs to recognize the relevant line.

- Route information accessed from a single source is insufficient for VIPs who are making journeys.

- VIPs still need human guidance to identify bus routes and bus numbers, or they must ask bus coordinators.

- When a long queue of buses is waiting at the bus station, it is difficult for VIPs to identify the correct bus, especially when the desired bus is farther away.

- Background noise can corrupt the conversion of the VIP’s voice data, so that incorrect information is obtained.

- Some existing works use computer vision to capture the user’s surroundings and identify buses. However, this approach fails when the nearby buses are not the ones the VIP needs.

Main goal: The proposed study aims to detect and select the optimal bay according to the visually impaired person’s query, in order to improve the accessibility of bus transport. Selecting the optimal bay mitigates the difficulties and obstacles the VIP faces in locating the desired bus within the bus station, which makes bus transportation more efficient for the visually impaired. The major contributions of the proposed transportation system for the visually impaired are as follows:

- The optimal bay among the detected bus bays is selected using the EPBFTOA method, so that the appropriate bus line in the bus station can be recognized.

- To access the correct bus, information is obtained from multiple sources: the GPS location, voice data, bus images, and bus routes.

- To minimize the need for human guidance, the VIP’s speech data are acquired through an Internet of Things (IoT) application on a mobile device to retrieve the details of the desired bus.

- VIPs have difficulty reaching the correct bus within a long queue of buses. Thus, an RFID sensor is used to notify the driver of the bus that should carry the VIP.

- To retrieve correct information from the VIP, their voice data are pre-processed with the Mean Cross-Covariance Spectral Subtraction (MCC-SS) approach to remove background noise.

- The text on the bus is segmented using the SSCEAD method, and the Levenshtein Distance (LD) measure is used to determine route similarity.

2. Literature Survey

3. Proposed Methodology

3.1. Speech Input

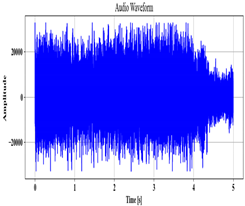

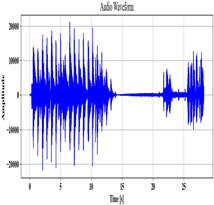

3.2. Speech Pre-Processing
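Speech pre-processing uses the Mean Cross-Covariance Spectral Subtraction (MCC-SS) approach named among the contributions, but how its mean cross-covariance term is computed is not recoverable from this text. As a hedged illustration of the spectral-subtraction family MCC-SS belongs to, the Python/NumPy sketch below implements plain (Boll-style) magnitude spectral subtraction, not MCC-SS itself. It assumes the first `noise_frames` frames contain background noise only and uses a Hann window with 50% overlap; the function name and all parameter defaults are hypothetical.

```python
import numpy as np


def spectral_subtraction(signal, frame_len=256, hop=128, noise_frames=5, floor=0.02):
    """Plain magnitude spectral subtraction (a stand-in for MCC-SS).

    Assumes the first `noise_frames` frames contain background noise only.
    """
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    starts = [i * hop for i in range(n_frames)]
    # Short-time Fourier transform: one windowed real FFT per frame.
    frames = np.stack([signal[s:s + frame_len] * window for s in starts])
    spectrum = np.fft.rfft(frames, axis=1)
    magnitude, phase = np.abs(spectrum), np.angle(spectrum)
    # Noise estimate: average magnitude spectrum of the leading frames.
    noise_mag = magnitude[:noise_frames].mean(axis=0)
    # Subtract, but keep a small spectral floor instead of negative values.
    cleaned = np.maximum(magnitude - noise_mag, floor * magnitude)
    # Back to the time domain, reusing the noisy phase.
    frames_out = np.fft.irfft(cleaned * np.exp(1j * phase), n=frame_len, axis=1)
    # Weighted overlap-add, normalized by the summed squared window.
    out = np.zeros(len(signal))
    norm = np.zeros(len(signal))
    for f, s in zip(frames_out, starts):
        out[s:s + frame_len] += f * window
        norm[s:s + frame_len] += window ** 2
    return np.divide(out, norm, out=np.zeros_like(out), where=norm > 1e-3)
```

The spectral floor (`floor`) keeps a small fraction of the noisy magnitude instead of zeroing bins, a common way to limit the "musical noise" that hard subtraction produces.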

3.3. Speech-to-Text Conversion

3.4. Bus Bay Detection

- The VIP’s destination, in text form.

- The VIP’s location, based on GPS.

- Bay details for numerous locations, obtained from the cloud database.

- Input layer

- Hidden layer

- Output layer

| Algorithm 1 Pseudocode for ArcGRNN |
|---|
| Input: VIP’s destination, location, bay details |
| Output: Detected bay |
| Begin |
| Initialize parameters |
| For |
| While input |
| Calculate hidden layer input |
| Evaluate activation function |
| Hidden layer output |
| Vectorize output layer’s input |
| Find final output |
| End while |
| End for |
| Obtain detected bays |
| End |
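Algorithm 1 follows the layer structure of a general regression neural network (GRNN). What the "Arc" modification changes is not recoverable here, so the Python/NumPy sketch below is a standard Specht-style GRNN with a Gaussian hidden-layer activation. Assumptions: each query is a numeric feature vector built from the destination, GPS location, and bay details, and each stored target is a one-hot bay label, so the detected bay is the arg-max of the output. The class name `GRNN`, the parameter `sigma`, and the example data are hypothetical.

```python
import numpy as np


class GRNN:
    """Standard general regression neural network (Specht, 1991).

    A stand-in for ArcGRNN: the 'Arc' activation is NOT reproduced here.
    """

    def __init__(self, sigma=0.5):
        self.sigma = sigma  # kernel width (smoothing parameter)

    def fit(self, X, Y):
        # Training a GRNN just stores the patterns (one hidden neuron each).
        self.X = np.asarray(X, dtype=float)
        self.Y = np.asarray(Y, dtype=float)
        return self

    def predict(self, Xq):
        # Input layer: pass the query feature vectors through unchanged.
        Xq = np.atleast_2d(np.asarray(Xq, dtype=float))
        # Hidden (pattern) layer: squared distance to every stored pattern...
        d2 = ((Xq[:, None, :] - self.X[None, :, :]) ** 2).sum(axis=2)
        # ...passed through a Gaussian activation.
        k = np.exp(-d2 / (2.0 * self.sigma ** 2))
        # Output layer: kernel-weighted average of the stored targets.
        return (k @ self.Y) / np.maximum(k.sum(axis=1, keepdims=True), 1e-12)


# Hypothetical, pre-scaled features: [destination id, latitude, longitude].
X_train = np.array([[0.1, 0.2, 0.3], [0.9, 0.8, 0.7], [0.5, 0.5, 0.5]])
bay_labels = np.eye(3)  # one-hot targets for bays 0, 1 and 2
model = GRNN(sigma=0.1).fit(X_train, bay_labels)
scores = model.predict([[0.12, 0.21, 0.29]])
detected_bay = int(scores.argmax(axis=1)[0])
```

Because a GRNN only stores patterns, "training" is instantaneous; the main design choice is `sigma`, which trades smoothness against how strongly the nearest stored pattern dominates the output.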

3.5. Optimal Bay Selection

- Initialization

- Fitness

- Chemotaxis

- Swarming

- Reproduction

- Elimination and Dispersal

| Algorithm 2 Pseudocode for EPBFTOA |
|---|
| Input: Detected bay |
| Output: Optimal bus bay |
| Begin |
| Initialize bacteria population, iteration |
| Calculate fitness |
| While |
| For |
| Move bacteria by chemotaxis |
| Swarm bacteria |
| Reproduce bacteria |
| Eliminate and disperse |
| If |
| Optimal bus bay |
| Else |
| Original position |
| End if |
| End for |
| End while |
| Return optimal bus bay |
| End |
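Algorithm 2 follows the four stages of Passino’s bacterial foraging optimization algorithm (BFOA): chemotaxis, swarming, reproduction, and elimination-dispersal. The enhancements that distinguish EPBFTOA from plain BFOA, and its fitness function for bay selection, are not recoverable from this text. The Python/NumPy sketch below therefore implements only basic BFOA minimizing a generic fitness function; the function name `bfoa`, all parameter defaults, and the swarming coefficients (the commonly used 0.1, 0.2, 0.1, and 10) are assumptions.

```python
import numpy as np


def bfoa(fitness, dim, bounds, n_bacteria=20, n_chem=50, n_swim=4,
         n_repro=4, n_elim=2, p_elim=0.25, step=0.1, seed=0):
    """Basic bacterial foraging optimization; minimizes `fitness`."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pos = rng.uniform(lo, hi, size=(n_bacteria, dim))
    best_x, best_f = None, np.inf

    def swarm(theta, population):
        # Swarming: attraction (depth 0.1, width 0.2) and repulsion (0.1, 10).
        d2 = ((population - theta) ** 2).sum(axis=1)
        return np.sum(-0.1 * np.exp(-0.2 * d2) + 0.1 * np.exp(-10.0 * d2))

    def cost(i):
        nonlocal best_x, best_f
        f = fitness(pos[i])
        if f < best_f:  # remember the best position ever evaluated
            best_x, best_f = pos[i].copy(), f
        return f + swarm(pos[i], pos)

    for _ in range(n_elim):
        for _ in range(n_repro):
            health = np.zeros(n_bacteria)
            for _ in range(n_chem):
                for i in range(n_bacteria):
                    j_last = cost(i)
                    # Chemotaxis, tumble: one step in a random unit direction.
                    delta = rng.uniform(-1.0, 1.0, dim)
                    delta /= np.linalg.norm(delta)
                    pos[i] = np.clip(pos[i] + step * delta, lo, hi)
                    j = cost(i)
                    # Chemotaxis, swim: keep going while the cost improves.
                    for _ in range(n_swim):
                        if j >= j_last:
                            break
                        j_last = j
                        pos[i] = np.clip(pos[i] + step * delta, lo, hi)
                        j = cost(i)
                    health[i] += j  # accumulated cost (lower is healthier)
            # Reproduction: the healthier half splits and replaces the rest.
            order = np.argsort(health)
            half = n_bacteria // 2
            pos[order[-half:]] = pos[order[:half]]
        # Elimination-dispersal: relocate each bacterium with prob. p_elim.
        mask = rng.random(n_bacteria) < p_elim
        pos[mask] = rng.uniform(lo, hi, size=(mask.sum(), dim))
    return best_x, best_f
```

For example, `bfoa(lambda v: float(np.sum(v ** 2)), dim=2, bounds=(-5.0, 5.0))` minimizes the sphere function, whose minimum lies at the origin. To use such a search for bay selection, one hypothetical option is to encode each detected bay as a point in feature space and snap the returned `best_x` to the nearest candidate bay.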

3.6. Image Capturing and Preprocessing

3.6.1. Step 1: Noise Removal

3.6.2. Step 2: Contrast Enhancement
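The specific filters used for noise removal and contrast enhancement are not named in the surviving text. As a hedged illustration of what these two steps typically do, the Python/NumPy sketch below uses two common stand-ins on 8-bit grayscale frames: a 3 × 3 median filter for impulse noise (Step 1) and global histogram equalization for contrast (Step 2). The function names are hypothetical, and the authors’ actual filters may differ.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(img, k=3):
    """Step 1 stand-in: k x k median filter with edge padding."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    # One k x k window per output pixel, shape (H, W, k, k).
    windows = sliding_window_view(padded, (k, k))
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)


def equalize_histogram(img):
    """Step 2 stand-in: global histogram equalization of a uint8 image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    total = img.size
    if total == cdf_min:  # single gray level: nothing to stretch
        return img.copy()
    # Map each gray level through the normalized cumulative histogram.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]
```

Median filtering is applied before equalization so that isolated noisy pixels are not stretched along with the rest of the histogram.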

3.7. Bus Detection

3.8. Text Identification and Segmentation

- Encoder

- Decoder

3.9. Text Extraction

3.10. Similarity Check

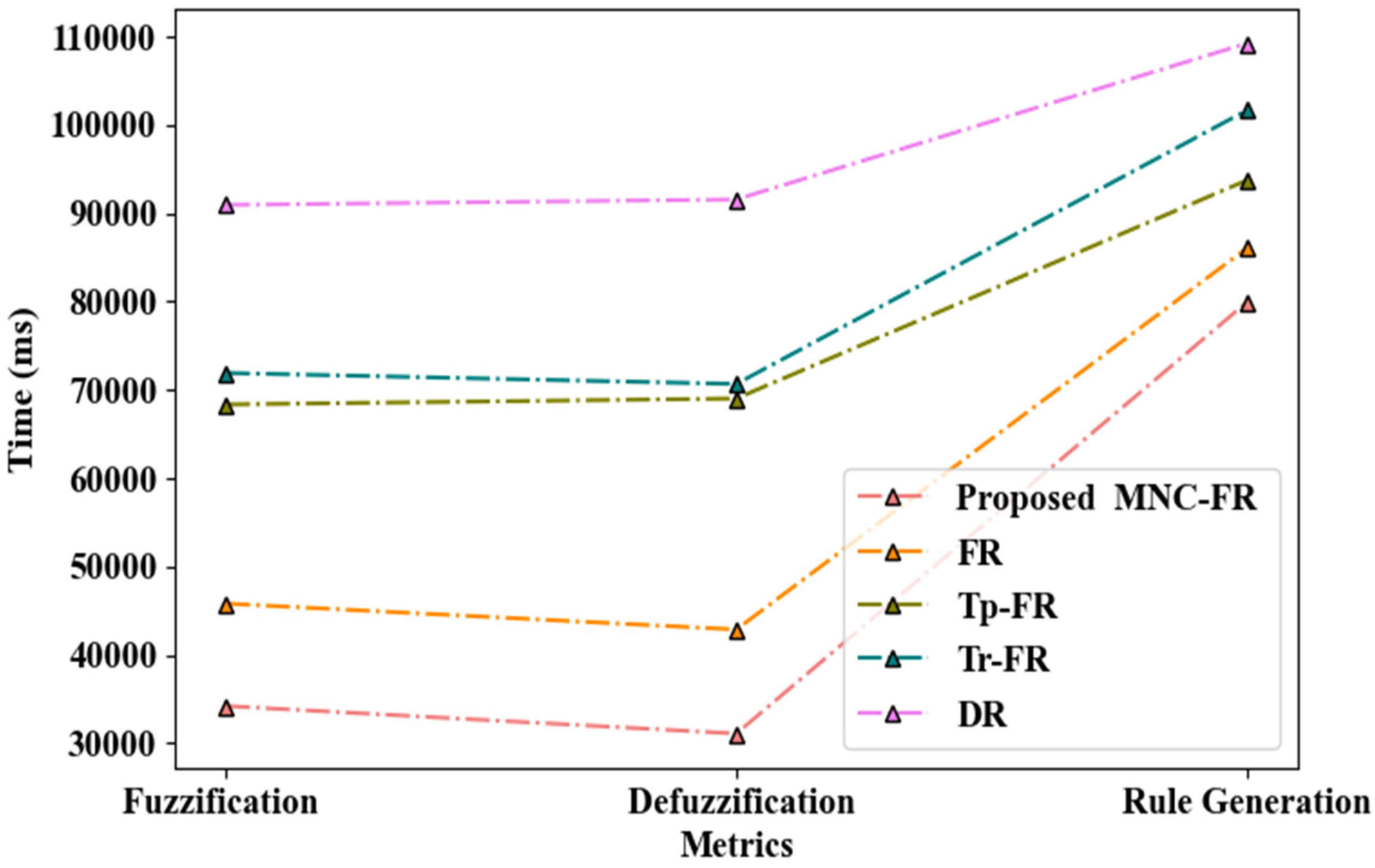
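The contributions state that the Levenshtein Distance (LD) measure determines route similarity between the text extracted from the bus and the route the VIP requested. The Python sketch below shows the standard dynamic-programming LD together with one possible normalization to a [0, 1] similarity; the case-folding, whitespace stripping, normalization by the longer string, and both function names are assumptions rather than the authors’ exact definition.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (or match)
        prev = curr
    return prev[-1]


def route_similarity(extracted: str, requested: str) -> float:
    """Normalized similarity in [0, 1]; 1.0 means identical after case-folding."""
    a, b = extracted.strip().casefold(), requested.strip().casefold()
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))
```

Keeping only two rows of the DP table makes the memory cost linear in the length of the requested route, which is ample for short route strings.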

3.11. Decision-Making

- Rule

- Membership function

- Fuzzification

- Fuzzy relationship

- Defuzzification
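Section 3.11 lists the components of a fuzzy decision stage, but its input variables and rule base are not recoverable here. The Python/NumPy sketch below is a minimal Mamdani-style inference built from those five components, with hypothetical inputs: a route-similarity score (for example from the Levenshtein check) and a bay-match confidence, both in [0, 1], producing a boarding-confidence score. The fuzzy sets, the three rules, and all names are assumptions for illustration only.

```python
import numpy as np


def tri(x, a, b, c):
    """Membership function: triangle with feet a, c and peak b."""
    x = np.asarray(x, dtype=float)
    left = (x - a) / (b - a) if b != a else (x >= b).astype(float)
    right = (c - x) / (c - b) if c != b else (x <= b).astype(float)
    return np.clip(np.minimum(left, right), 0.0, 1.0)


# Hypothetical fuzzy sets shared by inputs and output on [0, 1].
SETS = {"low": (0.0, 0.0, 0.5), "mid": (0.0, 0.5, 1.0), "high": (0.5, 1.0, 1.0)}


def boarding_confidence(similarity, bay_match, resolution=201):
    universe = np.linspace(0.0, 1.0, resolution)
    # Fuzzification: membership degree of each crisp input in each set.
    s = {name: float(tri(similarity, *abc)) for name, abc in SETS.items()}
    m = {name: float(tri(bay_match, *abc)) for name, abc in SETS.items()}
    # Rules with fuzzy relationships AND = min, OR = max (hypothetical).
    rules = [
        (min(s["high"], m["high"]), "high"),
        (max(s["mid"], m["mid"]), "mid"),
        (max(s["low"], m["low"]), "low"),
    ]
    # Implication (clip each output set) and aggregation (max).
    aggregated = np.zeros_like(universe)
    for strength, out_set in rules:
        clipped = np.minimum(strength, tri(universe, *SETS[out_set]))
        aggregated = np.maximum(aggregated, clipped)
    if aggregated.sum() == 0:
        return 0.0
    # Defuzzification: centroid of the aggregated output set.
    return float((universe * aggregated).sum() / aggregated.sum())
```

Centroid defuzzification is used here because it varies smoothly with the rule strengths; a threshold on the returned score (also an assumption) could then decide whether to announce the bus to the VIP.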

4. Results and Discussions

4.1. Dataset Description

4.2. Performance Assessment

4.3. Comparative Analysis with Related Works

Comparative Analysis with Similar Works Based on the MS-COCO Dataset

4.4. Practical Applicability of the Proposed System

4.5. Discussions and Limitations

5. Conclusions

Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Choudhary, S.; Bhatia, V.; Ramkumar, K.R. IoT Based Navigation System for Visually Impaired People. In Proceedings of the ICRITO 2020—IEEE 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Direction), Noida, India, 13–14 October 2022; pp. 521–525.

2. Yadav, D.K.; Mookherji, S.; Gomes, J.; Patil, S. Intelligent Navigation System for the Visually Impaired—A Deep Learning Approach. In Proceedings of the 4th International Conference on Computing Methodologies and Communication, ICCMC, Erode, India, 11–13 March 2020; pp. 652–659.

3. Kuriakose, B.; Shrestha, R.; Sandnes, F.E. DeepNAVI: A deep learning based smartphone navigation assistant for people with visual impairments. Expert Syst. Appl. 2023, 212, 118720.

4. Khan, W.; Hussain, A.; Khan, B.M.; Crockett, K. Outdoor mobility aid for people with visual impairment: Obstacle detection and responsive framework for the scene perception during the outdoor mobility of people with visual impairment. Expert Syst. Appl. 2023, 228, 120464.

5. Poornima, J.; Vishnupriyan, J.; Vijayadhasan, G.K.; Ettappan, M. Voice assisted smart vision stick for visually impaired. Int. J. Control. Autom. 2020, 13, 512–519.

6. Costa, C.; Paiva, S.; Gavalas, D. Multimodal Route Planning for Blind and Visually Impaired People; Lecture Notes in Intelligent Transportation and Infrastructure; Springer Nature: Cham, Switzerland, 2023; pp. 1017–1026.

7. Ashiq, F.; Asif, M.; Bin Ahmad, M.; Zafar, S.; Masood, K.; Mahmood, T.; Mahmood, M.T.; Lee, I.H. CNN-Based Object Recognition and Tracking System to Assist Visually Impaired People. IEEE Access 2022, 10, 14819–14834.

8. Yohannes, E.; Lin, P.; Lin, C.Y.; Shih, T.K. Robot Eye: Automatic Object Detection and Recognition Using Deep Attention Network to Assist Blind People. In Proceedings of the 2020 International Conference on Pervasive Artificial Intelligence, ICPAI, Taipei, Taiwan, 3–5 December 2020; pp. 152–157.

9. Gowda, M.C.P.; Hajare, R.; Pavan, P.S.S. Cognitive IoT System for visually impaired: Machine learning approach. Mater. Today Proc. 2021, 49, 529–535.

10. Martinez-Cruz, S.; Morales-Hernandez, L.A.; Perez-Soto, G.I.; Benitez-Rangel, J.P.; Camarillo-Gomez, K.A. An Outdoor Navigation Assistance System for Visually Impaired People in Public Transportation. IEEE Access 2021, 9, 130767–130777.

11. Akanda, M.R.R.; Khandaker, M.M.; Saha, T.; Haque, J.; Majumder, A.; Rakshit, A. Voice-controlled smart assistant and real-time vehicle detection for blind people. Lect. Notes Electr. Eng. 2020, 672, 287–297.

12. Agarwal, A.; Agarwal, K.; Agrawal, R.; Patra, A.K.; Mishra, A.K.; Nahak, N. Wireless bus identification system for visually impaired person. In Proceedings of the 1st Odisha International Conference on Electrical Power Engineering, Communication and Computing Technology, ODICON, Bhubaneswar, India, 8–9 January 2021; pp. 1–6.

13. de Andrade, H.G.V.; Borges, D.d.M.; Bernardes, L.H.C.; de Albuquerque, J.L.A.; da Silva-Filho, A.G. BlindMobi: A system for bus identification, based on Bluetooth Low Energy, for people with visual impairment. In Proceedings of the XXXVII Simpósio Brasileiro de Redes de Computadores e Sistemas Distribuídos, Gramado, Brazil, 6–10 May 2019; pp. 391–402.

14. Ramaswamy, T.; Vaishnavi, M.; Prasanna, S.S.; Archana, T. Bus identification for blind people using rfid. Int. Res. J. Mod. Eng. Technol. Sci. 2022, 4, 3478–3483.

15. Kumar, K.A.; Sreekanth, P.; Reddy, P.R. Bus Identification Device for Blind People using Arduino. CVR J. Sci. Technol. 2019, 16, 48–52.

16. Tan, J.K.; Hamasaki, Y.; Zhou, Y.; Kazuma, I. A method of identifying a public bus route number employing MY VISION. J. Robot. Netw. Artif. Life 2021, 8, 224–228.

17. Mueen, A.; Awedh, M.; Zafar, B. Multi-obstacle aware smart navigation system for visually impaired people in fog connected IoT-cloud environment. Health Inform. J. 2022, 28, 14604582221112609.

18. Dash, S.; Pani, S.K.; Abraham, A.; Liang, Y. Advanced Soft Computing Techniques in Data Science, IoT and Cloud Computing. In Studies in Big Data; Springer International Publishing: Cham, Switzerland, 2021; Volume 89.

19. Bouteraa, Y. Design and development of a wearable assistive device integrating a fuzzy decision support system for blind and visually impaired people. Micromachines 2021, 12, 1082.

20. Mukhiddinov, M.; Cho, J. Smart glass system using deep learning for the blind and visually impaired. Electronics 2021, 10, 2756.

21. Feng, J.; Beheshti, M.; Philipson, M.; Ramsaywack, Y.; Porfiri, M.; Rizzo, J.R. Commute Booster: A Mobile Application for First/Last Mile and Middle Mile Navigation Support for People with Blindness and Low Vision. IEEE J. Transl. Eng. Health Med. 2023, 11, 523–535.

22. Arifando, R.; Eto, S.; Wada, C. Improved YOLOv5-Based Lightweight Object Detection Algorithm for People with Visual Impairment to Detect Buses. Appl. Sci. 2023, 13, 5802.

23. Suman, S.; Mishra, S.; Sahoo, K.S.; Nayyar, A. Vision Navigator: A Smart and Intelligent Obstacle Recognition Model for Visually Impaired Users. Mob. Inf. Syst. 2022, 2022, 9715891.

24. Pundlik, S.; Shivshanker, P.; Traut-Savino, T.; Luo, G. Field evaluation of a mobile app for assisting blind and visually impaired travelers to find bus stops. arXiv 2023, arXiv:2309.10940.

25. Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697.

26. Dubey, S.; Olimov, F.; Rafique, M.A.; Jeon, M. Improving small objects detection using transformer. J. Vis. Commun. Image Represent. 2022, 89, 103620.

27. Ancha, V.K.; Sibai, F.N.; Gonuguntla, V.; Vaddi, R. Utilizing YOLO Models for Real-World Scenarios: Assessing Novel Mixed Defect Detection Dataset in PCBs. IEEE Access 2024, 12, 100983–100990.

28. Said, Y.; Atri, M.; Albahar, M.A.; Ben Atitallah, A.; Alsariera, Y.A. Obstacle Detection System for Navigation Assistance of Visually Impaired People Based on Deep Learning Techniques. Sensors 2023, 23, 5262.

29. Quang, T.N.; Lee, S.; Song, B.C. Object detection using improved bi-directional feature pyramid network. Electronics 2021, 10, 746.

30. Naseer, A.; Almujally, N.A.; Alotaibi, S.S.; Alazeb, A.; Park, J. Efficient Object Segmentation and Recognition Using Multi-Layer Perceptron Networks. Comput. Mater. Contin. 2024, 78, 1381–1398.

| Related Works | Objective | Methods Used | Advantages | Result | Drawbacks |
|---|---|---|---|---|---|
| [16] | Identification of bus route numbers | RF algorithm and pattern matching method | Bus details were accurately captured using the Lucas–Kanade tracker | Achieved a higher detection rate | Feature relationships were not recognized by RF, resulting in inaccurate detection. |
| [17] | Navigation system for the VIP | YOLOv3, Environment-aware Bald Eagle Search, and Actor–Critic algorithm | The person’s query was analyzed, and obstacles on the path were detected | Higher latency and detection accuracy | Trade-offs occurred when using the Actor–Critic algorithm for navigation decisions. |
| [18] | Wearable device for the safe travel of VIPs | YOLOv3 and transfer learning method | The detected bus board number is communicated to the person in voice format | Attained higher accuracy | Smaller objects were not detected by the anchor boxes of YOLOv3. |
| [19] | Navigation device to assist blind persons | Robot Operating System and fuzzy logic algorithm | The person was alerted to safer directions | Obtained fewer collisions | The fuzzy rules were developed based on assumptions, thereby degrading decision efficiency. |
| [20] | Smart glass system for the independent movement of blind persons at night | U2-Net and Tesseract model | The path image was expressed as a tactile graphic, and the related information was translated into speech | Detected objects in the path with improved accuracy and precision | Text was not properly analyzed in low-quality and poorly lit images. |
| [21] | Mobile application-based navigation assistance for blind persons | CB application and OCR system | Way-finding footage provided accurate information on the path | The path was identified with higher accuracy, precision, and F1-score | Image data in different formats could not be processed by the OCR. |
| [22] | Lightweight bus detection network for VIPs | Improved YOLO with slim-scale detection module | The lightweight approach facilitated real-time bus detection | The bus was detected with high accuracy and precision | YOLO involved fewer parameters, resulting in sub-optimal detection. |
| [23] | Vision Navigator framework for blind persons and VIPs | RNN and single-shot mechanism | A stick and sensor-equipped shoes were used to identify obstacles on the path | Achieved a high accuracy rate | Complex data patterns were not learned by the RNN due to vanishing gradients. |
| [24] | Mobile application-centered bus stop identification system for VIPs | Neural network-based AAA | Bus stop signs were processed to recognize bus stops | The distance (deviation) between the actual and identified bus stop locations was lower | The application required a large amount of labeled data and high-quality images for detection. |
| [25] | Wearable device assistance for the navigation of VIPs | CNN | The device was developed with a built-in camera for better object detection | Demonstrated higher safety and efficiency | Sequential data were not effectively learned by the CNN, which restricted its real-time object detection performance. |

[Table of sample outputs for each processing stage: input speech signal (.mp3 audio file), noise-removed signal, speech-to-text conversion, input image, noise-removed image, contrast-enhanced image, identified bus, text identification, text extraction]

| Methods | Optimal Bay Selection Time (ms) | Average Fitness |
|---|---|---|
| Proposed EPBFTOA | 247,891 | 9.068 |
| BFOA | 389,124 | 19.8787 |
| MRFO | 596,757 | 42.0519 |
| AVOA | 804,628 | 67.5498 |
| BESO | 997,245 | 79.8054 |

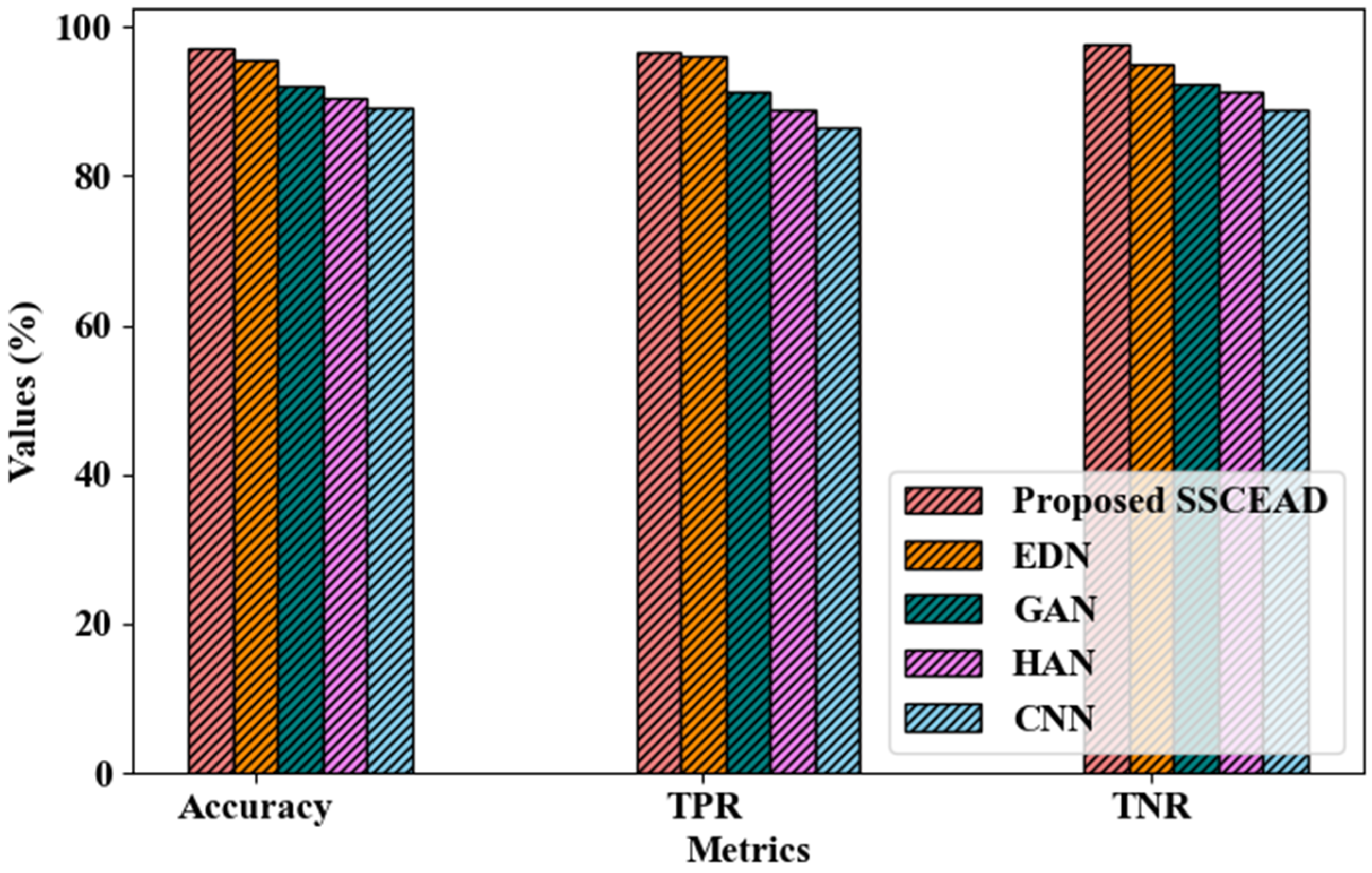

| Techniques | Specificity (%) | Sensitivity (%) | Processing Time (ms) |

|---|---|---|---|

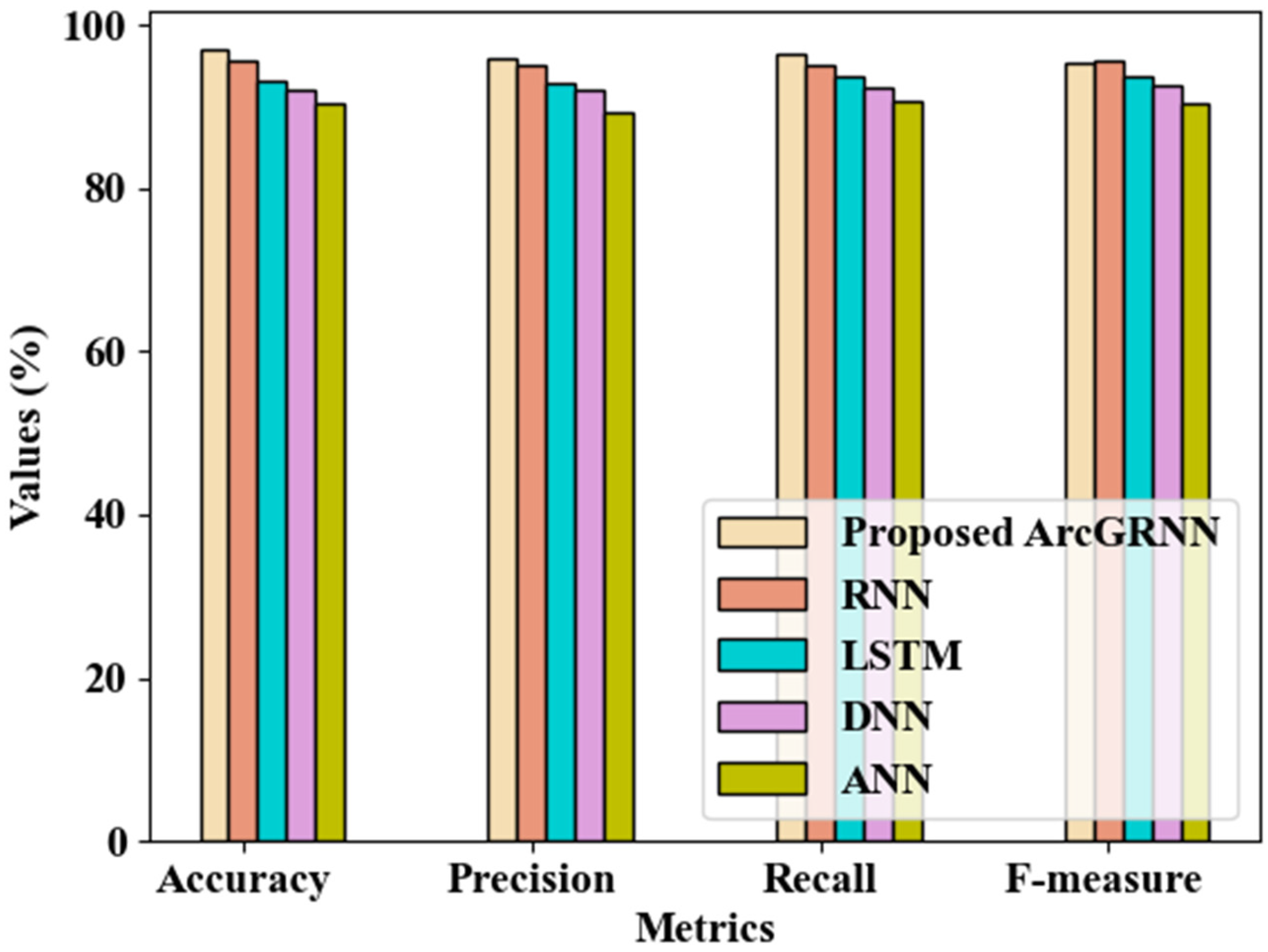

| Proposed ArcGRNN | 95.714 | 96.328 | 174,405 |

| RNN | 94.285 | 95.019 | 407,395 |

| LSTM | 93.09 | 93.652 | 428,012 |

| DNN | 91.788 | 92.301 | 562,513 |

| ANN | 90.394 | 90.595 | 623,048 |

| Methods | PPV (%) | NPV (%) |
|---|---|---|
| Proposed ArcGRNN | 96.74 | 95.83 |
| RNN | 94.20 | 94.12 |
| LSTM | 93.41 | 92.35 |
| DNN | 92.08 | 91.37 |
| ANN | 90.16 | 89.36 |
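The tables above report specificity, sensitivity, PPV (positive predictive value), and NPV (negative predictive value). Their standard confusion-matrix definitions are given below for reference, where TP, TN, FP, and FN are the true-positive, true-negative, false-positive, and false-negative counts; what the authors counted as a positive detection is not recoverable from this text.

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\text{Specificity} = \frac{TN}{TN + FP}, \qquad
\text{PPV} = \frac{TP}{TP + FP}, \qquad
\text{NPV} = \frac{TN}{TN + FN}
$$

Sensitivity and PPV both share TP in the numerator but differ in which error they penalize: missed detections (FN) for sensitivity, false alarms (FP) for PPV.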

| Techniques | Detection Time (ms) |

|---|---|

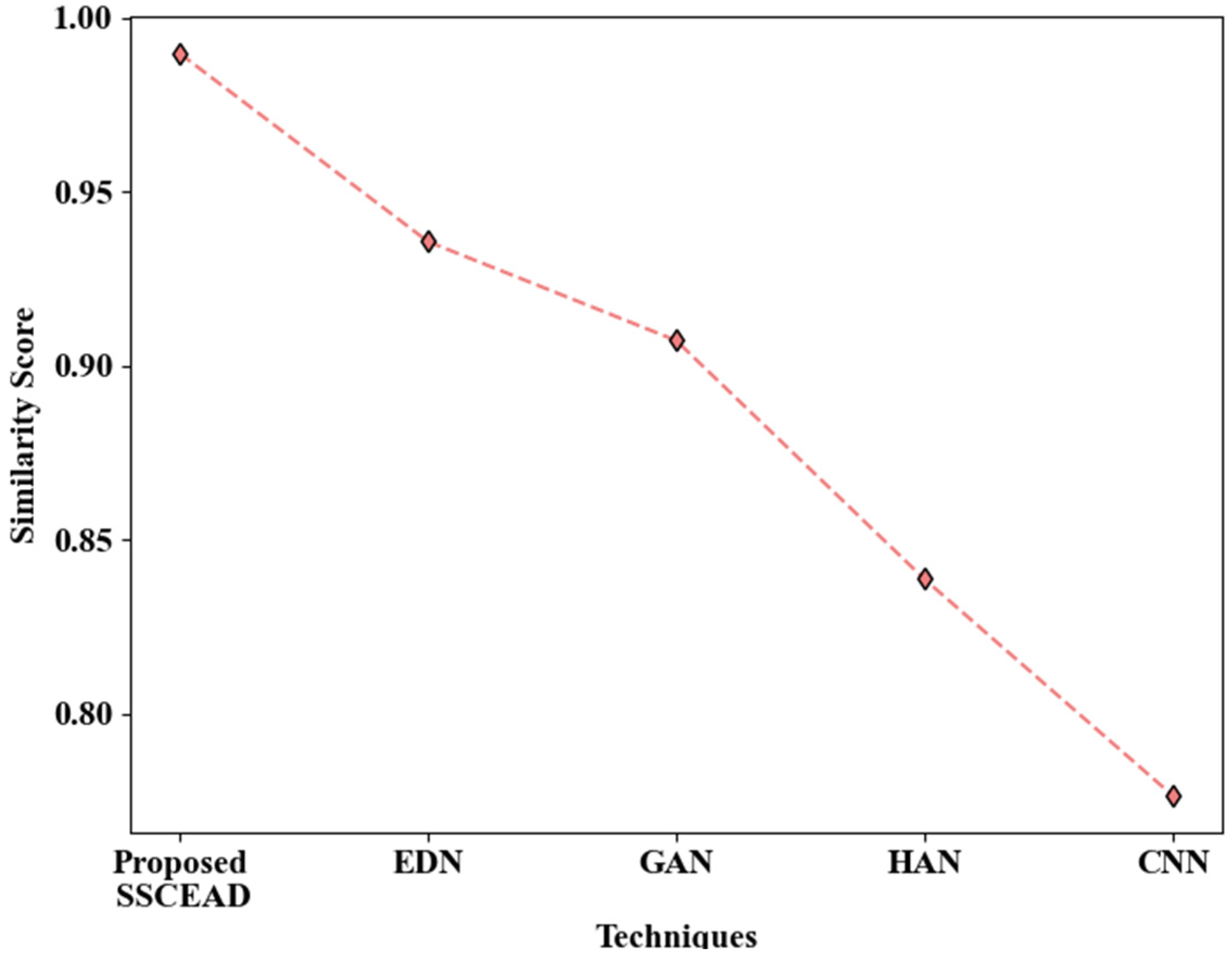

| Proposed SSCEAD | 160,738 |

| EDN | 286,059 |

| GAN | 215,191 |

| HAN | 260,425 |

| CNN | 321,728 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Perumal, U.; Jeribi, F.; Alhameed, M.H. An Enhanced Transportation System for People of Determination. Sensors 2024, 24, 6411. https://doi.org/10.3390/s24196411