The Role of Name, Origin, and Voice Accent in a Robot’s Ethnic Identity

Abstract

1. Introduction

Robot Ethnicity

2. Literature Review

2.1. Robot Origin and In-Group Out-Group Decisions

2.2. Voice Characteristics and Accent

2.3. Robot Gender

3. Experiment

3.1. Method

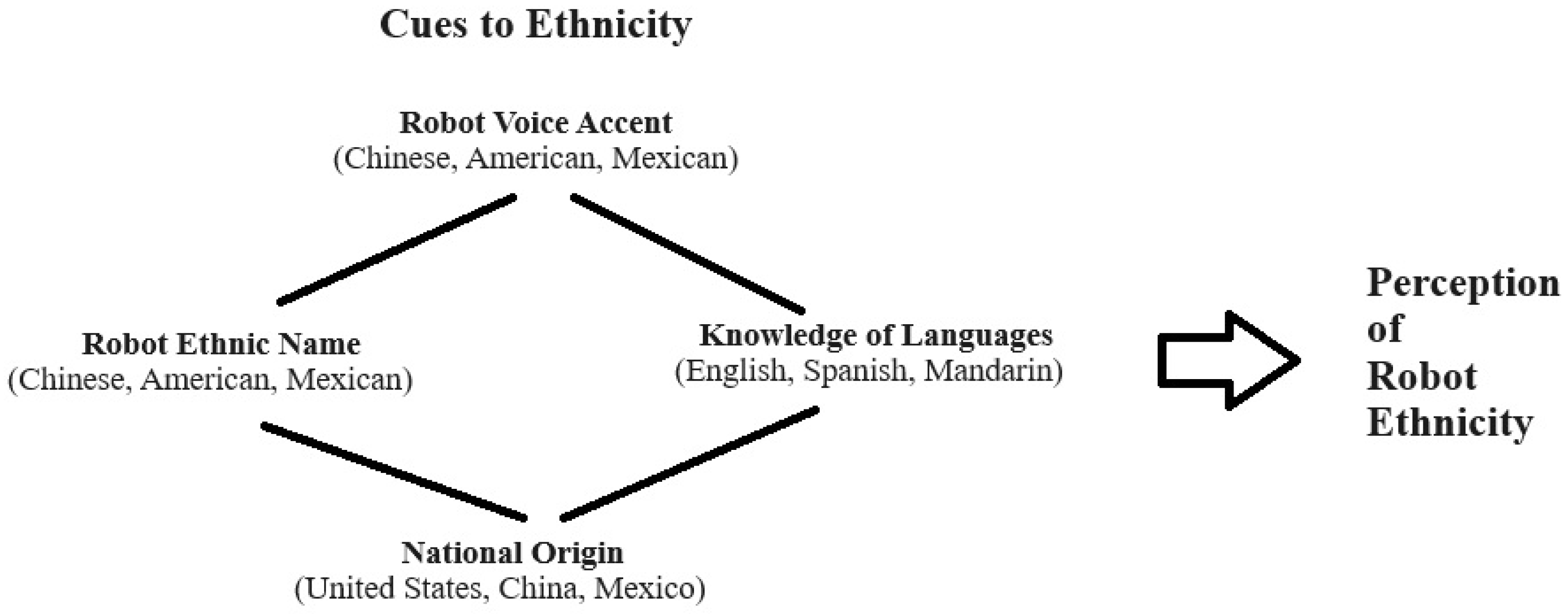

- RQ1: Will voice accent serve as an effective cue for signaling robot ethnicity?

  - According to Torre and Le Maguer [46], accents inform a listener about where the speaker comes from; it is therefore predicted that voice accent will be an effective cue signaling robot ethnicity. This question fits the objectives of the study because it evaluates a cue to ethnicity that previous studies have not directly investigated and that may trigger an ethnic identity for a robot.

- RQ2: Is robot origin an effective cue for signaling robot ethnicity?

  - Eyssel and Kuchenbrandt [10] showed that German participants who were told that a robot’s national origin was German or Turkish preferred the in-group robot with the German identity. Based on [10], it is predicted that robot origin will be an effective cue signaling robot ethnicity. Because the research aims to determine whether cues to ethnicity lead to an ethnic identity for a robot, an effect of the stated national origin would support the idea that robots may be perceived to have an ethnicity.

- RQ3: Is an ethnic name given to a robot an effective cue for signaling robot ethnicity?

  - Eyssel and Kuchenbrandt [10], investigating the effect of social category membership on the evaluation of humanoid robots, found that participants showed an in-group preference towards a robot that belonged to their in-group, as indicated by its ethnic name. In line with this finding, it is predicted that an ethnic name will signal the perception of an ethnic identity for a robot. Because the goal of the current research is to determine whether cues to ethnicity lead to an ethnic identity for a robot, it is proposed that a robot name that is common in the stated country of origin could be an effective cue to signal an ethnic identity.

3.1.1. Experiment Design

3.1.2. Participants

3.1.3. Procedure

- American Accent/Spoken Narrative. Hi, my name is Sarah/Bill and I am a robot. I was built in the United States and I speak English and one other language.

- Mexican Accent/Spoken Narrative. Hi, my name is Maria/Jose and I am a robot. I was built in Mexico and I speak Spanish and one other language.

- Chinese Accent/Spoken Narrative. Hi, my name is Fang/Muchen and I am a robot. I was built in China and I speak Mandarin and one other language.
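The three spoken narratives above share a single sentence template that varies only in name, country of origin, and primary language. As a minimal sketch, the six scripts (three accents by two voice genders) could be generated as follows. The dictionary layout, the function name `narrative`, and the pairing of the first name in each pair with the female voice and the second with the male voice are assumptions made for illustration; they are not taken from the study materials.

```python
from itertools import product

# Condition table transcribed from the three narratives above.
# Assumption: in each "A/B" name pair, A is the female-voice name
# and B is the male-voice name.
CONDITIONS = {
    "American": {
        "names": {"female": "Sarah", "male": "Bill"},
        "origin": "the United States",
        "language": "English",
    },
    "Mexican": {
        "names": {"female": "Maria", "male": "Jose"},
        "origin": "Mexico",
        "language": "Spanish",
    },
    "Chinese": {
        "names": {"female": "Fang", "male": "Muchen"},
        "origin": "China",
        "language": "Mandarin",
    },
}


def narrative(accent: str, gender: str) -> str:
    """Fill the shared sentence template for one accent/gender condition."""
    cond = CONDITIONS[accent]
    return (
        f"Hi, my name is {cond['names'][gender]} and I am a robot. "
        f"I was built in {cond['origin']} and I speak "
        f"{cond['language']} and one other language."
    )


# One script per cell of the 3 (accent) x 2 (voice gender) layout.
scripts = {
    (accent, gender): narrative(accent, gender)
    for accent, gender in product(CONDITIONS, ("female", "male"))
}
```

Keeping the template in one place makes it easy to check that the conditions differ only in the three cues under study (name, origin, and accent).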

4. Results

5. Discussion

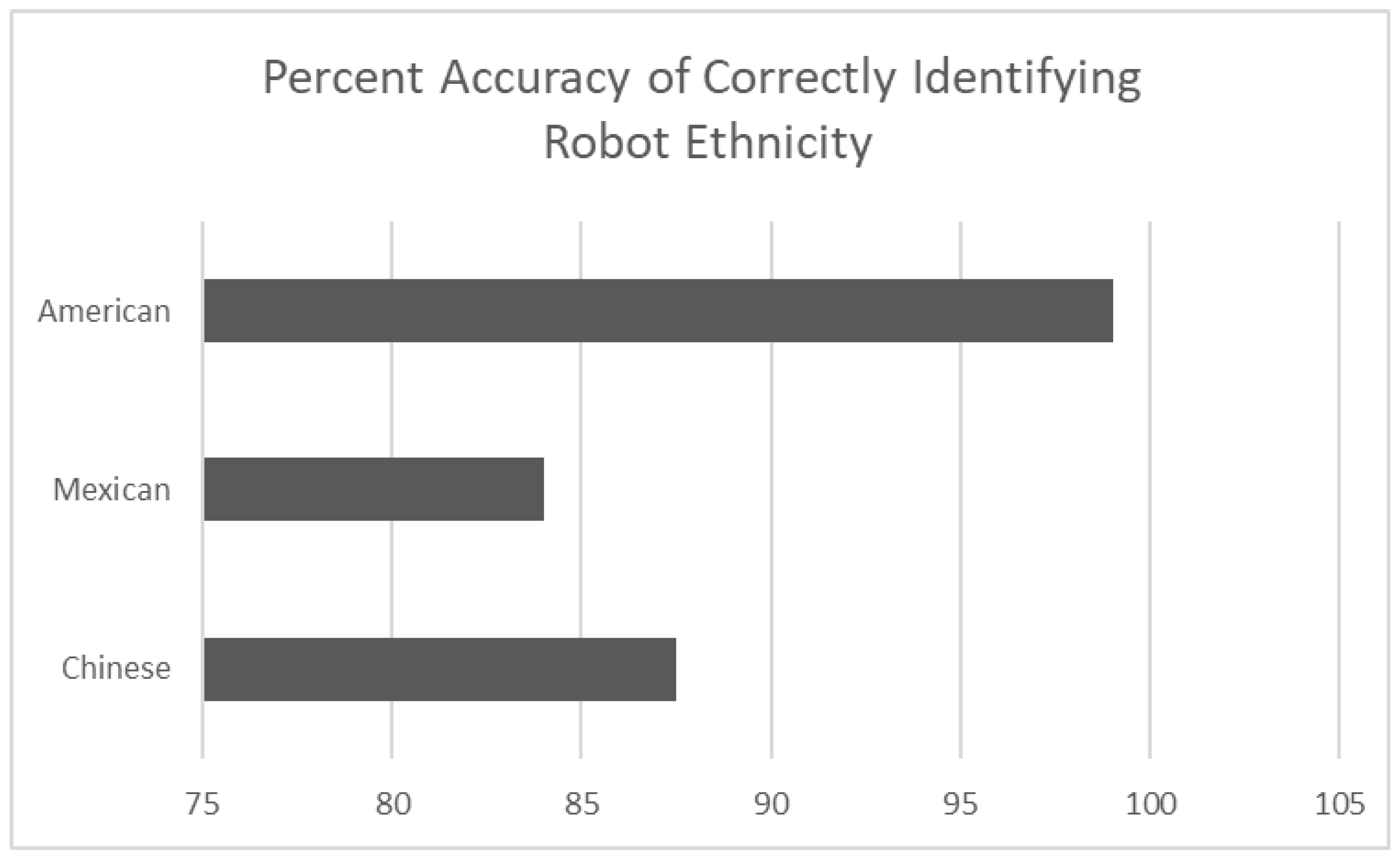

5.1. Research Questions

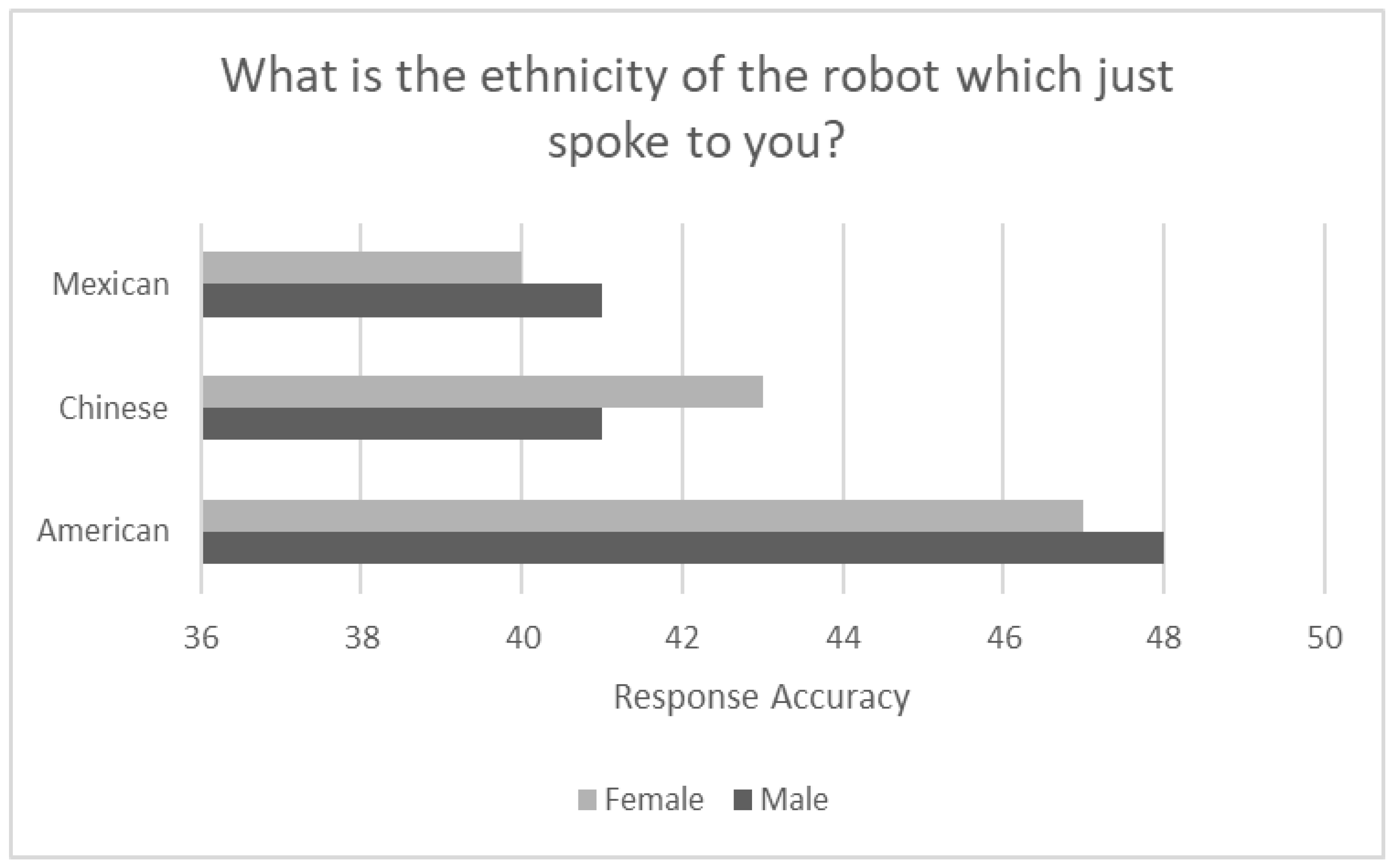

5.2. Bias and Robot Gender

5.3. Guidelines

6. Concluding Thoughts and Future Directions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Forlizzi, J. How Robotic Products Became Social Products: An Ethnographic Study of Cleaning in the Home. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Arlington, VA, USA, 8–11 March 2007; pp. 129–136.

2. Gonn, A. World’s First Arabic Speaking Robot Constructed in UAE. The Jerusalem Post, 4 November 2009.

3. Graetz, G.; Michaels, G. Robots at Work. Rev. Econ. Stat. 2018, 100, 753–768.

4. Mutlu, B.; Forlizzi, J. Robots in Organizations: The Role of Workflow, Social, and Environmental Factors. In Proceedings of the 3rd ACM/IEEE International Conference on Human Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; pp. 287–294.

5. de Graaf, M.M.A.; Ben Allouch, S. The Evaluation of Different Roles for Domestic Social Robots. In Proceedings of the 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015; pp. 676–681.

6. Barfield, J.K. Evaluating the Self-Disclosure of Personal Information to AI-Enabled Technology. In Research Handbook on Artificial Intelligence and Communication; Nah, E., Ed.; Edward Elgar Press: Cheltenham, UK, 2023.

7. Barfield, J.K. A Hierarchical Model for Human-Robot Interaction. In Proceedings of the ASIS&T Mid-Year Conference Proceedings, Virtual, 11–13 April 2023.

8. Bartneck, C.; Nomura, T.; Kanda, T.; Suzuki, T.; Kennsuke, K. Cultural Differences in Attitudes Towards Robots, Proceedings of the AISB Symposium on Robot Companions: Hard Problems and Open Challenges. In Proceedings of the HCI International, Las Vegas, NV, USA, 22–27 July 2005; pp. 1–4.

9. Bartneck, C.; Yogeeswaran, K.; Qi, M.S.; Woodward, G.; Sparrow, R.; Wang, S.; Eyssel, F. Robots and Racism. In Proceedings of the 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI ’18), Chicago, IL, USA, 5–8 March 2018; pp. 196–204.

10. Eyssel, F.; Kuchenbrandt, D. Social Categorization of Social Robots: Anthropomorphism as a Function of Robot Group Membership. Br. J. Soc. Psychol. 2012, 51, 724–731.

11. Eyssel, F.; Hegel, F. (S)he’s Got the Look: Gender Stereotyping of Robots. J. Appl. Soc. Psychol. 2012, 42, 2213–2230.

12. Barfield, J.K. Discrimination and Stereotypical Responses to Robots as a Function of Robot Colorization. In Proceedings of the UMAP ’21: Adjunct Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, Utrecht, The Netherlands, 21–25 June 2021; pp. 109–114.

13. Barfield, J.K. Discrimination Against Robots: Discussing the Ethics of Social Interactions and Who is Harmed. Paladyn J. Behav. Robot. 2023, 14, 20220113.

14. Sparrow, R. Do Robots Have Race? IEEE Robot. Autom. Mag. 2020, 27, 144–150.

15. Sparrow, R. Robotics Has a Race Problem. Sci. Technol. Hum. Values 2019, 45, 538–600.

16. Barfield, J.K. Robot Ethics for Interaction with Humanoid, AI-Enabled and Expressive Robots. In Cambridge Handbook on Law, Policy, and Regulations for Human-Robot Interaction; Cambridge University Press: Cambridge, UK, 2024.

17. Eyssel, F. An Experimental Psychological Perspective on Social Robotics. Robot. Auton. Syst. 2017, 87, 363–371.

18. Spatola, N.; Anier, N.; Redersdorff, S.; Ferrand, L.; Belletier, C.; Normand, A.; Huguet, P. National Stereotypes and Robots’ Perception: The “Made in” Effect. Front. Robot. AI 2019, 6, 21.

19. Appiah, K.A. Race, Culture, Identity: Misunderstood Connections. In Color Conscious: The Political Morality of Race; Appiah, K.A., Gutmann, A., Eds.; Princeton University Press: Princeton, NJ, USA, 1996; pp. 30–105.

20. Desmet, K.; Ortuño-Ortín, I.; Wacziarg, R. Culture, Ethnicity, and Diversity. Am. Econ. Rev. 2017, 107, 2479–2513.

21. Banks, J.; Koban, K. A Kind Apart: The Limited Application of Human Race and Sex Stereotypes to a Humanoid Social Robot. Int. J. Soc. Robot. 2022, 15, 1949–1961.

22. Bernotat, J.; Eyssel, F. Can(’t) Wait to Have a Robot at Home? Japanese and German Users’ Attitudes Toward Service Robots in Smart Homes. In Proceedings of the 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 15–22.

23. Tajfel, H.; Turner, J.C.; Austin, W.G.; Worchel, S. An Integrative Theory of Intergroup Conflict. Organ. Identity Read. 1979, 56–65.

24. Brubaker, R. Ethnicity Without Groups. Eur. J. Sociol. Arch. Eur. De Sociol. 2002, 43, 163–189.

25. Levine, H.B. Reconstructing Ethnicity. J. R. Anthropol. Inst. 1999, 5, 165–180.

26. Bernotat, J.; Eyssel, F.; Sachse, J. The (Fe)male Robot: How Robot Body Shape Impacts First Impressions and Trust Towards Robots. Int. J. Soc. Robot. 2021, 13, 477–489.

27. Harper, M.; Schoeman, W.J. Influences of Gender as a Basic-Level Category in Person Perception on the Gender Belief System. Sex Roles 2003, 49, 517–526.

28. Peterson, R.A.; Jolibert, A.J.P. A Meta-Analysis of Country of Origin Effects. J. Int. Bus. Stud. 1995, 26, 883–900.

29. Fiske, S.T.; Cuddy, A.J.C.; Glick, P. Universal Dimensions of Social Cognition: Warmth and Competence. Trends Cogn. Sci. 2007, 11, 77–83.

30. Howard, A.; Borenstein, J. The Ugly Truth about Ourselves and Our Robot Creations: The Problem of Bias and Social Inequity. Sci. Eng. Ethics 2018, 24, 1521–1536.

31. Kuchenbrandt, D.; Eyssel, F.; Bobinger, S.; Neufeld, M. When a Robot’s Group Membership Matters: Anthropomorphization of Robots as a Function of Social Categorization. Int. J. Soc. Robot. 2013, 5, 409–417.

32. Abrams, A.M.H.; Rosenthal-von der Putten, A.M. I-C-E Framework: Concepts for Group Dynamics Research in Human-Robot Interaction. Int. J. Soc. Robot. 2020, 12, 1213–1229.

33. Deligianis, C.; Stanton, C.; McGarty, C.; Stevens, C.J. The Impact of Intergroup Bias on Trust and Approach Behaviour Towards a Humanoid Robot. J. Hum.-Robot. Interact. 2017, 6, 4–20.

34. Nass, C.; Brave, S. Wired for Speech: How Voice Activates and Advances the Human-Computer Relationship; MIT Press: Cambridge, MA, USA, 2005.

35. Nass, C.; Isbister, K.; Lee, E. Truth is Beauty: Researching Embodied Conversational Agents. In Embodied Conversational Agents; Cassell, J., Sullivan, J., Prevost, S., Churchill, E., Eds.; MIT Press: Cambridge, MA, USA, 2000; pp. 374–402.

36. Torre, I.; Goslin, J.; White, L.; Zanatto, D. Trust in Artificial Voices: A “Congruency Effect” of First Impressions and Behavioural Experience. In Proceedings of the Technology, Mind, and Society Conference (TechMindSociety), Washington, DC, USA, 5–7 April 2018; pp. 1–6.

37. Tamagawa, R.; Watson, C.I.; Kuo, I.H.; MacDonald, B.A.; Broadbent, E. The Effects of Synthesized Voice Accents on User Perceptions of Robots. Int. J. Soc. Robot. 2011, 3, 253–262.

38. Steinhaeusser, S.C.; Schaper, P.; Akullo, O.B.; Friedrich, P.; On, J.; Lugrin, B. Your New Friend Nao Versus Robot No. 783 - Effects of Personal or Impersonal Framing in a Robotic Storytelling Use Case. In Proceedings of the HRI ’21 Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 8–11 March 2021; pp. 334–338.

39. Wong, A.; Xu, A.; Dudek, G. Investigating Trust Factors in Human-Robot Shared Control: Implicit Gender Bias Around Robot Voice. In Proceedings of the 16th Conference on Computer and Robot Vision (CRV), Kingston, ON, Canada, 29–31 May 2019; pp. 195–200.

40. Behrens, S.I.; Egsvang, A.K.K.; Hansen, M.; Mollegard-Schroll, A.M. Gendered Robot Voices and Their Influence on Trust. In Proceedings of the HRI ’18: Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 3–5 March 2018; pp. 63–64.

41. Gavidia-Calderon, C.; Bennaceur, A.; Kordoni, A.; Levine, M.; Nuseibeh, B. What Do You Want from Me? Adapting Systems to the Uncertainty of Human Preferences. In Proceedings of the 44th ACM/IEEE International Conference on Software Engineering: New Ideas and Emerging Results (ICSE-NIER), Pittsburgh, PA, USA, 22–24 May 2022; pp. 126–130.

42. Song, S.; Baba, J.; Nakanishi, J.; Yoshikawa, Y.; Ishiguro, Y. Mind The Voice!: Effect of Robot Voice Pitch, Robot Voice Gender, and User Gender on User Perception of Teleoperated Robots. In Proceedings of the CHI EA ’20: Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–8.

43. Mullennix, J.W.; Stern, S.E.; Wilson, S.J.; Dyson, C. Social Perception of Male and Female Computer Synthesized Speech. Comput. Hum. Behav. 2003, 19, 407–424.

44. Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places; CSLI: California, CA, USA, 1996.

45. Trovato, G.; Ham, J.R.C.; Hashimoto, K.; Ishii, H.; Takanishi, A. Investigating the Effect of Relative Cultural Distance on the Acceptance of Robots. In Proceedings of the 7th International Conference on Social Robotics (ICSR), Paris, France, 26–30 October 2015; pp. 664–673.

46. Torre, I.; Le Maguer, S. Should Robots Have Accents? In Proceedings of the 29th IEEE International Conference on Robot and Human Interactive Communication (IEEE RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 208–214.

47. Lugrin, B.; Strole, E.; Obremski, D.; Schwab, F.; Lange, B. What if it Speaks Like it was from the Village? Effects of a Robot Speaking in Regional Language Variations on Users’ Evaluations. In Proceedings of the 29th IEEE International Conference on Robot and Human Interactive Communication (IEEE RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 1315–1320.

48. McGinn, C.; Torre, I. Can You Tell the Robot by the Voice? An Exploratory Study on the Role of Voice in the Perception of Robots. In Proceedings of the 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 211–221.

49. Bryant, D.; Borenstein, J.; Howard, A. Why Should We Gender?: The Effect of Robot Gendering and Occupational Stereotypes on Human Trust and Perceived Competency. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 13–21.

50. Rubin, M.; Hewstone, M. Social Identity Theory’s Self-Esteem Hypothesis: A Review and Some Suggestions for Clarification. Personal. Soc. Psychol. Rev. 1998, 2, 40–62.

51. Seaborn, K.; Pennefather, P. Gender Neutrality in Robots: An Open Living Review Framework. In Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’22), Sapporo, Japan, 7–10 March 2022; pp. 634–638.

52. Kuchenbrandt, D.; Eyssel, F.; Seidel, S.K. Cooperation Makes it Happen: Imagined Intergroup Cooperation Enhances the Positive Effects of Imagined Contact. Group Process. Intergroup Relat. 2013, 16, 635–647.

53. Garcha, D.; Geiskkovitch, D.; Thiessen, R.; Prentice, S.; Fisher, K.; Young, J. Face to Face with a Sexist Robot: Investigating How Women React to Sexist Robot Behaviors. Int. J. Soc. Robot. 2023, 15, 1809–1828.

54. De Angeli, A.; Brahnam, S.; Wallis, P.; Dix, A. Misuse and Use of Interactive Technologies. In Proceedings of the CHI ’06 Extended Abstracts on Human Factors in Computing Systems, Montreal, QC, Canada, 22–27 April 2006; pp. 1647–1650.

55. Galatolo, A.; Melsion, G.I.; Leite, I.; Winkle, K. The Right (Wo)Man for the Job? Exploring the Role of Gender when Challenging Gender Stereotypes with a Social Robot. Int. J. Soc. Robot. 2023, 15, 1933–1947.

56. Suzuki, T.; Nomura, T. Gender Preferences for Robots and Gender Equality Orientation in Communication Situations Depending on Occupation. J. Jpn. Soc. Fuzzy Theory Intell. Inform. 2023, 35, 706–711.

57. Rogers, K.; Bryant, D.; Howard, A. Robot Gendering: Influences on Trust, Occupational Competency, and Preference of Robot Over Human. In Proceedings of the CHI ’20: Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–7.

58. Schrum, M.; Ghuy, M.; Hedlund-Botti, E.; Natarajan, M.; Johnson, M.; Gombolay, M. Concerning Trends in Likert Scale Usage in Human-Robot Interaction: Towards Improving Best Practices. Trans. Hum. Robot. Interact. 2023, 12, 33.

59. Ostrowski, A.K.; Walker, R.; Das, M.; Yang, M.; Breazeal, C.; Park, H.W.; Verma, A. Ethics, Equity, & Justice in Human-Robot Interaction: A Review and Future Directions. In Proceedings of the 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN): Social, Asocial, and Antisocial Robots, Naples, Italy, 29 August–2 September 2022; pp. 969–976.

60. Askin, G.; Saltik, I.; Boz, T.E.; Urgen, B.A. Gendered Actions with a Genderless Robot: Gender Attribution to Humanoid Robots in Action. Int. J. Soc. Robot. 2023, 15, 1919–1931.

| Chinese Accent Male Voice | Chinese Accent Female Voice | American Accent Male Voice | American Accent Female Voice | Mexican Accent Male Voice | Mexican Accent Female Voice | Chi-Square Analysis | Survey Question |
|---|---|---|---|---|---|---|---|
| 41 Correct, 7 Incorrect (English, Latin, White) | 43 Correct, 5 Incorrect (English, White) | 48 Correct, 0 Incorrect | 47 Correct, 1 Incorrect (Sharia) | 41 Correct, 7 Incorrect (English, not sure, White) | 40 Correct, 8 Incorrect | χ² = 3.91, p = 0.56; χ² = 12.29, p = 0.03 | What is the ethnicity of the robot which just spoke to you? |
| Mean = 8.19, Std = 1.54 | Mean = 8.40, Std = 1.57 | Mean = 8.40, Std = 1.59 | Mean = 8.15, Std = 1.95 | Mean = 7.88, Std = 1.93 | Mean = 8.08, Std = 1.87 | | How confident are you in your above answer? |
| 48 Correct, 0 Incorrect | 47 Correct, 1 Incorrect | 48 Correct, 0 Incorrect | 48 Correct, 0 Incorrect | 48 Correct, 0 Incorrect | 48 Correct, 0 Incorrect | χ² = 0.02, p = 0.99 | What is the gender of the robot which spoke to you? |
| Mean = 8.58, Std = 1.51 | Mean = 8.56, Std = 1.54 | Mean = 8.69, Std = 1.43 | Mean = 8.60, Std = 1.53 | Mean = 8.56, Std = 1.61 | Mean = 8.56, Std = 1.64 | | How confident are you in your above answer? |
| 47 China/Asian, 1 Incorrect (USA) | 46 China/Asian, 2 Incorrect (1 USA, 1 Mexico) | 48 USA, 0 Incorrect | 48 USA, 0 Incorrect | 39 Mexico, 9 Incorrect (USA, Taiwan, unclear) | 39 Mexico, 9 Incorrect (USA) | χ² = 3.48, p = 0.62; χ² = 26.71, p < 0.0001 | Where was the robot built? |
| 44 Correct, 4 Incorrect | 33 Correct, 15 Incorrect (May, Frank, not sure, hard to understand) | 39 Correct, 9 Incorrect (Brew, Board, Will) | 48 Correct, 0 Incorrect | 38 Correct, 10 Incorrect (Moses, not sure) | 47 Correct, 1 Incorrect (I don’t know) | χ² = 8.81, p = 0.12; χ² = 26.08, p < 0.0001 | What is the name of the robot? |
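
The chi-square column in the table above does not state how each statistic was computed. One reading that is numerically consistent with the second value reported for the ethnicity, origin, and name questions is a Pearson goodness-of-fit test on the incorrect-answer counts across the six conditions, with equal expected counts. The sketch below works under that assumption; the helper names `chi_square_gof` and `chi2_sf` are illustrative, and the test actually used in the study may differ.

```python
import math


def chi_square_gof(observed):
    """Pearson chi-square goodness-of-fit against equal expected counts.

    Returns (statistic, degrees_of_freedom).
    """
    expected = sum(observed) / len(observed)
    statistic = sum((o - expected) ** 2 / expected for o in observed)
    return statistic, len(observed) - 1


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable (closed-form series)."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{j < df/2} (x/2)^j / j!
        term, total = 1.0, 1.0
        for j in range(1, df // 2):
            term *= half / j
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus a finite series in x / (1 * 3 * 5 * ...)
    term, total = 1.0, 0.0
    for j in range((df - 1) // 2):
        if j > 0:
            term *= x / (2 * j + 1)
        total += term
    return (math.erfc(math.sqrt(half))
            + math.sqrt(2.0 * x / math.pi) * math.exp(-half) * total)


# Incorrect-answer counts per condition, read from the table, in column
# order: Chinese male, Chinese female, American male, American female,
# Mexican male, Mexican female.
incorrect = {
    "ethnicity": [7, 5, 0, 1, 7, 8],
    "origin": [1, 2, 0, 0, 9, 9],
    "name": [4, 15, 9, 0, 10, 1],
}

results = {}
for question, counts in incorrect.items():
    stat, df = chi_square_gof(counts)
    results[question] = (round(stat, 2), df, chi2_sf(stat, df))
```

Under this assumption the statistics round to 12.29, 26.71, and 26.08 with 5 degrees of freedom, which agrees with the second value listed for each of those three questions. The first value in each of those cells is not reproduced by this sketch, and its derivation is not stated here.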

| Research Result | Design Rule | Relevance for HRI |
|---|---|---|
| Participants were highly accurate in identifying gender from the robot’s voice pitch and gendered name | Robot gender can be conveyed by varying voice pitch and robot name; judgments of robot gender may be more accurate if both cues are presented together | A gendered voice can be used to create in-group members but could lead to gender biases |
| Participants were highly accurate in identifying the ethnicity of the robot by its accent, especially if an American accent was used | Robot ethnicity decisions can be made more accurate if the robot voice accent matches the ethnicity of the user | Voice accents can be used to signal robot social characteristics and to create in-group members |
| There was a tendency to determine robot ethnicity based on the stated origin of the robot more so than on the ethnic voice accent | Use cues to robot origin to signal robot ethnicity; voice accent is less effective in determining robot ethnicity than the stated origin of the robot | Providing a national origin for a robot influences the perception and evaluation of the robot |
| There was a tendency to judge the robot as American even if the origin was stated as China or Mexico | Robot origin is a factor in robot ethnicity decisions, but there may be an effect for the dominant culture | As explained by the concept of cultural closeness, the primary (or dominant) culture may prevail in decisions about robot ethnicity |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Barfield, J.K. The Role of Name, Origin, and Voice Accent in a Robot’s Ethnic Identity. Sensors 2024, 24, 6421. https://doi.org/10.3390/s24196421
