Adaptive Event-Triggered Consensus of Multi-Agent Systems in Sense of Asymptotic Convergence

Abstract

1. Introduction

2. Preliminaries

2.1. Problem Statement

2.2. Graph Theory
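
Consensus analyses of this kind are usually built on the graph Laplacian. The following is a minimal sketch, assuming the common convention L = D - A, where a_ij >= 0 weights the link from agent j to agent i and D holds the weighted in-degrees. The function name `laplacian` and the list-of-lists matrix representation are illustrative choices, not the paper's notation. Every row of L sums to zero, so the all-ones vector lies in its null space. This is why agreement states (all agents equal) are equilibria of Laplacian-based consensus dynamics.

```python
def laplacian(adjacency):
    """Return the graph Laplacian L = D - A as nested lists of floats.

    Assumed convention (illustrative): adjacency[i][j] >= 0 is the weight
    of the link from agent j to agent i; self-loops are ignored.
    """
    n = len(adjacency)
    lap = [[0.0] * n for _ in range(n)]
    for i in range(n):
        if len(adjacency[i]) != n:
            raise ValueError("adjacency matrix must be square")
        for j in range(n):
            if i != j:
                a_ij = float(adjacency[i][j])
                # Off-diagonal entries are -a_ij (written to avoid printing -0.0).
                lap[i][j] = -a_ij if a_ij else 0.0
        # Diagonal entry is the weighted in-degree of agent i.
        lap[i][i] = sum(float(adjacency[i][j]) for j in range(n) if j != i)
    return lap


if __name__ == "__main__":
    # Undirected path graph 0 - 1 - 2.
    path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
    for row in laplacian(path):
        print(row, "row sum:", sum(row))
```

For an undirected graph the adjacency matrix is symmetric, so L is symmetric positive semidefinite. Its second-smallest eigenvalue (the algebraic connectivity) is positive exactly when the graph is connected.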

3. Main Results

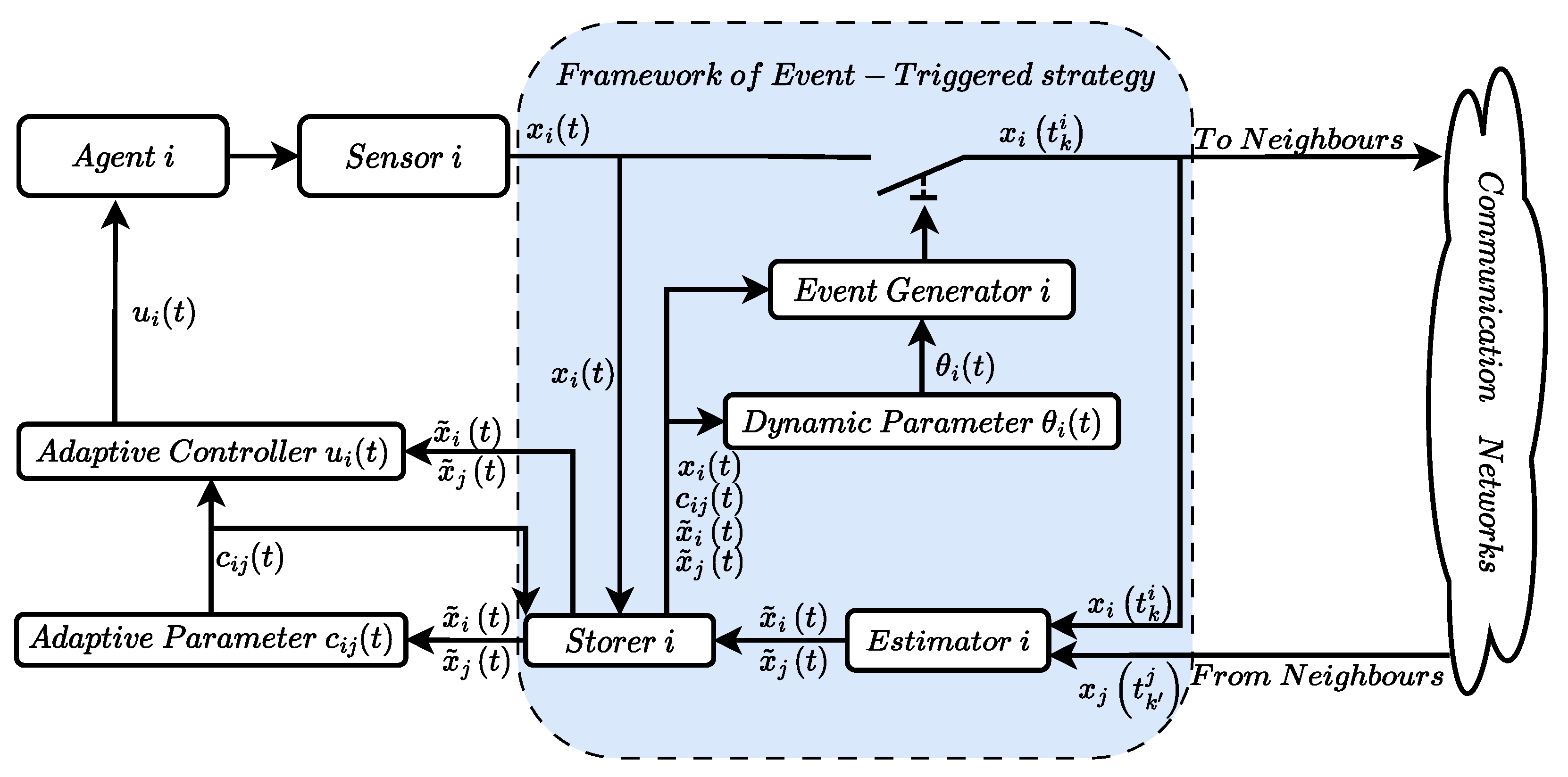

3.1. The Consensus Control Module

3.2. The Event-Triggering Protocol
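
For orientation, the next sketch simulates a basic event-triggered consensus loop for single-integrator agents. It is a simplified stand-in, not the adaptive, asymptotically convergent protocol of this paper. It uses a static, time-dependent threshold c1 * exp(-alpha * t), in the spirit of the event-based broadcasting scheme of Seyboth, Dimarogonas and Johansson. Each agent applies u_i = -sum_j a_ij (xhat_i - xhat_j), where xhat_k is the last state agent k broadcast. Agent i rebroadcasts only when its measurement error |xhat_i - x_i| exceeds the threshold. The names `simulate_event_triggered_consensus`, `c1`, `alpha`, `dt` and `t_final` are hypothetical, and forward-Euler integration is an assumption made for simplicity.

```python
import math


def simulate_event_triggered_consensus(adjacency, x0, t_final=10.0, dt=1e-3,
                                       c1=0.5, alpha=0.5):
    """Forward-Euler simulation of single-integrator event-triggered consensus.

    Hypothetical parameters: c1 and alpha shape the static triggering
    threshold c1 * exp(-alpha * t); this is not an adaptive or dynamic rule.
    Returns the final states and the number of broadcasts made by each agent.
    """
    n = len(x0)
    x = [float(v) for v in x0]
    xhat = list(x)      # every agent broadcasts its initial state at t = 0
    events = [1] * n    # count that initial broadcast
    steps = int(round(t_final / dt))
    for k in range(steps):
        t = k * dt
        # The control uses broadcast values only, so it is piecewise constant
        # between events.
        u = [-sum(adjacency[i][j] * (xhat[i] - xhat[j]) for j in range(n))
             for i in range(n)]
        x = [x[i] + dt * u[i] for i in range(n)]
        # Check each agent's trigger condition at the new time t + dt.
        threshold = c1 * math.exp(-alpha * (t + dt))
        for i in range(n):
            if abs(xhat[i] - x[i]) > threshold:
                xhat[i] = x[i]  # broadcast the current state
                events[i] += 1
    return x, events


if __name__ == "__main__":
    path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
    final_states, broadcasts = simulate_event_triggered_consensus(path, [1.0, 2.0, 6.0])
    print("final states:", final_states)
    print("broadcasts per agent:", broadcasts)
```

With a symmetric adjacency matrix the inputs sum to zero at every step, so the sum of the states is preserved and the agents approach the initial average. Broadcasts occur far less often than once per integration step, which is the communication saving that event triggering targets. The choice alpha = 0.5 lies below the path graph's algebraic connectivity (1), the regime in which this kind of exponentially decaying threshold is typically analysed.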

3.3. Consensus Analysis

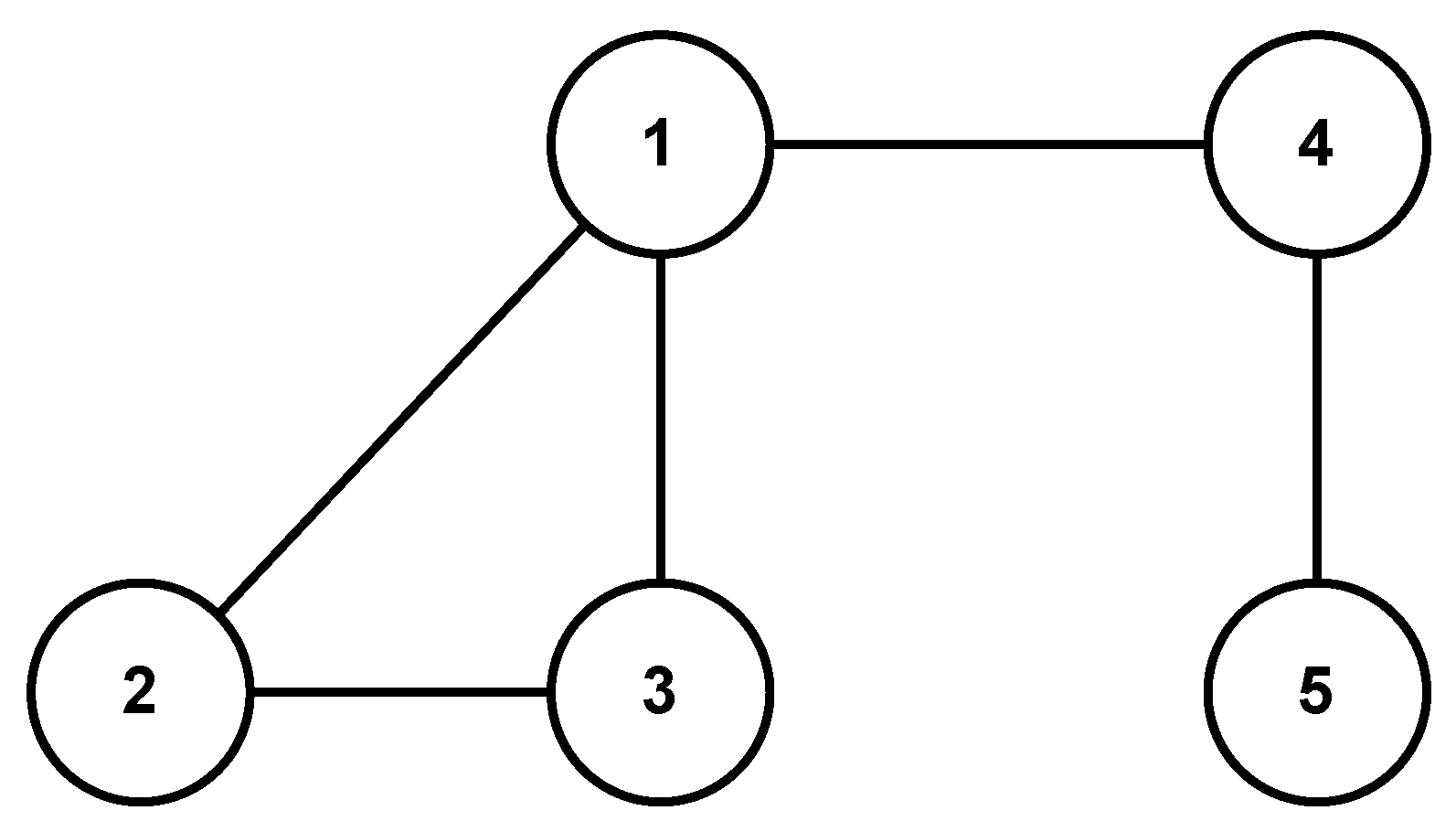

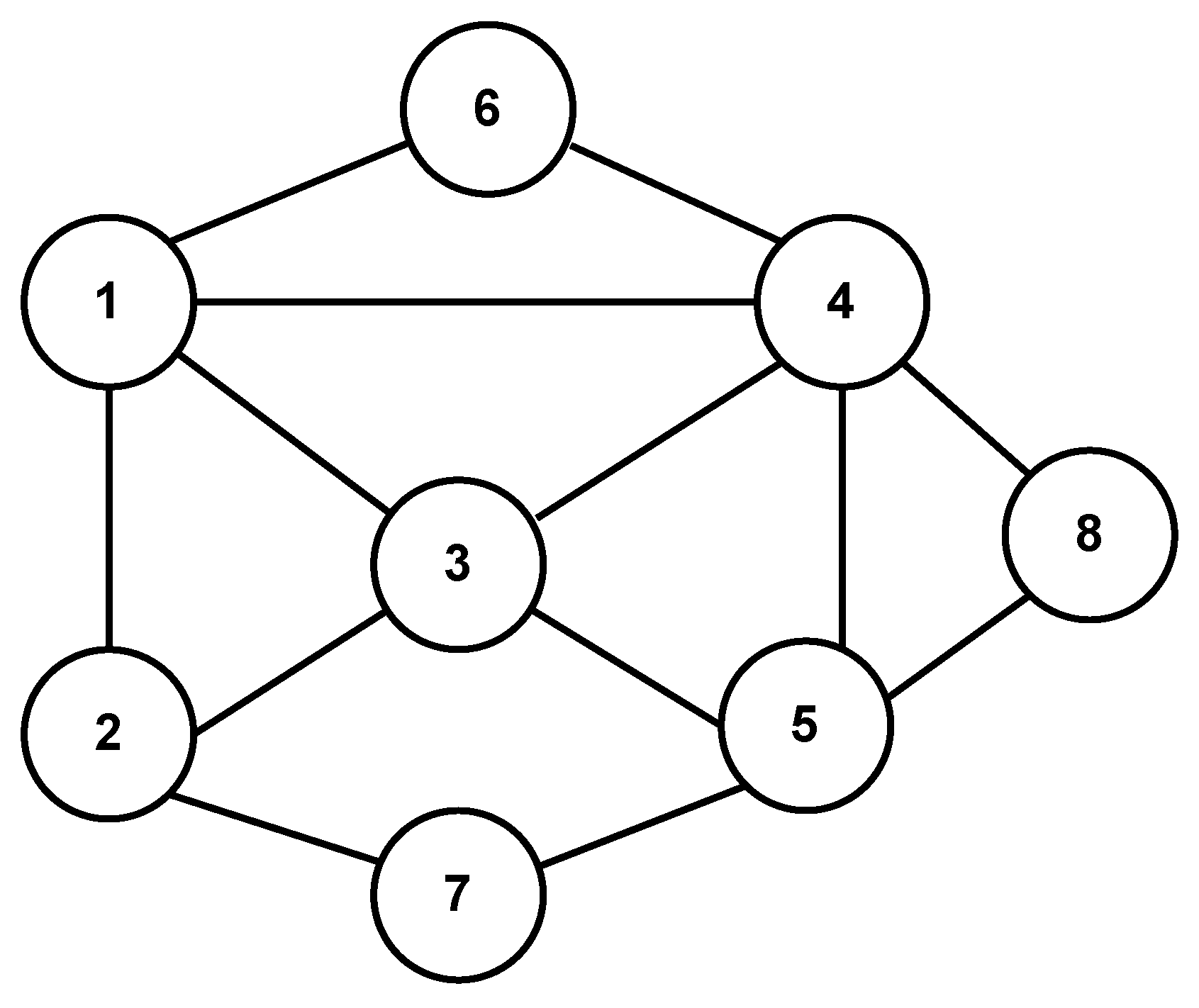

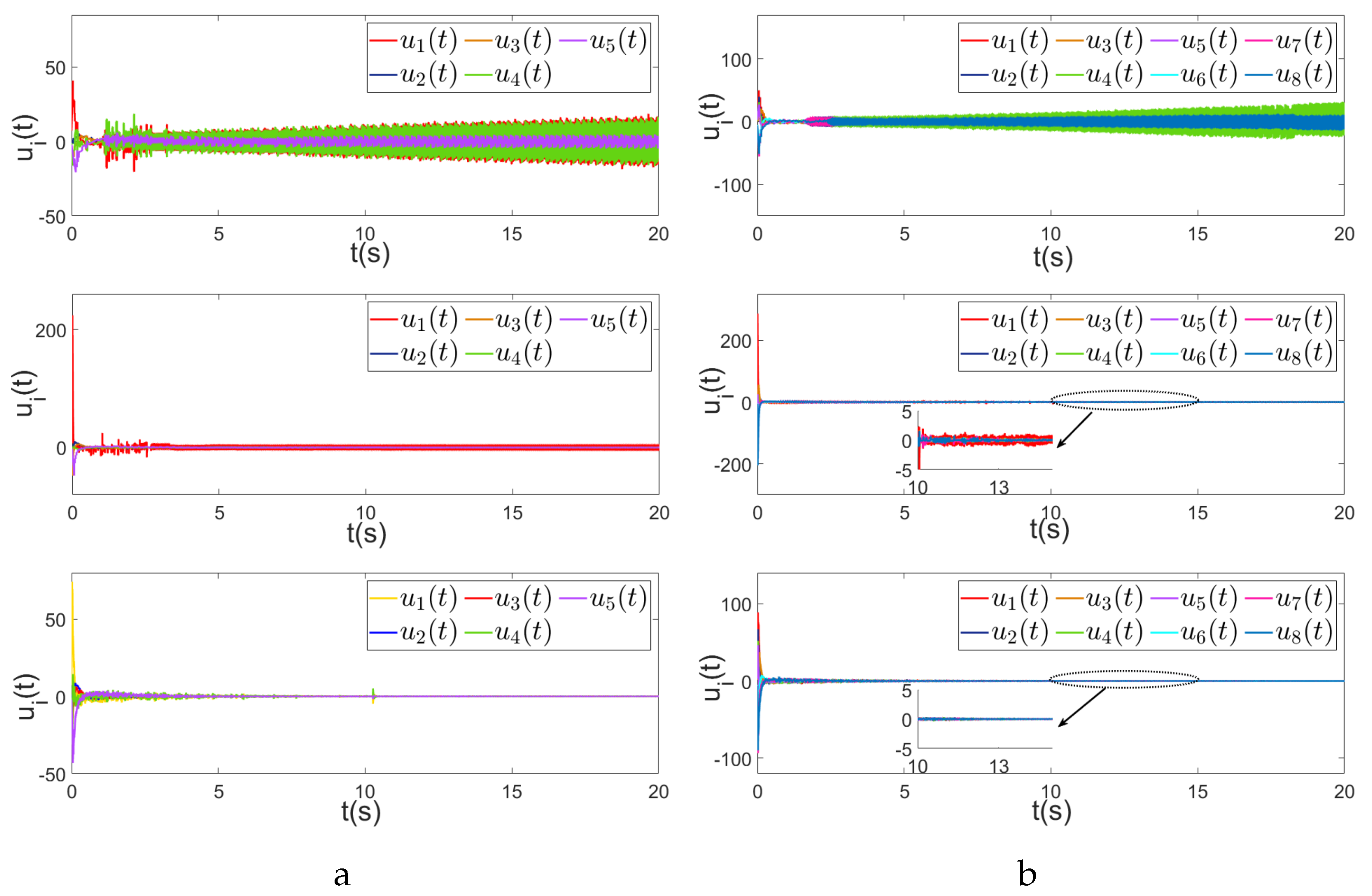

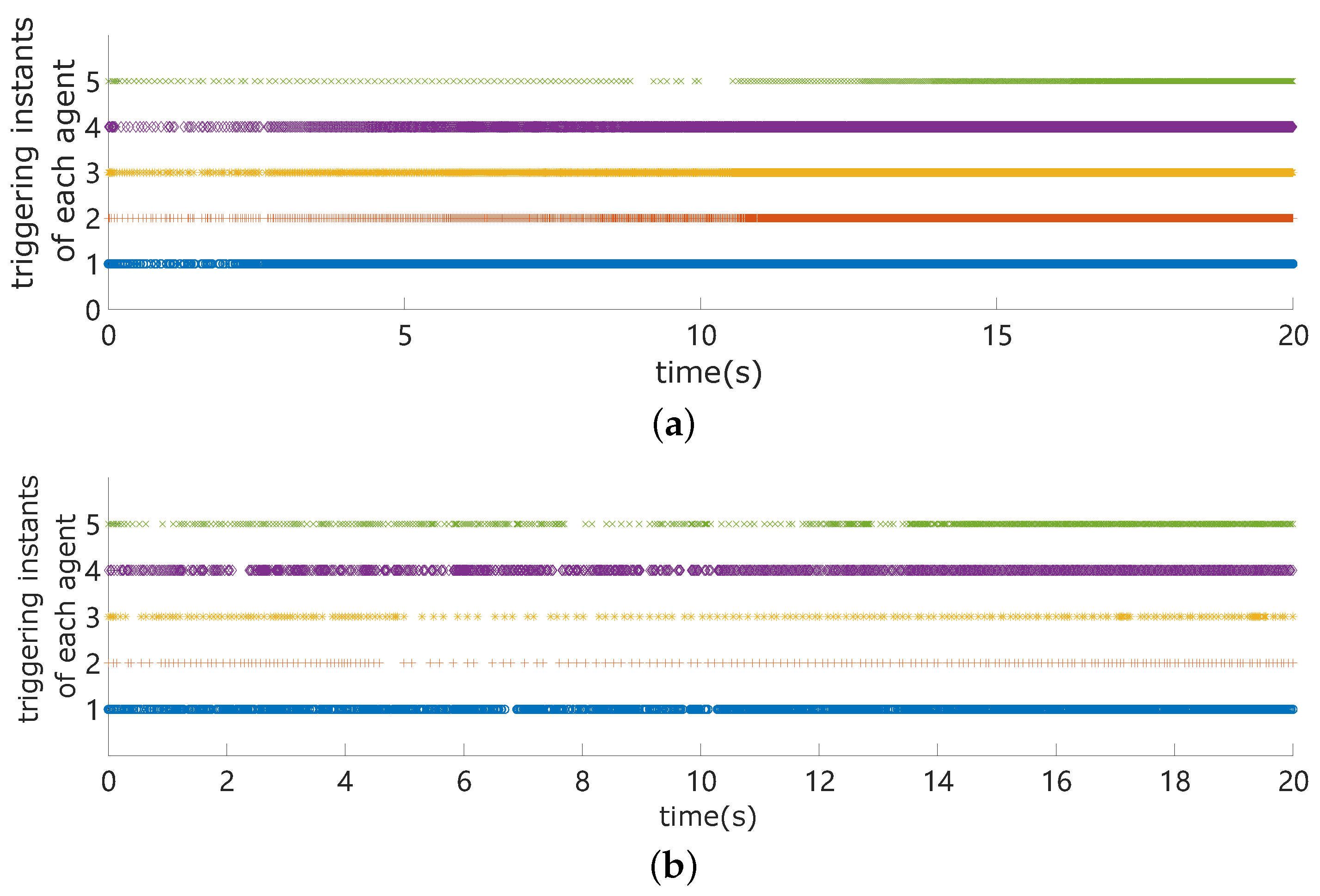

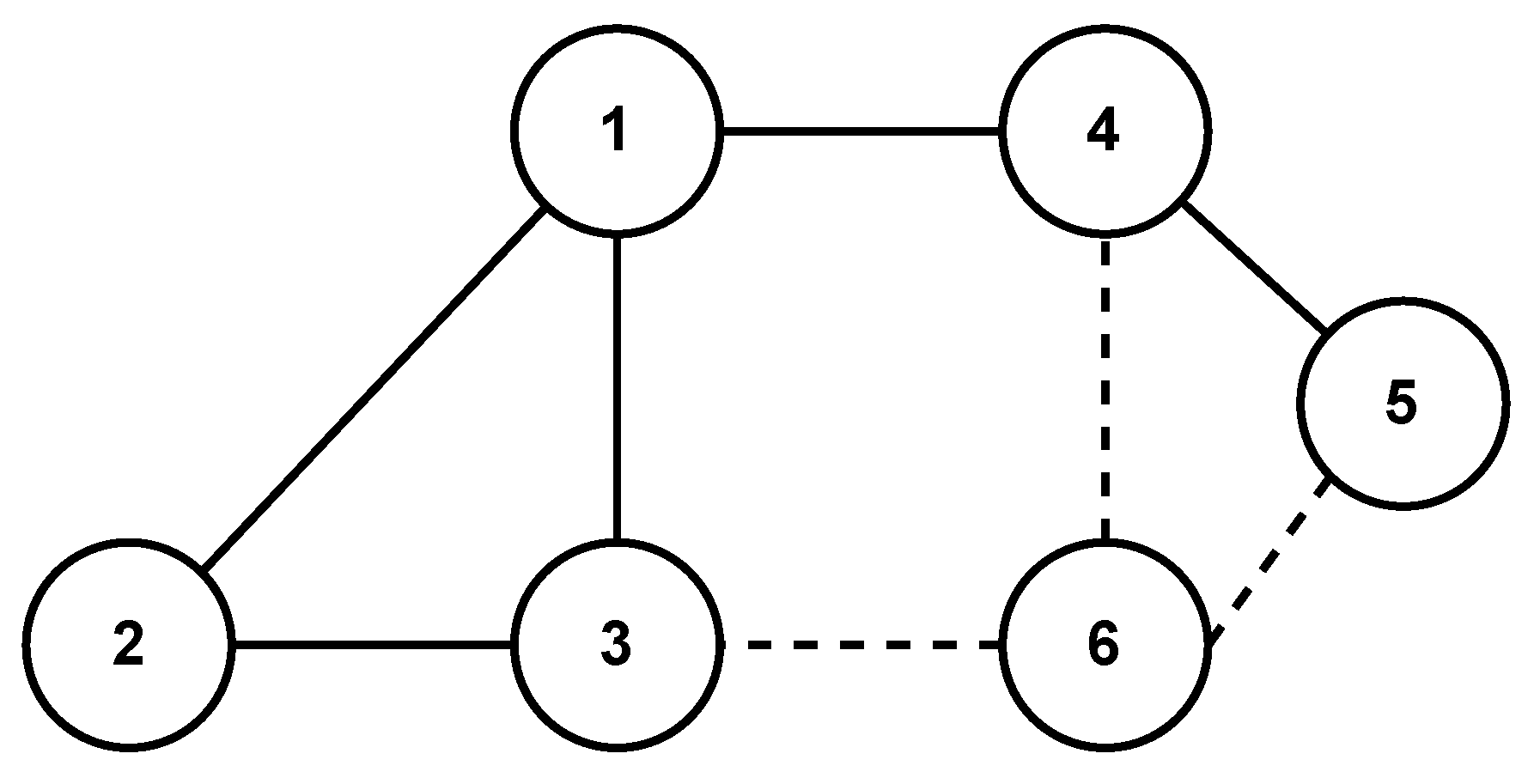

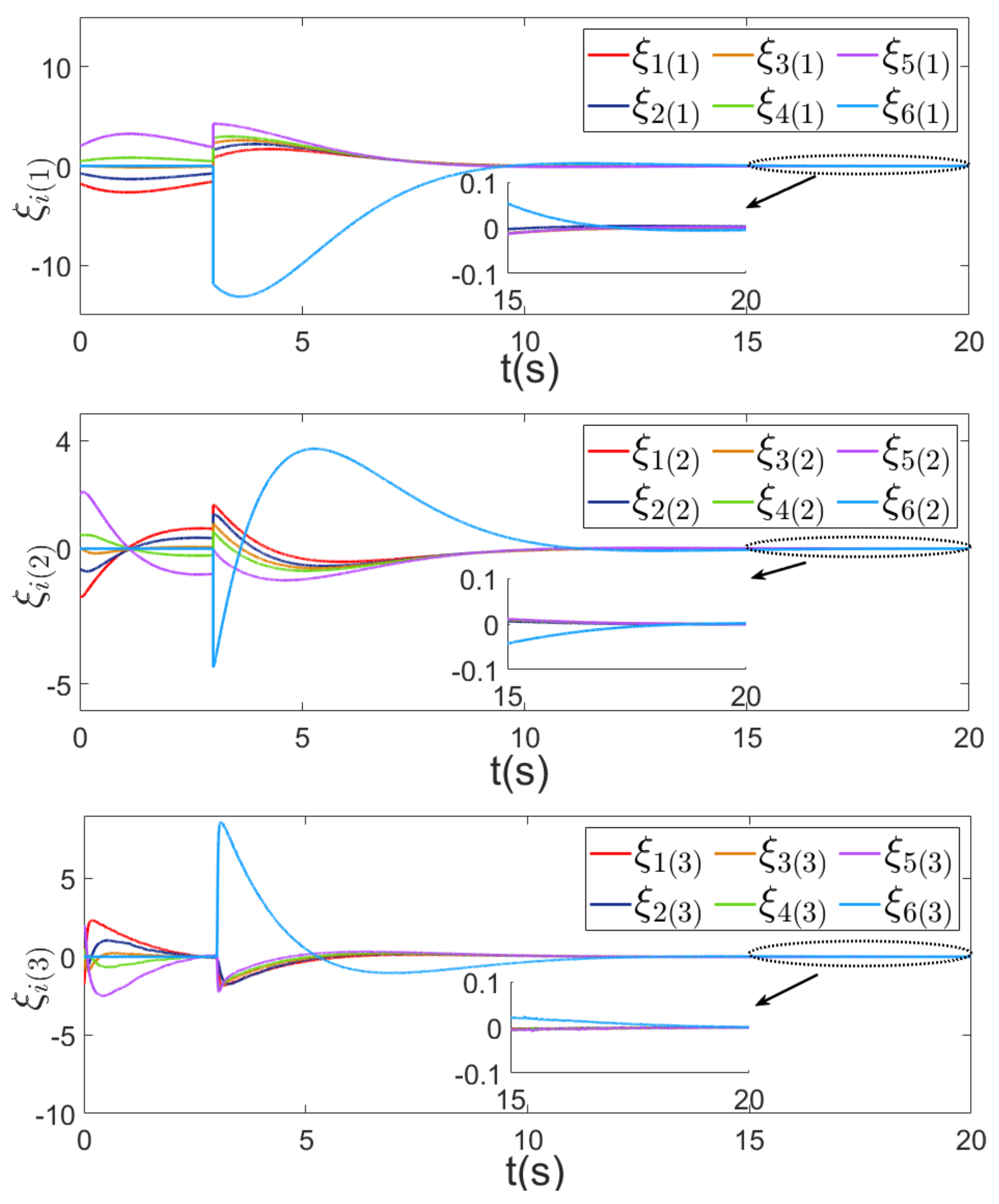

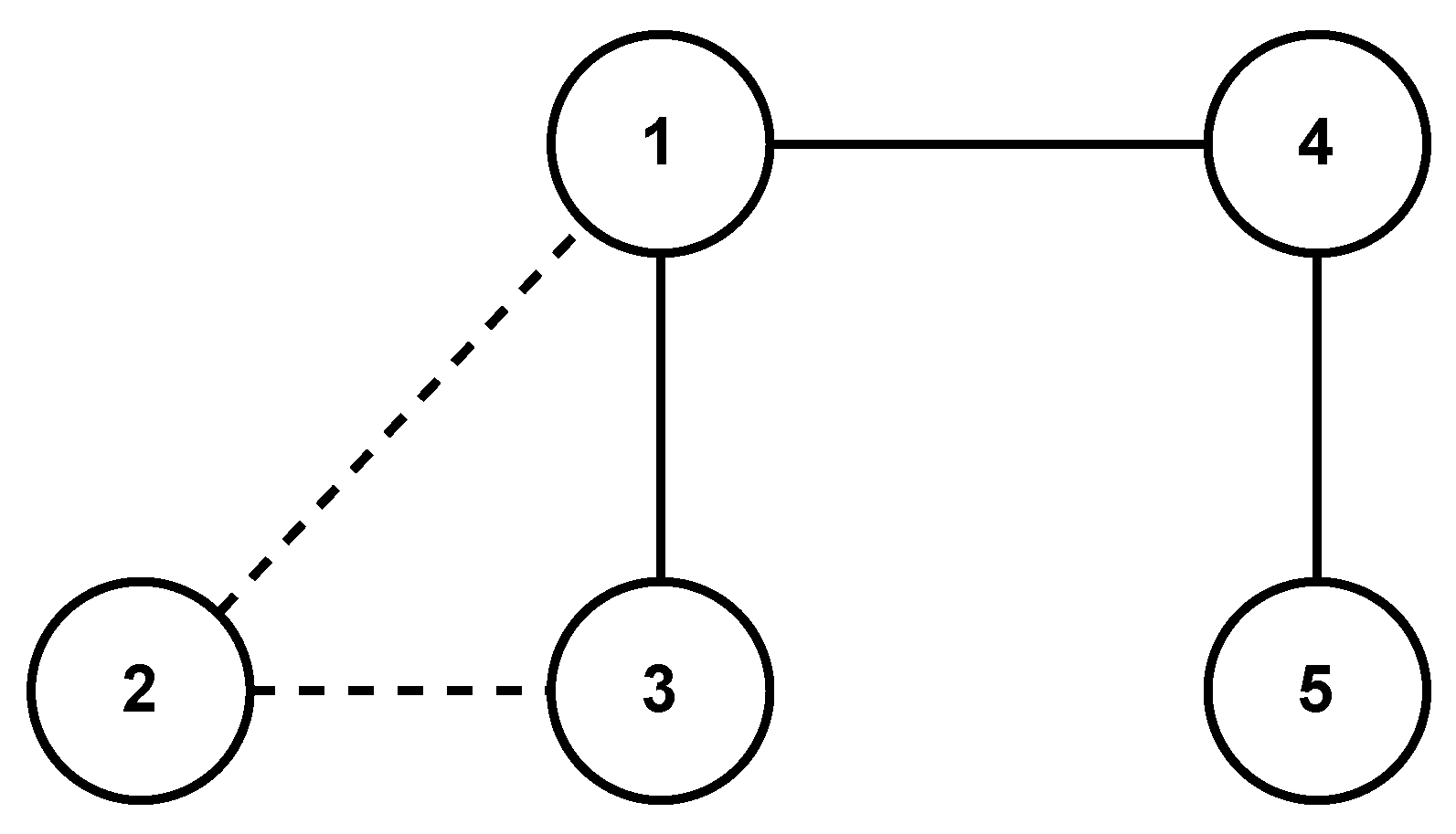

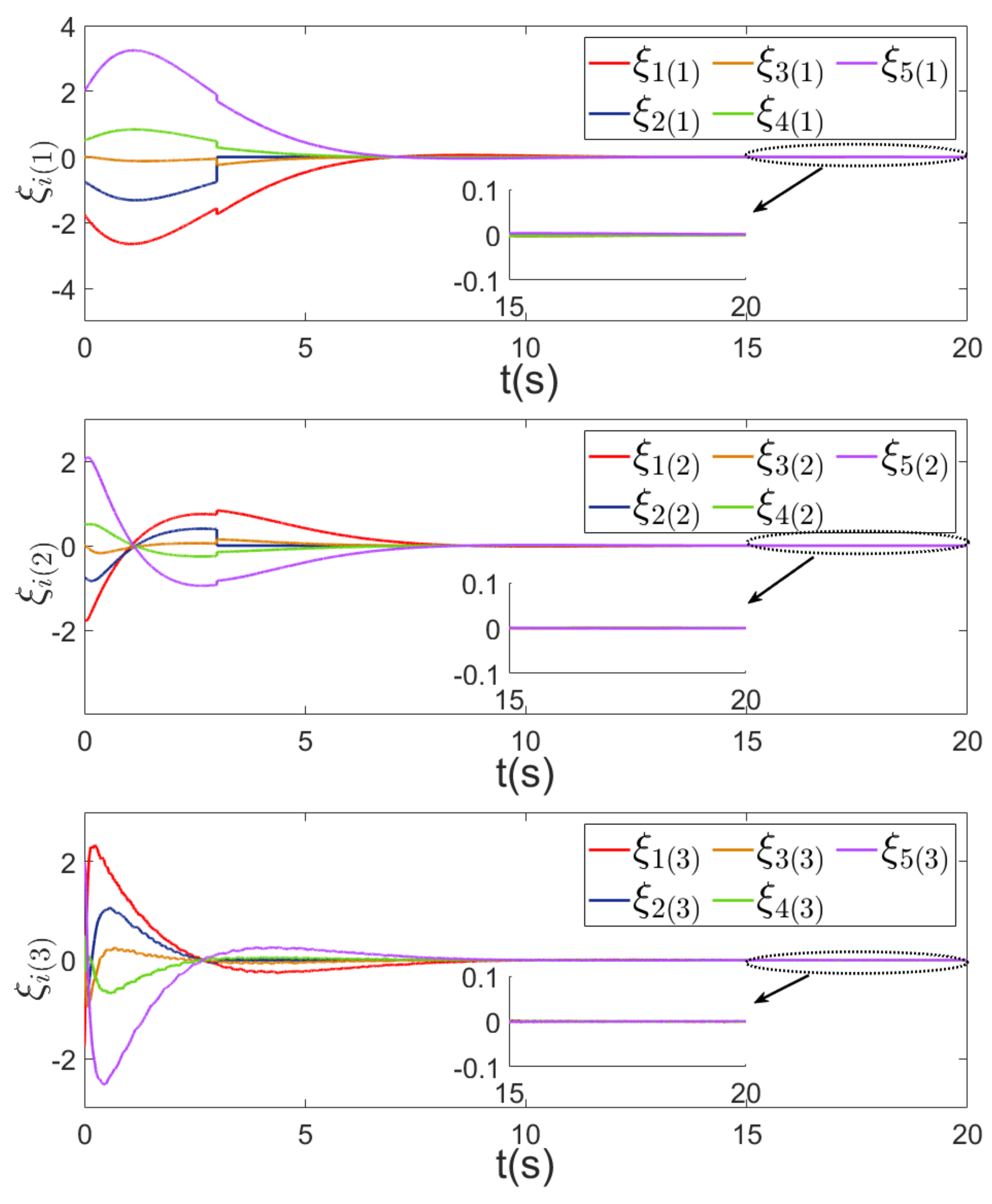

4. Simulation Results

5. Conclusions and Perspectives

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hou, Z.; Zhou, Z.; Yuan, H.; Wang, W.; Wang, J.; Xu, Z. Adaptive Event-Triggered Consensus of Multi-Agent Systems in Sense of Asymptotic Convergence. Sensors 2024, 24, 339. https://doi.org/10.3390/s24020339

Hou Z, Zhou Z, Yuan H, Wang W, Wang J, Xu Z. Adaptive Event-Triggered Consensus of Multi-Agent Systems in Sense of Asymptotic Convergence. Sensors. 2024; 24(2):339. https://doi.org/10.3390/s24020339

Chicago/Turabian Style: Hou, Zhicheng, Zhikang Zhou, Hai Yuan, Weijun Wang, Jian Wang, and Zheng Xu. 2024. "Adaptive Event-Triggered Consensus of Multi-Agent Systems in Sense of Asymptotic Convergence" Sensors 24, no. 2: 339. https://doi.org/10.3390/s24020339