Hybrid Feature Extractor Using Discrete Wavelet Transform and Histogram of Oriented Gradient on Convolutional-Neural-Network-Based Palm Vein Recognition

Abstract

1. Introduction

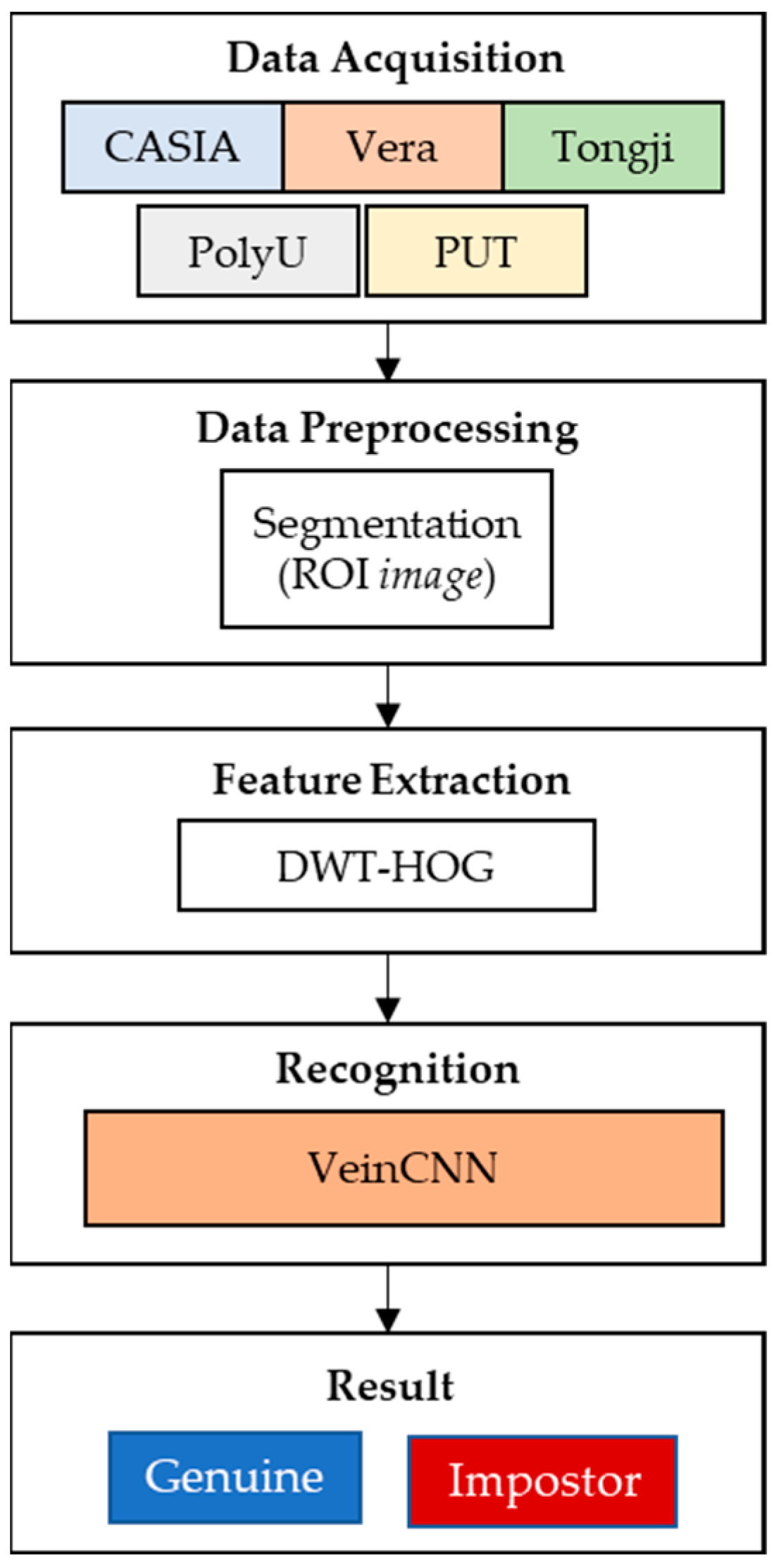

- A simple hybrid CNN structure is combined with a feature extraction method to verify palm vein patterns from images. Using hybrid DWT and HOG as the feature extractor handles the irregularity and unique properties of the images.

- The proposed hybrid DWT-HOG VeinCNN is evaluated on five palm vein image datasets within a single study to understand the general condition of palm vein images.

- The proposed CNN structure can maintain satisfactory accuracy while minimizing the equal error rate.

2. Materials and Methods

2.1. Image Acquisition

2.1.1. CASIA

2.1.2. Vera

2.1.3. Tongji

2.1.4. PolyU

2.1.5. PUT

2.2. Preprocessing Data

2.3. Feature Extraction

2.3.1. Wavelet Feature Extraction

2.3.2. Histogram of Oriented Gradient Feature Extraction

2.3.3. Hybrid DWT and HOG Feature Extraction

2.4. Recognition Based on Convolutional Neural Network

2.4.1. VeinCNN

2.4.2. Vein Recognition Using Hybrid DWT-HOG VeinCNN Feature Extraction

2.5. Performance Biometric Evaluation

3. Results

4. Discussion

4.1. Total Parameter

4.2. Accuracy

4.3. Area under Curve

4.4. EER

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jain, A.K.; Bolle, R.; Pankanti, S. Biometrics: Personal Identification in Network Society; Springer Science+Business Media, Inc.: New York, NY, USA, 2006. [Google Scholar]

- Shoniregun, C.A.; Crosier, S. Securing Biometrics Applications; Springer: New York, NY, USA, 2008. [Google Scholar]

- Pato, J.N.; Millett, L.I. Biometric Recognition: Challenges and Opportunities; Pato, J.N., Millett, L.I., Eds.; National Academies Press: Washington, DC, USA, 2010. [Google Scholar]

- Kono, M.; Ueki, H.; Umemura, S. Near-Infrared Finger Vein Patterns for Personal Identification. Appl. Opt. 2002, 41, 7429–7436. [Google Scholar] [CrossRef]

- Mil’shtein, S.; Pillai, A.; Shendye, A.; Liessner, C.; Baier, M. Fingerprint Recognition Algorithms for Partial and Full Fingerprints. In Proceedings of the 2008 IEEE Conference on Technologies for Homeland Security, Waltham, MA, USA, 12–13 May 2008; pp. 449–452. [Google Scholar] [CrossRef]

- Bounneche, M.D.; Boubchir, L.; Bouridane, A.; Nekhoul, B.; Ali-Chérif, A. Multi-Spectral Palmprint Recognition Based on Oriented Multiscale Log-Gabor Filters. Neurocomputing 2016, 205, 274–286. [Google Scholar] [CrossRef]

- Li, L.; Mu, X.; Li, S.; Peng, H. A Review of Face Recognition Technology. IEEE Access 2020, 8, 139110–139120. [Google Scholar] [CrossRef]

- Hofbauer, H.; Jalilian, E.; Sequeira, A.F.; Ferryman, J.; Uhl, A. Mobile NIR Iris Recognition: Identifying Problems and Solutions. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 22–25 October 2018; pp. 1–9. [Google Scholar] [CrossRef]

- Khemani, A.; Choudhary, A. Intrinsic Biometrics. Int. J. Eng. Res. Technol. 2015, 4, 243–248. [Google Scholar]

- Tan, R.; Perkowski, M. Toward Improving Electrocardiogram (ECG) Biometric Verification Using Mobile Sensors: A Two-Stage Classifier Approach. Sensors 2017, 17, 410. [Google Scholar] [CrossRef]

- Chai, R.; Naik, G.R.; Ling, S.H.; Nguyen, H.T. Hybrid Brain–Computer Interface for Biomedical Cyber-Physical System Application Using Wireless Embedded EEG Systems. Biomed. Eng. Online 2017, 16, 5. [Google Scholar] [CrossRef]

- Nait-Ali, A. Hidden Biometrics: Towards Using Biosignals and Biomedical Images for Security Applications. In Proceedings of the International Workshop on Systems, Signal Processing and their Applications, WOSSPA, Tipaza, Algeria, 9–11 May 2011; pp. 352–356. [Google Scholar] [CrossRef]

- Ganz, A.; Witek, B.; Perkins, C., Jr.; Pino-Luey, D.; Resta-Flarer, F.; Bennett, H.; Lesser, J.; Ng, J.; Chiao, F.B. Vein Visualization: Patient Characteristic Factors and Efficacy of a New Infrared Vein Finder Technology. BJA Br. J. Anaesth. 2013, 110, 966–971. [Google Scholar] [CrossRef]

- Kumar, A.; Prathyusha, K.V. Personal Authentication Using Hand Vein Triangulation and Knuckle Shape. IEEE Trans. Image Process. 2009, 18, 2127–2136. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Cui, J.; Xue, D.; Jia, X. Palm-Dorsa Vein Recognition Based on Independent Principle Component Analysis. In Proceedings of the 2011 International Conference on Image Analysis and Signal Processing, Hubei, China, 21–23 October 2011; pp. 660–664. [Google Scholar] [CrossRef]

- Zhou, Y.; Kumar, A. Contactless Palm Vein Identification Using Multiple Representations. In Proceedings of the 2010 Fourth IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS), Washington, DC, USA, 27–29 September 2010; pp. 1–6. [Google Scholar] [CrossRef]

- Sarkar, I.; Alisherov, F.; Kim, T.; Bhattacharyya, D. Palm Vein Authentication System: A Review. Int. J. Control Autom. 2010, 3, 27–34. [Google Scholar]

- Nayar, G.R.; Bhaskar, A.; Satheesh, L.; Kumar, P.S.; P, A.R. Personal Authentication Using Partial Palmprint and Palmvein Images with Image Quality Measures. In Proceedings of the 2015 International Conference on Computing and Network Communications (CoCoNet), Trivandrum, India, 16–19 December 2015; pp. 191–198. [Google Scholar] [CrossRef]

- Hoshyar, A.; Sulaiman, R.; Noori Houshyar, A. Smart Access Control with Finger Vein Authentication and Neural Network. J. Am. Sci. 2011, 7, 192–200. [Google Scholar]

- Syarif, M.A.; Ong, T.S.; Teoh, A.B.J.; Tee, C. Enhanced Maximum Curvature Descriptors for Finger Vein Verification. Multimed. Tools Appl. 2017, 76, 6859–6887. [Google Scholar] [CrossRef]

- Shahzad, A.; Walter, N.; Malik, A.S.; Saad, N.M.; Meriaudeau, F. Multispectral Venous Images Analysis for Optimum Illumination Selection. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 2383–2387. [Google Scholar] [CrossRef]

- Gurunathan, V.; Bharathi, S.; Sudhakar, R. Image Enhancement Techniques for Palm Vein Images. In Proceedings of the 2015 International Conference on Advanced Computing and Communication Systems, Coimbatore, India, 5–7 January 2015; pp. 1–5. [Google Scholar] [CrossRef]

- Zhou, Y.; Liu, Y.; Feng, Q.; Yang, F.; Huang, J.; Nie, Y. Palm-Vein Classification Based on Principal Orientation Features. PLoS ONE 2014, 9, e112429. [Google Scholar] [CrossRef]

- Stanuch, M.; Wodzinski, M.; Skalski, A. Contact-Free Multispectral Identity Verification System Using Palm Veins and Deep Neural Network. Sensors 2020, 20, 5695. [Google Scholar] [CrossRef]

- Sasikala, R.; Sandhya, S.; Ravichandran, D.K.; Balsubramaniam, D.D. A Survey on Human Identification Using Palm-Vein Images Using Laplacian Filter. Int. J. Innov. Res. Comput. Commun. Eng. 2016, 4, 6599–6605. [Google Scholar]

- Wu, X.; Gao, E.; Tang, Y.; Wang, K. A Novel Biometric System Based on Hand Vein. In Proceedings of the 2010 Fifth International Conference on Frontier of Computer Science and Technology, Changchun, China, 18–22 August 2010; pp. 522–526. [Google Scholar] [CrossRef]

- Akintoye, K.A.; Rahim, M.S.M.; Abdullah, A.H. Enhancement of Finger Vein Image Using Multifiltering Algorithm. ARPN J. Eng. Appl. Sci. 2018, 13, 644–648. [Google Scholar]

- Raut, S.D.; Humbe, V.T.; Mane, A.V. Development of Biometrie Palm Vein Trait Based Person Recognition System: Palm Vein Biometrics System. In Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM), Aurangabad, India, 5–6 October 2017; pp. 18–21. [Google Scholar]

- Elnasir, S.; Shamsuddin, S.M. Palm Vein Recognition Based on 2D-Discrete Wavelet Transform and Linear Discrimination Analysis. Int. J. Adv. Soft Comput. 2014, 6, 43–59. [Google Scholar]

- Debnath, L. Wavelet Transforms and Their Applications. Appl. Math. 2003, 48, 78. [Google Scholar] [CrossRef]

- Wu, W.; Li, Y.; Zhang, Y.; Li, C. Identity Recognition System Based on Multi-Spectral Palm Vein Image. Electronics 2023, 12, 3503. [Google Scholar] [CrossRef]

- Fronitasari, D.; Gunawan, D. Local Descriptor Approach to Wrist Vein Recognition with DVH-LBP Domain Feature Selection Scheme. Int. J. Adv. Sci. Eng. Inf. Technol. 2019, 9, 1025–1032. [Google Scholar] [CrossRef]

- Bashar, K.; Murshed, M. Texture Based Vein Biometrics for Human Identification: A Comparative Study. In Proceedings of the 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC), Tokyo, Japan, 23–27 July 2018; Volume 2, pp. 571–576. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893. [Google Scholar]

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251. [Google Scholar] [CrossRef]

- Suryani, D.; Doetsch, P.; Ney, H. On the Benefits of Convolutional Neural Network Combinations in Offline Handwriting Recognition. In Proceedings of the 2016 15th International Conference on Frontiers in Handwriting Recognition (ICFHR), Shenzhen, China, 23–26 October 2016; pp. 193–198. [Google Scholar] [CrossRef]

- Meng, G.; Fang, P.; Zhang, B. Finger Vein Recognition Based on Convolutional Neural Network. In MATEC Web of Conferences; EDP Sciences: Les Ulis, France, 2017; Volume 128, p. 4015. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Fairuz, S.; Habaebi, M.H.; Elsheikh, E.M.A.; Chebil, A.J. Convolutional Neural Network-Based Finger Vein Recognition Using Near Infrared Images. In Proceedings of the 2018 7th International Conference on Computer and Communication Engineering (ICCCE), Kuala Lumpur, Malaysia, 19–20 September 2018; pp. 453–458. [Google Scholar] [CrossRef]

- Liu, W.; Li, W.; Sun, L.; Zhang, L.; Chen, P. Finger Vein Recognition Based on Deep Learning. In Proceedings of the 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 205–210. [Google Scholar] [CrossRef]

- Wan, H.; Chen, L.; Song, H.; Yang, J. Dorsal Hand Vein Recognition Based on Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Kansas City, MO, USA, 13–16 November 2017; pp. 1215–1221. [Google Scholar] [CrossRef]

- Li, X.; Huang, D.; Wang, Y. Comparative Study of Deep Learning Methods on Dorsal Hand Vein Recognition. In Biometric Recognition; You, Z., Zhou, J., Wang, Y., Sun, Z., Shan, S., Zheng, W., Feng, J., Zhao, Q., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 296–306. [Google Scholar]

- Qin, H.; El Yacoubi, M.A.; Lin, J.; Liu, B. An Iterative Deep Neural Network for Hand-Vein Verification. IEEE Access 2019, 7, 34823–34837. [Google Scholar] [CrossRef]

- Chantaf, S.; Hilal, A.; Elsaleh, R. Palm Vein Biometric Authentication Using Convolutional Neural Networks. In Proceedings of the 8th International Conference on Sciences of Electronics, Technologies of Information and Telecommunications (SETIT’18); Bouhlel, M.S., Rovetta, S., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 1, pp. 352–363. [Google Scholar]

- Hong, H.G.; Lee, M.B.; Park, K.R. Convolutional Neural Network-Based Finger-Vein Recognition Using NIR Image Sensors. Sensors 2017, 17, 1–21. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.Y.; Hsia, C.H.; Chen, P.H. Contactless Multispectral Palm-Vein Recognition with Lightweight Convolutional Neural Network. IEEE Access 2021, 9, 149796–149806. [Google Scholar] [CrossRef]

- Wang, J.; Pan, Z.; Wang, G.; Li, M.; Li, Y. Spatial Pyramid Pooling of Selective Convolutional Features for Vein Recognition. IEEE Access 2018, 6, 28563–28572. [Google Scholar] [CrossRef]

- Wang, J.; Yang, K.; Pan, Z.; Wang, G.; Li, M.; Li, Y. Minutiae-Based Weighting Aggregation of Deep Convolutional Features for Vein Recognition. IEEE Access 2018, 6, 61640–61650. [Google Scholar] [CrossRef]

- Hou, B.; Yan, R. Convolutional Auto-Encoder Based Deep Feature Learning for Finger-Vein Verification. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Hao, Y.; Sun, Z.; Tan, T.; Ren, C. Multispectral Palm Image Fusion for Accurate Contact-Free Palmprint Recognition. In Proceedings of the 2008 15th IEEE International Conference on Image Processing (ICIP 2008), San Diego, CA, USA, 12–15 October 2008; pp. 281–284. [Google Scholar]

- Zhang, D.; Guo, Z.; Lu, G.; Zhang, L.; Zuo, W. An Online System of Multispectral Palmprint Verification. IEEE Trans. Instrum. Meas. 2010, 59, 480–490. [Google Scholar] [CrossRef]

- Kabacinski, R.; Kowalski, M. Vein Pattern Database and Benchmark Results. Electron. Lett. 2011, 47, 1127–1128. [Google Scholar] [CrossRef]

- Zhang, L.; Cheng, Z.; Shen, Y.; Wang, D. Palmprint and Palmvein Recognition Based on DCNN and A New Large-Scale Contactless Palmvein Dataset. Symmetry 2018, 10, 78. [Google Scholar] [CrossRef]

- Tome, P.; Marcel, S. On the Vulnerability of Palm Vein Recognition to Spoofing Attacks. In Proceedings of the 2015 International Conference on Biometrics (ICB), Phuket, Thailand, 19–22 May 2015; pp. 319–325. [Google Scholar]

- Wulandari, M.; Gunawan, D. On the Performance of Pretrained CNN Aimed at Palm Vein Recognition Application. In Proceedings of the 2019 11th International Conference on Information Technology and Electrical Engineering, ICITEE 2019, Pattaya, Thailand, 10–11 October 2019. [Google Scholar] [CrossRef]

- Kuang, H.; Zhong, Z.; Liu, X.; Ma, X. Palm Vein Recognition Using Convolution Neural Network Based on Feature Fusion with HOG Feature. In Proceedings of the 2020 5th International Conference on Smart Grid and Electrical Automation (ICSGEA), Zhangjiajie, China, 13–14 June 2020; pp. 295–299. [Google Scholar] [CrossRef]

- Al-Rababah, K.; Mustaffa, M.R.; Doraisamy, S.C.; Khalid, F. Hybrid Discrete Wavelet Transform and Histogram of Oriented Gradients for Feature Extraction and Classification of Breast Dynamic Thermogram Sequences. In Proceedings of the 2021 Fifth International Conference on Information Retrieval and Knowledge Management (CAMP), Kuala Lumpur, Malaysia, 15–16 June 2021; pp. 31–35. [Google Scholar] [CrossRef]

- Ristiana, R.; Kusumandari, D.E.; Simbolon, A.I.; Amri, M.F.; Sanhaji, G.; Rumiah, R. A Comparative Study of Thermal Face Recognition Based on Haar Wavelet Transform (HWT) and Histogram of Gradient (HoG). In Proceedings of the 2021 3rd International Symposium on Material and Electrical Engineering Conference (ISMEE), Bandung, Indonesia, 10–11 November 2021; pp. 242–248. [Google Scholar] [CrossRef]

- Zhang, L.; Li, L.; Yang, A.; Shen, Y.; Yang, M. Towards Contactless Palmprint Recognition: A Novel Device, a New Benchmark, and a Collaborative Representation Based Identification Approach. Pattern Recognit. 2017, 69, 199–212. [Google Scholar] [CrossRef]

- Arora, S.; Brar, Y.S.; Kumar, S. HAAR Wavelet Transform for Solution of Image Retrieval. Int. J. Adv. Comput. Math. Sci. 2014, 5, 27–31. [Google Scholar]

- Zou, W.; Li, Y. Image Classification Using Wavelet Coefficients in Low-Pass Bands. In Proceedings of the 2007 International Joint Conference on Neural Networks, Orlando, FL, USA, 12–17 August 2007; pp. 114–118. [Google Scholar] [CrossRef]

- Shyla, N.S.J.; Emmanuel, W.R.S. Automated Classification of Glaucoma Using DWT and HOG Features with Extreme Learning Machine. In Proceedings of the 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV), Tirunelveli, India, 4–6 February 2021; pp. 725–730. [Google Scholar] [CrossRef]

- Noh, Z.M.; Ramli, A.R.; Saripan, M.I.; Hanafi, M. Overview and Challenges of Palm Vein Biometric System. Int. J. Biom. 2016, 8, 2–18. [Google Scholar] [CrossRef]

- Ketkar, N.; Santana, E. Deep Learning with Python; Apress: Berkeley, CA, USA, 2017; pp. 95–109. [Google Scholar]

- Liu, J.; Pan, Y.; Li, M.; Chen, Z.; Tang, L.; Lu, C.; Wang, J. Applications of Deep Learning to MRI Images: A Survey. Big Data Min. Anal. 2018, 1, 1–18. [Google Scholar] [CrossRef]

- Sarkar, A.; Singh, B.K. A Review on Performance, Security and Various Biometric Template Protection Schemes for Biometric Authentication Systems. Multimed. Tools Appl. 2020, 79, 27721–27776. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

| Authors | Dataset | Feature Extractor | Method |
|---|---|---|---|
| Wang et al. (2018) [47] | PolyU | - | VGG-16 |
| Qin et al. (2019) [43] | CASIA, PolyU | - | DBN |
| Wulandari et al. (2019) [55] | PUT | DWT | CNN |
| Chantaf et al. (2020) [44] | Nonpublic | - | CNN |
| Kuang et al. (2020) [56] | CASIA, PolyU | HOG | CNN |
| Chen et al. (2021) [46] | CASIA, PUT | Gabor | CNN |
| Wu et al. (2023) [31] | Nonpublic, CASIA, Tongji, PolyU | SDSPCA-NPE | Distance Feature Matching |
| Proposed | CASIA, Vera, Tongji, PolyU, PUT | Hybrid DWT and HOG | VeinCNN |

| No. | Dataset | Total Volunteers | Total Images | Number of Sessions | Image Size | Format | Official ROI |
|---|---|---|---|---|---|---|---|
| 1. | CASIA | 200 | 2400 | 2 | | jpg | Not Available |
| 2. | Vera | 220 | 2200 | 2 | | png | Available |
| 3. | Tongji | 600 | 12,000 | 2 | | tiff | Available |
| 4. | PolyU | 500 | 24,000 | 2 | | jpg | Available |
| 5. | PUT | 100 | 1200 | 3 | | bmp | Not Available |

| Proposed Hybrid Feature Extractor DWT and HOG Procedure | |

|---|---|

| 1: | |

| 2: | |

| 3: | |

| 4: | |

| 5: | |

| 6: | |

| 7: | |

| 8: | |
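The individual steps of the procedure are not reproduced in the table above, so the following is only a minimal sketch of how a DWT stage and a HOG stage could be chained, not the authors' procedure. It assumes a one-level Haar wavelet, a HOG stage applied to the low-pass (LL) sub-band, a single cell with hard binning into nine unsigned orientation bins, and L2 normalization. The function names `haar_dwt2`, `orientation_histogram`, and `hybrid_features` are hypothetical.

```python
import math


def haar_dwt2(img):
    """One-level 2D Haar DWT of a list-of-lists image with even height and width.

    Returns (ll, row_detail, col_detail, diag_detail), each half the input size.
    Sub-band naming (LH/HL) differs between libraries, so neutral names are used.
    """
    ll, row_detail, col_detail, diag_detail = [], [], [], []
    for r in range(0, len(img), 2):
        rows = ([], [], [], [])
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2.0)  # low-pass approximation
            rows[1].append((a + b - d - e) / 2.0)  # difference between rows
            rows[2].append((a - b + d - e) / 2.0)  # difference between columns
            rows[3].append((a - b - d + e) / 2.0)  # diagonal difference
        ll.append(rows[0])
        row_detail.append(rows[1])
        col_detail.append(rows[2])
        diag_detail.append(rows[3])
    return ll, row_detail, col_detail, diag_detail


def orientation_histogram(patch, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 deg).

    Uses central differences on interior pixels and hard binning; full HOG also
    interpolates between bins and normalizes over blocks, which is omitted here.
    """
    hist = [0.0] * bins
    for r in range(1, len(patch) - 1):
        for c in range(1, len(patch[0]) - 1):
            gx = patch[r][c + 1] - patch[r][c - 1]
            gy = patch[r + 1][c] - patch[r - 1][c]
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // (180.0 / bins)) % bins] += math.hypot(gx, gy)
    return hist


def hybrid_features(img, bins=9):
    """Assumed chaining: Haar LL sub-band first, then an orientation histogram."""
    ll = haar_dwt2(img)[0]
    hist = orientation_histogram(ll, bins)
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist


if __name__ == "__main__":
    # Synthetic 8 x 8 image with one vertical edge (left half dark, right half bright).
    edge = [[0, 0, 0, 0, 10, 10, 10, 10] for _ in range(8)]
    print(hybrid_features(edge))
```

In practice an established implementation (for example a wavelet library and an image-processing library's HOG routine) would replace these hand-written loops; the sketch only makes the data flow between the two stages explicit.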

| Layer | Type | Activation Function | Output Shape | Kernel Size | Number of Filters |
|---|---|---|---|---|---|
| 0 | Input | - | 128 × 128 | - | - |
| 1 | 2D conv | ReLU | 126 × 126 | 3 | 32 |
| 2 | 2D max pooling | ReLU | 63 × 63 | 2 | 32 |
| 3 | 2D conv | ReLU | 61 × 61 | 3 | 64 |
| 4 | 2D max pooling | ReLU | 30 × 30 | 2 | 64 |
| 5 | 2D conv | ReLU | 28 × 28 | 3 | 64 |
| 6 | 2D max pooling | ReLU | 14 × 14 | 2 | 64 |
| 7 | 2D conv | ReLU | 12 × 12 | 3 | 64 |
| 8 | 2D max pooling | ReLU | 6 × 6 | 2 | 64 |
| 9 | Flattened | - | 2304 | - | - |
| 10 | Dense | ReLU | 128 | - | - |
| 11 | Dense | Sigmoid | 2 | - | - |
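The output shapes in the layer table can be checked with a few lines of arithmetic. The sketch below assumes unpadded ("valid") 3 × 3 convolutions with stride 1, non-overlapping 2 × 2 max pooling, and a single-channel 128 × 128 input; the channel count and the helper name `veincnn_summary` are assumptions, not details stated in the table.

```python
def conv_out(size, kernel):
    """Spatial size after an unpadded, stride-1 convolution."""
    return size - kernel + 1


def pool_out(size, pool):
    """Spatial size after non-overlapping pooling (floor division)."""
    return size // pool


def veincnn_summary(input_size=128, in_channels=1):
    """Return (spatial sizes per layer, flattened length, trainable parameter count)."""
    # (kind, kernel or pool size, number of filters), following layers 1-8 of the table.
    layers = [
        ("conv", 3, 32), ("pool", 2, None),
        ("conv", 3, 64), ("pool", 2, None),
        ("conv", 3, 64), ("pool", 2, None),
        ("conv", 3, 64), ("pool", 2, None),
    ]
    size, channels, params, sizes = input_size, in_channels, 0, []
    for kind, k, filters in layers:
        if kind == "conv":
            params += (k * k * channels + 1) * filters  # weights plus one bias per filter
            size, channels = conv_out(size, k), filters
        else:
            size = pool_out(size, k)                     # pooling has no parameters
        sizes.append(size)
    flat = size * size * channels
    params += (flat + 1) * 128  # dense layer with 128 units
    params += (128 + 1) * 2     # output layer with 2 units
    return sizes, flat, params


if __name__ == "__main__":
    sizes, flat, params = veincnn_summary()
    print(sizes)   # spatial size after each of layers 1-8
    print(flat)    # length of the flattened vector
    print(params)  # parameter count under the single-channel assumption
```

Under these assumptions the spatial sizes run 126, 63, 61, 30, 28, 14, 12, 6 and the flattened vector has 6 × 6 × 64 = 2304 elements, matching the table. The resulting count of 387,970 trainable parameters holds only for a single-channel input and is not a figure taken from the paper.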

| Dataset | Feature Extractor | Accuracy (%) | AUC (%) | EER |
|---|---|---|---|---|
| CASIA | Raw data | 99.69 | 99.60 | 0.0167 |
| | DWT | 99.85 | 98.80 | 0.0250 |
| | HOG | 99.85 | 99.80 | 0.0083 |
| | Hybrid DWT and HOG (proposed) | 99.85 | 99.80 | 0.0083 |
| Vera | Raw data | 84.14 | 84.10 | 0.1273 |
| | DWT | 81.16 | 81.20 | 0.2000 |
| | HOG | 94.78 | 94.60 | 0.0636 |
| | Hybrid DWT and HOG (proposed) | 95.57 | 95.10 | 0.0545 |
| Tongji | Raw data | 90.37 | 90.40 | 0.1383 |
| | DWT | 92.04 | 92.00 | 0.0866 |
| | HOG | 94.81 | 94.40 | 0.0750 |
| | Hybrid DWT and HOG (proposed) | 94.91 | 94.90 | 0.0650 |
| PolyU | Raw data | 59.44 | 59.40 | 0.3000 |
| | DWT | 58.33 | 60.20 | 0.2566 |
| | HOG | 82.59 | 82.60 | 0.2066 |
| | Hybrid DWT and HOG (proposed) | 85.88 | 85.90 | 0.1467 |
| PUT | Raw data | 93.52 | 93.50 | 0.1000 |
| | DWT | 74.07 | 81.30 | 0.1330 |
| | HOG | 97.22 | 97.20 | 0.0333 |
| | Hybrid DWT and HOG (proposed) | 98.12 | 98.10 | 0.0167 |

| Dataset | Accuracy (%) | AUC (%) | EER |
|---|---|---|---|
| CASIA | 99.85 | 99.80 | 0.0083 |
| Vera | 95.57 | 95.10 | 0.0545 |
| Tongji | 94.91 | 94.90 | 0.0650 |
| PolyU | 85.88 | 85.90 | 0.1467 |
| PUT | 98.12 | 98.10 | 0.0167 |
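The EER column is expressed as a fraction: it is the error rate at the operating point where the false acceptance rate (FAR) equals the false rejection rate (FRR). The sketch below shows one common way to estimate it from match scores. It assumes that higher scores mean a better match, that a score at or above the threshold is accepted, and that candidate thresholds are taken from the observed scores; the function names and the toy scores are hypothetical and do not come from the paper.

```python
def far_frr(genuine, impostor, threshold):
    """FAR: share of impostor scores accepted. FRR: share of genuine scores rejected."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Sweep thresholds over the observed scores and return (eer, threshold).

    The EER is approximated by the mean of FAR and FRR at the threshold where
    their gap is smallest, since the two curves rarely cross exactly on a sample.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0, t)
    return best[1], best[2]


if __name__ == "__main__":
    # Toy scores: one genuine comparison scores low and one impostor scores high.
    genuine_scores = [0.9, 0.8, 0.7, 0.4]
    impostor_scores = [0.1, 0.2, 0.3, 0.6]
    eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
    print(eer, threshold)
```

With these toy scores the sweep settles on a threshold of 0.6, where one of four impostors is accepted and one of four genuine samples is rejected, giving an EER of 0.25.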

Accuracy on Dataset (%)

| Methods | CASIA | Vera | Tongji | PolyU | PUT |
|---|---|---|---|---|---|
| AlexNet [38] | 96.60 | 90.86 | 93.80 | 73.98 | 97.22 |
| VGG16 [67] | 99.38 | 91.04 | 78.43 | 70.83 | 97.22 |
| ResNet50 [68] | 99.69 | 86.38 | 88.43 | 79.17 | 94.44 |
| Proposed hybrid DWT-HOG VeinCNN | 99.85 | 95.52 | 94.91 | 85.97 | 98.15 |

EER on Dataset

| Recognition Scheme | CASIA | Vera | Tongji | PolyU | PUT |
|---|---|---|---|---|---|
| AlexNet [38] | 0.0679 | 0.0672 | 0.0685 | 0.3370 | 0.0185 |
| VGG16 [67] | 0.0123 | 0.0672 | 0.1963 | 0.2528 | 0.0555 |
| ResNet50 [68] | 0.0083 | 0.0560 | 0.1167 | 0.1519 | 0.0185 |
| Proposed Hybrid DWT-HOG VeinCNN | 0.0083 | 0.0545 | 0.0630 | 0.1460 | 0.0167 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wulandari, M.; Chai, R.; Basari, B.; Gunawan, D. Hybrid Feature Extractor Using Discrete Wavelet Transform and Histogram of Oriented Gradient on Convolutional-Neural-Network-Based Palm Vein Recognition. Sensors 2024, 24, 341. https://doi.org/10.3390/s24020341
