Machine Learning for Multimodal Mental Health Detection: A Systematic Review of Passive Sensing Approaches

Abstract

1. Introduction

- RQ1—Which sources of passive sensing data are most effective for supporting the detection of MH disorders?

- RQ2—Which data fusion approaches are most effective for combining data features of varying modalities to prepare for training ML models to detect MH disorders?

- RQ3—What ML approaches have previous researchers used to successfully detect MH disorders from multimodal data?

2. Materials and Methods

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

Inclusion criteria:

- The study collects data passively via ubiquitous or wearable devices, given their cost-effectiveness and general accessibility.

- The data is human generated, i.e., derived from individuals’ actions in an environment or interactions with specific platforms or devices.

- The data source involves at least two different modalities.

- The study adopts ML algorithms intending to detect one or more MH disorders.

- The study is written in English.

- The study was published from the year 2015 onwards (further details in the following section).

Exclusion criteria:

- The study investigates data sources of a single modality or focuses exclusively on a specific modality, e.g., text-based approaches.

- The study specifically targets the pediatric population, i.e., toddlers and children younger than ten years, the lower bound of the suggested adolescent age range of 10–24 years [39].

- The study targets a particular symptom of specific MH disorders, e.g., low mood, which is a common sign of depression.

- Data collection requires dedicated equipment or authorized resources:

    - Brain neuroimaging and physiological signal data, e.g., functional magnetic resonance imaging (fMRI), structural MRI (sMRI), electroencephalogram (EEG), electromyography (EMG), and photoplethysmography (PPG) signals

    - Clinical data, e.g., electronic health records (EHRs) and clinical notes

    - Genomic data

    - Body motions collected using specialized motion capture platforms or motor sensors

    - Data collected using Augmented Reality (AR) or Virtual Reality (VR) technology

- The study does not employ ML algorithms for detection/prediction, e.g., focusing on correlation/association analysis, treatment/intervention strategies, or proposing study protocols.

- The study is a survey, book, conference proceedings, workshop paper, or magazine article.

- The study is unpublished or non-peer-reviewed.

2.3. Selection Process

2.4. Data Extraction

2.5. Quality Assessment

3. Results

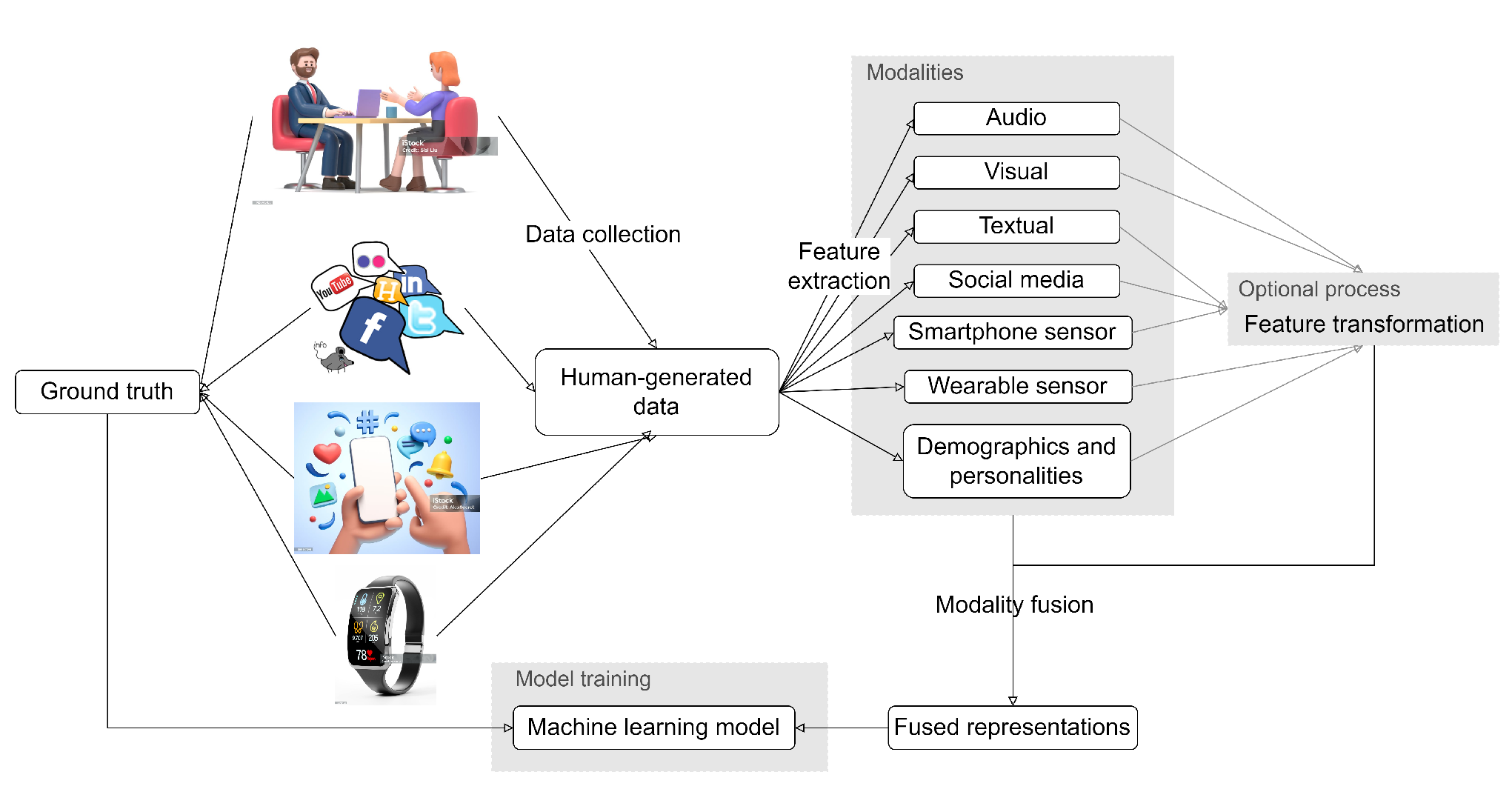

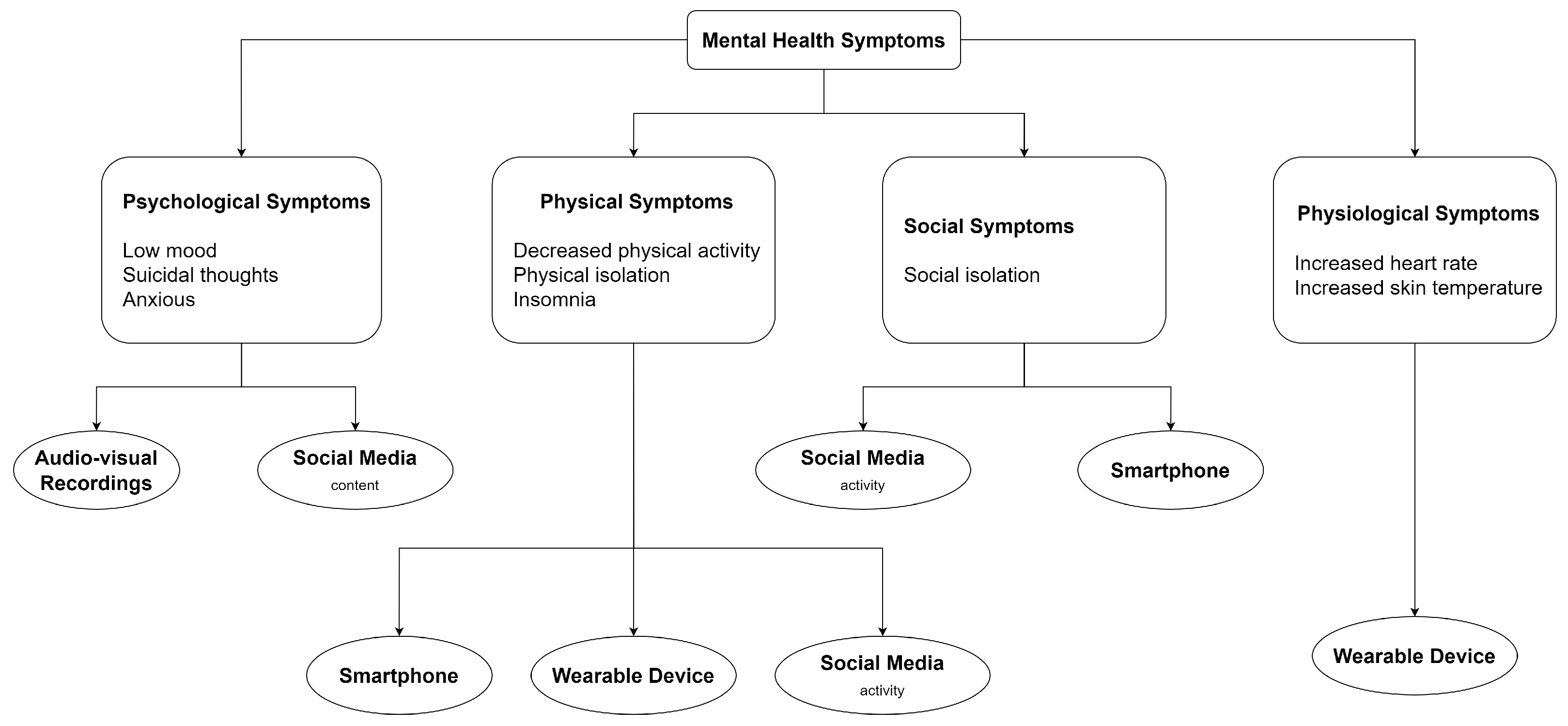

3.1. Data Source

3.1.1. Audio and Video Recordings

3.1.2. Social Media

3.1.3. Smartphones

3.1.4. Wearable Devices

3.2. Data Ground Truth

3.2.1. Clinical Assessments

3.2.2. Self-Reports

3.3. Modality and Features

3.3.1. Audio

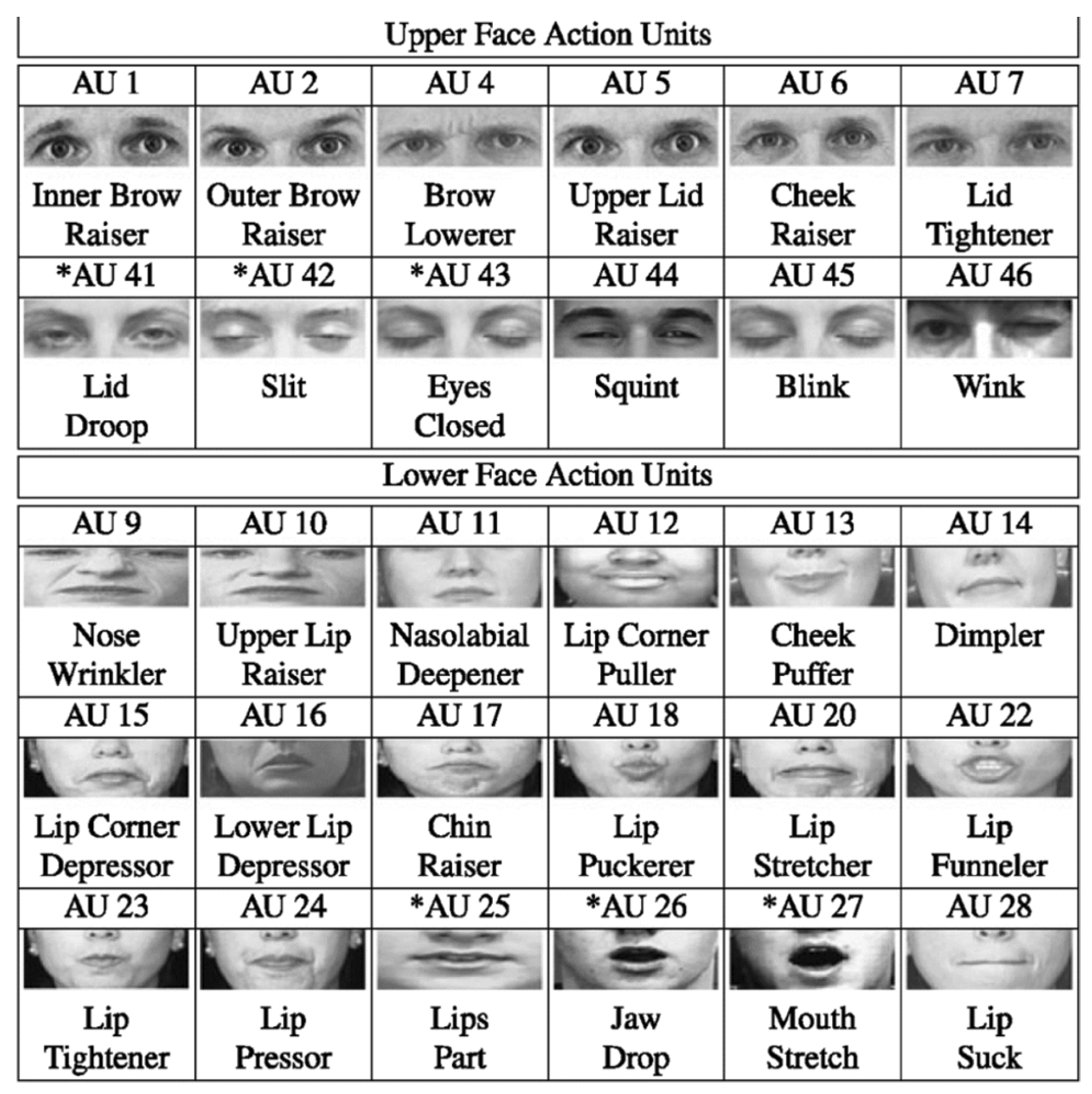

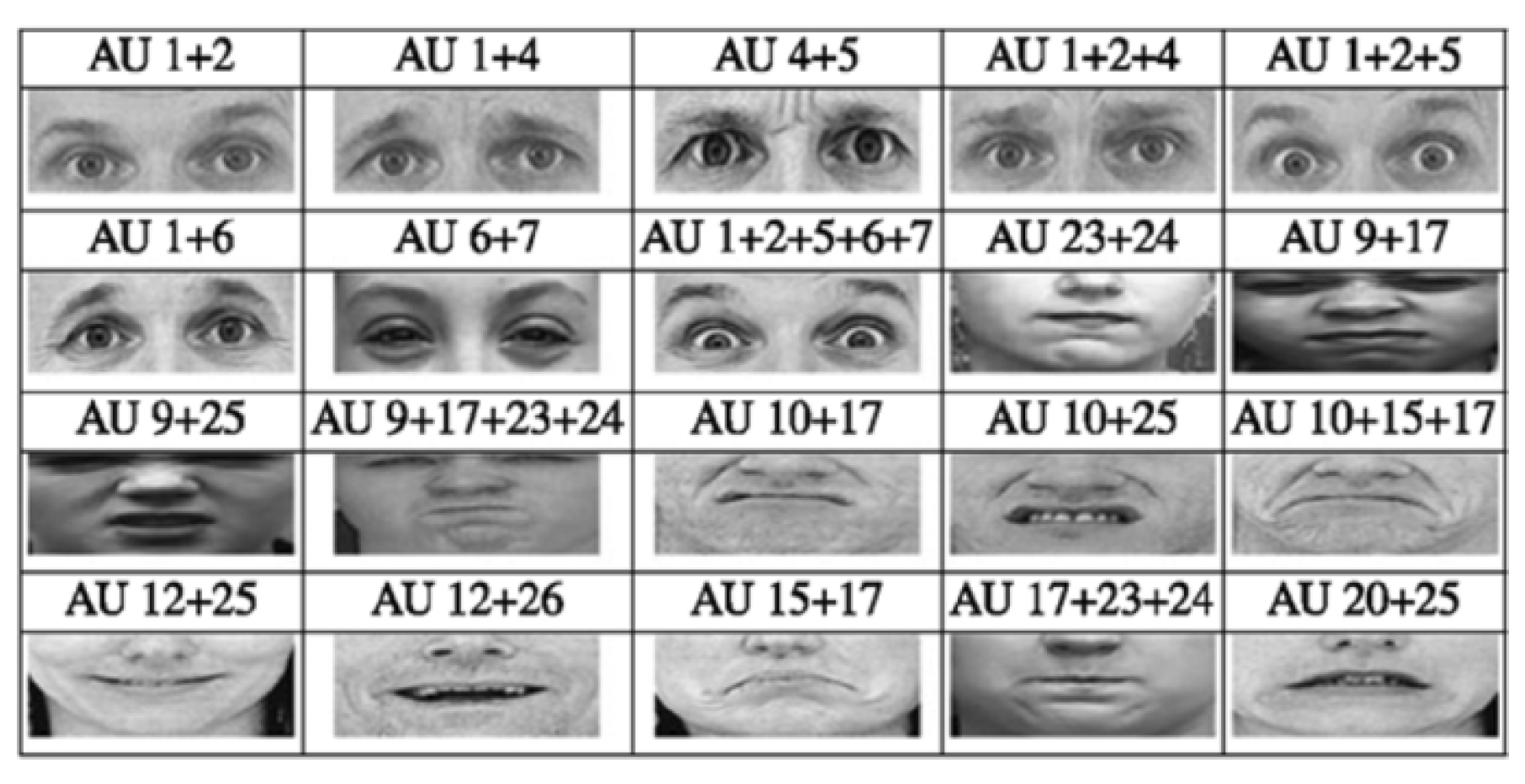

3.3.2. Visual

3.3.3. Textual

3.3.4. Social Media

3.3.5. Smartphone and Wearable Sensors

- (1) Physical Mobility Features: Studies have shown that negative MH states and greater depression severity are associated with lower levels of physical activity, reflected in fewer footsteps, less exercise [154], a greater proportion of time spent stationary [205], and less motion variability [149], whereas a study on a student population found the opposite trend, i.e., increased physical activity [157]. Movement across locations, in terms of distance traveled, location variability, significant locations (deduced from location clusters) [177], and time spent at these places [164], was also informative. For instance, researchers found greater depression severity or negative MH states to be associated with lower distance variance, lower normalized location entropy [154,158], fewer significant places visited with a longer average stay [158], and fewer visits to new places [205]. In contrast, Kim et al. [162] found that adolescents with major depressive disorder (MDD) traveled longer distances than healthy controls. Timing and location semantics provided further detail: individuals with negative MH states were stationary more in the morning but less in the evening [205], those with more severe depression spent more time at home [154,175], and schizophrenia patients visited more places in the morning [206]. Researchers also obtained sleep information, either by inferring it from a combination of sensor data on physical movement, the ambient environment, and phone lock states, or through the sleep-inference APIs of wearable devices. Sleep patterns and regularity were shown to correlate with depressive symptoms [150,158]: individuals with positive MH states woke up earlier [205], whereas MDD patients showed more irregular sleep (measured by the sleep regularity index) [149].

- (2) Phone Interaction Features: Phone usage (inferred from the frequency and duration of screen unlocks) and application usage were potentially informative. For instance, [158] identified a high frequency of screen unlocks and a short average duration per unlock as potential indicators of depressive symptoms. However, while Wang et al. [205] associated negative MH states with lower phone usage, the opposite trend was observed among students and adolescents with depressive symptoms, who used their smartphones for longer [150,162,164]. Researchers also investigated more fine-grained features, such as phone usage at different times of the day, and found that patients with schizophrenia used their phones less at night but more in the afternoon [206]. Individuals with MH disorders also showed distinctive application engagement; for example, Opoku Asare et al. [166] found that individuals with depressive symptoms used social applications more frequently and for longer. These individuals also engaged more actively with applications in the early morning hours and around midnight, whereas the engagement of healthy controls was spread more evenly throughout the day. Meanwhile, Choudhary et al. [212] found that individuals with anxiety more frequently used applications in the “passive information consumption apps”, “games”, and “health and fitness” categories.

- (3) Sociability Features: Sociability features, such as the number of incoming and outgoing phone calls and text messages and the duration of phone calls, were also potential indicators of MH disorders [164,175]. For instance, negative MH states are associated with making more phone calls, sending more text messages [205,222], and reaching out to more new contacts [222]. On the other hand, adults with MDD were found to receive fewer incoming messages [149], and adolescents with MDD to receive more phone calls [162]. Lastly, the ambient environment may also play a role: individuals with schizophrenia were found to be in louder acoustic environments containing human voices [206], whereas those with negative MH states tended to be around fewer conversations than healthy controls [205].
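
The three feature families above reduce to simple aggregates over raw sensor logs. The following Python sketch illustrates one or two features from each family; it is a minimal illustration under stated assumptions, not the feature definition used by any cited study. It assumes GPS samples that are equally spaced in time and already assigned to location clusters (e.g., by DBSCAN, as listed in Appendix A), screen sessions already paired into (unlock, lock) timestamps, and call records given as hypothetical `(contact_id, direction, duration_s)` tuples. Location variance is taken as the logarithm of the summed latitude and longitude variances, which is one common formulation; all function names are hypothetical.

```python
import math
from collections import Counter
from datetime import datetime
from statistics import pvariance


def shannon_entropy(labels):
    """Shannon entropy (natural log) of the empirical distribution of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log(c / total) for c in counts.values())


# (1) Physical mobility: GPS coordinates and location-cluster labels
def location_variance(lats, lons):
    """log(var(lat) + var(lon)); the small epsilon avoids log(0) for a stationary user."""
    return math.log(pvariance(lats) + pvariance(lons) + 1e-10)


def normalized_location_entropy(cluster_labels):
    """Entropy of time per location cluster divided by log(number of clusters).

    Assumes equally spaced samples, so each label stands for one unit of time.
    Returns 0.0 when fewer than two clusters were visited.
    """
    n_clusters = len(set(cluster_labels))
    if n_clusters < 2:
        return 0.0
    return shannon_entropy(cluster_labels) / math.log(n_clusters)


# (2) Phone interaction: sessions are (unlock_time, lock_time) pairs
def unlock_features(sessions):
    """Number of unlocks and mean unlock duration in minutes."""
    if not sessions:
        return 0, 0.0
    minutes = [(lock - unlock).total_seconds() / 60 for unlock, lock in sessions]
    return len(sessions), sum(minutes) / len(minutes)


# (3) Sociability: calls are (contact_id, direction, duration_s) tuples
def call_features(calls):
    """Incoming/outgoing call counts, total duration, and contact entropy."""
    return {
        "incoming": sum(1 for _, direction, _ in calls if direction == "in"),
        "outgoing": sum(1 for _, direction, _ in calls if direction == "out"),
        "total_duration_s": sum(duration for _, _, duration in calls),
        # Higher entropy = calls spread more evenly over more contacts
        "contact_entropy": shannon_entropy(c for c, _, _ in calls) if calls else 0.0,
    }


# Hypothetical one-day inputs
labels = [0] * 6 + [1] * 2 + [2] * 2  # 60% of samples at home, 20% at two other places
day = [
    (datetime(2023, 5, 1, 8, 0), datetime(2023, 5, 1, 8, 2)),
    (datetime(2023, 5, 1, 23, 50), datetime(2023, 5, 2, 0, 3)),
]
calls = [("A", "in", 60), ("B", "out", 120), ("A", "out", 30), ("C", "in", 0)]

print(round(normalized_location_entropy(labels), 3))  # prints 0.865
print(unlock_features(day))  # prints (2, 7.5)
print(call_features(calls))
```

A lower normalized entropy means that time is concentrated in fewer places, the direction associated above with greater depression severity. In practice, such values are usually aggregated per day, epoch, or week, as listed in Appendix A.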

3.3.6. Demographics and Personalities

3.4. Modality Fusion

3.4.1. Feature Transformation to Prepare for Fusion

3.4.2. Multimodal Fusion Techniques

3.5. Machine Learning Models

- Supervised learning—trained on labeled input–output pairs to learn patterns for mapping unseen inputs to outputs.

- Ensemble learning—combines multiple base learners of any kind (e.g., linear, tree-based or NN models) to obtain better predictive performance, assuming that errors of a single base learner will be compensated by the others [292].

- Multi-task learning—attempts to solve multiple tasks simultaneously by taking advantage of the similarities between tasks [289].

- Others—incorporates semi-supervised, unsupervised, or a combination of approaches from various categories.
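
As an illustration of the ensemble idea described above, the following sketch implements soft voting, one common way of combining base learners: the class probabilities predicted by several already-trained models are averaged, and each sample receives the class with the highest average, so a single learner's error can be outvoted. The probability values are hypothetical, and the helper is written in plain Python for transparency; scikit-learn's `VotingClassifier` with `voting="soft"` offers the same strategy for fitted estimators.

```python
from typing import List, Optional, Sequence


def soft_vote(
    model_probs: Sequence[Sequence[Sequence[float]]],
    weights: Optional[Sequence[float]] = None,
) -> List[int]:
    """Soft voting over already-trained base learners.

    model_probs[m][i][k] is base learner m's predicted probability that
    sample i belongs to class k. Each sample is assigned the class with the
    highest (optionally weighted) average probability across learners.
    """
    if weights is None:
        weights = [1.0] * len(model_probs)
    total_weight = sum(weights)
    n_samples = len(model_probs[0])
    n_classes = len(model_probs[0][0])
    predictions = []
    for i in range(n_samples):
        avg = [
            sum(w * probs[i][k] for w, probs in zip(weights, model_probs)) / total_weight
            for k in range(n_classes)
        ]
        predictions.append(max(range(n_classes), key=lambda k: avg[k]))
    return predictions


# Hypothetical outputs of three base learners for two participants
# (class 0 = healthy control, class 1 = MH disorder).
linear_model = [[0.6, 0.4], [0.3, 0.7]]
tree_model = [[0.4, 0.6], [0.45, 0.55]]  # disagrees with the others on participant 0
nn_model = [[0.7, 0.3], [0.2, 0.8]]
print(soft_vote([linear_model, tree_model, nn_model]))  # prints [0, 1]
```

Weights let a more trusted learner dominate; for example, `soft_vote([linear_model, tree_model, nn_model], weights=[1, 5, 1])` returns `[1, 1]`, because the heavily weighted tree model now decides participant 0.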

3.5.1. Supervised Learning

3.5.2. Neural-Network-Based Supervised Learning

3.5.3. Ensemble Learning

3.5.4. Multi-Task Learning (MTL)

3.5.5. Others

3.6. Additional Findings

3.6.1. Modality and Feature Comparisons

3.6.2. Personalized Machine Learning Models

4. Discussion

4.1. Principal Findings

4.1.1. RQ1—Which Sources of Data Are Most Effective for Supporting the Detection of MH Disorders?

4.1.2. RQ2—Which Data Fusion Approaches Are Most Effective for Combining Data Features of Varying Modalities to Prepare for Training ML Models to Detect MH Disorders?

4.1.3. RQ3—What ML Approaches Have Previous Researchers Used to Successfully Detect MH Disorders from Multimodal Data?

4.2. Evaluation of Data Sources

4.2.1. Criterion 1—Reliability of Data

4.2.2. Criterion 2—Validity of Ground Truth Acquisition

4.2.3. Criterion 3—Cost

4.2.4. Criterion 4—General Acceptance

4.2.5. Overall Findings

4.3. Guidelines for Data Source Selection

- Define research objectives and scope: Clearly defined research objectives and questions guide researchers in determining the kind of information required to achieve the research goals and, subsequently, in evaluating how accurately a data source represents or captures that information. Determining the scope of the study is crucial for pinpointing and assessing the relevance of information, ensuring that the collected data effectively contribute to the desired outcomes.

- Determine the target population: Identifying the target population and its characteristics involves various aspects, including the targeted MH disorders, demographics, cultural backgrounds, and geographical distribution. These aspects influence one another: individuals’ behaviors, and hence their data, may vary with the MH disorder they experience and are further shaped by cultural background and demographics such as age, gender, and occupation. Additionally, geographical distribution and economic background may affect an individual’s access to a specific data collection tool. Considering these aspects ensures that the collected data are representative of and applicable to the population of interest, enhancing the overall effectiveness of the approach.

- Identify candidate data sources and evaluate their feasibility: Evaluating the feasibility of each data source in light of the research objectives and target population identified above helps researchers make informed decisions. Given the contexts and environments of the target population, researchers can assess which data source is the most practical and relevant. For example, researchers may consider remote sensing to make data collection unobtrusive when studying high-risk MH disorders or to overcome geographical barriers. This assessment should consider feasibility in terms of cost and accessibility and be informed by Figure 5 to ensure that the selected data source can effectively capture relevant MH symptoms.

- Consult stakeholders: Engaging stakeholders, including healthcare professionals, patients, and families, provides various perspectives of parties involved in supporting individuals with MH disorders. These consultations verify and offer insights into the acceptability and feasibility of data sources and help ensure that researchers’ decisions align with ethical considerations and stakeholders’ comfort.

- Ethical considerations and guidelines: Researchers should further consult institutional review boards and established guidelines to ensure the compliance of data collection procedures with ethical standards and research practices. This step is crucial to safeguard participants’ rights and privacy, enhancing the credibility of the study.

- Assess the significance of ground truth information: Evaluating how much the study relies on ground truth information helps researchers gauge its impact on the study and decide whether specific workarounds are necessary to enhance ground truth reliability and validity during data collection. This evaluation then aids researchers in designing the data collection procedure and in determining the extent to which future analysis, reasoning, and deductions can rely on the ground truth.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| AdaBoost | Adaptive Boosting |

| ADHD | Attention Deficit Hyperactivity Disorder |

| BDI | Beck Depression Inventory |

| CES-D | Center for Epidemiological Studies Depression Scale |

| CNN | Convolutional neural network |

| DNN | Deep neural network |

| DSM-V | Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition |

| ED | Eating disorder |

| GAD-7 | Generalized Anxiety Disorder-7 |

| GPS | Global Positioning System |

| GRU | Gated recurrent unit |

| HDRS | Hamilton Depression Rating Scale |

| LSTM | Long short-term memory |

| MDD | Major depressive disorder |

| MFCC | Mel-frequency cepstral coefficients |

| MH | Mental health |

| ML | Machine learning |

| MLP | Multi-layer perceptron |

| MRI | Magnetic Resonance Imaging |

| MTL | Multi-task learning |

| NN | Neural network |

| OCD | Obsessive-compulsive disorder |

| PHQ-9 | Patient Health Questionnaire-9 |

| PTSD | Post-traumatic stress disorder |

| RF | Random forest |

| SLR | Systematic literature review |

| SVM | Support vector machine |

| XGBoost | eXtreme Gradient Boosting |

Appendix A. Existing Modality Features

| Features | Tools | Studies | Feature Category |

|---|---|---|---|

| Low-level descriptors: jitter, shimmer, amplitude, pitch perturbation quotients, Mel-frequency cepstral coefficients (MFCCs), Teager-energy cepstrum coefficients (TECCs) [320], Discrete Cosine Transform (DCT) coefficients | OpenSmile [267], COVAREP [321], YAAFE [322], Praat [323], Python libraries (pyAudioAnalysis [324], DisVoice [325]), My-Voice Analysis [326], Surfboard [327], librosa [328] | [12,48,51,72,74,78,81,87,88,90,91,92,94,97,99,101,104,107,108,184,192,195,196,197,198,199,211,214] | Voice |

| Existing acoustic feature sets: Interspeech 2010 Paralinguistics [329], Interspeech 2013 ComParE [330], extended Geneva Minimalistic Acoustic Parameter Set (eGeMAPS) [331] | OpenSmile [267] | [51,57,59,61,63,74,81,97,103,192,194,195,196,197,198] | Voice |

| Speech, pause, laughter, utterances, articulation, phonation, intent expressivity | Praat [323], DeepSpeech [332] | [12,48,79,107,184,193,203,211] | Speech |

| Vocal tract physiology features | N/A | [49] | Speech |

| Embeddings of audio samples | VGG-16 [261], VGGish [333], DeepSpeech [332], DenseNet [334], SoundNet [259], SincNet [335], Wav2Vec [336], sentence embedding model [337], HuBERT [338], convolutional neural network (CNN), bidirectional LSTM (BiLSTM), ResNet [308], graph temporal convolution neural network (GTCN) [339] | [59,60,67,72,74,80,86,89,93,96,100,136,174,195] | Representations |

| Graph features: average degree, clustering coefficient and shortest path, density, transitivity, diameter, local and global efficiency | Visibility graph (two data points visible to each other are connected with an edge) | [81] | Representations |

| Statistical descriptors of voice/speech features: mean, standard deviation, variance, extreme values, kurtosis, 1st and 99th percentiles, skewness, quartiles, interquartile range, range, total, duration rate, occurrences, coefficient of variation (CV) | Manual computation, histograms, DeepSpeech [332] | [12,55,56,91,92,99,107,193,197,214] | Derived |

| Bag-of-AudioWords (BoAW) representations of voice/speech features | openXBOW [340] | [59,74] | Representations, Derived |

| High-level representations of features/representations (capture spatial and temporal information) | Gated recurrent unit (GRU) [341], LSTM, BiLSTM, combination of CNN residual and LSTM-based encoder–decoder networks [75], time-distributed CNN (T-CNN), multi-scale temporal dilated convolution (MS-TDConv) blocks, denoising autoencoder | [61,65,67,73,75,77,87,94,100,199] | Representations, Derived |

| Session-level representations from segment-level features/representations | Simple concatenation, Fisher vector encoding, Gaussian Mixture Model (GMM) | [192,199,214] | Representations, Derived |

| Facial/body appearance, landmarks, eye gaze, head pose | OpenFace [269], OpenCV [270], Viola Jones’ face detector [342], CascadeObjectDetector function in MATLAB’s vision toolbox, Haar classifier [270], Gauss–Newton Deformable Part Model (GN-DPM) [343], OpenPose [271], ZFace [344], CNN [46], Faster-RCNN (Region CNN) [147], multilevel convolutional coarse-to-fine network cascade [345], Inception-ResNet-V2 [346], VGG-Face [68], DenseNet [334], Affectiva https://go.affectiva.com/affdex-for-market-research (accessed on 10 December 2023), DBFace https://github.com/dlunion/DBFace (accessed on 10 December 2023), FaceMesh https://developers.google.com/android/reference/com/google/mlkit/vision/facemesh/FaceMesh (accessed on 10 December 2023), dlib [347] | [25,53,55,56,68,79,80,83,91,92,94,98,99,101,108,174,193,199,201,203,220] | Subject/Object |

| Appearance coefficients of facial image and shape | Active Orientation Model (AOM) [348] | [50] | Subject/Object |

| Probability distribution of 365 common scenes | Places365-CNN [349] | [220] | Subject/Object |

| Features | Tools | Studies | Feature Category |

|---|---|---|---|

| Feature descriptors: local binary patterns, Edge Orientation Histogram, Local Phase Quantization, Histogram of Oriented Gradients (HOG) | OpenFace [269] | [47,48,53,195] | Subject/Object, Derived |

| Geometric features: displacement, mean shape of chosen points, difference between coordinates of specific landmarks, Euclidean distance, angle between landmarks, angular orientation | Manual computation, subject-specific active appearance model (AAM), AFAR toolbox [350] | [47,51,54,56,70,79,83,91,99,195,198,203] | Subject/Object, Derived |

| Motion features: movement across video frames, range and speed of displacements (facial landmarks, eye gaze direction, eye open and close, head pose, upper body points) | 3D convolutional layers on persons detected at frame-level, Motion history histogram (MHH) [351], feature dynamic history histogram (FDHH), residual network-based dynamic feature descriptor [75] | [52,53,68,75,147,193] | Subject/Object, Derived |

| Facial action units (FAUs), facial expressions | OpenFace [269], Face++ [352], FACET software [353], AU detection module of AFAR [350] | [25,79,91,99,194,196,198] | Subject/Object, Emotion-related |

| FAU features: occurrences, intensities, facial expressivity, peak expressivity, behavioral entropy | MHH, Modulation spectrum (MS), Fast Fourier transform (FFT) | [83,99,101,107,194,354] | Emotion-related, Derived |

| Emotion profiles (EPs) | SVM-based EP detector [355] | [101] | Emotion-related |

| Sentiment score | ResNeXt [356] | [186] | Emotion-related |

| Turbulence features capturing sudden erratic changes in behaviors | N/A | [192] | Derived |

| Deep visual representations from images or video frames | VGG-16 [261], VGG-Face [357], VGGNet [261], AlexNet [358], ResNet [308], ResNet-50 [359], ResNeXt [356], EfficientNet [360], InceptionResNetV2 [346], CNN, dense201 [195], self-supervised DINO (self-distillation with no labels) [361], GTCN [339], unsupervised Convolutional Auto-Encoder (CAE) (replaces autoencoder’s fully connected layer with CNN) [195] | [53,58,60,74,82,84,85,89,93,95,98,106,111,115,116,117,122,125,126,129,131,132,135,160,187,195,201,220] | Representations |

| High-level (frame-level) representations of low-level features (LLDs, facial landmarks, FAUs) | Stacked Denoising Autoencoders (SDAE) [306], DenseXception block-based CNN [221] (replaces DenseNet’s convolution layer with an Xception layer), CNN-LSTM, denoising autoencoder, LSTM-based multitask learning modality encoder [62], 3D convolutional layers, LSTM | [55,62,87,98,199,221] | Representations, Derived |

| Session-level representations from frame-level features/representations | Average of frame-level representations, Fisher vector (FV) encoding, improved FV coding [265], GMM, Temporal Attentive Pooling (TAP) [75] | [55,75,117,199] | Representations, Derived |

| Texts extracted from images | python-tesseract [362] | [25,126,128,140] | Textual |

| Image labels/tags | Deep CNN-based multi-label classifier [113], Contrastive Language Image Pre-training (CLIP) [363], Imagga [364] (CNN-based automatic tagging system) | [113,124,128,129] | Textual |

| Bag-of-Visual-Words (BoVW) features | Multi-scale Dense SIFT features (MSDF) [365] | [124,195] | Textual, Derived |

| Color distribution: cool, clear, and dominant colors, pixel intensities | Probabilistic Latent Semantic Analysis model [366] (assigns a color to each image pixel), cold color range [367], RGB histogram | [20,140,145,204,220] | Color-related |

| Brightness, saturation, hue, value, sharpness, contrast, correlation, energy, homogeneity | HSV (Hue, Saturation, Value) [368] color model | [20,106,109,113,145,204,220] | Color-related |

| Statistical descriptors for each HSV distribution: quantiles, mean, variance, skewness, kurtosis | N/A | [145,204] | Color-related, Derived |

| Pleasure, arousal, and dominance | Compute from brightness and saturation values [276] | [220] | Emotion-related, Derived |

| Number of pixels, width, height, whether the image was modified (indicated by EXIF metadata) | N/A | [204] | Image metadata |
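
The color-related features in the table above can be computed from raw pixels with Python's standard `colorsys` module. The sketch below averages hue, saturation, and value (HSV) over an image's pixels and derives pleasure, arousal, and dominance from mean brightness and saturation. Loading pixels from an image file is omitted, the function names are hypothetical, and HSV value is used as a stand-in for brightness. The linear coefficients are an assumption, taken from the color-emotion model commonly attributed to Valdez and Mehrabian; they should be checked against [276] before use.

```python
import colorsys
from statistics import mean, pvariance


def hsv_color_features(pixels):
    """Mean and variance of hue, saturation and value over RGB pixels in 0-255.

    Note: hue is circular, so a plain arithmetic mean is a simplification.
    """
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]
    features = {}
    for idx, name in enumerate(("hue", "saturation", "value")):
        channel = [p[idx] for p in hsv]
        features[f"{name}_mean"] = mean(channel)
        features[f"{name}_var"] = pvariance(channel)
    return features


def pleasure_arousal_dominance(brightness, saturation):
    """Linear color-emotion mapping; coefficients are an assumption (see above)."""
    pleasure = 0.69 * brightness + 0.22 * saturation
    arousal = -0.31 * brightness + 0.60 * saturation
    dominance = -0.76 * brightness + 0.32 * saturation
    return pleasure, arousal, dominance


# Tiny hypothetical "image": red, black, white, and mid-gray pixels
pixels = [(255, 0, 0), (0, 0, 0), (255, 255, 255), (128, 128, 128)]
feats = hsv_color_features(pixels)
# HSV value used as a stand-in for brightness (assumption)
print(pleasure_arousal_dominance(feats["value_mean"], feats["saturation_mean"]))
```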

| Features | Tools | Studies | Feature Category |

|---|---|---|---|

| Count of words: general, condition-specific (depressed, suicidal, eating disorder-related) keywords, emojis | N/A | [20,104,109,123,126,127,130,133,134,137,145,146,187,188,218,219] | Linguistic |

| Words referring to social processes (e.g., reference to family, friends, social affiliation), and psychological states (e.g., negative/positive emotions) | Linguistic Inquiry and Word Count (LIWC) [278], LIWC 2007 Spanish dictionary [369], Chinese Suicide Dictionary [370], Chinese LIWC [371], TextMind [372], Suite of Automatic Linguistic Analysis Tools (SALAT) [279]—Simple Natural Language Processing (SiNLP) [373] | [20,79,109,118,121,128,186,194,196,197,198,204,211,219,374] | Linguistic |

| Part-of-speech (POS) tags: adjectives, nouns, pronouns | Jieba [375], Natural Language Toolkit (NLTK) [280], TextBlob [376], spaCy, Penn Treebank [377], Empath [378] | [61,100,104,123,126,135,184,185,189,195,218,219] | Linguistic |

| Word count-related representations: Term Frequency–Inverse Document Frequency (TF-IDF), Bag of Words (BoW), n-grams, Term Frequency–Category Ratio (TF-CR) [379] | Word2Vec embeddings, language models | [115,116,118,124,128,130,140,143,144,148,185,186,188,198,217,374] | Linguistic, Representations |

| Readability metrics: Automated Readability Index (ARI), Simple Measure of Gobbledygook (SMOG), Coleman–Liau Index (CLI), Flesch reading ease, Gunning fog index, syllable count scores | Textstat [380] | [218,220] | Linguistic |

| Lexicon-based representations [381] | Depression domain lexicon [382], Chinese suicide dictionary [370] | [120,135,189] | Representations |

| Sentiment scores, valence, arousal, and dominance (VAD) ratings | NLTK [280], IBM Watson Tone Analyzer, Azure Text Analytics, Google NLP, NRC emotion lexicon [383], senti-py [384], Stanford NLP toolkit [281], Sentiment Analysis and Cognition Engine (SEANCE) [282], text SA API of Baidu Intelligent Cloud Platform [123], Valence Aware Dictionary and Sentiment Reasoner (VADER) [385], Chinese emotion lexicons DUTIR [386], Affective Norms for English Words ratings (ANEW) [283], EmoLex [387], SenticNet [388], Lasswell [389], AFINN SA tool [390], LabMT [391], text2emotion [392], BERT [266] | [20,54,61,86,110,115,118,119,121,123,126,127,128,130,132,133,137,143,144,145,146,148,184,185,186,188,194,196,197,198,218,219,374] | Sentiment-related |

| Happiness scores of emojis | Emoji sentiment scale [393] | [110] | Sentiment-related |

| Emotion transitions from love to joy, from love to anxiety/sorrow (inspired by [394]) | Chinese emotion lexicons DUTIR [386] | [187] | Sentiment-related |

| Word representations | Global vectors for word representation (GloVe) [395], Word2Vec [396], FastText [397], Embeddings from Language Models (ELMo) [398], BERT [266], ALBERT [297], XLNet [285], bidirectional gated recurrent unit (BiGRU) [341], itwiki (Italian Wikipedia2Vec model), Spanish model [399], EmoBERTa [298] (incorporates linguistic and emotional information), MiniLM [400] (supports multiple languages), GPT [401], TextCNN [402], Bi-LSTM [294] | [49,60,65,67,69,72,73,77,78,81,82,87,88,90,95,96,97,98,100,106,111,112,113,116,122,125,128,129,131,135,136,138,142,145,147,148,185,186,187,201,214,218,308] | Semantic-related, Representations |

| Sentence representations | Paragraph Vector (PV) [284], Universal Sentence Encoder [403], Sentence-BERT [404] | [52,59,70,71,89,102,103,174,199] | Semantic-related, Representations |

| Topic modeling, topic-level features | Scikit-learn’s Latent Dirichlet Allocation module [405], Biterm Topic Model [406] | [20,43,114,118,119,126,130,134,136,137,146,185,188,194,217,219] | Semantic-related |

| Description categories | IBM Watson’s Natural Language Understanding tool (https://cloud.ibm.com/apidocs/natural-language-understanding#text-analytics-features (accessed on 10 December 2023)) | [132] | Semantic-related |

| High-level representations from low-level features/representations (e.g., sentence-level from word-level, to capture sequential and/or significant information) | BiLSTM with an attention layer, stacked CNN and BiGRU with attention, summarization [119] using K-means clustering and BART [407], combination of LSTM with attention mechanism and CNN, BiGRU with attention | [73,95,97,119,136,145,159,201] | Representations, Derived |

| User-level representations from post-level representations | CNN-based triplet network [408] built on an existing Siamese network [409] (considers cosine similarities between the post-level representations of each individual and those of others in the same and different target groups), LSTM with attention mechanism | [128,138] | Representations, Derived |

| Session-level representations from segment-level representations | Fisher vector encoding | [199] | Representations, Derived |

| Subject-level average, median, standard deviation of sentiment scores, representations, POS counts | N/A | [110,185,186] | Derived |

| Subject-level representations in conversation | Graph attention network—vertex as question/answer pair incorporating LeakyReLU on neighbors with respective attention coefficients, edge between adjacent questions | [97] | Representations, Derived |
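
The TF-IDF representation listed in the table above weights a term by how often it occurs in a post, discounted by how many posts contain it. The sketch below is a minimal pure-Python version under two assumptions: whitespace tokenization and the smoothed IDF, idf = ln((1 + N) / (1 + df)) + 1, which is the default formula in scikit-learn's `TfidfVectorizer` (this sketch omits that class's default L2 normalization). The cited studies may use other TF-IDF variants, and the `tf_idf` helper is hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    TF is the raw term count; IDF uses the smoothed form
    ln((1 + N) / (1 + df)) + 1, where N is the number of documents and df
    the number of documents containing the term. No normalization is applied.
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n_docs) / (1 + df)) + 1 for term, df in doc_freq.items()}
    return [
        {term: count * idf[term] for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]


posts = ["I feel so tired", "so tired of everything", "great day with friends"]
vectors = tf_idf(posts)
# "friends" occurs in one post and "tired" in two, so "friends" gets the larger weight
print(round(vectors[2]["friends"], 3), round(vectors[0]["tired"], 3))  # prints 1.693 1.288
```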

| Features | Tools | Studies | Feature Category |

|---|---|---|---|

| Post distribution (original posts, posts with images, posts of specific emotions/sentiments): frequency, time | N/A | [109,112,122,123,126,130,134,137,142,145,188,218,219] | Post metadata |

| Username, followers, followings, status/bio description, profile header and background images, location, time zone | N/A | [109,115,118,122,123,126,130,134,137,142,145,171,188,218,219] | User metadata |

| Likes, comments, hashtags, mentions, retweets (Twitter), favourites (Twitter) | N/A | [115,126,135,137,142,171,185,189] | Social interactions, Post metadata |

| Stressful periods with stress level and category (study, work, family, interpersonal relation, romantic relation, or self-cognition) | Algorithm [410] applied on users’ posting behaviors | [187] | Post metadata, Derived |

| Aggregate posting time by 4 seasons, 7 days of the week, 4 epochs of the day (morning, afternoon, evening, midnight), or specific times (daytime, sleep time, weekdays, weekends) | N/A | [125,130,135,186,188,189,219] | Post metadata, Derived |

| Encoding of numerical features | Categorize into quartiles (low, below average, average, high) | [115] | Representations, Derived |

| Social interaction graph (node: user-level representations concatenated from post-level representations; edge: actions of following, mentioning, replying to comments, quoting) | node2vec [411], Ego-network [412] | [139,185] | Social interactions |

| Personalized graph (user-level node: user-level representation composed of property nodes; property nodes per individual: personal information, personality, mental health experience, post behavior, emotion expression, and social interactions; user–user edge: mutual following-follower relationship; user–property edge: user’s characteristics) | Attention mechanism weighing each property by its contribution to the individual’s mental health condition (user–property edge) and by emotional influence (user–user edge) | [187] | Social interactions |

| Retweet network (node: user-level representations; directed edge: a user’s tweets are retweeted by the user the edge points to) | Clustering-based neighborhood recognition: form communities of densely connected nodes, then expand communities using similarity with adjacent nodes | [141] | Representations |
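
The aggregated posting-time features in the table above (posting time by epoch of the day and by weekday versus weekend) can be computed by bucketing post timestamps. In the sketch below, the epoch boundaries, the `share_*` feature names, and the treatment of Saturday and Sunday as the weekend are assumptions; the cited studies may partition time differently.

```python
from collections import Counter
from datetime import datetime

# Hypothetical epoch boundaries as (name, start hour inclusive, end hour exclusive);
# the reviewed studies may partition the day differently.
EPOCHS = (("midnight", 0, 6), ("morning", 6, 12), ("afternoon", 12, 18), ("evening", 18, 24))


def epoch_of(ts):
    """Return the name of the day epoch containing timestamp ts."""
    for name, start, end in EPOCHS:
        if start <= ts.hour < end:
            return name
    raise ValueError(f"hour out of range: {ts.hour}")


def posting_time_features(timestamps):
    """Share of a user's posts per day epoch and on weekends (Saturday/Sunday)."""
    n_posts = len(timestamps)
    if n_posts == 0:
        return {}
    per_epoch = Counter(epoch_of(ts) for ts in timestamps)
    features = {f"share_{name}": per_epoch[name] / n_posts for name, _, _ in EPOCHS}
    # datetime.weekday(): Monday = 0, ..., Saturday = 5, Sunday = 6
    features["share_weekend"] = sum(ts.weekday() >= 5 for ts in timestamps) / n_posts
    return features


posts = [
    datetime(2023, 5, 1, 2, 15),   # Monday, midnight epoch
    datetime(2023, 5, 1, 23, 40),  # Monday, evening
    datetime(2023, 5, 3, 14, 0),   # Wednesday, afternoon
    datetime(2023, 5, 6, 3, 5),    # Saturday, midnight epoch
]
print(posting_time_features(posts))
```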

| Features | Tools | Studies | Feature Category |

|---|---|---|---|

| Phone calls and text messages: frequency, duration, entropy | N/A | [104,105,149,155,156,159,161,162,166,169,170,171,175,190,205,206,209,222,223] | Calls and messages |

| Phone unlocks: frequency, duration | Manual computation, RAPIDS [413], a tool for data pre-processing and biomarker computation | [99,149,150,155,156,158,160,161,162,166,167,171,176,190,205,206,208,212,374] | Phone interactions |

| Phone charge duration | N/A | [163] | Phone interactions |

| Running applications: type, frequency, duration of usage | N/A | [99,149,150,155,156,158,160,161,162,166,169,170,171,190,205,206,208,212,374] | Phone interactions |

| Activity states (e.g., walking, stationary, exercising, running, unknown): frequency, duration | Android activity recognition API, activity recognition model (LSTM-RNN [414], SVM), Google Activity Recognition Transition API (using gyroscope and accelerometer) | [150,152,154,160,163,169,170,176,177,190,205,206] | Physical mobility |

| Footsteps | API of mobile devices, Euclidean norm of accelerometer data | [154,169,170] | Physical mobility |

| Distance traveled, displacement from home, location variance and entropy, time spent at specific places, transitions | Manual computation, RAPIDS [413] | [99,150,151,153,154,155,158,160,161,162,165,166,175,176,177,200,205,206,208,209] | Physical mobility |

| Location cluster features: number of clusters, largest cluster as primary location, most and least visited clusters | DBSCAN clustering [415], Adaptive K-means clustering [416] | [150,151,153,154,160,165,176,177,205,208] | Physical mobility |

| Speed | Compute from GPS and/or accelerometer | [153,165,166,209] | Physical mobility |

| Intensity of action | Compute rotational momentum from GPS and gyroscope | [162] | Physical mobility |

| GPS sensor, calls and phone screen unlock features | RAPIDS [413], a tool for data pre-processing and biomarker computation | [158,164] | Physical mobility, Calls and messages, Phone interactions |

| WiFi association events (when a smartphone is associated or dissociated with a nearby access point at a location’s WiFi network) | N/A | [153] | Connectivity |

| Occurrences of unique Bluetooth addresses, most/least frequently detected devices | N/A | [99,151,155,156,175] | Connectivity |

| Surrounding sound: amplitude, conversations, human/non-human voices | N/A | [150,163,166,205,206,207,208,209] | Ambient environment |

| Surrounding illuminance: amplitude, mean, variance, standard deviation | N/A | [99,163,190,205,208,209] | Ambient environment |

| Silent and noise episodes: count, sum, minimum decibels | Detect via intermittent samples until noise state changes | [166] | Ambient environment |

| Sleep duration, wake and sleep onset | Infer from ambient light, audio amplitude, activity state, and screen on/off | [150,160,161,167,169,170,175,176,206] | Derived, Physical mobility |

| Keystroke features: count, transitions, time between two consecutive keystrokes | N/A | [166,202] | Phone interactions |

| Time between two successive touch interactions (tap, long tap, touch) | N/A | [166] | Phone interactions |

| Day-level features | Statistical functions (mean, median, mode, standard deviation, interquartile range) at the day level or by day of the week (weekdays, weekends) | [151,152,154,156,159,163,164,170,176,206] | Derived |

| Epoch-level features | Statistical functions over partitions of a day (morning, afternoon, evening, night) | [149,151,152,156,159,163,166,176,206] | Derived |

| Hour-level features | Statistical functions at each hour of the day | [208,209] | Derived |

| Week-level features | Statistical functions at the week-level, distance from weekly mean | [162,164] | Derived |

| Rhythm-related features: ultradian, circadian, and infradian rhythms, regularity index [417], periodicity based on time windows | Manual computation; Cosinor [418] (a rhythmic regression function) | [151,152,153,155,157,158,176,207] | Derived |

| Degrees of complexity and irregularity | Shannon entropy of sensor features | [166] | Derived |

| Statistical, temporal and spectral time series features | Time Series Feature Extraction Library (TSFEL) [419] | [104,105] | Derived |

| High-level cluster-based features: cluster labels, likelihood scores, distance scores, transitions | Gaussian mixture model (GMM) [420], partitioning around medoids (PAM) clustering model [421] | [208,209] | Derived |

| Network of social interactions and personal characteristics: node type corresponds to a modality/category (e.g., individual, personality traits, social status, physical health, well-being, mental health status) | Heterogeneous Information Network (HIN) [422] | [173] | Representations |

| Representations capturing important patterns across timestamps | Transformer encoder [295] | [179] | Representations |
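
The location variance and entropy features listed above are not defined within this appendix. The following is a minimal Python sketch of one formulation commonly used in smartphone sensing; the reviewed studies may define these features differently. It assumes equally spaced GPS samples (so that sample counts approximate time spent at each place) and cluster labels already produced by a clustering step such as DBSCAN, which is not shown. All function names are illustrative.

```python
import math
from collections import Counter
from statistics import pvariance
from typing import Hashable, Sequence


def location_variance(latitudes: Sequence[float], longitudes: Sequence[float]) -> float:
    """Log of the summed population variances of latitude and longitude.

    The small epsilon (an assumption) keeps the log finite when the person never moves.
    """
    return math.log(pvariance(latitudes) + pvariance(longitudes) + 1e-10)


def location_entropy(cluster_labels: Sequence[Hashable]) -> float:
    """Shannon entropy (natural log) of the share of samples in each location cluster.

    Assumes equally spaced GPS samples, so a cluster's sample share
    approximates the share of time spent there.
    """
    counts = Counter(cluster_labels)
    total = len(cluster_labels)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def normalized_location_entropy(cluster_labels: Sequence[Hashable]) -> float:
    """Entropy divided by log(number of visited clusters), giving a value in [0, 1]."""
    n_clusters = len(set(cluster_labels))
    if n_clusters < 2:
        return 0.0  # a single visited place carries no uncertainty
    return location_entropy(cluster_labels) / math.log(n_clusters)
```

Normalizing by the number of visited clusters makes the entropy comparable between participants who visit different numbers of places; the same entropy function could be applied to other discretized sensor streams.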

| Features | Tools | Studies | Feature Category |
|---|---|---|---|
| Duration and onset of sleep status (asleep, restless, awake, unknown), sleep efficiency, sleep debt | API of wristband | [149,151,155,156,164,171,180,181,182,191,374] | Physical mobility |
| Number of steps, active and sedentary bouts, floors climbed | API of wristband | [150,151,155,156,164,171,179,180,181,182,191,374] | Physical mobility |
| Heart rate (HR), galvanic skin response (GSR), skin temperature (ST), electrodermal activity (EDA) | API of wristband | [149,150,164,169,170,172,178,179,182,191] | Physiological |
| Outliers of systolic and diastolic periods: central tendency, spread, distribution shape, and symmetry values derived from blood volume pulse | N/A | [178] | Physiological, Derived |
| Motion features from accelerometer data: acceleration, motion | N/A | [149] | Physical mobility |
| Heart rate variability (HRV), rapid eye movement, wake after sleep onset, metabolic equivalent of task (MET) for physical activity | API of Oura ring | [158] | Physiological, Physical mobility |
| High-level features from HR, GSR, and ST signals | CNN-LSTM | [215] | Representations |
| Basal metabolic rate (BMR) calories | API of wristband | [179,180] | Physiological |

| Features | Tools | Studies | Feature Category |
|---|---|---|---|
| Gender, age, location | Sina microblog user account | [187] | Demographic |
| Gender, age, relationships, education levels | bBridge [423], a big data platform for social multimedia analytics | [20] | Demographic |
| Age, gender | Age and gender lexica [424]; M3-inference model [425], which performs multimodal analysis on profile images, usernames, and descriptions of social media profiles | [121,143,144] | Demographic |
| Big 5 personality scores | IBM’s Personality Insights [426], BERT-MLP model [427] on textual content | [57,121,130,143,144,188] | Personality |
| Proportion of words related to perfectionism and ruminative thinking in textual content (inspired by [287]) | Perfectionism- and ruminative-thinking-related lexicons | [187] | Personality |
| Interpersonal sensitivity: number of stressful periods associated with interpersonal relations | Algorithm [410] applied to users’ posting behaviors | [187] | Personality |

Appendix B. Existing Modality Fusion Techniques

| Category | Method | Tools | Studies |
|---|---|---|---|
| Feature level | Concatenate into a single representation | N/A | [67,84,85,89,96,97,105,132,142,143,145,146,166,170,179,197,199,200,201,217] |
| Score/Decision level | Sum-rule, product-rule, max-rule, AND and OR operations, or majority voting on modality-level scores | N/A | [48,51,56,77,87,98,126,173,193,198,201] |
| | Weighted average or sum of modality-level scores | N/A | [51,68,147,198,200] |
| | Average confidence scores from lower-level predictions | N/A | [121] |
| | Combine predictions of individual modalities as inputs to secondary ML models | SVM, decision tree, random forest, novel ML models | [48,52,56,64,71,72,74,103,122,155,193] |
| | Hierarchical score/decision-level fusion | Weighted voting fusion network [428] | [122,195] |
| | Summation of question-level scores from rules applied to modality-specific predictions | N/A | [88] |
| Model level | Map multiple features into a single vector | LSTM-based encoder–decoder network, LSTM-based neural network, BiLSTM, LSTM, fully connected layer, tensor fusion network | [46,59,75,80,86,95,187] |
| | Concatenate feature representations as a single input to learn high-level representations | Dense and fully connected layers with attention mechanisms, CNN, multi-head attention network, transformer [295], novel time-aware LSTM | [70,73,77,89,91,92,94,125,189,214] |
| | Learn shared representations from weighted modality-specific representations | Gated Multimodal Unit (GMU) [429], parallel attention model, attention layer, sparse MLP (mixes vertical and horizontal information via weight sharing and sparse connections), multimodal encoder–decoder, multimodal factorized bilinear pooling (combines the compact output features of multimodal low-rank bilinear pooling [430] with the robustness of multimodal compact bilinear pooling [431]), multi-head intermodal attention fusion, transformer [295], feed-forward network, low-rank multimodal fusion network [432] | [62,65,67,76,93,100,102,106,113,117,131,135,136,142,143,144,174,218,433] |
| | Learn joint sparse representations | Dictionary learning | [20] |
| | Learn and fuse outputs from different modality-specific parts at fixed time steps | Cell-coupled LSTM with L-skip fusion mechanism | [101] |
| | Learn cross-modality representations that incorporate interactions between modalities | LXMERT [434], transformer encoder with cross-attention layers (representations of one modality as the query and the other as key/value, and vice versa), memory fusion network [435] | [82,92,129] |
| | Horizontal and vertical kernels to capture patterns across different levels | CASER [309] | [170] |
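
The feature-level and fixed-rule score/decision-level fusion methods in the table above can be illustrated with a minimal Python sketch. It assumes that each modality-specific model already outputs one score per class (for example, class probabilities) and covers only concatenation, the sum, product, and max rules, weighted averaging, and majority voting; learned fusion (secondary ML models, attention, or the model-level techniques) is not shown. Function names are hypothetical.

```python
from collections import Counter
from math import prod
from typing import List, Optional, Sequence

# One list of class scores per modality, e.g. [[p_class0, p_class1], ...].
Scores = Sequence[Sequence[float]]


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest score (the first index wins on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def feature_level_fusion(feature_vectors: Sequence[Sequence[float]]) -> List[float]:
    """Feature-level (early) fusion: concatenate modality-specific feature vectors."""
    return [x for vec in feature_vectors for x in vec]


def score_level_fusion(
    modality_scores: Scores, rule: str = "sum", weights: Optional[Sequence[float]] = None
) -> List[float]:
    """Combine per-modality class scores with a fixed rule; returns fused class scores."""
    n_classes = len(modality_scores[0])
    # Column c holds every modality's score for class c.
    columns = [[scores[c] for scores in modality_scores] for c in range(n_classes)]
    if rule == "sum":
        return [sum(col) for col in columns]
    if rule == "product":
        return [prod(col) for col in columns]
    if rule == "max":
        return [max(col) for col in columns]
    if rule == "weighted":
        if weights is None or len(weights) != len(modality_scores):
            raise ValueError("weighted fusion needs one weight per modality")
        total = sum(weights)
        return [sum(w * s for w, s in zip(weights, col)) / total for col in columns]
    raise ValueError(f"unknown fusion rule: {rule}")


def majority_vote(modality_scores: Scores) -> int:
    """Decision-level fusion: each modality votes for its own top class."""
    votes = Counter(argmax(scores) for scores in modality_scores)
    # most_common orders equal counts by first occurrence, so ties go to the
    # class voted for by the earliest modality.
    return votes.most_common(1)[0][0]
```

For example, with per-modality scores `[[0.6, 0.4], [0.3, 0.7], [0.55, 0.45]]`, the sum, product, and max rules all select class 1, whereas majority voting selects class 0 because two of the three modalities individually prefer class 0. Score-level rules preserve each modality's confidence, while voting discards it, which is one reason the choice of rule is an empirical question.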

Appendix C. Existing Machine Learning Models

| Category | Machine Learning Models | Application Method | Studies |
|---|---|---|---|
| Supervised learning | Linear regression, logistic regression, least absolute shrinkage and selection operator (Lasso) regularized linear regression [436], ElasticNet regression [437], stochastic gradient descent (SGD) regression, Gaussian staircase model, partial least squares (PLS) [438] regression (useful for collinear features), generalized linear models | Learn the relationship between features and targets to predict continuous values (scores on assessment scales) or probabilities (corresponding to output classes) | [20,43,49,53,55,68,70,99,104,105,126,130,134,140,150,154,163,164,167,175,179,182,188,200,211,212,213,219,222,223] |
| | SVM | Find a hyperplane that best fits features (regression) or divides features into classes (classification); also used as the secondary model in score-level fusion | [47,50,79,99,104,105,115,121,130,134,140,148,162,163,169,178,179,188,198,210,219,223] |
| | One-class SVM [439] | Anomaly detection by treating outliers as points on the other side of the hyperplane | [165] |
| | Three-step hierarchical logistic regression | Incremental inclusion of three feature groups in conventional logistic regression | [181] |
| | Discriminant functions: naive Bayes, quadratic discriminant analysis (QDA), linear discriminant analysis (LDA), Gaussian naive Bayes | Determine class based on Bayesian probabilities, detect state changes | [12,99,104,140,148,152,163,222] |
| | Decision tree | Construct a tree that splits into leaf nodes based on feature values | [99,134,140,148,164,178] |
| | Mixed-effect classification and regression trees: generalized linear mixed-effects model (GLMM) trees [440] | Capture interactions and nonlinearity among features while accounting for longitudinal structure | [191] |
| Neural network | Fully connected (FC) layers, multilayer perceptron (MLP), CNN, LSTM, BiLSTM, GRU, temporal convolutional network (TCN) [441] (with dilation for long sequences), with activation functions such as sigmoid, softmax, ReLU, LeakyReLU, and GELU | Predict scores of assessment scales (regression) or probability distribution over classes (classification) | [60,78,80,84,85,86,87,88,90,91,92,93,94,96,98,105,111,113,117,131,133,135,136,142,143,144,146,162,163,167,168,170,172,174,178,179,190,197,199,201,218,219,221,223,308] |
| | DCNN-DNN (combination of a deep CNN and a DNN), GCNN-LSTM (combination of a gated convolutional neural network, which replaces a convolution block in a CNN with a gated convolution block, and an LSTM) | The latter network makes predictions based on high-level global features learned by the former | [52,308] |
| | Cross-domain DNN with feature adaptive transformation and combination strategy (DNN-FATC) | Enhance detection in the target domain by transferring information from a heterogeneous source domain | [109] |
| | Attention-based TCN | Classify features using relational classification attention [442] | [72] |
| | One-hot transformer (lower complexity than the original sine and cosine functions) | Apply one-hot encoding to features for classification | [72] |
| | Transformer [295] | Apply self-attention across post-level representations, with attention masking to mask missing information | [129] |
| | Transformer-based sequence classification models: BERT, RoBERTa [296], XLNet [285], Informer [443] (for long sequences) | Perform classification using custom pre-trained tokenizers augmented with special tokens | [121,179] |
| | Hierarchical attention network (HAN) [444] | Predict on user-level representations derived from stacked attention-based post-level representations, each made up of attention-based word-level representations | [128] |
| | LSTM-based encoder and decoder | Learn factorized joint distributions to generate modality-specific generative factors and multimodal discriminative factors, which are used to reconstruct unimodal inputs and predict labels, respectively | [82] |
| | GRU-RNN as baseline model with FC layers as personalized model | Train the baseline model on data from all samples and fine-tune the personalized model on individual samples | [161] |
| | CNN-based triplet network [408] | Incorporate representations of homogeneous users | [138] |
| | Stacked graph convolutional network | Perform classification on heterogeneous graphs by learning embeddings, sorting graph nodes, and performing graph comparisons | [139] |
| | GRU-D (introduces decay rates into a conventional GRU to control the decay mechanism) | Learn feature-specific hidden decay rates from inputs | [171] |
| Ensemble learning | Random forest (RF) [300], eXtreme Gradient Boosting (XGBoost), AdaBoost [301], Gradient Boosted Regression Tree [302] (GBDT) (less sensitive to outliers and more robust to overfitting) | Predict based on numerical input features | [51,99,104,105,114,126,130,134,140,148,151,155,157,160,163,164,167,169,178,179,182,183,188,203,204,206,212,219,222,223] |
| | RF | Secondary model that predicts from regression scores and binary outputs of individual modality predictions | [71,81] |
| | Balanced RF [445] (RF on imbalanced data) | Aggregate predictions of an ensemble trained on balanced down-sampled data | [209] |
| | XGBoost-based subject-specific hierarchical recall network | Deduce subject-level labels based on whether the output probability of XGBoost at a specific layer exceeds a predetermined threshold | [194] |
| | Stacked ensemble learning architecture | Obtain the first level of predictions from KNN, naive Bayes, Lasso regression, ridge regression, and SVM, then use them as features of a second-layer logistic regression | [123] |
| | Feature-stacking (a meta-learning approach) [303] | Use logistic regression as an L1 learner to combine predictions of weak L0 learners on different feature sets | [185] |
| | Greedy Ensembles of Weighted Extreme Learning Machines (GEWELMs), WELM [446] (weighted mapping for unbalanced classes), Kernel ELM | ELM [447] as a building block that maps inputs to class-based outputs via least squares regression | [63,127,192] |
| | Stacked ensemble classifier | Use MLP as meta learner to integrate outputs of CNN base learners | [126] |
| | Cost-sensitive boosting pruning trees: AdaBoost with pruned decision trees | Weighted pruning removes redundant leaves to increase generalization and robustness | [137] |
| | Weighted voting model | Weight predictions of baseline ML models (DT, naive Bayes, KNN, SVM, generalized linear models, GBDT) based on class probabilities and deduce the final outcome from the highest-weighted class | [140] |
| | Ensemble of SVM, DT, and naive Bayes | N/A | [89] |
| | Combination of personalized LSTM-based and RF models | Train a personalized LSTM on hourly time series data (from another sample most similar to the sample of concern based on demographic characteristics and baseline MH states), and RF on statistical and cluster-based features | [208] |
| Multi-task learning | CNN | Train jointly to produce two output branches: a regression score and a probability distribution for classification | [61,62] |
| | LSTM-RNN, attention-based LSTM subnetwork, MLP with shared and task-specific layers | Train for depression prediction with emotion recognition as the secondary task | [46,106,132] |
| | LSTM with Swish [448] activation function (speeds up training with the advantages of linear and ReLU activation), GRU with FC layers, DNN with multi-task loss function | Perform both regression and classification simultaneously | [74,102,118,141,193] |
| | Multi-task FC layers | Train jointly to predict severity level and discrete probability distribution | [97] |
| | Multi-output least-squares support vector regression machines (m-SVR) [304] | Map multivariate inputs to a multivariate output space to predict several tasks | [207] |
| | 2-layer MLP with shared and task-specific dense layers with dynamic weight tuning technique | Train to perform individual predictions for positive and control groups | [180] |
| | Bi-LSTM-based DNNs that feed auxiliary outputs into a DNN for the main output | Auxiliary outputs correspond to additional predictions that incorporate additional information | [176] |
| | DNN (FC layers with softmax activation) for auxiliary and main outputs | Train DNNs individually on different feature combinations as individual tasks to obtain auxiliary losses for the joint optimization function of the main output | [145] |
| | Multi-task neural network with shared LSTM layer and two task-specific LSTM layers | Train to predict male and female samples individually | [70] |
| Others | Semi-supervised learning: ladder network classifier [305] of stacked noisy encoder and denoising autoencoder [306] | Reconstruct input using outputs of the noisy encoder in the current layer and the decoder from the previous layer, combined with MLP (inspired by [449]) | [196] |
| | DMF [450], RESCAL [451], DEDICOM [452], HERec [453] | Apply a recommender system [307] approach to features modeled using HIN | [173] |
| | Graphlets [454], colored graphlets [455], DeepWalk [456], Metapath2vec++ [457] | Perform node classification on features modeled using HIN | [173] |
| | Combination of DBSCAN and K-Means | Density-based clustering | [78] |
| | Clustering-based: KNN | Deduce predicted class through majority voting of the K nearest neighbors | [140,163,166,178,212,223] |
| | Linear superposition of modality-specific features | Learn fitting parameters (between 0 and 1) that adjust the proportions of modality-specific features in the final outcome | [83] |
| | Two-staged prediction with outlier detection | Baseline ML model (LR, SVM, KNN, DT, GBDT, AdaBoost, RF, Gaussian naive Bayes, LDA, QDA, DNN, CNN) performs day-level predictions; a t-test detects outliers in first-stage outputs | [163] |
| | Label association mechanism | Apply to one-hot vectors of predictions from modality-specific DNNs | [189] |
| | Isolation Forest (ISOFOR) [458], Local Outlier Factor (LOF) [459], Connectivity-Based Outlier Factor (COF) [460] | Unsupervised anomaly detection | [166] |
| | Similarity and threshold relative to the model of normality (MoN) (from the average of deep representations of training instances in respective target groups) | Deduce predicted class based on higher similarity with corresponding MoN | [85] |
| | Federated learning based on DNN | Train global model on all data and fine-tune the last layer locally | [168] |
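
The model-of-normality (MoN) row above describes averaging deep representations per target group and classifying by similarity. A minimal Python sketch of that idea follows, under stated assumptions: representations are plain numeric vectors already produced by some encoder (not shown), cosine similarity is used as the similarity measure, and the threshold step mentioned in the table is omitted because its exact role is not specified here. This is an illustration, not the implementation in [85]; all names and the example groups are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: Sequence[Vector]) -> List[float]:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_models_of_normality(train: Dict[str, Sequence[Vector]]) -> Dict[str, List[float]]:
    """One MoN per target group: the average of that group's training representations."""
    return {group: mean_vector(reps) for group, reps in train.items()}


def predict_group(mons: Dict[str, List[float]], representation: Vector) -> str:
    """Assign the group whose MoN is most similar to the given representation."""
    return max(mons, key=lambda group: cosine_similarity(mons[group], representation))
```

Usage: `mons = build_models_of_normality({"control": [...], "case": [...]})`, then `predict_group(mons, new_representation)`. A threshold on the best similarity could be added to flag instances that resemble no group closely, if a given study requires it.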

References

- Institute for Health Metrics and Evaluation. Global Health Data Exchange (GHDx); Institute for Health Metrics and Evaluation: Seattle, WA, USA, 2019.

- World Health Organization. Mental Health and COVID-19: Early Evidence of the Pandemic’s Impact: Scientific Brief, 2 March 2022; Technical Report; World Health Organization: Geneva, Switzerland, 2022.

- Australian Bureau of Statistics (2020–2022). National Study of Mental Health and Wellbeing. 2022. Available online: https://www.abs.gov.au/statistics/health/mental-health/national-study-mental-health-and-wellbeing/latest-release (accessed on 10 December 2023).

- National Institute of Mental Health. Statistics of Mental Illness. 2021. Available online: https://www.nimh.nih.gov/health/statistics/mental-illness (accessed on 10 December 2023).

- Bloom, D.; Cafiero, E.; Jané-Llopis, E.; Abrahams-Gessel, S.; Bloom, L.; Fathima, S.; Feigl, A.; Gaziano, T.; Hamandi, A.; Mowafi, M.; et al. The Global Economic Burden of Noncommunicable Diseases; Technical Report; Harvard School of Public Health: Boston, MA, USA, 2011.

- World Health Organization. Mental Health and Substance Use. In Comprehensive Mental Health Action Plan 2013–2030; World Health Organization: Geneva, Switzerland, 2021.

- Borg, M. The Nature of Recovery as Lived in Everyday Life: Perspectives of Individuals Recovering from Severe Mental Health Problems. Ph.D. Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2007.

- Barge-Schaapveld, D.Q.; Nicolson, N.A.; Berkhof, J.; Devries, M.W. Quality of life in depression: Daily life determinants and variability. Psychiatry Res. 1999, 88, 173–189.

- Rapee, R.M.; Heimberg, R.G. A cognitive-behavioral model of anxiety in social phobia. Behav. Res. Ther. 1997, 35, 741–756.

- Stewart-Brown, S. Emotional wellbeing and its relation to health. BMJ 1998, 317, 1608–1609.

- Goldman, L.S.; Nielsen, N.H.; Champion, H.C.; American Medical Association Council on Scientific Affairs. Awareness, Diagnosis, and Treatment of Depression. J. Gen. Intern. Med. 1999, 14, 569–580.

- Grünerbl, A.; Muaremi, A.; Osmani, V.; Bahle, G.; Öhler, S.; Tröster, G.; Mayora, O.; Haring, C.; Lukowicz, P. Smartphone-Based Recognition of States and State Changes in Bipolar Disorder Patients. IEEE J. Biomed. Health Inform. 2015, 19, 140–148.

- Kakuma, R.; Minas, H.; Ginneken, N.; Dal Poz, M.; Desiraju, K.; Morris, J.; Saxena, S.; Scheffler, R. Human resources for mental health care: Current situation and strategies for action. Lancet 2011, 378, 1654–1663.

- Le Glaz, A.; Haralambous, Y.; Kim-Dufor, D.H.; Lenca, P.; Billot, R.; Ryan, T.C.; Marsh, J.; DeVylder, J.; Walter, M.; Berrouiguet, S.; et al. Machine Learning and Natural Language Processing in Mental Health: Systematic Review. J. Med. Internet Res. 2021, 23, e15708.

- Rahman, R.A.; Omar, K.; Mohd Noah, S.A.; Danuri, M.S.N.M.; Al-Garadi, M.A. Application of Machine Learning Methods in Mental Health Detection: A Systematic Review. IEEE Access 2020, 8, 183952–183964.

- Graham, S.; Depp, C.; Lee, E.E.; Nebeker, C.; Tu, X.; Kim, H.C.; Jeste, D.V. Artificial Intelligence for Mental Health and Mental Illnesses: An Overview. Curr. Psychiatry Rep. 2019, 21, 116.

- Thieme, A.; Belgrave, D.; Doherty, G. Machine Learning in Mental Health: A Systematic Review of the HCI Literature to Support the Development of Effective and Implementable ML Systems. ACM Trans. Comput. Hum. Interact. 2020, 27, 1–53.

- Javaid, M.; Haleem, A.; Pratap Singh, R.; Suman, R.; Rab, S. Significance of machine learning in healthcare: Features, pillars and applications. Int. J. Intell. Netw. 2022, 3, 58–73.

- Riaz Choudhry, F.; Vasudevan Mani, L.C.M.; Khan, T.M. Beliefs and perception about mental health issues: A meta-synthesis. Neuropsychiatr. Dis. Treat. 2016, 12, 2807–2818.

- Shen, G.; Jia, J.; Nie, L.; Feng, F.; Zhang, C.; Hu, T.; Chua, T.S.; Zhu, W. Depression Detection via Harvesting Social Media: A Multimodal Dictionary Learning Solution. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 3838–3844.

- Manickam, P.; Mariappan, S.A.; Murugesan, S.M.; Hansda, S.; Kaushik, A.; Shinde, R.; Thipperudraswamy, S.P. Artificial Intelligence (AI) and Internet of Medical Things (IoMT) Assisted Biomedical Systems for Intelligent Healthcare. Biosensors 2022, 12, 562.

- Skaik, R.; Inkpen, D. Using Social Media for Mental Health Surveillance: A Review. ACM Comput. Surv. 2020, 53, 1–31.

- Chen, X.; Genc, Y. A Systematic Review of Artificial Intelligence and Mental Health in the Context of Social Media. In Proceedings of the Artificial Intelligence in HCI, Virtual, 26 June–1 July 2022; pp. 353–368.

- Deshmukh, V.M.; Rajalakshmi, B.; Dash, S.; Kulkarni, P.; Gupta, S.K. Analysis and Characterization of Mental Health Conditions based on User Content on Social Media. In Proceedings of the 2022 International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI), Chennai, India, 28–29 January 2022; pp. 1–5.

- Yazdavar, A.H.; Mahdavinejad, M.S.; Bajaj, G.; Romine, W.; Sheth, A.; Monadjemi, A.H.; Thirunarayan, K.; Meddar, J.M.; Myers, A.; Pathak, J.; et al. Multimodal mental health analysis in social media. PLoS ONE 2020, 15, e0226248.

- Garcia Ceja, E.; Riegler, M.; Nordgreen, T.; Jakobsen, P.; Oedegaard, K.; Torresen, J. Mental health monitoring with multimodal sensing and machine learning: A survey. Pervasive Mob. Comput. 2018, 51, 1–26.

- Hickey, B.A.; Chalmers, T.; Newton, P.; Lin, C.T.; Sibbritt, D.; McLachlan, C.S.; Clifton-Bligh, R.; Morley, J.; Lal, S. Smart Devices and Wearable Technologies to Detect and Monitor Mental Health Conditions and Stress: A Systematic Review. Sensors 2021, 21, 3461.

- Woodward, K.; Kanjo, E.; Brown, D.J.; McGinnity, T.M.; Inkster, B.; Macintyre, D.J.; Tsanas, A. Beyond Mobile Apps: A Survey of Technologies for Mental Well-Being. IEEE Trans. Affect. Comput. 2022, 13, 1216–1235.

- Craik, K.H. The lived day of an individual: A person-environment perspective. Pers. Environ. Psychol. New Dir. Perspect. 2000, 2, 233–266.

- Harari, G.M.; Müller, S.R.; Aung, M.S.; Rentfrow, P.J. Smartphone sensing methods for studying behavior in everyday life. Curr. Opin. Behav. Sci. 2017, 18, 83–90.

- Stucki, R.A.; Urwyler, P.; Rampa, L.; Müri, R.; Mosimann, U.P.; Nef, T. A Web-Based Non-Intrusive Ambient System to Measure and Classify Activities of Daily Living. J. Med. Internet Res. 2014, 16, e175.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 105906.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: Explanation and elaboration. BMJ 2009, 339, W-65–W-94.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, 336–341.

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report; University of Durham: Durham, UK, 2007. [Google Scholar]

- Zhang, T.; Schoene, A.; Ji, S.; Ananiadou, S. Natural language processing applied to mental illness detection: A narrative review. npj Digit. Med. 2022, 5, 46. [Google Scholar] [CrossRef]

- Valstar, M.; Schuller, B.; Smith, K.; Eyben, F.; Jiang, B.; Bilakhia, S.; Schnieder, S.; Cowie, R.; Pantic, M. AVEC 2013: The Continuous Audio/Visual Emotion and Depression Recognition Challenge. In Proceedings of the 3rd ACM International Workshop on Audio/Visual Emotion Challenge (AVEC ’13), Barcelona, Spain, 21 October 2013; pp. 3–10. [Google Scholar] [CrossRef]

- Valstar, M.; Schuller, B.; Smith, K.; Almaev, T.; Eyben, F.; Krajewski, J.; Cowie, R.; Pantic, M. AVEC 2014: 3D Dimensional Affect and Depression Recognition Challenge. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge (AVEC ’14), Orlando, FL, USA, 7 November 2014; pp. 3–10. [Google Scholar] [CrossRef]

- Sawyer, S.M.; Azzopardi, P.S.; Wickremarathne, D.; Patton, G.C. The age of adolescence. Lancet Child Adolesc. Health 2018, 2, 223–228. [Google Scholar] [CrossRef]

- Semrud-Clikeman, M.; Goldenring Fine, J. Pediatric versus adult psychopathology: Differences in neurological and clinical presentations. In The Neuropsychology of Psychopathology; Contemporary Neuropsychology; Springer: New York, NY, USA, 2013; pp. 11–27. [Google Scholar]

- Cobham, V.E.; McDermott, B.; Haslam, D.; Sanders, M.R. The Role of Parents, Parenting and the Family Environment in Children’s Post-Disaster Mental Health. Curr. Psychiatry Rep. 2016, 18, 53. [Google Scholar] [CrossRef]

- Tuma, J.M. Mental health services for children: The state of the art. Am. Psychol. 1989, 44, 188–199. [Google Scholar] [CrossRef]

- Gong, Y.; Poellabauer, C. Topic Modeling Based Multi-Modal Depression Detection. In Proceedings of the 7th Annual Workshop on Audio/Visual Emotion Challenge (AVEC ’17), Mountain View, CA, USA, 23 October 2017; pp. 69–76. [Google Scholar] [CrossRef]

- Van Praag, H. Can stress cause depression? Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2004, 28, 891–907. [Google Scholar] [CrossRef]

- Power, M.J.; Tarsia, M. Basic and complex emotions in depression and anxiety. Clin. Psychol. Psychother. 2007, 14, 19–31. [Google Scholar] [CrossRef]

- Chao, L.; Tao, J.; Yang, M.; Li, Y. Multi task sequence learning for depression scale prediction from video. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 526–531. [Google Scholar] [CrossRef]

- Yang, L.; Jiang, D.; He, L.; Pei, E.; Oveneke, M.C.; Sahli, H. Decision Tree Based Depression Classification from Audio Video and Language Information. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge (AVEC ’16), Amsterdam, The Netherlands, 16 October 2016; pp. 89–96. [Google Scholar] [CrossRef]

- Pampouchidou, A.; Simantiraki, O.; Fazlollahi, A.; Pediaditis, M.; Manousos, D.; Roniotis, A.; Giannakakis, G.; Meriaudeau, F.; Simos, P.; Marias, K.; et al. Depression Assessment by Fusing High and Low Level Features from Audio, Video, and Text. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge (AVEC ’16), Amsterdam, The Netherlands, 16 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 27–34. [Google Scholar] [CrossRef]

- Williamson, J.R.; Godoy, E.; Cha, M.; Schwarzentruber, A.; Khorrami, P.; Gwon, Y.; Kung, H.T.; Dagli, C.; Quatieri, T.F. Detecting Depression Using Vocal, Facial and Semantic Communication Cues. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge (AVEC ’16), Amsterdam, The Netherlands, 16 October 2016; pp. 11–18. [Google Scholar] [CrossRef]

- Smailis, C.; Sarafianos, N.; Giannakopoulos, T.; Perantonis, S. Fusing Active Orientation Models and Mid-Term Audio Features for Automatic Depression Estimation. In Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (PETRA ’16), Corfu Island, Greece, 29 June–1 July 2016; Association for Computing Machinery: New York, NY, USA, 2016. [Google Scholar] [CrossRef]

- Nasir, M.; Jati, A.; Shivakumar, P.G.; Nallan Chakravarthula, S.; Georgiou, P. Multimodal and Multiresolution Depression Detection from Speech and Facial Landmark Features. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge (AVEC ’16), Amsterdam, The Netherlands, 16 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 43–50. [Google Scholar] [CrossRef]

- Yang, L.; Jiang, D.; Xia, X.; Pei, E.; Oveneke, M.C.; Sahli, H. Multimodal Measurement of Depression Using Deep Learning Models. In Proceedings of the 7th Annual Workshop on Audio/Visual Emotion Challenge (AVEC ’17), Mountain View, CA, USA, 23–27 October 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 53–59. [Google Scholar] [CrossRef]

- Jan, A.; Meng, H.; Gaus, Y.F.B.A.; Zhang, F. Artificial Intelligent System for Automatic Depression Level Analysis Through Visual and Vocal Expressions. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 668–680. [Google Scholar] [CrossRef]

- Samareh, A.; Jin, Y.; Wang, Z.; Chang, X.; Huang, S. Detect depression from communication: How computer vision, signal processing, and sentiment analysis join forces. IISE Trans. Healthc. Syst. Eng. 2018, 8, 196–208. [Google Scholar] [CrossRef]

- Dibeklioğlu, H.; Hammal, Z.; Cohn, J.F. Dynamic Multimodal Measurement of Depression Severity Using Deep Autoencoding. IEEE J. Biomed. Health Inform. 2018, 22, 525–536. [Google Scholar] [CrossRef]

- Alghowinem, S.; Goecke, R.; Wagner, M.; Epps, J.; Hyett, M.; Parker, G.; Breakspear, M. Multimodal Depression Detection: Fusion Analysis of Paralinguistic, Head Pose and Eye Gaze Behaviors. IEEE Trans. Affect. Comput. 2018, 9, 478–490. [Google Scholar] [CrossRef]

- Kim, J.Y.; Kim, G.Y.; Yacef, K. Detecting Depression in Dyadic Conversations with Multimodal Narratives and Visualizations. In Proceedings of the AI 2019: Advances in Artificial Intelligence, Adelaide, Australia, 2–5 December 2019; Liu, J., Bailey, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 303–314. [Google Scholar]

- Victor, E.; Aghajan, Z.M.; Sewart, A.R.; Christian, R. Detecting depression using a framework combining deep multimodal neural networks with a purpose-built automated evaluation. Psychol. Assess. 2019, 31, 1019–1027. [Google Scholar] [CrossRef]

- Ray, A.; Kumar, S.; Reddy, R.; Mukherjee, P.; Garg, R. Multi-Level Attention Network Using Text, Audio and Video for Depression Prediction. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop (AVEC ’19), Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 81–88.

- Rodrigues Makiuchi, M.; Warnita, T.; Uto, K.; Shinoda, K. Multimodal Fusion of BERT-CNN and Gated CNN Representations for Depression Detection. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop (AVEC ’19), Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 55–63.

- Fan, W.; He, Z.; Xing, X.; Cai, B.; Lu, W. Multi-Modality Depression Detection via Multi-Scale Temporal Dilated CNNs. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop (AVEC ’19), Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 73–80.

- Qureshi, S.A.; Saha, S.; Hasanuzzaman, M.; Dias, G. Multitask Representation Learning for Multimodal Estimation of Depression Level. IEEE Intell. Syst. 2019, 34, 45–52.

- Kaya, H.; Fedotov, D.; Dresvyanskiy, D.; Doyran, M.; Mamontov, D.; Markitantov, M.; Akdag Salah, A.A.; Kavcar, E.; Karpov, A.; Salah, A.A. Predicting Depression and Emotions in the Cross-Roads of Cultures, Para-Linguistics, and Non-Linguistics. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop (AVEC ’19), Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 27–35.

- Muszynski, M.; Zelazny, J.; Girard, J.M.; Morency, L.P. Depression Severity Assessment for Adolescents at High Risk of Mental Disorders. In Proceedings of the 2020 International Conference on Multimodal Interaction (ICMI ’20), Virtual, 25–29 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 70–78.

- Aloshban, N.; Esposito, A.; Vinciarelli, A. Detecting Depression in Less Than 10 Seconds: Impact of Speaking Time on Depression Detection Sensitivity. In Proceedings of the 2020 International Conference on Multimodal Interaction (ICMI ’20), Virtual, 25–29 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 79–87.

- Liu, Z.; Wang, D.; Ding, Z.; Chen, Q. A Novel Bimodal Fusion-based Model for Depression Recognition. In Proceedings of the 2020 IEEE International Conference on E-health Networking, Application & Services (HEALTHCOM), Shenzhen, China, 1–2 March 2021; pp. 1–4.

- Toto, E.; Tlachac, M.; Rundensteiner, E.A. AudiBERT: A Deep Transfer Learning Multimodal Classification Framework for Depression Screening. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management (CIKM ’21), Queensland, Australia, 1–5 November 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 4145–4154.

- Chordia, A.; Kale, M.; Mayee, M.; Yadav, P.; Itkar, S. Automatic Depression Level Analysis Using Audiovisual Modality. In Smart Computing Techniques and Applications: Proceedings of the Fourth International Conference on Smart Computing and Informatics; Satapathy, S.C., Bhateja, V., Favorskaya, M.N., Adilakshmi, T., Eds.; Springer: Singapore, 2021; pp. 425–439.

- Muzammel, M.; Salam, H.; Othmani, A. End-to-end multimodal clinical depression recognition using deep neural networks: A comparative analysis. Comput. Methods Programs Biomed. 2021, 211, 106433.

- Qureshi, S.A.; Dias, G.; Saha, S.; Hasanuzzaman, M. Gender-Aware Estimation of Depression Severity Level in a Multimodal Setting. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Yang, L.; Jiang, D.; Sahli, H. Integrating Deep and Shallow Models for Multi-Modal Depression Analysis—Hybrid Architectures. IEEE Trans. Affect. Comput. 2021, 12, 239–253.

- Ye, J.; Yu, Y.; Wang, Q.; Li, W.; Liang, H.; Zheng, Y.; Fu, G. Multi-modal depression detection based on emotional audio and evaluation text. J. Affect. Disord. 2021, 295, 904–913.

- Shen, Y.; Yang, H.; Lin, L. Automatic Depression Detection: An Emotional Audio-Textual Corpus and a GRU/BiLSTM-Based Model. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 1–3 May 2022; pp. 6247–6251.

- Liu, J.; Huang, Y.; Chai, S.; Sun, H.; Huang, X.; Lin, L.; Chen, Y.W. Computer-Aided Detection of Depressive Severity Using Multimodal Behavioral Data. In Handbook of Artificial Intelligence in Healthcare: Advances and Applications; Springer: Cham, Switzerland, 2022; Volume 1, pp. 353–371.

- Uddin, M.A.; Joolee, J.B.; Sohn, K.A. Deep Multi-Modal Network Based Automated Depression Severity Estimation. IEEE Trans. Affect. Comput. 2022, 14, 2153–2167.

- Cao, Y.; Hao, Y.; Li, B.; Xue, J. Depression prediction based on BiAttention-GRU. J. Ambient. Intell. Humaniz. Comput. 2022, 13, 5269–5277.

- Mao, K.; Zhang, W.; Wang, D.B.; Li, A.; Jiao, R.; Zhu, Y.; Wu, B.; Zheng, T.; Qian, L.; Lyu, W.; et al. Prediction of Depression Severity Based on the Prosodic and Semantic Features with Bidirectional LSTM and Time Distributed CNN. IEEE Trans. Affect. Comput. 2022, 14, 2251–2265.

- Aloshban, N.; Esposito, A.; Vinciarelli, A. What You Say or How You Say It? Depression Detection Through Joint Modeling of Linguistic and Acoustic Aspects of Speech. Cogn. Comput. 2021, 14, 1585–1598.

- Bilalpur, M.; Hinduja, S.; Cariola, L.A.; Sheeber, L.B.; Allen, N.; Jeni, L.A.; Morency, L.P.; Cohn, J.F. Multimodal Feature Selection for Detecting Mothers’ Depression in Dyadic Interactions with their Adolescent Offspring. In Proceedings of the 2023 IEEE 17th International Conference on Automatic Face and Gesture Recognition (FG), Waikoloa Beach, HI, USA, 5–8 January 2023; pp. 1–8.

- Flores, R.; Tlachac, M.; Toto, E.; Rundensteiner, E. AudiFace: Multimodal Deep Learning for Depression Screening. In Proceedings of the 7th Machine Learning for Healthcare Conference (PMLR), Durham, NC, USA, 5–6 August 2022; Proceedings of Machine Learning Research; Lipton, Z., Ranganath, R., Sendak, M., Sjoding, M., Yeung, S., Eds.; 2022; Volume 182, pp. 609–630.

- Ghadiri, N.; Samani, R.; Shahrokh, F. Integration of Text and Graph-Based Features for Depression Detection Using Visibility Graph. In Proceedings of the 22nd International Conference on Intelligent Systems Design and Applications (ISDA 2022), Virtual, 12–14 December 2022; Abraham, A., Pllana, S., Casalino, G., Ma, K., Bajaj, A., Eds.; Springer: Cham, Switzerland, 2023; pp. 332–341.

- Huang, G.; Shen, W.; Lu, H.; Hu, F.; Li, J.; Liu, H. Multimodal Depression Detection based on Factorized Representation. In Proceedings of the 2022 International Conference on High Performance Big Data and Intelligent Systems (HDIS), Tianjin, China, 10–11 December 2022; pp. 190–196.

- Liu, D.; Liu, B.; Lin, T.; Liu, G.; Yang, G.; Qi, D.; Qiu, Y.; Lu, Y.; Yuan, Q.; Shuai, S.C.; et al. Measuring depression severity based on facial expression and body movement using deep convolutional neural network. Front. Psychiatry 2022, 13, 1017064.

- Othmani, A.; Zeghina, A.O. A multimodal computer-aided diagnostic system for depression relapse prediction using audiovisual cues: A proof of concept. Healthc. Anal. 2022, 2, 100090.

- Othmani, A.; Zeghina, A.O.; Muzammel, M. A Model of Normality Inspired Deep Learning Framework for Depression Relapse Prediction Using Audiovisual Data. Comput. Methods Programs Biomed. 2022, 226, 107132.

- Park, J.; Moon, N. Design and Implementation of Attention Depression Detection Model Based on Multimodal Analysis. Sustainability 2022, 14, 3569.

- Prabhu, S.; Mittal, H.; Varagani, R.; Jha, S.; Singh, S. Harnessing emotions for depression detection. Pattern Anal. Appl. 2022, 25, 537–547.

- Sudhan, H.V.M.; Kumar, S.S. Multimodal Depression Severity Detection Using Deep Neural Networks and Depression Assessment Scale. In Proceedings of the International Conference on Computational Intelligence and Data Engineering, Vijayawada, India, 12–13 August 2022; Chaki, N., Devarakonda, N., Cortesi, A., Seetha, H., Eds.; Springer: Singapore, 2022; pp. 361–375.

- T J, S.J.; Jacob, I.J.; Mandava, A.K. D-ResNet-PVKELM: Deep neural network and paragraph vector based kernel extreme machine learning model for multimodal depression analysis. Multimed. Tools Appl. 2023, 82, 25973–26004.

- Vandana; Marriwala, N.; Chaudhary, D. A hybrid model for depression detection using deep learning. Meas. Sens. 2023, 25, 100587.

- Gu, Y.; Zhang, C.; Ma, F.; Jia, X.; Ni, S. AI-Driven Depression Detection Algorithms from Visual and Audio Cues. In Proceedings of the 2023 3rd International Conference on Frontiers of Electronics, Information and Computation Technologies (ICFEICT), Yangzhou, China, 26–29 May 2023; pp. 468–475.

- Yoon, J.; Kang, C.; Kim, S.; Han, J. D-vlog: Multimodal Vlog Dataset for Depression Detection. Proc. AAAI Conf. Artif. Intell. 2022, 36, 12226–12234.

- Zhou, L.; Liu, Z.; Shangguan, Z.; Yuan, X.; Li, Y.; Hu, B. TAMFN: Time-Aware Attention Multimodal Fusion Network for Depression Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 669–679.

- Zhou, L.; Liu, Z.; Yuan, X.; Shangguan, Z.; Li, Y.; Hu, B. CAIINET: Neural network based on contextual attention and information interaction mechanism for depression detection. Digit. Signal Process. 2023, 137, 103986.

- Zhu, Q.; J.X.; Peng, L. College students’ mental health evaluation model based on tensor fusion network with multimodal data during the COVID-19 pandemic. Biotechnol. Genet. Eng. Rev. 2023, 1–15.

- Lam, G.; Huang, D.; Lin, W. Context-aware Deep Learning for Multi-modal Depression Detection. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3946–3950.

- Niu, M.; Chen, K.; Chen, Q.; Yang, L. HCAG: A Hierarchical Context-Aware Graph Attention Model for Depression Detection. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 4235–4239.

- Ma, W.; Qiu, S.; Miao, J.; Li, M.; Tian, Z.; Zhang, B.; Li, W.; Feng, R.; Wang, C.; Cui, Y.; et al. Detecting depression tendency based on deep learning and multi-sources data. Biomed. Signal Process. Control 2023, 86, 105226.

- Thati, R.P.; Dhadwal, A.S.; Kumar, P.; P, S. A novel multi-modal depression detection approach based on mobile crowd sensing and task-based mechanisms. Multimed. Tools Appl. 2023, 82, 4787–4820.

- Tlachac, M.; Flores, R.; Reisch, M.; Kayastha, R.; Taurich, N.; Melican, V.; Bruneau, C.; Caouette, H.; Lovering, J.; Toto, E.; et al. StudentSADD: Rapid Mobile Depression and Suicidal Ideation Screening of College Students during the Coronavirus Pandemic. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 1–32.

- Su, M.H.; Wu, C.H.; Huang, K.Y.; Yang, T.H. Cell-Coupled Long Short-Term Memory With L-Skip Fusion Mechanism for Mood Disorder Detection Through Elicited Audiovisual Features. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 124–135.

- Zhang, Z.; Lin, W.; Liu, M.; Mahmoud, M. Multimodal Deep Learning Framework for Mental Disorder Recognition. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 344–350.

- Ceccarelli, F.; Mahmoud, M. Multimodal temporal machine learning for Bipolar Disorder and Depression Recognition. Pattern Anal. Appl. 2022, 25, 493–504.

- Tlachac, M.; Toto, E.; Lovering, J.; Kayastha, R.; Taurich, N.; Rundensteiner, E. EMU: Early Mental Health Uncovering Framework and Dataset. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Virtual, 13–16 December 2021; pp. 1311–1318.

- Tlachac, M.; Flores, R.; Reisch, M.; Houskeeper, K.; Rundensteiner, E.A. DepreST-CAT: Retrospective Smartphone Call and Text Logs Collected during the COVID-19 Pandemic to Screen for Mental Illnesses. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 1–32.

- Zhang, Y.; Li, X.; Rong, L.; Tiwari, P. Multi-Task Learning for Jointly Detecting Depression and Emotion. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 3142–3149.