Sensing the Intentions to Speak in VR Group Discussions

Abstract

1. Introduction

- We identified an asymmetry in how participants convey speaking intention in VR. They perceive expressing their own speaking intentions as relatively straightforward, but find perceiving others’ speaking intentions challenging.

- We observed temporal patterns around speaking intentions: the interval between the start of a speaking intention and the actual start of speaking is typically short, often lasting only around 1 s.

- We show that our neural network-based approaches are effective in detecting speaking intentions using only sensor data from off-the-shelf VR headsets and controllers. We also show that incorporating relational features between participants yields only a minimal improvement in results.
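The temporal pattern described in the second contribution can be illustrated with a small calculation. The following is a minimal sketch, not the authors' analysis code: the annotation pairs are made-up values, and the names `annotations`, `lead_times`, and `share_within` are hypothetical helpers introduced only for illustration.

```python
from statistics import mean, median

# Hypothetical annotations: (intention_start_s, speech_start_s) pairs,
# in seconds from the start of a session. These values are made up.
annotations = [(12.4, 13.3), (40.0, 41.2), (75.5, 76.0), (101.0, 103.5)]


def lead_times(pairs):
    """Return the delay from intention onset to speech onset for each pair."""
    return [speech - intention for intention, speech in pairs]


def share_within(delays, threshold_s):
    """Fraction of delays that are no longer than threshold_s seconds."""
    return sum(d <= threshold_s for d in delays) / len(delays)


delays = lead_times(annotations)
print(f"mean lead time:   {mean(delays):.2f} s")
print(f"median lead time: {median(delays):.2f} s")
print(f"share <= 1.5 s:   {share_within(delays, 1.5):.2f}")
```

Reporting the median alongside the mean is a deliberate choice in this sketch, since a few long delays can pull the mean well above the typical value.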

2. Related Works

3. Experiment

3.1. Data Collection

3.1.1. Sensor Data

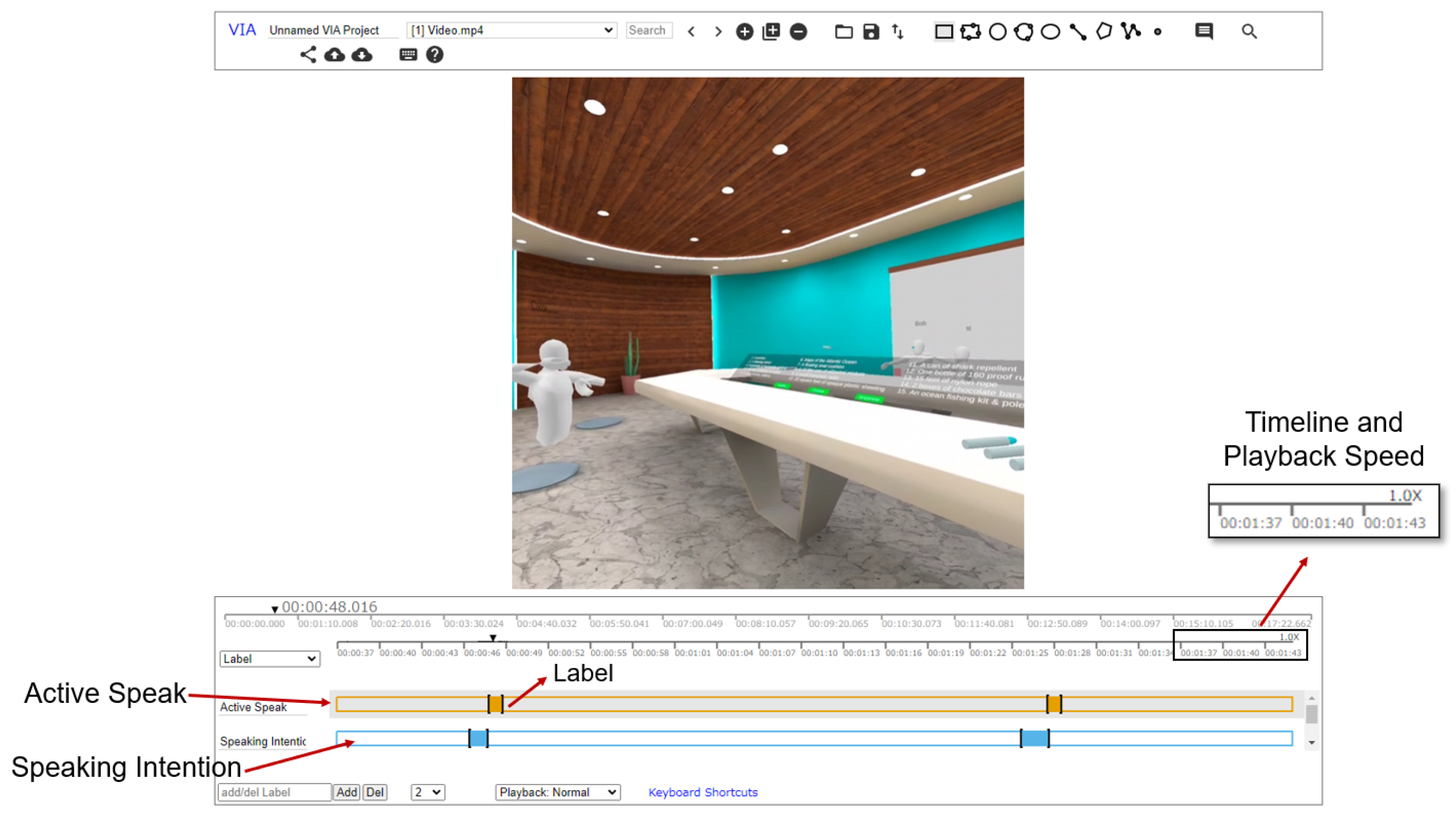

3.1.2. Participant Annotated Labels

3.2. Two Truths and a Lie

3.3. Participants

3.4. Experiment Procedure

3.5. Data Processing

4. Analysis of Experimental Data

4.1. Questionnaire Result

4.2. Participant-Annotated Labeling Results

5. Detection of Speaking Intention

5.1. Data Sampling Strategy

5.2. Neural Network Model

5.3. Model Performance

6. Discussion

6.1. Speaking Intention in VR

6.2. Assistance Based on Speaking Intention Detection

6.2.1. Real Time

6.2.2. Non-Real Time

6.3. Speaking Intention Detection Based on Sensor Data

6.4. Limitation and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Detail of the Models

| (a) EEG Net | ||

| EEG Net Layer | Input Shape | |

| Conv2D | ||

| BN | ||

| DepConv | ||

| BN + ELU | ||

| AvgPooling | ||

| Dropout | ||

| SepConv | ||

| BN + ELU | ||

| AvgPooling | ||

| Dropout | ||

| Flatten | ||

| Dense | 48 | |

| (b) MLSTM-FCN | ||

| MLSTM-FCN Layer | Input Shape | |

| Conv1D | ||

| BN + ReLU | ||

| SE-Block | ||

| Conv1D | ||

| BN + ReLU | ||

| SE-Block | ||

| Conv1D | ||

| BN + ReLU | ||

| GlobalAvgPool | ||

| DimShuffle | ||

| LSTM | ||

| Dropout | 8 | |

| Concate | 128, 8 | |

| Dense | 136 | |
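The last rows of table (b) can be read as a piece of shape bookkeeping: two feature vectors of size 128 and 8 are concatenated, and the final dense layer receives 136 inputs. The sketch below illustrates this in plain Python. It assumes, following the usual MLSTM-FCN design, that the 128 features come from global average pooling over the convolutional branch and the 8 features from the LSTM branch; the sequence length of 10 and all values are hypothetical, and `global_avg_pool` is an illustrative helper rather than a library function.

```python
def global_avg_pool(feature_map):
    """Average every channel over time: (steps, channels) -> (channels,)."""
    steps = len(feature_map)
    channels = len(feature_map[0])
    return [sum(row[c] for row in feature_map) / steps for c in range(channels)]


# Hypothetical convolutional output: 10 time steps, 128 channels.
conv_features = [[float(t + c) for c in range(128)] for t in range(10)]

# Hypothetical LSTM output after dropout: 8 hidden units.
lstm_state = [0.0] * 8

pooled = global_avg_pool(conv_features)  # 128 values
merged = pooled + lstm_state             # list concatenation: 128 + 8 values

print(len(pooled), len(lstm_state), len(merged))
```

The concatenated length matches the input size of 136 listed for the dense layer.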

| Inception Time Layer | Input Shape | Connected to | |

|---|---|---|---|

| Conv1D | InputLayer | ||

| MaxPooling1D | InputLayer | ||

| Conv1D1 | Conv1D | ||

| Conv1D2 | Conv1D | ||

| Conv1D3 | Conv1D | ||

| Conv1D4 | MaxPooling1D | ||

| Concate | Conv1D1, Conv1D2, Conv1D3, Conv1D4 | ||

| BN | Concate | ||

| ReLU | BN | ||

| Inception Module | |||

| BN | Concate | ||

| ReLU | BN | ||

| Inception Module | |||

| Conv1D | InputLayer | ||

| Concate | |||

| Conv1D | |||

| ReLU | |||

| Add | , | ||

| Add | |||

| Inception Module | |||

| BN | Concate | ||

| ReLU | BN | ||

| Inception Module | |||

| BN | Concate | ||

| ReLU | BN | ||

| Inception Module | |||

| Conv1D | |||

| Concate | |||

| Conv1D | |||

| ReLU | |||

| Add | ReLU, | ||

| ReLU | Add | ||

| GlobalAvgPool1D | |||

| Dense | 128 | ||


| Statistics | Mean | SD | Median | Max | Min |
|---|---|---|---|---|---|
| Duration | 3.08 | 4.47 | 1.82 | 72.03 | 0.17 |
| Interval | 13.06 | 24.02 | 4.62 | 229.15 | 0.24 |

| No. | Question | Type |
|---|---|---|
| Q1 | Do you think it is easy to express your speaking intentions in the virtual environment? | 5-Point Likert Scale |
| Q2 | Do you think it is easy to perceive the speaking intentions of others in the virtual environment? | 5-Point Likert Scale |
| Q3 | Do you think that perceiving and expressing speaking intentions more easily would be beneficial for group discussions? | 5-Point Likert Scale |
| Q4 | Have you ever had situations during the discussion where you wanted to say something but finally didn’t? If yes, please write down the reason. | Open-Ended Question |

| Theme | Code | Count |
|---|---|---|
| Timing Reasons | Difficulty interrupting others | 4 |
| | Taking too long to organize their thoughts | 3 |
| | Topic has changed | 2 |
| Content Reasons | The content is irrelevant to the current topic | 4 |
| | Someone else already mentioned the same thing | 3 |
| Social Etiquette | Worried about offending others | 2 |
| | Worried about talking too much | 1 |
| Experimental Setup | Don’t want to increase the workload of labeling | 2 |
| | Experiment time is limited | 1 |
| None | None (No instance of giving up) | 5 |

| | Sensor Data + Relational Features | | | | Only Sensor Data | | | |
|---|---|---|---|---|---|---|---|---|
| Metrics | Acc. | Prec. | Recall | F1 | Acc. | Prec. | Recall | F1 |
| Baseline | 0.4879 | 0.5221 | 0.4956 | 0.5085 | 0.4879 | 0.5221 | 0.4956 | 0.5085 |
| EEG-Net | 0.6279 | 0.6738 | 0.6099 | 0.6403 | 0.6164 | 0.6156 | 0.6312 | 0.6233 |
| MLSTM-FCN | 0.6207 | 0.6466 | 0.7352 | 0.6881 | 0.6115 | 0.6261 | 0.7345 | 0.6760 |
| InceptionTime | 0.5654 | 0.6058 | 0.5621 | 0.5831 | 0.5653 | 0.5872 | 0.5966 | 0.5919 |
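The F1 column in the table above is consistent with the standard definition of F1 as the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short check below recomputes F1 from the reported precision and recall values. It is a sketch for the reader, not part of the authors' pipeline; the function name `f1_score` and the layout of `reported` are choices made here for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (model, feature set, precision, recall, reported F1), copied from the table.
reported = [
    ("Baseline", "sensor + relational", 0.5221, 0.4956, 0.5085),
    ("EEG-Net", "sensor + relational", 0.6738, 0.6099, 0.6403),
    ("MLSTM-FCN", "sensor + relational", 0.6466, 0.7352, 0.6881),
    ("InceptionTime", "sensor + relational", 0.6058, 0.5621, 0.5831),
    ("EEG-Net", "sensor only", 0.6156, 0.6312, 0.6233),
    ("MLSTM-FCN", "sensor only", 0.6261, 0.7345, 0.6760),
    ("InceptionTime", "sensor only", 0.5872, 0.5966, 0.5919),
]

for model, features, precision, recall, reported_f1 in reported:
    recomputed = f1_score(precision, recall)
    print(f"{model:13s} {features:19s} "
          f"recomputed={recomputed:.4f} reported={reported_f1:.4f}")
```

Every recomputed value agrees with the reported one to within rounding at four decimal places.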

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Gu, C.; Zhang, J.; Liu, Z.; Konomi, S. Sensing the Intentions to Speak in VR Group Discussions. Sensors 2024, 24, 362. https://doi.org/10.3390/s24020362
