Abstract

Three-dimensional point cloud registration is a fundamental task for localization and mapping in autonomous navigation applications. Over the years, registration algorithms have evolved; nevertheless, several challenges still remain. Recently, non-repetitive scanning LiDAR sensors have emerged as a promising 3D data acquisition tool. However, the feasibility of this type of sensor to leverage robust point cloud registration still needs to be ascertained. In this paper, we explore the feasibility of one such LiDAR sensor with a Spirograph-type non-repetitive scanning pattern for robust 3D point cloud registration. We first characterize the data of this unique sensor; then, utilizing these results, we propose a new 3D point cloud registration method that exploits the unique scanning pattern of the sensor to register successive 3D scans. The characteristic equations of the unique scanning pattern, determined during the characterization phase, are used to reconstruct a perfect scan at the target distance. The real scan is then compared with this reconstructed scan to extract objects in the scene. The displacement of these extracted objects with respect to the center of the unique scanning pattern is compared in successive scans to determine the transformations that are then used to register these scans. The proposed method is evaluated on two real and different datasets and compared with other state-of-the-art registration methods. After analysis, the performance (localization and mapping results) of the proposed method is further improved by adding constraints like loop closure and employing a Curve Fitting Derivative Filter (CFDT) to better estimate the trajectory. The results clearly demonstrate the suitability of the sensor for such applications. The proposed method is found to be comparable with other methods in terms of accuracy but surpasses them in performance in terms of processing time.

1. Introduction

The advancement of LiDAR (Light Detection And Ranging) technology has revolutionized the field of three-dimensional (3D) sensing and mapping, playing a pivotal role in various applications such as autonomous vehicles, robotics and environmental monitoring. Registering 3D point clouds obtained from these LiDARs is a fundamental task for localization and mapping in autonomous navigation. Registration algorithms have evolved over the years; however, several challenges still remain.

Some of the critical issues include accuracy, noise reduction, robustness, scalability and real-time processing. Most of the state-of-the-art methods employ traditional LiDAR sensors with repetitive scanning patterns, which have inherent limitations. Recently, non-repetitive scanning LiDAR sensors have emerged as a promising 3D data acquisition tool. However, the feasibility of this type of sensor to leverage robust point cloud registration still needs to be ascertained. In this paper, we explore the feasibility of one such LiDAR sensor with a Spirograph-type non-repetitive scanning pattern for robust 3D point cloud registration.

The work presented in this paper represents a promising advancement toward more robust and accurate 3D point cloud registration, with implications for the continued evolution of technologies reliant on LiDAR-based environmental sensing.

2. Related Work

Point cloud registration is a fundamental task in computer vision and robotics, enabling the alignment of multiple 3D scans to create a coherent and comprehensive model of a scene or object. Over the years, numerous techniques and algorithms have been developed to address this challenging problem. In this section, we review some key research in the field of 3D point cloud registration.

The Iterative Closest Point algorithm (ICP) proposed by [1] is a foundational method in point cloud registration. ICP iteratively minimizes the distance between corresponding points in two point clouds, allowing for rigid transformation estimation. Extensions such as the Generalized ICP (GICP) by [2], dense normal-based ICP (NICP) [3] and Sample Consensus ICP [4] have improved its robustness and applicability to various scenarios.

Tazir et al. in [5] modified the method for selecting 3D points for the matching process in the standard ICP algorithm to propose Cluster Iterative Closest Point (CICP), which successfully registers point clouds of different densities obtained from different sensors. Vizzo et al. in [6] presented KISS-ICP, which relies on point-to-point ICP combined with adaptive thresholding for correspondence matching, a robust kernel and a point cloud subsampling strategy. The resulting system requires only a few parameters that in most cases do not need to be tuned for different LiDAR sensors or a changing environment. Another variant, Global ICP (G-ICP), presented by Aijazi et al. in [7], employs bundle adjustment to fine-tune the registration process at the end, in addition to scan-by-scan registration using point-to-plane matching; however, such a method is not suitable for real-time processing.

Feature-based registration methods focus on extracting distinctive features from point clouds and matching them to establish correspondences. The SIFT-based approach [8], Fast Point Feature Histograms (FPFH) [9] and Signature of Histogram of Orientations (SHOT) [10] are notable examples. Ghorbani et al. [11] evaluated the use of 3D keypoint detectors like the 3D SIFT and SHOT method to register sequential indoor and outdoor point clouds. The authors identified quantity, quality and spatial distribution of the extracted key points along with the matching of extracted features as the main challenges in 3D point cloud registration using these approaches.

While rigid registration is suitable for aligning objects with minimal deformation, non-rigid registration methods are essential for deformable object alignment and medical image registration. The Coherent Point Drift (CPD) algorithm by Myronenko and Song [12] is a significant advancement in this domain.

Scalability is a critical concern for point cloud registration, especially in applications like autonomous driving and mapping. A method to improve the robustness of scale estimation is the use of a smooth variable structure filter (SVSF) in SLAM applications, i.e., SLAM-SVSF [13]. The LeGO-LOAM system by [14] offers a real-time solution for large-scale point cloud registration and mapping. The method is an extension of LiDAR Odometry and Mapping (LOAM) presented by [15], which computes the odometry by registering planar and edge features to a sparse feature map. LeGO-LOAM added ground constraints to improve accuracy; more recently, F-LOAM [16] modified the original method to make it faster by using a more efficient optimization technique. However, these methods rely on several predefined parameters that need to be tuned depending on sensor resolution, environment structure, etc.

A Surfel-based method SuMa [17] was proposed for LiDAR odometry estimation and point cloud registration. The method was extended to incorporate semantics [18] and handle dynamic objects [19]. Deschaud [20] introduced the implicit moving least square surface (IMLS-SLAM) [21] while Vizzo et al. [22] exploited a triangular mesh as the internal map representation. Both these methods rely on a point-to-plane metric to register consecutive scans. However, this requires the estimation of surface normals as additional data-dependent parameters.

Recent advances in deep learning have led to the emergence of deep neural networks for point cloud registration [23,24]. However, applying deep learning to 3D point cloud registration presents several challenges due to the irregular (i.e., the points are not evenly distributed spatially across the different regions of the scene), unstructured (i.e., usually not organized in a known pattern) and unordered (i.e., mostly the point cloud of a scene is stored as a set of points where changing the order does not change the scene representation) nature of the 3D LiDAR data. These issues make the direct application of convolutional neural networks (CNNs) difficult, as they assume ordered and regular structures.

With the recent advent of non-repetitive scanning LiDARs, some work has also been conducted to register their point clouds. As an extension of classical feature-based methods, the work by [25] introduced a feature-based registration algorithm employing point clouds generated by non-repetitive scanning LiDARs, leveraging distinct features to establish correspondences. Wang et al. [26] proposed a novel method that leverages deep learning techniques for sparse point cloud registration, achieving impressive results in various scenarios. He et al. [27] introduced a highly efficient algorithm designed for non-repetitive scanning LiDARs, combining geometric and learning-based approaches to improve speed and accuracy. Li et al. [28] presented a registration method for combining non-repetitive scanning LiDAR point clouds with RGB-D data, enabling precise 3D scene reconstruction. Zou et al. [29] presented a plane-based global registration (PGR) approach for coarse registration followed by an ICP algorithm to register point clouds.

In this paper, we first present comprehensive characterization results of such a non-repetitive LiDAR sensor. Different aspects of this new type of sensor are studied for its effective utilization for localization and mapping applications applied to Autonomous Ground Vehicles (AGV). In order to demonstrate the feasibility of this sensor, we use these results to propose a new 3D point cloud registration method—for localization and mapping—that exploits the unique scanning pattern of the sensor to register successive 3D scans. To the best of our knowledge, no work has so far exploited the non-repetitive scanning pattern to effectively register 3D point clouds.

In the following sections, we first introduce the new LiDAR sensor and present the comprehensive characterization results (Section 3). In order to demonstrate its feasibility for localization and mapping applications, we then propose a new 3D registration method (Section 4) that exploits the unique scanning pattern of the sensor. The experiments and results, as well as the discussions, are presented in Section 5 and Section 6; then, we conclude in Section 7.

3. Characterization of Spirograph Scanning LiDAR

The recently developed MID-70 sensor by LIVOX [30] is a solid-state 3D LiDAR. The sensor is capable of collecting up to 100,000 points per second within a circular field of view directly in front of the scanner’s glass window. With a small form factor, it has a range of about with a precision of up to (see Table 1 for more specifications). However, what really differentiates this sensor from other laser scanners is its scanning mechanism. Rather than rotating the laser scanner/receiver pair themselves (as is done in the case of multi-line scanners like Velodyne LiDARs), it holds the two in a “solid state”, fixed inside the scanner’s housing, while the outbound and inbound light are directed through a pair of Risley prisms [31]. These wedge-shaped glass lenses act as optical steering mechanisms as they rotate in opposite directions, directing the beam in a Spirograph scanning pattern in the circular scan area.

Table 1.

Main specifications of the MID-70 sensor by LIVOX.

In order to effectively use such a sensor, the characterization of its data is indispensable, especially given that, being a new sensor, few performance data are available. In order to characterize the sensor data, different tests were conducted, and the results are presented in the following sections.

3.1. Startup Time

Once the sensor is powered up, it does not start immediately. There is a slight delay (≈8 s) for safety, to ensure the smooth functioning of the sensor and to prevent any erroneous data upfront. After startup, the sensor delivers about 100,000 valid points per second.

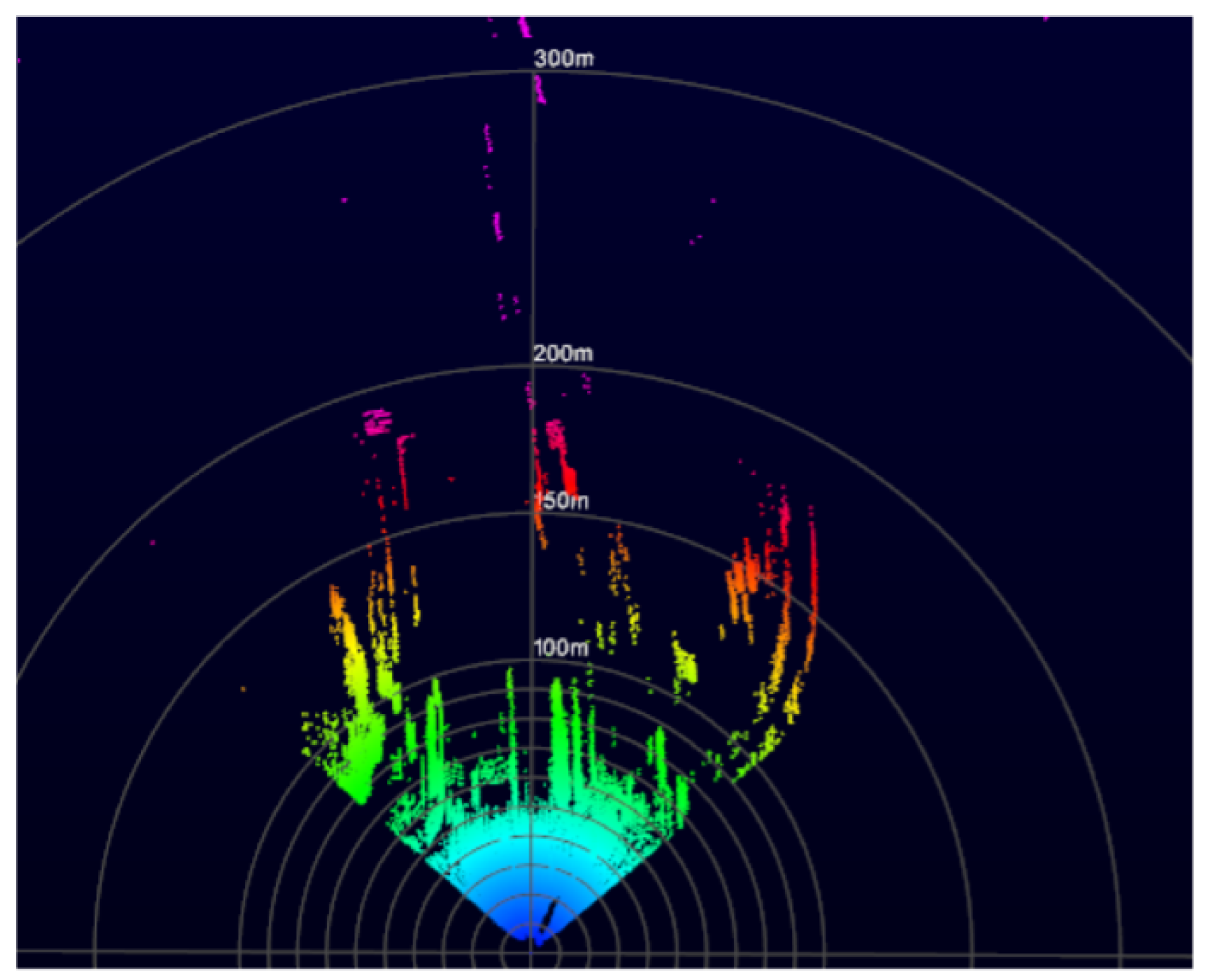

3.2. Range and Field of View (FOV)

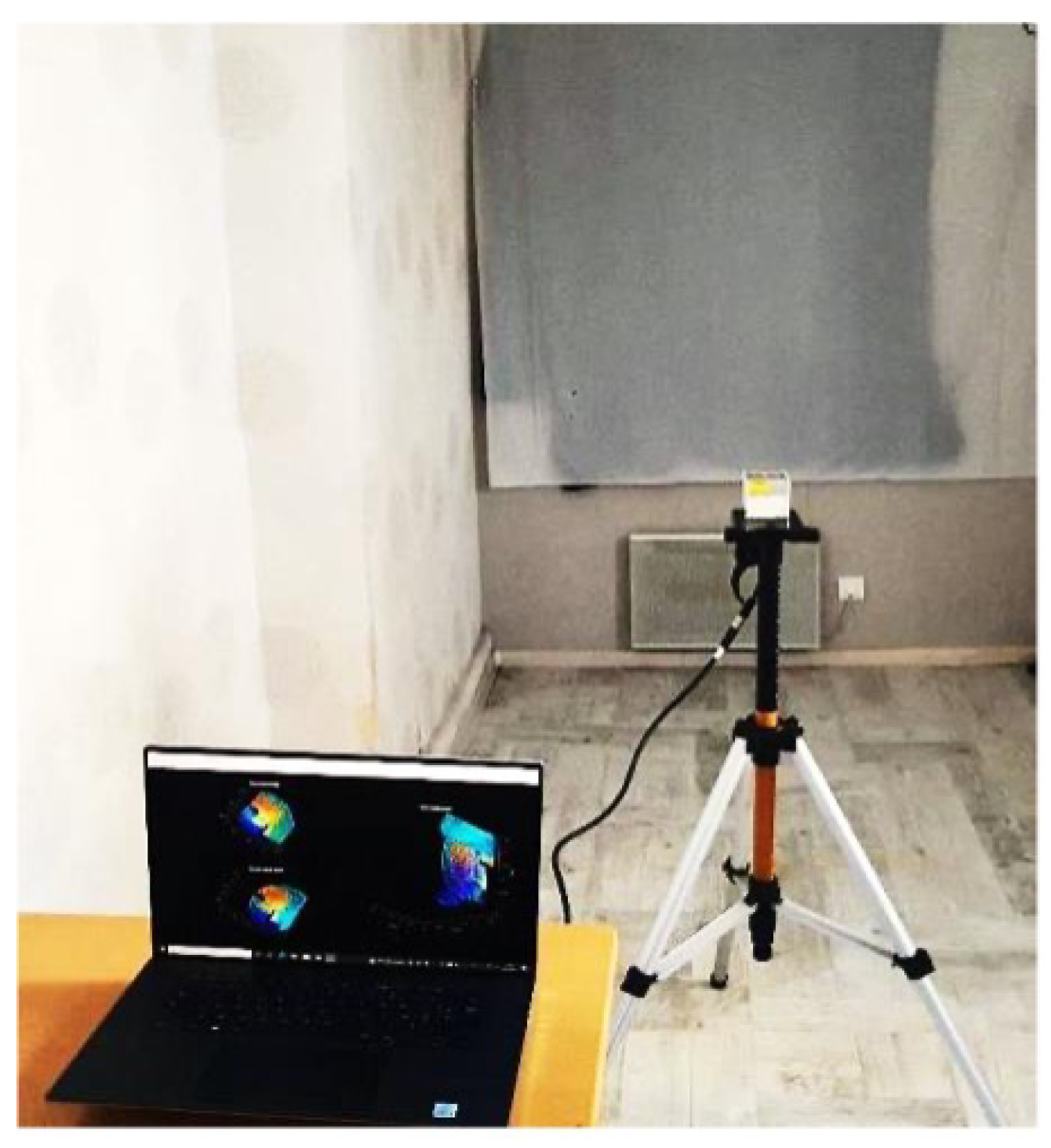

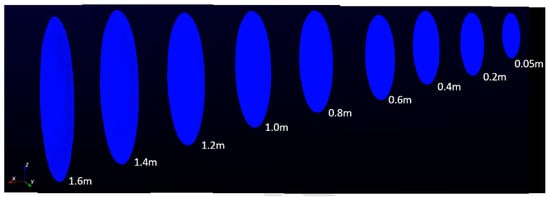

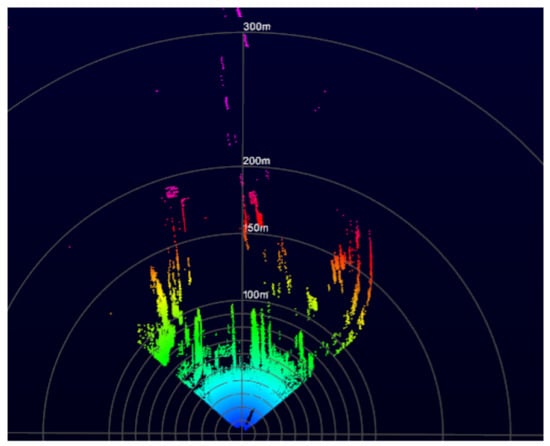

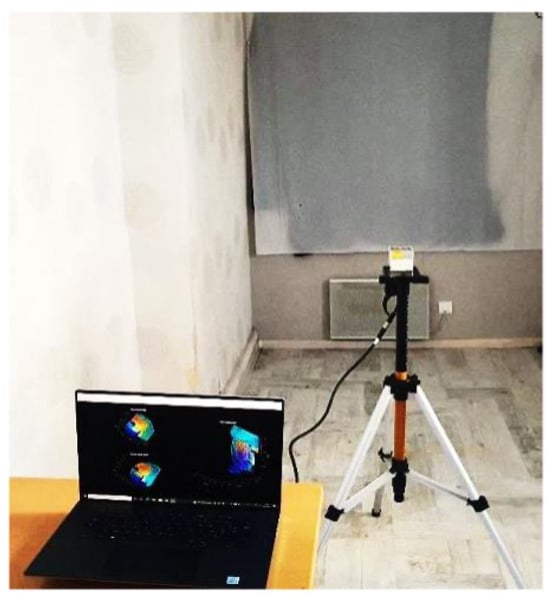

The sensor was mounted on a tripod, as shown in Figure 1a, and tested in both inside and outside environments with both close and far objects detected in the scene. The objective was to detect the closest and farthest object to determine the minimum and maximum range of the sensor. The minimum and maximum range were found to be (see Figure 2) and more than , respectively (see Figure 3). However, for close range between and the accuracy is slightly degraded, mainly because of the filtering applied in the sensor system.

Figure 1.

(a) Sensor mounted on the tripod. (b) Circular point cloud of a flat wall.

Figure 2.

Point clouds from sensor at close range ( to .

Figure 3.

Sensor in outside environment to determine maximum range.

In order to measure the Field Of View (FOV), the sensor was mounted on a tripod and placed, parallel, at a fixed distance in front of a flat wall. The captured point clouds were studied and, using basic trigonometry, the effective FOV was determined to be almost circular (average difference between vertical and horizontal diameter < 1.1%), as shown in Figure 1b.
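The trigonometric step can be sketched as follows. This is a minimal illustration, assuming the sensor axis is perpendicular to the wall and that the diameter of the scanned disc has already been measured in the point cloud. The function names are hypothetical and no values from this study are used.

```python
import math


def fov_from_wall_scan(diameter, distance):
    """Full field-of-view angle in degrees.

    diameter: extent of the scanned disc on the wall (same unit as distance)
    distance: perpendicular distance from the sensor to the wall
    """
    if diameter <= 0 or distance <= 0:
        raise ValueError("diameter and distance must be positive")
    # Half of the disc and the sensor-to-wall distance form a right triangle.
    return math.degrees(2.0 * math.atan(diameter / (2.0 * distance)))


def circularity_difference(horizontal, vertical):
    """Relative difference (in percent) between the two measured diameters."""
    return abs(horizontal - vertical) / max(horizontal, vertical) * 100.0
```

Applying `fov_from_wall_scan` separately to the horizontal and vertical diameters, and comparing the two diameters with `circularity_difference`, gives a simple check of how close the footprint is to a circle.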

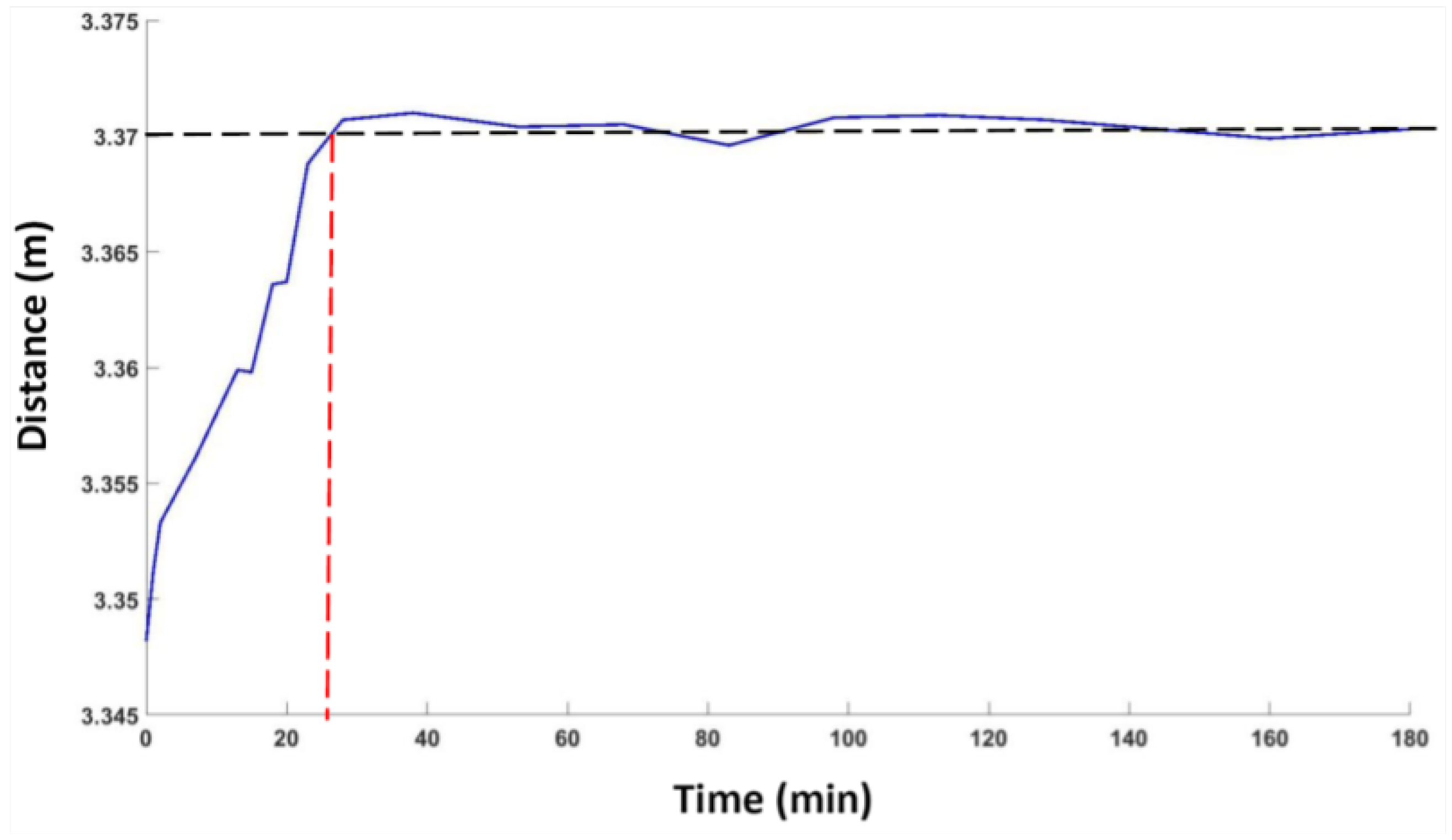

3.3. Drift Analysis

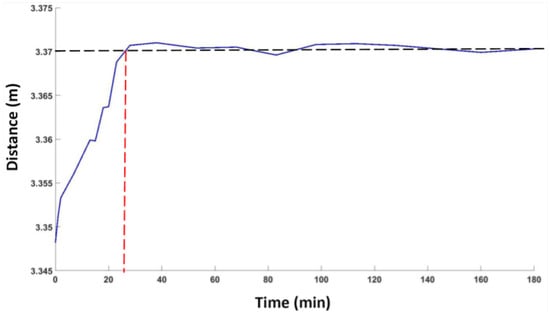

The drift analysis gives an idea of the stability of the LiDAR sensor. In order to analyze drift in the 3D LiDAR sensor, measurements of a plane surface were performed over a long period of time. The LiDAR sensor was placed at a fixed distance from a white wall and the z-axis of the sensor was kept perpendicular to the plane of the wall. Repeated measurements were taken over a period of 3 h. The reference distance (ground truth) for all experiments was measured using Leica’s Disto D110 distance measurement unit [32], with an accuracy of ±. The unit was carefully placed at the same spot (marked by the manufacturer) on the sensor that corresponds to the sensor measurement zero. The average of multiple readings was taken, at each instance, to minimize the effect of noise and further improve measurement accuracy. Figure 4 shows the variation in distance measurements with respect to time. A large variation of about 2 cm was observed in the first 24 min of running; then, the measurement value stabilized. As the drift effect is mainly due to the variation in temperature of the device during functioning, the results indicate that the sensor reached its stable working temperature by this time.

Figure 4.

Drift analysis showing the variation in distance measurement with respect to time.

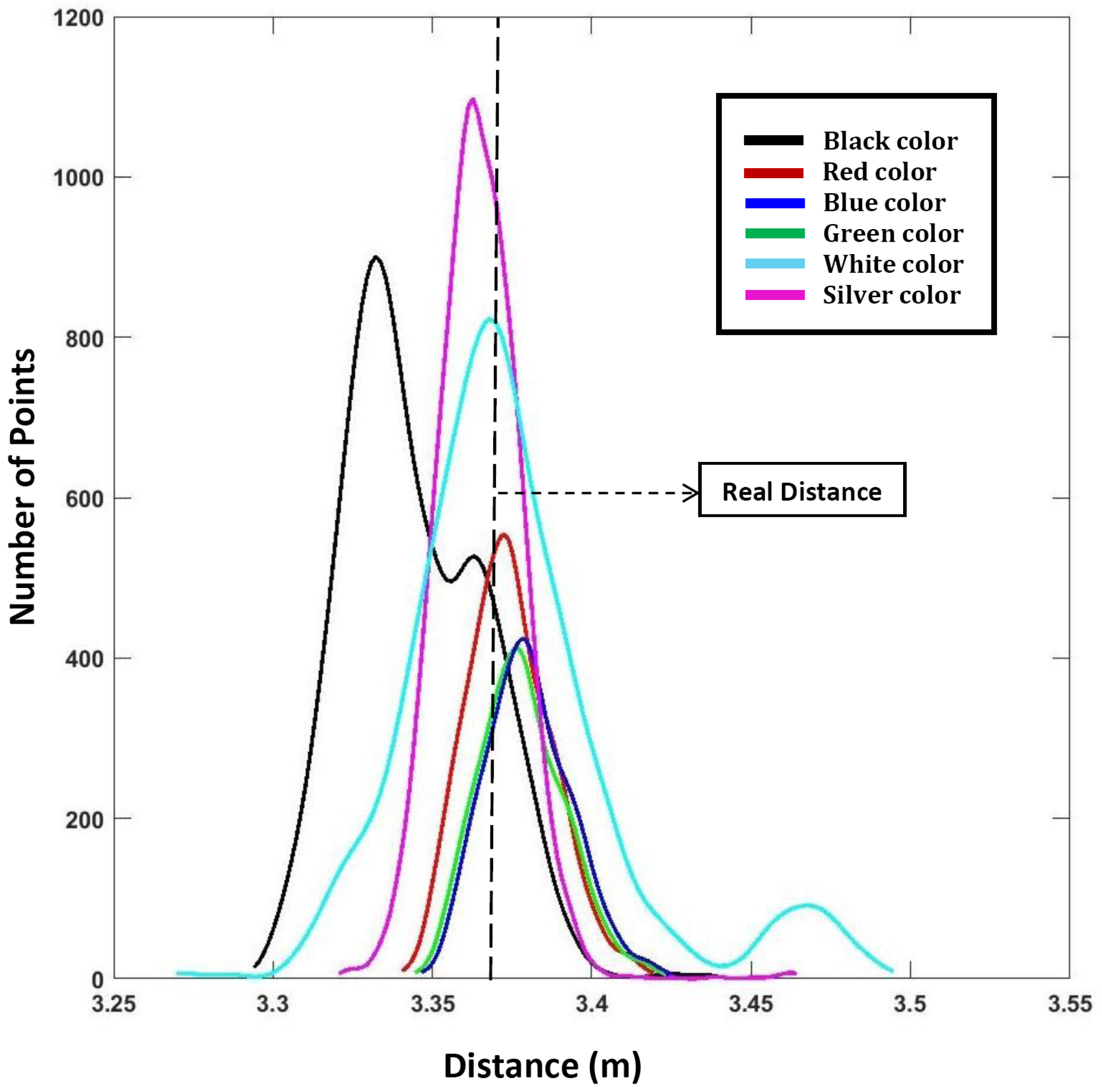

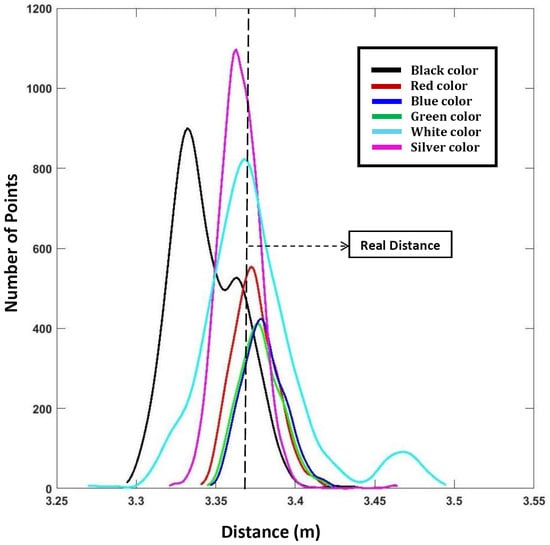

3.4. Effect of Surface Colors

The color of the target’s surface affects the laser measurements. In order to evaluate the LiDAR’s performance against different colors, the LiDAR was tested on three primary colors (red, green and blue), two achromatic colors (black and white) and a shiny silver (high-reflectivity) color. All six colored targets were made of the same material, i.e., paper, and were fixed at exactly the same place during testing. The real/reference distance was measured in the same manner as mentioned in Section 3.3. Figure 5 presents the results in the form of distance distributions.

Figure 5.

Variation in distance measurement with respect to different colors.

3.5. Effect of Surface Material

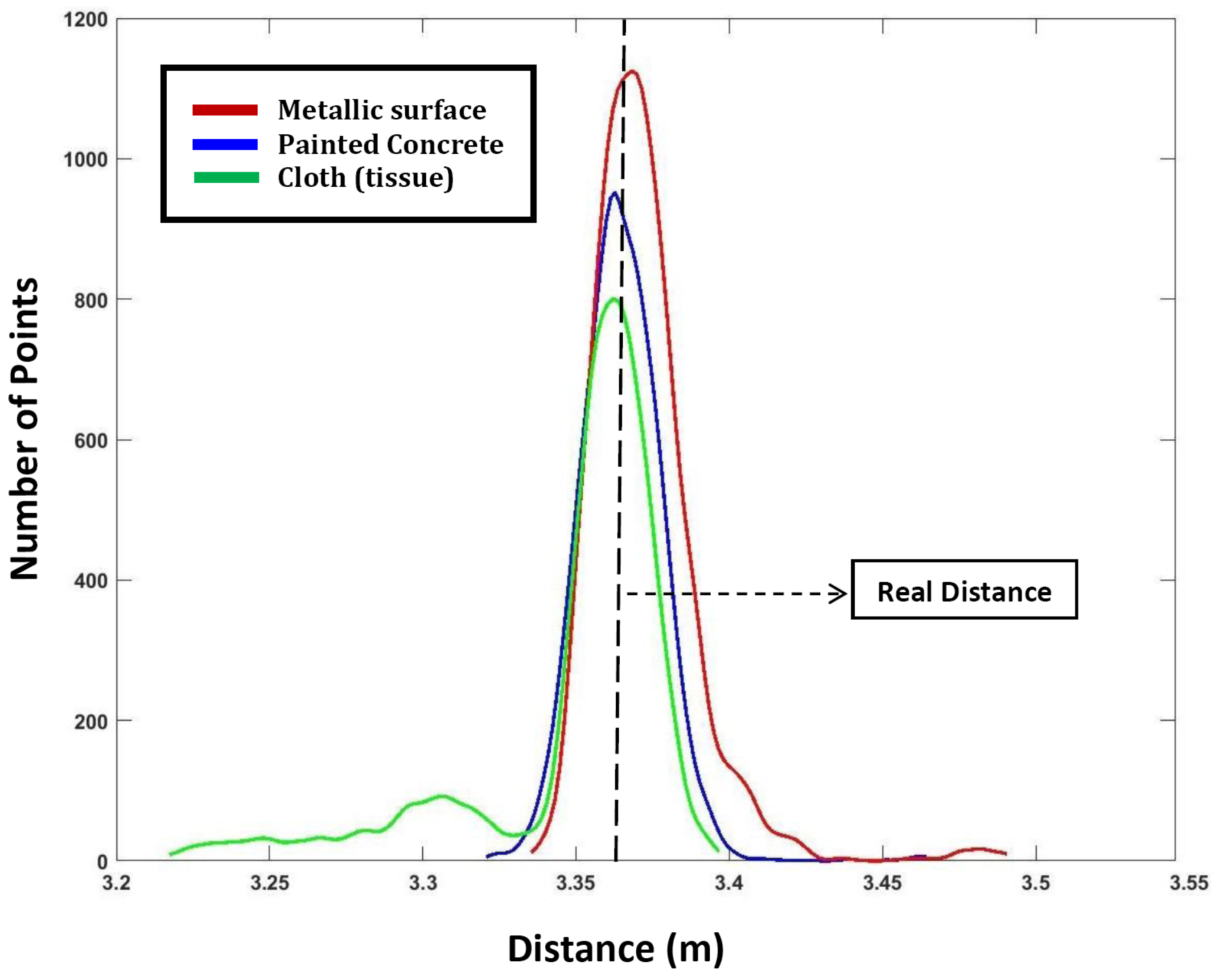

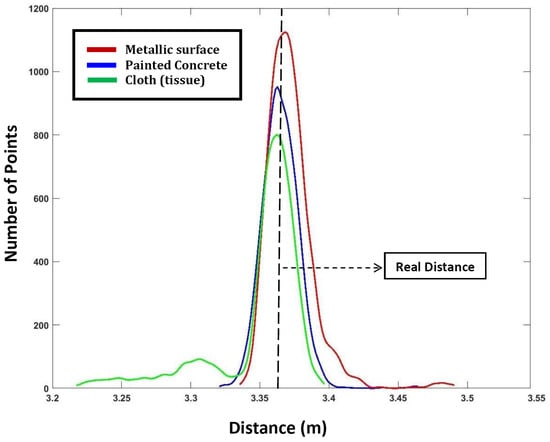

Just like the surface color, the material of the surface also affects the reflection of the laser. In order to evaluate this effect, tests were conducted on three different target materials. They were all white in color; however, the materials were different: tissue cloth, concrete and metal. The real/reference distance was measured in the same manner as mentioned in Section 3.3. The results are shown in Figure 6.

Figure 6.

Variation in distance measurement with respect to different materials.

Although the variation in distance measurements across the different materials was small, the number of reflected 3D points was much higher for the metallic surface than for the other two.

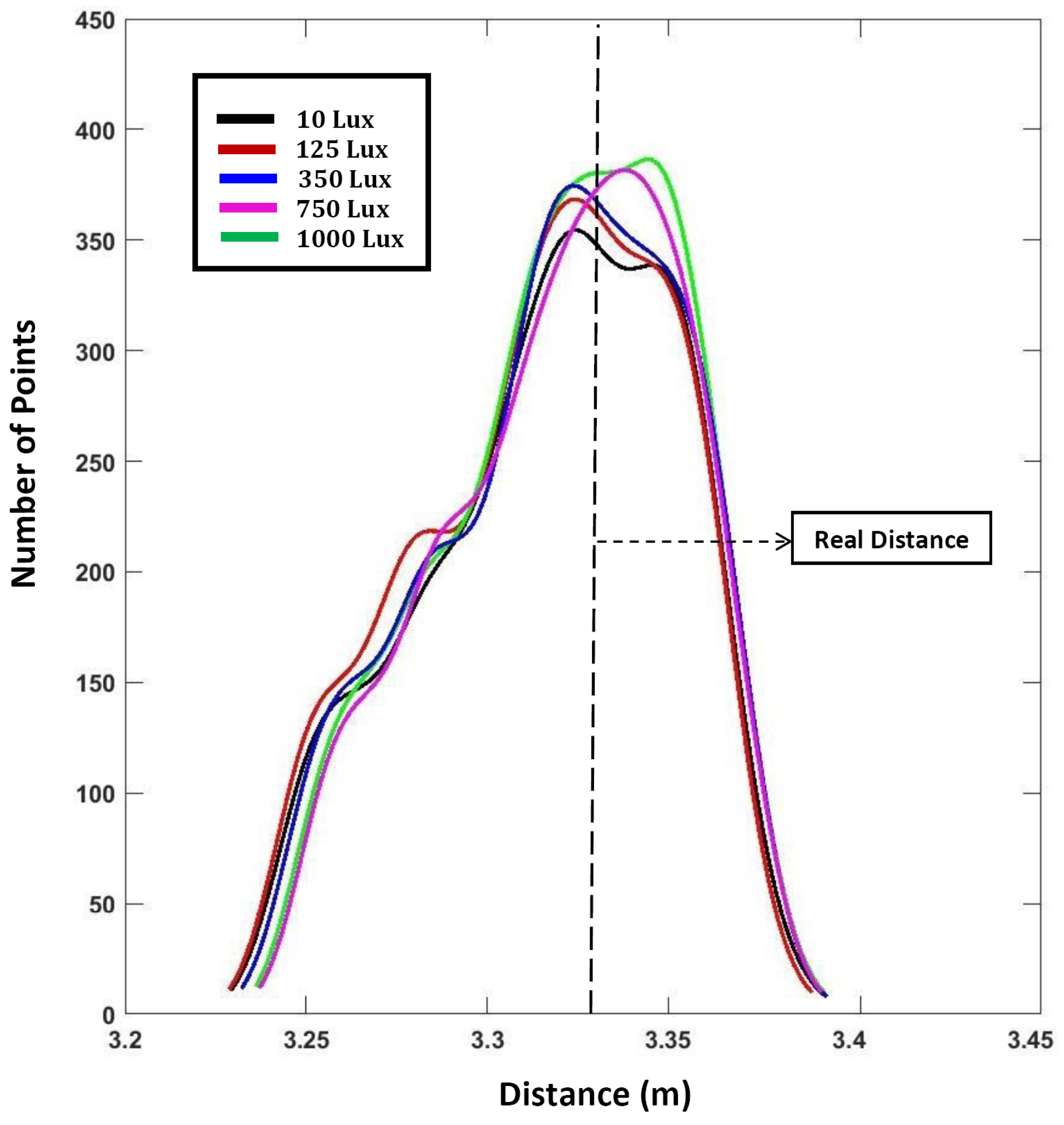

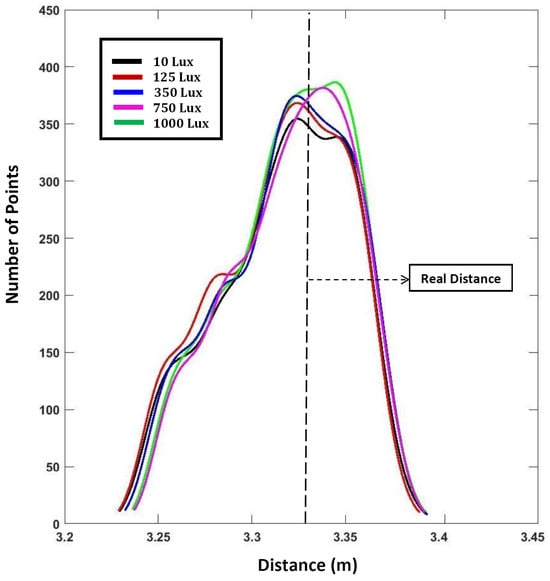

3.6. Influence of Luminosity (Ambient Light)

The sensor’s performance at different luminosity levels was evaluated by measuring the distance of a fixed white target. The real/reference distance was measured in the same manner as mentioned in Section 3.3. The luminosity levels were modified using a large external lamp. A LUX meter was used to measure the luminosity levels. The results presented in Figure 7 show that there is not much variation in distance measurements, implying that the sensor is quite robust to changes in luminosity.

Figure 7.

Variation in distance measurement with respect to different luminosity levels.

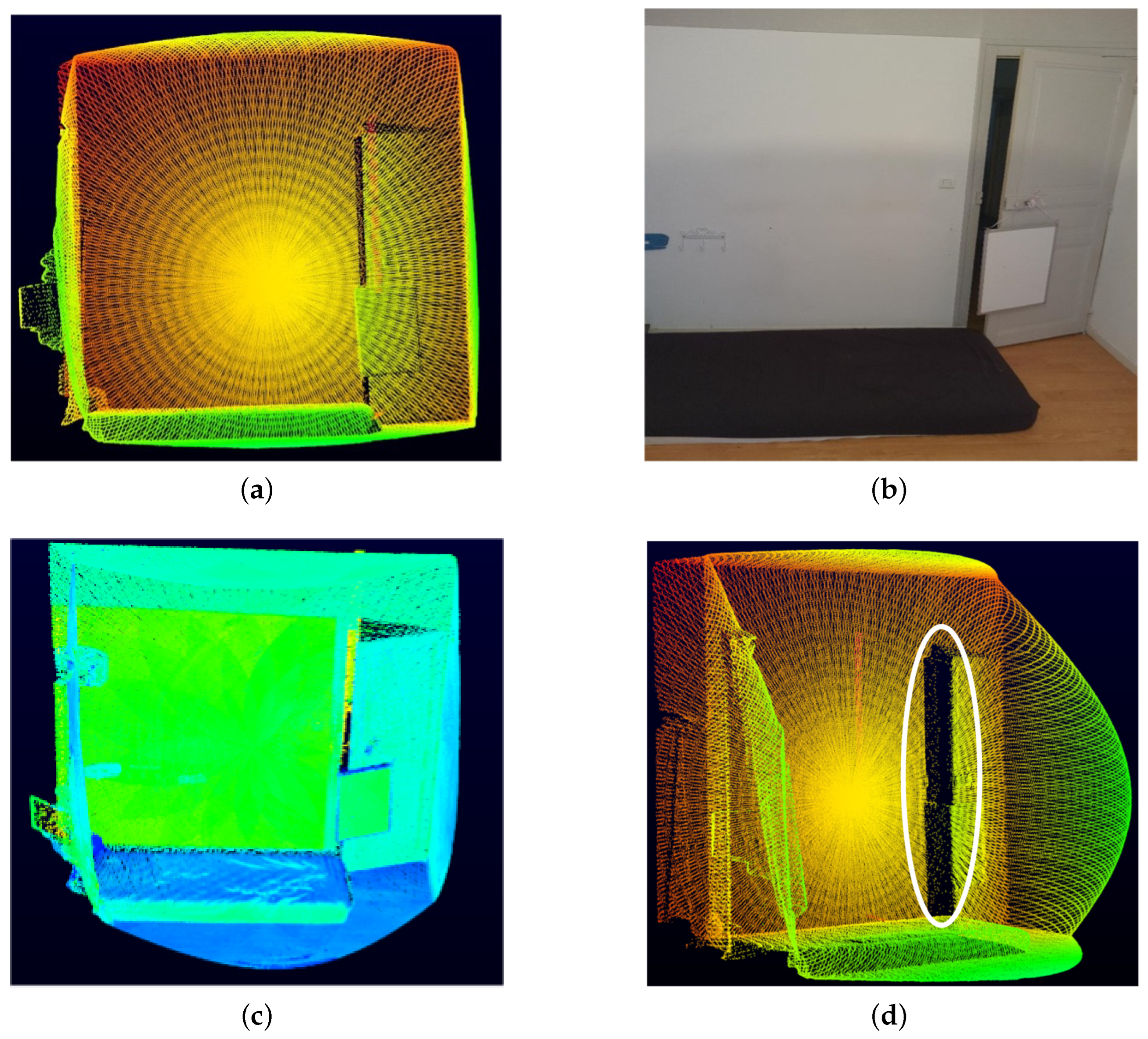

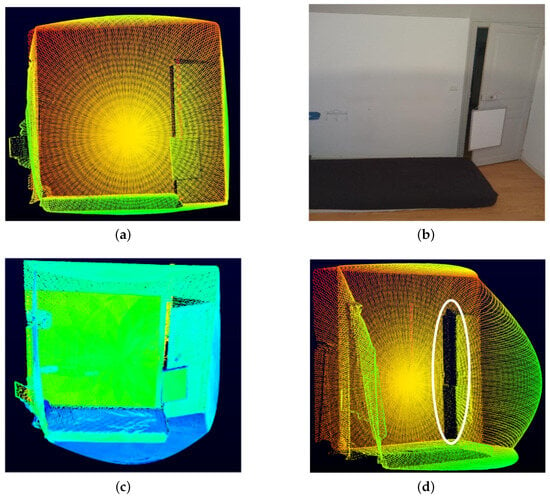

3.7. Problem of Pixel Mixing

When a laser beam falls at the very edge of a surface, the measured range is taken as a combination of the foreground and background surfaces/objects and lies in between the two distances. This condition is called “mixed pixels” [33].

In order to evaluate this phenomenon, we scanned a white wall with a slightly opened door, as shown in Figure 8. The sensor was placed at a distance of . The aim was to analyze the mixed pixels, also called jump edges, at the door opening.

Figure 8.

Study of the phenomenon of pixel mixing. (a) The original point cloud. (b,c) The RGB and intensity images. (d) A few false points due to the problem of mixed pixels, circled in white.

The number of false measurements (points) due to pixel mixing was found to be very few, as shown in Figure 8d (encircled in white). These can be easily removed or filtered out in post-processing, as presented in [34,35].

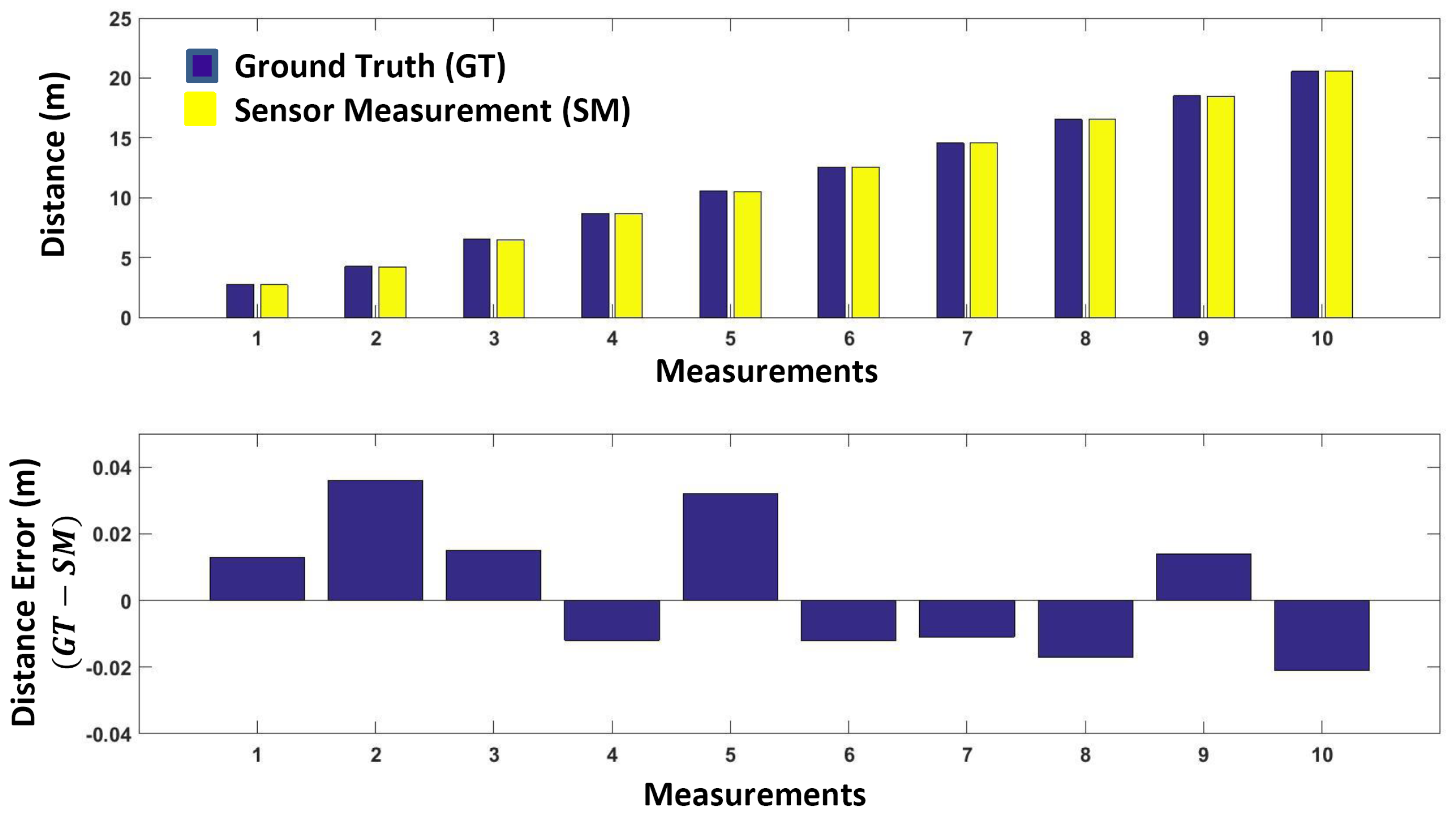

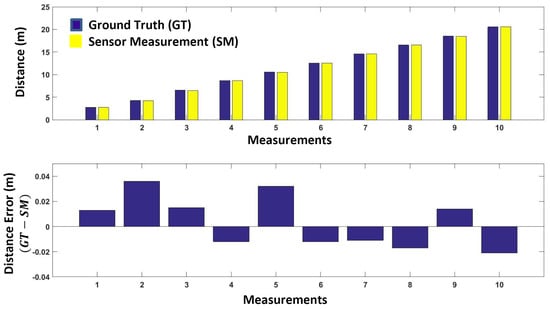

3.8. Distance Analysis

In order to determine the effect of target distance, we took the measurements of a fixed target surface at different distances ( to . The real/reference distance (ground truth) was measured in the same manner as mentioned in Section 3.3. The measured distances with respect to the ground truth are presented in Figure 9. A small measurement error with a standard deviation of indicates the sensor’s high precision.

Figure 9.

Measurement accuracy with respect to distance (range).

3.9. Scanning Pattern

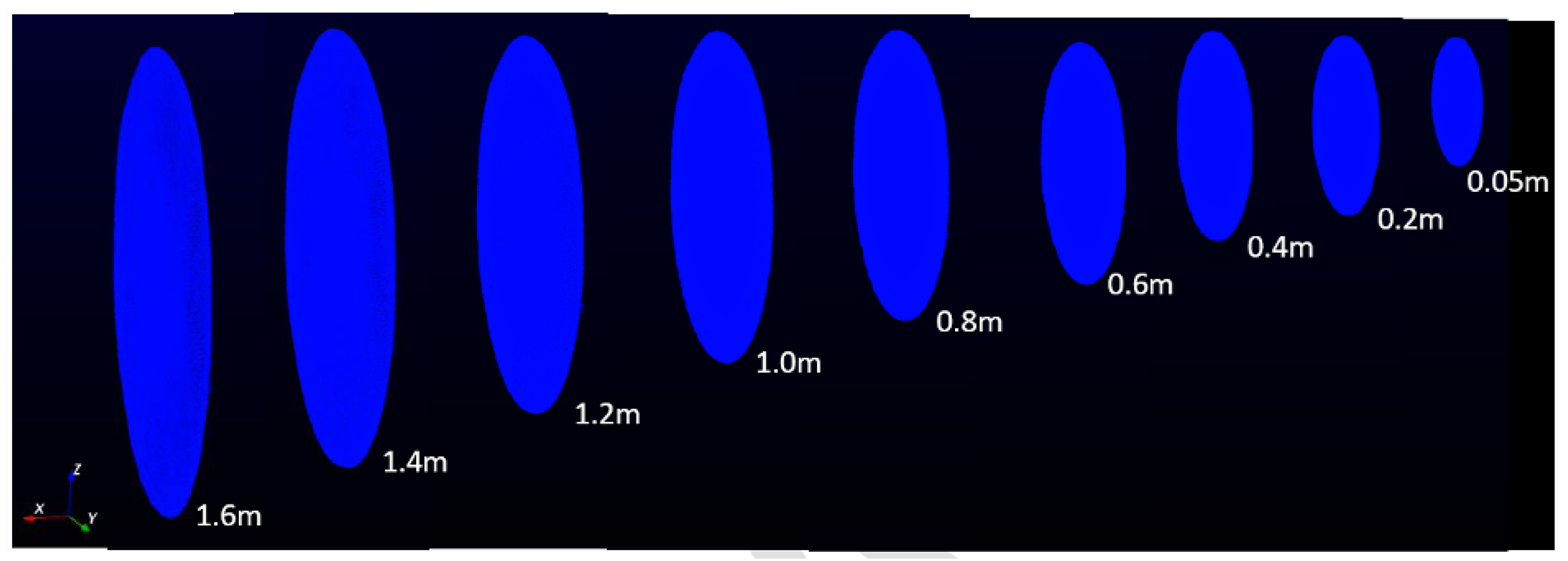

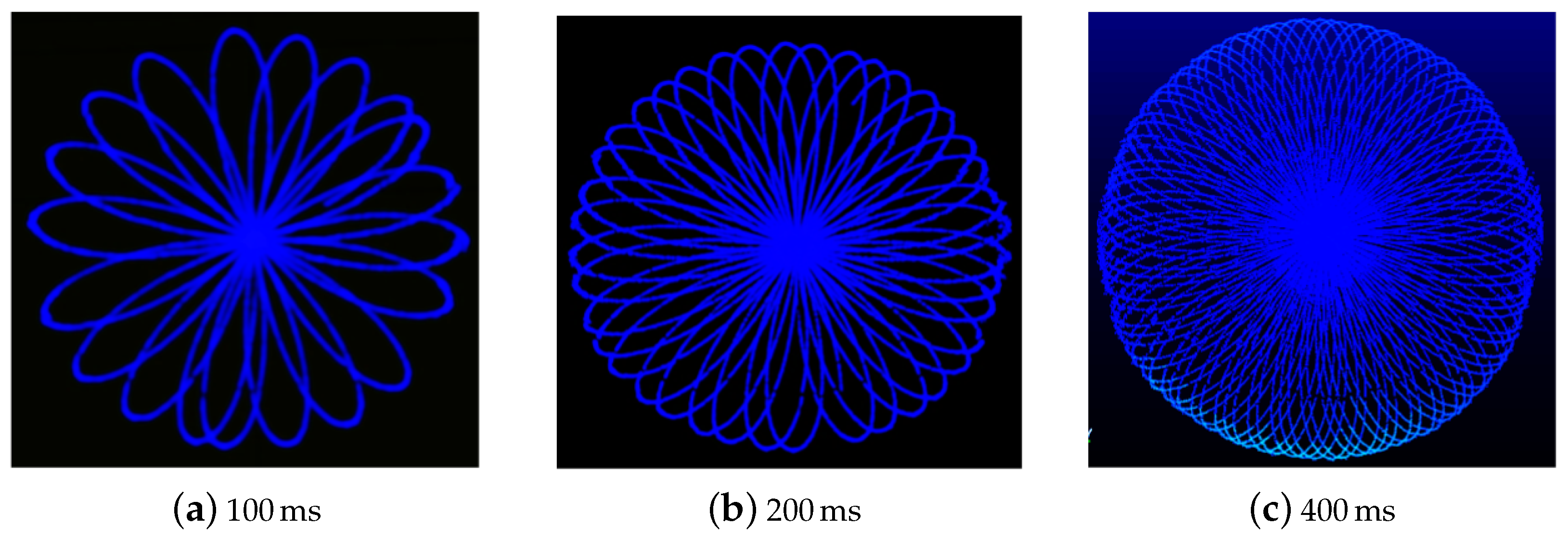

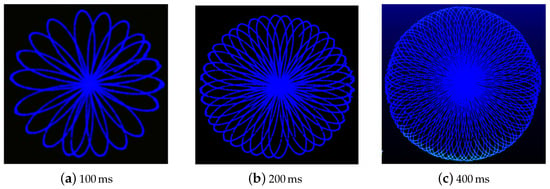

In order to determine the scan pattern of the LiDAR sensor, its scans of a plane surface were analyzed at different integration times (resolutions) and distances, as shown in Figure 10. The non-repetitive Spirograph-type pattern was determined to be a rosette.

Figure 10.

Rosette-style scanning pattern of the sensor scans at different integration times.

A rosette is a set of points in polar coordinates specified by the following polar equation:

$$r = a\cos(k\theta) \tag{1}$$

or in Cartesian coordinates using the following parametric equations:

$$x = r\cos(\theta) = a\cos(k\theta)\cos(\theta) \tag{2}$$

$$y = r\sin(\theta) = a\cos(k\theta)\sin(\theta) \tag{3}$$

where a is the amplitude or size factor and k is the angular frequency. The rose specified by $r = a\sin(k\theta)$ is identical to that specified by $r = a\cos(k\theta)$ rotated counter-clockwise by $\pi/(2k)$ radians, which is one-quarter the period of either sinusoid. If k is odd, the rosette is k petaled. If k is even, the rosette is 2k petaled.
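The rosette definition and the petal-count rule can be checked numerically. The sketch below assumes the standard rose curve, in which the radius is a times the cosine of k times the polar angle, and an integer k. The function names and the sampling density are illustrative choices, not part of the sensor characterization.

```python
import numpy as np


def rosette_points(a, k, n_samples=20000):
    """Sample the rose curve r = a*cos(k*theta) over one full turn."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_samples, endpoint=False)
    r = a * np.cos(k * theta)
    x = r * np.cos(theta)
    y = r * np.sin(theta)
    return x, y


def count_petals(k):
    """Count the distinct petals of r = cos(k*theta) for a positive integer k.

    Petal tips occur at theta_m = m*pi/k (m = 0 .. 2k-1), where r = (-1)**m.
    A negative r points the tip in the opposite direction (theta_m + pi),
    so each tip direction is tracked as an integer multiple of pi/k.
    """
    directions = set()
    for m in range(2 * k):
        shift = k if m % 2 else 0  # k steps of pi/k amount to a half turn
        directions.add((m + shift) % (2 * k))
    return len(directions)
```

`count_petals(3)` returns 3 and `count_petals(4)` returns 8, in line with the odd and even cases stated above.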

In order to exploit the scanning pattern of the LiDAR sensor, we need to characterize its scanning pattern by determining the value of a and k. In order to determine these parameters, we positioned the sensor on a tripod stand in front of a plane surface, as shown in Figure 11.

Figure 11.

Experimental setup.

Concerning the value of k, the different scans obtained from the sensor were analyzed (the form and number of petals) at different integration times, as shown in Figure 10. The number of petals found was 200 per second. Being an even number, this equates to a value of $k = 100$.

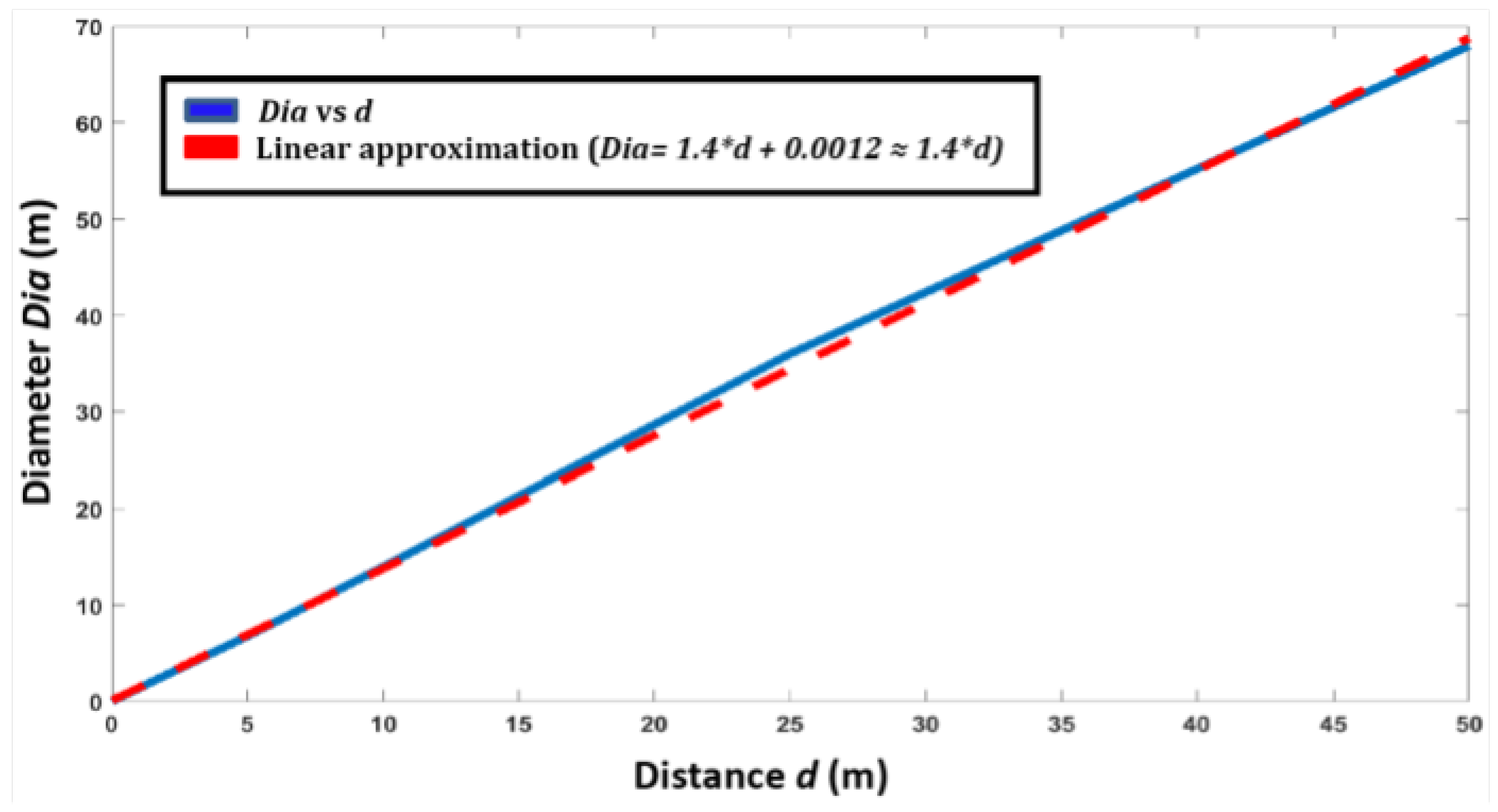

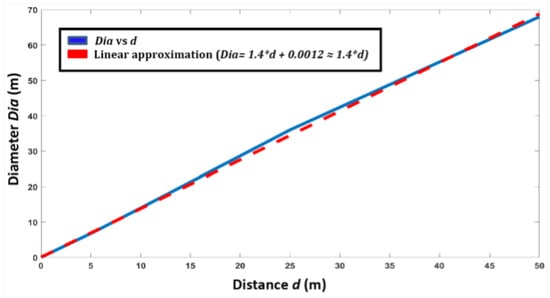

We considered the value of a as a function of scanning distance. The distance of sensor was varied from 1 to at regular intervals, and the series of scan patterns obtained was studied (cf. Section 3.3). Figure 12 presents the size (in terms of diameter) of the rosette scan in terms of distance.

Figure 12.

The size (in terms of diameter) of the rosette scan with respect to the target distance.

Using a linear approximation, the value of a was found as follows:

where d is the target distance.
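A linear approximation of this kind can be obtained with an ordinary least-squares fit. The sketch below assumes that the amplitude a equals half of the measured rosette diameter and that a is modeled as a straight line in the target distance d. The function names are hypothetical, and the fitted coefficients depend entirely on the measurements supplied.

```python
import numpy as np


def fit_amplitude_model(distances, diameters):
    """Least-squares line a(d) = slope * d + intercept, with a = diameter / 2."""
    d = np.asarray(distances, dtype=float)
    a = np.asarray(diameters, dtype=float) / 2.0  # assumption: amplitude = radius
    if d.ndim != 1 or d.size < 2 or d.size != a.size:
        raise ValueError("need at least two (distance, diameter) pairs")
    slope, intercept = np.polyfit(d, a, deg=1)
    return float(slope), float(intercept)


def amplitude_at(distance, slope, intercept):
    """Evaluate the fitted linear model at a given target distance."""
    return slope * distance + intercept
```

The fitted pair `(slope, intercept)` can then be passed to `amplitude_at` to obtain the rosette size needed to reconstruct a scan at any target distance within the calibrated range.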

The characterization results presented in this section show the aptness of the sensor for localization and mapping applications. The sensor’s performance remained mostly invariant to changes in target characteristics and environment. In order to further demonstrate the suitability of the sensor for such applications, we present, in the next section, a new 3D point cloud registration method that exploits the unique characteristics of the sensor.

4. Three-Dimensional Scan Registration

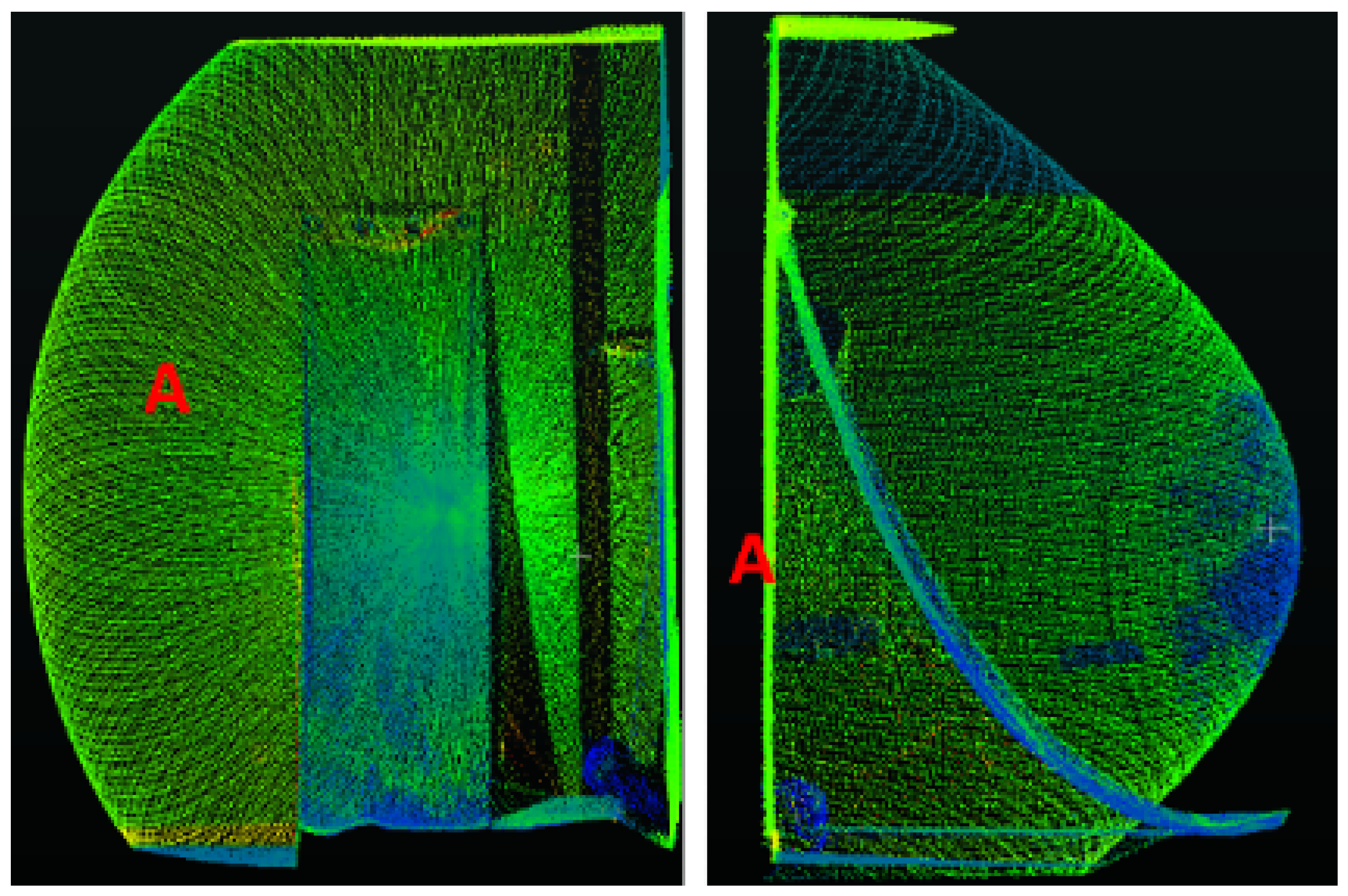

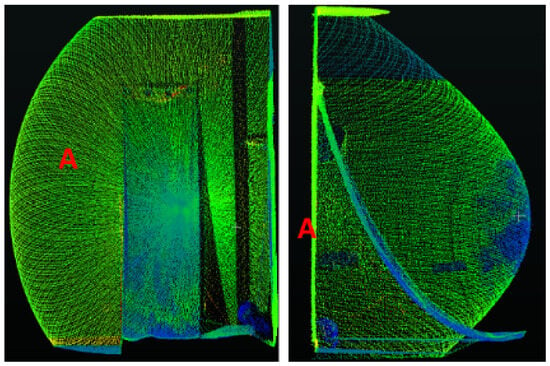

The scans are registered one by one in succession. In order to register two successive scans, with scan numbers i and j, we first reconstruct an ideal/perfect Rosette-style scan (without deformations due to objects) for each of them at the specified target distance d, applying Equations (2) and (3) and using the values of k and a determined in the previous section. The distance d in (4) is taken as the distance of the farthest vertical plane, perpendicular to the sensor plane, detected in each scan, as shown in Figure 13.

Figure 13.

The distance to the farthest vertical plane (A), perpendicular to the sensor plane, is considered as d.

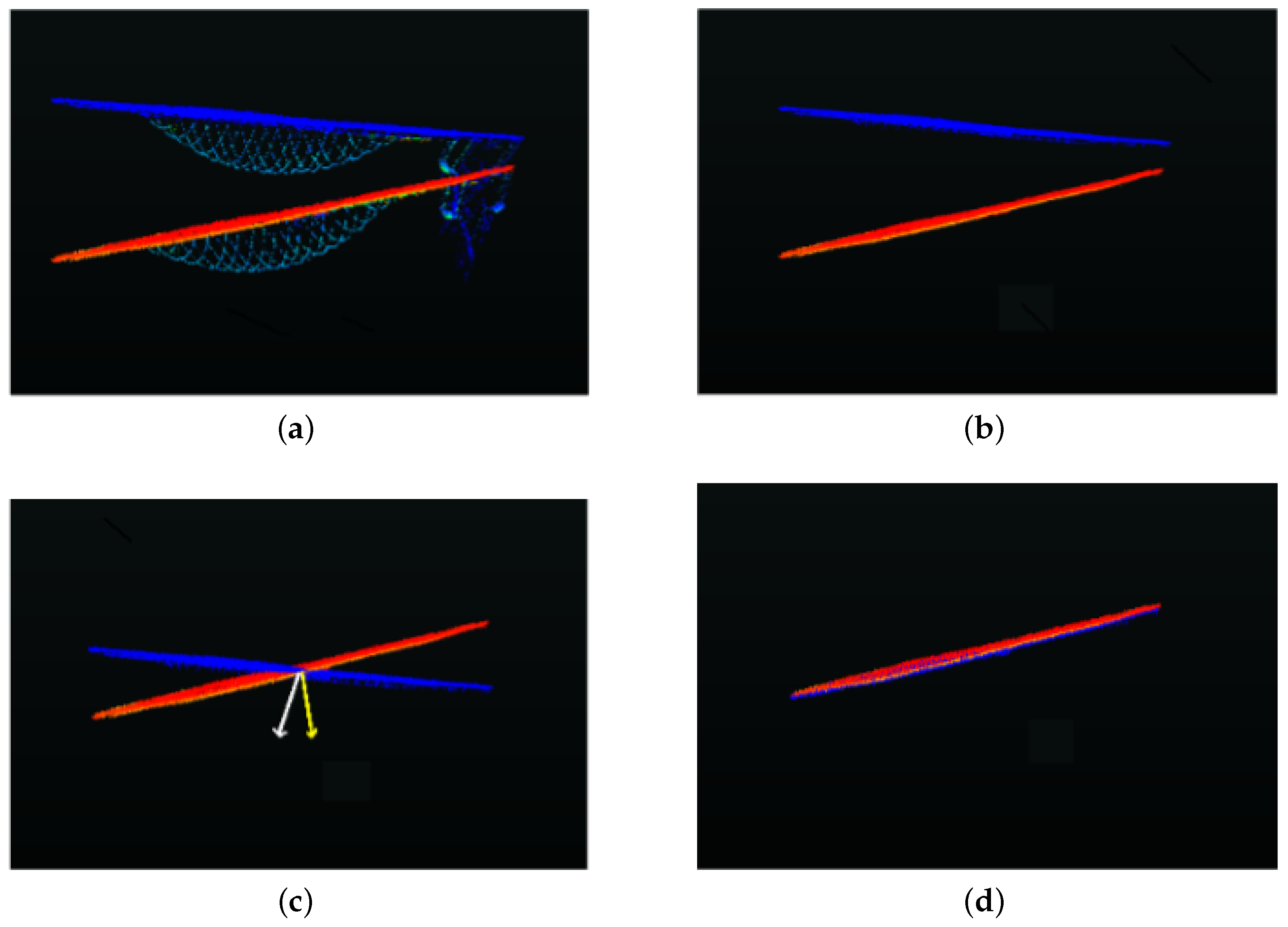

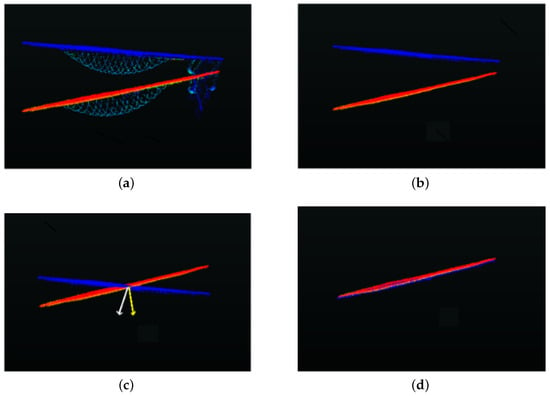

The centers of the two successive reconstructed Rosette-style scans are first aligned; then, in the second step, their orientations are aligned and the rotations around the three axes are estimated, as shown in Figure 14.

Figure 14.

(a) The two successive scans with objects, in red and blue respectively. (b) The reconstructed scans corresponding to the two original scans without objects. In (c), the centers of the two scans are aligned and normal vectors are estimated, while in (d), the second scan is rotated to align with the first scan and the difference of normal vectors give .

In order to align the orientation, the surface normal vector is estimated for each of the reconstructed scans using Principal Component Analysis (PCA) [36]. Given a set of points in each reconstructed scan , the PCA surface normal approximation for a given data point is typically computed by first determining the K-Nearest Neighbors, , of p. Given the K neighbors, the approximate surface normal is then the eigenvector associated with the smallest eigenvalue of the symmetric positive semi-definite matrix

where corresponds to the centers and of the successive reconstructed Rosette-style scans and .
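The PCA-based normal estimation can be sketched as follows. This is a minimal version under stated assumptions: the matrix is taken to be the sum of outer products of the point offsets from a reference point, and that reference point defaults to the centroid of the supplied points unless a scan center is passed explicitly. The function name and this default are illustrative and not taken from [36].

```python
import numpy as np


def pca_normal(points, center=None):
    """Unit normal of a roughly planar 3D point set via PCA.

    points: (N, 3) array-like of 3D points, N >= 3
    center: optional reference point; defaults to the centroid (assumption)
    """
    pts = np.asarray(points, dtype=float)
    if pts.ndim != 2 or pts.shape[1] != 3 or pts.shape[0] < 3:
        raise ValueError("expected at least three 3D points")
    c = pts.mean(axis=0) if center is None else np.asarray(center, dtype=float)
    diffs = pts - c
    cov = diffs.T @ diffs  # 3x3 symmetric positive semi-definite matrix
    # eigh returns eigenvalues in ascending order for symmetric matrices,
    # so the first eigenvector belongs to the smallest eigenvalue.
    _, eigvecs = np.linalg.eigh(cov)
    normal = eigvecs[:, 0]
    return normal / np.linalg.norm(normal)
```

The sign of the returned vector is arbitrary, so in practice the normals of two scans would need to be oriented consistently (for example, both pointing toward the sensor) before their orientations are compared.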

The rotation around the three axes is then estimated as the difference between the orientation of the two normal vectors. As the sensor remains on the ground, the rotation in successive scans around the axis perpendicular to the sensor plane is considered zero.

where , , and , , are the orientations of the two vectors with respect to the x, y and z axes, respectively.
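One possible reading of this step is sketched below: the orientation of each normal is described by its direction angles with respect to the x, y and z axes, and the rotation estimate is the per-axis difference of those angles, with one component forced to zero. The choice of direction angles, the sign convention and the index of the locked axis are all assumptions made for illustration.

```python
import numpy as np


def direction_angles(vector):
    """Angles (radians) between a vector and the x, y and z axes."""
    v = np.asarray(vector, dtype=float)
    norm = np.linalg.norm(v)
    if norm == 0.0:
        raise ValueError("zero-length vector has no orientation")
    # Clip guards against values marginally outside [-1, 1] from rounding.
    return np.arccos(np.clip(v / norm, -1.0, 1.0))


def orientation_difference(normal_i, normal_j, locked_axis=2):
    """Per-axis difference of direction angles between two normals.

    locked_axis: index of the component set to zero because the sensor
    stays on the ground (which axis this is depends on the sensor frame;
    the default here is only an assumption).
    """
    delta = direction_angles(normal_i) - direction_angles(normal_j)
    delta[locked_axis] = 0.0
    return delta
```

Two identical normals give a zero vector, which corresponds to the case of no change in orientation between successive scans.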

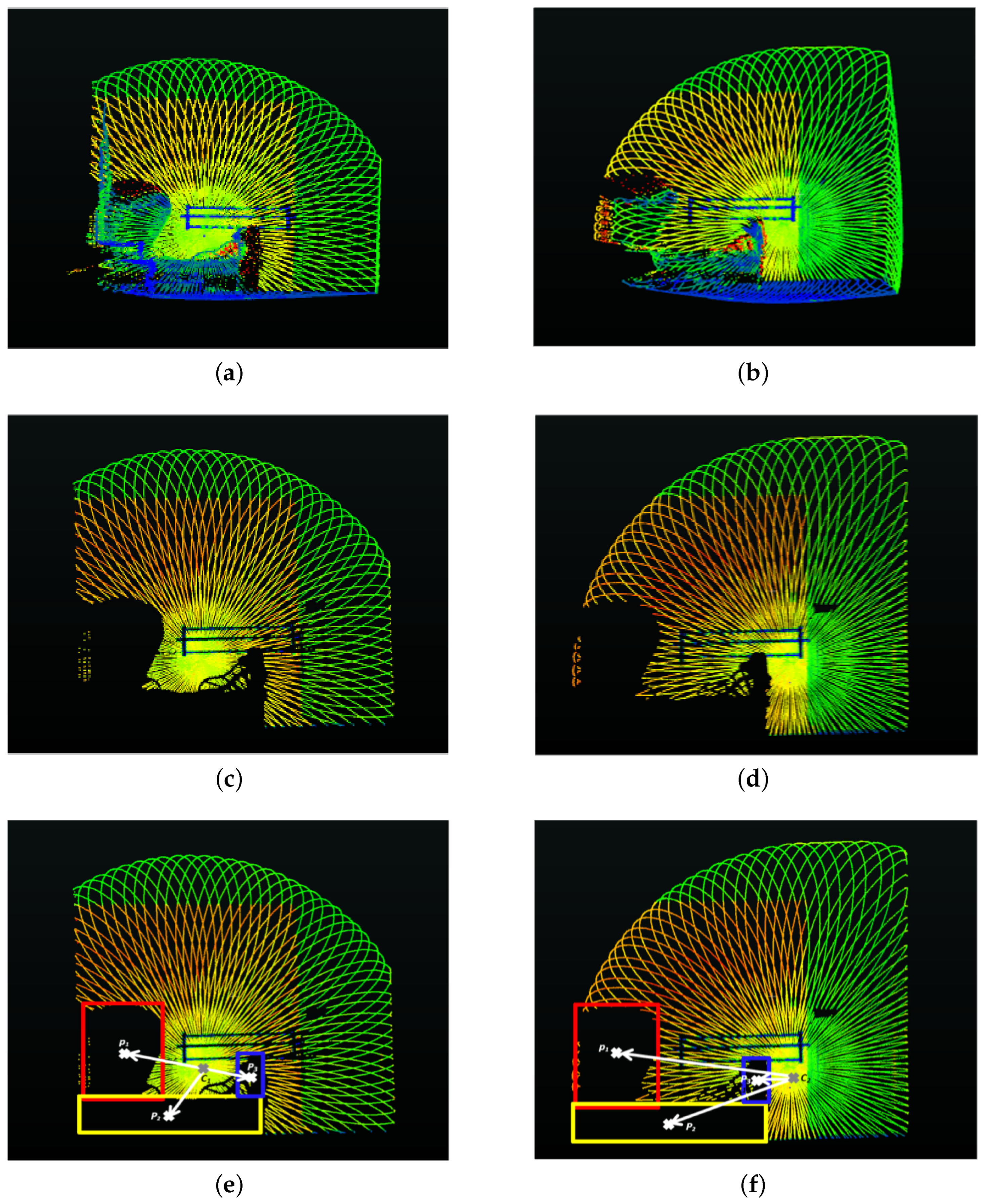

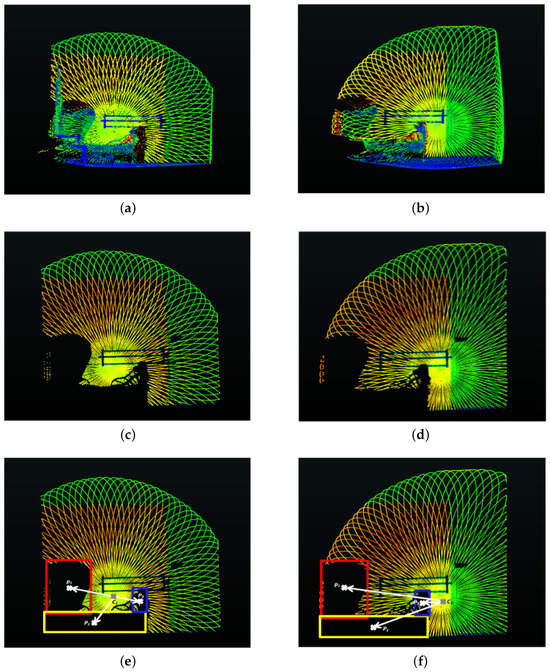

The original scans are then matched with the corresponding reconstructed scans. As the reconstructed scans are free of objects, they help complete the farthest and largest vertical plane in the original scans where it is occluded by objects in the scene. All 3D points lying outside this vertical plane are then considered as object points and are extracted, leaving behind holes. The centroids P of the resulting holes in the original scans are calculated using a bounding box around each hole (or extracted object) with respect to the scan centers, as shown in Figure 15. The displacement of these centroids with respect to the center of the scans in the successive scans provides us with the translation between these two scans:

where n is the total number of holes (due to extracted objects) in the scans, and and are the centroids of the hole in the scans and , respectively. The displacement along the z axis is denoted , where and are the distances of the farthest vertical plane, perpendicular to the sensor plane, detected in each scan, respectively.
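The translation estimate described here can be sketched as follows. Several assumptions are made that are not stated in the text: the holes in the two scans are already matched one-to-one and listed in the same order, the in-plane translation is the mean difference of the centroid vectors taken relative to each scan center, the displacement along z is the difference of the two plane distances, and the result is expressed as the shift that maps the second scan onto the first. All names are hypothetical.

```python
import numpy as np


def bbox_centroid(points):
    """Center of the axis-aligned bounding box around a hole's points."""
    pts = np.asarray(points, dtype=float)
    return (pts.min(axis=0) + pts.max(axis=0)) / 2.0


def estimate_translation(centroids_i, centroids_j, center_i, center_j, d_i, d_j):
    """Translation (tx, ty, tz) mapping scan j onto scan i.

    centroids_i, centroids_j: (n, 2) hole centroids in the scan plane,
                              matched row by row (assumption)
    center_i, center_j:       (2,) centers of the two scans
    d_i, d_j:                 distances of the farthest vertical plane
    """
    v_i = np.asarray(centroids_i, dtype=float) - np.asarray(center_i, dtype=float)
    v_j = np.asarray(centroids_j, dtype=float) - np.asarray(center_j, dtype=float)
    if v_i.ndim != 2 or v_i.shape != v_j.shape or v_i.shape[0] == 0:
        raise ValueError("need the same non-zero number of matched holes")
    t_xy = (v_i - v_j).mean(axis=0)  # average over the n holes
    t_z = d_i - d_j                  # change in distance to the far plane
    return np.array([t_xy[0], t_xy[1], t_z])
```

Averaging over all holes reduces the influence of a single poorly extracted object, but the estimate still depends on a correct hole-to-hole matching, which this sketch does not attempt.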

Figure 15.

(a,b) The two original successive scans with objects. (c,d) The two scans with holes after extraction of objects. (e,f) The bounding box around the different holes in both scans, along with their centroids and the vectors from the center of the scans and the centroids. The difference in these vectors (displacement of objects with respect to the scan center) provides us the estimates of and .

These rotations and translations are then used to transform the original scans, with the first scan taken as reference, using (8). The transformed scan and the reference scan are then aggregated (9) to form the new reference scan for the next scan.
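The transformation and aggregation steps can be sketched as a rigid transform followed by concatenation. The rotation order (about x, then y, then z) and the function names are assumptions, as the text does not specify them.

```python
import numpy as np


def rotation_matrix(rx, ry, rz):
    """Rotation matrix from angles (radians) about x, y and z, applied in that order."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    rot_y = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    rot_z = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return rot_z @ rot_y @ rot_x


def register_scan(reference, scan, rotation_xyz, translation):
    """Transform `scan` into the reference frame and merge it with `reference`.

    reference, scan: (N, 3) and (M, 3) point arrays
    rotation_xyz:    (rx, ry, rz) in radians
    translation:     (tx, ty, tz)
    Returns the transformed scan and the aggregated (N + M, 3) cloud.
    """
    rot = rotation_matrix(*rotation_xyz)
    pts = np.asarray(scan, dtype=float)
    transformed = pts @ rot.T + np.asarray(translation, dtype=float)
    aggregated = np.vstack((np.asarray(reference, dtype=float), transformed))
    return transformed, aggregated
```

The aggregated cloud returned by one call can be passed as `reference` to the next call, which mirrors the scan-by-scan accumulation described above.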

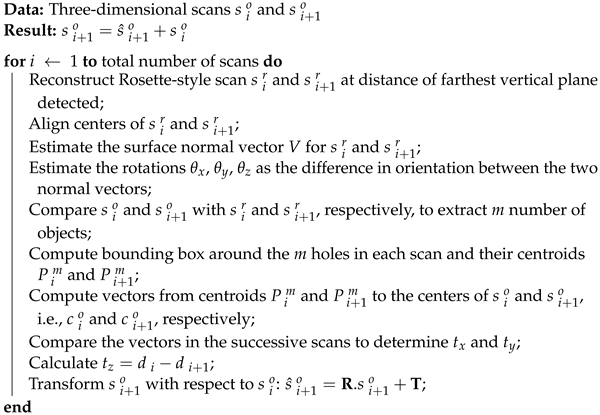

The method is summarized in Algorithm 1.

Algorithm 1.

Three-Dimensional Scan Registration.

5. Experimentation Setup

In order to evaluate the proposed method, the sensor was employed in both static and dynamic modes.

5.1. Static Mode

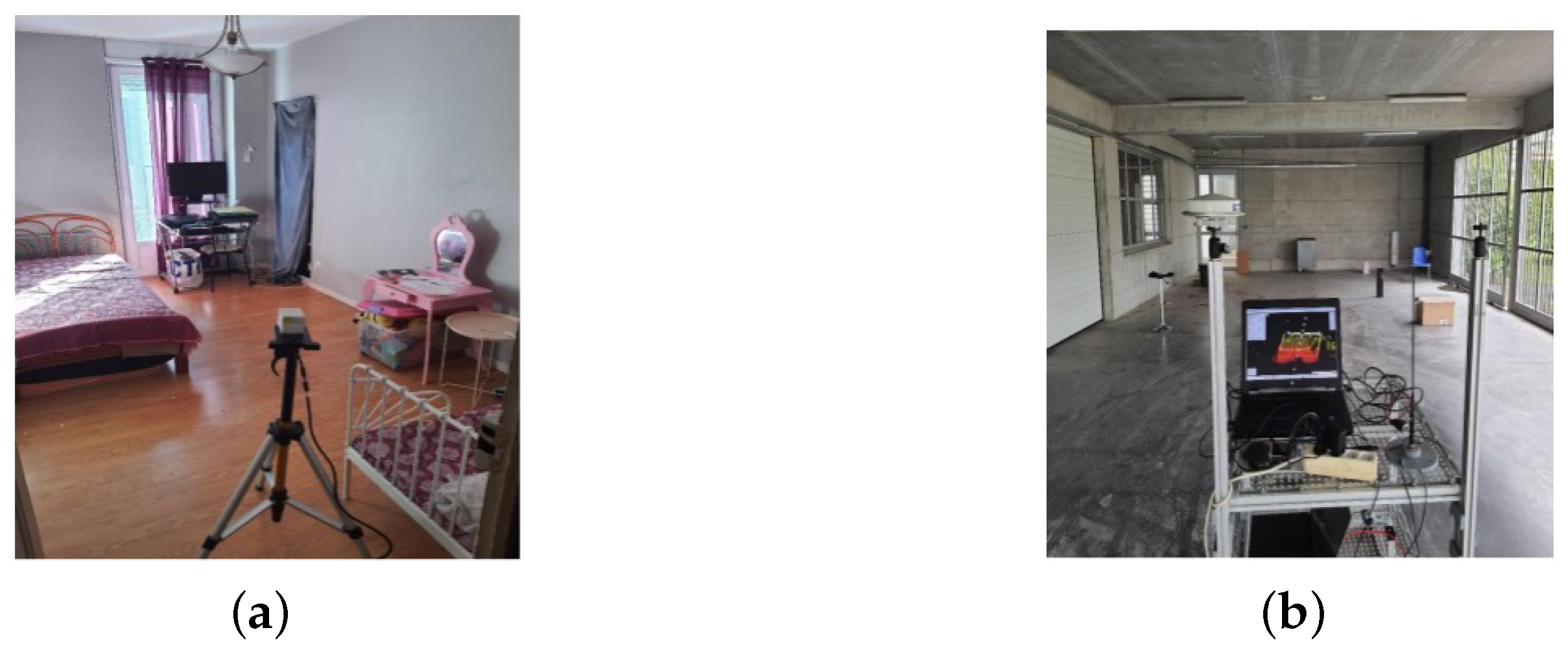

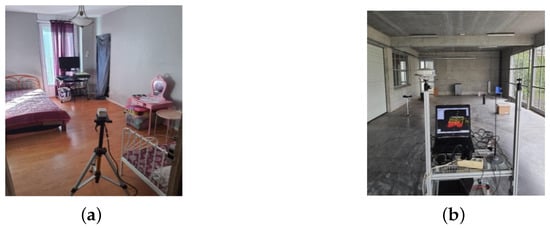

In this mode, the sensor was mounted on a tripod and moved around different positions to completely scan an indoor room equipped with furniture, as shown in Figure 16a. The scanning was performed at an integration time of 0.2 s. This experiment Exp_1 contained a total of 35 scans.

Figure 16.

(a,b) The experimental setup for experiments Exp_1 and Exp_2.

5.2. Dynamic Mode

In this mode, the LiDAR sensor was mounted on a small mobile robot moving on a predefined trajectory, at a speed of about scanning an enclosed hangar/parking lot, as shown in Figure 16b. The robot was also equipped with a 2D camera, an odometer and low-cost MEMS-based (Microelectromechanical systems) IMU (Inertial Measurement Unit). Scanning was performed at an integration time of 0.2 s. Exp_2 contains 357 scans.

In both experiments, loop closure was ensured to help in the evaluation process, while the ground truth was obtained via physical measurements of the different room dimensions. These dimensions were measured using Leica’s Disto D110 distance measurement unit, having an accuracy of ±. The average of multiple readings was taken, for each dimension, to minimize the effect of noise and further improve measurement accuracy. These dimensions include windows and door openings, the length and height of all the walls and the length and width of the floor. All LiDAR measurements were taken after (>24 min) of running to obtain more accurate results, as concluded in Section 3.3; as a precautionary measure, all measurements less than were discarded (Section 3.2).

6. Results and Discussion

Using the data obtained from the two experiments, the proposed method was evaluated and further compared with two state-of-the-art methods, Global Iterative Closest Point (G-ICP) [7] and Fast Point Feature Histograms (FPFH) [9], respectively. The unregistered 3D point clouds/scans obtained in Exp_1 and Exp_2 were registered independently using these three methods. The evaluation results are presented in the next sections.

6.1. Registration Accuracy

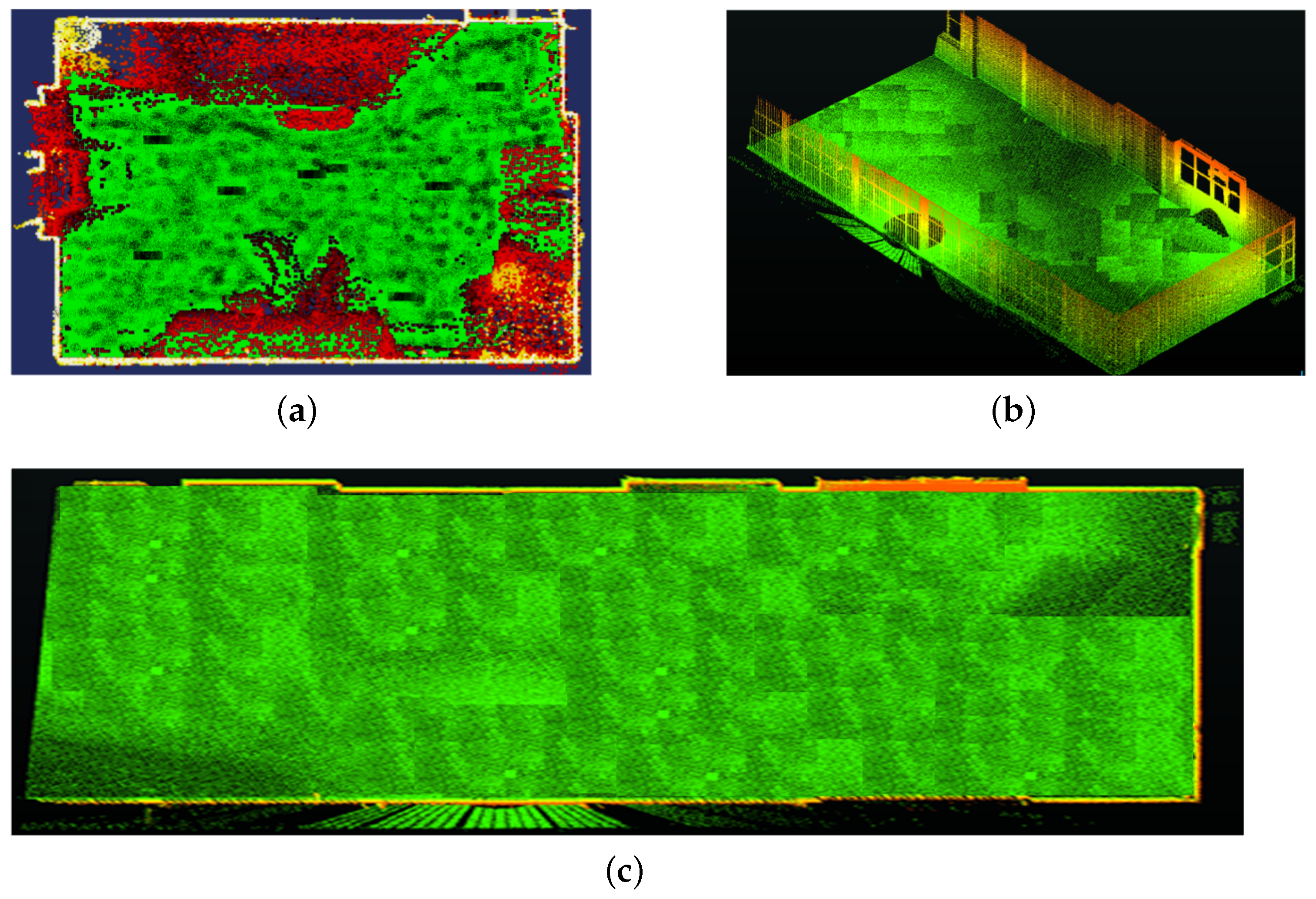

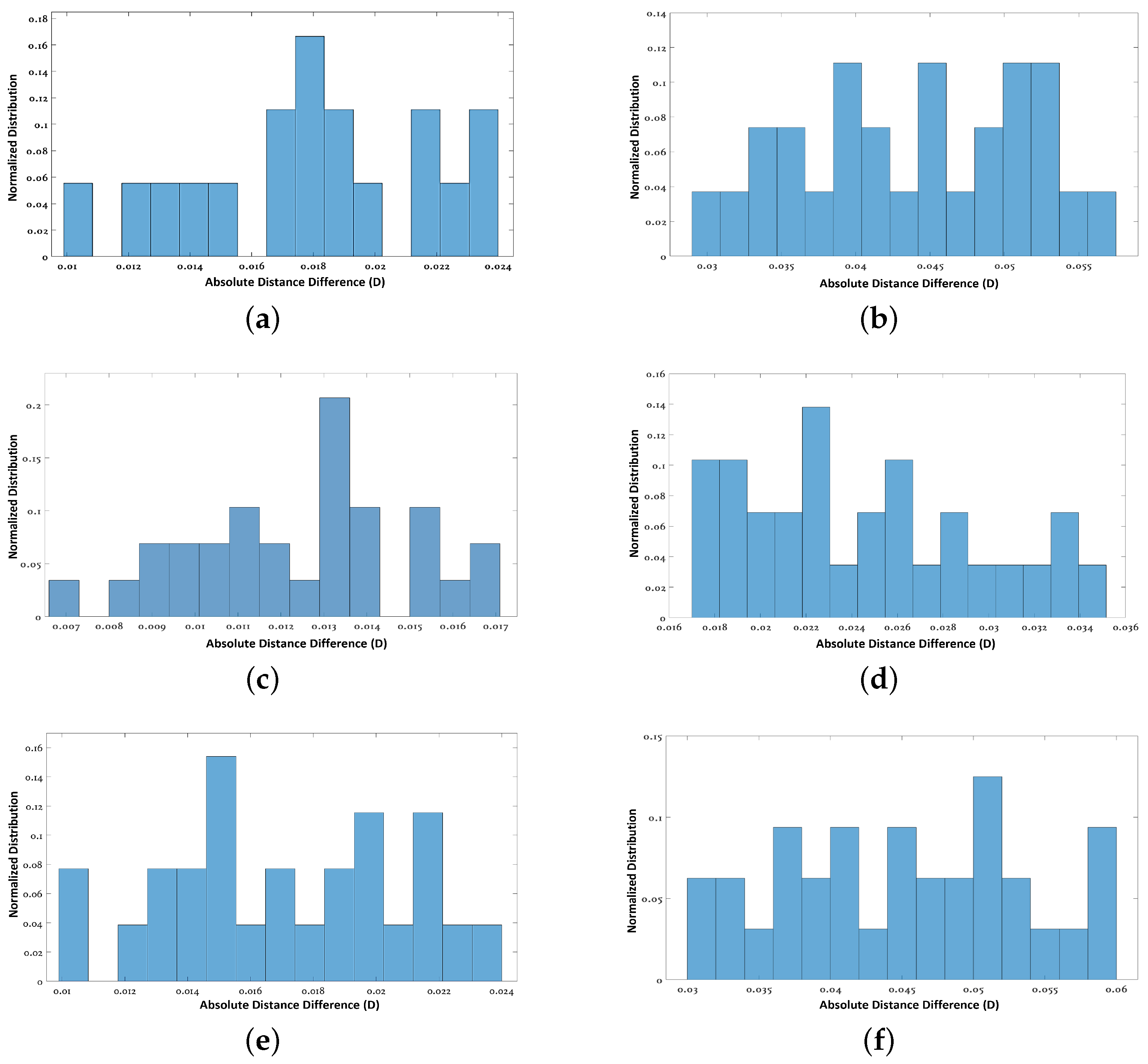

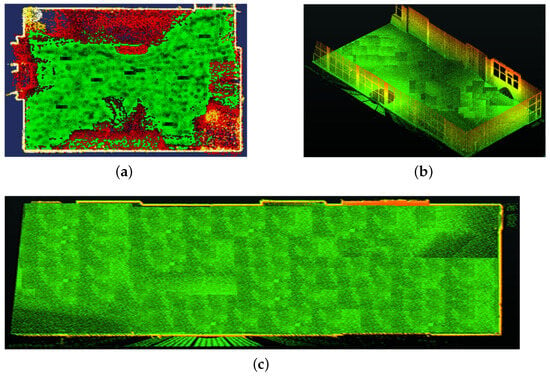

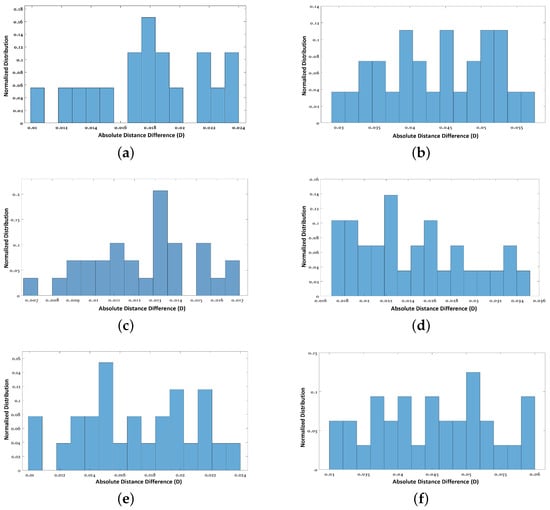

The registration results for both experiments are presented in Figure 17. A quantitative analysis is presented in Table 2 and Figure 18 for the two experiments and compared with the state-of-the-art methods [7,9]. The registration error in the table is calculated as the average absolute difference between the registered dimensions (width and height) of the window and door openings, walls and floor and the corresponding ground truth (similar to [37]), whereas Figure 18 presents the absolute distance difference distributions.
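The error metric described above reduces to a mean absolute difference over paired dimensions. A minimal sketch, assuming the dimensions measured in the registered point cloud and the ground-truth dimensions are supplied in the same order and unit; the function name is hypothetical.

```python
import numpy as np


def mean_abs_dimension_error(measured, reference):
    """Average absolute difference between measured and reference dimensions."""
    m = np.asarray(measured, dtype=float)
    r = np.asarray(reference, dtype=float)
    if m.shape != r.shape or m.size == 0:
        raise ValueError("measured and reference must be non-empty and paired")
    return float(np.mean(np.abs(m - r)))
```

Because absolute differences are averaged, over- and under-estimated dimensions do not cancel each other out.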

Figure 17.

(a–c) The registration results for the experiments Exp_1 and Exp_2 employing the proposed method.

Table 2.

Registration accuracy for experiments Exp_1 and Exp_2.

Figure 18.

(a,c,e) The absolute distance difference distributions for Exp_1 for the Proposed method, G-ICP [7] and FPFH [9], respectively. (b,d,f) The absolute distance difference distributions for Exp_2 for the three methods, respectively.

The results show that the proposed method is comparable to the other state-of-the-art methods in terms of accuracy.

The registration accuracy of the proposed method was better in Exp_1 and closer to that of G-ICP, as the room was small and required fewer scans. In the G-ICP algorithm, after successive scan matching, there is a bundle adjustment step at the end to improve the overall scan registration, so that the number of scans (or size of acquisition) has less impact on the overall results. In the proposed method, by contrast, the registration error tends to accumulate with a larger number of scans (acquisition size). However, this does not always impact the closed loop error, as seen in the results.

6.2. Processing Time

The proposed method along with the other state-of-the-art methods were run on the same laptop (Intel Core i9-10885H processor @2.40 GHz, memory 32 GB (Intel, Santa Clara, CA, USA)) under a Windows 10 operating system and Matlab environment. The results of the registration time (in seconds) are presented in Table 3.

Table 3.

Processing time.

From the results, it can be seen that there is no significant difference in the processing time at this lower scan resolution (number of points per scan).

As the results presented in Table 3 were inconclusive, Exp_1 was repeated at different scan resolutions by varying the data integration time, i.e., from (low resolution) to (high resolution). The results are presented in Table 4.

Table 4.

Processing time for different scan resolutions.

The results clearly show that the strength of the proposed method lies in the computational time as the number of points per scan increases. For methods like G-ICP, the processing time increases manifold with the increase in the number of points, as the number of correspondences between points increases. However, the processing time of the proposed method remains relatively unchanged.

6.3. Localization Error

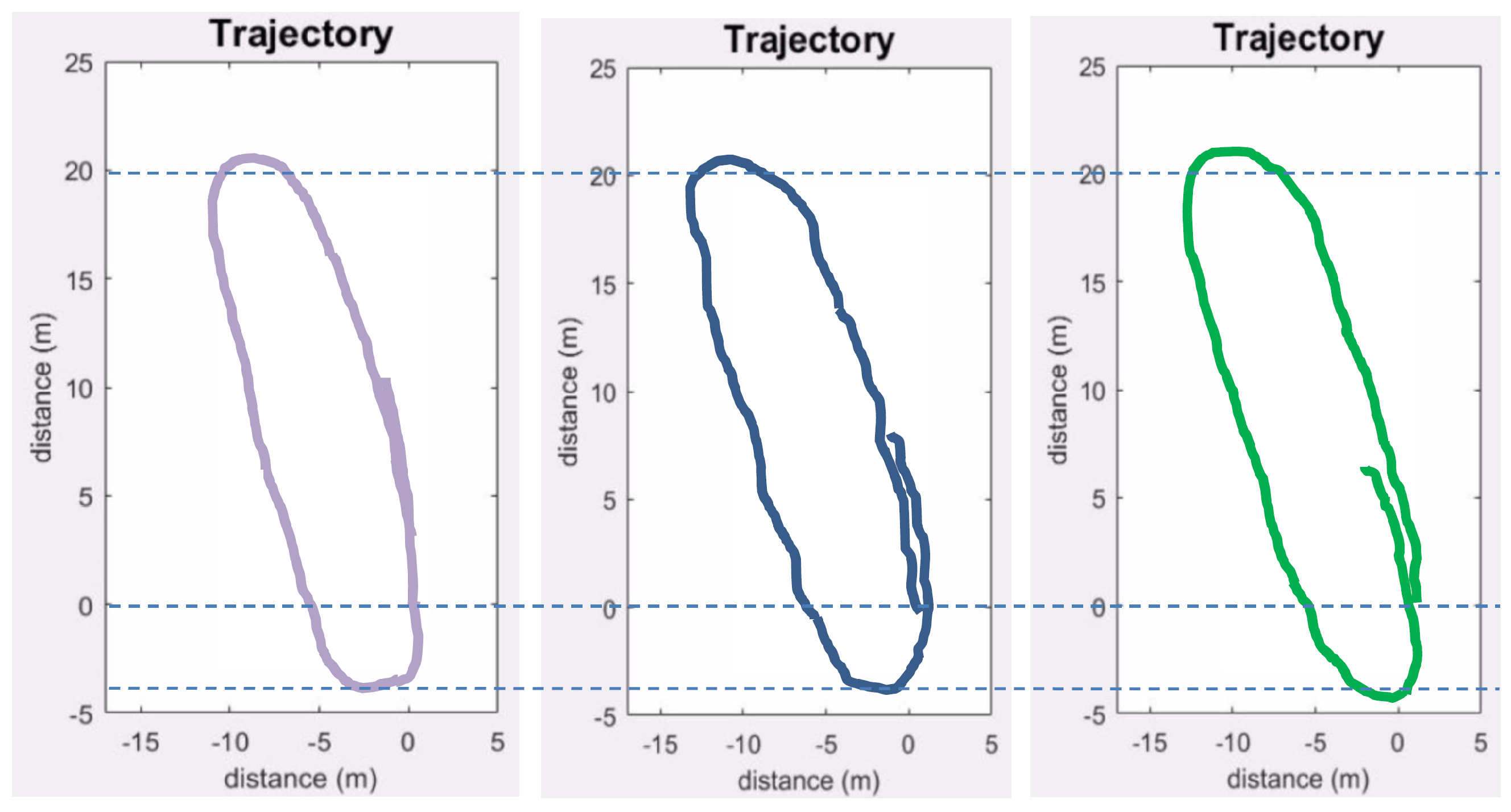

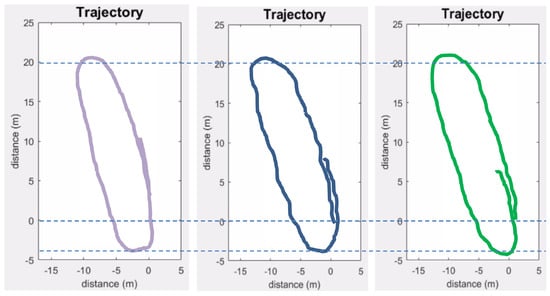

The localization error was computed by comparing the trajectory estimated by the proposed method with the predefined trajectory of the mobile robot in Exp_2 (ground truth). The first scan (at the start point) is considered as the origin or zero position. The method is further compared with the trajectory obtained using an odometer/MEMS IMU-based method [38]. These data were obtained from the odometer and a low-cost IMU mounted on the mobile robot used in Exp_2. The errors of the estimated parameters were calculated at regular intervals of the trajectory in terms of Root-Mean-Square Error (RMSE) values. The results are presented in Figure 19 and Table 5.
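The RMSE computation can be sketched as follows, assuming the estimated and ground-truth trajectories have been sampled at the same intervals and expressed in the same frame. The function name and the choice to return both per-axis and overall position errors are illustrative.

```python
import numpy as np


def trajectory_rmse(estimated, ground_truth):
    """Per-axis and overall position RMSE between two sampled trajectories.

    estimated, ground_truth: (N, D) arrays of positions at matching samples
    """
    est = np.asarray(estimated, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    if est.shape != gt.shape or est.ndim != 2 or est.shape[0] == 0:
        raise ValueError("trajectories must be non-empty and share one shape")
    err = est - gt
    per_axis = np.sqrt(np.mean(err ** 2, axis=0))
    position = float(np.sqrt(np.mean(np.sum(err ** 2, axis=1))))
    return per_axis, position
```

The per-axis values show along which direction the estimate deviates most, while the overall value summarizes the Euclidean position error.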

Figure 19.

Estimated trajectories (proposed method in blue and odometer/MEMS IMU-based in green) compared to the ground truth (in purple).

Table 5.

Estimation of localization errors in terms of RMSE values.

The results show that the trajectory estimated by the proposed method contains some abrupt changes, resulting in a jagged rather than smooth trajectory, as shown in Figure 19, and that both the proposed and the odometer/MEMS IMU-based methods suffer from drift.

The proposed method fares slightly better in terms of drift as it employs matching of subsequent scans that automatically corrects some of the errors; however, the overall error tends to accumulate in successive scans. This could be improved by adding some constraints like loop closing.

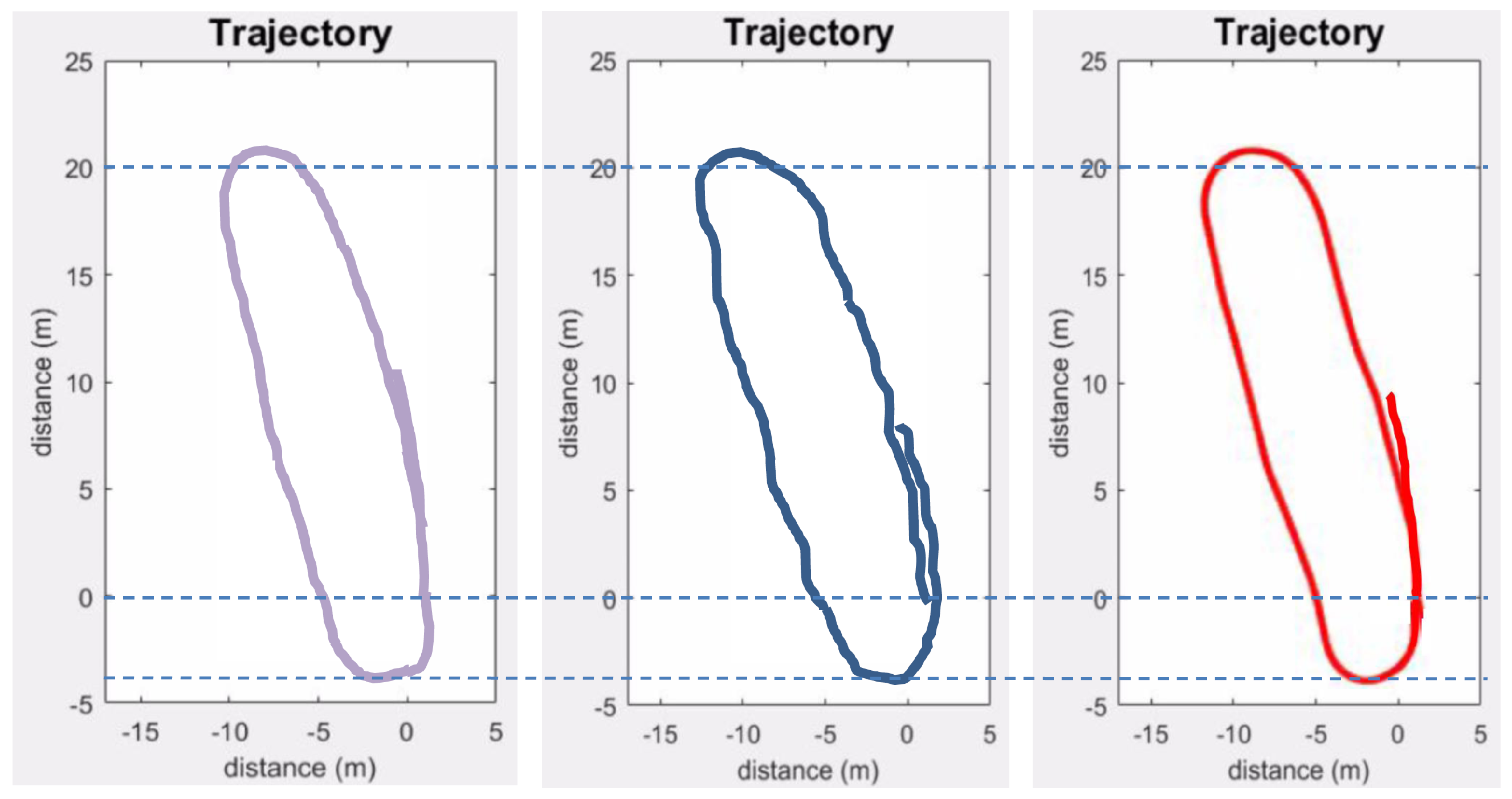

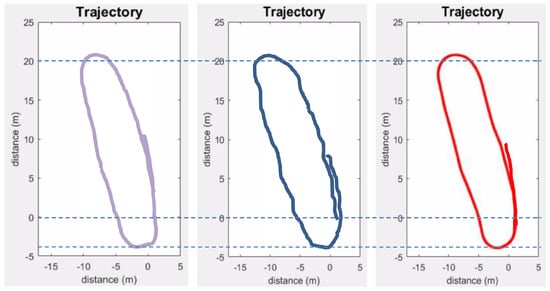

6.4. Improving Localization and Mapping Results

In order to improve the localization and mapping results, two different strategies were adopted. First, the sharp or abrupt changes in the trajectory were reduced by applying a curve fitting derivative filter (CFDT), similar to the one proposed in [39], to the successive localization positions. Second, as the proposed method suffers from a slight drift in error over larger distances and numbers of scans, its accuracy was improved by incorporating constraints such as loop closure. A hard loop closure was applied to the trajectory obtained from the proposed method. The other 3D scans were subsequently adjusted using the CFDT to obtain a more accurate and smoother trajectory. The improved localization results from Exp_2 are presented in Figure 20 and Table 6.
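The two strategies can be sketched as follows. This is only an illustrative approximation under stated assumptions: the loop closure below assumes that the trajectory ends where it started and distributes the closing error linearly over the scans, and the smoothing step is a generic sliding-window polynomial fit used as a stand-in for the CFDT, whose exact formulation is given in [39] and is not reproduced here. The function names, window size and polynomial degree are hypothetical.

```python
import numpy as np


def apply_hard_loop_closure(positions):
    """Force the last position onto the first one (assumed closed loop).

    The closing error is spread linearly along the trajectory, so early
    positions move little and late positions move most.
    """
    positions = np.asarray(positions, dtype=float)
    n = len(positions)
    if n < 2:
        return positions.copy()
    closing_error = positions[-1] - positions[0]
    weights = np.linspace(0.0, 1.0, n)[:, None]  # 0 at start, 1 at end
    return positions - weights * closing_error


def smooth_trajectory(positions, window=5, degree=2):
    """Sliding-window polynomial smoothing (stand-in for the CFDT).

    For each sample, a polynomial is fitted to the neighbouring samples of
    every coordinate and evaluated at the sample itself.
    """
    positions = np.asarray(positions, dtype=float)
    n, dims = positions.shape
    half = window // 2
    t = np.arange(n, dtype=float)
    smoothed = positions.copy()
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        if hi - lo <= degree:
            continue  # too few neighbours for a fit; keep the raw sample
        for axis in range(dims):
            coeffs = np.polyfit(t[lo:hi] - t[i], positions[lo:hi, axis], degree)
            smoothed[i, axis] = np.polyval(coeffs, 0.0)
    return smoothed


if __name__ == "__main__":
    # Hypothetical drifting square loop, for demonstration only.
    raw = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0], [0.2, 0.1]])
    closed = apply_hard_loop_closure(raw)
    refined = smooth_trajectory(closed, window=5, degree=2)
    print(closed)
    print(refined)
```

In this sketch the loop closure is applied first and the smoothing second, mirroring the order described above. Note that smoothing may move the end points slightly, so the closure constraint can be re-applied afterwards if an exactly closed trajectory is required.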

Figure 20.

Improved estimated trajectories (without improvement in blue and improved one in red) compared to the ground truth (in purple).

Table 6.

Estimation of localization errors in terms of RMSE values after improvements.

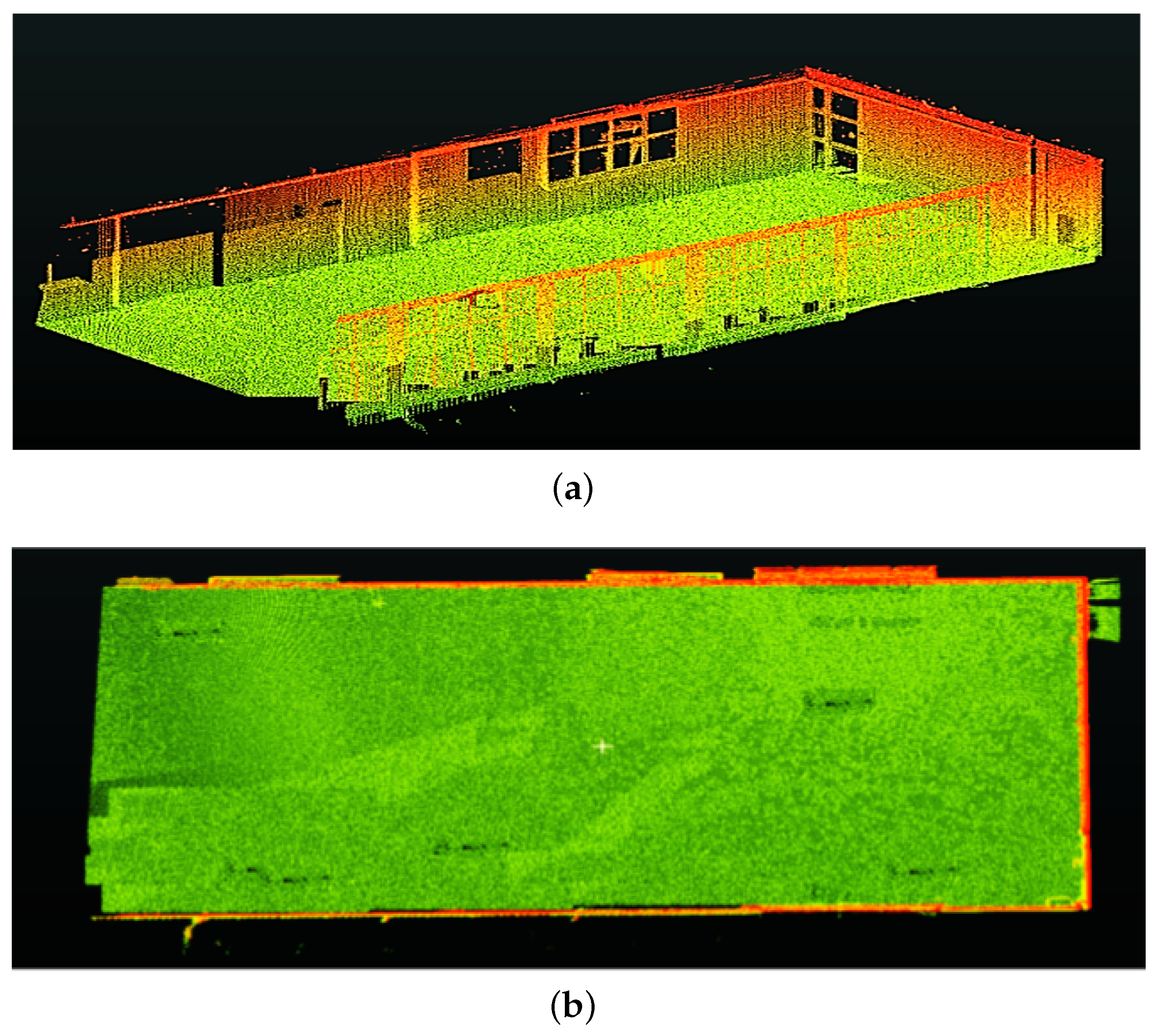

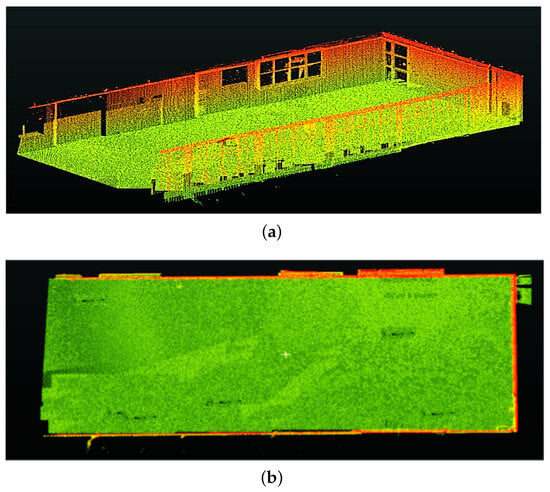

The improvement in the localization/trajectory estimation should also improve the overall registration/mapping accuracy. The registration accuracy (Section 6.1) for experiment Exp_2 was therefore recalculated; the improved results are presented in Table 7, and the resulting 3D map (registered point cloud) is shown in Figure 21.

Table 7.

Improvement in registration accuracy for Exp_2.

Figure 21.

(a,b) The registration results for Exp_2 after improvements in the proposed method.

7. Conclusions

In this paper, the feasibility of a Spirograph-type non-repetitive scanning pattern LiDAR sensor to achieve robust 3D point cloud registration for localization and mapping applications is presented. First, the comprehensive characterization results of this unique sensor are presented; then, building on these results, a new 3D point cloud registration method that exploits the unique scanning pattern of the sensor to register successive 3D scans is proposed. The results evaluated on real data show that the proposed method is comparable with other state-of-the-art methods in terms of accuracy but surpasses them in processing speed, as its processing time is shown to remain nearly invariant to an increase in the number of 3D points per scan. Analysis of the results revealed two problems: a slight drift in error over larger distances and numbers of scans, and abrupt changes in the estimated trajectory. Both problems were then successfully addressed by employing a CFDT filter to smooth the trajectory and adding loop closure as a constraint, improving the overall localization and registration accuracies by 32.6%.

For future work, more constraints along the trajectory, apart from loop closure, could be added to improve the overall accuracy, and the use of an ICP-like method could be explored to further refine the registration of the resulting point cloud at faster rates. In order to make the vehicle/robot navigation more precise and robust, integrating this type of sensor technology with methods like SLAM-SVSF could also be explored. It is also envisaged to further test the proposed method in more complex, cluttered and unstructured environments to improve upon it and expand the use of such a sensor to different field applications. The work presented in this paper represents a promising advancement toward more robust and accurate 3D point cloud registration, with implications for the continued evolution of technologies reliant on LiDAR-based environmental sensing.

Author Contributions

A.K.A. conceived, designed and performed the experiments; A.K.A. and P.C. analyzed the data; A.K.A. contributed materials/analysis tools; A.K.A. and P.C. wrote the paper; P.C. provided supervision, project administration and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the International Research Center “Innovation Transportation and Production Systems” of the I-SITE CAP 20–25. Financial support was also received from the Auvergne–Rhône–Alpes region through the Accrobot project (ACCostage haute précision ROBOTisé–Chantier Transitique du laboratoire Factolab) as part of the Pack Ambition Recherche 2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Besl, P.; McKay, N. A Method for Registration of 3-D Shapes. IEEE Trans. PAMI 1992, 14, 239–256.

2. Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. In Proceedings of the Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009; Volume 5, pp. 168–176.

3. Serafin, J.; Grisetti, G. NICP: Dense normal based point cloud registration. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–3 October 2015; Volume 2015, pp. 742–749.

4. Xie, Z.; Liang, P.; Tao, J.; Zeng, L.; Zhao, Z.; Cheng, X.; Zhang, J.; Zhang, C. An Improved Supervoxel Clustering Algorithm of 3D Point Clouds for the Localization of Industrial Robots. Electronics 2022, 11, 1612.

5. Tazir, M.L.; Gokhool, T.; Checchin, P.; Malaterre, L.; Trassoudaine, L. CICP: Cluster Iterative Closest Point for sparse-dense point cloud registration. Robot. Auton. Syst. 2018, 108, 66–86.

6. Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In Defense of Point-to-Point ICP—Simple, Accurate, and Robust Registration If Done the Right Way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

7. Aijazi, A.K.; Malaterre, L.; Trassoudaine, L.; Chateau, T.; Checchin, P. Automatic Detection and Modeling of Underground Pipes Using a Portable 3D LiDAR System. Sensors 2019, 19, 5345.

8. Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

9. Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 1–4.

10. Tombari, F.; Salti, S.; Di Stefano, L. Unique Signatures of Histograms for Local Surface Description. In Proceedings of the Computer Vision—ECCV, Heraklion, Greece, 5–11 September 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 356–369.

11. Ghorbani, F.; Ebadi, H.; Pfeifer, N.; Sedaghat, A. Uniform and Competency-Based 3D Keypoint Detection for Coarse Registration of Point Clouds with Homogeneous Structure. Remote Sens. 2022, 14, 4099.

12. Myronenko, A.; Song, X. Point Set Registration: Coherent Point Drift. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2262–2275.

13. Demim, F.; Benmansour, S.; Abdelkrim, N.; Rouigueb, A.; Hamerlain, M.; Bazoula, A. Simultaneous Localisation and Mapping for Autonomous Underwater Vehicle using a Combined Smooth Variable Structure Filter and Extended Kalman Filter. J. Exp. Theor. Artif. Intell. 2022, 34, 621–650.

14. Xue, G.; Wei, J.; Li, R.; Cheng, J. LeGO-LOAM-SC: An Improved Simultaneous Localization and Mapping Method Fusing LeGO-LOAM and Scan Context for Underground Coalmine. Sensors 2022, 22, 520.

15. Zhang, J.; Singh, S. LOAM: Lidar Odometry and Mapping in Real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014.

16. Wang, H.; Wang, C.; Chen, C.L.; Xie, L. F-LOAM: Fast LiDAR Odometry and Mapping. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4390–4396.

17. Behley, J.; Stachniss, C. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 2018, p. 59.

18. Chen, X.; Milioto, A.; Palazzolo, E.; Giguère, P.; Behley, J.; Stachniss, C. SuMa++: Efficient LiDAR-based Semantic SLAM. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 4530–4537.

19. Chen, X.; Li, S.; Mersch, B.; Wiesmann, L.; Gall, J.; Behley, J.; Stachniss, C. Moving Object Segmentation in 3D LiDAR Data: A Learning-Based Approach Exploiting Sequential Data. IEEE Robot. Autom. Lett. 2021, 6, 6529–6536.

20. Deschaud, J.E. IMLS-SLAM: Scan-to-Model Matching Based on 3D Data. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2480–2485.

21. Kolluri, R. Provably Good Moving Least Squares. ACM Trans. Algorithms 2008, 4, 1–25.

22. Vizzo, I.; Chen, X.; Chebrolu, N.; Behley, J.; Stachniss, C. Poisson Surface Reconstruction for LiDAR Odometry and Mapping. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xian, China, 30 May–5 June 2021; pp. 5624–5630.

23. Pais, G.D.; Ramalingam, S.; Govindu, V.M.; Nascimento, J.C.; Chellappa, R.; Miraldo, P. 3DRegNet: A Deep Neural Network for 3D Point Registration. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7191–7201.

24. Choy, C.; Dong, W.; Koltun, V. Deep Global Registration. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2511–2520.

25. Wang, Z.; Wang, X.; Fang, B.; Yu, K.; Ma, J. Vehicle detection based on point cloud intensity and distance clustering. J. Phys. Conf. Ser. 2021, 1748, 042053.

26. Wang, Y.; Bu, S.; Chen, L.; Dong, Y.; Li, K.; Cao, X.; Li, K. HybridFusion: LiDAR and Vision Cross-Source Point Cloud Fusion. arXiv 2023, arXiv:2304.04508.

27. He, Y.; Ma, L.; Jiang, Z.; Tang, Y.; Xing, G. VI-Eye: Semantic-Based 3D Point Cloud Registration for Infrastructure-Assisted Autonomous Driving. In Proceedings of the MobiCom’21: 27th Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 25–29 October 2021; pp. 573–586.

28. Li, H.; Zhao, H.; Ye, B.; Zhang, Y. 3D semantic map construction based on point cloud and image fusion. IET Cyber-Syst. Robot. 2023, 5, e12078.

29. Zou, Z.; Lang, H.; Lou, Y.; Lu, J. Plane-based global registration for pavement 3D reconstruction using hybrid solid-state LiDAR point cloud. Autom. Constr. 2023, 152, 104907.

30. LIVOX. Available online: https://www.livoxtech.com/mid-70 (accessed on 27 November 2023).

31. Brazeal, R.G.; Wilkinson, B.E.; Hochmair, H.H. A Rigorous Observation Model for the Risley Prism-Based Livox Mid-40 Lidar Sensor. Sensors 2021, 21, 4722.

32. Leica DISTO D110. Available online: https://shop.leica-geosystems.com/fr/measurement-tools/disto/leica-disto-d110 (accessed on 19 December 2023).

33. Cooper, M.A.; Raquet, J.F.; Patton, R. Range Information Characterization of the Hokuyo UST-20LX LIDAR Sensor. Photonics 2018, 5, 12.

34. Ghorpade, V.K.; Checchin, P.; Trassoudaine, L. Line-of-sight-based ToF camera’s range image filtering for precise 3D scene reconstruction. In Proceedings of the 2015 European Conference on Mobile Robots (ECMR), Lincoln, UK, 2–4 September 2015; pp. 1–6.

35. Fuchs, S.; May, S. Calibration and registration for precise surface reconstruction with Time-Of-Flight cameras. Int. J. Intell. Syst. Technol. Appl. 2008, 5, 274–284.

36. Klasing, K.; Althoff, D.; Wollherr, D.; Buss, M. Comparison of surface normal estimation methods for range sensing applications. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3206–3211.

37. Aijazi, A.K.; Checchin, P.; Trassoudaine, L. Automatic Removal of Imperfections and Change Detection for Accurate 3D Urban Cartography by Classification and Incremental Updating. Remote Sens. 2013, 5, 3701–3728.

38. Zhang, P.; Hancock, C.M.; Lau, L.; Roberts, G.W.; de Ligt, H. Low-cost IMU and odometer tightly coupled integration with Robust Kalman filter for underground 3-D pipeline mapping. Measurement 2019, 137, 454–463.

39. Nemec, D.; Hrubos, M.; Janota, A.; Pirnik, R.; Gregor, M. Estimation of the Speed From the Odometer Readings Using Optimized Curve-Fitting Filter. IEEE Sens. J. 2021, 21, 15687–15695.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).