Dynamic Virtual Fixture Generation Based on Intra-Operative 3D Image Feedback in Robot-Assisted Minimally Invasive Thoracic Surgery

Abstract

1. Introduction

1.1. Virtual Fixture

1.2. Port Incision in Robot-Assisted Minimally Invasive Thoracic Surgery

- The proposed method makes full use of the point clouds acquired intra-operatively and requires no additional processing, such as constructing implicit surface functions or estimating normal vectors;

- A novel force field function is proposed to generate virtual fixtures. A single, simple sigmoid function implements both forbidden virtual fixture (FVF) and guidance virtual fixture (GVF) generation; only the choice of parameters differs. Because the force fields are superposed, the algorithm requires neither an explicit definition of transition regions nor collision detection;

- A “view scope” of contact points is established so that only points within this scope exert guidance or forbidden forces on the operator; this excludes the influence of distant points and also reduces the computational burden of the algorithm;

- This paper proposes a framework and demonstrates its feasibility in a practical application: robot-assisted drilling and puncturing for port incisions in minimally invasive thoracic surgery. In minimally invasive surgery, drilling and puncturing tasks often require identification of the surgeon’s intent [23]. In contrast, the proposed VF generation method uses appropriately designed force fields, allowing the robot to guide the surgeon correctly without intent recognition.
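Taken together, the first three contributions describe a force computed by summing simple per-point fields. The minimal Python sketch below illustrates that idea under stated assumptions: each point's force magnitude follows a logistic (sigmoid) curve, oriented so that it is maximal at contact for repulsive (FVF) points and zero at the target for attractive (GVF) points, and the view scope is approximated as a sphere of radius `scope_radius` around the query point (here, the tool center point, TCP). The function names, curve shapes, and spherical scope are illustrative assumptions, not the authors' published equations.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def repulsive_magnitude(d: float, f_max: float, k: float) -> float:
    # ASSUMED logistic profile: f_max at d = 0, decaying smoothly toward 0.
    return 2.0 * f_max / (1.0 + math.exp(k * d))


def attractive_magnitude(d: float, f_max: float, k: float) -> float:
    # ASSUMED mirrored logistic profile: 0 at d = 0, saturating at f_max.
    return f_max * (2.0 / (1.0 + math.exp(-k * d)) - 1.0)


def superposed_force(tcp: Vec3,
                     repulsive_pts: Sequence[Vec3],
                     attractive_pts: Sequence[Vec3],
                     scope_radius: float,
                     rep_params: Tuple[float, float],
                     att_params: Tuple[float, float]) -> Vec3:
    """Sum point-wise forces acting on the TCP.

    Only points within scope_radius of the TCP (a simplified spherical
    "view scope") contribute. Because the per-point fields are simply
    added, there is no transition region and no collision check.
    """
    total = [0.0, 0.0, 0.0]
    for pts, is_repulsive in ((repulsive_pts, True), (attractive_pts, False)):
        for p in pts:
            diff = [tcp[i] - p[i] for i in range(3)]  # from point to TCP
            d = math.sqrt(sum(c * c for c in diff))
            if d > scope_radius or d < 1e-9:
                continue  # outside the view scope, or direction undefined
            unit = [c / d for c in diff]
            if is_repulsive:
                mag, sign = repulsive_magnitude(d, *rep_params), 1.0   # push away
            else:
                mag, sign = attractive_magnitude(d, *att_params), -1.0  # pull toward
            for i in range(3):
                total[i] += sign * mag * unit[i]
    return (total[0], total[1], total[2])


# Usage: one forbidden point 2 mm below the TCP, no guidance points.
force = superposed_force((0.0, 0.0, 2.0), [(0.0, 0.0, 0.0)], [],
                         scope_radius=50.0, rep_params=(40.0, 0.25),
                         att_params=(8.0, 0.17))
print(force)
```

With these illustrative values, the single forbidden point produces a force of roughly 30 N along +z, pushing the tool away from the surface. Adding more points only adds more terms to the sum, which is why the superposition removes the need for explicit transition regions.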

2. Proposed Framework

3. Dynamic Virtual Fixture Generation

3.1. Intra-Operative Point Cloud Acquisition

3.2. VF Generation Methods

3.2.1. FVF Generation

3.2.2. GVF Generation

3.2.3. View Scope of PDCP

4. Results and Discussion

4.1. Experimental Setup

4.2. FVF Evaluation

4.3. GVF Evaluation

4.4. Results of VF-Assisted Drilling and Puncturing Port Incision Tasks

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VF | Virtual fixture |
| VFF | Virtual force field |
| FVF | Forbidden virtual fixture |
| GVF | Guidance virtual fixture |
| VATS | Video-assisted thoracic surgery |
| TCP | Tool center point |
| PDCP | Pre-defined contact point |

References

- Selvaggio, M.; Cognetti, M.; Nikolaidis, S.; Ivaldi, S.; Siciliano, B. Autonomy in Physical Human-Robot Interaction: A Brief Survey. IEEE Robot. Autom. Lett. 2021, 6, 7989–7996.

- Li, H.Y. Stable and Compliant Motion of Physical Human–Robot Interaction Coupled with a Moving Environment Using Variable Admittance and Adaptive Control. IEEE Robot. Autom. Lett. 2018, 3, 2493–2500.

- Wang, Y.; Wang, W.; Cai, Y.; Zhao, Q.; Wang, Y.; Hu, Y.; Wang, S. A Guiding and Positioning Motion Strategy Based on a New Conical Virtual Fixture for Robot-Assisted Oral Surgery. Machines 2022, 11, 3.

- Rosenberg, L. Virtual Fixtures: Perceptual Tools for Telerobotic Manipulation. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Seattle, WA, USA, 18–22 September 1993; pp. 76–82.

- Kapoor, A.; Li, M.; Taylor, R.H. Spatial Motion Constraints for Robot Assisted Suturing Using Virtual Fixtures. In Proceedings of the 8th International Conference on Medical Image Computing and Computer-Assisted Intervention—MICCAI 2005, Palm Springs, CA, USA, 26–29 October 2005; Volume 3750, pp. 89–96.

- Srimathveeravalli, G.; Gourishankar, V.; Kesavadas, T. Comparative Study: Virtual Fixtures and Shared Control for Rehabilitation of Fine Motor Skills. In Proceedings of the World Haptics 2007: Second Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Tsukuba, Japan, 22–24 March 2007; pp. 304–309.

- Kwok, K.W.; Tsoi, K.H.; Vitiello, V.; Clark, J.; Chow, G.C.T.; Luk, W.; Yang, G.Z. Dimensionality Reduction in Controlling Articulated Snake Robot for Endoscopy Under Dynamic Active Constraints. IEEE Trans. Robot. 2013, 29, 15–31.

- Navkar, N.V.; Deng, Z.; Shah, D.J.; Bekris, K.E.; Tsekos, N.V. Visual and Force-Feedback Guidance for Robot-Assisted Interventions in the Beating Heart with Real-Time MRI. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 689–694.

- Seung, S.; Kang, B.; Kim, W.; Park, J.; Park, S. Development of Image Guided Master-Slave System for Minimal Invasive Brain Surgery. In Proceedings of the ISR 2010 (41st International Symposium on Robotics) and ROBOTIK 2010 (6th German Conference on Robotics), Munich, Germany, 7–9 June 2010; pp. 1–6.

- Marinho, M.M.; Adorno, B.V.; Harada, K.; Mitsuishi, M. Dynamic Active Constraints for Surgical Robots Using Vector-Field Inequalities. IEEE Trans. Robot. 2019, 35, 1166–1185.

- Marinho, M.M.; Ishida, H.; Harada, K.; Deie, K.; Mitsuishi, M. Virtual Fixture Assistance for Suturing in Robot-Aided Pediatric Endoscopic Surgery. IEEE Robot. Autom. Lett. 2020, 5, 524–531.

- Bowyer, S.A.; Davies, B.L.; Rodriguez y Baena, F. Active Constraints/Virtual Fixtures: A Survey. IEEE Trans. Robot. 2014, 30, 138–157.

- Bischof, B.; Kugi, A. Dynamic Virtual Fixtures Based on Path Following Control. IFAC-PapersOnLine 2019, 52, 424–429.

- Li, Z.; Gordon, A.; Looi, T.; Drake, J.; Forrest, C.; Taylor, R.H. Anatomical Mesh-Based Virtual Fixtures for Surgical Robots. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 3267–3273.

- Ren, J.; Patel, R.; McIsaac, K.; Guiraudon, G.; Peters, T. Dynamic 3-D Virtual Fixtures for Minimally Invasive Beating Heart Procedures. IEEE Trans. Med. Imaging 2008, 27, 1061–1070.

- Ni, D.; Nee, A.; Ong, S.; Li, H.; Zhu, C.; Song, A. Point Cloud Augmented Virtual Reality Environment with Haptic Constraints for Teleoperation. Trans. Inst. Meas. Control 2018, 40, 4091–4104.

- Ryden, F.; Chizeck, H.J. Forbidden-Region Virtual Fixtures from Streaming Point Clouds: Remotely Touching and Protecting a Beating Heart. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 3308–3313.

- Yamamoto, T.; Abolhassani, N.; Jung, S.; Okamura, A.M.; Judkins, T.N. Augmented Reality and Haptic Interfaces for Robot-Assisted Surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2012, 8, 45–56.

- Kastritsi, T.; Papageorgiou, D.; Sarantopoulos, I.; Stavridis, S.; Doulgeri, Z.; Rovithakis, G.A. Guaranteed Active Constraints Enforcement on Point Cloud-Approximated Regions for Surgical Applications. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8346–8352.

- Tanev, T.K.; Cammarata, A.; Marano, D.; Sinatra, R. Elastostatic Model of a New Hybrid Minimally-Invasive-Surgery Robot. In Proceedings of the 14th IFToMM World Congress, Taipei, Taiwan, 25–30 October 2015; pp. 449–458.

- Sihoe, A.D.L. The Evolution of Minimally Invasive Thoracic Surgery: Implications for the Practice of Uniportal Thoracoscopic Surgery. J. Thorac. Dis. 2014, 6, S604–S617.

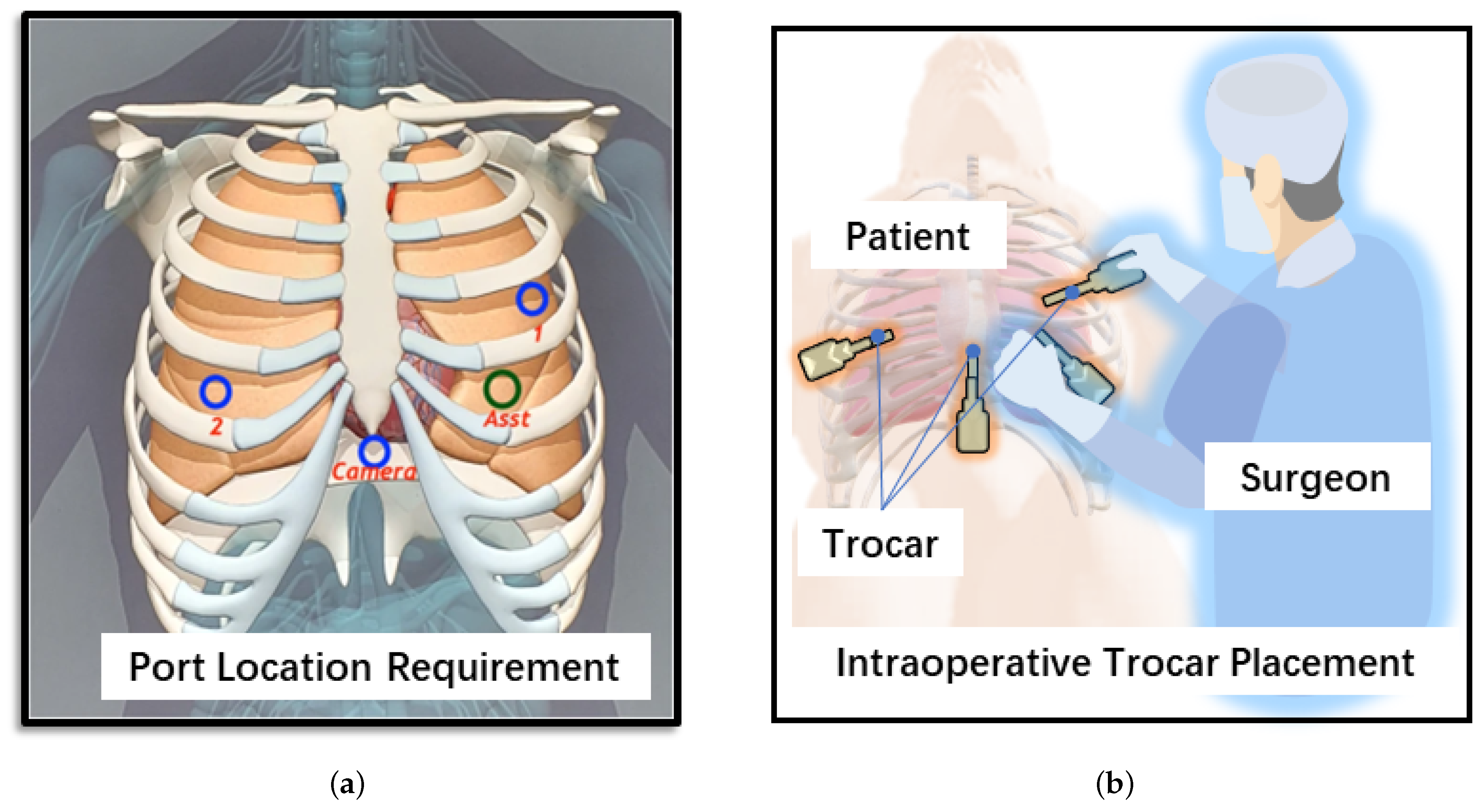

- Vinh, V.H.; Quang, N.V.D.; Thanh, D.D.M.; Phong, T.V.L. Robotic video-assisted thoracoscopic surgery using multiport triangular trocar configuration: Initial experience at a single center. J. Cardiothorac. Surg. 2021, 16, 77.

- Duan, X.; Tian, H.; Li, C.; Han, Z.; Cui, T.; Shi, Q.; Wen, H.; Wang, J. Virtual-Fixture Based Drilling Control for Robot-Assisted Craniotomy: Learning from Demonstration. IEEE Robot. Autom. Lett. 2021, 6, 2327–2334.

- Huang, K.; Chitrakar, D.; Rydén, F.; Chizeck, H.J. Evaluation of Haptic Guidance Virtual Fixtures and 3D Visualization Methods in Telemanipulation—A User Study. Intell. Serv. Robot. 2019, 12, 289–301.

| VF representation | Dependency on Pre-Operative Information | Convenience of Dynamic Updates during Surgery | Consistency with Irregular Body Surfaces in Surgery | Ease of VF Stiffness Transformation |
|---|---|---|---|---|
| Geometric primitives | High | Inconvenient | Low | Difficult |
| Anatomical structures | High | Inconvenient | High | Human Intent Recognition Required, Moderately Challenging |
| Implicit functions | High | Good | High | Human Intent Recognition Required, Moderately Challenging |
| Point clouds | Low | Convenient | Good | Virtual Force Fields Can Be Utilized, Relatively Easy |

| Symbol | Parameter | Value |
|---|---|---|
| | Maximum value of the force generated by a single spherical repulsive force field | 40 N |
| | Maximum value of the force generated by a single spherical attractive force field | 8 N |
| | Parameter in sigmoid function for repulsive force field | 0.25 |
| | Parameter in sigmoid function for attractive force field | 0.17 |
| | Radius of the small shell around an attractive point | 0.9 mm |
| | Basic radius of the cavity | 50 mm |
| | Scale factor describing the relationship between cavity radius and PDCP velocity | 0.5 |
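The last two rows of the parameter table suggest that the cavity (view scope) around the PDCP grows with PDCP velocity. The Python sketch below assumes a linear relation, radius = basic radius + scale factor * PDCP speed, with speed taken in mm/s; that relation, the unit, and the hypothetical `VFParams` container (whose field names are invented here merely to hold the tabulated values) are all assumptions. It then keeps only the point-cloud points that fall inside the resulting sphere.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class VFParams:
    """Hypothetical container for the tabulated values (lengths in mm, forces in N)."""
    f_max_rep: float = 40.0    # max force of a single spherical repulsive field
    f_max_att: float = 8.0     # max force of a single spherical attractive field
    k_rep: float = 0.25        # sigmoid parameter, repulsive field
    k_att: float = 0.17        # sigmoid parameter, attractive field
    shell_radius: float = 0.9  # small shell around an attractive point (unused here)
    cavity_r0: float = 50.0    # basic radius of the cavity
    cavity_scale: float = 0.5  # cavity radius vs. PDCP velocity


def cavity_radius(pdcp_velocity: Vec3, params: VFParams) -> float:
    # ASSUMPTION: the radius grows linearly with PDCP speed.
    speed = math.sqrt(sum(v * v for v in pdcp_velocity))
    return params.cavity_r0 + params.cavity_scale * speed


def points_in_scope(cloud: Sequence[Vec3], pdcp: Vec3,
                    pdcp_velocity: Vec3, params: VFParams) -> List[Vec3]:
    # Keep only points inside the velocity-dependent spherical cavity.
    r = cavity_radius(pdcp_velocity, params)
    return [q for q in cloud if math.dist(q, pdcp) <= r]


params = VFParams()
cloud = [(0.0, 0.0, 40.0), (0.0, 0.0, 60.0)]
at_rest = points_in_scope(cloud, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), params)
moving = points_in_scope(cloud, (0.0, 0.0, 0.0), (0.0, 0.0, 40.0), params)
```

Under this assumption a stationary PDCP sees only the point 40 mm away (radius 50 mm), while a PDCP moving at 40 mm/s sees both points (radius 70 mm): a faster tool "looks" farther ahead, and points outside the cavity never enter the force computation.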

| Condition | Volunteer | Port 1 (mm) | Port 2 (mm) | Port 3 (mm) | Port 4 (mm) | Mean (mm) | Completion Time (s) |
|---|---|---|---|---|---|---|---|
| VF assistance | Volunteer 1 | 0.42 | 0.61 | 0.56 | 0.81 | 0.60 | 8.42 |
| | Volunteer 2 | 0.28 | 0.50 | 0.46 | 0.89 | 0.53 | 7.51 |
| | Volunteer 3 | 0.58 | 0.44 | 0.35 | 0.98 | 0.59 | 8.10 |
| | Volunteer 4 | 0.12 | 0.58 | 0.44 | 0.85 | 0.50 | 8.03 |
| | Volunteer 5 | 0.16 | 0.76 | 0.32 | 0.40 | 0.41 | 7.58 |
| | Volunteer 6 | 0.22 | 0.33 | 0.42 | 0.55 | 0.38 | 7.92 |
| | Mean (mm or s) | 0.30 | 0.54 | 0.43 | 0.75 | 0.50 | 7.93 |
| Free motion | Volunteer 1 | 1.12 | 1.33 | 1.40 | 0.64 | 1.12 | 15.25 |
| | Volunteer 2 | 1.18 | 1.27 | 2.21 | 1.89 | 1.64 | 15.08 |
| | Volunteer 3 | 0.92 | 0.84 | 0.87 | 1.57 | 1.05 | 12.57 |
| | Volunteer 4 | 1.46 | 1.81 | 1.63 | 0.88 | 1.45 | 10.81 |
| | Volunteer 5 | 1.96 | 1.73 | 0.52 | 0.74 | 1.24 | 13.73 |
| | Volunteer 6 | 1.82 | 1.69 | 1.66 | 0.74 | 1.48 | 16.16 |
| | Mean (mm or s) | 1.41 | 1.45 | 1.38 | 1.08 | 1.33 | 13.93 |
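The summary rows of the volunteer table can be cross-checked from the per-volunteer values. The short Python script below uses only numbers transcribed from that table (the variable names are illustrative) to recompute the group means and the relative reduction of the VF-assisted condition with respect to free motion; it comes to roughly 62% for the mean port value and roughly 43% for completion time.

```python
from statistics import mean

# (Port 1..4 values in mm, completion time in s), transcribed from the table.
vf_assist = [
    ([0.42, 0.61, 0.56, 0.81], 8.42),
    ([0.28, 0.50, 0.46, 0.89], 7.51),
    ([0.58, 0.44, 0.35, 0.98], 8.10),
    ([0.12, 0.58, 0.44, 0.85], 8.03),
    ([0.16, 0.76, 0.32, 0.40], 7.58),
    ([0.22, 0.33, 0.42, 0.55], 7.92),
]
free_motion = [
    ([1.12, 1.33, 1.40, 0.64], 15.25),
    ([1.18, 1.27, 2.21, 1.89], 15.08),
    ([0.92, 0.84, 0.87, 1.57], 12.57),
    ([1.46, 1.81, 1.63, 0.88], 10.81),
    ([1.96, 1.73, 0.52, 0.74], 13.73),
    ([1.82, 1.69, 1.66, 0.74], 16.16),
]


def summarize(group):
    """Return (per-port means in mm, overall mean in mm, mean time in s)."""
    per_port = [mean(ports[i] for ports, _ in group) for i in range(4)]
    overall = mean(mean(ports) for ports, _ in group)
    mean_time = mean(t for _, t in group)
    return per_port, overall, mean_time


vf_ports, vf_err, vf_time = summarize(vf_assist)
free_ports, free_err, free_time = summarize(free_motion)
err_reduction = 1.0 - vf_err / free_err
time_reduction = 1.0 - vf_time / free_time
print(f"mean: {vf_err:.2f} vs {free_err:.2f} mm ({err_reduction:.0%} lower)")
print(f"time: {vf_time:.2f} vs {free_time:.2f} s ({time_reduction:.0%} shorter)")
```

Recomputed from the raw entries, every tabulated mean agrees with the printed value to within rounding, so the summary rows are internally consistent.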

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, Y.; Zhu, P.; Wang, T.; Mai, H.; Yeh, X.; Yang, L.; Wang, J. Dynamic Virtual Fixture Generation Based on Intra-Operative 3D Image Feedback in Robot-Assisted Minimally Invasive Thoracic Surgery. Sensors 2024, 24, 492. https://doi.org/10.3390/s24020492

Shi Y, Zhu P, Wang T, Mai H, Yeh X, Yang L, Wang J. Dynamic Virtual Fixture Generation Based on Intra-Operative 3D Image Feedback in Robot-Assisted Minimally Invasive Thoracic Surgery. Sensors. 2024; 24(2):492. https://doi.org/10.3390/s24020492

Chicago/Turabian Style: Shi, Yunze, Peizhang Zhu, Tengyue Wang, Haonan Mai, Xiyang Yeh, Liangjing Yang, and Jingfan Wang. 2024. "Dynamic Virtual Fixture Generation Based on Intra-Operative 3D Image Feedback in Robot-Assisted Minimally Invasive Thoracic Surgery" Sensors 24, no. 2: 492. https://doi.org/10.3390/s24020492