1. Introduction

According to a report from the World Health Organization, approximately 28–35% of older adults fall each year, leading to serious injury or death [1]. Therefore, intelligently detecting falls in indoor conditions can reduce the risk of the elderly injuring themselves.

Various technologies have been adopted to detect falls. Many existing fall detection methods require wearable sensors [2]. Accelerometers have been widely used in wearable methods, and a velocity threshold can be set to detect fall events [3,4]; however, wearable devices may be forgotten or left unworn because they are inconvenient. Vision-based methods eliminate the need to wear a device, but they are costly, sensitive to lighting conditions, and invade privacy [5,6]. Recently, radar sensors have become more popular in fall detection systems owing to their advantages over other sensing technologies: (a) convenience over wearable technologies [7]; (b) high sensitivity to motion compared with depth sensors in complex living environments and weak lighting conditions; (c) privacy compliance over vision sensors [8]; and (d) low hardware cost compared with other sensors [9]. Typical radars for human fall detection are continuous wave (CW) radars and frequency-modulated continuous wave (FMCW) radars. In CW radar-based methods, the radar signal was converted into the time–frequency domain, and handcrafted features were extracted to detect a falling person [10,11]. Doppler-time signatures recorded in the CW signals were used to train a machine learning algorithm [12]. However, CW radar can only provide velocity information. Owing to this limited information, actions with similar postures, such as sitting and squatting, may be misclassified. A better choice is an FMCW radar, which can simultaneously provide the range, Doppler, and angle information of the targets and also offers high sensitivity to motion [13].

Traditionally, researchers have explored several methods based on FMCW radars operating at frequencies from 57 to 85 GHz [14]. Doppler information describes the velocity attribute of a motion; thus, the range-Doppler map has been widely used in the FMCW radar-based fall detection methods proposed in the literature [15,16,17,18]. The Doppler-time map, which includes time information, was directly used as a feature to detect fall events [18]. Many studies on FMCW radar-based fall detection rely on the time–frequency characteristics of the FMCW radar return signal, including the frequency magnitude, frequency ratio, and the duration of the motion [19]. However, similar to CW radar-based methods, these methods cannot provide spatial information, and similar motions may be confused. Micro-Doppler and spatial information have been used to achieve high accuracy, showing that deep learning methods are superior to traditional handcrafted feature extraction methods [20]. An FMCW radio has been used to obtain the 3D position information of the human body and heatmaps in both horizontal and vertical directions [17]. However, a problem remains in combining 3D location information: achieving high angular resolution requires radars with large antenna arrays.

To utilize 3D spatial information, recent innovations in human activity detection have explored point clouds from radar [21,22,23], in which each point contains a 3D position in space. However, in contrast to LiDAR and camera sensors, there are two main challenges in these studies: (1) the point clouds generated by mmWave radar are usually sparse and of low resolution, and (2) the point clouds include many ghost points caused by the multipath effect. As a result, the classification accuracy and reliability may be negatively affected. To address these challenges, several methods have been designed for use in conjunction with high-resolution radars. Antenna arrays with 12 transmitters and 16 receivers (12Tx-16Rx) have been used to generate high-quality point clouds [22]. Hawkeye generated 2D depth images using radar intensity maps obtained from SAR scans [23]. However, although large antenna arrays and SAR technologies can improve the resolution, they are still very slow and may not be practical in many applications that require a short response time and low-cost hardware. In addition, sparsity-related methods and deep learning-based methods have been used to enhance point cloud quality [24]. Some sparsity-related methods, such as K-means [25] and the density-based spatial clustering of applications with noise (DBSCAN) algorithm [26], first cluster the points and then remove outliers from the point clouds. However, these techniques cannot remove enough outlier points. In recent studies, a few deep learning-based methods have been developed based on PointNet [27]. After learning a mapping from the noisy input, they can automatically generate a set of clean points. Inspired by PointNet, PCN combined a permutation-invariant, non-convolutional feature extractor to complete a point cloud from a partial input, and then used a refinement block to denoise the prediction and produce the final point cloud [28]. GPDNet denoises point clouds using graph-convolutional layers [29]. However, most of these methods were developed for LiDAR or other sensors and extract pointwise features. Hence, they may not be efficient for radar-based point clouds because of their very low resolution.
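The clustering-based outlier removal mentioned above can be illustrated with a minimal sketch. It is not the full DBSCAN algorithm and not the method of the cited works: it only applies a neighbor-count test in the spirit of DBSCAN's core-point criterion. The function name `remove_sparse_points`, the `radius` and `min_neighbors` values, and the example frame are hypothetical.

```python
import math


def remove_sparse_points(points, radius=0.3, min_neighbors=3):
    """Keep points with at least `min_neighbors` other points within `radius` (m)."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1
            for j, q in enumerate(points)
            if j != i and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


# Hypothetical frame: a tight cluster plus two isolated ghost points.
cluster = [(0.05 * k, 2.0, 1.0 + 0.05 * k) for k in range(6)]
ghosts = [(3.0, 5.0, 0.2), (-2.5, 4.0, 2.5)]
filtered = remove_sparse_points(cluster + ghosts)
```

A filter of this kind discards isolated points, but ghost points that form their own dense group would pass the test, which is consistent with the limitation noted above.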

In this study, we propose an FMCW radar-based fall detection method that investigates 3D point clouds while operating at 24 GHz. These systems have not been studied well owing to hardware limitations. First, we obtained raw point clouds from the radar. We then designed a new model to transform the raw points into high-quality point clouds that are closer to the ground truth. Next, we estimated the distribution parameters in the point clouds for classification.

The main contributions of this paper are as follows:

- (1)

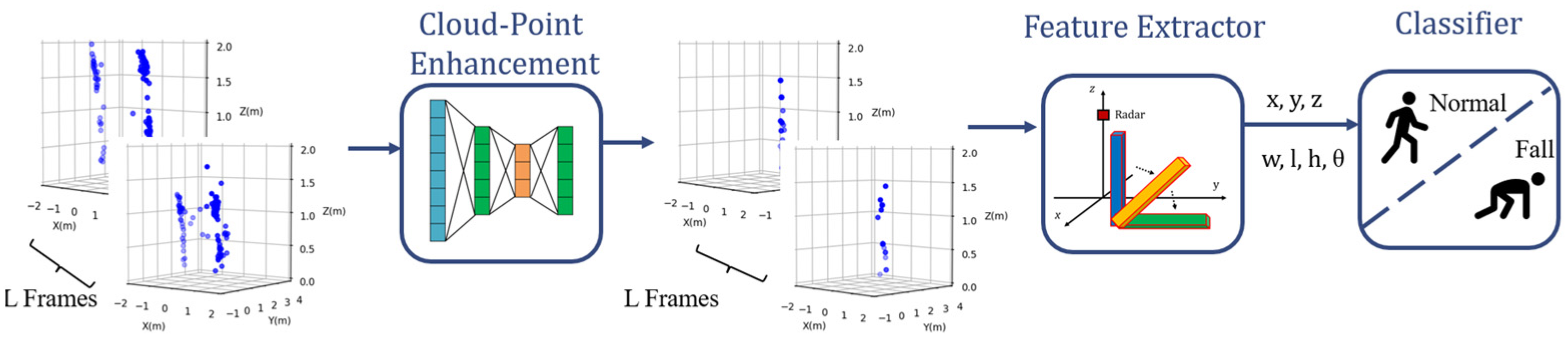

We propose an efficient fall detection system that uses a small, low-cost radar. As shown in

Figure 1, the novel framework is primarily composed of three parts: point cloud enhancement (PCE) for point cloud quality improvement, a feature extractor for human pose parameter extraction, and a classifier for classifying normal events and fall events;

- (2)

A PCE model is introduced to transform low-quality point clouds into high-quality point clouds and to reconstruct the shape of the point clouds using the shape of human body. A novel 3D point-box hybrid regression loss function based on pointwise and 3D bounding box features is proposed as a substitute for the traditional loss function;

- (3)

Our system works on sparse and noisy raw radar data without using expensive hardware or synthetic aperture radar (SAR) scans.

The remainder of this article is organized as follows.

Section 2 provides an overview of the radar system and signal processing flow. In

Section 3, the details of the proposed method are presented. The results are discussed in

Section 4. Finally,

Section 5 concludes this study.

Figure 1.

Proposed fall detection system based on PCE model.


2. Radar System Design

An FMCW radar is often used to estimate the range and velocity of a target from its radio frequency signals. Each transmitted signal $s_T(t)$ is a chirp signal, the analytical expression [30] of which is

$$s_T(t) = \exp\left[ j 2\pi \left( f_c t + \tfrac{1}{2} K t^2 \right) \right],$$

where $f_c$ denotes the carrier frequency, and $K$ is the chirp constant.

Referring to the Boulic model [31], the echo from the $i$th human body part is a time-delayed version of the transmitted signal, and the received baseband signal $s_i(t)$ is determined by the round-trip delay $2R_i/c$, where $R_i$ is the distance between the $i$th ellipsoidal center and the radar, and $c$ is the speed of light. The echo from the entire human body can be expressed as

$$s_R(t) = \sum_{i=1}^{M} a_i \, s_i(t),$$

where $a_i$ is the attenuation coefficient, which is governed by the radar cross section for different body regions, and $M$ is the number of scattering points of human body parts.
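For reference, the baseband signal of a single body part can be written out under the standard dechirped FMCW model. This is a textbook form, not a reproduction of the article's own equation. It assumes a unit-amplitude chirp $s_T(t) = \exp[j2\pi(f_c t + \tfrac{1}{2}Kt^2)]$ with carrier frequency $f_c$ and chirp constant $K$, and mixing of the transmitted signal with the conjugate of its delayed copy. The symbols $\tau_i$ and $f_{b,i}$ are notation introduced here for illustration.

```latex
\begin{align}
s_i(t) &= s_T(t)\, s_T^{*}(t - \tau_i), \qquad \tau_i = \frac{2 R_i}{c}, \\
       &= \exp\!\left[ j 2\pi \left( f_c \tau_i + K \tau_i t
          - \tfrac{1}{2} K \tau_i^{2} \right) \right], \\
f_{b,i} &= K \tau_i = \frac{2 K R_i}{c}.
\end{align}
```

Under these assumptions, each body part contributes a tone whose beat frequency $f_{b,i}$ is proportional to its distance $R_i$, which is why a Fourier transform along fast time yields a range profile.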

As shown in Figure 2, the raw data from each channel formed a 3D data cube. FMCW radar signal processing started with the sampled echoes, which were transformed into the range-Doppler matrix [32]. First, the range fast Fourier transform (FFT) estimated the range of the targets, and a second FFT determined the Doppler frequency. The moving target indicator distinguished the targets from the background. For more reliable detection, a constant false alarm rate (CFAR) detector was used to detect the targets against the background noise. A direction-of-arrival (DOA) estimation algorithm was used to estimate the angle of the target.
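Two steps of this chain, range estimation and CFAR detection, can be sketched for a single chirp as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the sweep bandwidth (250 MHz), chirp duration, sample count, noise level, and CFAR settings are hypothetical, a naive DFT stands in for the range FFT so that only the standard library is needed, and the Doppler FFT, moving target indicator, and DOA stages are omitted.

```python
import cmath
import math
import random

C = 3.0e8              # speed of light (m/s)
BANDWIDTH = 250e6      # assumed sweep bandwidth (Hz)
CHIRP_TIME = 1e-3      # assumed chirp duration (s)
N_SAMPLES = 64         # assumed ADC samples per chirp
SLOPE = BANDWIDTH / CHIRP_TIME   # chirp constant (Hz/s)


def beat_signal(target_range, noise_sigma=0.1, seed=0):
    """Sampled beat signal of one point target plus complex Gaussian noise."""
    rng = random.Random(seed)
    f_beat = SLOPE * 2.0 * target_range / C      # beat frequency (Hz)
    ts = CHIRP_TIME / N_SAMPLES                  # sampling interval (s)
    return [
        cmath.exp(2j * math.pi * f_beat * n * ts)
        + complex(rng.gauss(0.0, noise_sigma), rng.gauss(0.0, noise_sigma))
        for n in range(N_SAMPLES)
    ]


def range_power_spectrum(samples):
    """Squared magnitude of a naive O(N^2) DFT (stand-in for the range FFT)."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * m / n)
                for m, x in enumerate(samples))) ** 2
        for k in range(n)
    ]


def ca_cfar(power, guard=2, train=8, scale=10.0):
    """Cell-averaging CFAR: a cell is a detection if it exceeds
    `scale` times the mean of the training cells around it."""
    hits = []
    for k in range(len(power)):
        cells = [
            power[j]
            for j in range(k - guard - train, k + guard + train + 1)
            if 0 <= j < len(power) and abs(j - k) > guard
        ]
        if power[k] > scale * sum(cells) / len(cells):
            hits.append(k)
    return hits


power = range_power_spectrum(beat_signal(target_range=3.0))
peak_bin = max(range(len(power)), key=power.__getitem__)
estimated_range = peak_bin * C / (2.0 * BANDWIDTH)   # bin spacing is c / (2B)
detections = ca_cfar(power)
```

With the assumed bandwidth, the range bin spacing is c / (2B) = 0.6 m, so the simulated target at 3.0 m falls on bin 5. The adaptive threshold in `ca_cfar` follows the local noise level instead of using a fixed value, which is the property that makes CFAR detection robust to a varying background.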

For angle estimation, both the 3D FFT and the multiple signal classification (MUSIC) algorithm [33] are popular methods. Compared with the MUSIC algorithm, the 3D FFT is more computationally efficient, but its resolution is not sufficient for detecting closely spaced points. To obtain a better image of the human body at a low computing cost, we used the TDM-MIMO antenna arrays shown in Figure 3: after obtaining eight virtual receiver samples, a 3D FFT was used to obtain the azimuth angle $\theta$, and the MUSIC algorithm was used to estimate the elevation angle $\varphi$. The output spherical coordinates $(R, \theta, \varphi)$ were converted into Cartesian coordinates using the transfer matrix T, where $R$ is the distance between the detected point and the radar. After the transformation, we obtained the position values $(x, y, z)$ of each point in each frame in Cartesian coordinates.
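The coordinate conversion can be sketched as below. The axis convention is an assumption made for illustration (y along the radar boresight, x to the right, z up, azimuth measured from boresight in the horizontal plane, elevation measured from the horizontal plane); the article's transfer matrix may use a different convention. The function name `spherical_to_cartesian` is hypothetical.

```python
import math


def spherical_to_cartesian(r, azimuth, elevation):
    """Convert range (m) and angles (rad) to (x, y, z).

    Assumed convention: y is boresight, x is to the right, z is up.
    """
    x = r * math.cos(elevation) * math.sin(azimuth)
    y = r * math.cos(elevation) * math.cos(azimuth)
    z = r * math.sin(elevation)
    return x, y, z


# A point 2 m away, 30 degrees to the right of boresight, at radar height.
point = spherical_to_cartesian(2.0, math.radians(30.0), math.radians(0.0))
```

Under this convention, a target straight ahead maps to (0, R, 0), and the conversion preserves the distance to the radar.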