A Real-Time Semantic Map Production System for Indoor Robot Navigation

Abstract

1. Introduction

- It is more human-friendly. The robot platform understands the environment in the same way a human understands it.

- It is autonomous. Through semantic navigation, a robot can perform independent action(s) as long as it understands its environment.

- It is efficient. The robot does not need to explore the entire environment to decide its route. Instead, it can choose its path based on the fastest or shortest route.

- It is robust. The robot platform can recover missing navigation information.

- It discusses recently developed semantic navigation systems for indoor robot environments.

- It designs an efficient semantic map production system for indoor robot navigation.

- It assesses the proposed system’s efficiency in the Robot Operating System (ROS) development environment using a set of validation metrics.

2. Related Works

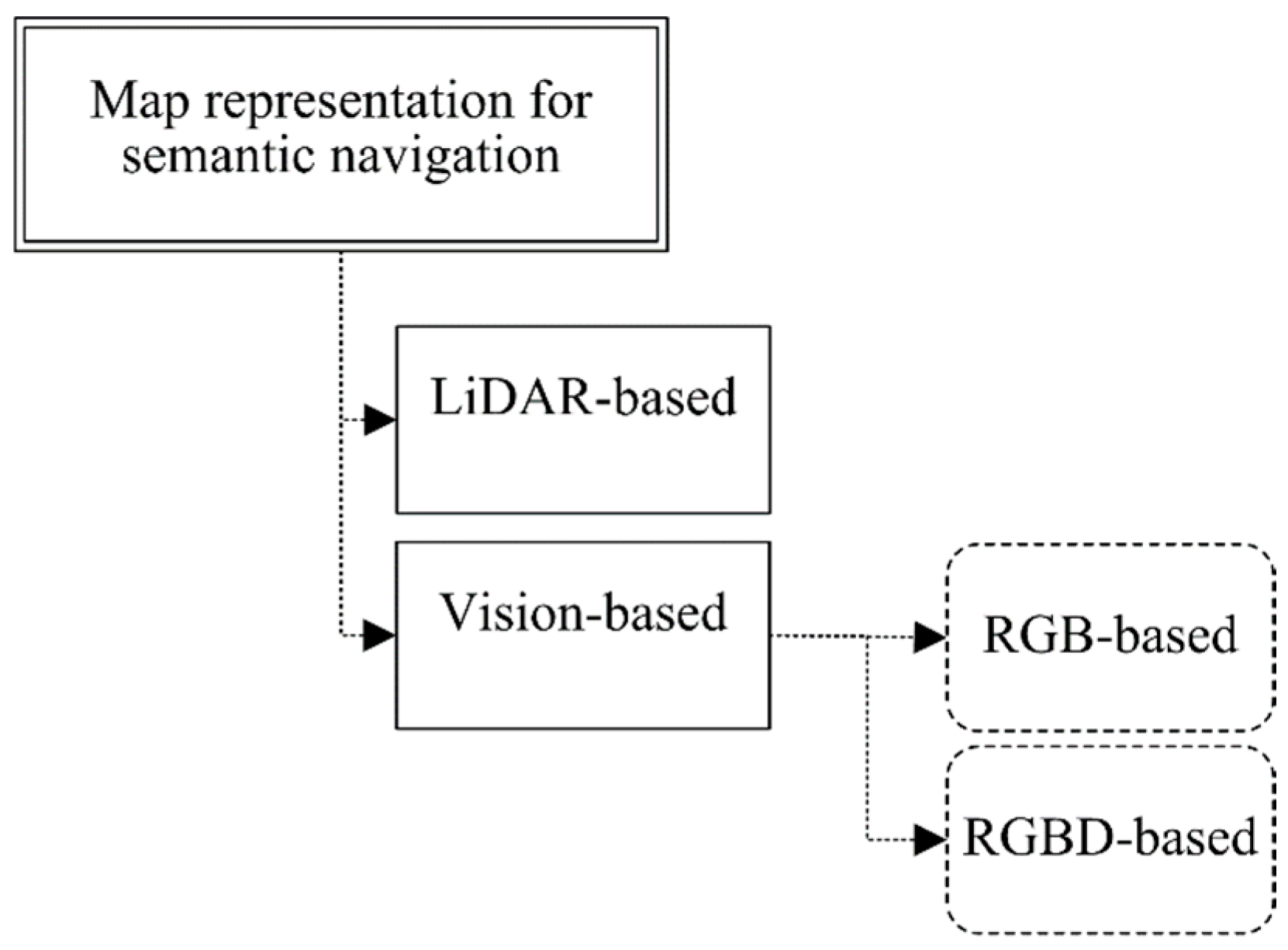

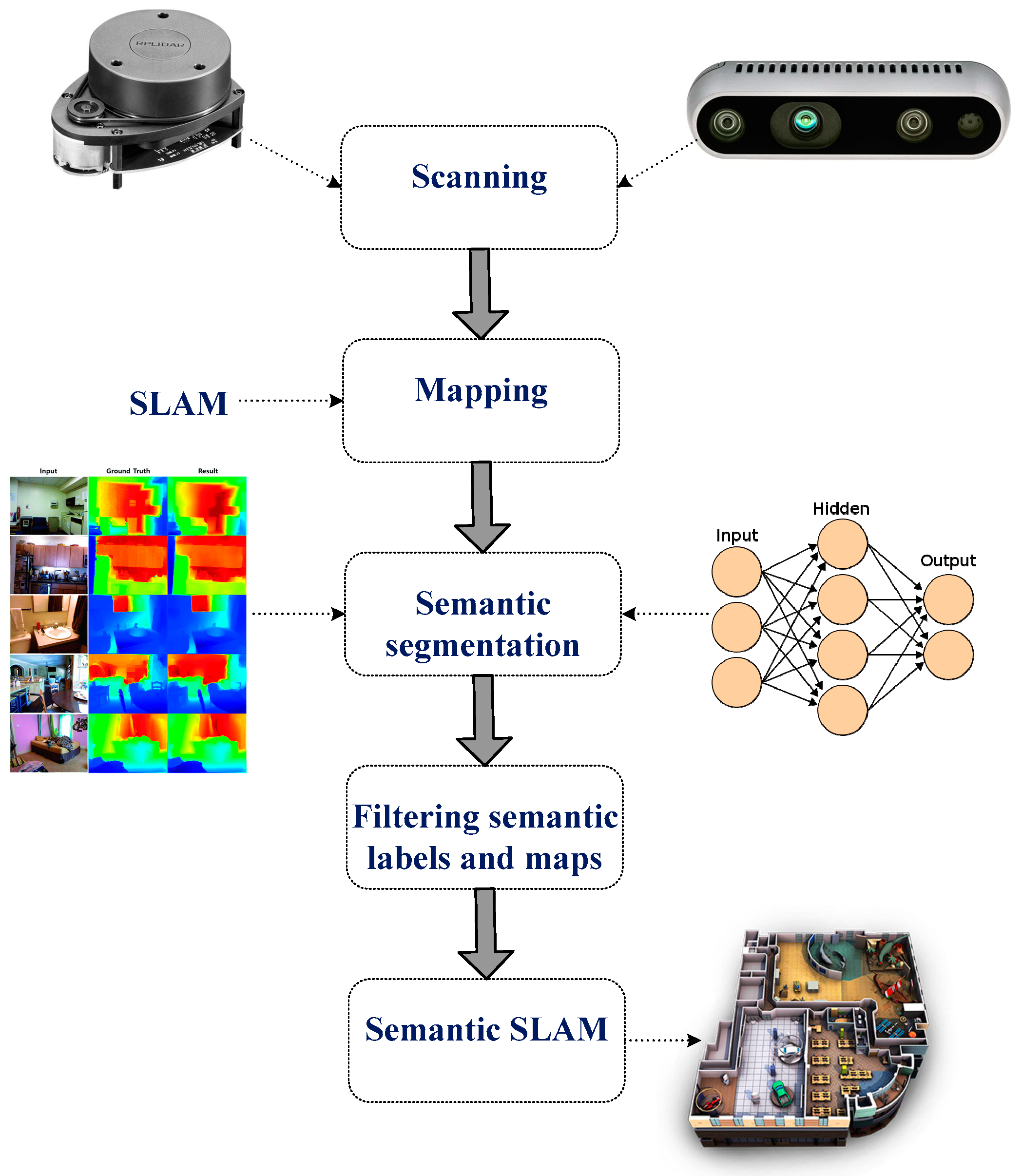

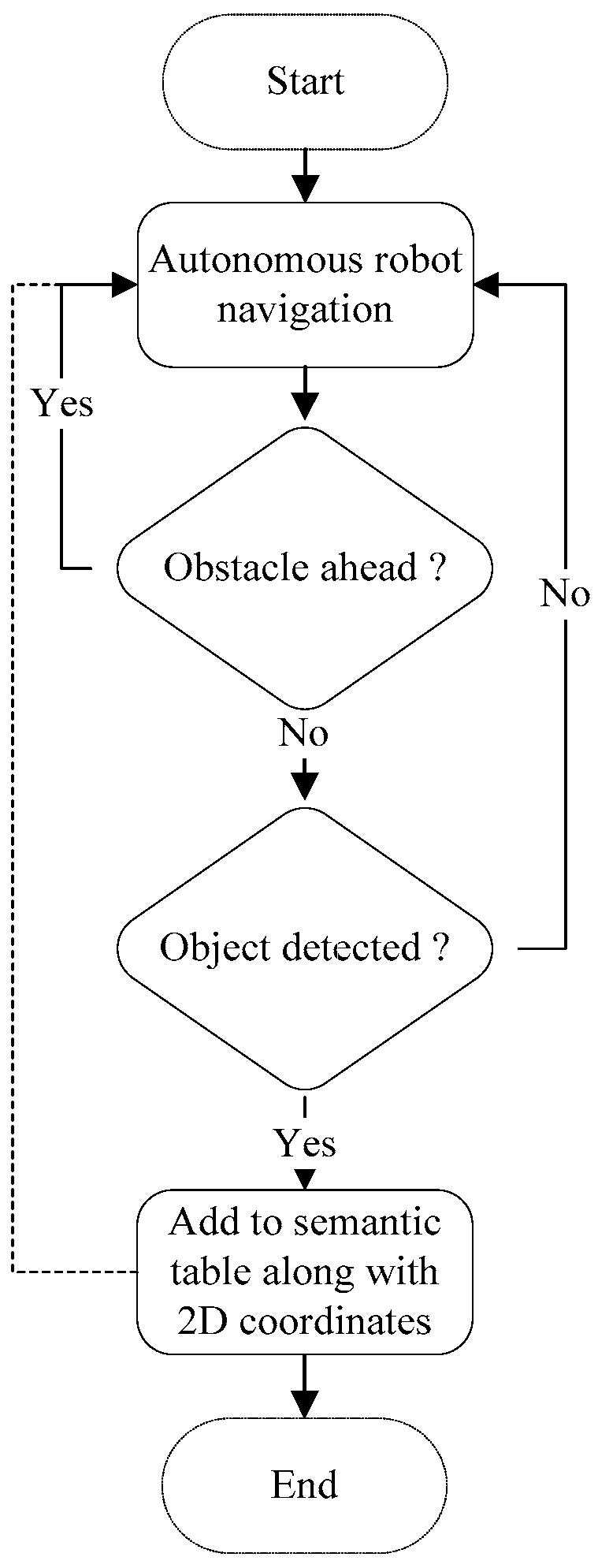

3. Semantic Map Representation Approach

| Algorithm 1: Semantic Map Production |
|---|
| 01: let Ax,y be the 2D navigation area, with x as its width and y as its height |
| 02: let mr be the mobile robot in the navigation environment |
| 03: let mr(x,y) be the current 2D location of the mobile robot in the navigation environment |
| 04: let mrmaxX be the maximum point reached by mr along the x-axis |
| 05: let mrmaxY be the maximum point reached by mr along the y-axis |
| 06: let yoloCP be the model trained on two datasets: COCO and Pascal |
| 07: let depth_to_objectk be the depth distance to the detected object k |
| 08: let obs_dist be the distance in centimeters (cm) to the object ahead |
| 09: let navigate_fun be the navigation function in the area of interest |
| 10: let sem_table be the semantic table that lists the detected objects along with their 2D coordinates |
| 11: while (mrmaxX < x && mrmaxY < y): |
| 12: while obs_dist > 100: |
| 13: if (object_detected(yoloCP, depth_to_object)): |
| 14: sem_table(object_detected, mr(x,y)) // add the newly detected object along with its 2D coordinates |
| 15: else: navigate_fun |
| 16: end |
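The loop in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Detection` record, the `produce_semantic_map` function, and the four callables (`get_pose`, `get_obstacle_distance`, `detect_object`, `navigate_step`) are hypothetical stand-ins for mr(x,y), obs_dist, the yoloCP detector with its depth estimate, and navigate_fun. The sketch also assumes that the two nested loops can be merged into one loop with a step cap so that it always terminates, and that the robot keeps moving after it logs an object.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Pose = Tuple[float, float]  # (x, y) location of the robot


@dataclass
class Detection:
    """Hypothetical detector output: class label plus depth to the object."""
    label: str
    depth_cm: float


def produce_semantic_map(
    width: float,
    height: float,
    get_pose: Callable[[], Pose],
    get_obstacle_distance: Callable[[], float],
    detect_object: Callable[[], Optional[Detection]],
    navigate_step: Callable[[], None],
    max_steps: int = 1000,
) -> List[Tuple[str, Pose]]:
    """Build a semantic table of (label, robot pose at detection time)."""
    sem_table: List[Tuple[str, Pose]] = []
    max_x = max_y = 0.0
    for _ in range(max_steps):
        # Stop once the robot has reached either edge of the area.
        if max_x >= width or max_y >= height:
            break
        # Only try to detect when the way ahead is clear by more than 100 cm.
        if get_obstacle_distance() > 100:
            detection = detect_object()
            if detection is not None:
                sem_table.append((detection.label, get_pose()))
        # Assumption: always advance one navigation step per iteration.
        navigate_step()
        x, y = get_pose()
        max_x, max_y = max(max_x, x), max(max_y, y)
    return sem_table


def run_demo() -> List[Tuple[str, Pose]]:
    """Toy run: the robot moves diagonally and sees a chair on its third step."""
    state = {"x": 0.0, "y": 0.0, "step": 0}

    def get_pose() -> Pose:
        return (state["x"], state["y"])

    def navigate_step() -> None:
        state["x"] += 1.0
        state["y"] += 0.5
        state["step"] += 1

    def detect_object() -> Optional[Detection]:
        return Detection("chair", 150.0) if state["step"] == 2 else None

    return produce_semantic_map(
        4.0, 4.0, get_pose, lambda: 250.0, detect_object, navigate_step
    )


print(run_demo())
```

Passing the sensors and the navigation function in as callables keeps the loop independent of any particular robot middleware, so the same sketch can be exercised with simulated inputs as in `run_demo`.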

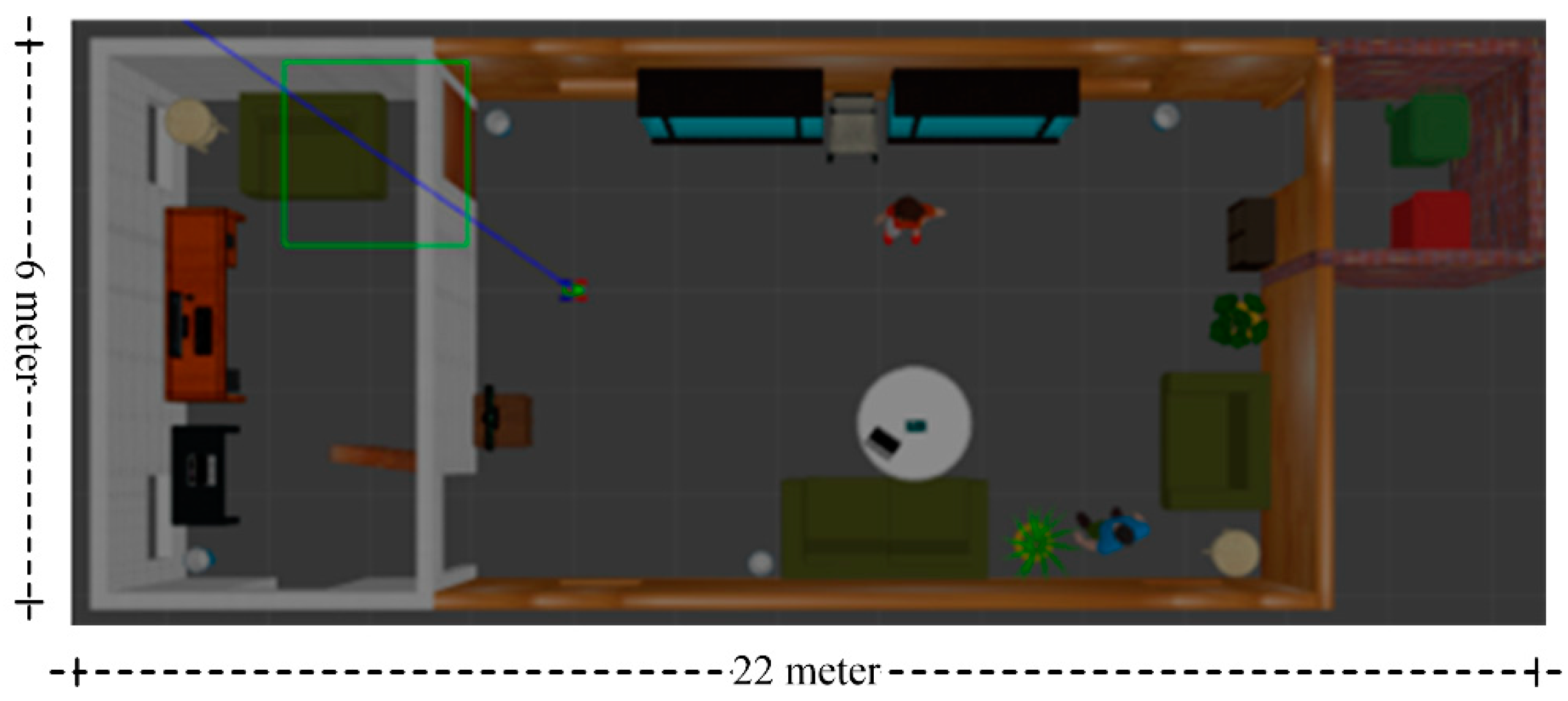

4. Experimental Results

4.1. Development Environment

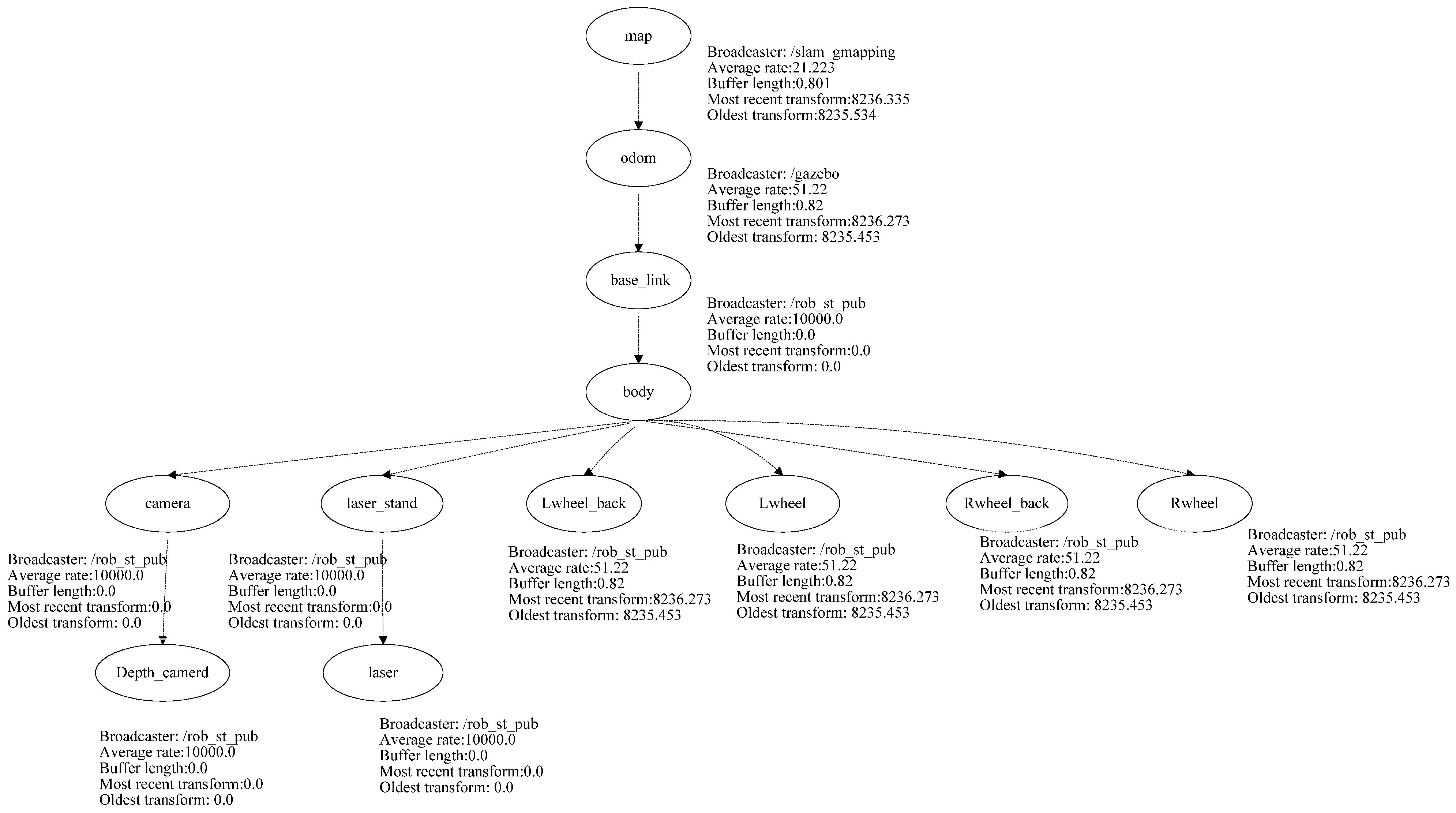

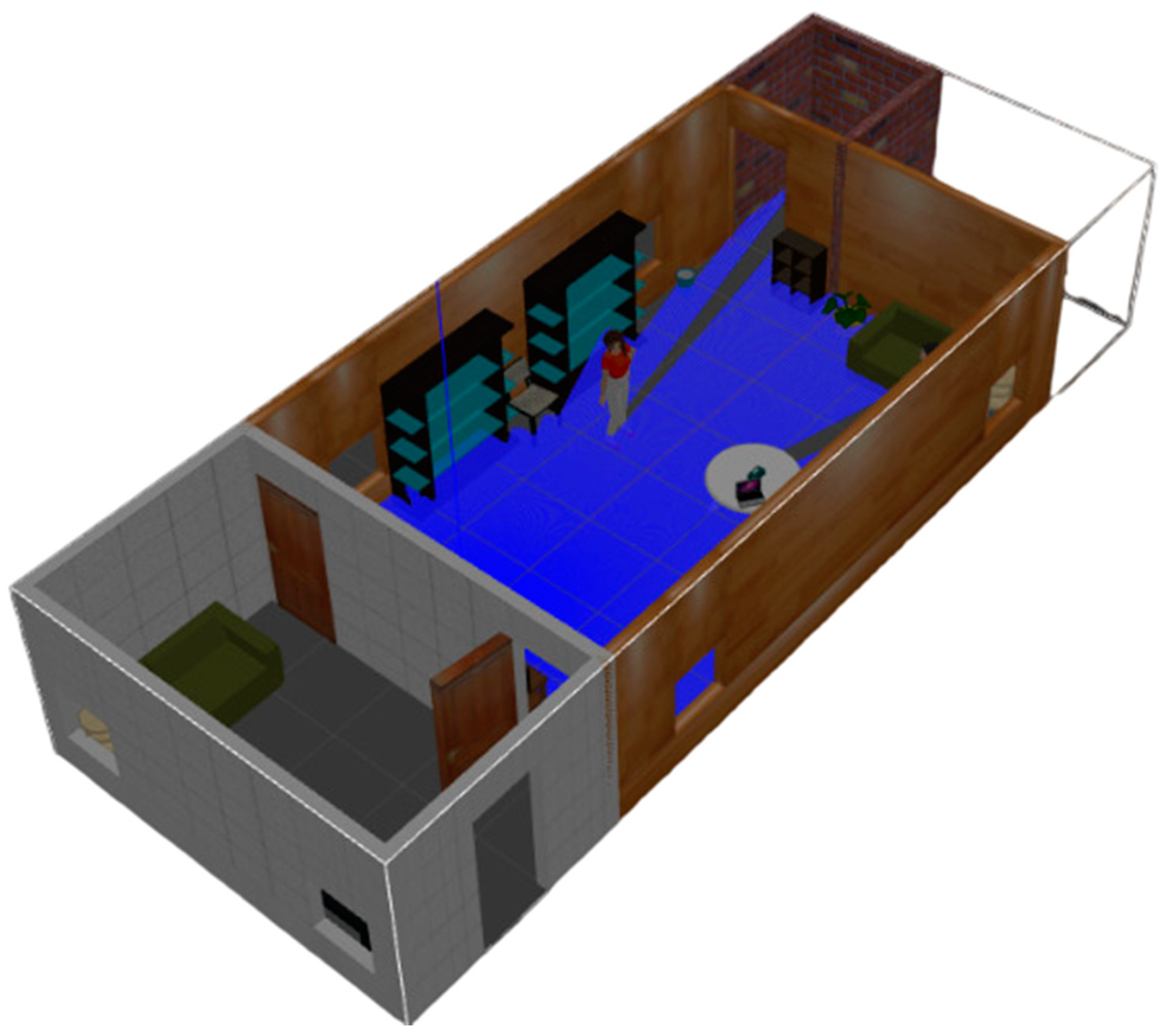

4.2. ROS-Based Semantic Map Representation System

- 1. Gazebogui: This node simulates the developed semantic map representation system on a friendly graphical user interface.

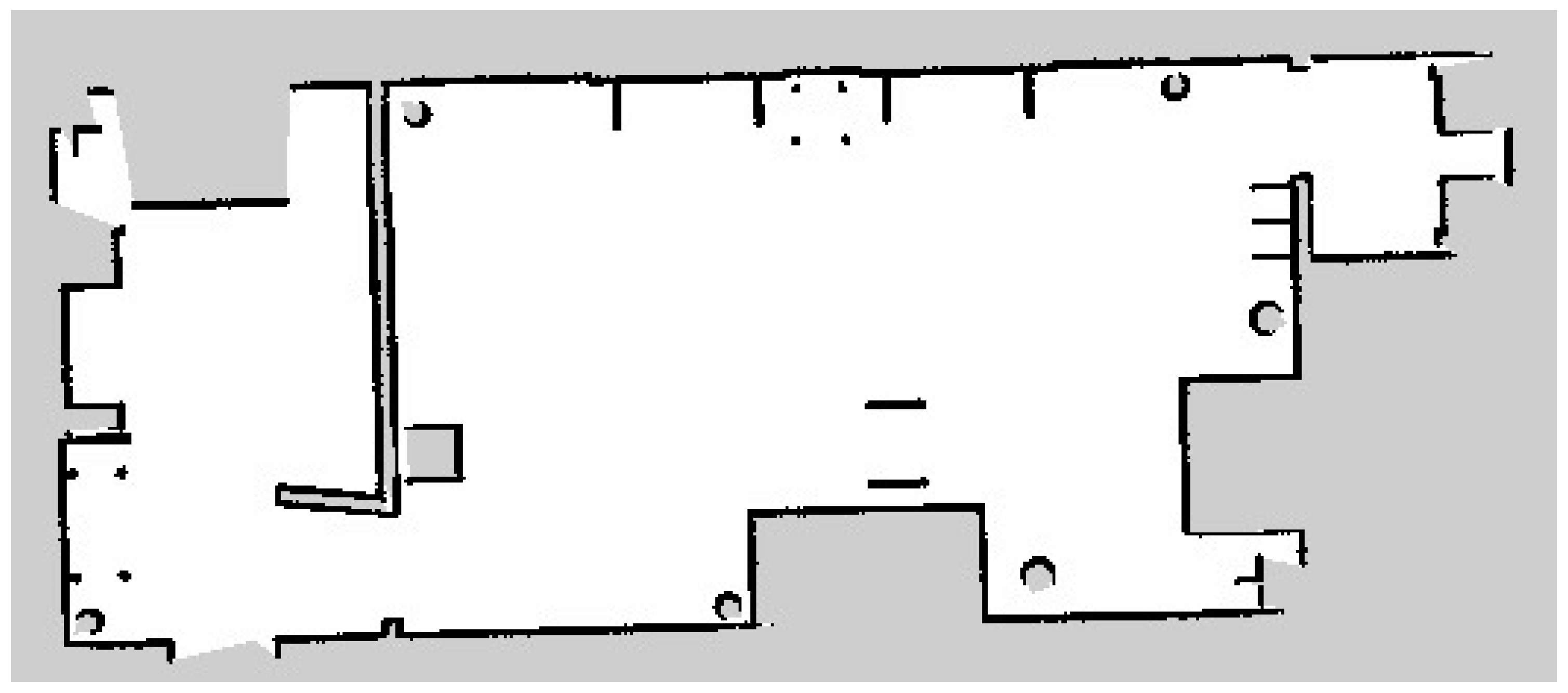

- 2. Slam_gmapping: This node builds a 2D map using the LiDAR unit. The data received from the LiDAR unit is used to construct a geometric map, in which the output of this node is a 2D area with geometry information.

- 3. Rob_st_pub: This node reveals the current status of the robot platform and broadcasts status information to other nodes for the purpose of exploiting this information in constructing the semantic map area.

- 4. Move_base: This node offers an ROS interface for configuring, running, and interacting with the navigation stack on the robot platform. In addition, it controls the robot platform as it moves from one point to another.

- 5. N_rvis: This node visualizes the represented map area in 3D, in which the robot platform is visualized using the Rviz package.

- 6. Darknet_ros: This is an ROS package for object detection via the employment of the YOLO v3 classification model.

- 7. Darknet_ros_3d: This node offers bounding boxes in 3D in order to allow for object distance measurement. Through the employment of an RGB-D camera, the object and its estimated position can be computed.

- 8. Rover_auto_control: This node controls other ROS nodes, collecting the necessary LiDAR frames, performing object detection and classification, and finally constructing the semantic map for the area of interest.
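To illustrate how a detection measured relative to the robot could be placed on the 2D map, the sketch below rotates and translates an object offset by the robot pose. It is an assumption-laden illustration rather than the behavior of any node listed above: the function name `object_in_map` and its parameters are hypothetical, the robot pose is assumed to be (x, y, yaw) in the map frame, and the offset is assumed to follow the common ROS body-frame convention of x forward and y to the left.

```python
import math
from typing import Tuple


def object_in_map(
    robot_x: float,
    robot_y: float,
    robot_yaw: float,
    forward: float,
    left: float,
) -> Tuple[float, float]:
    """Convert an object offset in the robot frame into map coordinates.

    robot_yaw is the robot heading in radians, measured counterclockwise
    from the map x-axis. forward and left are the object offsets in meters.
    """
    map_x = robot_x + forward * math.cos(robot_yaw) - left * math.sin(robot_yaw)
    map_y = robot_y + forward * math.sin(robot_yaw) + left * math.cos(robot_yaw)
    return map_x, map_y


# Robot at (1, 2) facing the +y direction, object 1.5 m straight ahead:
# the object lies at approximately (1.0, 3.5) on the map.
x, y = object_in_map(1.0, 2.0, math.pi / 2, 1.5, 0.0)
print(round(x, 3), round(y, 3))
```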

4.3. Results

- The recognized objects ratio (ro): This refers to the total number of objects that have been correctly classified in the area of interest in comparison with the total number of objects in that area. This is expressed as follows:

  $$ro = \frac{\sum_{j} y_j}{n}$$

  where j is the index of a class, $y_j$ is the total number of detected objects in the jth class, $m_j$ is the total number of existing objects in the jth class, and $n = \sum_{j} m_j$ is the total number of objects in the simulated environment.

- The object recognition accuracy (objacc): This refers to the classification accuracy of the recognized objects, expressed as a percentage. The objacc was estimated using the function provided by the YOLO v3 model.

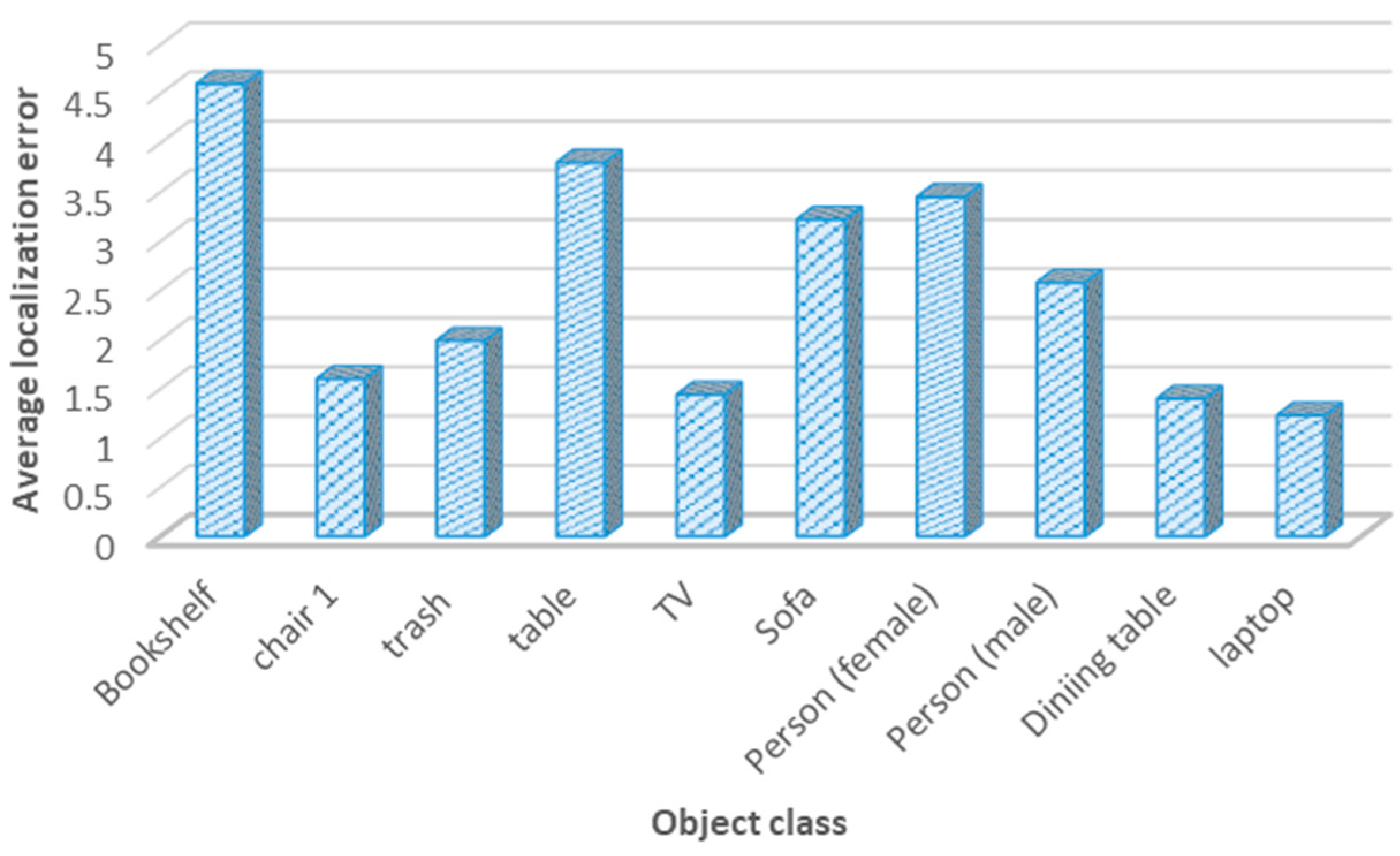

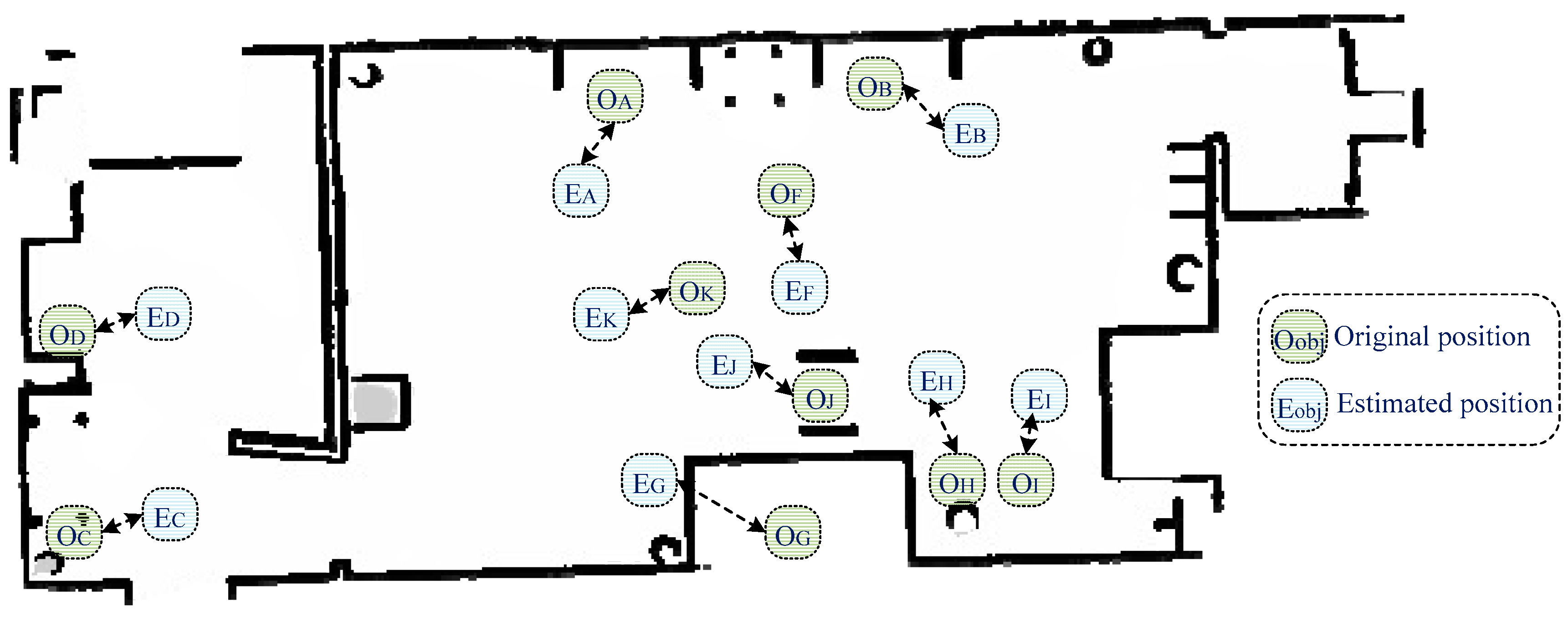

- The localization error (LE) of detected objects: This measures the average positioning error between the estimated 2D position (xe, ye) of an object and its actual 2D position (xa, ya) using the Euclidean distance formula, as follows:

  $$LE = \sqrt{(x_e - x_a)^2 + (y_e - y_a)^2}$$

- The geometry map error (maperr): This refers to the error percentage of the geometry map produced by the map production system versus the actual geometry map. It can be estimated using the following formula:

  $$map_{err} = \frac{\left| A_{act} - A_{est} \right|}{A_{act}} \times 100\%$$

  where $A_{est}$ is the area of the map estimated by the system and $A_{act}$ is the actual area of the map.

- The semantic map accuracy: This refers to the difference between the semantic map produced by the developed system and the actual map area. It can be estimated by measuring the error of semantic map (semerr) construction.
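The metrics above can be made concrete with a short Python sketch. The function names are hypothetical, and two readings are assumptions rather than the authors' stated formulas: ro is taken as the number of detected objects summed over classes divided by the total number of existing objects, and maperr is taken as the absolute area difference relative to the actual area. The per-class counts and the coordinates are the Chair and Vase rows of the detection table and the Chair row of the localization table; the two map areas are made-up values for illustration only.

```python
import math
from typing import Dict, Tuple


def recognized_objects_ratio(
    detected: Dict[str, int], existing: Dict[str, int]
) -> float:
    """Detected objects summed over classes, divided by all existing objects."""
    total_existing = sum(existing.values())
    total_detected = sum(detected.get(label, 0) for label in existing)
    return total_detected / total_existing


def localization_error(
    estimated: Tuple[float, float], actual: Tuple[float, float]
) -> float:
    """Euclidean distance between the estimated and actual 2D positions."""
    return math.hypot(estimated[0] - actual[0], estimated[1] - actual[1])


def map_error_percent(area_estimated: float, area_actual: float) -> float:
    """Assumed form: absolute area difference as a percentage of the actual area."""
    return abs(area_actual - area_estimated) / area_actual * 100.0


# Chair: 3 of 4 detected; Vase: 2 of 3 detected (rows of the detection table).
ratio = recognized_objects_ratio({"Chair": 3, "Vase": 2}, {"Chair": 4, "Vase": 3})

# Chair row of the localization table: estimated vs. actual position.
chair_error = localization_error((1.476, 0.785), (2.723, 1.493))

# Hypothetical areas in square meters, used only to exercise the function.
area_error = map_error_percent(47.5, 50.0)

print(f"ro = {ratio:.3f}, LE = {chair_error:.2f} m, maperr = {area_error:.1f}%")
```

The Chair localization error computed this way rounds to 1.43 m, which matches the value listed for that row.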

5. Discussion

6. Conclusions and Future Work

| Pascal classes | COCO classes |
|---|---|
| Person, bird, cat, cow, dog, horse, sheep, airplane, bicycle, boat, bus, car, motorbike, train, bottle, chair, dining table, potted plant, sofa, tv monitor. | Person, bicycle, car, motorbike, airplane, bus, train, truck, boat, traffic light, fire hydrant, stop sign, parking meter, bench, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork, knife, spoon, bowl. |

| Dataset | Application | Number of records | Number of classes | Size |
|---|---|---|---|---|
| COCO | Indoor | 330,000 | 80 | 25 GB |
| Pascal | Outdoor | 11,530 | 20 | 2 GB |

| Ref | Object ID | X-coordinate | Y-coordinate |
|---|---|---|---|
| 1. | 12 | 3.6 | 7.2 |
| 2. | 06 | 8.1 | 5.4 |
| … | … | … | … |
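A semantic table of this shape can be held in memory as a list of records and queried by object ID. The sketch below is only an illustration: the `SemanticEntry` class and the `nearest_entry` helper are hypothetical names, the first two rows come from the table above, and the third row is invented so that the query has more than one candidate to choose from.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class SemanticEntry:
    ref: int          # row reference in the semantic table
    object_id: int    # class ID of the detected object
    x: float          # stored x-coordinate
    y: float          # stored y-coordinate


def nearest_entry(
    sem_table: List[SemanticEntry],
    object_id: int,
    robot_xy: Tuple[float, float],
) -> Optional[SemanticEntry]:
    """Return the entry of the given class closest to the robot, if any."""
    candidates = [entry for entry in sem_table if entry.object_id == object_id]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda entry: math.hypot(entry.x - robot_xy[0], entry.y - robot_xy[1]),
    )


sem_table = [
    SemanticEntry(ref=1, object_id=12, x=3.6, y=7.2),
    SemanticEntry(ref=2, object_id=6, x=8.1, y=5.4),
    SemanticEntry(ref=3, object_id=12, x=1.0, y=1.0),  # hypothetical extra row
]

print(nearest_entry(sem_table, 12, (0.0, 0.0)))
```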

| Component | Parameter |
|---|---|
| Robot platform | Rover 2WD |
| Processor | Raspberry Pi 4 (4 GB RAM), Raspberry Pi Ltd, Wales |
| LiDAR unit | RPLiDAR A1M8 |
| Vision unit | OAK-D Pro, Luxonis |
| Actuators | 2 DC motors, TFK280SC-21138-45 |
| Power source | Lithium battery, 11.1 V, 2000 mAh, HRB |
| Robot speed | 10 m/min |
| Frames per second (FPS) | 4 |
| LiDAR frame rate (LFR) | 20 |

| Object in Gazebo Simulation | Total |
|---|---|
| Chair | 4 |
| Vase | 3 |
| Potted plant | 2 |
| TV monitor | 1 |
| Person | 2 |
| Trash | 2 |
| Tissue box | 2 |
| Table breakfast | 2 |
| Bookshelf large | 3 |

| Object in Gazebo Simulation | Existing | Detected | Accuracy |
|---|---|---|---|
| Chair | 4 | 3 | 75% |
| Vase | 3 | 2 | 66% |
| Potted plant | 2 | 1 | 50% |
| TV monitor | 1 | 1 | 100% |
| Person | 2 | 2 | 100% |
| Trash | 2 | 1 | 50% |
| Laptop | 1 | 0 | 0% |
| Tissue box | 2 | 1 | 50% |
| Table breakfast | 2 | 2 | 100% |
| Bookshelf large | 3 | 3 | 100% |

| Object Class | Tag | Actual (x, y) | Estimated (x, y) | Euclidean Distance (m) |
|---|---|---|---|---|
| Chair | A | 2.723, 1.493 | 1.476, 0.785 | 1.43 |
| Bookshelf | B | 3.881, 1.662 | 4.308, −1.861 | 4.63 |
| Trash | C | −0.707, 1.616 | 0.837, −0.483 | 2.60 |
| TV monitor | D | −0.725, −1.265 | 1.282, 0.673 | 2.78 |
| Person (female) | F | 3.261, 0.636 | 5.778, −1.737 | 3.45 |
| Sofa | G | 3.054, −2.333 | 3.125, −0.790 | 1.54 |
| Plant side | H | 4.519, −2.884 | 3.229, −0.808 | 2.11 |
| Person (male) | I | 5.604, −2.410 | 2.445, −1.075 | 3.42 |
| Dining table | J | 3.337, 1.904 | 1.290, 1.443 | 2.86 |
| Laptop | K | 2.846, 1.509 | 1.094, 0.567 | 1.98 |
| Average localization error | | | | 2.67 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alqobali, R.; Alnasser, R.; Rashidi, A.; Alshmrani, M.; Alhmiedat, T. A Real-Time Semantic Map Production System for Indoor Robot Navigation. Sensors 2024, 24, 6691. https://doi.org/10.3390/s24206691
