Marine Oil Pollution Monitoring Based on a Morphological Attention U-Net Using SAR Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Oil Spill Dataset

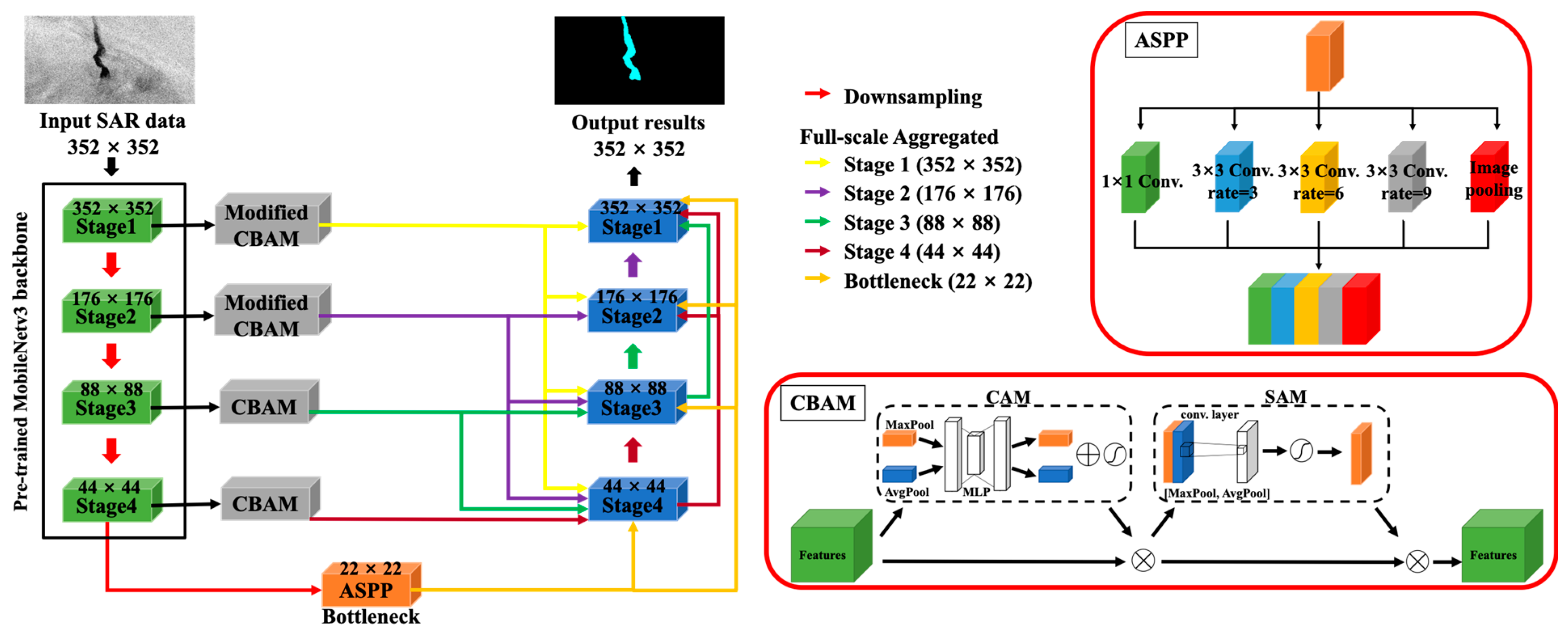

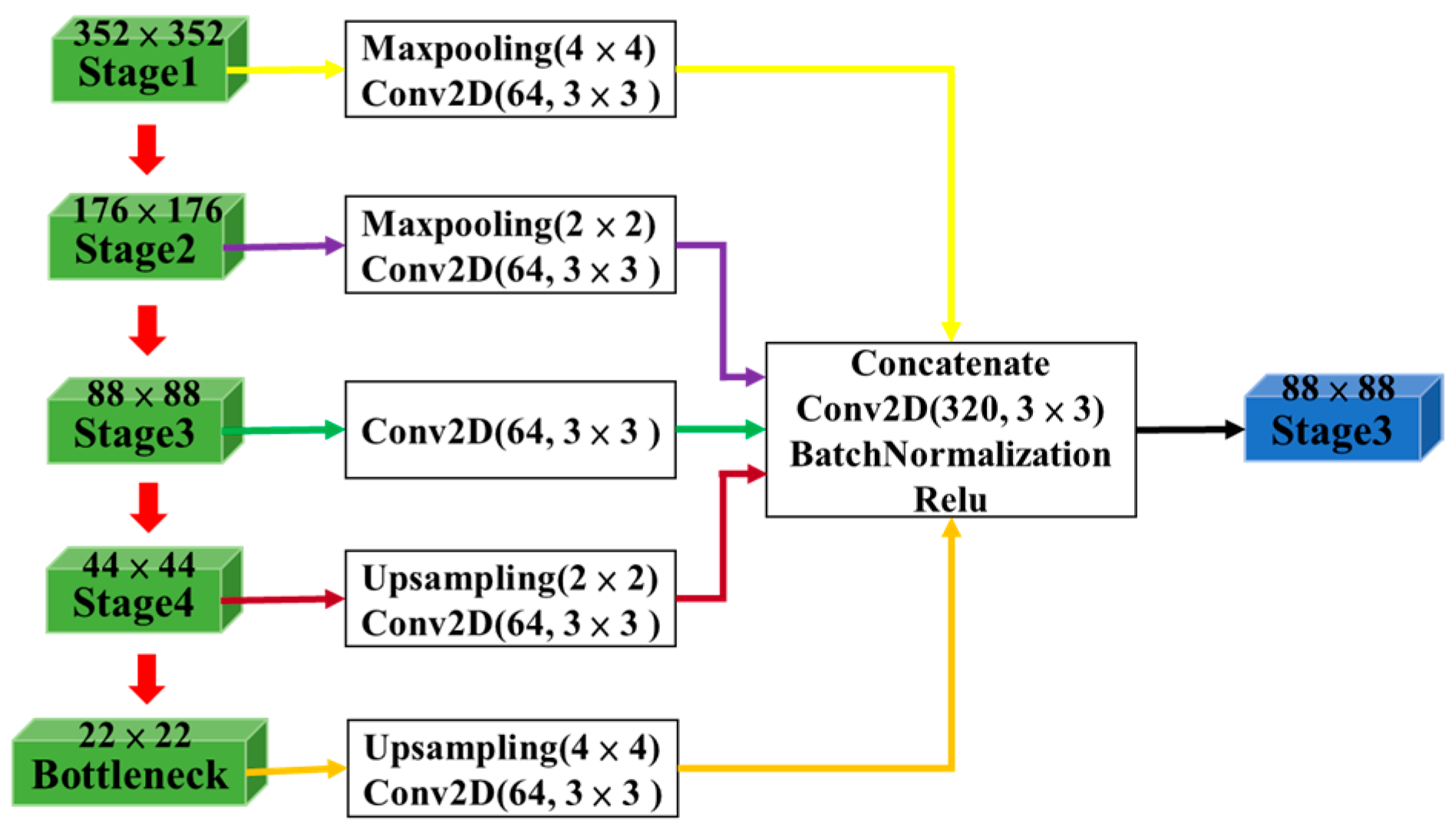

2.2. Improved Oil Spill Detection Model

2.2.1. FA-MobileUNet Model

2.2.2. Modified CBAM

2.3. Loss Function

2.4. Evaluation Metric

3. Results

3.1. Experimental Setting

3.2. Performance Evaluation

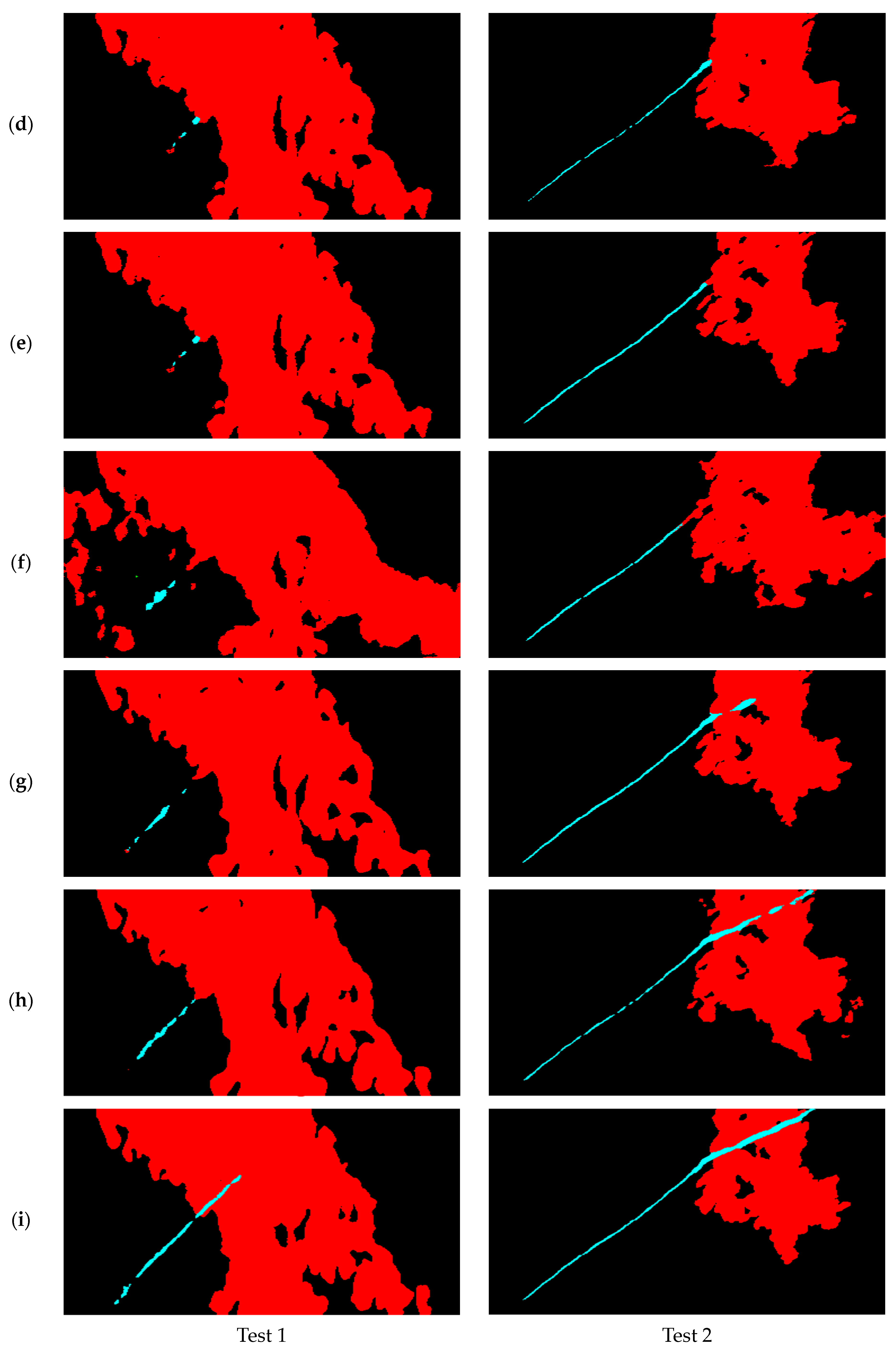

3.3. Segmentation Network Comparison

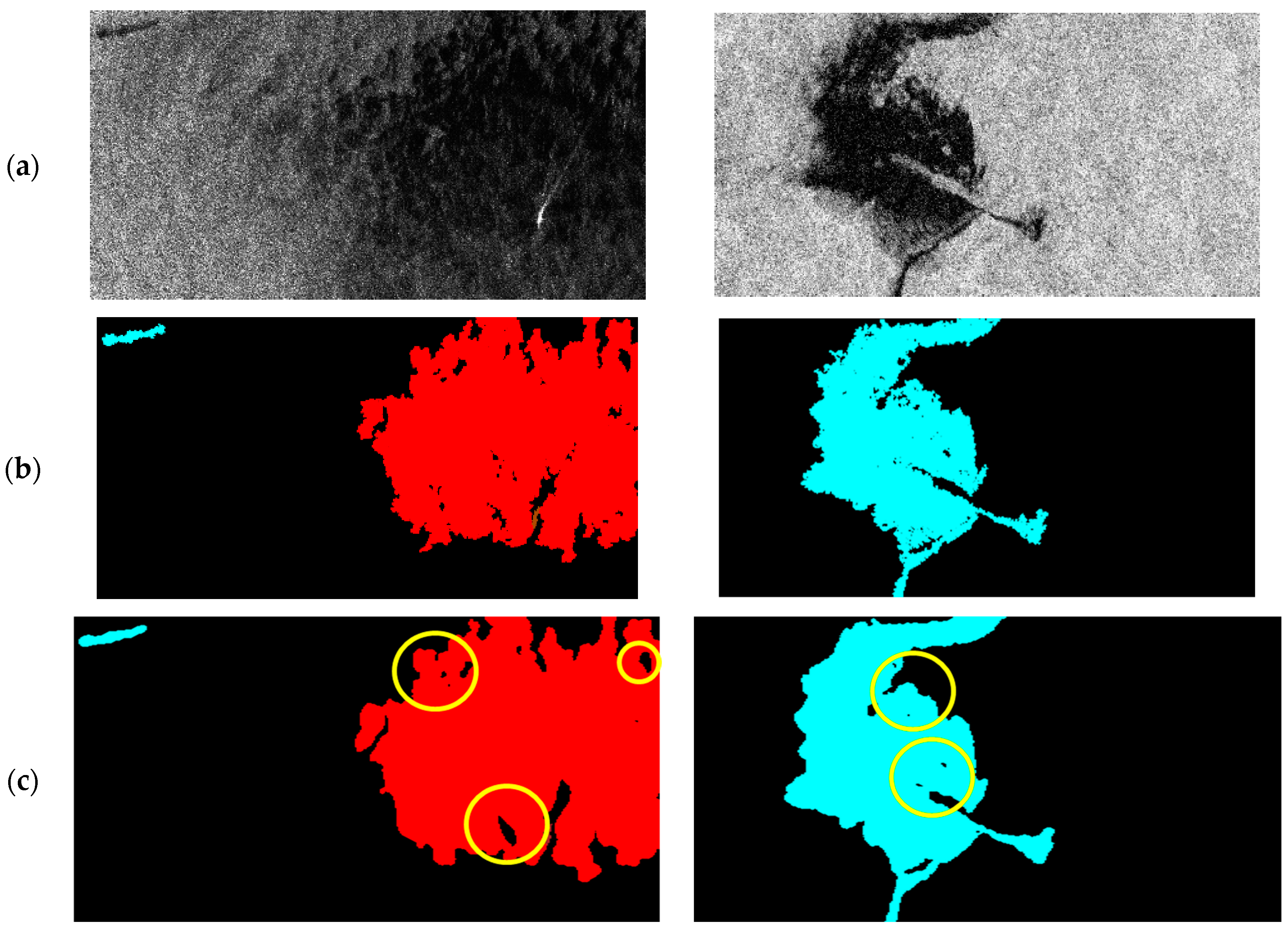

3.4. Oil Spill Detection Results Improvement

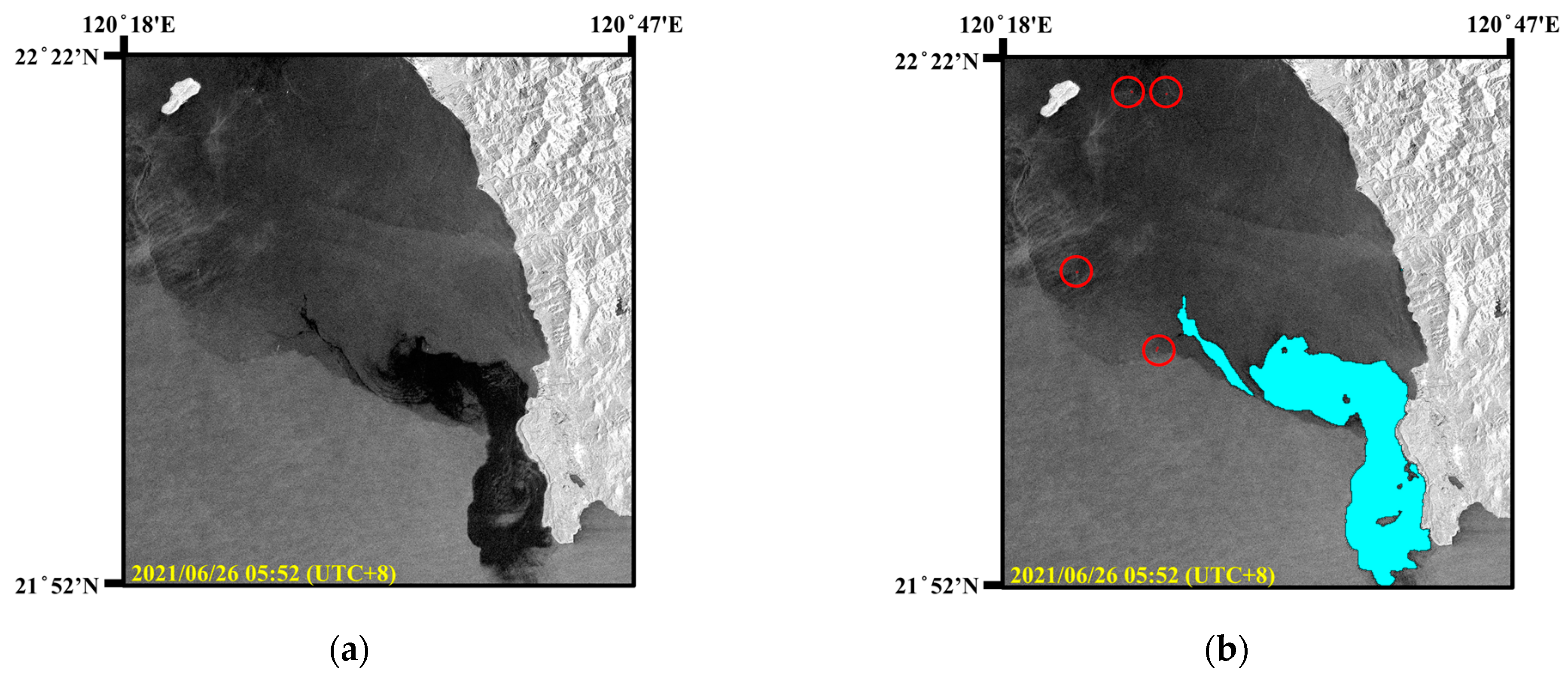

3.5. Oil Pollution Incidents

3.5.1. Oil Pollution Caused by Shipwreck

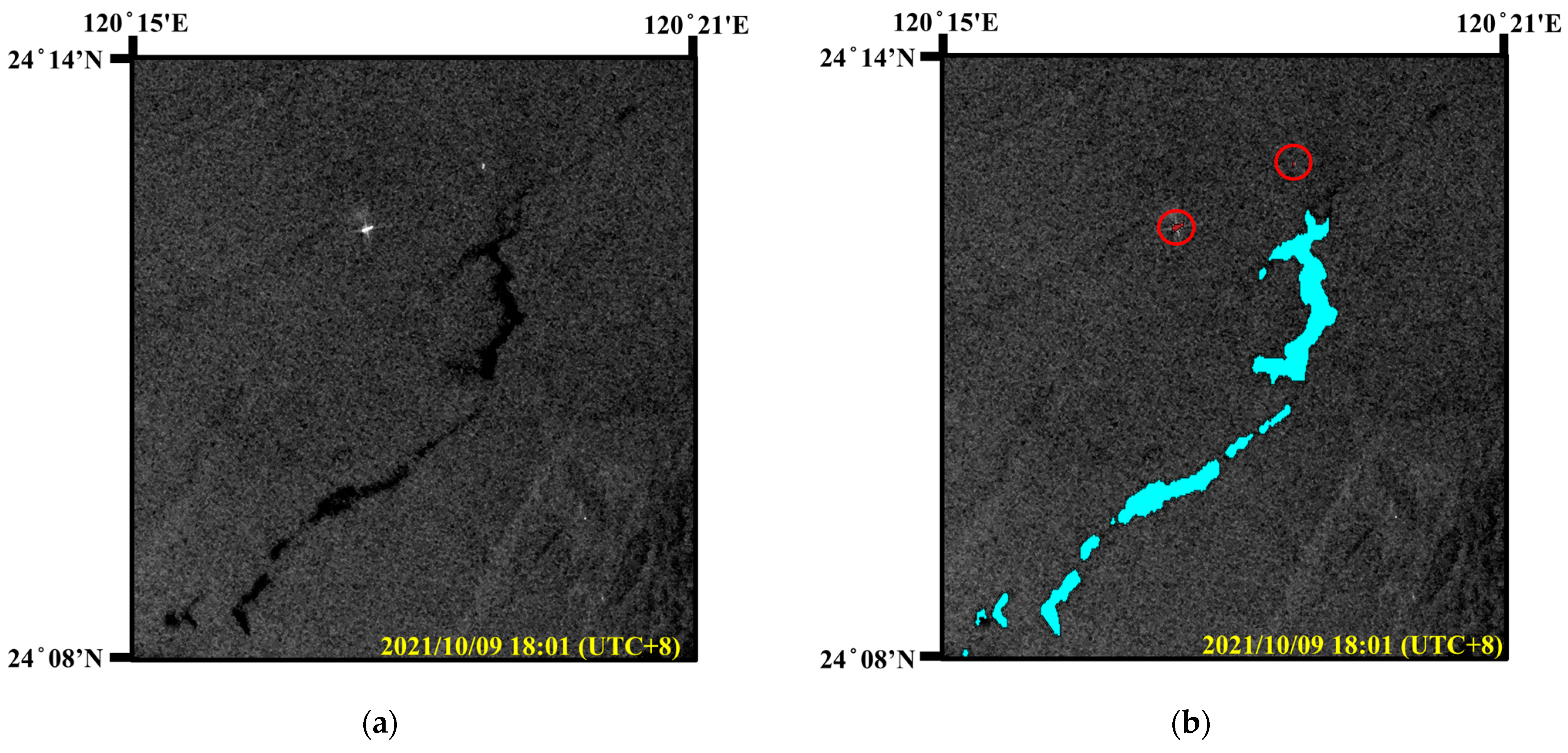

3.5.2. Undersea Oil Pipeline Rupture Incident

4. Discussion

4.1. Evaluation of the Morphological Attention Module

4.2. Predicting Suspicious Oil-Discharge Ship Combining SAR and AIS

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- International Maritime Organization. Available online: https://www.imo.org/en/OurWork/Environment/Pages/Default.aspx (accessed on 1 July 2024).

- Zhang, B.; Matchinski, E.J.; Chen, B.; Ye, X.; Jing, L.; Lee, K. Chapter 21—Marine oil spills—Oil pollution, sources and effects. In World Seas: An Environmental Evaluation, 2nd ed.; Sheppard, C., Ed.; Academic Press: Cambridge, MA, USA, 2019; pp. 391–406.

- Topouzelis, K. Oil spill detection by SAR images: Dark formation detection, feature extraction and classification algorithms. Sensors 2008, 8, 6642–6659.

- Solberg, A.; Storvik, G.; Solberg, R.; Volden, E. Automatic detection of oil spills in ERS SAR images. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1916–1924.

- Migliaccio, M.; Gambardella, A.; Tranfaglia, M. SAR polarimetry to observe oil spills. IEEE Trans. Geosci. Remote Sens. 2007, 45, 506–511.

- Nunziata, F.; Gambardella, A.; Migliaccio, M. On the use of dual-polarized SAR data for oil spill observation. In Proceedings of the 2008 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Boston, MA, USA, 7–11 July 2008.

- Chang, L.; Tang, J.C. A region-based GLRT detection of oil spills in SAR images. Pattern Recognit. Lett. 2008, 29, 1915–1923.

- Shirvany, R.; Chabert, M.; Tourneret, J.Y. Ship and oil spill detection using the degree of polarization in linear and hybrid/compact dual-pol SAR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 885–892.

- Xu, L.; Li, J.; Brenning, A. A comparative study of different classification techniques for marine oil spill identification using RADARSAT-1 imagery. Remote Sens. Environ. 2014, 141, 14–23.

- Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. A deep neural network for oil spill semantic segmentation in SAR images. In Proceedings of the 2018 5th International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3773–3777.

- Yekeen, S.T.; Balogun, A.L. Automated marine oil spill detection using deep learning instance segmentation model. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 1271–1276.

- Ma, X.; Xu, J.; Wu, P.; Kong, P. Oil spill detection based on deep convolutional neural networks using polarimetric scattering information from Sentinel-1 SAR images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4204713.

- Shaban, M.; Salim, R.; Khalifeh, H.A.; Khelifi, A.; Shalaby, A.; El-Mashad, S.; Mahmoud, A.; Ghazal, M.; El-Baz, A. A Deep-Learning Framework for the Detection of Oil Spills from SAR Data. Sensors 2021, 21, 2351.

- Fan, Y.; Rui, X.; Zhang, G.; Yu, T.; Xu, X.; Poslad, S. Feature merged network for oil spill detection using SAR images. Remote Sens. 2021, 13, 3174.

- Rousso, R.; Katz, N.; Sharon, G.; Glizerin, Y.; Kosman, E.; Shuster, A. Automatic recognition of oil spills using neural networks and classic image processing. Water 2022, 14, 1127.

- Li, C.; Wang, M.; Yang, X.; Chu, D. DS-UNet: Dual-stream U-Net for oil spill detection of SAR image. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4014905.

- Mahmoud, A.S.; Mohamed, S.A.; El-Khoriby, R.A.; Abdelsalam, H.M.; El-Khodary, I.A. Oil spill identification based on dual attention UNet model using Synthetic Aperture Radar images. J. Indian Soc. Remote Sens. 2023, 51, 121–133.

- Ronci, F.; Avolio, C.; Donna, M.; Zavagli, M.; Piccialli, V.; Costantini, M. Oil spill detection from SAR images by deep learning. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Waikoloa, HI, USA, 26 September–2 October 2020.

- Chen, Y.; Wang, Z. Marine oil spill detection from SAR images based on attention U-Net model using polarimetric and wind speed information. Int. J. Environ. Res. Public Health 2022, 19, 12315.

- Li, C.; Kim, D.J.; Park, S.; Kim, J.; Song, J. A self-evolving deep learning algorithm for automatic oil spill detection in Sentinel-1 SAR images. Remote Sens. Environ. 2023, 299, 113872.

- Li, C.; Yang, Y.; Yang, X.; Chu, D.; Cao, W. A novel multi-scale feature map fusion for oil spill detection of SAR remote sensing. Remote Sens. 2024, 16, 1684.

- Rubicel, T.; Jose, T.; Cesare, O.; Erandi, M. Marine oil spill detection and segmentation in SAR data with two steps deep learning framework. Mar. Pollut. Bull. 2024, 204, 16549.

- Chen, Y.T.; Chang, L.; Wang, J.H. Full-Scale aggregated MobileUNet: An improved U-Net architecture for SAR oil spill detection. Sensors 2024, 24, 3724.

- Krestenitis, M.; Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. Oil spill identification from satellite images using deep neural networks. Remote Sens. 2019, 11, 1762.

- Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/ (accessed on 1 July 2024).

- MKLab Dataset. Available online: https://mklab.iti.gr/results/oil-spill-detection-dataset/ (accessed on 24 January 2022).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: New York, NY, USA, 2015.

- Zhixuhao. Zhixuhao/Unet. 2017. Available online: https://github.com/zhixuhao/unet (accessed on 1 July 2024).

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.C.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Mondal, R.; Purkait, P.; Santra, S.; Chanda, B. Morphological networks for image de-raining. arXiv 2019, arXiv:1901.02411.

- Decelle, R.; Ngo, P.; Debled-Rennesson, I.; Mothe, F.; Longuetaud, F. Light U-Net with a morphological attention gate model application to analyse wood sections. In Proceedings of the 12th International Conference on Pattern Recognition Applications and Methods, ICPRAM, Lisbon, Portugal, 22–24 February 2023; Volume 1, pp. 759–766.

- Shen, Y.; Zhong, X.; Shih, F. Deep morphological neural networks. arXiv 2019, arXiv:1909.01532.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Muller, R.; Kornblith, S.; Hinton, G. When does label smoothing help? arXiv 2019, arXiv:1906.02629.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

- Basit, A.; Siddique, M.A.; Bhatti, M.K.; Sarfraz, M.S. Comparison of CNNs and vision transformers-based hybrid models using gradient profile loss for classification of oil spills in SAR images. Remote Sens. 2022, 14, 2085.

- Galdelli, A.; Mancini, A.; Ferra, C.; Tassetti, A.N. A synergic integration of AIS data and SAR imagery to monitor fisheries and detect suspicious activities. Sensors 2021, 21, 2756.

- Liu, B.; Zhang, W.; Han, J.; Li, Y. Tracing illegal oil discharges from vessels using SAR and AIS in Bohai Sea of China. Ocean Coast. Manag. 2021, 211, 105783.

| Modified CBAM (Encoder Stage) | Sea Surface | Oil Spills | Lookalikes | Ships | Land | mIoU |
|---|---|---|---|---|---|---|
| – | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
| 1 | 97.31 | 75.86 | 72.91 | 76.19 | 96.49 | 83.75 |
| 1, 2 | 97.22 | 75.97 | 73.25 | 76.21 | 96.49 | 83.83 |
| 1, 2, 3 | 96.03 | 76.34 | 73.39 | 76.20 | 96.40 | 83.67 |

“–” represents the model using the original CBAM.
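The encoder-stage ablation places the modified CBAM in encoder stages 1, 1–2, or 1–3, against a baseline that uses the original CBAM. The modification itself is not described in this section, so the sketch below shows only the original CBAM of Woo et al. (channel attention followed by spatial attention) in NumPy. It assumes a single feature map of shape (C, H, W), a bias-free shared MLP with reduction ratio r, and a 7 × 7 spatial kernel; the function names and toy sizes are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """CBAM channel attention for a feature map x of shape (C, H, W).

    w1 has shape (C // r, C) and w2 has shape (C, C // r): a shared two-layer
    MLP (biases omitted for brevity) applied to average- and max-pooled vectors.
    """
    def mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)  # FC -> ReLU -> FC

    avg = x.mean(axis=(1, 2))  # (C,) global average pooling
    mx = x.max(axis=(1, 2))    # (C,) global max pooling
    return sigmoid(mlp(avg) + mlp(mx))  # (C,) weights in (0, 1)


def spatial_attention(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """CBAM spatial attention: a k x k convolution over [mean; max] along channels.

    kernel has shape (2, k, k) with odd k; zero 'same' padding, stride 1.
    """
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # Cross-correlation, as in deep-learning "convolution" layers.
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)  # (H, W) weights in (0, 1)


def cbam(x: np.ndarray, w1: np.ndarray, w2: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Original CBAM ordering: channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, r = 8, 16, 16, 2  # toy sizes, chosen only for illustration
    x = rng.standard_normal((C, H, W))
    w1 = 0.1 * rng.standard_normal((C // r, C))
    w2 = 0.1 * rng.standard_normal((C, C // r))
    kernel = 0.1 * rng.standard_normal((2, 7, 7))
    print(cbam(x, w1, w2, kernel).shape)  # (8, 16, 16)
```

Because both attention maps lie in (0, 1), CBAM only rescales features; it never changes the tensor shape, which is what allows it to be inserted at any encoder stage.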

| Module | Iteration No. | Sea Surface | Oil Spills | Lookalikes | Ships | Land | mIoU |
|---|---|---|---|---|---|---|---|
| Modified CBAM (closing operation) | – | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
|  | 1 | 97.32 | 75.94 | 73.52 | 76.22 | 96.47 | 83.89 |
|  | 2 | 97.08 | 76.12 | 73.88 | 76.22 | 96.40 | 83.94 |
|  | 3 | 94.85 | 76.88 | 74.13 | 76.23 | 96.38 | 83.69 |

“–” represents the model using the original CBAM.
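The closing-operation ablation varies how many times a morphological closing is applied inside the modified CBAM. How the closing is wired into the attention module is not specified in this section; as an assumption, the sketch below applies grayscale closing (dilation followed by erosion with a flat 3 × 3 structuring element) directly to a 2-D spatial attention map, and takes one iteration to mean one full dilate-then-erode pass. Other conventions exist (for example, n dilations followed by n erosions), and the helper names are hypothetical.

```python
import numpy as np


def _window_reduce(a: np.ndarray, k: int, reduce) -> np.ndarray:
    """Apply a k x k sliding-window max or min with edge padding ('same' size)."""
    pad = k // 2
    p = np.pad(a, pad, mode="edge")
    h, w = a.shape
    # Gather the k*k shifted views of the padded map, then reduce across them.
    views = np.stack([p[i:i + h, j:j + w] for i in range(k) for j in range(k)])
    return reduce(views, axis=0)


def dilate(a: np.ndarray, k: int = 3) -> np.ndarray:
    """Grayscale dilation with a flat k x k structuring element."""
    return _window_reduce(a, k, np.max)


def erode(a: np.ndarray, k: int = 3) -> np.ndarray:
    """Grayscale erosion with a flat k x k structuring element."""
    return _window_reduce(a, k, np.min)


def closing(a: np.ndarray, k: int = 3, iterations: int = 1) -> np.ndarray:
    """Morphological closing (dilation, then erosion), repeated `iterations` times."""
    for _ in range(iterations):
        a = erode(dilate(a, k), k)
    return a


if __name__ == "__main__":
    att = np.full((5, 5), 0.9)  # toy spatial attention map
    att[2, 2] = 0.1             # a one-pixel "hole" inside a high-attention region
    print(closing(att, k=3, iterations=1)[2, 2])  # 0.9
```

Grayscale closing never lowers a value, so it fills small low-attention gaps (here, the single 0.1 pixel) while leaving large high-attention regions intact.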

| Label Smoothing | Sea Surface | Oil Spills | Lookalikes | Ships | Land | mIoU |
|---|---|---|---|---|---|---|
|  | 97.54 | 75.85 | 72.67 | 76.19 | 96.48 | 83.74 |
| ✓ | 97.29 | 76.84 | 75.21 | 76.42 | 96.45 | 84.44 |
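In the label-smoothing ablation, mIoU rises from 83.74 to 84.44 when label smoothing is enabled. A minimal NumPy sketch of label smoothing as defined by Szegedy et al., where each one-hot target y becomes (1 − ε)·y + ε/K for K classes, paired with a soft-target cross-entropy. The smoothing factor ε = 0.1 and the class order (sea surface, oil spill, lookalike, ship, land, following the table columns) are assumptions; the value used in the paper is not given in this section.

```python
import numpy as np


def smooth_labels(labels: np.ndarray, num_classes: int, eps: float = 0.1) -> np.ndarray:
    """Label-smoothed one-hot targets: (1 - eps) * one_hot + eps / num_classes."""
    one_hot = np.eye(num_classes)[labels]  # (..., num_classes)
    return (1.0 - eps) * one_hot + eps / num_classes


def soft_cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean cross-entropy between soft targets and softmax(logits) on the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)  # shift for numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    return float(-(targets * log_probs).sum(axis=-1).mean())


if __name__ == "__main__":
    # Assumed class order: 0 sea surface, 1 oil spill, 2 lookalike, 3 ship, 4 land.
    pixel_labels = np.array([[1, 0], [4, 2]])  # toy 2 x 2 label mask
    targets = smooth_labels(pixel_labels, num_classes=5, eps=0.1)
    print(targets[0, 0])  # approx. [0.02, 0.92, 0.02, 0.02, 0.02]
    logits = np.zeros((2, 2, 5))  # an untrained, uniform prediction
    print(soft_cross_entropy(logits, targets))  # approx. log(5) = 1.609
```

With ε = 0.1 and five classes, the true class receives a target of 0.92 and every other class 0.02, so the network is never pushed toward fully saturated softmax outputs.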

| Model | Backbone | Parameters | Sea Surface | Oil Spills | Lookalikes | Ships | Land | mIoU |
|---|---|---|---|---|---|---|---|---|
| U-Net | ResNet-101 | 51.5 M | 95.47 | 57.01 | 44.82 | 46.62 | 93.08 | 67.40 |
| LinkNet | ResNet-101 | 47.7 M | 94.82 | 52.95 | 47.52 | 45.11 | 93.12 | 66.70 |
| PSPNet | ResNet-101 | 3.8 M | 93.03 | 45.65 | 40.62 | 30.25 | 91.12 | 60.13 |
| DeepLabv2 | ResNet-101 | 42.8 M | 95.02 | 43.12 | 46.23 | 15.12 | 82.34 | 56.37 |
| DeepLabv3+ | MobileNetv2 | 2.1 M | 96.57 | 56.34 | 57.06 | 32.92 | 94.18 | 67.41 |
| ToZero FMNet | x | 36.0 M | 94.53 | 49.95 | 41.40 | 25.44 | 87.11 | 61.90 |
| CoAtNet-0 | x | 29.4 M | 95.40 | 50.22 | 58.85 | 69.09 | 94.49 | 73.61 |
| EfficientNetv2 | B1 | 16.7 M | 95.19 | 56.42 | 62.23 | 72.80 | 96.59 | 76.65 |
| Ensemble Model | x | x | 96.78 | 56.10 | 58.88 | 47.28 | 96.59 | 71.12 |
| FA-MobileUNet | MobileNetv3 | 14.9 M | 97.12 | 75.85 | 72.69 | 76.22 | 96.47 | 83.67 |
| Improved FA-MobileUNet | MobileNetv3 | 14.9 M | 96.58 | 77.50 | 75.81 | 76.67 | 96.18 | 84.55 |
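For the proposed models, the mIoU column equals the unweighted mean of the five per-class IoU values; for Improved FA-MobileUNet, (96.58 + 77.50 + 75.81 + 76.67 + 96.18) / 5 = 84.548, reported as 84.55. A minimal sketch of computing per-class IoU, TP / (TP + FP + FN), and mIoU from a pixel confusion matrix, assuming integer label masks. Whether the paper accumulates the confusion matrix over the whole test set or averages per image is not stated in this section, and the function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true: np.ndarray, y_pred: np.ndarray, num_classes: int) -> np.ndarray:
    """Pixel confusion matrix; rows are ground truth, columns are predictions."""
    idx = num_classes * y_true.ravel().astype(np.int64) + y_pred.ravel().astype(np.int64)
    return np.bincount(idx, minlength=num_classes**2).reshape(num_classes, num_classes)


def per_class_iou(cm: np.ndarray) -> np.ndarray:
    """IoU_c = TP / (TP + FP + FN); NaN for classes absent from both masks."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as c but labelled otherwise
    fn = cm.sum(axis=1) - tp  # labelled c but predicted otherwise
    denom = tp + fp + fn
    return np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)


def mean_iou(cm: np.ndarray) -> float:
    """Unweighted mean of per-class IoU, ignoring classes with no pixels at all."""
    return float(np.nanmean(per_class_iou(cm)))


if __name__ == "__main__":
    y_true = np.array([[0, 0, 1], [1, 2, 2]])
    y_pred = np.array([[0, 1, 1], [1, 2, 0]])
    cm = confusion_matrix(y_true, y_pred, num_classes=3)
    print(per_class_iou(cm))  # approx. [0.333, 0.667, 0.5]
    print(mean_iou(cm))       # approx. 0.5

    # Consistency check on the reported Improved FA-MobileUNet row (values in %).
    row = [96.58, 77.50, 75.81, 76.67, 96.18]
    print(round(float(np.mean(row)), 2))  # 84.55
```

Because mIoU weights every class equally, gains on the small, hard classes (oil spills, lookalikes) move it as much as gains on the dominant sea-surface class.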

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, L.; Chen, Y.-T.; Cheng, C.-M.; Chang, Y.-L.; Ma, S.-C. Marine Oil Pollution Monitoring Based on a Morphological Attention U-Net Using SAR Images. Sensors 2024, 24, 6768. https://doi.org/10.3390/s24206768

Chang L, Chen Y-T, Cheng C-M, Chang Y-L, Ma S-C. Marine Oil Pollution Monitoring Based on a Morphological Attention U-Net Using SAR Images. Sensors. 2024; 24(20):6768. https://doi.org/10.3390/s24206768

Chicago/Turabian Style: Chang, Lena, Yi-Ting Chen, Ching-Min Cheng, Yang-Lang Chang, and Shang-Chih Ma. 2024. "Marine Oil Pollution Monitoring Based on a Morphological Attention U-Net Using SAR Images" Sensors 24, no. 20: 6768. https://doi.org/10.3390/s24206768

APA Style: Chang, L., Chen, Y.-T., Cheng, C.-M., Chang, Y.-L., & Ma, S.-C. (2024). Marine Oil Pollution Monitoring Based on a Morphological Attention U-Net Using SAR Images. Sensors, 24(20), 6768. https://doi.org/10.3390/s24206768