Movement Sensing Opportunities for Monitoring Dynamic Cognitive States

Abstract

1. Introduction

2. Movement Sensing and Cognitive State Estimation

2.1. Sports

2.2. Healthcare

2.3. Driving and Navigation

2.4. Military

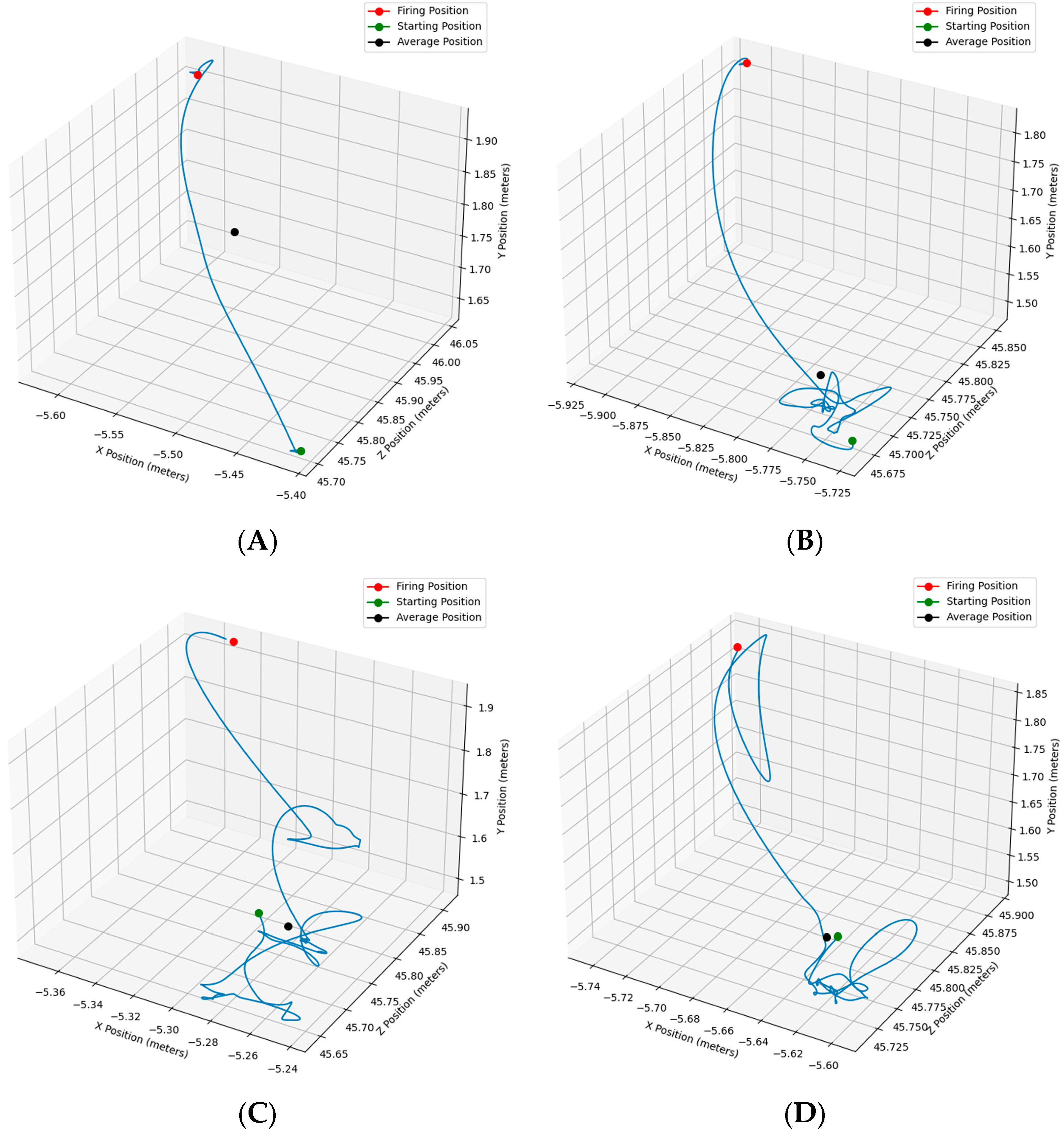

3. Classifying Uncertainty States via Rifle Movement Dynamics

3.1. Participants, Design and Procedure

3.2. Data Processing

3.3. Data Analysis

3.4. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Iteration | Number of Features | F1 | Accuracy | Precision | Recall | MSE |
|---|---|---|---|---|---|---|
| 1 | 11 | 0.775 | 0.664 | 0.682 | 0.897 | 0.336 |
| 2 | 8 | 0.763 | 0.645 | 0.671 | 0.883 | 0.355 |
| 3 | 6 | 0.766 | 0.651 | 0.677 | 0.883 | 0.348 |
| 4 | 11 | 0.768 | 0.661 | 0.684 | 0.877 | 0.339 |
| 5 | 7 | 0.775 | 0.657 | 0.670 | 0.920 | 0.343 |
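The metrics in the iteration table are related to one another, and the short sketch below checks that relationship. F1 is the harmonic mean of precision and recall. The sketch also assumes that labels are coded 0/1 and that MSE was computed on hard 0/1 predictions. Under that assumption every squared error is either 0 or 1, so MSE reduces to the misclassification rate, 1 - accuracy. This inference is consistent with the reported values but is not stated in the table. The helper names are hypothetical and used only for illustration.

```python
# Hypothetical helper names. Assumes 0/1 labels and MSE computed on hard 0/1 predictions.


def f1_from_precision_recall(precision: float, recall: float) -> float:
    """F1 score: the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def mse_from_accuracy(accuracy: float) -> float:
    """With 0/1 labels and 0/1 predictions, every squared error is 0 or 1,
    so the mean squared error equals the misclassification rate."""
    return 1.0 - accuracy


# (iteration, F1, accuracy, precision, recall, MSE) copied from the table above
ROWS = [
    (1, 0.775, 0.664, 0.682, 0.897, 0.336),
    (2, 0.763, 0.645, 0.671, 0.883, 0.355),
    (3, 0.766, 0.651, 0.677, 0.883, 0.348),
    (4, 0.768, 0.661, 0.684, 0.877, 0.339),
    (5, 0.775, 0.657, 0.670, 0.920, 0.343),
]

if __name__ == "__main__":
    for it, f1, acc, prec, rec, mse in ROWS:
        print(
            f"iteration {it}: "
            f"F1 {f1:.3f} vs recomputed {f1_from_precision_recall(prec, rec):.3f}; "
            f"MSE {mse:.3f} vs 1 - accuracy {mse_from_accuracy(acc):.3f}"
        )
```

The recomputed values match the table to within about 0.001. A discrepancy of this size is expected because precision, recall, and accuracy are themselves rounded to three decimals.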

| Feature | Weight | Description |
|---|---|---|
| Lyapunov Exponent of VM | −0.29 | The average exponential rate at which small differences in velocity grow over time; higher values indicate greater sensitivity to initial conditions (more chaotic dynamics). |
| Lyapunov Exponent of X | −0.27 | The average exponential rate at which small differences in X-axis (lateral) movement grow over time; higher values indicate greater sensitivity to initial conditions (more chaotic dynamics). |
| Lyapunov Exponent of Y | −0.17 | The average exponential rate at which small differences in Y-axis (vertical) movement grow over time; higher values indicate greater sensitivity to initial conditions (more chaotic dynamics). |
| Spectral Slope | −0.16 | How the power of the trajectory's velocity changes across frequencies, reflecting the smoothness or complexity of the trajectory. |
| AMI (Stergiou) of X | −0.30 | Average mutual information: the nonlinear dependence and predictability of X-axis movement over time, i.e., how much information past values provide about future values. |
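Two of the features in this table can be sketched in a few lines of NumPy. The code below is a minimal illustration under stated assumptions. It is not the feature-extraction code used in the study.

- `spectral_slope` fits a least-squares line to the FFT magnitude spectrum against frequency. This is one common definition of spectral slope.
- `average_mutual_information` uses a plain equal-width histogram estimator of the mutual information between x(t) and x(t + lag). It is not necessarily the Stergiou variant named in the table.

The function names, the 16-bin default, the mean removal, and the synthetic demo signals are all assumptions chosen for illustration.

```python
import numpy as np


def spectral_slope(signal, fs):
    """Slope of a least-squares line fit to the magnitude spectrum vs. frequency.

    Assumption: one common definition of spectral slope; the study's exact
    definition (e.g., power vs. magnitude, log scaling) may differ.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the DC offset so the 0 Hz bin does not dominate the fit
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    slope, _intercept = np.polyfit(freqs, magnitude, 1)
    return float(slope)


def average_mutual_information(signal, lag, bins=16):
    """Mutual information (in bits) between x(t) and x(t + lag).

    Assumption: a simple equal-width 2-D histogram estimator, not the
    Stergiou AMI routine referenced in the table.
    """
    x = np.asarray(signal, dtype=float)
    if not 0 < lag < x.size:
        raise ValueError("lag must satisfy 0 < lag < len(signal)")
    past, future = x[:-lag], x[lag:]
    joint, _, _ = np.histogram2d(past, future, bins=bins)
    p_xy = joint / joint.sum()             # joint probability of (past, future) bins
    p_x = p_xy.sum(axis=1, keepdims=True)  # marginal of past values, shape (bins, 1)
    p_y = p_xy.sum(axis=0, keepdims=True)  # marginal of future values, shape (1, bins)
    nonzero = p_xy > 0                     # skip empty cells (0 * log 0 is taken as 0)
    ratio = p_xy[nonzero] / (p_x * p_y)[nonzero]
    return float(np.sum(p_xy[nonzero] * np.log2(ratio)))


if __name__ == "__main__":
    fs = 100.0                                   # hypothetical sampling rate in Hz
    t = np.arange(2000) / fs
    smooth = np.sin(2 * np.pi * 1.5 * t)         # slow, regular sway (synthetic)
    jittery = np.random.default_rng(0).standard_normal(t.size)  # irregular (synthetic)
    for name, sig in [("smooth", smooth), ("jittery", jittery)]:
        print(name,
              "spectral slope:", round(spectral_slope(sig, fs), 4),
              "AMI(lag=1):", round(average_mutual_information(sig, lag=1), 3))
```

Roughly speaking, a more negative slope means power is concentrated at low frequencies, which corresponds to a smoother trajectory. A higher AMI at a given lag means past positions carry more information about future ones.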

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brunyé, T.T.; McIntyre, J.; Hughes, G.I.; Miller, E.L. Movement Sensing Opportunities for Monitoring Dynamic Cognitive States. Sensors 2024, 24, 7530. https://doi.org/10.3390/s24237530
