1. Introduction

There has long been an aspiration to leverage technological advancements for understanding and analyzing human behavior, with the aim of improving both quality of life and working environments. In recent years, motivated by the growing development of the Internet of Things (IoT) and artificial intelligence (AI) technologies, AI-based human activity recognition (HAR) has come to be regarded as a pivotal technology and has attracted widespread interest [1]. AI-based HAR has substantial potential and significance in multiple domains, such as human–computer interaction [2,3], health monitoring [4,5], and smart homes [6,7].

Depending on the type of human activity data, HAR technology can be categorized as either vision-based HAR or sensor-based HAR [8]. The former relies on visual information obtained from images or videos to analyze visual features such as human postures, movements, and shapes [9,10]. The latter relies on wearable sensors or environmental perception sensors to capture information related to human motion, posture, and physiological signals for analysis [11,12,13]. Vision-based HAR has the significant advantage that large-scale training data are easy to acquire, since images and video data can intuitively capture activities. However, changes in perspective and lighting conditions may introduce disturbances in appearance and features, thereby adversely affecting the accuracy of activity recognition. Additionally, human activities often occur in complex and dynamic environmental settings, sometimes involving multiple subjects simultaneously. In such cases, factors such as background interference and mutual occlusion of subjects may increase the complexity of recognition tasks. Moreover, privacy concerns present a challenge for vision-based methods. In contrast, the wearable sensor-based HAR method is less susceptible to environmental variations. In addition, since only wearable sensor data are collected, it is also less vulnerable to security attacks and privacy issues. In recent years, as sensor technology and the IoT have rapidly advanced, an increasing number of researchers have adopted wearable sensor-based HAR methods to address the challenges and limitations present in vision-based approaches [14].

Traditional HAR approaches utilize machine learning algorithms for classification, such as support vector machines (SVMs) [15,16], random forest [17,18], k-nearest neighbors (KNN) [19], and hidden Markov models (HMMs), among others [20,21]. However, these methods have certain limitations in deep feature extraction, especially for complex action recognition tasks: their performance is often restricted, and they require domain knowledge and manual feature extraction. In recent years, with the rise of deep learning technologies, deep neural networks have excelled in the HAR field and gradually become the mainstream approach [22]. Deep neural networks possess powerful feature learning capabilities, allowing them to automatically extract high-level features from raw data without the need for extensive manual feature engineering. This advantage enables them to better handle the complex motion patterns and varied environmental conditions associated with human activity recognition tasks.

As depicted in Figure 1, a sensor-based deep learning HAR system generally consists of the data, network, and application layers. Current research trends primarily focus on data processing at the data layer and adjustments to the neural network structure, aiming at optimizing the model’s learning capabilities to enhance HAR performance. In data processing, researchers have proposed various methods, including dynamic sliding window segmentation [23], an adaptive batch size [24], and a wavelet transform, among others [25,26]. Although these methods aid in handling sensor data and extracting valuable features, their complexity and additional computational costs should not be overlooked.

Faced with diverse and complex activity data, deep networks that consist only of a convolutional neural network (CNN) or a recurrent neural network, such as long short-term memory (LSTM) or gated recurrent units (GRUs), have shown limitations in recent years. Consequently, researchers are increasingly inclined to use hybrid network structures [27]. These hybrid networks combine different types of neural network components to better adapt to diverse data and tasks. Kocşar et al. introduced a 2D CNN-LSTM hybrid model, which utilizes a dual-branch structure to extract diverse features from wearable sensor data [28]. Similarly, Venkatachalam et al. proposed a bimodal hybrid classifier with 1D CNN-LSTM to enhance HAR performance [29]. Han et al. proposed a heterogeneous convolutional architecture that generates more discriminative activity features by simultaneously employing different convolution kernel sizes to capture different time scales, and it can be integrated into existing models to improve accuracy [30].

Furthermore, with the rise of attention mechanisms, integrating them into hybrid models has become a significant trend. Attention mechanisms allow models to focus more on the important parts of the data, thereby enhancing HAR accuracy and efficiency [31]. Mim et al. proposed an efficient recognition model [32] that utilizes GRUs with an attention mechanism to extract temporal features and inception with a convolutional block attention module (CBAM) for spatial feature extraction, and it includes residual connections to address gradient vanishing issues. Ding et al. introduced a deep convolutional network hybrid model that extracts spatiotemporal features through three convolution layers and three parallel pathways [33]. The convolution layers capture the spatial features, while the parallel pathways, consisting of LSTM and GRUs, extract the temporal features. A channel attention mechanism, SENet, is introduced after the convolution layers to improve performance. Wang et al. proposed a multi-feature extraction model composed of Bi-GRU, a CNN, and ResNet, with different attention mechanisms allocated to each feature extraction layer [34]. Similarly, Sun et al. presented a multifeature extraction framework consisting of a capsule block and two GRU layers [35]. Each GRU layer in this framework incorporates different attention mechanisms, effectively enhancing recognition performance.

Although existing research has been able to effectively extract various features from sensor data and achieve good performance, there is still room for improvement in feature fusion and selection. In particular, existing works have not extensively addressed how to select the important feature channels from the extracted features according to the activity type. In this paper, we propose a residual multifeature fusion shrinkage network to address the above issues in wearable-based HAR. Our main contributions are as follows:

The residual multifeature fusion shrinkage network (RMFSN) is proposed for wearable-based HAR, which consists of a temporal feature extraction block (TFEB), multi-scale feature extraction block (MSFEB), channel attention shrinkage block (CASB), and a classifier network. In the RMFSN, a lightweight temporal attention mechanism is used for enhancing HAR accuracy. In addition, a channel attention shrinkage network is designed for adaptively selecting the most relevant features based on activity types, which further improves the accuracy and generalization. The RMFSN can effectively integrate various features extracted from sensor data while having a relatively small number of model parameters, making it suitable for potential applications in resource-constrained environments.

Data preprocessing for learning is carried out. Since the data are subject to missing values and anomalies, they cannot be directly used in deep neural networks for learning. Accordingly, we propose data cleaning methods for the investigated public datasets, namely UCI-HAR, WISDM, and OPPORTUNITY, considering the special features of each dataset.

Experiments have been conducted to demonstrate the efficiency of the proposed RMFSN in wearable-based HAR.

The remainder of this paper is organized as follows. Section 2 presents the details of the proposed RMFSN and the features of public datasets for HAR. Section 3 provides the performance evaluation of the RMFSN through experiments with public datasets. Section 4 concludes the paper.

3. Experimental Set-Up and Analysis

The proposed RMFSN model was constructed using PyTorch version 2.0.1. The experimental set-up involved a Windows 11 system with an Intel i9-13900HX CPU (Intel, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 4060 GPU (NVIDIA, Santa Clara, CA, USA) with 8 GB of video memory. During the experiments, model training utilized the cross-entropy loss function to measure the disparity between the model’s predicted activity states and the true states. The adaptive moment estimation (Adam) optimization algorithm was employed to adaptively adjust the learning rate. After conducting multiple experiments, we identified relatively optimal sets of hyperparameters. Some of the hyperparameter configurations for the three datasets are provided in Table 1. Since the data channels and sampling frequencies of the HAR sensors differ among the three investigated datasets, the data dimensions of the three datasets are different, and accordingly, the input sizes for the convolutional networks are distinct, as shown in Table 1, where (9, 128), (3, 100), and (113, 60) represent the (“channel”, “size of the sliding window”) of the corresponding datasets. The number of training iterations for each dataset was set to 100, and both the residual blocks and GRU layers were maintained at a depth of two layers. Additionally, the dilation rate and padding were set consistently across the datasets. The convolutional kernels in the dilated convolution layers had the same size as those in the standard convolution layers. It is important to note that the number of convolutional kernels maintained a consistent relationship with the number of feature channels.
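The (“channel”, “size of the sliding window”) input shapes can be illustrated with a minimal segmentation sketch. The function name `sliding_windows`, the toy recording, and the step of 64 samples (a 50% overlap) are assumptions made for illustration only; the overlap used for each dataset is not stated here.

```python
from typing import List


def sliding_windows(signal: List[List[float]], window: int, step: int) -> List[List[List[float]]]:
    """Split a (channels x time) recording into (channels x window) segments."""
    n_samples = len(signal[0])
    segments = []
    # Only full windows are kept; a trailing partial window is dropped.
    for start in range(0, n_samples - window + 1, step):
        segments.append([channel[start:start + window] for channel in signal])
    return segments


# Toy 9-channel recording with 384 time steps (hypothetical data).
recording = [[float(t + c) for t in range(384)] for c in range(9)]

# window=128 matches the (9, 128) shape; step=64 is an assumed 50% overlap.
segments = sliding_windows(recording, window=128, step=64)
print(len(segments), len(segments[0]), len(segments[0][0]))  # 5 9 128
```

Each segment has the shape (9, 128), matching the first input size listed above; changing the channel count and `window` gives the other two shapes.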

Note that since the RMFSN is data-driven, sufficient sensing data from human activity are required. It is time-consuming to collect and label such large amounts of raw data. Therefore, in this paper, we use the three typical public datasets UCI-HAR, WISDM, and OPPORTUNITY for training and evaluating the proposed RMFSN. Since some data in these three public datasets are subject to missing values and anomalies, we performed data cleaning with the method described in Section 2.2.2 before training and evaluating the RMFSN.

In addition, since the sensing data for HAR come from different volunteers with various sensors, the number of samples varies with the type of human activity, and accordingly, a single performance metric gives only a one-sided view of the performance of an HAR model. Therefore, we used multiple performance metrics to evaluate the wearable sensor-based HAR models, including the proposed RMFSN. The detailed discussions and definitions of the corresponding performance metrics are described in Section 3.1.

3.1. Performance Metrics

The performance metrics used to investigate a deep learning-based classification method included the accuracy, precision, recall, and F1 score. These metrics could be calculated based on the confusion matrix, which served as a visual tool for illustrating the relationship between a model’s classification outcomes and the true labels. The confusion matrix consisted of four key components: true positive (TP), true negative (TN), false positive (FP), and false negative (FN).

Specifically, TP represents the number of instances where the model correctly predicted positive class samples as positive, TN represents the number of instances where the model correctly predicted negative class samples as negative, FP represents the number of instances where the model erroneously predicted negative class samples as positive, and FN represents the number of instances where the model erroneously predicted positive class samples as negative.

Table 2 provides an example of a confusion matrix for a binary classification problem. The calculation methods for the above-mentioned metrics are shown in Equations (12)–(15):
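For reference, the standard definitions of the four metrics are written out below in terms of the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) counts. The ordering is assumed to follow the order in which the metrics are listed in the text.

```latex
\begin{align}
\mathrm{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\mathrm{Precision} &= \frac{TP}{TP + FP} \\
\mathrm{Recall}    &= \frac{TP}{TP + FN} \\
F_1 &= \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}
\end{align}
```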

Precision and recall are statistics specific to positive class predictions, so neither alone is well suited for HAR evaluation. Accuracy provides a comprehensive and intuitive measure by considering all samples; however, some datasets exhibit imbalanced distributions of activity classes, which can inflate the accuracy because correct predictions on the majority class dominate. The F1 score, in contrast, is the harmonic mean of precision and recall and offers a more balanced assessment of model performance. Therefore, we employed both the accuracy and the F1 score to evaluate the model, ensuring a more robust performance assessment in light of potential class imbalance in some datasets.
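The effect of class imbalance on these two metrics can be seen in a small worked example. The sketch below computes the accuracy and a macro-averaged F1 score from a multi-class confusion matrix; macro averaging (an unweighted mean of per-class F1 scores) and the toy matrix are assumptions for illustration, and the averaging scheme used in the experiments may differ.

```python
from typing import List, Tuple


def accuracy_and_macro_f1(cm: List[List[int]]) -> Tuple[float, float]:
    """cm[i][j] is the number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    f1_scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(cm[k]) - tp                       # true k, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return correct / total, sum(f1_scores) / n


# Hypothetical imbalanced case: 95 majority-class and 5 minority-class samples.
cm = [[94, 1],
      [4, 1]]
acc, macro_f1 = accuracy_and_macro_f1(cm)
print(f"accuracy={acc:.2f}, macro F1={macro_f1:.2f}")  # accuracy=0.95, macro F1=0.63
```

In this toy case, the accuracy stays at 0.95 even though only one of the five minority-class samples is recognized, whereas the macro F1 score drops to about 0.63.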

3.2. Evaluation Method

Cross-validation (such as k-fold cross-validation and stratified cross-validation) has been widely used for evaluating the generalization performance of deep learning models [44]. However, the cross-validation method may be unsuitable for segmented time series data utilizing overlapping windows. When sliding windows are used to partition the data, test data leakage arises because adjacent, overlapping windows can be allocated to different subsets (for example, one to the training set and the next to the test set) [45]. Such leakage can result in an overestimation of model performance, leading to potentially misleading outcomes; that is, the model’s generalization performance in real-world scenarios may be estimated too optimistically. Therefore, for segmented time series data, such as wearable sensor-based HAR data, a more cautious cross-validation strategy is required to mitigate this potential issue.
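The leakage mechanism can be made concrete by counting how many test windows share raw samples with a training window after a random split. This is a minimal sketch; the function names, the recording length of 1280 samples, the 50% overlap, and the 20% test fraction are all assumptions for illustration.

```python
import random
from typing import List, Tuple


def window_ranges(n_samples: int, window: int, step: int) -> List[Tuple[int, int]]:
    """Return the [start, end) sample range covered by each sliding window."""
    return [(s, s + window) for s in range(0, n_samples - window + 1, step)]


def count_leaky_test_windows(ranges: List[Tuple[int, int]], test_idx: List[int]) -> int:
    """Count test windows sharing at least one raw sample with a training window."""
    test_set = set(test_idx)
    train = [r for i, r in enumerate(ranges) if i not in test_set]
    leaky = 0
    for i in test_idx:
        a, b = ranges[i]
        # Two half-open ranges [a, b) and [c, d) overlap when a < d and c < b.
        if any(a < d and c < b for c, d in train):
            leaky += 1
    return leaky


ranges = window_ranges(n_samples=1280, window=128, step=64)  # assumed 50% overlap
rng = random.Random(0)
indices = list(range(len(ranges)))
rng.shuffle(indices)                      # random split that ignores time order
test_idx = indices[: len(indices) // 5]   # assumed 20% test fraction
print(len(test_idx), count_leaky_test_windows(ranges, test_idx))
```

With overlapping windows, a test window is leak-free only if both of its temporal neighbours also fall in the test set, which a random split rarely guarantees.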

This paper uses leave-one-subject-out (LOSO) cross-validation to alleviate the impact of this neighborhood bias on the performance evaluation. In LOSO, the number of validation iterations equals the number of subjects in the dataset. In each iteration, one subject’s data were selected as the test set, while the model was trained on the remaining subjects’ data. The final evaluation results were obtained by averaging the performance across all iterations.
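The LOSO procedure described above can be sketched as a simple split generator. The function name `loso_splits` and the toy subject labels are hypothetical; the sketch only yields index splits and leaves model training and scoring to the caller.

```python
from typing import Iterator, List, Tuple


def loso_splits(subject_ids: List[int]) -> Iterator[Tuple[int, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one split per subject."""
    for held_out in sorted(set(subject_ids)):
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train, test


# One subject label per window (hypothetical: three subjects, six windows).
subject_ids = [1, 1, 2, 2, 2, 3]
for held_out, train, test in loso_splits(subject_ids):
    print(held_out, train, test)
# 1 [2, 3, 4, 5] [0, 1]
# 2 [0, 1, 5] [2, 3, 4]
# 3 [0, 1, 2, 3, 4] [5]
```

Because every window of the held-out subject goes to the test set, no overlapping windows can straddle the training and test sets.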

3.3. Performance Analysis

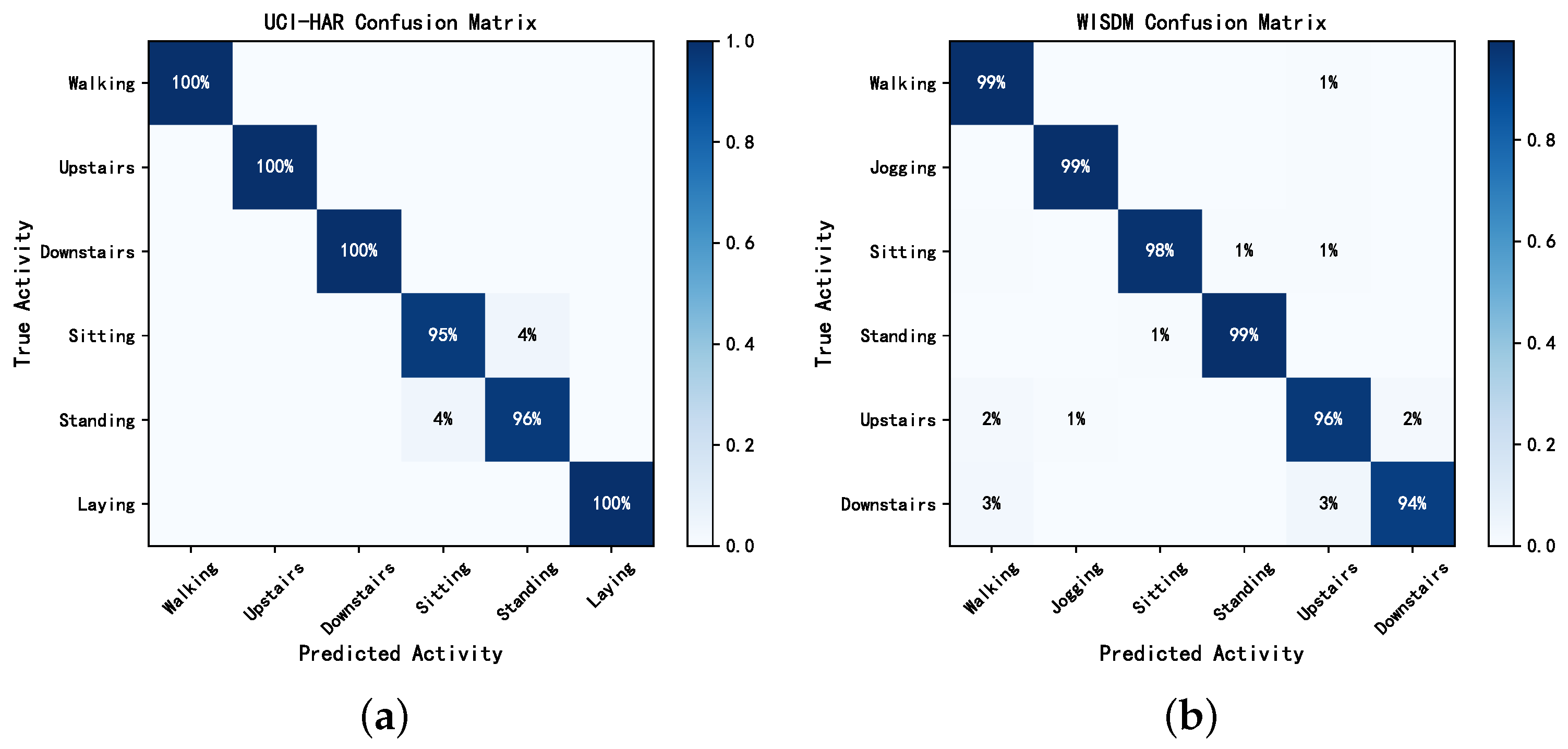

Table 3 presents the performance evaluation results of our RMFSN on the UCI-HAR, WISDM, and OPPORTUNITY datasets. The average F1 scores were 98.21 (0.81)%, 97.74 (1.10)%, and 92.20 (0.25)%, and the overall accuracy was 98.13 (0.73)%, 98.35 (0.78)%, and 93.89 (0.21)%, respectively, where the value in parentheses is the standard deviation. On the UCI-HAR dataset, activities such as “walking”, “upstairs”, “downstairs”, and “laying” exhibited consistently high performance across various metrics. In contrast, the performance of the “sitting” and “standing” activities showed some fluctuations, with a slightly higher standard deviation compared with other activities.

Similarly, on the WISDM dataset, the first four activities, namely “walking”, “jogging”, “sitting”, and “standing”, demonstrated commendable performance across different metrics. However, the “upstairs” and “downstairs” activities exhibited slightly larger performance fluctuations. On the OPPORTUNITY dataset, most activities had average F1 scores exceeding 90%, demonstrating that the proposed model exhibited excellent discriminative capability across multiple activity classes. A more intuitive visualization of the classification results for different activity categories is provided by the confusion matrices in Figure 8 and Figure 9.

As shown in Figure 8 and Figure 9, the RMFSN exhibited instances of mutual misclassification on each dataset. Notably, on the UCI-HAR dataset, there was a noticeable occurrence of mutual misclassifications for the activities “sitting” and “standing”. Similarly, on the WISDM dataset, mutual misclassifications were more pronounced for the activities “upstairs” and “downstairs”. This phenomenon may be attributed to the strikingly similar data distributions of these activities within their respective datasets. Additionally, in the OPPORTUNITY dataset, suboptimal performance was observed primarily in activities related to opening and closing drawers. This could be explained by our reliance solely on wearable sensor data for this dataset without incorporating information from other types of sensors. These phenomena also explain the slightly higher standard deviations observed for these activities in Table 3.

To sum up, our model demonstrated good performance on these three datasets, showcasing robust generalization capabilities.

3.4. Model Comparison and Visualization

To further evaluate the performance of the RMFSN model, we conducted comparisons with advanced models from recent years, using the accuracy and F1 scores as performance metrics. These studies used the same datasets to validate their model algorithms, although their data preprocessing methods varied. As such, the comparative results were not absolute but provided relative insights into the model’s performance.

Table 4 presents the comparison results with other models. Notably, our model had significantly fewer parameters than the compared methods. On the UCI-HAR dataset, our model outperformed the Bi-HAR model using a combination of the 1D-CNN and LSTM, achieving a 0.24% accuracy improvement. Compared with the ResNet model using heterogeneous convolutions, our model showed a 1.12% accuracy improvement. When compared with the GRU-INC and DMEFAM models, which also use GRUs to extract temporal features, our model demonstrated respective improvements of 1.86% and 2.13%. On the WISDM dataset, our model surpassed GTSNet, which uses group time-shift convolutions, and CapsNet, which incorporates capsule structures, with accuracy improvements of 9.48% and 1.55%, respectively. While GRU-INC achieved a slightly higher accuracy than our model (by 0.78%), it is important to note that our model had fewer parameters. On the OPPORTUNITY dataset, our model achieved an accuracy improvement of 2.34% compared with ResNet+HC, 3.52% compared with GRU-INC, and 6.42% compared with GTSNet. Likewise, the comparative results for the F1 scores did not show significant differences from the accuracy results.

Figure 10 shows heatmaps of the feature selection performed by the CASB of the RMFSN on UCI-HAR, which make our model more interpretable. The horizontal and vertical axes represent the time steps and feature channels, respectively. Each activity exhibited varying dependencies on features, and the RMFSN adapted its feature selection dynamically with changes in the time steps. The dynamic nature of the feature selection process underscores the model’s sensitivity to temporal variations in different activities. Meanwhile, by observing the heatmaps of the two different subjects, it can be seen that the feature selection pattern of the same activity had a high degree of similarity, indicating robustness in the RMFSN’s ability to consistently identify relevant features across different individuals. This robustness underscores the model’s resilience to variations in sensor data. It can also be seen from the figure that the heatmap distributions for the standing and sitting activities closely resemble each other, which may be attributed to the resemblance in sensor data for these two activities. Additionally, this observation indirectly explains the mutual misclassification in the confusion matrix presented in Figure 8.

3.5. Ablation Study

The purpose of the ablation experiments in this section is to validate the contribution of each module. All experiments used the classifier network proposed in this paper, and except for the removed modules, all other parameters were kept unchanged. The experimental results are shown in Table 5, and it can be observed that each module had some impact on the model’s performance, with the combination of these modules leading to a more significant performance improvement. On the UCI-HAR, WISDM, and OPPORTUNITY datasets, the combination of the three modules resulted in accuracy improvements of 6.71%, 8.26%, and 7.86%, respectively, compared with the baseline. Of particular note is the significant improvement on the OPPORTUNITY dataset, which may be attributed to the dataset containing a larger number of activity categories, allowing each module to fully leverage its capabilities.

In Table 5, C, T, and M represent the channel attention shrinkage block, temporal feature extraction block, and multi-scale feature extraction block, respectively.

3.6. Impact of Evaluation Method

To evaluate the impact of different evaluation methods (which use different dataset partitioning methods) on the performance evaluation of HAR methods, we compared the accuracy of our proposal under three types of evaluation methods: dataset splits with various ratios, n-fold cross-validation, and LOSO. As shown in Table 6, the accuracy varied with the evaluation method. However, the maximum deviations of the investigated methods for the UCI-HAR, WISDM, and OPPORTUNITY datasets were 0.02, 0.02, and 0.03, respectively. All are within the 95% confidence interval of LOSO, which demonstrates the robustness of our proposal to the choice of evaluation method.
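One way to check whether an accuracy value falls within a 95% confidence interval of the LOSO results is sketched below. How the interval was actually computed is not stated here, so the normal approximation (mean ± 1.96 standard errors over per-subject accuracies), the function names, and the numbers are all assumptions; with few subjects, a Student t quantile would give a wider interval.

```python
import math
import statistics
from typing import Sequence, Tuple


def confidence_interval_95(per_subject_acc: Sequence[float]) -> Tuple[float, float]:
    """Normal-approximation 95% interval for the mean per-subject accuracy."""
    mean = statistics.mean(per_subject_acc)
    # Standard error of the mean, using the sample standard deviation.
    sem = statistics.stdev(per_subject_acc) / math.sqrt(len(per_subject_acc))
    half_width = 1.96 * sem
    return mean - half_width, mean + half_width


def within_interval(value: float, interval: Tuple[float, float]) -> bool:
    low, high = interval
    return low <= value <= high


# Hypothetical per-subject LOSO accuracies (not values from this paper).
loso_acc = [0.97, 0.98, 0.99, 0.98, 0.96]
ci = confidence_interval_95(loso_acc)
print(ci, within_interval(0.98, ci))
```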

3.7. Overhead Analysis

Finally, we analyzed the computation overhead of the proposed RMFSN. We used the inference time, defined as the time it takes to predict a single sample, to evaluate the time cost of the RMFSN for recognizing an activity. We measured the inference time for different samples over 300 rounds and averaged the results. The model size represents the sum of the trainable model parameters and buffer size. FLOPs denotes the number of floating-point operations involved in model inference or training and serves as a crucial metric for evaluating the computational complexity. As illustrated in Table 7, for the wearable sensor-based HAR task, our model requires a reasonably small computation overhead and can be deployed on edge devices.
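The inference-time measurement described above can be sketched as follows. The helper `mean_inference_time` and the stand-in `dummy_predict` are hypothetical; in practice the callable would wrap the trained model, and on a GPU the device should be synchronized before each timer reading because kernels run asynchronously.

```python
import time
from typing import Callable, Sequence


def mean_inference_time(predict: Callable, samples: Sequence, rounds: int = 300) -> float:
    """Average wall-clock seconds per single-sample prediction over `rounds` calls."""
    total = 0.0
    for i in range(rounds):
        sample = samples[i % len(samples)]   # cycle through different samples
        start = time.perf_counter()
        predict(sample)
        total += time.perf_counter() - start
    return total / rounds


def dummy_predict(window):
    """Hypothetical stand-in for a model: index of the channel with the largest sum."""
    return max(range(len(window)), key=lambda c: sum(window[c]))


# Ten toy (9, 128) windows; 300 rounds follows the number stated in the text.
samples = [[[float(c * t % 7) for t in range(128)] for c in range(9)] for _ in range(10)]
print(f"{mean_inference_time(dummy_predict, samples) * 1e3:.4f} ms per sample")
```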

4. Conclusions

This paper proposed a residual multifeature fusion shrinkage network for wearable sensor-based human activity recognition. In the proposal, we used a multi-branch architecture, incorporating GRUs, dilated convolutions, and a 1D-CNN to extract diverse features. In addition, an attention mechanism was designed to enhance feature representation and crucial feature channel selection. The experimentation results, including the recognition results from trials on public datasets, ablation studies, and comparisons with existing works, demonstrated the robustness and excellent generalization capabilities of the proposal.

In future work, we will develop our own dataset with more human activity samples. In addition, some techniques such as those in [47] will be introduced to mitigate potential interference from electromagnetic radiation and thereby improve the quality of raw data. We will also improve the proposed RMFSN in the following two aspects. First, we plan to add an efficient channel attention network (ECANet) to bolster the robustness of feature selection, making it adaptive to datasets with similar or missing data. Second, we will develop a semi-supervised learning approach to leverage a substantial amount of unlabeled data in conjunction with labeled data for model training, aiming at improving the model’s generalization ability to adapt to various scenarios.