Abstract

Point cloud densification is essential for understanding the 3D environment. It provides crucial structural and semantic information for downstream tasks such as 3D object detection and tracking. However, existing registration-based methods struggle with dynamic targets due to the incompleteness and deformation of point clouds. To address this challenge, we propose a Kalman-based scene flow estimation method for point cloud densification and 3D object detection in dynamic scenes. Our method tackles the issue of localization errors in scene flow estimation and enhances the accuracy and precision of shape completion. Specifically, we introduce a Kalman filter to correct the dynamic target’s position while estimating long sequence scene flow, which helps eliminate the cumulative localization error during the scene flow estimation process. Extensive experiments on the KITTI 3D tracking dataset demonstrate that our method significantly improves the performance of LiDAR-only detectors, achieving superior results compared to the baselines.

1. Introduction

High-precision environment sensing is essential in advancing autonomous driving technology. It necessitates the integration of multiple sensors such as cameras, millimeter-wave radar, and LiDAR [1]. LiDAR utilizes a restricted set of scanning beams to perceive the environment, leading to the generation of a sparsely populated point cloud. The inadequate semantic information provided by a sparsely populated point cloud significantly impacts the efficacy of 3D perception tasks, including 3D object detection, scene semantic segmentation [2], and 3D object tracking [3]. Point cloud densification is crucial in comprehending 3D environments as it offers supplementary structural and semantic information to improve the accuracy of 3D perception tasks. Nevertheless, existing densification methods based on registration prove inadequate for dynamic targets due to the inherent limitations of the point cloud, such as incompleteness and deformation. To tackle this concern, we propose a Kalman-based scene flow estimation method for point cloud densification and 3D object detection in dynamic scenes.

Generally speaking, point cloud densification aims to reconstruct a dense and precise point cloud frame from a given sequence of sparse point clouds. This process is particularly challenging due to the variability and complexity of spatial data in real-world environments. Many registration-based methods [4,5,6,7,8] are currently being investigated for aligning multi-frame point clouds to obtain dense point cloud maps. The Iterative Closest Point (ICP) algorithm [9] is a classical point cloud alignment algorithm that optimizes the correspondence between two point clouds by minimizing their differences. However, its reliance on static geometries makes it less effective in scenarios where objects or the environment change over time. While the ICP family of algorithms is efficient at aligning large-scale point clouds, it often overlooks the dynamic attributes of the objects within the scene. These dynamic attributes include aspects such as the movement, rotation, and deformation of objects over time, which are crucial in dynamic environments. With the emergence of deep learning, several works [10,11,12,13,14] have started to exploit feature correspondences and spatial relationships within point clouds.

Advancements in feature matching techniques, such as SSL-Net’s approach to sparse semantic learning [15] and PGFNet’s strategy for preference-guided filtering [16], have been instrumental in enhancing the accuracy of feature correspondence in complex scenarios. These methods demonstrate the importance of accurately identifying and filtering key features, a concept that can significantly augment traditional methods like ICP in point cloud densification processes, especially in dynamic scenes. The ability to discern and track these dynamic attributes accurately is crucial for understanding changes in the scene over time, thereby enhancing 3D perception in applications like autonomous driving.

Scene flow estimation aims to fundamentally understand the evolving dynamics of the environment without making any assumptions about the scene structure or object motion. Early works [17,18,19,20] focused on stereo vision cameras, where 3D motion fields were estimated using a knowledge-driven approach. FlowNet3D [21] is the first deep learning-based end-to-end scene flow estimation network. It utilizes PointNet++ [22] as the backbone network while introducing a novel flow embedding layer. NSFP [23] is the first method for estimating scene flow based on runtime optimization. It represents the scene flow using a unique implicit regularizer and is not constrained by data-driven limitations. However, when estimating scene flow over a long sequence of point clouds, the estimator is limited by the motion relationships between two frames of point clouds, which can lead to the point cloud being guided to the wrong location. To overcome this localization error, an effective correction mechanism is necessary.

With the development of multi-modality techniques, several studies have proposed utilizing additional information to improve the performance of 3D object detectors. The authors of [7] propose a framework based on an improved ICP method to enhance the performance of deep learning-based classification by incorporating the shape information of the LiDAR point cloud. The authors of [24] explore a motion detection method based on point cloud registration. This method detects motion by analyzing the overlapping relationship between the registered source and target point clouds. The authors of [25] propose a point cloud feature fusion module based on scene flow estimation to enhance the performance of 3D object tracking.

The method proposed in this paper is based on scene flow estimation and aims to improve the density of dynamic objects in a long sequence of point clouds to enhance the performance of 3D object detection. This will allow multiple frames of these objects to converge into the same frame. However, existing scene flow estimators suffer from localization errors when estimating a long sequence of point clouds. These errors disrupt the structural information of the target and render it unsuitable for 3D perception tasks. Therefore, we introduce a Kalman filter to correct the scene flow estimation results and eliminate the localization errors frame by frame. This approach leads to improved performance in point cloud densification. Our contributions in this study are as follows:

- We propose a novel method for densifying point clouds by utilizing motion information from multi-frame point clouds with a novel scene flow estimator using a two-branch pyramid network as the implicit regularizer.

- We address the issue of localization error in long-sequence scene flow estimation by utilizing a Kalman filter to precisely adjust the position of dynamic objects.

- We compare our point cloud densification method with the ICP-based point cloud densification method and show that ours achieves higher accuracy and precision.

- We conduct extensive experiments on the KITTI 3D tracking dataset, demonstrating that our method improves the performance of LiDAR-only detectors over the baselines.

2. Related Work

2.1. Point Cloud Alignment and Densification

Point cloud alignment and densification are vital for 3D environment perception. Initially, methods like the Iterative Closest Point (ICP) and Normal Distributions Transform (NDT) were predominantly used for mapping and densification tasks [26]. Advancements in this field have led to methods that combine learning-based approaches with traditional algorithms, for instance, a hybrid method that integrates learning with ICP for aligning both rigid and variable point clouds. Here, ‘rigid’ refers to objects that maintain their shape and size over time, while ‘variable’ objects may change shape or deform. This hybrid method has achieved state-of-the-art performance [27].

Further innovations include the development of low-latency alignment methods suitable for real-world applications, which combine adaptive thresholding ICP, robustness kernels, motion compensation, and downsampling strategies [28]. In dynamic environments, enhanced NDT algorithms have been employed to estimate the static likelihood of points, thereby efficiently handling scenes with multiple moving objects [29]. Urban scene point cloud registration has also seen significant advancements with the introduction of multi-layer perceptron (MLP)-based models, which estimate transformations implicitly and show promising results [14]. Large-scale outdoor road scenes have been addressed by selecting key area laser point clouds for feature extraction and registration, focusing on both coarse and fine alignment [30].

The increasing availability of point cloud data has spurred research in scene flow estimation. Large-scale datasets with dense 3D motion ground truth have been developed for depth estimation refinement and scene flow analysis [31]. Innovative self-supervised methods using neural network-based constraints have been proposed to estimate scene flow, enabling multi-frame point cloud densification [23]. Recent advances in feature matching, such as those demonstrated in SSL-Net, PGFNet, JRA-Net, and MSA-Net, have further enhanced the capability to identify reliable correspondences crucial for accurate scene flow estimation [16,32,33]. However, suggestions for further optimization in this area have been made, advocating for the integration of registration, segmentation, and rigid-body flow estimation to enhance densification outcomes [34]. Techniques for fusing scene flow in non-rigid objects, especially in autopilot scenarios, have also been developed to improve the reliability of point cloud densification [35]. Moreover, various studies have employed advanced encoding-decoding architectures for point cloud completion and densification [36,37,38].

2.2. 3D Object Detection

The introduction of PointNet [39] and PointNet++ [22] revolutionized the extraction of features from sparse point cloud data, enabling effective classification, detection, and segmentation. Subsequent 3D detectors, trained solely on point cloud data, have shown impressive capabilities. PointPillars, for example, encodes the features of vertical pillars of points as pseudo-images, so that 2D detection techniques can be applied in a 3D context [40]. VoxelNet partitions point clouds into fixed-resolution voxels for feature encoding and then uses a Region Proposal Network (RPN) to perform 3D object detection. However, this representation loses spatial resolution and geometric detail of the point cloud, and increasing the voxel resolution makes the computational complexity grow cubically [41].

Recent developments have seen the emergence of self-integrated single-stage detectors for outdoor 3D object detection. By using knowledge distillation, these models optimize performance without additional computational effort [42]. Transformation-Equivariant 3D Detectors have been proposed, employing sparse convolutional backbones to extract transformed equivariant voxel features for high-performance detection [43].

In the PMPF approach for 3D object detection, point cloud data are enhanced via projection onto the image plane and the integration of ‘region pixels’ from corresponding image regions. These region pixels enrich the point cloud with additional image-derived information, allowing for improved performance when used with LiDAR-only detectors. This method demonstrates significant performance improvements in various LiDAR-based detectors [44]. Motion detection methods based on point cloud registration, combining geometric and structural features with neural network-based interests, have enhanced the detection and tracking of moving objects [24]. Novel multi-task models have been introduced for simultaneous scene flow estimation and object detection, achieving significant improvements in performance and latency [45]. These models represent a crucial advancement in the field, combining two complex tasks into a single efficient process.

Overall, the field of point cloud processing, particularly in alignment, densification, and 3D object detection, has seen rapid advancements with the integration of deep learning techniques and innovative algorithmic approaches. These developments not only enhance the accuracy and efficiency of existing methods but also open new avenues for research and application in various domains such as autonomous driving, robotics, and urban planning.

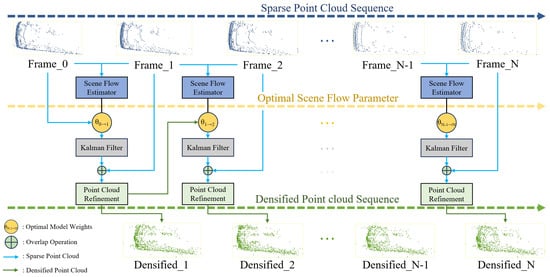

3. Proposed Method

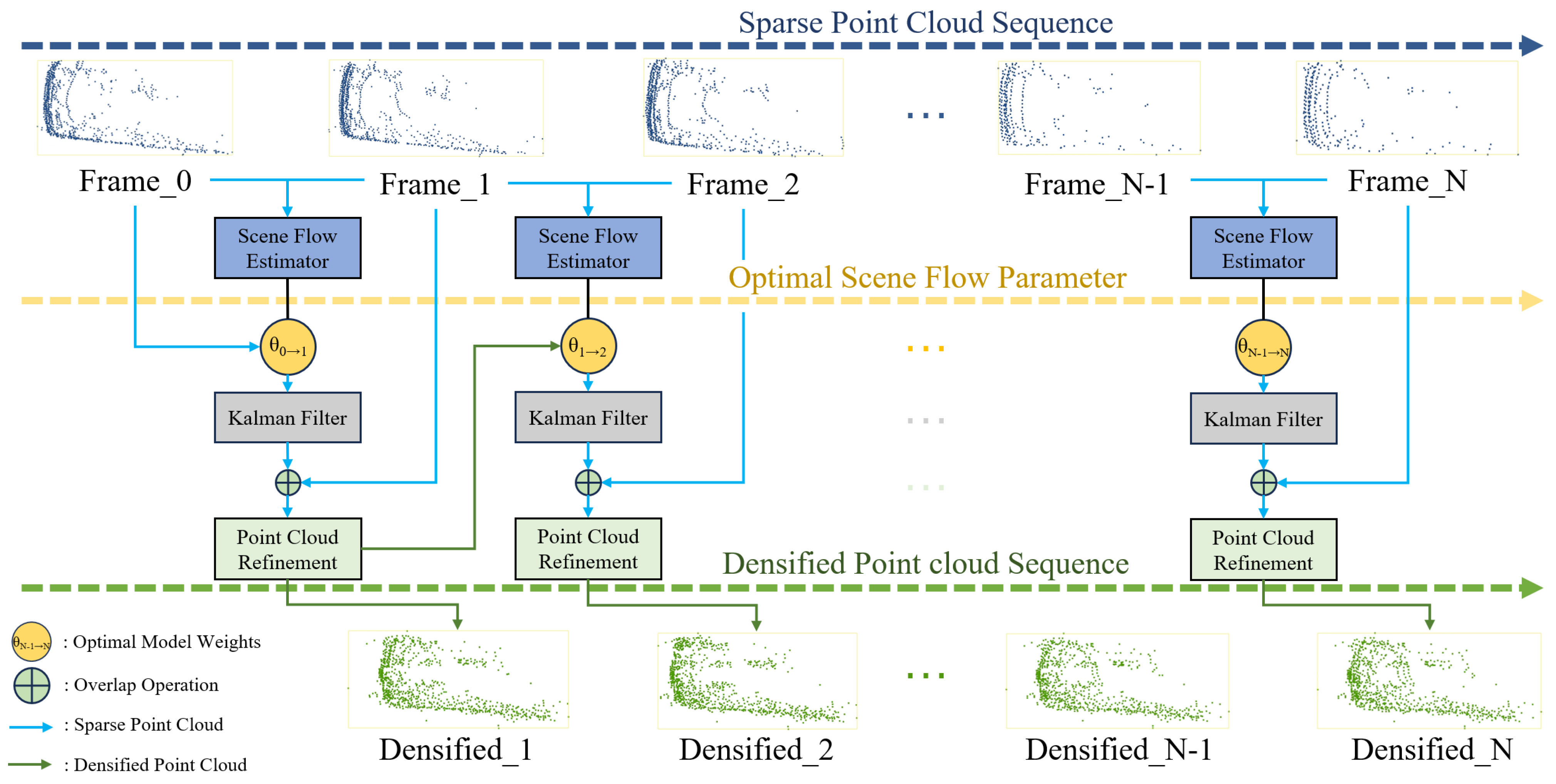

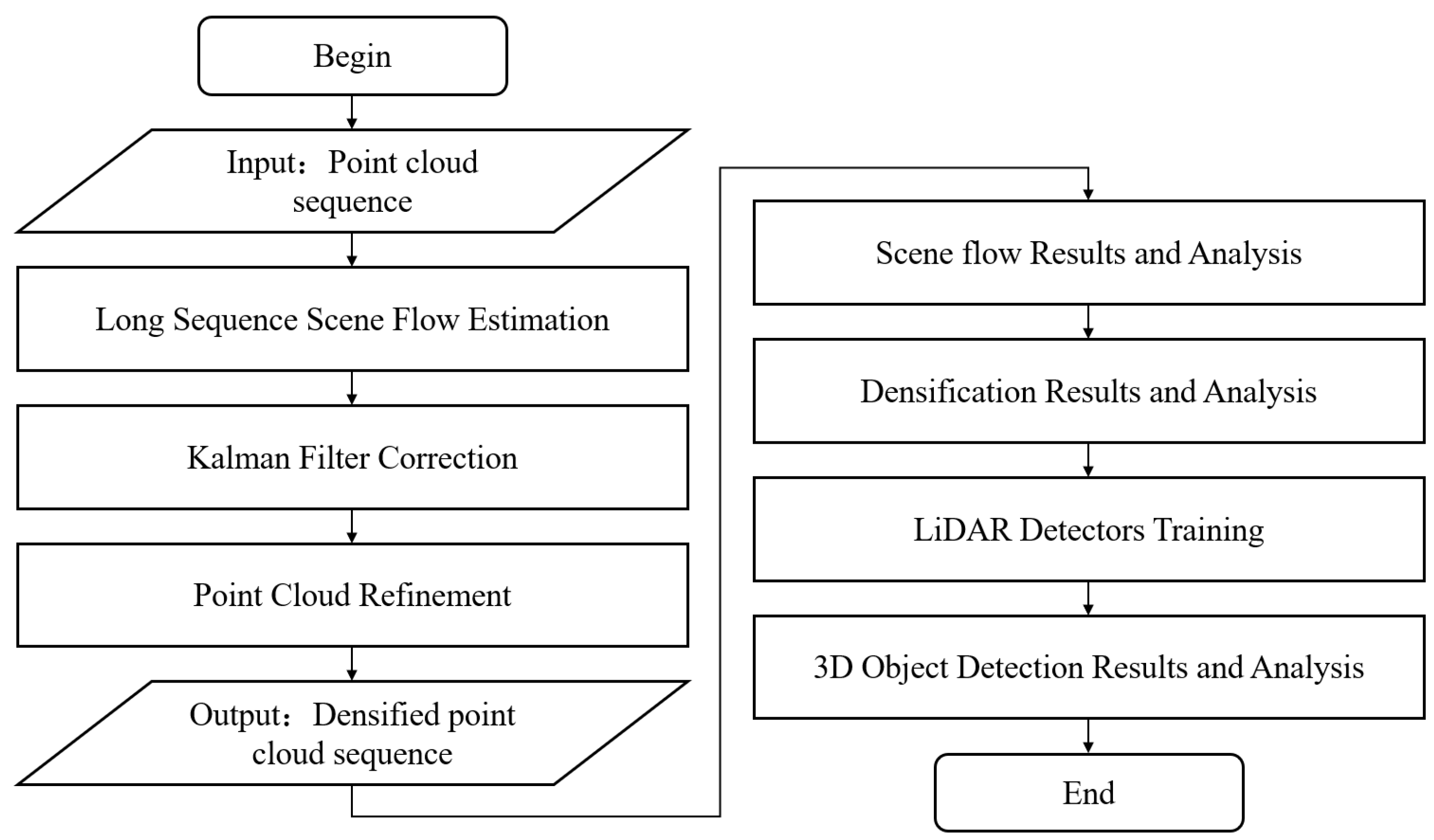

In this paper, a two-branch pyramid network is used as the scene flow estimator, and the adopted dataset is the KITTI tracking dataset [46]. Scene flow estimation is first performed on pairs of point cloud frames to obtain an optimal estimate of the motion field, and the corresponding optimal model weights are stored; these saved weights are then used to perform scene flow estimation over a long sequence. In this process, the Kalman filter is employed to rectify the estimated position of dynamic targets, thereby mitigating the cumulative localization error. The corrected results are refined according to the point cloud density of the current frame to reduce the number of points and minimize the impact of cumulative noise on the results. Finally, these operations are applied recursively to the sequence of point clouds from the initial frame to the final frame, resulting in the densification of the dynamic point cloud targets of the dataset. The overall architecture of the densification method is depicted in Figure 1. The pseudocode is presented in Algorithm 1.

Algorithm 1. Dynamic Point Cloud Densification Algorithm

Require: Point cloud sequence

Ensure: Densification results

Figure 1.

The architecture of densification method. The point clouds are fed into the scene flow estimator to obtain the motion field of the points. Then, the Kalman filter is used to correct the localization error during the long sequence. Finally, the point clouds are refined and deduplicated to obtain the densified results.
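The recursive procedure described in this section can be sketched roughly as the loop below. This is a minimal illustration rather than the authors' implementation: the function `densify_sequence` and its three callables (`estimate_flow`, `kalman_correct`, `refine`) are hypothetical names introduced here, and the stand-ins used in the example are deliberately trivial.

```python
import numpy as np

def densify_sequence(frames, estimate_flow, kalman_correct, refine):
    """Accumulate one dynamic target over a sequence of (N_i, 3) point clouds.

    The three callables are hypothetical placeholders:
      estimate_flow(src, dst)  -> (len(src), 3) per-point motion vectors
      kalman_correct(pts, dst) -> pts with the localization drift removed
      refine(pts, dst)         -> deduplicated union of pts and dst
    """
    accumulated = np.asarray(frames[0], dtype=float)
    for current in frames[1:]:
        current = np.asarray(current, dtype=float)
        flow = estimate_flow(accumulated, current)    # scene flow estimation
        warped = accumulated + flow                   # move points into the current frame
        corrected = kalman_correct(warped, current)   # Kalman filter correction
        accumulated = refine(corrected, current)      # refinement and deduplication
    return accumulated


# Toy stand-ins, only to make the sketch executable.
def centroid_flow(src, dst):
    return np.tile(dst.mean(axis=0) - src.mean(axis=0), (len(src), 1))

def no_correction(pts, dst):
    return pts

def union(pts, dst):
    return np.unique(np.vstack([pts, dst]), axis=0)

base = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
frames = [base, base + [2.0, 0.0, 0.0], base + [4.0, 0.0, 0.0]]
dense = densify_sequence(frames, centroid_flow, no_correction, union)
```

Passing the three stages in as callables keeps the loop independent of how each stage is realized, which is convenient when swapping the toy stand-ins for a learned estimator or a real filter.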

3.1. Long Sequence Scene Flow Estimation

Scene flow estimation is the process of determining the motion vectors that describe the dynamic changes between consecutive point cloud frames in a scene. These vectors provide insights into the relative movement of objects and surfaces from one frame to the next.

Let $P_t$ and $P_{t+1}$ denote the point cloud frames at consecutive time steps $t$ and $t+1$. The scene flow $F_t$ is the vector field that represents the estimated movement of each point from $P_t$ to its new position in $P_{t+1}$.

The scene flow quantifies the displacement of points between consecutive frames in a point cloud sequence, capturing the dynamic changes within the scene from time $t$ to $t+1$. This concept extends beyond the straightforward difference between coordinates, encompassing complex movements, including the translation, rotation, and deformation of objects in the environment.

In practice, there is not always a direct one-to-one correspondence between $P_t$ and $P_{t+1}$, so the scene flow represents the motion vector from each point in $P_t$ to its closest matching position in $P_{t+1}$. This relationship is modeled by the function $F$:

$$F_t = g(P_t; \Theta) \tag{1}$$

where $g$ denotes the scene flow estimation model and $\Theta$ represents the model weights. Scene flow estimation is performed for each pair of point cloud frames in the sequence $\{P_0, P_1, \ldots, P_n\}$, sequentially calculating the optimal scene flow model weights $\Theta^*$.

For comprehensive multi-frame analysis, the scene flow estimation from any frame i to frame j is iteratively updated as follows:

where $F_{0 \to j}$ is the cumulative scene flow from the initial frame to frame $j$, refined with the latest estimation of movement between frames $i-1$ and $i$. This process ensures that each new frame contributes to the overall scene dynamics by updating the cumulative scene flow with the latest available data. Consequently, the scene flow at any given frame $j$ is the result of aggregating the motion observed up to that point, thus reflecting a more accurate depiction of the movement patterns over time.

The above formulation ensures that scene flow estimation is treated as an iterative and cumulative process that refines our understanding of the scene dynamics over time rather than defining the point cloud frames themselves.
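To make the cumulative update concrete, the sketch below chains per-pair flow estimates so that points from an early frame are carried forward step by step. It assumes each pairwise estimator can be called on the already-warped points; `accumulate_flow` and the constant toy flows are hypothetical and stand in for the optimized per-pair models.

```python
import numpy as np

def accumulate_flow(points, pairwise_flows):
    """Warp `points` through a chain of per-pair flow estimators.

    pairwise_flows: list of callables; the k-th one maps (N, 3) points located in
    frame i+k to the (N, 3) motion vectors that carry them to frame i+k+1.
    Returns the warped points and the cumulative flow from the start frame.
    """
    warped = np.array(points, dtype=float)        # copy, so the input stays untouched
    cumulative = np.zeros_like(warped)
    for flow_fn in pairwise_flows:
        step = np.asarray(flow_fn(warped), dtype=float)
        cumulative = cumulative + step            # aggregate the motion observed so far
        warped = warped + step                    # positions used by the next estimator
    return warped, cumulative


# Toy estimators with constant motion, for illustration only.
shift_x = lambda pts: np.tile([1.0, 0.0, 0.0], (len(pts), 1))
shift_y = lambda pts: np.tile([0.0, 2.0, 0.0], (len(pts), 1))

start = np.zeros((4, 3))
end, total = accumulate_flow(start, [shift_x, shift_y])
```

Because every step is evaluated at the positions produced by the previous step, any error in one pairwise estimate is inherited by all later ones, which is the drift that Section 3.3 sets out to correct.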

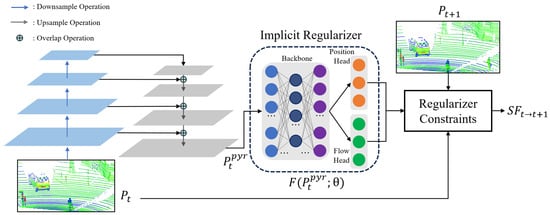

3.2. Two-Branch Pyramid Network

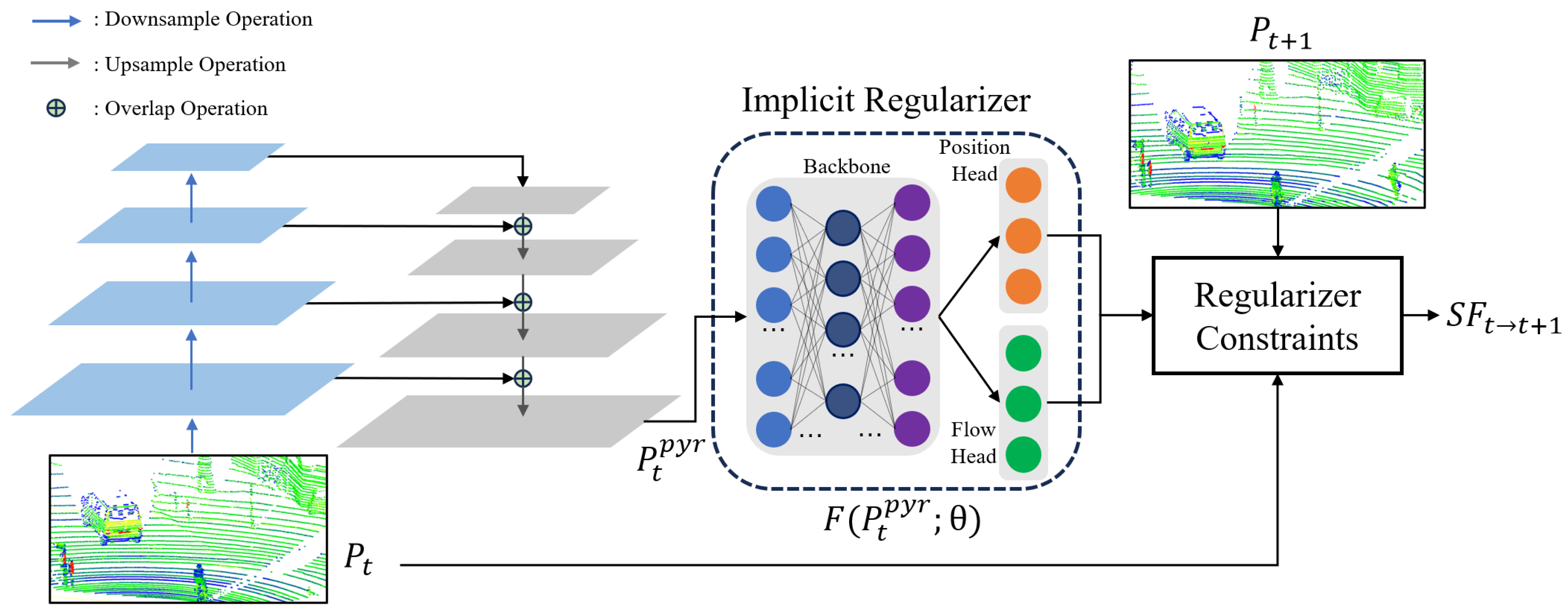

We have developed a two-branch pyramid network to serve as the model for the scene flow estimator, as illustrated in Figure 2. Frame $P_t$ is first fed into a pyramid feature extractor, which comprises a bottom-up and a top-down pathway for the point cloud. The number of sampling layers is set to 4 for both pathways, and the scale rate is set to 2. The output of each downsampling layer is combined with the result of the corresponding upsampling layer and passed as input to the next upsampling operation. The final output of the extractor is fed into an implicit regularizer for optimization.

Figure 2.

Overall architecture of the proposed two-branch pyramid network. The network mainly consists of a pyramid feature extractor and an MLP-based implicit regularizer. The regularizer constraints are formulated into two parts, which enable the output to satisfy the position and flow consistency. The output represents the estimated motion field between $P_t$ and $P_{t+1}$.

In the implicit regularizer, the backbone is constructed using a neural network based on an MLP. We include a position head and a flow head to encode the positional and motion information of the input point cloud. We adopt the Leaky Rectified Linear Unit (LeakyReLU) [47] as the activation function. Unlike the traditional ReLU activation function, which blocks negative input values, LeakyReLU allows a small, non-zero gradient when the unit is not active. It therefore passes a small fraction of the negative values, addressing the issue of ‘dead neurons’ that can occur with ReLU. This characteristic helps maintain the flow of gradients through the network, making it particularly useful for models where the preservation of information from negative input values is crucial. The input layer of the backbone consists of three channels, followed by six hidden layers with 128 hidden units each. The backbone has a 128-channel output, which is connected to the position head and the flow head. Each head comprises two hidden layers, each containing 128 hidden units. The output of each head consists of three channels, which correspond to the estimated coordinates and motion vectors in the XYZ coordinate system. Given the extracted features, the position head outputs the estimated coordinates, and the flow head outputs the estimated flow vectors.
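The layer layout described above can be sketched as a plain NumPy forward pass. This is only an illustration of the shapes involved: the weight initialization, the negative slope of 0.01, and the reading of the "128-channel output" as a separate seventh linear layer are assumptions, and the helper names (`init_mlp`, `forward`) are hypothetical.

```python
import numpy as np

def leaky_relu(x, negative_slope=0.01):
    # Negative inputs are scaled rather than zeroed; the slope value is an assumption.
    return np.where(x > 0, x, negative_slope * x)

def init_mlp(sizes, rng):
    # One (weight, bias) pair per linear layer; the initialization scheme is an assumption.
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(x, layers, activate_output):
    for k, (weight, bias) in enumerate(layers):
        x = x @ weight + bias
        if activate_output or k < len(layers) - 1:
            x = leaky_relu(x)
    return x

rng = np.random.default_rng(0)
backbone = init_mlp([3] + [128] * 6 + [128], rng)    # 3 -> six hidden layers of 128 -> 128
position_head = init_mlp([128, 128, 128, 3], rng)    # two hidden layers of 128 -> XYZ
flow_head = init_mlp([128, 128, 128, 3], rng)        # two hidden layers of 128 -> flow

features = rng.normal(size=(1024, 3))                # stand-in for the extractor output
shared = forward(features, backbone, activate_output=True)
est_position = forward(shared, position_head, activate_output=False)
est_flow = forward(shared, flow_head, activate_output=False)
```

Both heads read the same 128-channel representation, so the positional and motion estimates are coupled through the shared backbone weights.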

In the regularizer constraints, we formulate the constraints as two parts. On the one hand, the combined with the should be consistent with the . On the other hand, the subtracted from the should be consistent with . Thus, the objective function can be defined as

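where $D_{\mathrm{CD}}(\cdot,\cdot)$ denotes the Chamfer distance function [48], which is defined as

$$D_{\mathrm{CD}}(A, B) = \sum_{a \in A} \min_{b \in B} \lVert a - b \rVert_2^2 + \sum_{b \in B} \min_{a \in A} \lVert a - b \rVert_2^2 \tag{4}$$

where $a$ and $b$ are points from point clouds $A$ and $B$.

During the optimization process, we follow the gradient descent technique and obtain the optimal scene flow model weights $\Theta^*$, as shown in Equation (5).

$$\Theta^* = \arg\min_{\Theta} \mathcal{L}(\Theta) \tag{5}$$

where $\mathcal{L}$ denotes the objective function.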
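The Chamfer distance that drives the optimization can be sketched in a few lines. The version below uses the summed squared nearest-neighbour form, which is one common variant and an assumption here; other variants average instead of summing or drop the square. The brute-force pairwise matrix is only practical for small clouds.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between (N, 3) and (M, 3) point sets."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # sq_dist[i, j] is the squared Euclidean distance between a[i] and b[j].
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    a_to_b = sq_dist.min(axis=1).sum()   # every point of a to its nearest point in b
    b_to_a = sq_dist.min(axis=0).sum()   # every point of b to its nearest point in a
    return a_to_b + b_to_a

cloud_a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
cloud_b = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
distance = chamfer_distance(cloud_a, cloud_b)
```

The distance needs no point-to-point correspondence, which is why it suits consecutive LiDAR frames whose points do not match one to one.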

3.3. Kalman Filter Correction

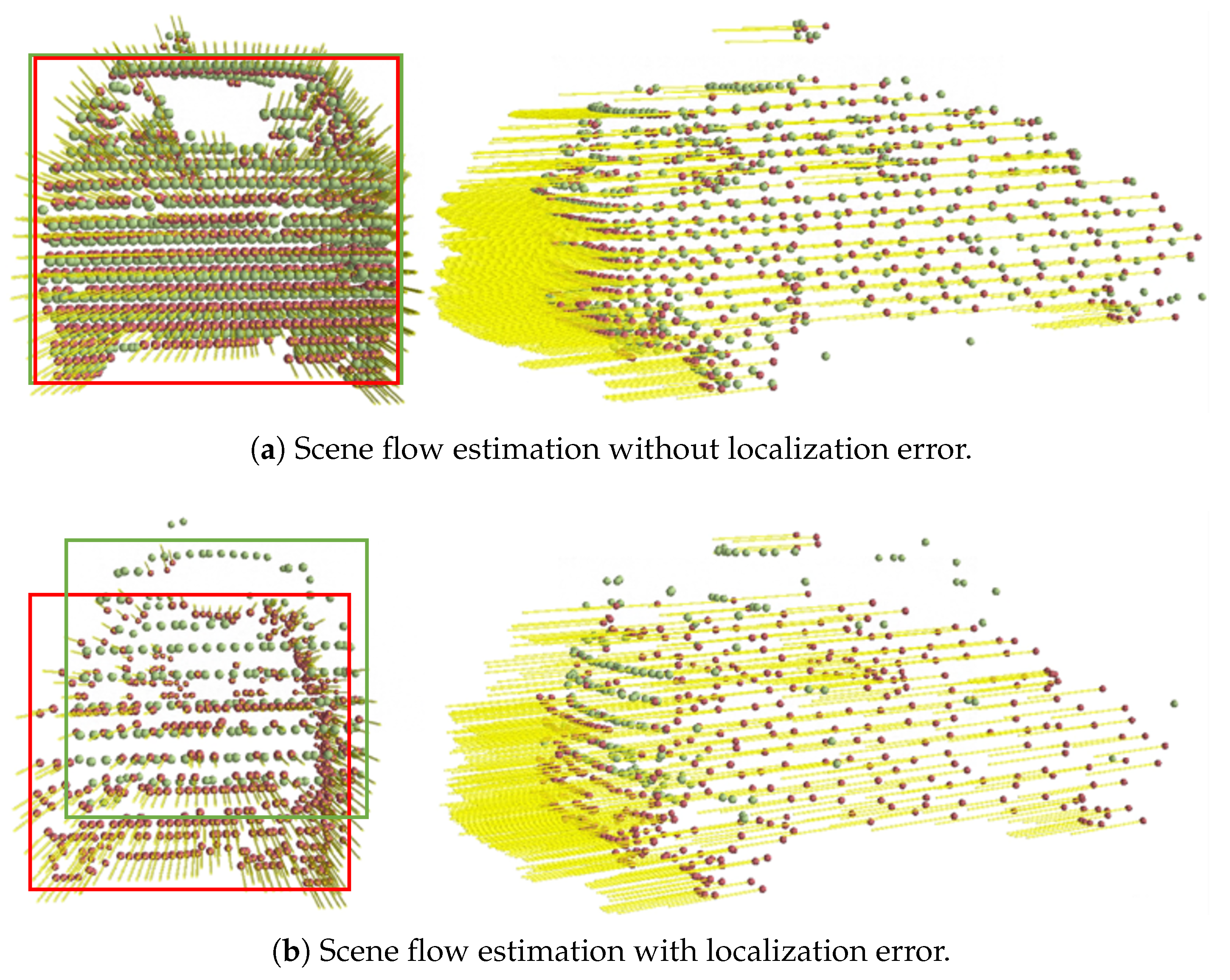

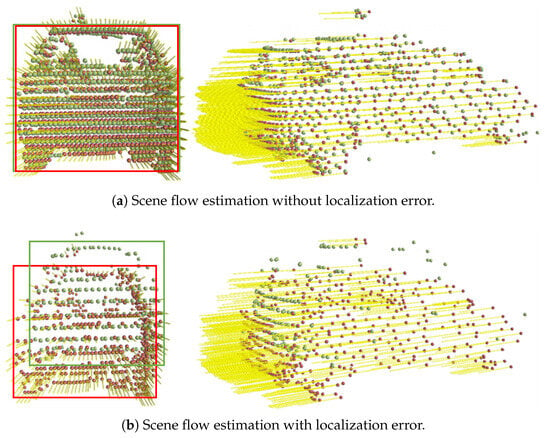

However, although each set of scene flow model weights is the optimal estimate for its corresponding point cloud pair, these weights tend to accumulate localization errors when scene flow is estimated over long sequences, as depicted in Figure 3.

Figure 3.

Localization error in long sequence scene flow estimation: (a) without localization error, red point cloud matches the green ground truth; (b) with localization error, red point cloud shows noticeable drift indicating error significance.

As depicted in Figure 3, the scene flow estimation model’s performance is visualized. In Figure 3a, we observe that the red point cloud, representing the estimated scene flow, closely aligns with the green point cloud, which represents the ground truth. This alignment indicates a precise estimation without localization error. Conversely, Figure 3b reveals the challenges posed by cumulative localization errors. Despite the estimator maintaining the overall shape of the point cloud, there is a noticeable drift towards the lower left, underscoring the significance of robust error correction mechanisms in long sequence estimations.

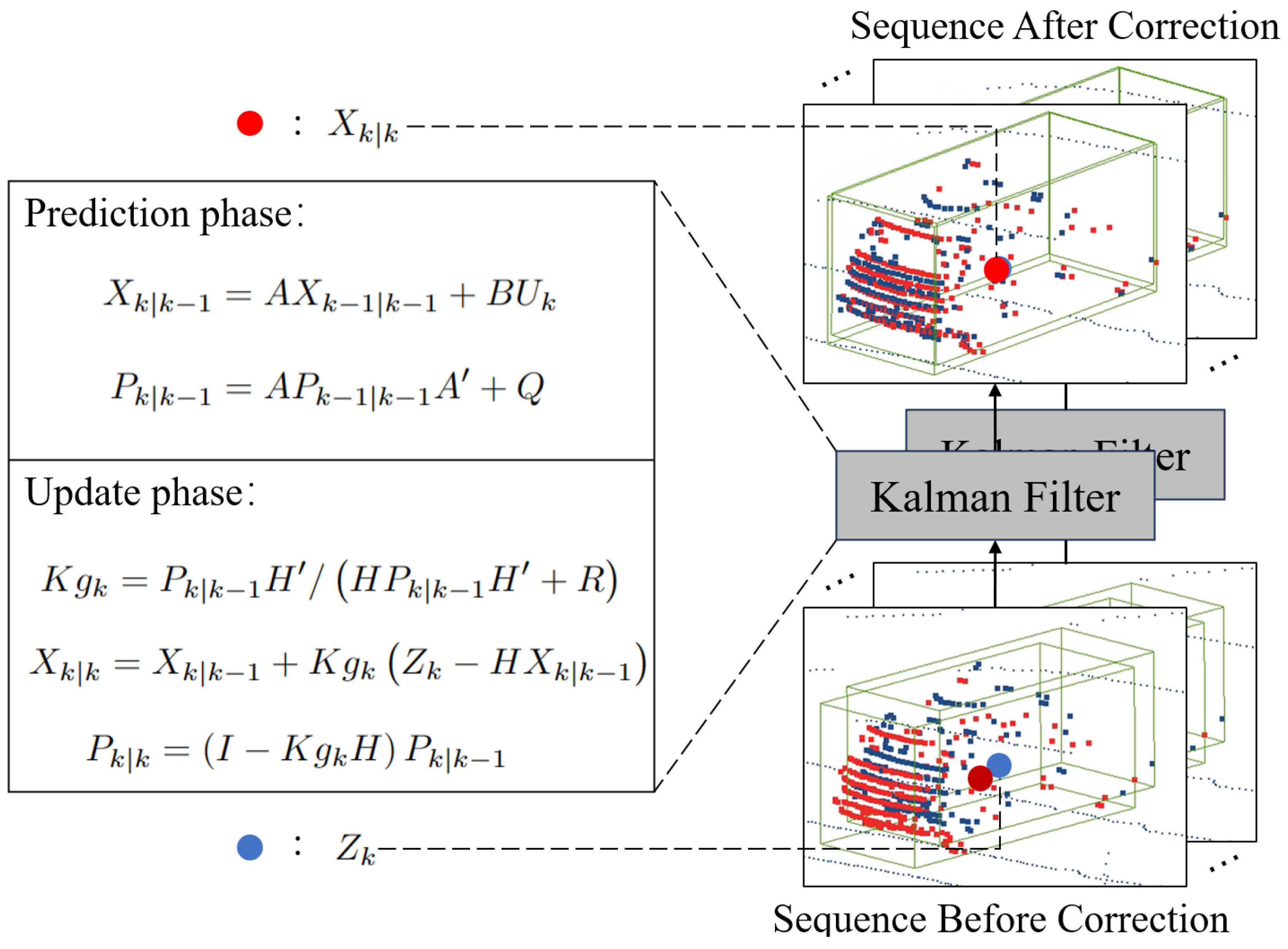

To eliminate the cumulative localization error of the point cloud during long sequence estimation, the Kalman filter [49] is introduced to correct the positions of the dynamic targets.

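The cluster center of the dynamic target is represented as the state quantity $X = [x, y, z, v_x, v_y, v_z]^{\top}$, where $x, y, z$ denote the spatial coordinates and $v_x, v_y, v_z$ represent the velocity components. Assuming that the dynamic target adheres to uniform motion, the transition matrix $A$ is constructed to reflect this. In the matrix, $\Delta t$ represents the time interval between successive observations or states. It is used to integrate the effect of time on the movement and velocity of the target. The transition matrix $A$ is thus defined as:

$$A = \begin{bmatrix} 1 & 0 & 0 & \Delta t & 0 & 0 \\ 0 & 1 & 0 & 0 & \Delta t & 0 \\ 0 & 0 & 1 & 0 & 0 & \Delta t \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{bmatrix} \tag{6}$$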

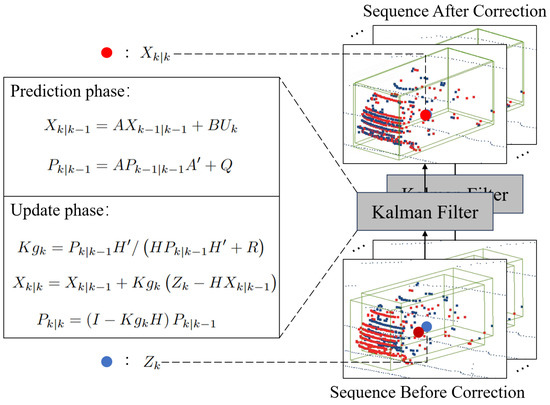

The Kalman filter consists of prediction and updating, as depicted in Figure 4:

Figure 4.

The procedure of Kalman filter correction.

Here, $\hat{X}_{k-1}$ is the optimal outcome of the previous state, $A$ and $B$ are the system parameters, $u_k$ is the control quantity of the present state, and $\hat{X}_k^-$ is the prediction of $X_k$. $\Sigma_{k-1}$ is the covariance of $\hat{X}_{k-1}$, $A^{\top}$ is the transpose matrix of $A$, $Q$ is the covariance of the system process noise, and $\Sigma_k^-$ is the covariance of $\hat{X}_k^-$. $H$ is the observation matrix, $R$ is the noise covariance of the system measurements, and $K_k$ is the Kalman gain. $Z_k$ is the measured value, $\hat{X}_k$ is the optimal predicted value at the current moment, and $\Sigma_k$ is the covariance of $\hat{X}_k$.

The center of the dynamic target is corrected using the optimal Kalman filter prediction value $\hat{X}_k$, which eliminates the localization error frame by frame and yields accurate scene flow estimation results for a long sequence of point clouds.
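The prediction and update steps can be sketched with a standard linear Kalman filter under a constant-velocity model. Several choices below are assumptions made for illustration: the control term is dropped (uniform motion), only the cluster center position is observed, the noise covariances `Q` and `R` are arbitrary, the time step of 0.1 s is a placeholder, and the helper `recenter` is a hypothetical way of applying the corrected center to a cluster.

```python
import numpy as np

def constant_velocity_model(dt):
    """Transition matrix for the state [x, y, z, vx, vy, vz] and a position-only observation matrix."""
    A = np.eye(6)
    A[:3, 3:] = dt * np.eye(3)                       # position += velocity * dt
    H = np.hstack([np.eye(3), np.zeros((3, 3))])     # assumption: only the center position is measured
    return A, H

def kalman_step(x_prev, cov_prev, z, A, H, Q, R):
    # Prediction (control input omitted under the uniform-motion assumption).
    x_pred = A @ x_prev
    cov_pred = A @ cov_prev @ A.T + Q
    # Update with the measured cluster center z.
    gain = cov_pred @ H.T @ np.linalg.inv(H @ cov_pred @ H.T + R)
    x_new = x_pred + gain @ (z - H @ x_pred)
    cov_new = (np.eye(len(x_prev)) - gain @ H) @ cov_pred
    return x_new, cov_new

def recenter(cluster, corrected_center):
    # Translate the whole cluster so that its centroid sits at the corrected center.
    return cluster - cluster.mean(axis=0) + corrected_center

A, H = constant_velocity_model(dt=0.1)
Q = 1e-3 * np.eye(6)                                 # illustrative process noise
R = 1e-2 * np.eye(3)                                 # illustrative measurement noise
state = np.array([0.0, 0.0, 0.0, 1.0, 0.0, 0.0])     # moving along +x at 1 m/s
cov = np.eye(6)
measurement = np.array([0.1, 0.0, 0.0])              # consistent with uniform motion
state, cov = kalman_step(state, cov, measurement, A, H, Q, R)

cluster = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
moved = recenter(cluster, np.array([5.0, 0.0, 0.0]))
```

When the measurement agrees with the predicted position, the innovation is zero and the update leaves the prediction unchanged; a drifting measurement is instead pulled back towards the trajectory implied by the motion model.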

3.4. Point Cloud Refinement

After accumulating multi-frame point clouds, duplicate points exist in the same part of the target. We propose refining and deduplicating the point cloud to reduce the noise accumulation on the densification result and ensure the uniformity of the target object’s density.

The search radius defines the maximum distance within which a point in the Kalman-corrected scene flow estimation result is considered to be a duplicate of a point in the current frame $P_j$. The average point cloud distance $D$ of point cloud $P_j$ is used as the search radius and is calculated by Equation (7):

$$D = \frac{1}{n} \sum_{k=1}^{n} \sqrt{(x_k - \bar{x})^2 + (y_k - \bar{y})^2 + (z_k - \bar{z})^2} \tag{7}$$

where $\bar{x}$, $\bar{y}$, $\bar{z}$ are the mean values of the point cloud in the x, y, z dimensions, respectively, and $n$ is the number of points.

If a point in $P_j$ is found within this radius of a point $p$, then $p$ is replaced with the nearest point in $P_j$; otherwise, $p$ is retained. This approach allows us to efficiently deduplicate and refine the point cloud, enhancing the overall quality of the densification results. By iterating this process for all values of $i$ ranging from 0 to $j-1$, we obtain the refined densification results for the first $j$ frames.
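The replace-or-retain rule can be sketched as follows. The radius is taken to be the mean distance of the current frame's points to their centroid, which is one reading of the average point cloud distance and should be treated as an assumption; the function names are hypothetical, and the brute-force distance matrix is only meant for small clusters.

```python
import numpy as np

def average_point_distance(cloud):
    """Mean Euclidean distance of the points to the cloud centroid (assumed form of D)."""
    cloud = np.asarray(cloud, dtype=float)
    return np.linalg.norm(cloud - cloud.mean(axis=0), axis=1).mean()

def refine(corrected, current):
    """Replace each corrected point by its nearest current-frame point if one lies within D."""
    corrected = np.asarray(corrected, dtype=float)
    current = np.asarray(current, dtype=float)
    radius = average_point_distance(current)
    dist = np.linalg.norm(corrected[:, None, :] - current[None, :, :], axis=-1)
    nearest = dist.argmin(axis=1)
    is_duplicate = dist[np.arange(len(corrected)), nearest] <= radius
    # Duplicates snap onto the current frame; all other points are retained as new detail.
    return np.where(is_duplicate[:, None], current[nearest], corrected)

current = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
corrected = np.array([[0.5, 0.0, 0.0], [10.0, 0.0, 0.0]])
refined = refine(corrected, current)
dense = np.unique(np.vstack([current, refined]), axis=0)   # merge and drop exact repeats
```

In this toy case the first corrected point lies within the radius and collapses onto an existing point, while the second is far from the current frame and is kept.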

4. Experiments

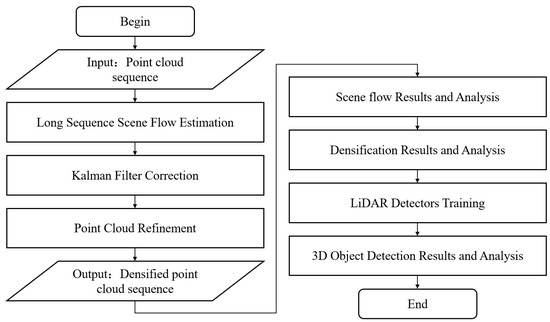

In this section, we first describe the parameter settings of the scene flow estimation model, along with the hardware and software configuration employed. We then fine-tune our model on the KITTI scene flow dataset and compare our method against other state-of-the-art scene flow estimation techniques. Next, we compare the proposed method with the ICP algorithm in terms of densification outcomes. Finally, for a comprehensive analysis, we evaluate five distinct LiDAR detectors on both the raw and the densified KITTI tracking dataset. The overall methodology and sequential analysis steps in our approach are summarized in Figure 5.

Figure 5.

Overall flowchart of the proposed method for point cloud densification and 3D object detection.

4.1. Experimental Setup

The hardware and software configurations utilized in this paper are outlined in Table 1.

Table 1.

Hardware and software configurations.

In our experimental setup, parallel computing techniques were employed to accelerate the processing, primarily utilizing GPU computation. The workflow involved two key computational stages. Firstly, the scene flow estimation was carried out using GPU-based computations. This step leverages the powerful parallel processing capabilities of the GPU to efficiently handle the complex calculations required by the scene flow model. Following the scene flow estimation, the point cloud positions were corrected using the Kalman filter, a process that was executed on the CPU. For dynamic targets identified in the point cloud space through clustering, multiple CPU threads were allocated to perform Kalman filter operations in parallel. This parallelization on the CPU allowed for efficient handling of multiple dynamic targets simultaneously. Once all the corrections for a particular frame were completed, the process proceeded to the next frame, maintaining this sequence throughout the experiment.
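The per-frame CPU stage described above can be sketched with a thread pool: all targets of one frame are corrected concurrently, and the next frame starts only once they have finished. The names `correct_targets_in_parallel` and `correct_one`, as well as the worker count, are hypothetical; how much speed-up threads give in Python depends on how much of the per-target work releases the interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor

def correct_targets_in_parallel(targets, correct_one, max_workers=4):
    """Run the correction for every dynamic target of one frame on its own worker thread."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() preserves the input order and returns once all targets are processed.
        return list(pool.map(correct_one, targets))

def process_sequence(frames_of_targets, correct_one):
    # Frames are handled strictly one after another; only the targets inside a frame run in parallel.
    return [correct_targets_in_parallel(targets, correct_one) for targets in frames_of_targets]

# Toy correction: shift each (scalar) target position by a fixed offset.
corrected = process_sequence([[1.0, 2.0, 3.0], [4.0]], lambda center: center + 0.5)
```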

For the task of object detection, our experimental phase primarily focused on the training and validation of the models. This component was conducted separately and predominantly utilized GPU computations. The use of GPUs in this phase was crucial due to their ability to handle the intensive calculations required for deep learning tasks, significantly speeding up the training and validation process of the detection models.

This combination of GPU and CPU parallel processing not only optimized our computational workflow but also ensured the efficient handling of both the scene flow estimation and object detection tasks, crucial for the success of our experiment.

The scene flow estimator is optimized with the Adam optimizer, which minimizes the objective function. Considering the abundance of point clouds and the risk of overfitting, the learning rate is set to $1 \times 10^{-3}$, and the optimization is limited to 5000 iterations.

4.2. Scene Flow Results and Analysis

To thoroughly assess our proposed scene flow estimation model, we conducted experiments on the KITTI Scene Flow dataset [50], which comprises 100 training and 50 test scenarios. Each scenario consists of several consecutive stereo image pairs along with corresponding LiDAR point cloud data and precise ground truth annotations, covering diverse driving environments such as urban streets, rural roads, and highways.

The performance of the scene flow estimator was evaluated using a set of established metrics from previous works [51,52]: End-Point Error in 3D (EPE3D), strict 3D accuracy (Acc3d_strict), relaxed 3D accuracy (Acc3d_relax), and the outlier ratio (Out.). Together, these metrics assess the estimator’s accuracy and robustness and provide a basis for benchmarking against other state-of-the-art techniques. They are defined as follows:

- End-Point Error in 3D (EPE3D): The average Euclidean distance between the estimated flow field and the ground-truth flow field, which provides a measure of the model’s predictive accuracy.

- Strict 3D Accuracy (Acc3d_strict): The percentage of points with an end-point error of less than 5 cm or a relative end-point error of less than 5%, measuring the model’s accuracy under stringent conditions.

- Relaxed 3D Accuracy (Acc3d_relax): The percentage of points with an end-point error of less than 10 cm or a relative end-point error of less than 10%, assessing the model’s performance under more lenient conditions.

- Outlier Ratio (Out.): The percentage of points where the end-point error exceeds 30 cm or the relative end-point error is greater than 10%, identifying predictions with substantial errors.
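The four metrics listed above can be computed directly from the per-point errors, as in the sketch below. It assumes that the flow vectors are expressed in metres and that the relative error is the end-point error divided by the ground-truth flow magnitude; the small `eps` guarding against division by zero and the function name are additions made for this illustration.

```python
import numpy as np

def scene_flow_metrics(pred_flow, gt_flow, eps=1e-8):
    """EPE3D, strict/relaxed accuracy and outlier ratio for (N, 3) flow arrays in metres."""
    pred_flow = np.asarray(pred_flow, dtype=float)
    gt_flow = np.asarray(gt_flow, dtype=float)
    err = np.linalg.norm(pred_flow - gt_flow, axis=1)        # per-point end-point error
    rel = err / (np.linalg.norm(gt_flow, axis=1) + eps)      # per-point relative error
    return {
        "epe3d": err.mean(),
        "acc3d_strict": np.mean((err < 0.05) | (rel < 0.05)),
        "acc3d_relax": np.mean((err < 0.10) | (rel < 0.10)),
        "outlier": np.mean((err > 0.30) | (rel > 0.10)),
    }

gt = np.array([[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
pred = np.array([[1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])   # one exact estimate, one off by 1 m
metrics = scene_flow_metrics(pred, gt)
```

Note that the accuracy and outlier criteria are not complementary: a point with an error between 10 cm and 30 cm and a relative error below 10% counts towards neither the relaxed accuracy failures nor the outliers.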

Having defined the evaluation metrics, we now introduce the four scene flow estimation models against which our estimator is benchmarked:

- Graph Prior [53] employs a graph-based approach for spatial consistency and geometric coherence, beneficial for large-scale datasets in driving contexts.

- Neural Prior [23] leverages deep learning to encode motion patterns, aiming for robust flow estimation amidst environmental noise and occlusions.

- SCOOP [54] is a minimally supervised technique that learns point features and refines flow via soft correspondences, making it suitable for training with limited data.

- OptFlow [55] utilizes an optimization strategy with local correlation and graph constraints, efficiently differentiating between static and dynamic points and showcasing rapid convergence with high accuracy.

The effectiveness of our scene flow estimation model was rigorously evaluated on the KITTI Scene Flow dataset, with the results being compared against several state-of-the-art methods. The comparative results are summarized in Table 2.

Table 2.

Comparative results in the KITTI scene flow dataset.

Our method achieved the lowest End-Point Error in 3D (EPE3D) of 0.027, indicating the highest precision in estimating the motion vectors. While OptFlow excelled in terms of strict and relaxed 3D accuracy, our method demonstrated competitive performance with a balanced approach towards accuracy (94.3% in Acc3d_strict and 96.8% in Acc3d_relax) and outlier management, evidenced by the lowest outlier ratio of 10.4%. These results signify our method’s capability to accurately estimate scene flow while maintaining robustness against errors. Notably, the non-learning-based nature of our method, alongside Graph Prior, Neural Prior, and OptFlow, reflects the potential of algorithmic approaches without extensive reliance on large training datasets.

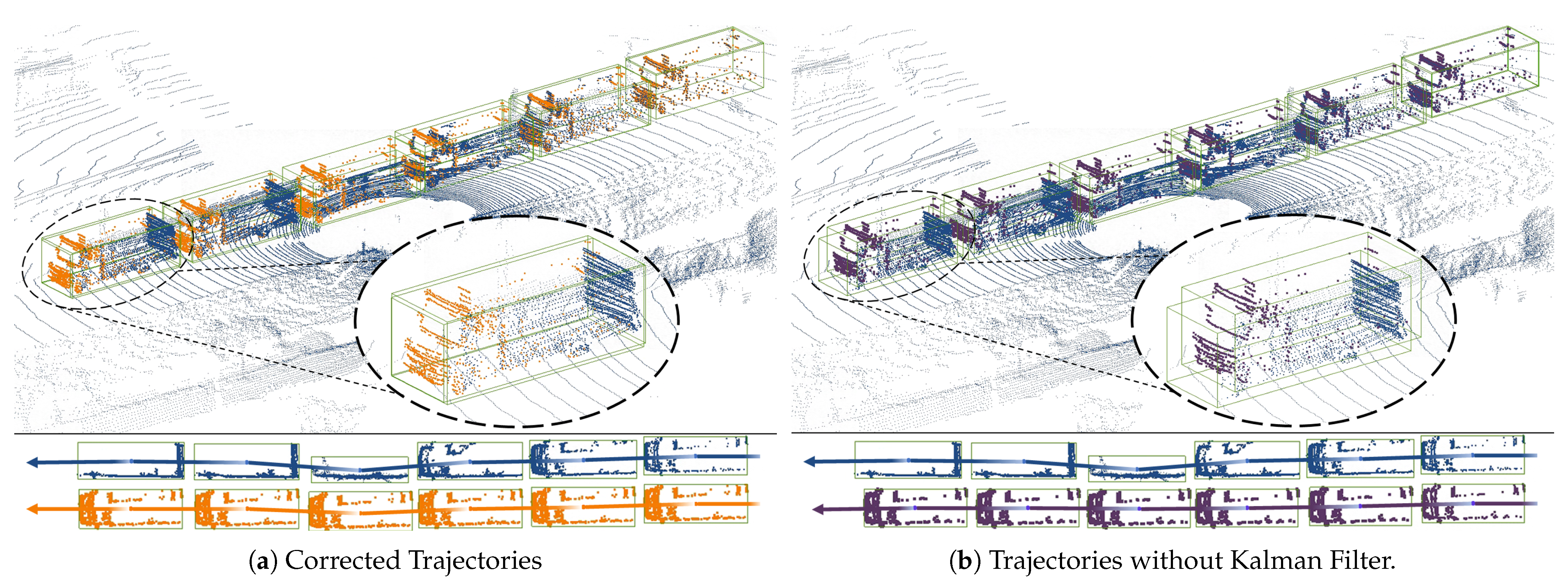

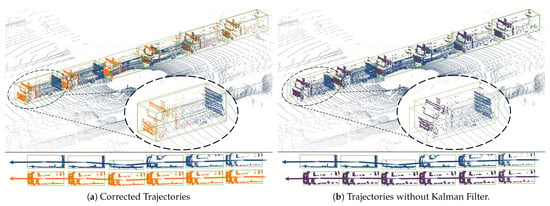

To further substantiate the efficacy of our scene flow estimator, particularly the improved localization accuracy provided by the Kalman filter, we include a set of visualization results. Figure 6 shows the scene flow estimation over extended sequences, with the trajectories and point cloud data before and after Kalman filter correction. Figure 6a,b illustrate the marked contrast in trajectory prediction and point cloud localization with and without the Kalman filter. In Figure 6a, the corrected trajectories exhibit high coherence and precision, affirming the filter’s role in enhancing localization accuracy, especially in complex urban and highway scenarios. Conversely, Figure 6b highlights the discrepancies and increased localization errors when the Kalman filter is not employed. The bottom sections of both figures provide a side-by-side trajectory comparison, with the corrected paths making a compelling case for the Kalman filter’s impact. These visual comparisons not only support the quantitative results but also provide qualitative, intuitive confirmation of our model’s improved performance in dynamic settings.

Figure 6.

Comparison of scene flow trajectories: (a) demonstrates trajectories with Kalman filter correction, with the orange trajectories representing the estimated scene flow after Kalman filter refinement, closely mirroring the blue ground truth trajectories and exhibiting improved localization and flow coherence; (b) shows trajectories without Kalman filter correction, where the purple trajectories indicate uncorrected scene flow estimates, leading to visible deviations from the blue ground truth and resulting in increased localization errors. The trajectory comparison at the bottom of the images accentuates the accuracy enhancement due to the Kalman filter, highlighting the precision of our method in tracking and predicting the motion of objects.
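The kind of correction compared in Figure 6 can be pictured with a generic constant-velocity Kalman filter applied to a sequence of object-center positions. The sketch below is illustrative only: the state layout, the noise parameters `process_var` and `meas_var`, the time step `dt = 0.1` s (a 10 Hz LiDAR is assumed), and the function name are all assumptions and do not reproduce the exact filter design used in our method.

```python
import numpy as np

def kalman_smooth_positions(measurements, dt=0.1, process_var=1.0, meas_var=0.25):
    """Filter noisy (T, 3) object-center positions with a constant-velocity model.

    State is [x, y, z, vx, vy, vz]; all noise settings are illustrative.
    """
    z = np.asarray(measurements, dtype=float)
    F = np.eye(6)
    F[:3, 3:] = dt * np.eye(3)                     # position += velocity * dt
    H = np.hstack([np.eye(3), np.zeros((3, 3))])   # only position is observed
    Q = process_var * np.eye(6)                    # simplistic process noise
    R = meas_var * np.eye(3)                       # measurement noise
    x = np.concatenate([z[0], np.zeros(3)])        # start at first measurement
    P = np.eye(6)
    filtered = [z[0].copy()]
    for zk in z[1:]:
        # Predict the next state from the motion model.
        x = F @ x
        P = F @ P @ F.T + Q
        # Update the prediction with the new position measurement.
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (zk - H @ x)
        P = (np.eye(6) - K @ H) @ P
        filtered.append(x[:3].copy())
    return np.array(filtered)
```

In such a setup, each position obtained by accumulating scene flow would act as the measurement, and the filtered position would replace it before the next frame is processed, so that a single poor estimate is damped instead of being carried forward.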

4.3. Densification Results and Analysis

The densification experiments are particularly focused on mobile entities like cars, trucks, and cyclists because these entities typically exhibit significant movement and variation in point clouds, presenting unique challenges in terms of densification and accuracy. To disrupt the structure of the raw point cloud, we employ random cropping. Subsequently, we evaluate the performance of our proposed method and the ICP algorithm on these data by conducting a comparative analysis.

In our implementation of the ICP algorithm, the objective was to align the point clouds from consecutive frames. This involved iteratively adjusting the transformation matrix to minimize the distance between corresponding points in the point clouds. The algorithm accounted for rotations and translations to best fit the point clouds from frame to frame. This iterative process continued until the changes in the transformation matrix were below our predefined threshold of 0.005, indicating an adequate alignment had been achieved.
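The loop described above can be sketched as a basic point-to-point ICP. This is a minimal illustration under stated assumptions, not our exact implementation: correspondences are found by brute-force nearest-neighbor search, the rigid transform is solved in closed form with an SVD, and "change in the transformation matrix" is interpreted as the Frobenius norm of the difference between the per-iteration update and the identity. The function names and `max_iter` are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping paired src onto dst."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)            # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that R is a proper rotation.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, threshold=0.005, max_iter=50):
    """Align src (N, 3) to dst (M, 3); returns the 4x4 transform and aligned points."""
    T = np.eye(4)
    cur = np.asarray(src, dtype=float).copy()
    dst = np.asarray(dst, dtype=float)
    for _ in range(max_iter):
        # Brute-force nearest neighbor in dst for every current source point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(cur, matched)
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        cur = cur @ R.T + t                        # apply the incremental update
        T = step @ T                               # accumulate the total transform
        # Stop once the incremental update is close to the identity.
        if np.linalg.norm(step - np.eye(4)) < threshold:
            break
    return T, cur
```

The brute-force search uses O(NM) memory and is only practical for small object-level clouds; a k-d tree would normally replace it for full scans.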

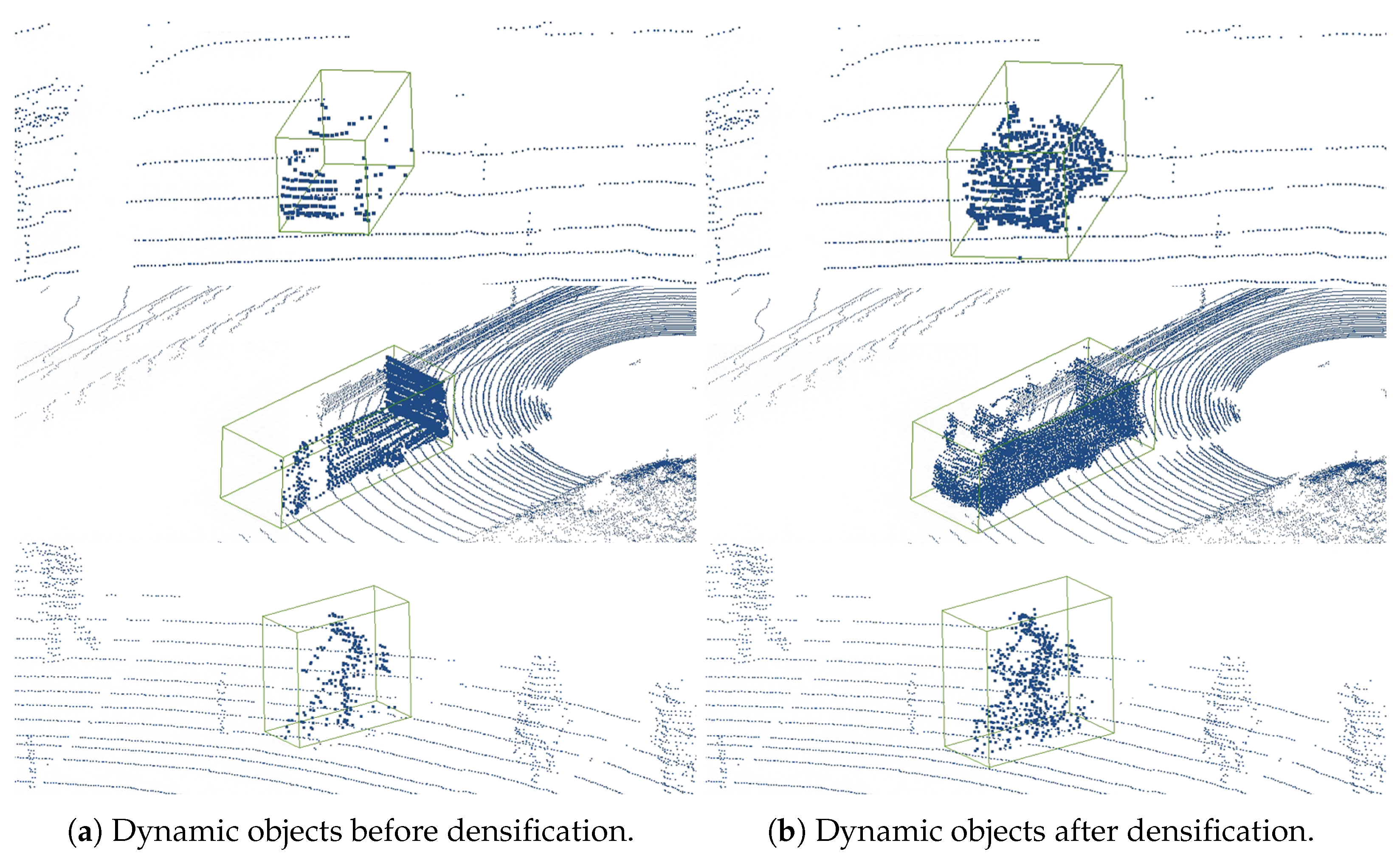

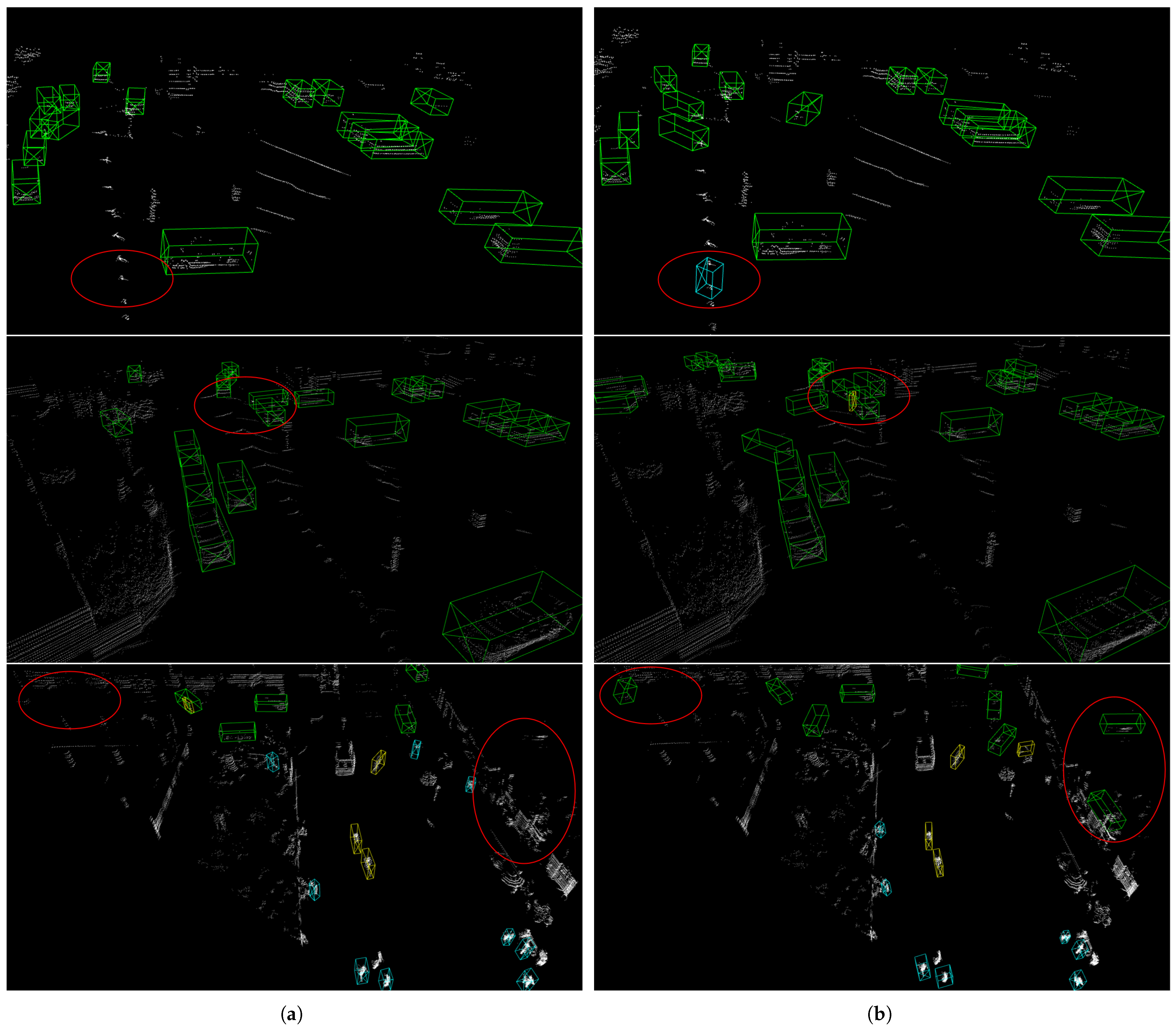

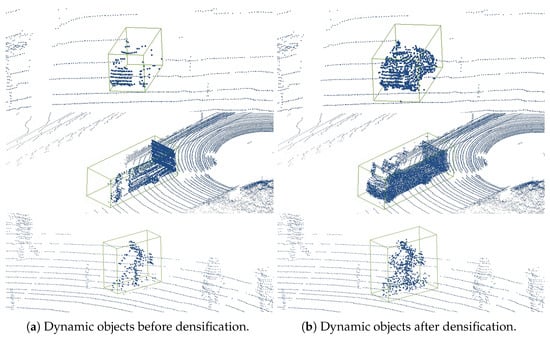

Figure 7 illustrates the proposed point cloud densification method. Figure 7a shows the original sparse point cloud with missing data sections. Figure 7b demonstrates how the proposed algorithm adds new points to these sections. These new points are generated based on the estimated motion and shape information derived from adjacent frames and the algorithm’s predictive modeling. The points are not imported from other point clouds but are instead synthesized by the algorithm to fill in gaps and enhance the overall density and completeness of the point cloud.

Figure 7.

Densification results: (a) demonstrates dynamic objects before densification; (b) demonstrates dynamic objects after densification. The proposed method effectively provides more semantic and structural information than the raw point cloud.

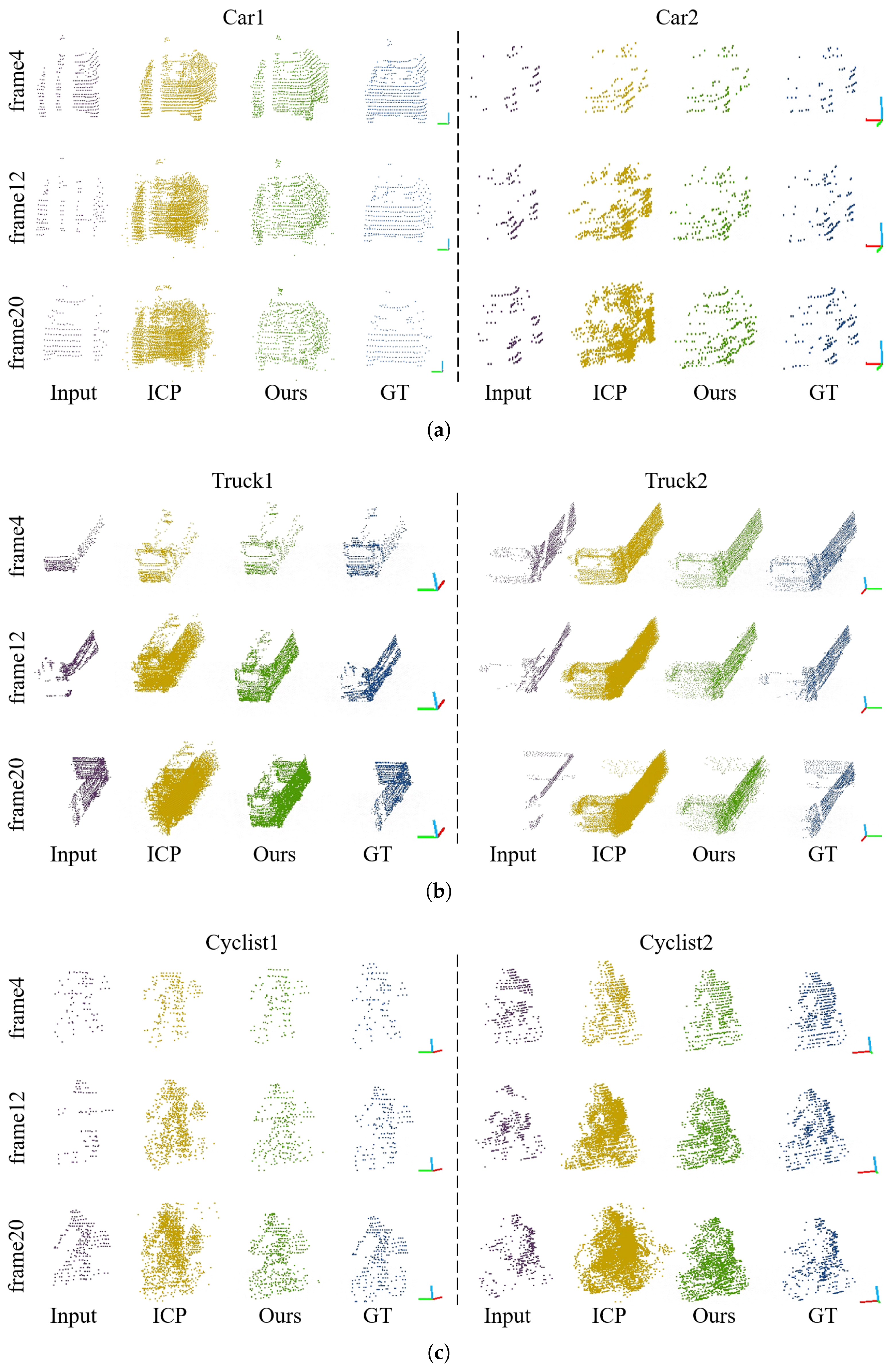

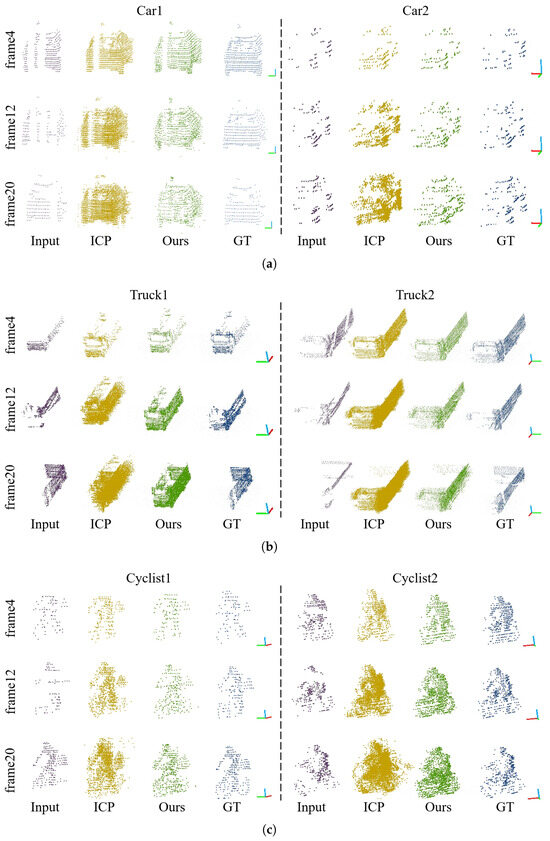

The proposed method and the ICP algorithm are utilized to analyze the dynamic targets that have been segmented from the KITTI tracking dataset. The qualitative comparison results are illustrated in Figure 8. Based on the analysis of the experimental results, it is apparent that our method successfully maintains the shape of the densified point cloud while minimizing the occurrence of noise points.

Figure 8.

Qualitative comparison of densification results with ICP algorithm: (a) cars; (b) trucks; and (c) cyclists. Densified dynamic objects using our method are depicted in green, and results from ICP algorithm are depicted in yellow. Each object is densified using 4, 12, and 20 frames of the point cloud, respectively.

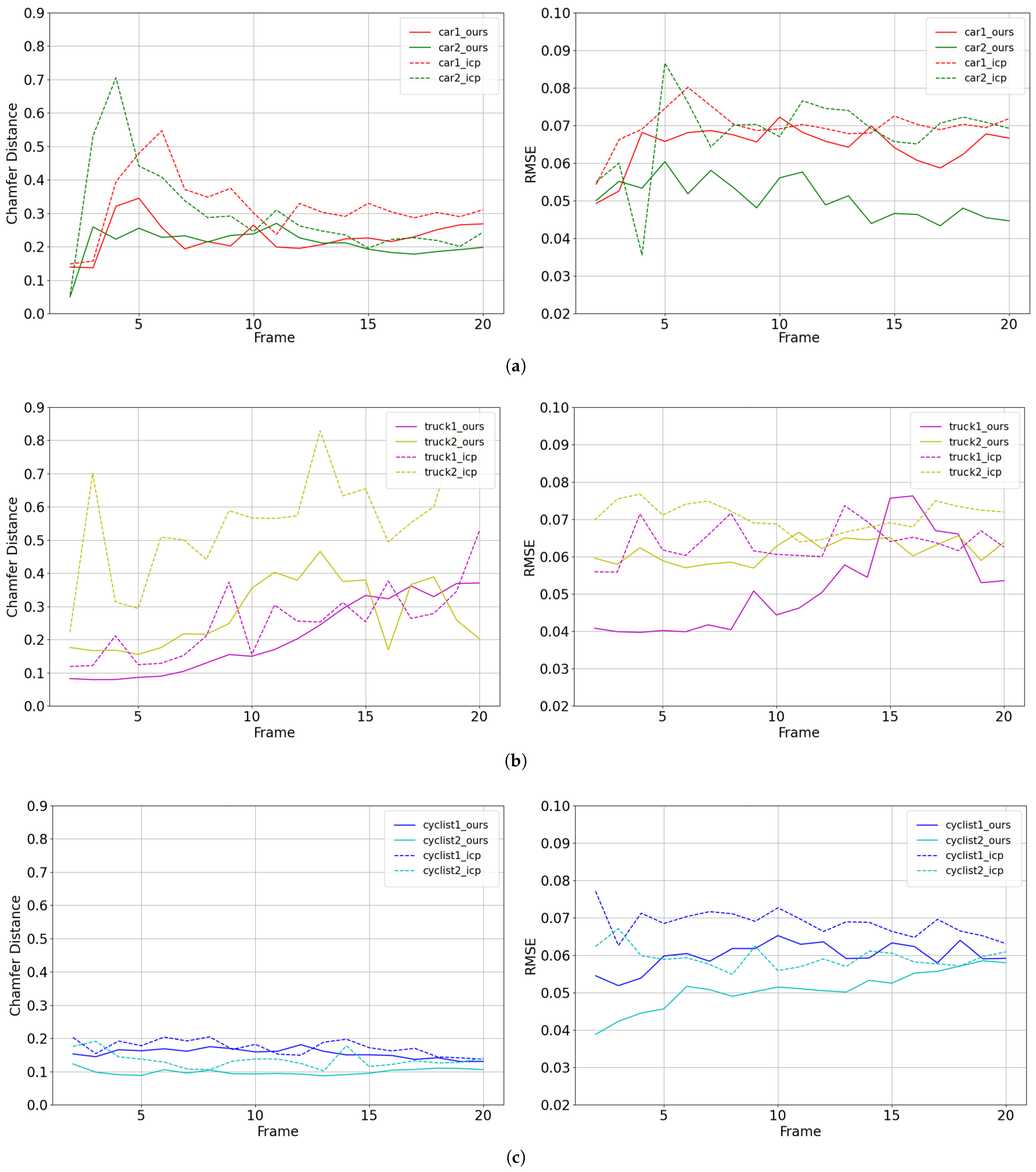

To evaluate the effectiveness of the proposed method, we use two evaluation metrics: Chamfer Distance (CD) [48] and Root Mean Square Error (RMSE). CD is defined by Equation (8). RMSE takes its standard form, the square root of the mean squared deviation between predicted and true values.

Because large errors are squared before averaging, RMSE is more sensitive to outliers in the data than a mean of absolute deviations. Based on these two metrics, a comparative analysis of the performance of the method across different object classes is presented in Table 3.
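As a rough illustration of the two metrics, the sketch below computes one common symmetric form of the Chamfer Distance and a nearest-neighbor RMSE between a densified cloud and a reference cloud. Both forms are assumptions: Chamfer Distance has several variants (squared or unsquared, summed or averaged), and pairing each predicted point with its nearest reference point is only one way to obtain the deviations entering the RMSE. The exact definitions used in this paper are those given by its equations, and the function names here are hypothetical.

```python
import numpy as np

def _nn_dists(a, b):
    """Distance from each point in a (N, 3) to its nearest neighbor in b (M, 3)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)
    return np.sqrt(d2.min(axis=1))

def chamfer_distance(pred, gt):
    """Symmetric Chamfer Distance: mean nearest-neighbor distance in both directions."""
    pred, gt = np.asarray(pred, dtype=float), np.asarray(gt, dtype=float)
    return float(_nn_dists(pred, gt).mean() + _nn_dists(gt, pred).mean())

def rmse(pred, gt):
    """Root mean square of the predicted-to-reference nearest-neighbor distances."""
    pred, gt = np.asarray(pred, dtype=float), np.asarray(gt, dtype=float)
    return float(np.sqrt(np.mean(_nn_dists(pred, gt) ** 2)))
```

With these forms, a single point placed far from the reference surface raises the RMSE more than it raises the Chamfer Distance, which matches the outlier sensitivity noted above.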

Table 3.

Quantitative comparison of point cloud densification performance.

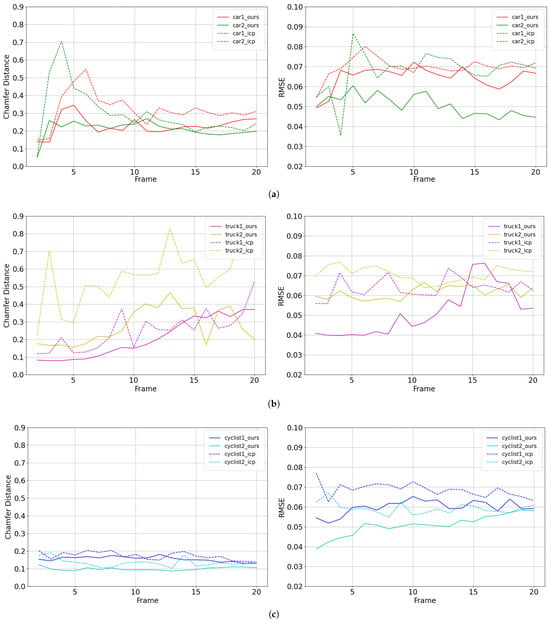

The Chamfer Distance (CD) measures the consistency between the densification outcome and the ground truth. The Root Mean Square Error (RMSE) assesses the accuracy of the densification result relative to the actual values of the inner points. Table 3 shows that the proposed method achieves lower (better) values than ICP for all three classes in both metrics. Figure 9 compares the densification results obtained with one to twenty point cloud frames. In most cases, our method achieves better results than the traditional ICP method, improving the density and completeness of the point cloud more effectively.

Figure 9.

Chart comparison of densification details in Chamfer Distance and Root Mean Square Error: (a) cars; (b) trucks; and (c) cyclists. In most cases, our approach is superior to the ICP algorithm.

In our ablation studies, we specifically assessed the impact of key components in our proposed method: scene flow, Kalman filter, and point cloud refinement. The analysis of Chamfer Distance results, presented in Table 4, highlights the importance of these components in ensuring spatial accuracy in point cloud densification. Notably, the Kalman filter’s contribution was more pronounced than that of the refinement module; this was especially evident in the results for truck1 and truck2. While the combination of scene flow, Kalman filter, and point cloud refinement provided the most accurate outcomes with the lowest Chamfer Distance across all categories, the Kalman filter alone significantly improved spatial accuracy, underscoring its critical role in our method. The increased Chamfer Distance in configurations without the Kalman filter reaffirms its substantial impact on achieving optimal densification.

Table 4.

Ablation Study Results—Chamfer Distance.

In the analysis of Root Mean Square Error (RMSE), detailed in Table 5, we found that the contributions of the Kalman filter and point cloud refinement to the overall precision of densification were similar. The integration of scene flow, Kalman filter, and refinement resulted in the lowest RMSE values, denoting the highest precision. The inclusion of both the Kalman filter and refinement was essential for enhancing localization accuracy and maintaining temporal alignment accuracy in dynamic scenes. This was particularly important for moving objects such as cars and cyclists. The similar performance improvements observed in configurations with either the Kalman filter or refinement alone highlight the balanced contribution of these components to the accuracy of the densification process.

Table 5.

Ablation Study Results—RMSE.

4.4. 3D Object Detection Results and Analysis

To evaluate the performance impact of densification on LiDAR-based object detection, we carried out a series of experiments using the KITTI tracking dataset. This dataset was partitioned into a training set with 4001 point cloud frames and a validation set with 3900 frames. The division facilitates a robust evaluation framework, allowing for an in-depth analysis of the detector’s performance under varied conditions.

Densification was applied to the raw KITTI tracking dataset by aggregating data from one and three consecutive point cloud frames. The rationale behind this choice is twofold. Firstly, the original dataset annotations are based on single-frame data, where ground truth labels are provided for each frame in isolation. Introducing more frames for densification would require a complex re-annotation process to include the additional temporal context, a task that would be both labor-intensive and outside the scope of this study. Secondly, the choice of three frames specifically serves to provide a deeper temporal insight while maintaining computational efficiency. It allows us to capture more dynamic changes in the scene than single-frame densification while avoiding the exponential increase in computational load and potential data misalignment that could arise from processing many frames. Our approach, therefore, carefully balances the depth of temporal context with practical constraints such as the availability of labeled data and computational resources.

In our experiments, both one-frame and three-frame densified datasets were used during the training and testing phases to ensure consistency in model evaluation. Training followed the protocol established by the OpenPCDet [56] framework, with 200 epochs allotted for detector convergence.

To provide a comprehensive understanding of the detectors used in our study, we briefly describe each:

- PointPillars [40] is a novel 3D object detection framework for point cloud data. It converts point clouds into a pseudo-image of pillars, enabling efficient detection through 2D convolutional neural networks.

- SECOND [57] employs sparse convolutional networks to process spatially sparse but information-rich regions in point clouds, thereby enhancing both speed and accuracy in 3D object detection.

- PointRCNN [58] is a two-stage 3D object detector that operates directly on raw point clouds. It generates 3D bounding box proposals and refines them for precise object localization and classification.

- PV-RCNN [59] integrates voxel-based and point-based neural networks. This hybrid approach extracts rich contextual features from point clouds, leading to significant improvements in detection accuracy.

- Part-A² [60] focuses on detailed part-level features for robust object recognition in point cloud data. It enhances detection precision by aggregating these fine-grained features effectively.

Our results, as tabulated in Table 6, demonstrate that densification contributes to noticeable performance improvements across these detectors. The PV-RCNN detector, in particular, showcased an enhancement of up to 7.95% in detecting cyclists in challenging scenarios.

Table 6.

Quantitative comparison of five different LiDAR detectors’ performance on raw and densified datasets. Our method achieves superior detection performance in 38 out of 45 categories.

However, the performance gains observed with the three-frame densified dataset were marginal compared to those with the single-frame dataset. This outcome suggests that while additional frames do provide more context, there is a threshold beyond which the benefits plateau, likely due to the increased complexity without a corresponding increase in labeled information. In essence, the modest performance gains from additional frames are potentially offset by the introduction of unlabeled objects, leading to a trade-off between temporal context and the quality of training data.

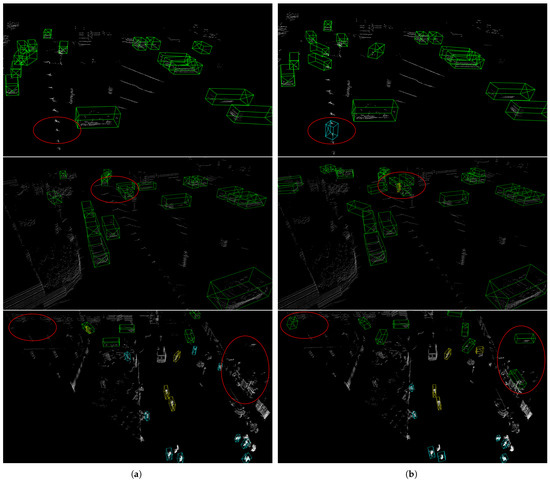

Qualitative comparisons in Figure 10 illustrate the advantages of our densification method. The figure contrasts the PV-RCNN detector trained on the three-frame densified dataset with the same detector trained on the raw dataset. Our method enriches the dataset with additional structural and semantic information, leading to fewer false positives across various environmental conditions, as highlighted in the image sections. This underscores our densification approach’s ability to mitigate false detections for vehicles, pedestrians, and cyclists.

Figure 10.

Qualitative comparison of the 3D object detection results: (a) the left column shows the results from the PV-RCNN detector trained on the three-frame densified dataset; (b) the right column shows the results from the PV-RCNN detector trained on the raw dataset. The boxes in the diagram are green for car, light blue for pedestrian, and yellow for cyclist. Our proposed method effectively reduces false positive detections in general scenes, as illustrated by the red circles highlighting the detection differences between datasets.

4.5. Limitations and Future Work

Following the detailed presentation of our experimental results and their comparative analysis, it is imperative to acknowledge the limitations of our approach and propose avenues for future advancements.

Limitations:

- Kalman Filter Integration: Our method uses a Kalman filter to correct localization errors in dynamic targets within point cloud scenes, especially in long sequence scene flows. However, this module’s integration with the deep learning-based scene flow estimator is not as tight as it could be, leading to additional computational overhead.

- Scene Flow Estimator Constraints: The constraints of our scene flow estimator require further exploration. The model currently faces significant computational challenges when estimating point cloud scene flows in large scenes, indicating a need for further optimization.

- Validation on 3D Object Detection Models: There is a need for more extensive validation across various 3D object detection models to ensure the method’s adaptability to different applications.

Future Work:

- Integrating Kalman Filter into Scene Flow Estimation: One avenue for improvement involves embedding the Kalman filter module directly into the scene flow estimation model. This integration, along with developing appropriate constraints, could be advantageous for long sequence point cloud scene flow estimation.

- Lightweight Data Representation: Adopting more lightweight data representation mechanisms could significantly reduce the overall latency of the algorithm.

- Experiments with Additional 3D Object Detection Models: Expanding our experiments to include a wider range of 3D object detection models is crucial. Given the issues of multi-frame label mismatch, implementing an appropriate label modification mechanism will be essential to maintain the accuracy of model training.

5. Conclusions

This paper proposes a Kalman-based scene flow estimation method for point cloud densification and 3D object detection in dynamic scenes. Our method addresses the problem of localization errors in long-sequence scene flow estimation and improves the accuracy and precision of shape completion. Introducing the Kalman filter to correct the position of the dynamic target improves the localization accuracy of the scene flow estimation results. The densification results show that, compared with the ICP method, our method is more suitable for dynamic targets and achieves higher accuracy and precision. Extended experiments on the KITTI 3D tracking dataset demonstrate that our method improves the LiDAR-only detectors’ performance and achieves superior results to the baselines. In future work, we plan to optimize the scene flow estimation speed to meet real-time requirements, and to fuse image information with the point cloud to further improve detection. Our main conclusions in this study can be summarized as follows:

- With a novel scene flow estimator using a two-branch pyramid network as the implicit regularizer, we are capable of utilizing motion information from multi-frame point clouds to densify point clouds, which achieves excellent performance in both accuracy and precision.

- The issue of localization error in long-sequence scene flow estimation can be effectively addressed by utilizing a Kalman filter to precisely adjust the position of dynamic objects. This mechanism makes the densification result more robust.

- Compared with the ICP-based point cloud densification method, our method builds on scene flow guided by the implicit regularizer; its ability to fine-tune point positions yields more accurate results than the traditional method.

- Extensive experiments on the KITTI 3D tracking dataset demonstrate that our method effectively improves the LiDAR-only detectors’ performance by adding more semantic and structural details.

Author Contributions

Conceptualization, J.D., J.Z. and C.W.; methodology, J.D., J.Z. and C.W.; software, J.D. and L.Y.; validation, J.D. and C.W.; formal analysis, J.D. and C.W.; investigation, J.D. and C.W.; resources, J.D. and C.W.; data curation, J.D.; writing—original draft preparation, J.D.; writing—review and editing, J.D. and C.W.; visualization, J.D.; supervision, C.W.; project administration, C.W.; and funding acquisition, C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (No. 62275186 and 62201372).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available in a public repository. The data that support the findings of this study are available in [46] at https://www.cvlibs.net/datasets/kitti/index.php, accessed on 2 October 2023.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A survey on 3d object detection methods for autonomous driving applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

2. Xie, Y.; Tian, J.; Zhu, X.X. Linking points with labels in 3D: A review of point cloud semantic segmentation. IEEE Geosci. Remote Sens. Mag. 2020, 8, 38–59.

3. Hu, H.N.; Yang, Y.H.; Fischer, T.; Darrell, T.; Yu, F.; Sun, M. Monocular quasi-dense 3d object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1992–2008.

4. Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

5. Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

6. Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. In Proceedings of the Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009; p. 435.

7. Kim, K.; Kim, C.; Jang, C.; Sunwoo, M.; Jo, K. Deep learning-based dynamic object classification using LiDAR point cloud augmented by layer-based accumulation for intelligent vehicles. Expert Syst. Appl. 2021, 167, 113861.

8. Fontana, E.; Lodi Rizzini, D. Accurate Global Point Cloud Registration Using GPU-Based Parallel Angular Radon Spectrum. Sensors 2023, 23, 8628.

9. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Proceedings of the Sensor fusion IV: Control Paradigms and Data Structures, Boston, MA, USA, 12–15 November 1992; Volume 1611, pp. 586–606.

10. Wang, W.; Saputra, M.R.U.; Zhao, P.; Gusmao, P.; Yang, B.; Chen, C.; Markham, A.; Trigoni, N. Deeppco: End-to-end point cloud odometry through deep parallel neural network. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 3248–3254.

11. Li, Z.; Wang, N. Dmlo: Deep matching lidar odometry. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October–24 January 2021; pp. 6010–6017.

12. Wang, G.; Wu, X.; Liu, Z.; Wang, H. Hierarchical attention learning of scene flow in 3d point clouds. IEEE Trans. Image Process. 2021, 30, 5168–5181.

13. Chen, J.; Wang, H.; Yang, S. Tightly Coupled LiDAR-Inertial Odometry and Mapping for Underground Environments. Sensors 2023, 23, 6834.

14. Liu, J.; Xu, Y.; Zhou, L.; Sun, L. PCRMLP: A Two-Stage Network for Point Cloud Registration in Urban Scenes. Sensors 2023, 23, 5758.

15. Chen, S.; Xiao, G.; Shi, Z.; Guo, J.; Ma, J. SSL-Net: Sparse semantic learning for identifying reliable correspondences. Pattern Recognit. 2024, 146, 110039.

16. Liu, X.; Xiao, G.; Chen, R.; Ma, J. Pgfnet: Preference-guided filtering network for two-view correspondence learning. IEEE Trans. Image Process. 2023, 32, 1367–1378.

17. Huguet, F.; Devernay, F. A variational method for scene flow estimation from stereo sequences. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision (ICCV), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–7.

18. Quiroga, J.; Devernay, F.; Crowley, J. Local/global scene flow estimation. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 3850–3854.

19. Hadfield, S.; Bowden, R. Kinecting the dots: Particle based scene flow from depth sensors. In Proceedings of the 2011 International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2290–2295.

20. Quiroga, J.; Devernay, F.; Crowley, J. Scene flow by tracking in intensity and depth data. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 50–57.

21. Liu, X.; Qi, C.R.; Guibas, L.J. Flownet3d: Learning scene flow in 3d point clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 529–537.

22. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114.

23. Li, X.; Kaesemodel Pontes, J.; Lucey, S. Neural scene flow prior. Adv. Neural Inf. Process. Syst. 2021, 34, 7838–7851.

24. Li, J.; Huang, X.; Zhan, J. High-Precision Motion Detection and Tracking Based on Point Cloud Registration and Radius Search. IEEE Trans. Intell. Transp. Syst. 2023, 24, 6322–6335.

25. Yang, Y.; Jiang, K.; Yang, D.; Jiang, Y.; Lu, X. Temporal point cloud fusion with scene flow for robust 3D object tracking. IEEE Signal Process. Lett. 2022, 29, 1579–1583.

26. Biber, P.; Straßer, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 3, pp. 2743–2748.

27. Li, Y.; Harada, T. Lepard: Learning partial point cloud matching in rigid and deformable scenes. In Proceedings of the 2022 IEEE/CVF conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5554–5564.

28. Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. Kiss-icp: In defense of point-to-point icp–simple, accurate, and robust registration if done the right way. IEEE Robot. Autom. Lett. 2023, 8, 1029–1036.

29. Lee, S.; Kim, C.; Cho, S.; Myoungho, S.; Jo, K. Robust 3-Dimension Point Cloud Mapping in Dynamic Environment Using Point-Wise Static Probability-Based NDT Scan-Matching. IEEE Access 2020, 8, 175563–175575.

30. Yan, D.; Wang, W.; Li, S.; Sun, P.; Duan, W.; Liu, S. A speedy point cloud registration method based on region feature extraction in intelligent driving scene. Sensors 2023, 23, 4505.

31. Shen, Y.; Liu, Y.; Tian, Y.; Liu, Z.; Wang, F. A New Parallel Intelligence Based Light Field Dataset for Depth Refinement and Scene Flow Estimation. Sensors 2022, 22, 9483.

32. Shi, Z.; Xiao, G.; Zheng, L.; Ma, J.; Chen, R. JRA-Net: Joint representation attention network for correspondence learning. Pattern Recognit. 2023, 135, 109180.

33. Zheng, L.; Xiao, G.; Shi, Z.; Wang, S.; Ma, J. MSA-Net: Establishing reliable correspondences by multiscale attention network. IEEE Trans. Image Process. 2022, 31, 4598–4608.

34. Huang, S.; Gojcic, Z.; Huang, J.; Wieser, A.; Schindler, K. Dynamic 3d scene analysis by point cloud accumulation. In Proceedings of the 2022 European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 674–690.

35. Wang, C.; Li, X.; Pontes, J.K.; Lucey, S. Neural prior for trajectory estimation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 6532–6542.

36. Wu, C.; Lin, Y.; Guo, Y.; Wen, C.; Shi, Y.; Wang, C. Vehicle Completion in Traffic Scene Using 3D LiDAR Point Cloud Data. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 7495–7498.

37. Wen, X.; Xiang, P.; Han, Z.; Cao, Y.P.; Wan, P.; Zheng, W.; Liu, Y.S. PMP-Net++: Point cloud completion by transformer-enhanced multi-step point moving paths. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 852–867.

38. Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. Pcn: Point completion network. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 728–737.

39. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

40. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

41. Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

42. Zheng, W.; Tang, W.; Jiang, L.; Fu, C.W. SE-SSD: Self-ensembling single-stage object detector from point cloud. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14494–14503.

43. Wu, H.; Wen, C.; Li, W.; Li, X.; Yang, R.; Wang, C. Transformation-equivariant 3D object detection for autonomous driving. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 2795–2802.

44. Zhang, Y.; Liu, K.; Bao, H.; Zheng, Y.; Yang, Y. PMPF: Point-Cloud Multiple-Pixel Fusion-Based 3D Object Detection for Autonomous Driving. Remote Sens. 2023, 15, 1580.

45. Duffhauss, F.; Baur, S.A. PillarFlowNet: A real-time deep multitask network for LiDAR-based 3D object detection and scene flow estimation. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October–24 January 2021; pp. 10734–10741.

46. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

47. Xu, J.; Li, Z.; Du, B.; Zhang, M.; Liu, J. Reluplex made more practical: Leaky ReLU. In Proceedings of the 2020 IEEE Symposium on Computers and communications, Rennes, France, 7–10 July 2020; pp. 1–7.

48. Fan, H.; Su, H.; Guibas, L.J. A point set generation network for 3D object reconstruction from a single image. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 605–613.

49. Bishop, G.; Welch, G. An introduction to the kalman filter. Proc Siggraph Course 2001, 8, 41.

50. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.

51. Wang, Z.; Li, S.; Howard-Jenkins, H.; Prisacariu, V.; Chen, M. Flownet3d++: Geometric losses for deep scene flow estimation. In Proceedings of the 2020 IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass, CO, USA, 1–5 March 2020; pp. 91–98.

52. Mittal, H.; Okorn, B.; Held, D. Just go with the flow: Self-supervised scene flow estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11177–11185.

53. Pontes, J.K.; Hays, J.; Lucey, S. Scene flow from point clouds with or without learning. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020; pp. 261–270.

54. Lang, I.; Aiger, D.; Cole, F.; Avidan, S.; Rubinstein, M. Scoop: Self-supervised correspondence and optimization-based scene flow. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5281–5290.

55. Ahuja, R.; Baker, C.; Schwarting, W. OptFlow: Fast Optimization-based Scene Flow Estimation without Supervision. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–5 January 2024; pp. 3161–3170.

56. Team, O.D. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. 2020. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 2 October 2023).

57. Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

58. Shi, S.; Wang, X.; Li, H. Pointrcnn: 3D Object proposal generation and detection from point cloud. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

59. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the 2020 IEEE/CVF conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

60. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3D object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).