Detection of Rehabilitation Training Effect of Upper Limb Movement Disorder Based on MPL-CNN

Abstract

1. Introduction

2. Related Works

3. Methodology

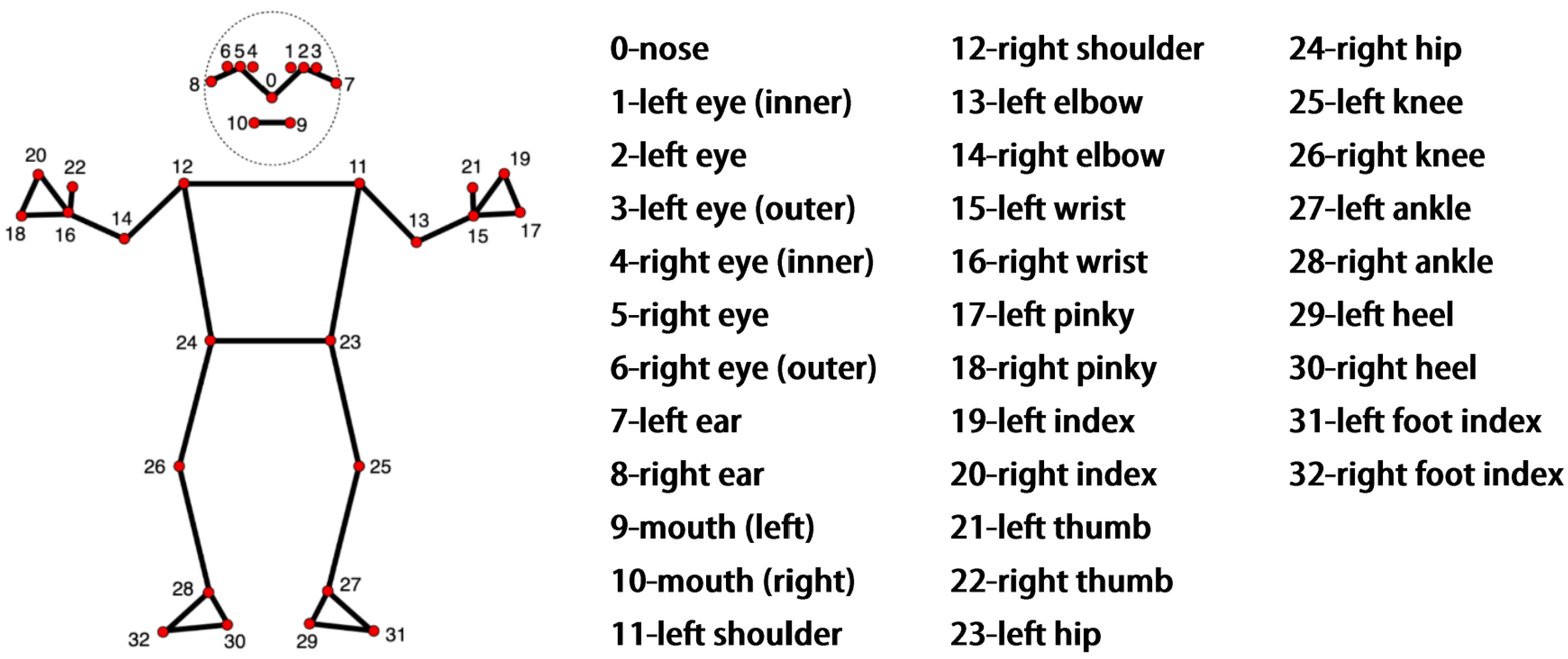

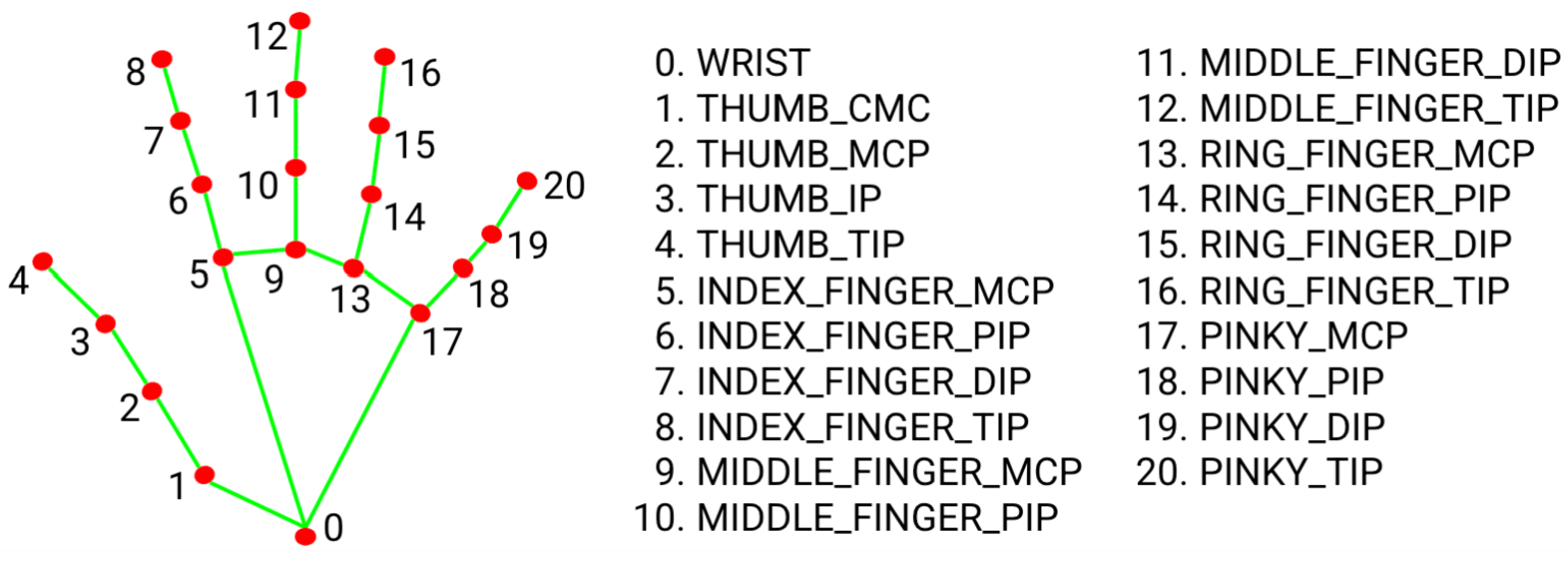

3.1. Mediapipe Joint Point Extraction

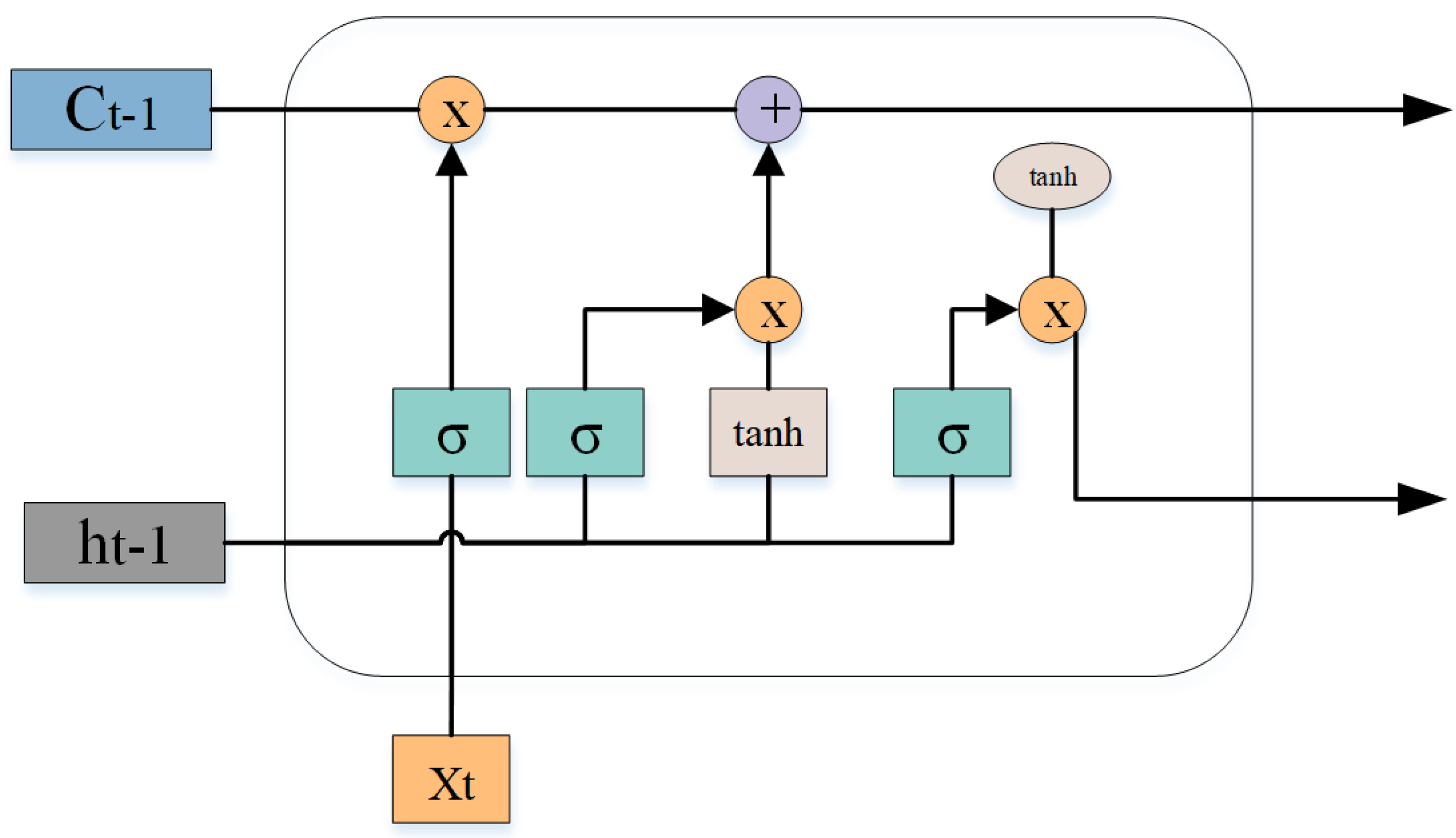
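MediaPipe Pose (BlazePose) returns 33 body landmarks per frame, each with x, y and z coordinates. The sketch below assumes those landmarks have already been collected into one list of (x, y, z) tuples per frame. It keeps only the arm and hand landmarks that matter for upper-limb movements. The index values follow MediaPipe's published 33-point landmark map. The chosen subset and the names `UPPER_LIMB_LANDMARKS` and `extract_upper_limb` are hypothetical, and the joint set actually used in this paper may differ.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]

# MediaPipe Pose (BlazePose) 33-landmark indices for the arms and hands.
# Keeping exactly this subset is an illustrative assumption, not the paper's stated choice.
UPPER_LIMB_LANDMARKS: Dict[str, int] = {
    "left_shoulder": 11, "right_shoulder": 12,
    "left_elbow": 13, "right_elbow": 14,
    "left_wrist": 15, "right_wrist": 16,
    "left_pinky": 17, "right_pinky": 18,
    "left_index": 19, "right_index": 20,
    "left_thumb": 21, "right_thumb": 22,
}


def extract_upper_limb(frames: Sequence[Sequence[Point]]) -> List[List[float]]:
    """Flatten the selected landmarks of each frame into one feature vector.

    `frames` has one entry per video frame; each entry is the full list of
    33 (x, y, z) landmarks. Each output vector holds 12 landmarks x 3 = 36 values,
    ordered by landmark index.
    """
    indices = sorted(UPPER_LIMB_LANDMARKS.values())
    features: List[List[float]] = []
    for landmarks in frames:
        if len(landmarks) != 33:
            raise ValueError(f"expected 33 landmarks per frame, got {len(landmarks)}")
        vector: List[float] = []
        for i in indices:
            vector.extend(landmarks[i])
        features.append(vector)
    return features
```

MediaPipe's legacy Python solution API exposes each frame's tuples as `results.pose_landmarks.landmark`. The tuples can be built by reading the `x`, `y` and `z` fields of every entry.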

3.2. LSTM-CNN Rehabilitation Action Recognition Network
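As background for the LSTM half of an LSTM-CNN, the widely used LSTM cell with a forget gate updates its state at each frame $t$ as shown below. Here $x_t$ is assumed to be the keypoint feature vector of frame $t$, $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, and the weight matrices $W_\ast$, $U_\ast$ and biases $b_\ast$ are learned. This is the textbook formulation, not a statement of this paper's exact variant. This section also does not specify how the convolutional layers are combined with it.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f)\\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i)\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o)\\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$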

4. Experiments

4.1. Fugl-Meyer Upper Limb Rehabilitation Training Standards

4.2. Dataset Introduction

4.3. Model Design

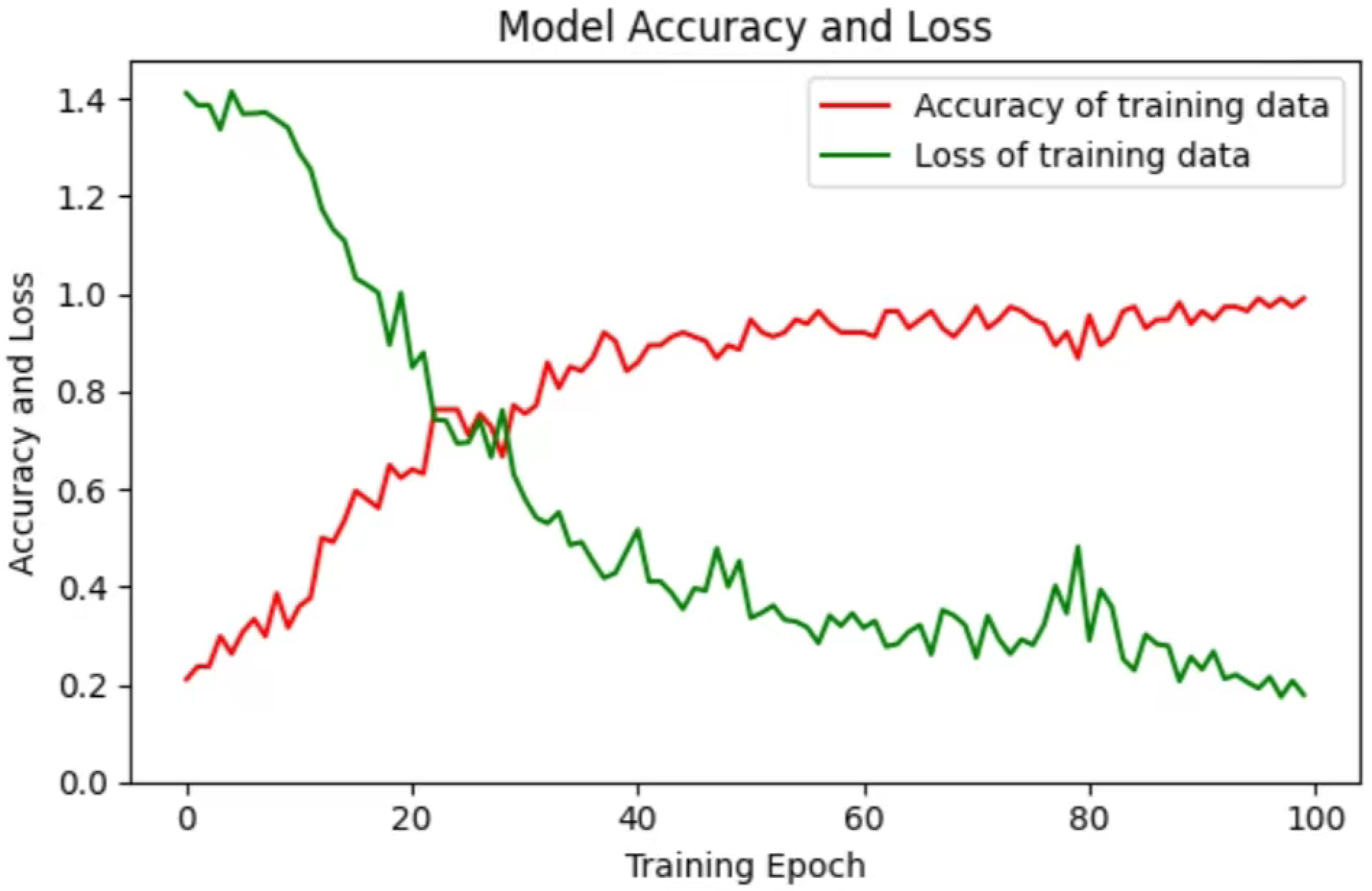

4.4. Experiment Analysis

4.5. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Types of Rehabilitation Exercises | Movement | Standard Action | Label |
|---|---|---|---|
| Flexor synergistic movement | Bring the affected upper limb up to touch the ipsilateral ear. | The shoulder joint is elevated and retracted, abducted to 90°, and externally rotated; the elbow joint is fully flexed; the forearm is fully supinated. | FSM |
| Common extensor muscle movement | Touch the contralateral knee joint with the affected hand. | Adduct and internally rotate the shoulder joint, extend the elbow joint, and pronate the forearm. | CEM |
| Accompanied by common movement | - | 1. The patient touches the waist with the back of the hand. | ACM A |
| Accompanied by common movement | - | 2. Elbow straight, shoulder joint flexed to 90°, shoulder 0°. | ACM B |
| Accompanied by common movement | - | 3. Flex the elbow to 90° and complete forearm pronation and supination. | ACM C |
| Isolation movement | - | 1. Abduct the shoulder joint to 90° with the elbow straight, and pronate the forearm. | IM A |
| Isolation movement | - | 2. Flex the shoulder joint forward and raise the arm overhead with the elbow straight. | IM B |
| Isolation movement | - | 3. With the shoulder flexed between 30° and 90°, complete forearm pronation and supination. | IM C |
| Wrist stability | - | 1. Shoulder at 0°, elbow flexed to 90°: perform 15° wrist dorsiflexion and alternate wrist flexion and extension. | WS A |
| Wrist stability | - | 2. Shoulder flexed to 30°, elbow extended, forearm pronated: perform 15° wrist dorsiflexion and alternate wrist flexion and extension. | WS B |
| Wrist stability | - | 3. Circular motion of the wrist joint. | WS C |
| Hand movement | - | 1. With the finger joints at 0°, flex and extend the fingers together to form a hook shape. | HM A |
| Hand movement | - | 2. Finger joints at 0°, thumb adducted. | HM B |
| Hand movement | - | 3. Flex the fingers to perform a grasping motion. | HM C |
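Several standard actions in the table above are specified as joint angles. Examples include an elbow flexed to 90° or a shoulder abducted to 90°. The following is a minimal sketch of measuring such an angle from three keypoints, such as MediaPipe's shoulder, elbow and wrist landmarks. The helpers `joint_angle` and `within_tolerance` and the 15° default tolerance are hypothetical. This section does not say whether the paper applies explicit angle thresholds.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at vertex b between the segments b->a and b->c."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0.0:
        raise ValueError("coincident keypoints: angle is undefined")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / norm
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def within_tolerance(angle: float, target: float, tol: float = 15.0) -> bool:
    """Hypothetical check: is the measured angle within +/- tol degrees of the target?"""
    return abs(angle - target) <= tol
```

With this definition, a fully extended elbow gives about 180°, so clinical elbow flexion corresponds to 180° minus the returned value. At 90° the two conventions coincide.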

| No. | Posture | Number of People | Number of Videos |
|---|---|---|---|
| 1 | Flexor synergistic movement (FSM) | 10 | 100 |
| 2 | Common extensor muscle movement (CEM) | 10 | 100 |
| 3 | Accompanied by common movement A (ACM A) | 10 | 100 |
| 4 | Accompanied by common movement B (ACM B) | 10 | 100 |
| 5 | Accompanied by common movement C (ACM C) | 10 | 100 |
| 6 | Isolation movement A (IM A) | 10 | 100 |
| 7 | Isolation movement B (IM B) | 10 | 100 |
| 8 | Isolation movement C (IM C) | 10 | 100 |
| 9 | Wrist stability A (WS A) | 10 | 100 |
| 10 | Wrist stability B (WS B) | 10 | 100 |
| 11 | Wrist stability C (WS C) | 10 | 100 |
| 12 | Hand movement A (HM A) | 10 | 100 |
| 13 | Hand movement B (HM B) | 10 | 100 |
| 14 | Hand movement C (HM C) | 10 | 100 |
| - | Total | - | 1400 |
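The dataset table above lists 100 videos per class. Recorded videos need not share the same number of frames, whereas a recurrent classifier is usually trained on fixed-length sequences. The source does not state how, or whether, clips are resampled. One common option, sketched here purely as an assumption, is to pick frame indices evenly across each clip. The name `uniform_sample` and the default of 30 frames are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def uniform_sample(frames: Sequence[T], num_frames: int = 30) -> List[T]:
    """Return exactly `num_frames` items spread evenly over `frames`.

    Longer clips are subsampled and shorter clips repeat frames, so every
    output sequence has the same length and can be batched.
    """
    if not frames:
        raise ValueError("cannot sample from an empty clip")
    if num_frames < 1:
        raise ValueError("num_frames must be positive")
    n = len(frames)
    if num_frames == 1:
        return [frames[0]]
    # Map output position k onto an input index between the first and last frame.
    step = (n - 1) / (num_frames - 1)
    return [frames[round(k * step)] for k in range(num_frames)]
```

The first and last frames of a clip are always kept, which preserves the start and end posture of each movement.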

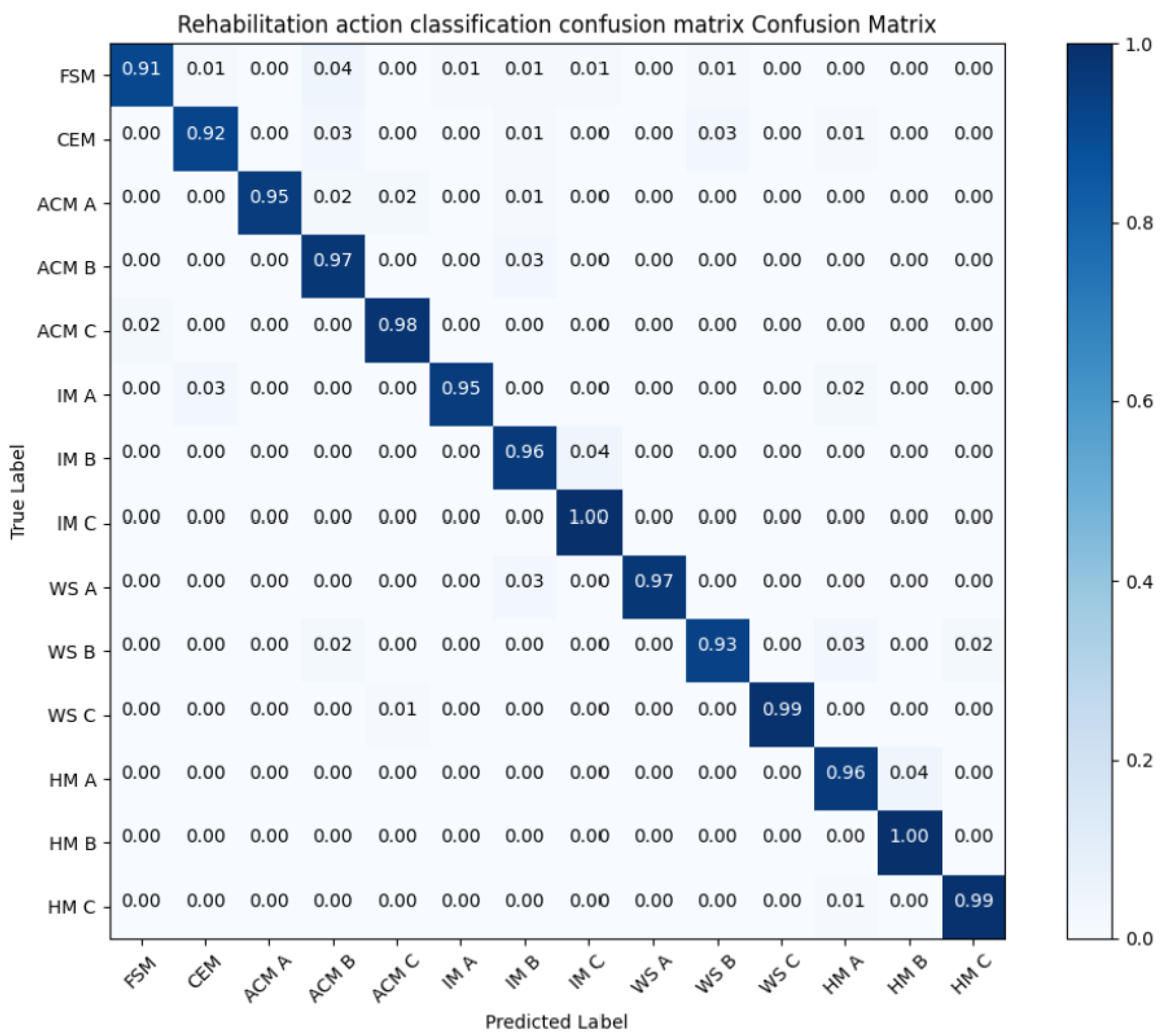

| Rehabilitation Type | Precision | Recall | F1-Score |
|---|---|---|---|
| FSM | 97.92% | 91.67% | 94.69% |
| CEM | 95.43% | 92.71% | 94.05% |
| ACM A | 100% | 94.79% | 97.32% |
| ACM B | 91.46% | 96.88% | 94.09% |
| ACM C | 97.66% | 98.96% | 98.30% |
| IM A | 99.77% | 95.83% | 97.76% |
| IM B | 94.83% | 96.88% | 95.84% |
| IM C | 95.67% | 100% | 97.78% |
| WS A | 100% | 97.92% | 98.95% |
| WS B | 98.71% | 93.70% | 96.14% |
| WS C | 100% | 99.77% | 99.88% |
| HM A | 97.92% | 96.83% | 97.37% |
| HM B | 96.43% | 100% | 98.18% |
| HM C | 100% | 99.77% | 99.88% |
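The F1-score in the table above is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The sketch below recomputes it from the reported per-class precision and recall, with values copied from the table. It also forms unweighted (macro) averages over the 14 classes. It is a consistency check, not the authors' evaluation code, and any macro averages it produces are derived here rather than reported in the source.

```python
from statistics import mean
from typing import Dict, Tuple

# Per-class (precision %, recall %, reported F1 %) copied from the table above.
REPORTED: Dict[str, Tuple[float, float, float]] = {
    "FSM": (97.92, 91.67, 94.69),
    "CEM": (95.43, 92.71, 94.05),
    "ACM A": (100.0, 94.79, 97.32),
    "ACM B": (91.46, 96.88, 94.09),
    "ACM C": (97.66, 98.96, 98.30),
    "IM A": (99.77, 95.83, 97.76),
    "IM B": (94.83, 96.88, 95.84),
    "IM C": (95.67, 100.0, 97.78),
    "WS A": (100.0, 97.92, 98.95),
    "WS B": (98.71, 93.70, 96.14),
    "WS C": (100.0, 99.77, 99.88),
    "HM A": (97.92, 96.83, 97.37),
    "HM B": (96.43, 100.0, 98.18),
    "HM C": (100.0, 99.77, 99.88),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_average(rows: Dict[str, Tuple[float, float, float]]) -> Tuple[float, float, float]:
    """Unweighted mean over classes of precision, recall and recomputed F1."""
    precisions = [p for p, _, _ in rows.values()]
    recalls = [r for _, r, _ in rows.values()]
    f1s = [f1_score(p, r) for p, r, _ in rows.values()]
    return mean(precisions), mean(recalls), mean(f1s)


if __name__ == "__main__":
    for label, (p, r, reported) in REPORTED.items():
        print(f"{label:6s} recomputed F1 = {f1_score(p, r):.2f}%  (reported {reported:.2f}%)")
    print("macro P / R / F1 = %.2f%% / %.2f%% / %.2f%%" % macro_average(REPORTED))
```

Every recomputed value agrees with the reported F1-score to within 0.01 percentage points. This is consistent with rounding of the published precision and recall.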

| CNN | LSTM | Dropout | Accuracy | Precision (P) |
|---|---|---|---|---|
| YES | NO | NO | 83.63% | 86.46% |
| YES | NO | YES | 90.62% | 88.54% |
| NO | YES | NO | 94.20% | 87.50% |
| NO | YES | YES | 97.67% | 90.62% |
| YES | YES | NO | 97.92% | 95.83% |
| YES | YES | YES | 99.22% | 97.54% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, L.; Wang, R.; Zhao, J.; Zhang, J.; Kuang, Z. Detection of Rehabilitation Training Effect of Upper Limb Movement Disorder Based on MPL-CNN. Sensors 2024, 24, 1105. https://doi.org/10.3390/s24041105