Mobile Data Gathering and Preliminary Analysis for the Functional Reach Test

Abstract

1. Introduction

2. Related Work

2.1. Smart Wearables Data in Medical Applications

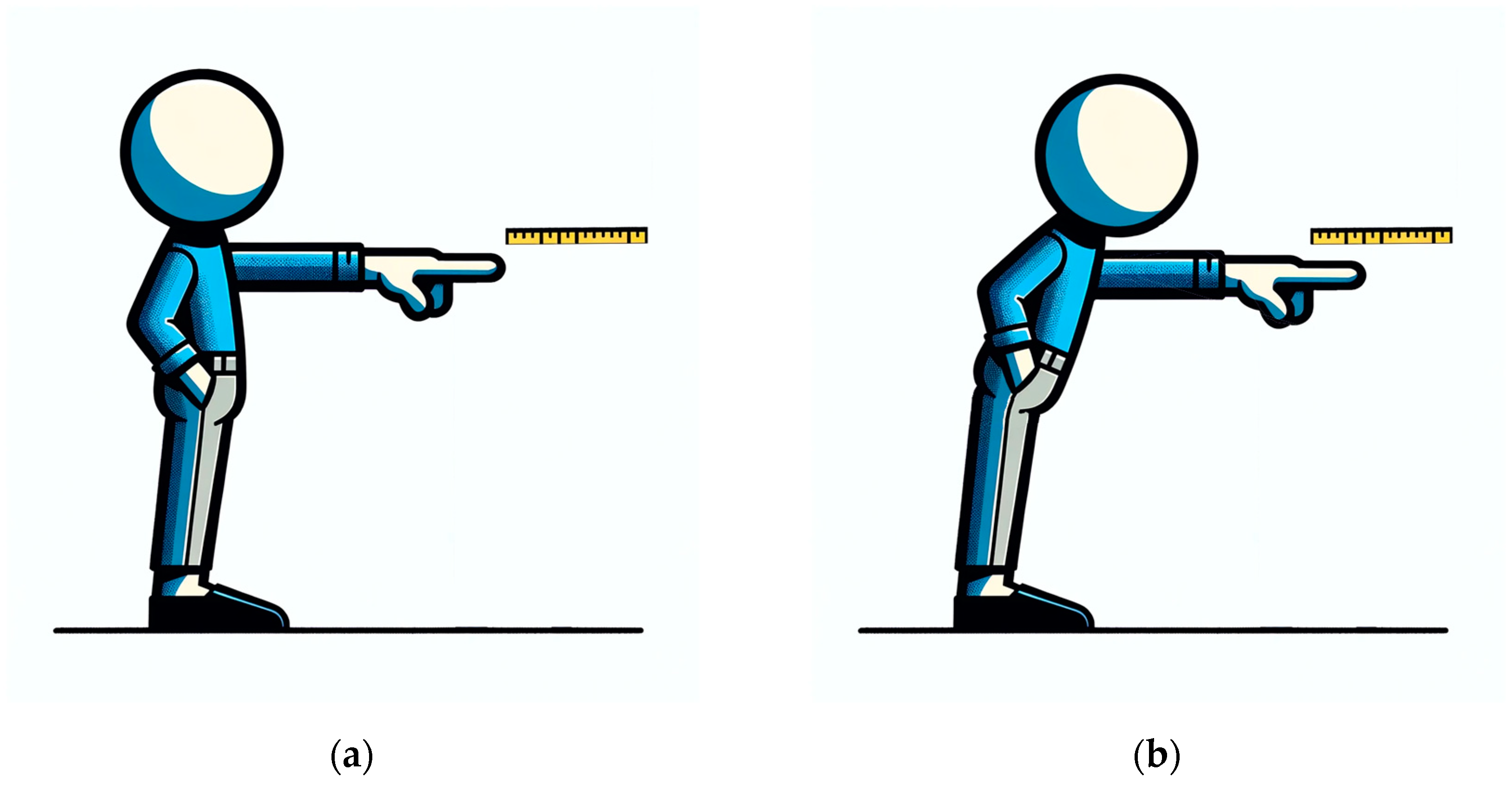

2.2. Functional Reach Test

- If the reach distance exceeds 25.40 cm, the test is considered negative, indicating a low risk of falling.

- If the reach distance is between 15.24 and 25.40 cm, the risk of falling during the next six months is twice as high as for a negative test.

- If the reach distance is less than 15.24 cm, the risk of falling during the next six months is four times as high as for a negative test.
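The three bands above translate directly into a threshold function. The sketch below assumes the reach distance has already been measured in centimetres and returns the relative six-month fall-risk multiplier for each band (1 for a negative test, then 2 and 4). The function name is hypothetical; the thresholds are the ones listed above, which correspond to 10 in and 6 in.

```python
def frt_relative_fall_risk(reach_cm: float) -> int:
    """Return the relative six-month fall-risk multiplier for an FRT reach distance.

    Hypothetical helper using the bands listed above:
      1 -> negative test, reach > 25.40 cm (10 in)
      2 -> reach within 15.24-25.40 cm (6-10 in)
      4 -> reach < 15.24 cm (6 in)
    """
    if reach_cm < 0:
        raise ValueError("reach distance cannot be negative")
    if reach_cm > 25.40:
        return 1
    if reach_cm >= 15.24:
        # Both boundary values (15.24 and 25.40 cm) fall in the middle band
        return 2
    return 4
```

For example, a reach of 18 cm falls in the middle band and yields a multiplier of 2.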

2.2.1. FRT Related to Stroke

2.2.2. FRT Related to Older Adults

2.2.3. FRT Related to Other Conditions

2.3. Orientation Estimation

2.3.1. Coordinate Frames

2.3.2. Orientation Representation

2.3.3. Attitude and Heading Reference System

2.3.4. Sensor Fusion

2.4. Position-Estimation Algorithms

3. Materials and Methods

3.1. FRT Data Collection through a Mobile App

- Firebase Authentication—an authentication platform that implements a simple and secure authentication system;

- Cloud Firestore—a NoSQL document database where the App’s data structures (users, collection centers, and subjects) are stored, allowing simple data querying;

- Cloud Storage for Firebase is a cloud storage service that allows uploading the collected data (wearable sensor data) in a text file format.

3.2. Functional Reach Test Distance Estimation

3.2.1. Orientation Estimation

3.2.2. Real-Time Orientation Estimation

3.2.3. Distance Estimation

4. Results and Discussion

4.1. Data Collection Mobile Application

4.1.1. Properties of the App

- Programming language: Java.

- Minimum SDK: Android 5.0 (Lollipop).

- Communication with other mobile devices: Bluetooth.

- Sensors to collect: accelerometer, gyroscope, and magnetometer.

- Data storage via Google LLC’s Firebase services.

- Supported languages: English and Portuguese.

4.1.2. Data Structures

4.1.3. Connection to the App

4.1.4. Navigating through the Wearables Balance App

- MainFragment—contains the app’s first screen and presents the list of collection centers in the user’s repository; the user can select a collection center to navigate to the CenterFragment.

- CentersFragment—allows users to search for a collection center; the user can select a collection center to navigate to the CenterFragment.

- CenterFragment—presents information about a collection center and the assigned subjects; the user can select a subject to navigate to the SubjectFragment.

- SubjectFragment—displays the information about the selected subject, including the list and registry of the assessments performed on the subject.

- AssessmentFragment—allows the user to perform an assessment, displaying the data of a selected axis of a selected sensor in a graph; the user can register the values obtained through the manual protocol and share the collected data through the platform’s sharing feature, for example with a cloud service such as OneDrive; the collected data are automatically uploaded to Cloud Storage for Firebase when the user exits the fragment.

- AddCenterFragment—allows the user to add a new collection center by entering its name, address, contact information, and type (home, nursing home, hospital, school, or other); a universally unique identifier (UUID) for the collection center is generated automatically; the fragment is accessed by pressing the add (“+”) button when the MainFragment or the CentersFragment is active.

- AddSubjectFragment—allows the user to add a new subject by entering the name and demographic data, namely birthdate, gender (male, female, or other), weight, and height; a UUID identifying the subject is generated automatically; the fragment is accessed by pressing the add (“+”) button when the CenterFragment is active.
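The two "add" screens above determine which fields a collection center and a subject carry, and both assign a UUID automatically. A minimal Python sketch of those two records follows. The class and field names, and the kilogram and centimetre units for weight and height, are assumptions for illustration only; the app itself is written in Java and stores these records in Cloud Firestore, whose API is deliberately not used here.

```python
import uuid
from dataclasses import dataclass, field
from datetime import date

# Allowed values, as listed for AddCenterFragment and AddSubjectFragment
CENTER_TYPES = {"home", "nursing home", "hospital", "school", "other"}
GENDERS = {"male", "female", "other"}


def _new_id() -> str:
    # Random (version 4) UUID, generated automatically when a record is created
    return str(uuid.uuid4())


@dataclass
class CollectionCenter:
    name: str
    address: str
    contact: str
    center_type: str
    center_id: str = field(default_factory=_new_id)

    def __post_init__(self) -> None:
        if self.center_type not in CENTER_TYPES:
            raise ValueError(f"unknown collection center type: {self.center_type!r}")


@dataclass
class Subject:
    name: str
    birthdate: date
    gender: str
    weight_kg: float  # unit is an assumption
    height_cm: float  # unit is an assumption
    subject_id: str = field(default_factory=_new_id)

    def __post_init__(self) -> None:
        if self.gender not in GENDERS:
            raise ValueError(f"unknown gender value: {self.gender!r}")
```

Generating the identifier on the client, as the fragments do, lets a record be referenced (for example in file names) before it has been synchronized to the cloud.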

4.1.5. Data Collection

- id_assessmentNumber.csv: stores the assessment data, namely the date of the assessment and the FRT result obtained through the manual method;

- id_assessmentNumber_acc.csv: accelerometer data along the three axes (m∙s−2);

- id_assessmentNumber_acc_r.csv: raw accelerometer data along the three axes (m∙s−2);

- id_assessmentNumber_gra.csv: a software-based estimate of the gravitational acceleration along the three axes (m∙s−2);

- id_assessmentNumber_gyr.csv: gyroscope data along the three axes (rad∙s−1);

- id_assessmentNumber_gyr_r.csv: raw gyroscope data along the three axes (rad∙s−1);

- id_assessmentNumber_lin.csv: a software-based estimate of the linear acceleration (excluding gravity) along the three axes (m∙s−2);

- id_assessmentNumber_mag.csv: magnetometer data along the three axes (μT);

- id_assessmentNumber_mag_r.csv: raw magnetometer data along the three axes (μT);

- id_assessmentNumber_rot.csv: a software-based quaternion representing the device’s orientation (rotation vector sensor).
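Every assessment therefore produces ten CSV files that share the prefix `id_assessmentNumber`. The sketch below builds the expected file names, which can be handy when checking that an upload is complete. It assumes that `id` is the identifier of the assessed record (the list does not say which entity it refers to); the helper name and the suffix table are hypothetical.

```python
from typing import Dict, List

# Suffix -> content, following the file list above ("" is the assessment summary file)
ASSESSMENT_FILES: Dict[str, str] = {
    "": "assessment date and manually measured FRT result",
    "acc": "accelerometer (m/s^2)",
    "acc_r": "raw accelerometer (m/s^2)",
    "gra": "software-estimated gravity (m/s^2)",
    "gyr": "gyroscope (rad/s)",
    "gyr_r": "raw gyroscope (rad/s)",
    "lin": "software-estimated linear acceleration (m/s^2)",
    "mag": "magnetometer (uT)",
    "mag_r": "raw magnetometer (uT)",
    "rot": "rotation vector quaternion",
}


def assessment_file_names(record_id: str, assessment_number: int) -> List[str]:
    """Build the ten CSV file names expected for one assessment (hypothetical helper)."""
    base = f"{record_id}_{assessment_number}"
    # Dicts keep insertion order, so the summary file always comes first
    return [f"{base}_{suffix}.csv" if suffix else f"{base}.csv" for suffix in ASSESSMENT_FILES]
```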

4.2. Orientation Estimation

4.3. Position Estimation

4.4. Functional Reach Test Measurement

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Figure legend: Cloud Firestore collection; Cloud Firestore document.

| Age (Years) | Men | Women |
|---|---|---|
| 20–40 | 42.49 cm | 37.19 cm |
| 41–69 | 38.25 cm | 35.08 cm |
| 70–87 | 33.43 cm | 26.59 cm |
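To compare a subject's reach with the age- and sex-specific values in the table above, the table can be encoded as a lookup. The sketch assumes whole-year ages and returns `None` outside the tabulated 20 to 87 year range; all names are hypothetical.

```python
from typing import Optional

# Reference FRT reach distances (cm) from the table above, keyed by inclusive age range
NORMATIVE_REACH_CM = {
    (20, 40): {"men": 42.49, "women": 37.19},
    (41, 69): {"men": 38.25, "women": 35.08},
    (70, 87): {"men": 33.43, "women": 26.59},
}


def normative_reach_cm(age_years: int, sex: str) -> Optional[float]:
    """Return the reference reach for an age and sex ("men" or "women"), or None outside 20-87 years."""
    for (low, high), by_sex in NORMATIVE_REACH_CM.items():
        if low <= age_years <= high:
            return by_sex[sex]
    return None
```

For instance, a 75-year-old woman who reaches 20 cm achieves about 75% of the 26.59 cm reference value.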

| Methods | Advantages | Disadvantages |
|---|---|---|
| Rotation Matrices | Matrix representation for a single rotation; Matrix operations are well known; Simplify calculations; Homogeneous matrices represent all the basic transformations. | Nine parameters; Six orthogonality constraints; Risk of rounding errors in the successive concatenation of matrices; The rotation matrix is intricate to construct when the basis of the space in which the rotation is performed is not known; Interpolation is problematic. |
| Euler Angles | Three parameters; Intuitive method; Natural and efficient representation; Simple form for rotations around coordinate axes | A rotation cannot always be decomposed straightforwardly into three rotations around the coordinate axes; There are 12 different ways to compose elemental rotations; The representation of concatenated rotations is quite complex; Possible loss of one degree of freedom (gimbal lock); Problematic interpolation |
| Axis-Angle | Four parameters; Good visualization | Possible loss of unit norm; Numerical errors can affect the angle value; Computational difficulty in composing rotations; Ambiguity in choosing the axis orientation; Multiple representations of the identity; Problematic interpolation |
| Quaternions | Four parameters; Simplicity and economy; Ease of combining rotations; Independent of the choice of coordinate system | Sign ambiguity: q and −q represent the same rotation; Represent only rotations; Unintuitive and challenging to visualize |
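The quaternion row above lists easy composition as an advantage and the q/−q ambiguity as a drawback, while the Euler-angle row mentions gimbal lock. The sketch below illustrates all three with unit quaternions stored as (w, x, y, z): composition via the Hamilton product, conversion to a rotation matrix, and extraction of roll, pitch, and yaw. The ZYX (yaw-pitch-roll) convention and the function names are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit norm


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b; for rotating vectors, b is applied first, then a."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_to_matrix(q: Quat) -> List[List[float]]:
    """3x3 rotation matrix of a unit quaternion; each entry is quadratic in q, so q and -q agree."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def quat_to_euler_zyx(q: Quat) -> Tuple[float, float, float]:
    """Roll, pitch, yaw (rad) in the ZYX convention; pitch near +/-90 deg is the gimbal-lock case."""
    w, x, y, z = q
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    # Clamp so that rounding errors cannot push asin outside its domain
    pitch = math.asin(max(-1.0, min(1.0, 2 * (w * y - z * x))))
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return roll, pitch, yaw
```

Composing two 45° rotations about z with `quat_multiply` yields a 90° yaw, and negating every component of the result leaves both the matrix and the Euler angles unchanged.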

| Algorithm | Roll (ϕ) | Pitch (θ) | Yaw (ψ) |
|---|---|---|---|
| Complementary Filter | 9.4 | 1.6 | 34.7 |
| Extended Kalman Filter | 91.4 | 24.1 | 96.4 |
| Mahony | 10.8 | 0.9 | 93.5 |
| Madgwick | 24.2 | 3.7 | 55.3 |
| Madgwick (ours, without magnetometer) | 8.6 | 0.9 | 119.1 |
| Madgwick (ours, complete) | 10.3 | 1.1 | 38.1 |
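The table above compares several orientation filters, including two Madgwick configurations, but the implementation details are not reproduced in this section. For orientation only, the sketch below is a generic gyroscope-plus-accelerometer update step of Madgwick's gradient-descent filter, written from the published formulation and not from the authors' code. The gain `beta` and the sample period `dt` are caller-supplied assumptions. Because gravity carries no heading information, this variant cannot correct yaw, which is consistent with the large yaw error reported for the configuration without the magnetometer.

```python
import math
from typing import List, Sequence


def madgwick_imu_update(q: Sequence[float], gyr: Sequence[float], acc: Sequence[float],
                        beta: float, dt: float) -> List[float]:
    """One step of Madgwick's gradient-descent orientation filter (gyroscope + accelerometer).

    q    : current orientation quaternion (w, x, y, z), unit norm
    gyr  : angular rate (rad/s) about the sensor x, y, z axes
    acc  : accelerometer reading (any unit; it is normalised here)
    beta : filter gain (tuning value chosen by the caller)
    dt   : sample period (s)
    """
    q0, q1, q2, q3 = q
    gx, gy, gz = gyr
    # Quaternion rate from the gyroscope: 0.5 * q (x) (0, gx, gy, gz)
    dq = [
        0.5 * (-q1 * gx - q2 * gy - q3 * gz),
        0.5 * (q0 * gx + q2 * gz - q3 * gy),
        0.5 * (q0 * gy - q1 * gz + q3 * gx),
        0.5 * (q0 * gz + q1 * gy - q2 * gx),
    ]
    a_norm = math.sqrt(sum(a * a for a in acc))
    if a_norm > 0.0:
        ax, ay, az = (a / a_norm for a in acc)
        # Objective: gravity direction predicted by q minus the measured direction
        f = [
            2.0 * (q1 * q3 - q0 * q2) - ax,
            2.0 * (q0 * q1 + q2 * q3) - ay,
            2.0 * (0.5 - q1 * q1 - q2 * q2) - az,
        ]
        # Jacobian of f with respect to (q0, q1, q2, q3)
        jac = [
            [-2.0 * q2, 2.0 * q3, -2.0 * q0, 2.0 * q1],
            [2.0 * q1, 2.0 * q0, 2.0 * q3, 2.0 * q2],
            [0.0, -4.0 * q1, -4.0 * q2, 0.0],
        ]
        # Gradient J^T f, normalised so that beta alone sets the correction rate
        grad = [sum(jac[r][c] * f[r] for r in range(3)) for c in range(4)]
        g_norm = math.sqrt(sum(g * g for g in grad))
        if g_norm > 0.0:
            dq = [d - beta * g / g_norm for d, g in zip(dq, grad)]
    # Integrate the corrected rate and re-normalise to a unit quaternion
    q_new = [qi + di * dt for qi, di in zip(q, dq)]
    n = math.sqrt(sum(v * v for v in q_new))
    return [v / n for v in q_new]
```

The complete (MARG) form of the filter extends the objective with a magnetometer term, which is what allows the heading to be constrained.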

| Individual | First FRT Trial: Estimated Displacement (cm) | First FRT Trial: Measured Displacement (cm) | Second FRT Trial: Estimated Displacement (cm) | Second FRT Trial: Measured Displacement (cm) |
|---|---|---|---|---|
| 1 | 17.02 | 14.00 | 27.09 | 25.50 |
| 2 | 19.45 | 23.40 | 14.20 | 22.80 |
| 3 | 14.06 | 18.10 | 19.00 | 17.40 |
| 4 | 11.45 | 14.50 | 13.79 | 19.30 |
| 5 | 11.65 | 16.30 | 17.75 | 21.60 |
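The estimated displacements above are derived from inertial data, but this section does not spell out the integration step. The following is therefore only a generic sketch of one common approach, not necessarily the authors' pipeline. Gravity-free acceleration along the reach direction (for instance, one axis of the `_lin.csv` stream) is integrated twice with the trapezoidal rule, assuming evenly spaced samples and a subject who starts at rest. The function name is hypothetical.

```python
from typing import Sequence


def double_integrate(acc: Sequence[float], dt: float) -> float:
    """Displacement (m) along one axis from gravity-free acceleration samples (m/s^2).

    Assumes initial velocity and position are zero and samples are dt seconds apart.
    """
    velocity = 0.0
    position = 0.0
    for i in range(1, len(acc)):
        # Trapezoidal rule: acceleration -> velocity
        new_velocity = velocity + 0.5 * (acc[i - 1] + acc[i]) * dt
        # Trapezoidal rule: velocity -> position
        position += 0.5 * (velocity + new_velocity) * dt
        velocity = new_velocity
    return position
```

Multiplying the result by 100 gives centimetres, the unit used in the table. Because any constant accelerometer bias grows quadratically in the position estimate, such integration is usually restricted to the short window of the reach movement.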

| Individual | Average Estimated Displacement (cm) | Average Measured Displacement (cm) | Average Displacement Error (cm) |
|---|---|---|---|
| 1 | 22.06 | 19.75 | 2.31 |
| 2 | 16.83 | 23.10 | 6.28 |
| 3 | 16.53 | 17.75 | 1.22 |
| 4 | 12.62 | 16.90 | 4.28 |
| 5 | 14.70 | 18.95 | 4.25 |
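The averages in the table above can be reproduced from the per-trial values. Checking the arithmetic shows that the "Average Displacement Error" column is the absolute difference between the two averages, not the mean of the per-trial absolute errors. The sketch below computes both metrics from the per-trial table; the data are copied from it, while the function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

# (estimated, measured) displacement in cm for the two FRT trials of each individual
TRIALS_CM: Dict[int, List[Tuple[float, float]]] = {
    1: [(17.02, 14.00), (27.09, 25.50)],
    2: [(19.45, 23.40), (14.20, 22.80)],
    3: [(14.06, 18.10), (19.00, 17.40)],
    4: [(11.45, 14.50), (13.79, 19.30)],
    5: [(11.65, 16.30), (17.75, 21.60)],
}


def average_displacement_error(trials: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Return (mean estimated, mean measured, |difference of means|) in cm."""
    est = mean(e for e, _ in trials)
    meas = mean(m for _, m in trials)
    return est, meas, abs(est - meas)


def mean_per_trial_error(trials: List[Tuple[float, float]]) -> float:
    """Alternative metric: mean of the per-trial absolute errors (cm)."""
    return mean(abs(e - m) for e, m in trials)
```

The two metrics coincide only when both trials err in the same direction, as for individuals 1, 2, 4, and 5. For individual 3 the table's 1.22 cm equals |16.53 − 17.75|, whereas the mean per-trial absolute error is 2.82 cm.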

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Francisco, L.; Duarte, J.; Albuquerque, C.; Albuquerque, D.; Pires, I.M.; Coelho, P.J. Mobile Data Gathering and Preliminary Analysis for the Functional Reach Test. Sensors 2024, 24, 1301. https://doi.org/10.3390/s24041301


Chicago/Turabian Style: Francisco, Luís, João Duarte, Carlos Albuquerque, Daniel Albuquerque, Ivan Miguel Pires, and Paulo Jorge Coelho. 2024. "Mobile Data Gathering and Preliminary Analysis for the Functional Reach Test" Sensors 24, no. 4: 1301. https://doi.org/10.3390/s24041301
