1. Introduction

Gas sensors play a vital role across diverse industrial sectors, including environmental surveillance [

1,

2,

3], medical diagnostics [

4,

5,

6], food analytics [

7,

8], and explosive detection [

9,

10]. Over the past two decades, significant strides have been made in gas sensor technology to meet the practical demands of various applications. For instance, Fort and colleagues proposed three measurement methodologies to effectively differentiate gas mixtures [

11], enabling a more precise categorization of wines. This empowers industries to ensure the quality and authenticity of their products. Bhattacharyya et al. introduced a computational framework integrating a cost-effective interface and a wide-range, low-value resistive sensor [

12,

13]. This architecture can assess the quality of unidentified tea samples, providing an economical and efficient solution for the tea industry. In another notable development, Brezmes et al. designed a sensor system specifically for measuring fruit ripeness, tailored to application-specific requirements [

14]. This system enables a precise and timely evaluation of fruit maturity, assisting in the optimization of harvesting and storage operations. In summary, advancements in gas sensor technology have significantly improved the capability to detect and analyze gases across various industries. These innovations have led to more accurate and reliable outcomes, ultimately enhancing productivity and safety in these sectors. However, since the measurement strategy of gas sensors is to detect the change in resistance and voltage of the gas-sensitive material when it is exposed to the gas to be measured, the sensor sensitivity can be affected by various aspects such as temperature, humidity, pressure, self-aging, and poisoning. Changes in sensor sensitivity can lead to fluctuations in sensor response when the electronic nose is exposed to the same gas at different times, called sensor drift [

15]. This paper focuses on the drift compensation of gas sensors.

In order to tackle this dilemma, researchers have approached it from three different perspectives. The first approach involves developing gas-sensitive materials that exhibit both high performance and high stability. However, this necessitates breakthroughs in multiple disciplines like physics, chemistry, and materials science, and can be quite costly. Another approach involves enhancing the stability of the gas sensor by modifying its operating mode, such as periodically adjusting the heating voltage. Nevertheless, these two strategies mainly address short-term drift phenomena and have limited impact on long-term drift issues.

To combat long-term drift problems, many researchers have focused on modifying the signal-processing algorithms used in gas sensors. These algorithms are typically classified into three groups: data-level, feature-level, and classifier-level drift compensation methods.

Data-level approaches: Artursson et al. introduced techniques such as Principal Component Analysis (PCA) and Partial Least Squares for drift suppression [

16]. Padilla et al. presented an OSC-based drift correction strategy for gas sensor arrays [

17]. Natale et al. addressed drift by employing Independent Component Analysis (ICA) while preserving components associated with sample characteristics [

18]. Additionally, a method known as Common Principal Component Analysis (CCPCA) offers drift reduction without requiring a distinct reference gas [

19].

Feature-level methods: These approaches aim to align source data (clean data) and target data (drift data) in a shared subspace, minimizing distribution divergence between them. L. Zhang proposed Domain Regularized Component Analysis (DRCA), which reduces marginal distribution divergence between clean and drift data within the common subspace [

20]. An extension of DRCA, Local Discriminant Subspace Projection (LDSP), seeks to identify a common subspace that simultaneously reduces local within-class variance of projected source samples and maximizes local between-class variance [

21]. Another approach, named Common Subspace-Based Drift (CSBD), minimizes distribution divergence between clean and drift data within a new subspace [

22].

Classifier-level techniques: The performance of a classifier significantly impacts the resulting classification [

23]. Zhang and Zhang introduced two gas drift correction methods based on Extreme Learning Machines, both of which provide low computational complexity [

24]. In recent years, online drift compensation methods have been introduced to address sensor drift [

25,

26,

27]. Expanding on the concept of active learning, the method (referred to as AL-ISSMK) developed by Liu et al. [

26] identifies the most valuable samples and retrains the classifier to adapt to evolving sensor drift.

While the adaptive correction methods mentioned above have shown promising results in compensating for drift in gas sensor arrays, there remain three areas that require further enhancement: (1) Low classification accuracy persists, with most methods achieving rates below 90%. (2) Many approaches rely on labeled data from drifted sensors to enhance accuracy, but obtaining these labels is costly as it involves recalibrating the sensors. (3) Several methods necessitate an excessive number of hyperparameters, limiting their practicality for real-world applications in production and daily life.

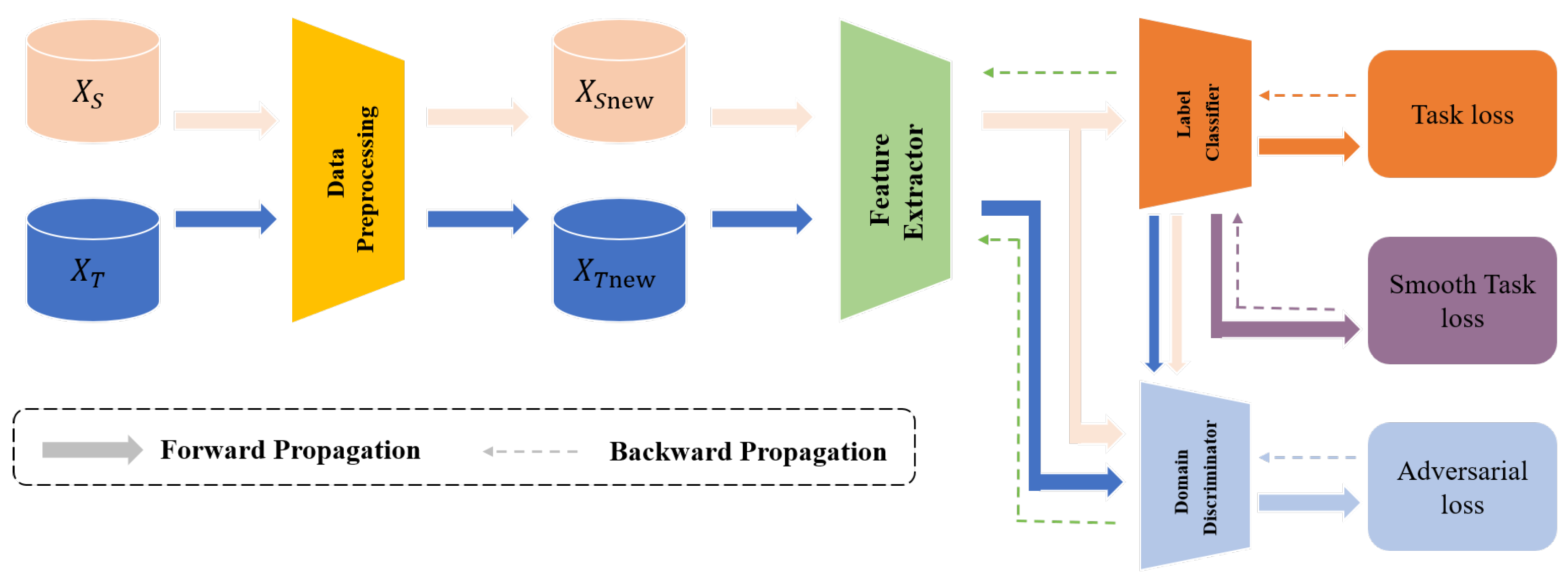

To address the previously mentioned challenges, we present the CDAN+SAM model. In this model, CDAN is devised to extract common features from both clean and drifted data. These extracted features are subsequently input into a neural network to train a more generalized and robust classifier. The SAM optimizer plays a crucial role in smoothing the training process, facilitating easier network training and convergence. The fundamental structure of the CDAN+SAM model is illustrated in

Figure 1.

The remainder of this paper is organized as follows: The second section provides an introduction to the foundational theory of transfer learning, offering insights into the principles underlying CDAN and SAM. In the third section, we conduct a comprehensive analysis of experimental results and perform ablation experiments to further validate our approach. Finally, the fourth section summarizes the key findings and conclusions of this paper.

3. Result and Discussion

To assess the efficacy of CDAN+SAM, we conducted a comparative analysis with various deep transfer learning methods using two publicly available sensor drift datasets as benchmarks. Resnet served as the feature extraction network in this model. The experimental configurations are delineated in the subsequent subsections. The computational environment utilized Pycharm, and the hardware specifications are as follows: Windows 10 operating system, Intel Core i7-10300H CPU @ 3.40 GHz, 32.0 GB RAM, GTX 3080 GPU, and a 2 TB SSD.

3.1. Experiment on Sensor Drift Dataset A

Dataset A used in Experiment 1 is from UCSD [

23], and the dataset measures 6 types of gases, using 16 gas sensors (TGS2600, TGS2602, TGS2610, and TGS2620; 4 of each sensor). The dataset has 8 dimensional features per sample, including 2 rising edge features, 3 falling edge features, and 3 smooth states, and contains a total of 13,910 samples divided into 10 batches. The data were recorded from January 2008 to the end of February 2011, spanning 3 years, where

Table 1 shows the details of the dataset and the scatter plot in

Figure 4 shows the principal component analysis(PCA) of the dataset. We take Batch 1 as the source domain for model training and test on Batch K, K = 2, …, 10 (target domains). The classification accuracy on Batch K is reported.

In order to verify the effectiveness of the algorithms, 14 methods of 3 types, namely, drift compensation methods, traditional transfer learning methods, and deep transfer learning methods, are selected for comparison in this paper, of which SVM-rbf, OSC, CC-PCA, GLSW [

29], DS [

30], and DRCA belong to the drift compensation methods, and these types of methods are capable of identifying and calibrating drift components, and geodesic flow kernel (GFK) [

31], TCA [

32] and JDA [

33] belong to the traditional migration learning methods, which can change the probability distribution of the data in order to improve the recognition algorithm accuracy. Deep Transfer Learning Methods: Within this category are DANN [

34], WDANN [

35], and MADA [

36]. These methods represent mainstream approaches for deep domain adaptation. Experiments were conducted on sensor drift Dataset A, and the recognition results for different methods under the experimental setting are presented in

Table 2 and

Figure 5. It is observed that the proposed CDAN+SAM achieves the best classification performance. The average classification accuracy is 90.32%, which is 7.27% higher than the second-best learning method.

Furthermore, for each batch, the best parameters for which the proposed method achieves the highest accuracy are provided in

Table 3. The feature extraction network is the Resnet18 network. Since the features of Dataset A are 128 dimensional, a deeper network is needed to extract the features.

3.2. Experiment on Sensor Drift Dataset B

The drift displacement electronic nose dataset was collected by Zhang Lei et al. from Chongqing University [

20]. The dataset was collected using an array of electronic nose sensors of the same model. Experimental measurements included ammonia, benzene, carbon monoxide, formaldehyde, nitrogen dioxide, and toluene. And four TGS series (TGS2602, TGS2620, TGS2201A, and TGS2201B) air sensors were used as well as temperature and humidity sensors (STD2230-I2 Cof Sensirion in Switzerland). The dataset has 6-dimensional features for each sample, and contains a total of 1604 samples, divided into 3 batches: master data, Slave data 1, and Slave data 2, where the master data was collected 5 years prior to Slave 1 data and Slave 2 data.

Table 4 records the detailed data of this dataset. The scatter plot in

Figure 6 shows the principal component analysis(PCA) of the dataset. Notably, the distributions of the slave systems differ significantly from those of the master system.

We used the master data as the source domain of the model and the Slave 1 and Slave 2 datasets as the target domain of the model. The proposed CDAN+SAM is compared with 11 popular transfer learning methods, and the classification results are presented in

Table 5 and

Figure 7. It is evident that CDAN+SAM consistently demonstrates optimal identification accuracy. Specifically, when compared with WDAAN, which exhibits similarity to the proposed method, CDAN+SAM improves the average recognition rates by 6.21% and 13.82% for Tasks 1 and 2, respectively.

Furthermore, for each batch, the best parameters leading to the highest accuracy for the proposed method are detailed in

Table 6. The feature extraction network is a CNN network. Since the features of this dataset are 6-dimensional, no deeper network is needed to extract the features.

3.3. The Sensitivity of CDAN+SAM to Different Magnitudes of Drift

CDAN+SAM achieves more than 85% accuracy for the first 7 batches of data in Dataset A and for 3 years, which indicates that the method can compensate the accuracy of short-term drift well. For the last 3 batches of data and for more than 2 years, except for Dataset 9, the accuracy of the compensation is mostly lower than 80% due to the serious drift of the dataset, but it is still higher than that of the other 12 methods. This indicates that CDAN+SAM can handle both short-term and longer-term drifts well.

Compared with the Dataset A, Dataset B has a larger time span and deeper drift, so the average compensation accuracies obtained by all the methods in Dataset B are lower than those obtained by the methods in Dataset A. However, CDAN+SAM achieves the best results in both slaves, which shows that the method can deal with more complex and deeper drift scenarios.

3.4. Ablation Study

To comprehensively analyze the role of the SAM component in CDAN+SAM, we conducted ablation experiments under two settings on both Dataset A and Dataset B utilizing CDAN+SAM.

Setting 1: To demonstrate the importance of CDAN in extracting features common to both source and target data, the term CDAN in CDAN+SAM was replaced with DANN. DANN, in contrast to CDAN, solely considers the distinctions between source and target domain data, overlooking the differences between various categories within the data.

Setting 2: To illustrate that the SAM optimizer contributes to smoothing the entire model for improved results, the SAM optimizer in CDAN was replaced with the SGD optimizer.

The results of the ablation experiments for these two settings are summarized in

Table 7 and

Table 8. Ablation study histograms of accuracy under Dataset A and Dataset B are visualized in

Figure 8 and

Figure 9.

The ablation study outcomes highlight that each component plays a crucial role in enhancing the domain adaptation capability of the CDAN+SAM model. The experiments emphasize that, in deep transfer learning, consideration should be given not only to the distinctions between the source and target domain data but also to the differences among various categories within the data. Furthermore, the SAM optimizer proves effective in smoothing the adversarial model, leading to superior results.

4. Conclusions

This paper presents a novel framework CDAN+SAM for gas sensor drift compensation. Traditional machine learning approaches face challenges in solving the sensor drift problem, which is mainly attributed to the aging of gas-sensitive materials leading to inconsistencies in the probability distributions of calibration and measurement data. In this case, the proposed CDAN+SAM framework excels in capturing the common features of the drifted and raw data, as the model considers not only the relationship between the drifted and clean data, but also the relationship between the data of different species of gases. The SAM optimizer used in CDAN+SAM mitigates the challenges associated with the traditional deep migration learning, such as the training difficulty and the convergence problems. Experimental results demonstrate the superior performance of CDAN+SAM, which outperforms most of the existing methods in long-term and short-term drift scenarios by improving the accuracy by 7.27% and 10.02%, respectively. We plan to use the CDAN+SAM method in real life in the future, which should use different feature extraction networks when dealing with different drifting datasets, e.g., for datasets with temporal features, the LSTM network can be used; for complex and huge datasets, the Transformer network can be used. The use of different networks will inevitably lead to a huge overhead of computational resources, so we suggest that the sensors should be deployed with 5G network data transmission devices, and cloud computing can be used to solve the problem of insufficient computational resources.