Synthetic Corpus Generation for Deep Learning-Based Translation of Spanish Sign Language

Abstract

1. Introduction

- To provide an overview of the Deep Learning-based techniques proposed in the literature to address communication between the deaf community and hearing people, and to identify the main gaps in recent studies on these tasks.

- To develop two methods for obtaining synthetic gloss annotations in LSE: one based on translating an existing dataset (from German to Spanish), and another employing a flexible rule-based system to translate from Oral Spanish (LOE, from Lengua Oral Española) to LSE glosses.

- To publish a synthetic corpus of Spanish (LOE) sentences paired with their corresponding LSE gloss annotations.

- To carry out a set of experiments with Transformer-based language models trained on our synthetic datasets in both directions: translation from LSE glosses to written/oral Spanish (gloss2text) and from written/oral Spanish to glossed sentences (text2gloss).

2. Contextualizing Sign Language

3. Related Work

3.1. Towards Deaf–Hearing Communication

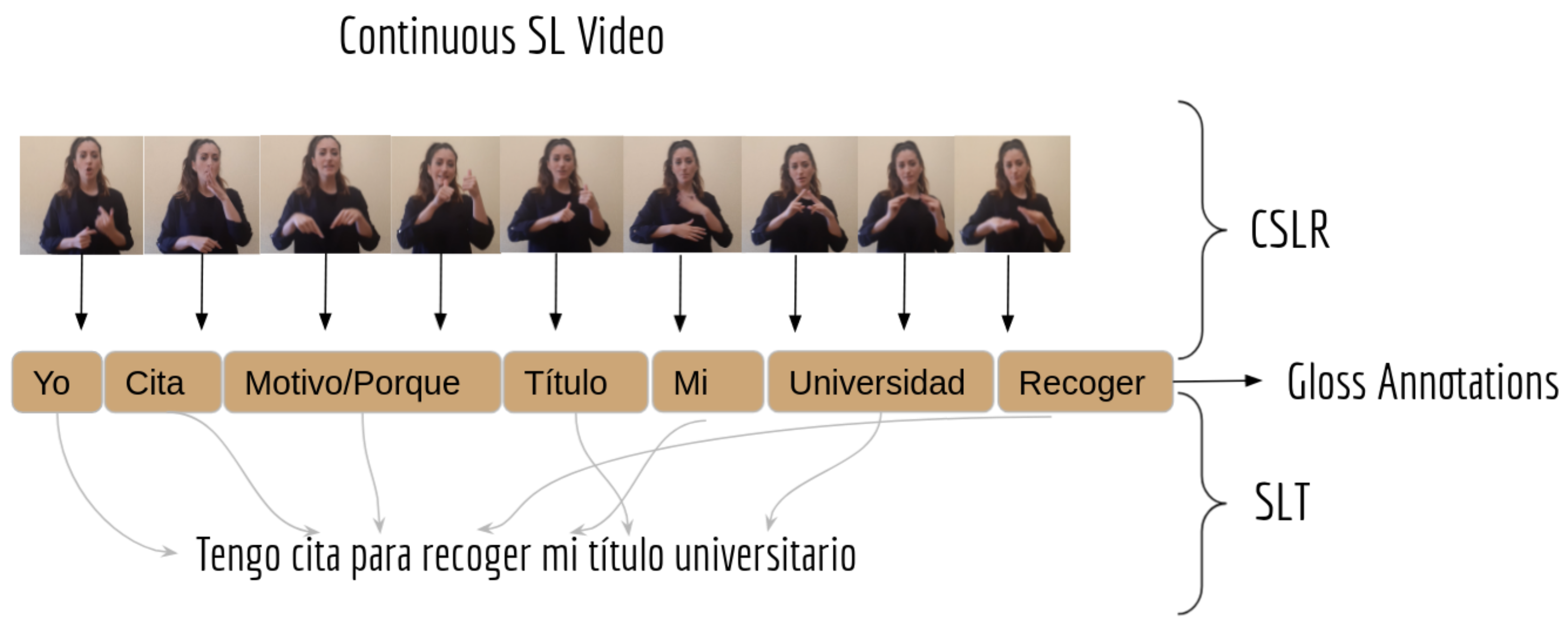

3.1.1. Sign Language Recognition

3.1.2. Sign Language Translation (SLT)

3.2. Towards Hearing–Deaf Communication

3.2.1. Sign Language Production (SLP) with Videos

3.2.2. Sign Language Production with Avatars

4. SynLSE Corpus: A Synthetically Generated Corpus for LSE Translation

4.1. tranSPHOENIX: Translation to Spanish of RWTH-PHOENIX-2014T

4.2. ruLSE: A Flexible Rule-Based System to Generate Gloss Annotations

4.2.1. Differences with ASLG-PC12

4.2.2. Transformation Rules

- Specific (XPoS) or Universal (UPoS) grammatical category. It is represented in lowercase within the rule structures.

- Textual word or lemma. It is represented in uppercase within the rule structures.

- Plural words are signed, in some cases, by repeating the sign or its classifier several times to indicate abundance or frequency. When a word is plural, it is glossed as follows: <lemma>-PL.

- Proper nouns are usually fingerspelled because they do not have a preset sign. In this case, they are glossed as spelled words as follows: <syllable>-NP.

- Sorting: to change the order of words that comply with the input structure.

- Elimination: to delete words that do not have an associated sign in LSE.

- Insertion: sometimes glosses that do not appear in the input sentence must be inserted. This is done by adding the lemma (in uppercase) at the appropriate position within the output structure, with its id set to zero: 0_<lemma>.

- Substitution: a word in the input structure can be modified in the output structure for several reasons: several independent signs are associated with it; it is represented by a gloss different from its lemma; or additional information must be appended to its lemma after a hyphen (useful for plurals). An example of the last case: the lemma of the word “PERROS” (dogs) is “PERRO”, but to keep the plural information, we can define a rule with input structure 1_nc0p000 and output structure 1_nc0p000-PL, which yields “PERRO-PL” (nc0p000 is the specific grammatical category of plural nouns).

1. Adjectives are signed after nouns.

2. Indirect complements are signed after the verb (Exclusion 1).

3. Direct complements are signed before the verb (Exclusion 1).

4. Articles are not signed.

5. Prepositions are not signed.
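The rule notation and the four operations described above (sorting, elimination, insertion and substitution) can be illustrated with a minimal Python sketch. It assumes that a rule is a pair of whitespace-separated structures, that each element is written `<id>_<pattern>`, that a lowercase pattern is matched against the XPoS tag or the lowercased UPoS tag while an uppercase pattern is matched against the word or its lemma, and that an output element may carry a hyphenated suffix such as `-PL`. The `Token` class, the function names and the example tags are hypothetical; this is not the ruLSE implementation.

```python
from dataclasses import dataclass


@dataclass
class Token:
    text: str   # surface form, e.g. "perros"
    lemma: str  # e.g. "perro"
    upos: str   # universal category, e.g. "NOUN"
    xpos: str   # specific category, e.g. "nc0p000"


def parse(structure: str) -> list[tuple[str, str]]:
    """Split "1_det 2_nc0p000" into [("1", "det"), ("2", "nc0p000")]."""
    return [tuple(elem.split("_", 1)) for elem in structure.split()]


def matches(pattern: str, tok: Token) -> bool:
    # Lowercase pattern: grammatical category (XPoS or UPoS).
    if pattern.islower():
        return pattern in (tok.xpos, tok.upos.lower())
    # Uppercase pattern: textual word or lemma.
    return pattern in (tok.text.upper(), tok.lemma.upper())


def apply_rule(tokens: list[Token], rule_in: str, rule_out: str) -> list[str]:
    """Apply one rule at its first match; unmatched tokens are glossed by lemma."""
    pattern = parse(rule_in)
    n = len(pattern)
    for start in range(len(tokens) - n + 1):
        window = tokens[start:start + n]
        if not all(matches(p, t) for (_, p), t in zip(pattern, window)):
            continue
        by_id = {i: t for (i, _), t in zip(pattern, window)}
        glosses = []
        for i, spec in parse(rule_out):      # output order implements sorting
            if i == "0":                     # insertion: 0_<lemma>
                glosses.append(spec)
                continue
            _, _, suffix = spec.partition("-")  # substitution, e.g. "-PL"
            lemma = by_id[i].lemma.upper()
            glosses.append(f"{lemma}-{suffix}" if suffix else lemma)
        # Input ids that do not appear in the output are dropped (elimination).
        before = [t.lemma.upper() for t in tokens[:start]]
        after = [t.lemma.upper() for t in tokens[start + n:]]
        return before + glosses + after
    return [t.lemma.upper() for t in tokens]


sentence = [
    Token("los", "el", "DET", "da0mp0"),
    Token("perros", "perro", "NOUN", "nc0p000"),
    Token("grandes", "grande", "ADJ", "aq0cp00"),
]
print(apply_rule(sentence, "1_det 2_nc0p000", "2_nc0p000-PL"))
```

Here the article is eliminated simply because its id does not appear in the output structure, and the plural noun is substituted by `PERRO-PL`. A complete system would try many rules per sentence; this sketch applies a single rule at its first match.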
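To show how the five LSE principles listed above interact, the following Python sketch applies them to a sentence that is assumed to be already lemmatized, tagged with UPoS and parsed into Universal Dependencies relations (for example, by Stanza). It deliberately swaps in a dependency-based reordering instead of the pattern rules used by ruLSE, ignores the exclusions mentioned above, handles only a single main verb, and keeps every other word in its original relative order before the direct object. The `Word` class and the hand-written example parse are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Word:
    id: int       # 1-based position in the sentence
    lemma: str
    upos: str     # universal category (UPoS)
    head: int     # id of the syntactic head, 0 for the root
    deprel: str   # Universal Dependencies relation


def subtree(words: list[Word], root: int) -> set[int]:
    """Ids of `root` and all of its descendants."""
    ids, changed = {root}, True
    while changed:
        changed = False
        for w in words:
            if w.head in ids and w.id not in ids:
                ids.add(w.id)
                changed = True
    return ids


def to_lse_glosses(words: list[Word]) -> list[str]:
    verb = next((w for w in words if w.upos == "VERB" and w.head == 0), None)
    if verb is None:
        ordered = list(words)
    else:
        # Principles 2 and 3: direct object before the verb, indirect object after it.
        obj_ids, iobj_ids = set(), set()
        for w in words:
            if w.head == verb.id and w.deprel == "obj":
                obj_ids |= subtree(words, w.id)
            elif w.head == verb.id and w.deprel == "iobj":
                iobj_ids |= subtree(words, w.id)
        moved = obj_ids | iobj_ids
        rest = [w for w in words if w.id not in moved and w is not verb]
        ordered = (rest + [w for w in words if w.id in obj_ids] + [verb]
                   + [w for w in words if w.id in iobj_ids])
    # Principles 4 and 5: articles (DET) and prepositions (ADP) are not signed.
    # Assumption: UPoS DET also covers other determiners, which a real rule set would keep.
    kept = [w for w in ordered if w.upos not in {"DET", "ADP"}]
    # Principle 1: an adjective placed before its noun is moved after it.
    i = 0
    while i < len(kept) - 1:
        if kept[i].upos == "ADJ" and kept[i + 1].upos == "NOUN":
            kept[i], kept[i + 1] = kept[i + 1], kept[i]
            i += 2
        else:
            i += 1
    return [w.lemma.upper() for w in kept]


# "Juan da el buen libro a María" with a hand-written (hypothetical) parse.
sentence = [
    Word(1, "Juan", "PROPN", 2, "nsubj"),
    Word(2, "dar", "VERB", 0, "root"),
    Word(3, "el", "DET", 5, "det"),
    Word(4, "bueno", "ADJ", 5, "amod"),
    Word(5, "libro", "NOUN", 2, "obj"),
    Word(6, "a", "ADP", 7, "case"),
    Word(7, "María", "PROPN", 2, "iobj"),
]
print(" ".join(to_lse_glosses(sentence)))
```

Moving whole subtrees, rather than single words, keeps a direct object together with its modifiers when it is placed before the verb.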

4.2.3. Parallel Corpus Generation

1. Text preparation. The algorithm receives a sequence of characters forming a Spanish text. We use the Stanza library to split the text into sentences at punctuation marks, tokenize the sentences into words, obtain the lemma of each word and syntactically analyze each sentence (providing properties such as the universal and specific grammatical category of each word). As a result, a sentence is a list of words, the text is a list of sentences, and each word carries the set of properties produced by this analysis.

2. Application of rules. The transformation rules are stored in a CSV file. Once they are loaded, the sentences of the text are iterated over and the transformation rules are applied to each of them. This returns the input sentence with updated word information: the new position of each word in the list of sentences and its new position in the text.

3. Generation of glosses. For each transformed sentence, the words are iterated over and their lemmas are concatenated in the order given by their position attribute. This step is repeated for every sentence in the text.

4. Insertion in the corpus. Finally, each sentence of the initial text is written, together with its glosses, to the file where the corpus is stored.
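Steps 2 to 4 can be sketched in Python as follows, under several assumptions: the rules CSV is assumed to have `input` and `output` columns written in the `<id>_<category>` notation, an empty output eliminates the matched words, only category patterns with sorting and elimination are supported, and words are assumed to arrive already lemmatized and tagged by step 1. In practice they would come from Stanza (for example, `stanza.Pipeline("es", processors="tokenize,mwt,pos,lemma")`), which is not run here. All class, function and column names are hypothetical.

```python
import csv
import io
from dataclasses import dataclass
from typing import Optional

# Hypothetical rules file: drop an article before a noun, drop prepositions.
RULES_CSV = """input,output
1_det 2_noun,2_noun
1_adp,
"""


@dataclass
class Word:
    text: str
    lemma: str
    upos: str                        # universal category, e.g. "NOUN"
    xpos: str                        # specific category, e.g. "nc0s000"
    position: Optional[int] = None   # None once the word has been eliminated


def load_rules(csv_text: str) -> list[tuple[str, str]]:
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row["input"], row["output"]) for row in reader]


def apply_rules(sentence: list[Word], rules: list[tuple[str, str]]) -> list[Word]:
    """Step 2: return the same sentence with updated word positions."""
    words = list(sentence)
    for rule_in, rule_out in rules:
        pattern = [elem.split("_", 1) for elem in rule_in.split()]
        order = [elem.split("_", 1)[0] for elem in rule_out.split()]
        i = 0
        while i <= len(words) - len(pattern):
            window = words[i:i + len(pattern)]
            if all(cat in (w.xpos, w.upos.lower()) for (_, cat), w in zip(pattern, window)):
                by_id = {k: w for (k, _), w in zip(pattern, window)}
                for w in window:
                    w.position = None             # eliminated unless kept below
                kept = [by_id[k] for k in order]  # sorting
                words[i:i + len(pattern)] = kept
                i += len(kept)                    # re-check index i if all were removed
            else:
                i += 1
    for pos, w in enumerate(words):
        w.position = pos
    return sentence


def generate_glosses(sentence: list[Word]) -> str:
    """Step 3: concatenate lemmas in the order of their position attribute."""
    kept = sorted((w for w in sentence if w.position is not None), key=lambda w: w.position)
    return " ".join(w.lemma.upper() for w in kept)


def write_corpus(pairs: list[tuple[str, str]], out) -> None:
    """Step 4: store each sentence together with its glosses."""
    writer = csv.writer(out)
    writer.writerow(["text", "gloss"])
    writer.writerows(pairs)


if __name__ == "__main__":
    words = [
        Word("el", "el", "DET", "da0ms0"), Word("perro", "perro", "NOUN", "nc0s000"),
        Word("come", "comer", "VERB", "vmip3s0"), Word("en", "en", "ADP", "sps00"),
        Word("la", "el", "DET", "da0fs0"), Word("casa", "casa", "NOUN", "nc0s000"),
    ]
    apply_rules(words, load_rules(RULES_CSV))
    buffer = io.StringIO()
    write_corpus([("el perro come en la casa", generate_glosses(words))], buffer)
    print(buffer.getvalue())
```

Keeping eliminated words in the sentence with `position = None`, instead of deleting them, mirrors the description of step 2, in which the rules return the input sentence with updated word information.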

4.3. valLSE: A Semi-Validated Dataset to Test the Performance of Models

- Macarena: a small set of 50 sentences from the Large Spanish Corpus (https://huggingface.co/datasets/large_spanish_corpus, accessed on 20 February 2024), which contains news in Spanish. These sentences were translated into LSE glosses using our ruLSE system and semi-validated by an expert interpreter: only 10 sentences were reviewed, but since the sentences are of very similar complexity, the interpreter assumed that the same corrections would apply to the rest. In her view, the translations were correct, although they could be improved (see Section 7 for more details).

- Uvigo: the LSE_UVIGO dataset [87], as published in 2019 on the authors’ website. Some words were modified to match the notation followed by ruLSE. Specifically, the changes were: rewriting nouns separated by hyphens as word-NP; adding PL to plural nouns and removing (s); and removing periods and hyphens between words.
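The Uvigo normalization amounts to a small string transformation. The Python sketch below is only illustrative: it assumes that fingerspelled nouns appear as single letters joined by hyphens (e.g. `M-A-R-I-A`), that plurals are marked with a trailing `(s)`, and that a hyphen joining full words should split them into separate glosses. The actual LSE_UVIGO conventions may differ, and the function name is hypothetical.

```python
def normalize_uvigo_gloss(sentence: str) -> str:
    """Rewrite an LSE_UVIGO-style gloss sentence into ruLSE notation (sketch)."""
    out = []
    for token in sentence.replace(".", "").split():  # remove periods
        parts = [p for p in token.split("-") if p]
        if len(parts) > 1 and all(len(p) == 1 for p in parts):
            # Assumed letter-by-letter spelling, e.g. "M-A-R-I-A" -> "MARIA-NP"
            out.append("".join(parts) + "-NP")
            continue
        for part in parts:  # a hyphen between full words -> separate glosses
            if part.endswith("(s)"):
                part = part[:-3] + "-PL"  # "CASA(s)" -> "CASA-PL"
            out.append(part)
    return " ".join(out)


print(normalize_uvigo_gloss("M-A-R-I-A COMPRAR CASA(s). IR-CASA"))
```

Handling the spelled-name case before splitting on hyphens matters: otherwise the letters of a fingerspelled noun would be split into separate one-letter glosses.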

5. Experiments on Neural Sign Language Translation

5.1. Neural Machine Translation

5.2. Transformer Models for SLT Experiments

1. Gloss2text on the original PHOENIX-2014T dataset. The STMC-Transformer model was trained on the original (German) version of the PHOENIX-2014T dataset to find the optimal number of layers. We explored 1, 2, 4 and 6 encoder–decoder layers. The best results were obtained with 2 layers in both the encoder and the decoder of the Transformer; therefore, this number of layers was used in the remaining experiments with this model. This Transformer was configured with a word embedding size of 512, gloss-level tokenization, sinusoidal positional encoding, 2048 hidden units and 8 attention heads, and it was optimized with Adam. The network was trained with a batch size of 2048, an initial learning rate of 1, a dropout of 0.1 and label smoothing of 0.1.

2. Gloss2text on the tranSPHOENIX dataset (from SynLSE). In the second block of experiments, we trained both models (STMC-Transformer and MarianMT) on different subsets of tranSPHOENIX: the whole dataset (7096 sentences in the training set and 642 in the test set), approximately half of it (3500 training and 321 test sentences) and a smaller subset of 1000 training and 92 test sentences. Based on the results, it was possible to establish how many training sentences the model needs to learn an acceptable translation of glossed sentences into written language. The STMC-Transformer was initialized with pre-trained embeddings (i.e., trained on a large corpus of text in the desired language) for transfer learning. Two word embeddings, trained in an unsupervised manner on large Spanish corpora, were used: GloVe [95] and FastText [96]. The corpora on which they were trained are the Spanish Unannotated Corpora (SUC) [97], Spanish Wikipedia (Wiki) [98] and the Spanish Billion Word Corpus (SBWC) [99]. Moreover, the pre-trained MarianMT model from the HuggingFace library was fine-tuned for the experiments, using a batch size of 4, an initial learning rate of for the Adam optimizer with weight decay fix and 20 epochs.

3. Gloss2text on the ruLSE dataset (from SynLSE). A third group of experiments trained the best STMC model from the previous group and MarianMT on the larger and more accurate synthetic dataset generated with ruLSE, and evaluated their performance. We also tested the best model on the valLSE dataset and compared it with our ruLSE system. MarianMT was trained with 1000, 3500 and 7500 sentences from the parallel corpus generated with ruLSE (ruLSE dataset), and a hyperparameter search was performed for each subset. The best training performance for 1000 sentences was achieved with a learning rate of , 10 epochs, a batch size of 16 and weight rate 0.076; for 2000 sentences, with a learning rate of , 15 epochs, a batch size of 64 and weight rate 0.092; and for 7500 sentences, with a learning rate of , 10 epochs, a batch size of 32 and weight rate 0.034.

4. Text2gloss when using ruLSE versus STMC-Transformer and MarianMT. The last group of experiments applied the STMC-Transformer and MarianMT models to the entire SynLSE corpus, but for Sign Language Production. The objective of this set was to test whether Transformers perform similarly in both directions, given that a gloss sequence can correspond to several text sentences whereas the text2gloss mapping is unique. This was done by inverting the modalities of the training data: the input is the sequence of sentences in written language and the output is the sequence of glossed sentences (i.e., gloss production). We compared the performance against our rule-based system ruLSE using our small validated dataset. The models and configurations were the same as before, including the use of pre-trained word embeddings.
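The STMC-Transformer hyperparameters reported for the first experiment set can be collected in a configuration object. The Python sketch below does that and adds two pieces the text only names: the sinusoidal positional encoding of Vaswani et al. [58] and a Noam learning-rate schedule. The schedule is an assumption: an initial learning rate of 1 is typical of OpenNMT-style Transformers that scale the rate with a linear warm-up and inverse square-root decay, but the warm-up length below (4000 steps) is hypothetical and not reported here. The class and function names are also hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class STMCTransformerConfig:
    layers: int = 2            # encoder and decoder layers (best of 1, 2, 4, 6)
    d_model: int = 512         # word embedding size
    ffn_hidden: int = 2048     # hidden units of the feed-forward sublayers
    heads: int = 8             # attention heads
    dropout: float = 0.1
    label_smoothing: float = 0.1
    batch_size: int = 2048
    lr_factor: float = 1.0     # the reported initial learning rate of 1
    warmup_steps: int = 4000   # hypothetical: not reported in the text


def sinusoidal_encoding(position: int, d_model: int) -> list[float]:
    """PE(pos, 2k) = sin(pos / 10000^(2k/d)); PE(pos, 2k+1) = cos of the same angle."""
    encoding = []
    for i in range(d_model):
        angle = position / 10000 ** (2 * (i // 2) / d_model)
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def noam_lr(step: int, cfg: STMCTransformerConfig) -> float:
    """Assumed Noam schedule: linear warm-up, then inverse square-root decay."""
    step = max(step, 1)
    return cfg.lr_factor * cfg.d_model ** -0.5 * min(step ** -0.5, step * cfg.warmup_steps ** -1.5)


cfg = STMCTransformerConfig()
print(noam_lr(cfg.warmup_steps, cfg))  # peak learning rate under this schedule
```

Under this schedule the effective learning rate peaks at the end of the warm-up and decays afterwards, so the nominal value of 1 acts as a scale factor rather than the actual step size.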
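Initializing the STMC-Transformer with pre-trained GloVe or FastText vectors, as in the second experiment set, amounts to building an embedding matrix whose rows follow the model vocabulary. The Python sketch below assumes the vectors are available in the common text format (one `word v1 ... vd` line per word, with the optional `count dim` header line of FastText `.vec` files), falls back to a lowercase lookup because glosses are uppercase while embedding vocabularies are usually lowercase, and initializes out-of-vocabulary rows randomly. The function names and the ±0.1 initialization range are hypothetical choices.

```python
import random


def load_vectors(lines, dim: int) -> dict[str, list[float]]:
    """Parse 'word v1 ... vd' lines, skipping headers and malformed lines."""
    vectors = {}
    for line in lines:
        parts = line.split()
        if len(parts) != dim + 1:
            continue  # e.g. the "<count> <dim>" header of .vec files
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def build_embedding_matrix(vocab: list[str], pretrained: dict[str, list[float]],
                           dim: int, seed: int = 0):
    """Rows follow `vocab`; also returns the fraction covered by pre-trained vectors."""
    rng = random.Random(seed)
    matrix, hits = [], 0
    for token in vocab:
        vec = pretrained.get(token)
        if vec is None:
            vec = pretrained.get(token.lower())  # glosses are uppercase
        if vec is not None:
            matrix.append(list(vec))
            hits += 1
        else:
            matrix.append([rng.uniform(-0.1, 0.1) for _ in range(dim)])
    return matrix, hits / max(len(vocab), 1)


vectors = load_vectors(["2 3\n", "casa 0.1 0.2 0.3\n", "perro 1 2 3\n"], dim=3)
matrix, coverage = build_embedding_matrix(["<unk>", "CASA", "perro"], vectors, dim=3)
print(coverage)
```

Reporting the coverage is a cheap sanity check: a low value would indicate that the gloss vocabulary and the embedding vocabulary are tokenized or cased differently.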

5.3. Employed Metrics
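BLEU-4, the measure quoted in Section 7, combines clipped n-gram precisions for n = 1 to 4 with a brevity penalty. The Python sketch below computes corpus-level BLEU-4 with one reference per sentence, whitespace tokenization and no smoothing, scaled to 0–100. It is a textbook illustration with hypothetical function names: published scores are usually computed with a standard tool such as sacreBLEU, whose tokenization and smoothing can yield different values, and the exact implementation used in these experiments is not stated in this section.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(hypotheses: list[str], references: list[str]) -> float:
    """Corpus-level BLEU-4, one reference per sentence, no smoothing, 0-100 scale."""
    matches = [0] * 4
    totals = [0] * 4
    hyp_len = 0
    ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, 5):
            h_ngrams, r_ngrams = ngrams(h, n), ngrams(r, n)
            # Clipping: each hypothesis n-gram counts at most as often as in the reference.
            matches[n - 1] += sum(min(c, r_ngrams[g]) for g, c in h_ngrams.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / 4
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


print(corpus_bleu4(["casa segunda planta ahí habitación"],
                   ["casa segunda planta ahí habitación ahí cama"]))
```

Because precisions are accumulated over the whole corpus before taking the geometric mean, a single sentence without any matching 4-gram does not zero the score, which is one reason corpus-level BLEU is preferred over averaging sentence scores.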

6. Results

6.1. Experiment Sets 1 and 2 (gloss2text Based on PHOENIX-2014T)

6.2. Experiment Set 3 (gloss2text Based on ruLSE)

6.3. Experiment Set 4 (text2gloss)

7. Conclusions and Future Work

1. For the STMC-Transformer model of [57], we determined that a two-layer encoder–decoder configuration offers better results than the other layer configurations, as reported in that paper.

2. Using a larger dataset during training improved the results by approximately four points overall in both models. Among the smaller training subsets, the largest improvement is 1.15 points (from 15.57 BLEU-4 points with 1000 training sentences to 16.72 with 3500), which is not significant considering that more than three times as much training data is used.

3. The MarianMT model outperformed the STMC-Transformer configurations, but at the cost of a significantly longer execution time (about four times slower). Therefore, despite the 2–3 point improvement of MarianMT, the STMC-Transformer model proved more efficient, with pre-trained word embeddings contributing to its performance.

4. Using a more accurate dataset, generated with simple hand-crafted rules that map natural language to glosses, improved the results of all trained models. MarianMT remained the best model in our experiments.

5. The difference between using a translated dataset and a rule-generated dataset increased for gloss production. While in SLT tasks the results for the translated tranSPHOENIX set improved or worsened only slightly, in SLP we observed a drop of up to 9.14 points. In contrast, with our synthetic corpus generated by the ruLSE system there was hardly any drop, so we can state that it is more efficient and accurate for training Transformer models in SLP tasks. Despite its efficiency and accuracy, ruLSE presents a significant trade-off: defining additional rules to cover more complex sentence structures requires manual intervention, in contrast to the ease with which Transformer models can be trained from specific examples.

- The most complex sentences sometimes do not comply with the grammatical structure defined for LSE, but follow another order which consists of signing from the general to the particular to make the message clearer.

- The sign “ahí” (there) has to be added when it is necessary to locate objects in space. Example: “La casa tiene una habitación en la segunda planta donde hay una cama” (“The house has a room on the second floor where there is a bed”) is signed as “Casa segunda planta ahí habitación ahí cama”.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Health Organization (WHO). Deafness and Hearing Loss. 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 20 February 2024).

- Peery, M.L. World Federation of the Deaf. Encyclopedia of Special Education: A Reference for the Education of Children, Adolescents, and Adults with Disabilities and Other Exceptional Individuals; John Wiley & Sons: New York, NY, USA, 2013.

- Nasser, A.R.; Hasan, A.M.; Humaidi, A.J.; Alkhayyat, A.; Alzubaidi, L.; Fadhel, M.A.; Santamaría, J.; Duan, Y. Iot and cloud computing in health-care: A new wearable device and cloud-based deep learning algorithm for monitoring of diabetes. Electronics 2021, 10, 2719.

- Al, A.S.M.A.O.; Al-Qassa, A.; Nasser, A.R.; Alkhayyat, A.; Humaidi, A.J.; Ibraheem, I.K. Embedded design and implementation of mobile robot for surveillance applications. Indones. J. Sci. Technol. 2021, 6, 427–440.

- Nasser, A.R.; Hasan, A.M.; Humaidi, A.J. DL-AMDet: Deep learning-based malware detector for android. Intell. Syst. Appl. 2024, 21, 200318.

- Baker, A.; van den Bogaerde, B.; Pfau, R.; Schermer, T. The Linguistics of Sign Languages: An Introduction; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2016.

- Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 311–324.

- Cooper, H.; Holt, B.; Bowden, R. Sign language recognition. In Visual Analysis of Humans; Springer: Berlin/Heidelberg, Germany, 2011; pp. 539–562.

- Starner, T.E. Visual Recognition of American Sign Language Using Hidden Markov Models; Technical Report; Massachusetts Institute of Technology, Cambridge Department of Brain and Cognitive Sciences: Cambridge, MA, USA, 1995.

- Vogler, C.; Metaxas, D. ASL recognition based on a coupling between HMMs and 3D motion analysis. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 7 January 1998; pp. 363–369.

- Fillbrandt, H.; Akyol, S.; Kraiss, K.F. Extraction of 3D hand shape and posture from image sequences for sign language recognition. In Proceedings of the 2003 IEEE International SOI Conference. Proceedings (Cat. No. 03CH37443), Newport Beach, CA, USA, 29 September–2 October 2003; pp. 181–186.

- Buehler, P.; Zisserman, A.; Everingham, M. Learning sign language by watching TV (using weakly aligned subtitles). In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2961–2968.

- Cooper, H.; Pugeault, N.; Bowden, R. Reading the signs: A video based sign dictionary. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 914–919.

- Ye, Y.; Tian, Y.; Huenerfauth, M.; Liu, J. Recognizing american sign language gestures from within continuous videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2064–2073.

- Camgoz, N.C.; Hadfield, S.; Koller, O.; Bowden, R. Using convolutional 3d neural networks for user-independent continuous gesture recognition. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 49–54.

- Huang, J.; Zhou, W.; Li, H.; Li, W. Sign language recognition using 3d convolutional neural networks. In Proceedings of the 2015 IEEE International Conference on Multimedia and Expo (ICME), Turin, Italy, 29 June–3 July 2015; pp. 1–6.

- Er-Rady, A.; Faizi, R.; Thami, R.O.H.; Housni, H. Automatic sign language recognition: A survey. In Proceedings of the 2017 International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Fez, Morocco, 22–24 May 2017; pp. 1–7.

- Rastgoo, R.; Kiani, K.; Escalera, S. Sign language recognition: A deep survey. Expert Syst. Appl. 2020, 164, 113794.

- Ong, S.C.; Ranganath, S. Automatic sign language analysis: A survey and the future beyond lexical meaning. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 873–891.

- Amir, A.; Taba, B.; Berg, D.; Melano, T.; McKinstry, J.; Di Nolfo, C.; Nayak, T. A low power, fully event-based gesture recognition system. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7243–7252.

- Materzynska, J.; Berger, G.; Bax, I.; Memisevic, R. The jester dataset: A large-scale video dataset of human gestures. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? a new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human action classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- Núñez-Marcos, A.; Perez-de-Viñaspre, O.; Labaka, G. A survey on Sign Language machine translation. Expert Syst. Appl. 2023, 213, 118993.

- Cihan Camgoz, N.; Hadfield, S.; Koller, O.; Ney, H.; Bowden, R. Neural sign language translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7784–7793.

- Camgoz, N.C.; Koller, O.; Hadfield, S.; Bowden, R. Sign language transformers: Joint end-to-end sign language recognition and translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10023–10033.

- Zhang, X.; Duh, K. Approaching Sign Language Gloss Translation as a Low-Resource Machine Translation Task. In Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages (AT4SSL), Virtual, 20 August 2021; pp. 60–70.

- Chiruzzo, L.; McGill, E.; Egea-Gómez, S.; Saggion, H. Translating Spanish into Spanish Sign Language: Combining Rules and Data-driven Approaches. In Proceedings of the Fifth Workshop on Technologies for Machine Translation of Low-Resource Languages (LoResMT 2022), Gyeongju, Republic of Korea, 12–17 October 2022; pp. 75–83.

- Rastgoo, R.; Kiani, K.; Escalera, S.; Sabokrou, M. Sign Language Production: A Review. arXiv 2021, arXiv:2103.15910.

- Saunders, B.; Camgoz, N.C.; Bowden, R. Skeletal Graph Self-Attention: Embedding a Skeleton Inductive Bias into Sign Language Production. arXiv 2021, arXiv:2112.05277.

- Cabeza, C.; García-Miguel, J.M. iSignos: Interfaz de Datos de Lengua de Signos Española (Versión 1.0); Universidade de Vigo: Vigo, Spain. Available online: http://isignos.uvigo.es (accessed on 1 July 2023).

- Shin, H.; Kim, W.J.; Jang, K.a. Korean sign language recognition based on image and convolution neural network. In Proceedings of the 2nd International Conference on Image and Graphics Processing, Singapore, 23–25 February 2019; pp. 52–55.

- Kishore, P.; Rao, G.A.; Kumar, E.K.; Kumar, M.T.K.; Kumar, D.A. Selfie sign language recognition with convolutional neural networks. Int. J. Intell. Syst. Appl. 2018, 11, 63.

- Wadhawan, A.; Kumar, P. Deep learning-based sign language recognition system for static signs. Neural Comput. Appl. 2020, 32, 7957–7968.

- Can, C.; Kaya, Y.; Kılıç, F. A deep convolutional neural network model for hand gesture recognition in 2D near-infrared images. Biomed. Phys. Eng. Express 2021, 7, 055005.

- De Castro, G.Z.; Guerra, R.R.; Guimarães, F.G. Automatic translation of sign language with multi-stream 3D CNN and generation of artificial depth maps. Expert Syst. Appl. 2023, 215, 119394.

- Chen, Y.; Zuo, R.; Wei, F.; Wu, Y.; Liu, S.; Mak, B. Two-stream network for sign language recognition and translation. Adv. Neural Inf. Process. Syst. 2022, 35, 17043–17056.

- Li, D.; Rodriguez, C.; Yu, X.; Li, H. Word-level deep sign language recognition from video: A new large-scale dataset and methods comparison. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1459–1469.

- Joze, H.R.V.; Koller, O. Ms-asl: A large-scale data set and benchmark for understanding american sign language. arXiv 2018, arXiv:1812.01053.

- Albanie, S.; Varol, G.; Momeni, L.; Afouras, T.; Chung, J.S.; Fox, N.; Zisserman, A. BSL-1K: Scaling up co-articulated sign language recognition using mouthing cues. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 35–53.

- Pu, J.; Zhou, W.; Li, H. Sign language recognition with multi-modal features. In Proceedings of the Pacific Rim Conference on Multimedia, Xi’an, China, 15–16 September 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 252–261.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

- Wong, R.; Camgöz, N.C.; Bowden, R. Hierarchical I3D for Sign Spotting. In Proceedings of the Computer Vision–ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022; Karlinsky, L., Michaeli, T., Nishino, K., Eds.; Springer: Cham, Switzerland, 2023; pp. 243–255.

- Eunice, J.; Sei, Y.; Hemanth, D.J. Sign2Pose: A Pose-Based Approach for Gloss Prediction Using a Transformer Model. Sensors 2023, 23, 2853.

- Vázquez-Enríquez, M.; Alba-Castro, J.L.; Docío-Fernández, L.; Rodríguez-Banga, E. Isolated sign language recognition with multi-scale spatial-temporal graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3462–3471.

- Pu, J.; Zhou, W.; Li, H. Dilated Convolutional Network with Iterative Optimization for Continuous Sign Language Recognition. In Proceedings of the 2018 International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; Volume 3, p. 7.

- Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

- Wei, C.; Zhou, W.; Pu, J.; Li, H. Deep grammatical multi-classifier for continuous sign language recognition. In Proceedings of the 2019 IEEE Fifth International Conference on Multimedia Big Data (BigMM), Singapore, 11–13 September 2019; pp. 435–442.

- Zhou, H.; Zhou, W.; Zhou, Y.; Li, H. Spatial-temporal multi-cue network for continuous sign language recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13009–13016.

- Huang, J.; Zhou, W.; Zhang, Q.; Li, H.; Li, W. Video-based sign language recognition without temporal segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Camgoz, N.C.; Hadfield, S.; Koller, O.; Bowden, R. Subunets: End-to-end hand shape and continuous sign language recognition. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3075–3084.

- Cui, R.; Liu, H.; Zhang, C. A deep neural framework for continuous sign language recognition by iterative training. IEEE Trans. Multimed. 2019, 21, 1880–1891.

- Luong, M.T.; Pham, H.; Manning, C.D. Effective approaches to attention-based neural machine translation. arXiv 2015, arXiv:1508.04025.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Koller, O.; Zargaran, S.; Ney, H. Re-sign: Re-aligned end-to-end sequence modelling with deep recurrent CNN-HMMs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4297–4305.

- Yin, K.; Read, J. Better sign language translation with stmc-transformer. In Proceedings of the 28th International Conference on Computational Linguistics, Online, 8–13 December 2020; pp. 5975–5989.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

- Ko, S.K.; Kim, C.J.; Jung, H.; Cho, C. Neural sign language translation based on human keypoint estimation. Appl. Sci. 2019, 9, 2683.

- Kim, Y.; Baek, H. Preprocessing for Keypoint-Based Sign Language Translation without Glosses. Sensors 2023, 23, 3231.

- San-Segundo, R.; Barra, R.; Córdoba, R.; D’Haro, L.; Fernández, F.; Ferreiros, J.; Lucas, J.; Macías-Guarasa, J.; Montero, J.; Pardo, J. Speech to sign language translation system for Spanish. Speech Commun. 2008, 50, 1009–1020.

- McGill, E.; Chiruzzo, L.; Egea Gómez, S.; Saggion, H. Part-of-Speech tagging Spanish Sign Language data and its applications in Sign Language machine translation. In Proceedings of the Second Workshop on Resources and Representations for Under-Resourced Languages and Domains (RESOURCEFUL-2023), Tórshavn, the Faroe Islands, 22 May 2023; pp. 70–76.

- Chen, Y.; Wei, F.; Sun, X.; Wu, Z.; Lin, S. A simple multi-modality transfer learning baseline for sign language translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5120–5130.

- Karpouzis, K.; Caridakis, G.; Fotinea, S.E.; Efthimiou, E. Educational resources and implementation of a Greek sign language synthesis architecture. Comput. Educ. 2007, 49, 54–74.

- McDonald, J.; Wolfe, R.; Schnepp, J.; Hochgesang, J.; Jamrozik, D.G.; Stumbo, M.; Berke, L.; Bialek, M.; Thomas, F. An automated technique for real-time production of lifelike animations of American Sign Language. Univers. Access Inf. Soc. 2016, 15, 551–566.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. arXiv 2014, arXiv:1406.2661.

- Gregor, K.; Danihelka, I.; Graves, A.; Rezende, D.; Wierstra, D. Draw: A recurrent neural network for image generation. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1462–1471.

- San-Segundo, R.; Montero, J.M.; et al. Proposing a speech to gesture translation architecture for Spanish deaf people. J. Vis. Lang. Comput. 2008, 19, 523–538.

- Duarte, A.C. Cross-modal neural sign language translation. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1650–1654.

- Ventura, L.; Duarte, A.; Giro-i Nieto, X. Can everybody sign now? Exploring sign language video generation from 2D poses. arXiv 2020, arXiv:2012.10941.

- Chan, C.; Ginosar, S.; Zhou, T.; Efros, A.A. Everybody dance now. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5933–5942.

- Stoll, S.; Camgöz, N.C.; Hadfield, S.; Bowden, R. Sign language production using neural machine translation and generative adversarial networks. In Proceedings of the 29th British Machine Vision Conference (BMVC 2018), Newcastle, UK, 3–6 September 2018; British Machine Vision Association: Durham, UK, 2018.

- Stoll, S.; Camgoz, N.C.; Hadfield, S.; Bowden, R. Text2Sign: Towards sign language production using neural machine translation and generative adversarial networks. Int. J. Comput. Vis. 2020, 128, 891–908.

- Saunders, B.; Camgoz, N.C.; Bowden, R. Progressive transformers for end-to-end sign language production. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 687–705.

- Zelinka, J.; Kanis, J. Neural sign language synthesis: Words are our glosses. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 2–5 March 2020; pp. 3395–3403.

- Tenório, R. HandTalk. 2021. Available online: https://www.handtalk.me/en (accessed on 20 February 2024).

- Cox, S.; Lincoln, M.; Tryggvason, J.; Nakisa, M.; Wells, M.; Tutt, M.; Abbott, S. Tessa, a system to aid communication with deaf people. In Proceedings of the Fifth International ACM Conference on Assistive Technologies, Edinburgh, UK, 8–10 July 2002; pp. 205–212.

- Glauert, J.; Elliott, R.; Cox, S.; Tryggvason, J.; Sheard, M. Vanessa—A system for communication between deaf and hearing people. Technol. Disabil. 2006, 18, 207–216.

- Kipp, M.; Heloir, A.; Nguyen, Q. Sign language avatars: Animation and comprehensibility. In Proceedings of the International Workshop on Intelligent Virtual Agents, Reykjavik, Iceland, 15–17 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 113–126.

- Ebling, S.; Glauert, J. Exploiting the full potential of JASigning to build an avatar signing train announcements. In Proceedings of the 3rd International Symposium on Sign Language Translation and Avatar Technology, Chicago, IL, USA, 18–19 October 2013; pp. 1–9.

- Ebling, S.; Huenerfauth, M. Bridging the gap between sign language machine translation and sign language animation using sequence classification. In Proceedings of the SLPAT 2015: 6th Workshop on Speech and Language Processing for Assistive Technologies, Dresden, Germany, 11 September 2015; pp. 2–9.

- Duarte, A.; Palaskar, S.; Ventura, L.; Ghadiyaram, D.; DeHaan, K.; Metze, F.; Torres, J.; Giro-i Nieto, X. How2sign: A large-scale multimodal dataset for continuous american sign language. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2735–2744.

- von Agris, U.; Kraiss, K.F. Signum database: Video corpus for signer-independent continuous sign language recognition. In Proceedings of the sign-lang@ LREC 2010, Valletta, Malta, 22–23 May 2010; European Language Resources Association (ELRA): Reykjavik, Iceland, 2010; pp. 243–246.

- Duarte, A.; Palaskar, S.; Ghadiyaram, D.; DeHaan, K.; Metze, F.; Torres, J.; Giro-i-Nieto, X. How2Sign: A Large-scale Multimodal Dataset for Continuous American Sign Language. arXiv 2020, arXiv:2008.08143.

- Othman, A.; Jemni, M. English-ASL Gloss Parallel Corpus 2012: ASLG-PC12. In Proceedings of the LREC2012 5th Workshop on the Representation and Processing of Sign Languages: Interactions between Corpus and Lexicon, Istanbul, Turkey, 27 May 2012; Crasborn, O., Efthimiou, E., Fotinea, S.E., Hanke, T., Kristoffersen, J., Mesch, J., Eds.; Institute of German Sign Language, University of Hamburg: Hamburg, Germany, 2012; pp. 151–154.

- Cabot, P.L.H. Spanish Speech Text Dataset. Hugging Face. Available online: https://huggingface.co/datasets/PereLluis13/spanish_speech_text (accessed on 30 August 2023).

- Docío-Fernández, L.; Alba-Castro, J.L.; Torres-Guijarro, S.; Rodríguez-Banga, E.; Rey-Area, M.; Pérez-Pérez, A.; Rico-Alonso, S.; García-Mateo, C. LSE_UVIGO: A Multi-source Database for Spanish Sign Language Recognition. In Proceedings of the LREC2020 9th Workshop on the Representation and Processing of Sign Languages: Sign Language Resources in the Service of the Language Community, Technological Challenges and Application Perspectives, Marseille, France, 11–16 May 2020; pp. 45–52.

- Chai, X.; Li, G.; Lin, Y.; Xu, Z.; Tang, Y.; Chen, X.; Zhou, M. Sign language recognition and translation with kinect. In Proceedings of the IEEE Conference on Automatic Face and Gesture Recognition (AFGR), Shanghai, China, 22–26 April 2013; Volume 655, p. 4.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. arXiv 2014, arXiv:1409.3215.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Zhang, J.; Zong, C. Neural machine translation: Challenges, progress and future. Sci. China Technol. Sci. 2020, 63, 2028–2050.

- Qi, Y.; Sachan, D.S.; Felix, M.; Padmanabhan, S.J.; Neubig, G. When and why are pre-trained word embeddings useful for neural machine translation? arXiv 2018, arXiv:1804.06323.

- Junczys-Dowmunt, M.; Grundkiewicz, R.; Dwojak, T.; Hoang, H.; Heafield, K.; Neckermann, T.; Seide, F.; Germann, U.; Fikri Aji, A.; Bogoychev, N.; et al. Marian: Fast Neural Machine Translation in C++. In Proceedings of the ACL 2018, System Demonstrations, Melbourne, Australia, 15–20 July 2018; pp. 116–121.

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

- Cañete, J.C. Spanish Unannotated Corpora. 2021. Available online: https://github.com/josecannete/spanish-corpora (accessed on 20 February 2024).

- Wiki Word Vectors. 2021. Available online: https://archive.org/details/eswiki-20150105 (accessed on 20 February 2024).

- Spanish Billion Words Corpus. 2021. Available online: https://crscardellino.ar/SBWCE/ (accessed on 20 February 2024).

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

| Author | Methodology | Dataset | Technique | BLEU-4 |
|---|---|---|---|---|
| Yin et al. (2020) [57] | STMC-Transformer | PHOENIX-2014T | Gloss2Text | 24.90 |
| Camgoz et al. (2020) [26] | SLTT | PHOENIX-2014T | Gloss2Text | 24.54 |
| Kim et al. (2023) [60] | GRU-based model with keypoint extraction | PHOENIX-2014T | Sign2Text | 13.31 |
| McGill et al. (2023) [62] | LSTM attention | iSignos | Gloss2Text | 10.22 |
| Chen et al. (2022) [63] | Fully connected MLP with two hidden layers | PHOENIX-2014T | Sign2Text | 28.39 |
| Ours (2024) | MarianMT Transformer | SynLSE | Gloss2Text | 19.27 |

| Layers (PHOENIX-2014T test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| 1 | 45.66 | 33.77 | 26.77 | 22.15 | 46.89 |
| 2 | 47.34 | 34.71 | 27.29 | 22.49 | 46.51 |
| 4 | 43.39 | 31.91 | 25.27 | 21.13 | 45.46 |
| 6 | 41.58 | 30.73 | 24.51 | 20.49 | 44.63 |
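The tables in this section report BLEU-1 to BLEU-4 (Papineni et al.) alongside ROUGE-L. As a reading aid, the sketch below computes one common variant of BLEU-n: cumulative corpus-level BLEU with uniform weights over the 1- to n-gram clipped precisions, multiplied by a brevity penalty, with a single reference per hypothesis and no smoothing. This section does not state which BLEU implementation, tokenization, or smoothing produced the reported scores, so this is an illustration under those assumptions, not a reproduction of the authors' evaluation; the helper names `ngrams` and `corpus_bleu` are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(references, hypotheses, max_n=4):
    """Cumulative corpus BLEU-max_n on a 0-100 scale.

    Assumptions: one reference per hypothesis, uniform weights 1/max_n,
    no smoothing. Counts are pooled over the whole corpus before the
    precisions are combined, as in Papineni et al. (2002).
    """
    matches = [0] * max_n  # clipped n-gram matches per order
    totals = [0] * max_n   # hypothesis n-gram counts per order
    ref_len = hyp_len = 0
    for ref, hyp in zip(references, hypotheses):
        ref_len += len(ref)
        hyp_len += len(hyp)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            totals[n - 1] += sum(hyp_counts.values())
    if min(matches) == 0:
        return 0.0  # without smoothing, one empty order zeroes the geometric mean
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: 1 if the output is longer than the references, else exp(1 - r/c).
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


if __name__ == "__main__":
    # Reference and 7500-pair model output from the qualitative examples table.
    ref = "Jesús lleva la camisa muy sucia".lower().split()
    hyp = "jesús lleva una camisa muy sucia".split()
    for n in range(1, 5):
        print(f"BLEU-{n}", round(corpus_bleu([ref], [hyp], n), 2))
```

For this single sentence pair the scores fall from about 83.33 (BLEU-1) to 70.71, 50.0, and finally 0 for BLEU-4, because no 4-gram of the output appears in the reference. This is why the BLEU-n columns decrease monotonically, and why sentence-level BLEU-4 is usually smoothed; at corpus level the counts are pooled over the whole test set, so a single sentence without 4-gram matches does not zero the score.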

| Exper. 2.1 (tranSPHOENIX test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| STMC | 33.79 | 20.7 | 18.44 | 14.11 | 36.32 |
| STMC + FT(SUC) | 33.44 | 24.61 | 18.64 | 14.45 | 37.27 |
| STMC + G(SBWC) | 33.66 | 25.11 | 18.03 | 13.88 | 37.07 |
| STMC + FT(Wiki) | 33.73 | 24.47 | 18.34 | 13.87 | 37.81 |
| MarianMT | 38.58 | 27.78 | 20.51 | 15.57 | 38.98 |

| Exper. 2.2 (tranSPHOENIX test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| STMC | 35.70 | 25.74 | 19.5 | 15.37 | 38.01 |
| FT + SUC | 36.84 | 26.7 | 20.33 | 15.9 | 38.94 |
| G + SBWC | 33.45 | 24.42 | 18.47 | 14.5 | 37.88 |
| FT + Wiki | 37.68 | 27.11 | 20.86 | 15.86 | 38.96 |
| MarianMT | 39.45 | 28.25 | 20.8 | 16.72 | 34.85 |

| Exper. 2.3 (tranSPHOENIX test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| STMC | 39.13 | 29.36 | 22.8 | 18.31 | 42.8 |
| FT + SUC | 41.63 | 30.95 | 23.76 | 18.87 | 43.21 |
| G + SBWC | 35.53 | 26.91 | 20.85 | 16.98 | 42.01 |
| FT + Wiki | 39.43 | 29.57 | 22.91 | 18.37 | 42.61 |
| MarianMT | 42.89 | 31.85 | 24.45 | 19.27 | 43.91 |

| Exper. 3.1 (ruLSE test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| MarianMT (1000) | 55.42 | 26.54 | 14.13 | 9.29 | 51.8 |
| MarianMT (3500) | 67.24 | 37.39 | 22.4 | 14.49 | 61.44 |
| MarianMT (7500) | 72.33 | 44.01 | 28.8 | 19.9 | 69.99 |
| STMC (7500) | 44.22 | 28.89 | 18.49 | 11.94 | 39.57 |
| FT + Wiki (7500) | 38.78 | 25.49 | 16.56 | 10.89 | 38.21 |

| MarianMT model | LSE (input) | LOE (original) | LOE (generated) |
|---|---|---|---|
| Trained with 1000 | PASADO PEPE-NP COCHE COMPRAR PEPA-MP (PAST PEPE CAR BUY PEPA) | Pepe compró un coche a Pepa (Pepe bought a car from Pepa) | compró pepa y pepe coche (bought pepa and pepe car) |
| Trained with 3500 | | | pepe coche compró pepa (pepe car bought pepa) |
| Trained with 7500 | | | pepe compró un coche a pepa (pepe bought a car from pepa) |
| Trained with 1000 | JESÚS-NP CAMISA SUCIO MUCHO LLEVAR (JESUS SHIRT DIRTY MUCH WEAR) | Jesús lleva la camisa muy sucia (Jesus is wearing a very dirty shirt) | lleva desde la casa de el sucio hasta muy lejos (leads from his dirty house all the way to far away) |
| Trained with 3500 | | | jesús lleva una camisa de mucho sucio (jesus wears a very dirty shirt) |
| Trained with 7500 | | | jesús lleva una camisa muy sucia (jesus is wearing a very dirty shirt) |
| Trained with 1000 | PELÍCULA APTO MENOR (FILM SUITABLE MINOR) | La película es apta para menores (The film is suitable for minors) | la película es apto para el menor (the film is suitable for children) |
| Trained with 3500 | | | la película es apta para ser menor (the film is suitable for minors) |
| Trained with 7500 | | | película apta para ser menor (film suitable for minors) |
| Trained with 1000 | PREMIO DAR QUIÉN (AWARD GIVE WHO) | ¿A quién han dado el premio? (To whom did they give the award?) | dar a quién el premio (give to whom the award) |
| Trained with 3500 | | | el premio da a quien sea (the award goes to anyone) |
| Trained with 7500 | | | el premio fue dado a quién (the award was given to whom) |
| Trained with 1000 | CUADRO ESTE PINTAR ARTISTA MAGNÍFICO (PICTURE THIS PAINT ARTIST MAGNIFICENT) | Este cuadro ha sido pintado por el magnífico artists (This picture has been painted by the magnificent artist) | este cuadros pintaba en la artists magnífica (this painting was painted in the magnificent artists) |
| Trained with 3500 | | | este cuadro pinta y es un artists magnífico (this picture paints and is a magnificent artist) |
| Trained with 7500 | | | este cuadro fue pintado por el artists magnífico (this picture was painted by the magnificent artist) |
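The examples above show how word order degrades with less training data, which is what ROUGE-L (Lin) is sensitive to: it scores the longest common subsequence (LCS) of tokens shared by the reference and the output, so in-order matches count even when they are not contiguous. The sketch below computes a sentence-level ROUGE-L F-score with dynamic programming and applies it to the "Pepe" example. Lowercased whitespace tokenization, β = 1, and sentence-level scoring are assumptions; this section does not say how the reported ROUGE-L values were tokenized or aggregated, and the function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists (O(len(a)*len(b)))."""
    prev = [0] * (len(b) + 1)  # DP row for the previous token of a
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise keep the best neighbour.
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference, hypothesis, beta=1.0):
    """Sentence-level ROUGE-L F-score on a 0-100 scale (Lin, 2004)."""
    lcs = lcs_length(reference, hypothesis)
    if lcs == 0:
        return 0.0
    recall = lcs / len(reference)
    precision = lcs / len(hypothesis)
    return 100 * (1 + beta**2) * precision * recall / (recall + beta**2 * precision)


if __name__ == "__main__":
    reference = "Pepe compró un coche a Pepa".lower().split()
    outputs = {  # generated LOE sentences from the table, keyed by training size
        1000: "compró pepa y pepe coche",
        3500: "pepe coche compró pepa",
        7500: "pepe compró un coche a pepa",
    }
    for size, text in outputs.items():
        print(size, round(rouge_l(reference, text.split()), 2))
```

Under these assumptions the three outputs score about 36.36, 60.0, and 100.0: the 3500-pair output keeps the in-order subsequence "pepe ... compró ... pepa" (LCS of 3) even though "coche" is misplaced, whereas the 1000-pair output only preserves two tokens in order.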

| valLSE (MarianMT model) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| Macarena (50) | 57.61 | 23.21 | 9.98 | 4.98 | 46.52 |
| Uvigo (150) | 28.46 | 5.87 | 1.04 | 0.56 | 36.08 |

| Configuration (tranSPHOENIX test set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| STMC | 37.5 | 22.36 | 14.65 | 10.13 | 37.94 |
| FT + SUC | 35.83 | 20.83 | 13.45 | 9.32 | 37.61 |
| G + BWC | 33.15 | 19.09 | 12.26 | 8.52 | 35.95 |
| FT + Wiki | 36.43 | 21.04 | 13.42 | 9.15 | 37.27 |
| MarianMT | 21.55 | 10.89 | 5.91 | 3.46 | 30.24 |

| Dataset (MarianMT model on ruLSE set) | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L |
|---|---|---|---|---|---|
| ruLSE (test) | 90.61 | 72.84 | 61.15 | 52.37 | 87.67 |
| Macarena | 74.08 | 39.63 | 22.71 | 12.07 | 58.84 |

| MarianMT and ruLSE system on valLSE | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | ROUGE-L | Time (s) |
|---|---|---|---|---|---|---|
| ruLSE system | 70.33 | 37.21 | 28.39 | 21.05 | 60.53 | 0.3011 |
| MarianMT (text2gloss) | 53.75 | 17.33 | 8.57 | 4.61 | 50.83 | 1.1308 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Perea-Trigo, M.; Botella-López, C.; Martínez-del-Amor, M.; Álvarez-García, J.; Soria-Morillo, L.; Vegas-Olmos, J.J. Synthetic Corpus Generation for Deep Learning-Based Translation of Spanish Sign Language. Sensors 2024, 24, 1472. https://doi.org/10.3390/s24051472

Perea-Trigo M, Botella-López C, Martínez-del-Amor M, Álvarez-García J, Soria-Morillo L, Vegas-Olmos JJ. Synthetic Corpus Generation for Deep Learning-Based Translation of Spanish Sign Language. Sensors. 2024; 24(5):1472. https://doi.org/10.3390/s24051472

Chicago/Turabian Style: Perea-Trigo, Marina, Celia Botella-López, Miguel Ángel Martínez-del-Amor, Juan Antonio Álvarez-García, Luis Miguel Soria-Morillo, and Juan José Vegas-Olmos. 2024. "Synthetic Corpus Generation for Deep Learning-Based Translation of Spanish Sign Language" Sensors 24, no. 5: 1472. https://doi.org/10.3390/s24051472

APA Style: Perea-Trigo, M., Botella-López, C., Martínez-del-Amor, M., Álvarez-García, J., Soria-Morillo, L., & Vegas-Olmos, J. J. (2024). Synthetic Corpus Generation for Deep Learning-Based Translation of Spanish Sign Language. Sensors, 24(5), 1472. https://doi.org/10.3390/s24051472