Lightweight Meter Pointer Recognition Method Based on Improved YOLOv5

Abstract

1. Introduction

2. Related Work

2.1. Object Detection

2.2. Model Lightness

2.3. Meter Reading

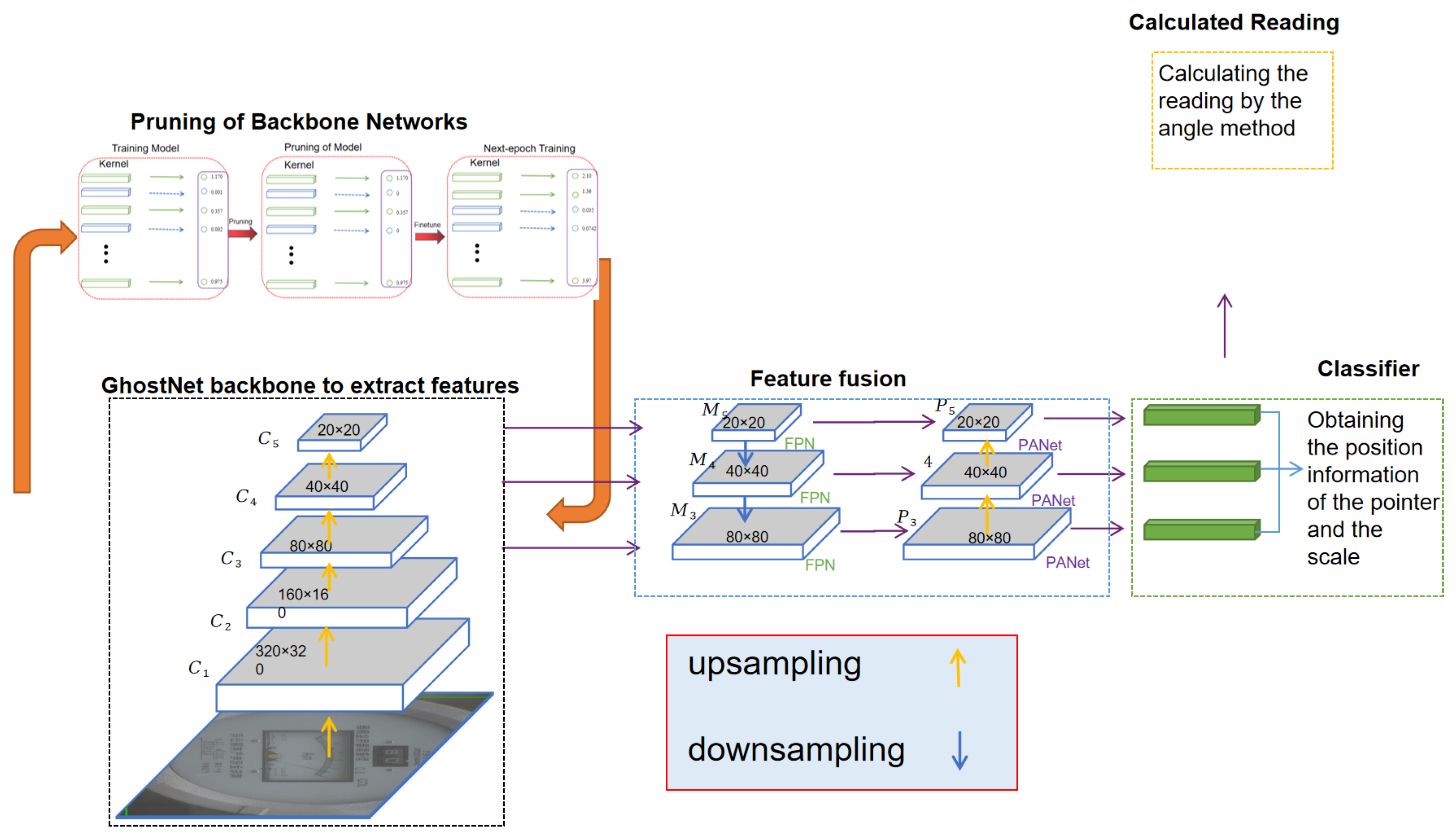

3. YOLOv5-MRL Network Structure

3.1. GhostNet-YOLOv5

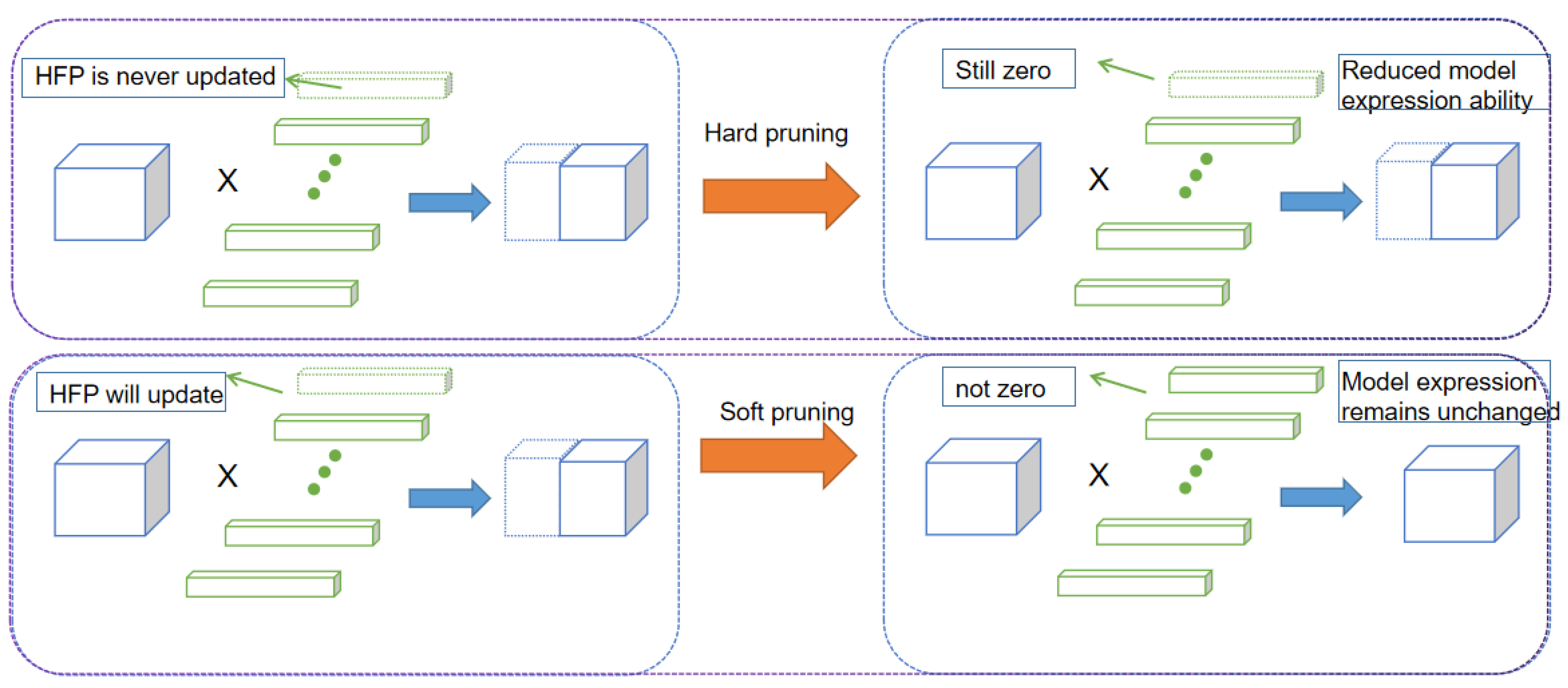

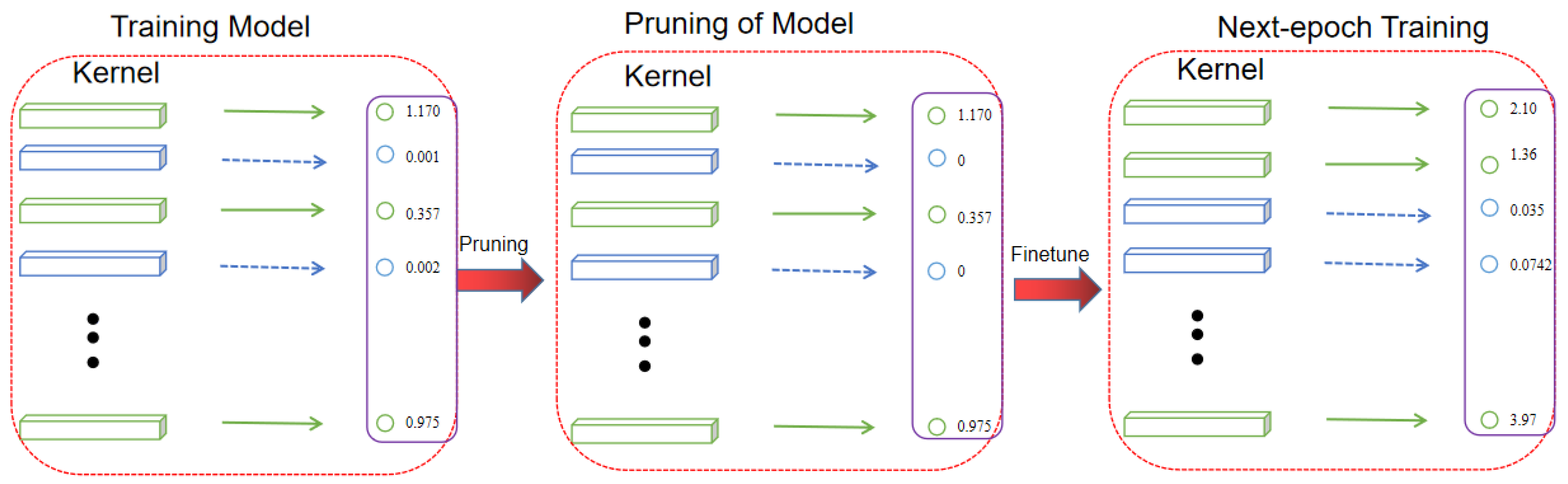

3.2. Soft Pruning of Convolution Kernels

3.3. Dial Number Recognition and Reading

4. Experiments and Results Analysis

4.1. Experimental Environment

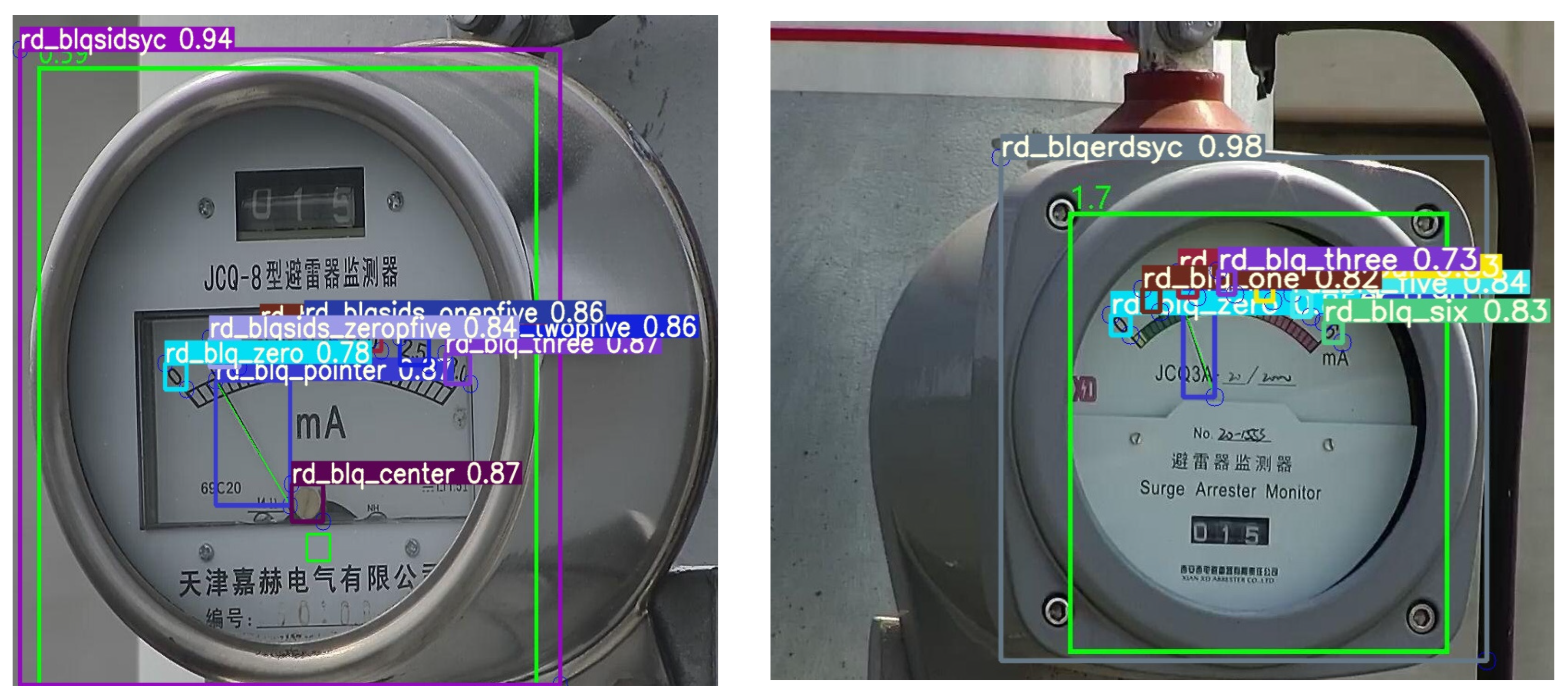

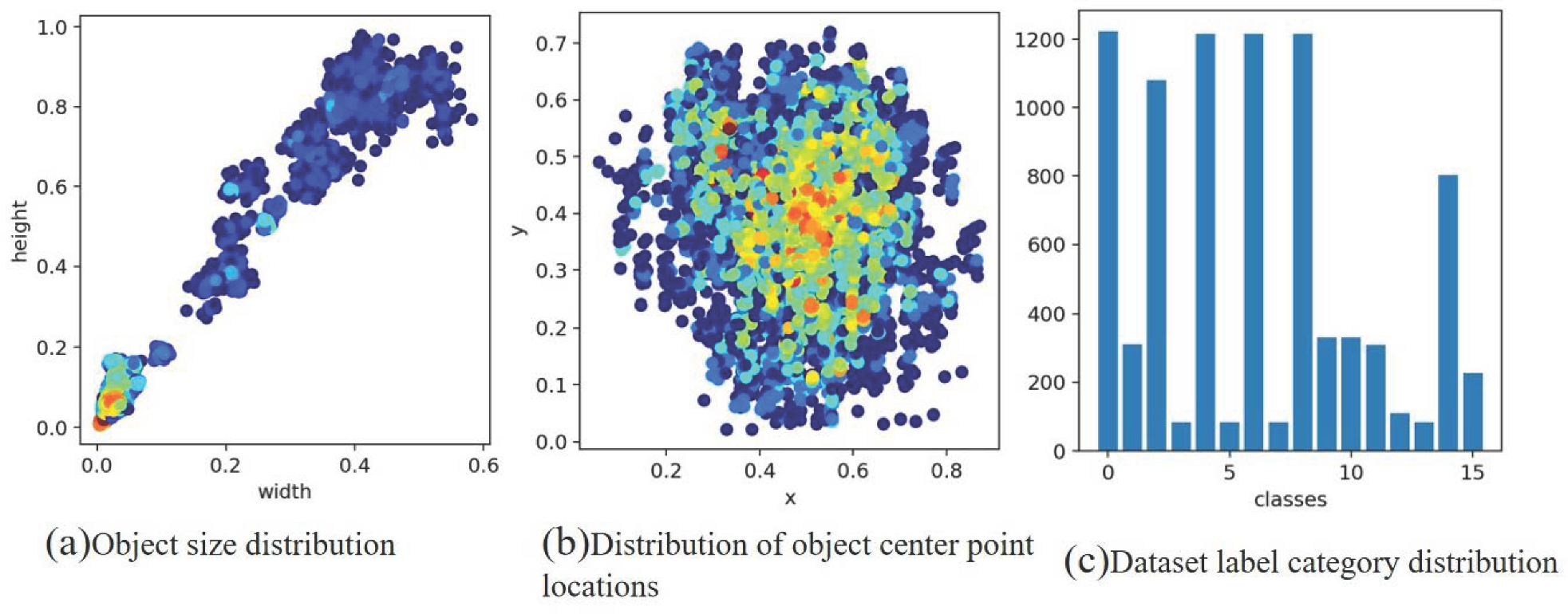

4.2. Lighting-Meter Experimental Dataset

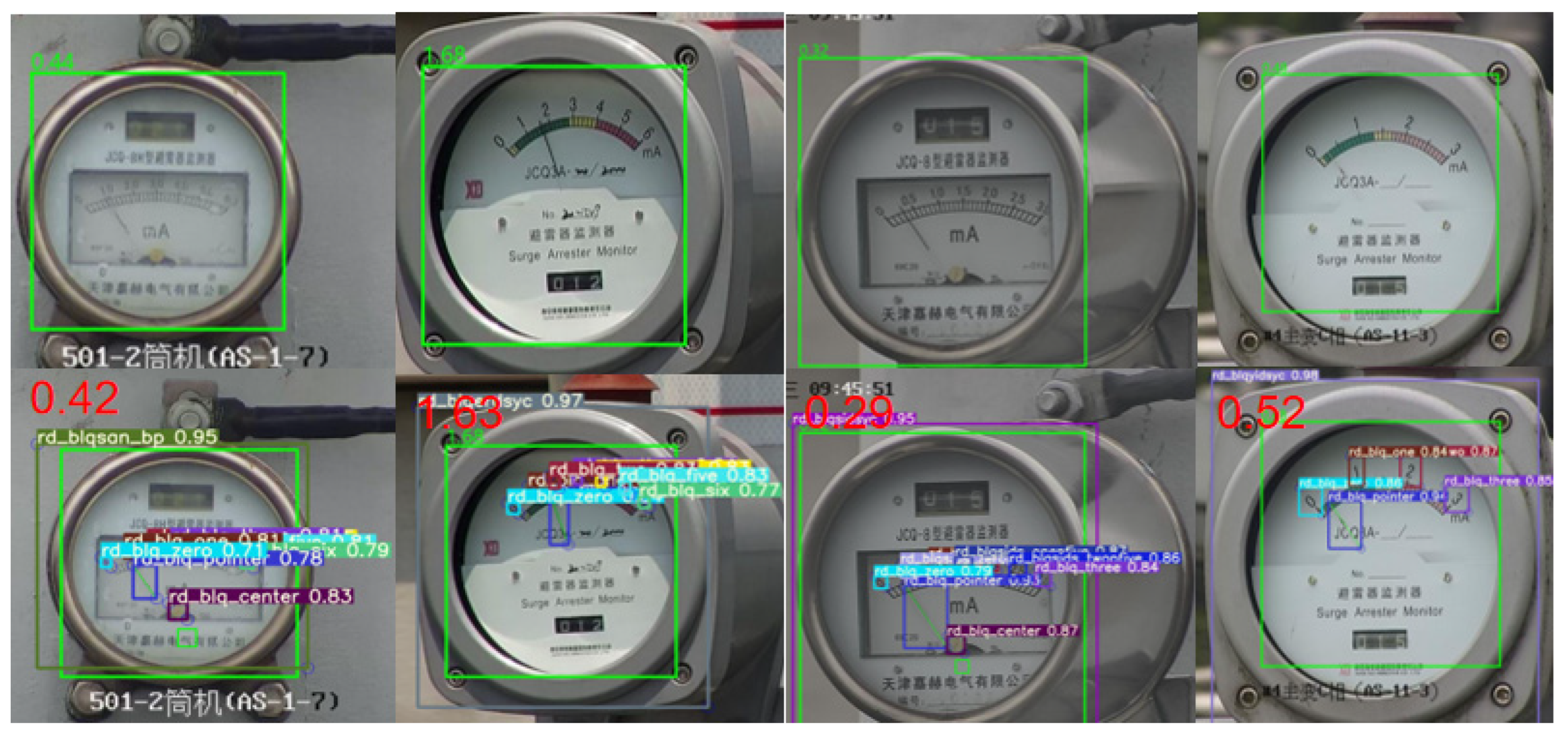

4.3. YOLOv5-MRL Lightweight Model Effect

4.4. Experimental Analysis of Different Pruning Rates

4.5. Ablation Experiments

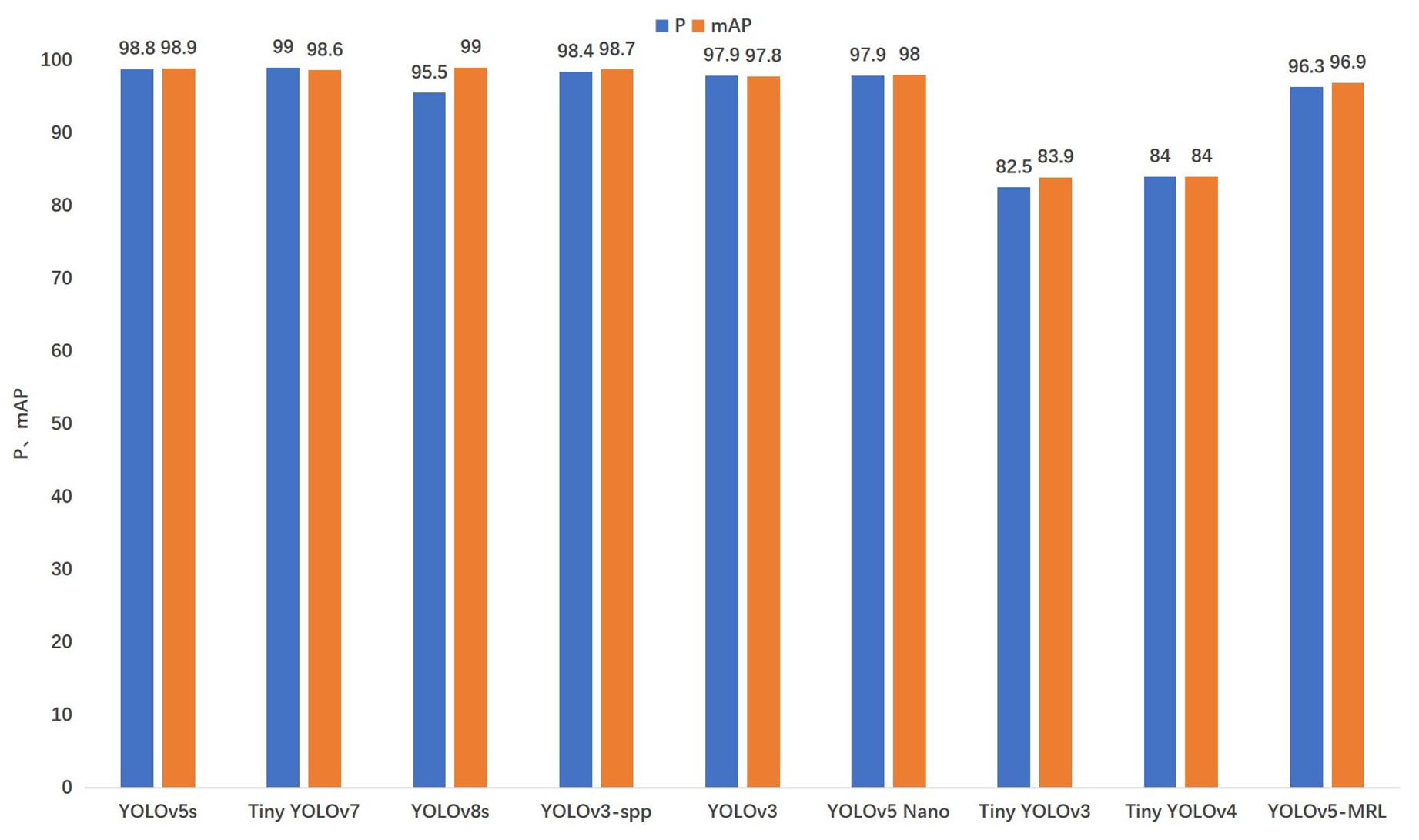

4.6. Comparison Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter Names | Parameter Values |
|---|---|
| Weight_decay | 0.0005 |
| Momentum | 0.937 |
| Learning_rate | 0.01 |
| Batch_size | 4 |
| Epochs | 600 |
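For readers reproducing the training run, the settings in the table above can be collected in one place. The following is a minimal sketch: the `TrainConfig` class and its field names are hypothetical and are not taken from the authors' code; only the numeric values come from the table.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container; only the values come from the table above."""

    weight_decay: float = 0.0005
    momentum: float = 0.937
    learning_rate: float = 0.01
    batch_size: int = 4
    epochs: int = 600


config = TrainConfig()
print(asdict(config))
```

Freezing the dataclass keeps the values from being changed by accident during an experiment; a different run would create a new instance with overridden fields.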

| Model | Average Error | Inference/ms | Para/MB |
|---|---|---|---|
| YOLOv3 | 0.027 | 27.5 | 6.1 |
| YOLOv3-SPP | 0.025 | 28.0 | 6.2 |
| YOLOv3-Tiny | 0.036 | 6.0 | 0.8 |
| YOLOv4-Tiny | 0.045 | 3.6 | 6.0 |
| YOLOv5s | 0.020 | 29.0 | 7.2 |
| YOLO-Nano | 0.01 | 3.6 | 1.9 |
| Tiny-YOLOv7 | 0.024 | 7.8 | 6.1 |
| YOLOv8s | 0.022 | 40.6 | 7.2 |
| YOLOv5-MRL | 0.029 | 5.0 | 3.8 |

| Pruning Rate | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5:0.95/% |
|---|---|---|---|---|
| Prune10% | 97.8 | 96.9 | 65.4 | 62.4 |
| Prune20% | 97.1 | 93 | 58.1 | 57.2 |
| Prune30% | 95.6 | 88.5 | 55.1 | 53.1 |
| Prune35% | 94.3 | 84.4 | 52.65 | 51.6 |
| Prune40% | 58.3 | 62.8 | 54.9 | 38.7 |
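To make the pruning rate concrete, here is a minimal sketch of one soft-pruning step on a single layer. It assumes the L2-norm criterion of Soft Filter Pruning (He et al., 2018): the lowest-norm fraction of filters is set to zero but kept in the layer, so later training can update them again. The function name `soft_prune_layer`, the flat-list filter representation, and the toy weights are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List


def soft_prune_layer(filters: List[List[float]], prune_rate: float) -> List[int]:
    """Zero the lowest-L2-norm filters in place and return their indices.

    Each filter is a flat list of weights. Zeroed filters stay in the layer
    (soft pruning), so a later training step may make them non-zero again.
    """
    if not 0.0 <= prune_rate <= 1.0:
        raise ValueError("prune_rate must be in [0, 1]")
    num_pruned = int(len(filters) * prune_rate)
    norms = [math.sqrt(sum(w * w for w in f)) for f in filters]
    # Indices sorted by ascending norm; the first num_pruned are zeroed.
    order = sorted(range(len(filters)), key=lambda i: norms[i])
    pruned = sorted(order[:num_pruned])
    for i in pruned:
        filters[i] = [0.0] * len(filters[i])
    return pruned


# Toy layer with ten two-weight filters (hypothetical values).
layer = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4], [-0.03, 0.01], [1.2, 0.1],
         [0.2, -0.2], [0.7, 0.7], [0.05, 0.05], [-0.6, 0.3], [0.4, 0.1]]
pruned_indices = soft_prune_layer(layer, prune_rate=0.3)
print(pruned_indices)  # [1, 3, 7]
```

With a 30% rate, three of the ten toy filters are zeroed while the layer keeps its original shape. In a full training loop this step would typically be repeated after each epoch, with the zeroed filters removed only once training ends.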

| Model | Weights/MB | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5:0.95/% | Inference/ms |
|---|---|---|---|---|---|---|
| YOLOv5s | 362 | 79.4 | 98.6 | 98.6 | 71.0 | 424 |
| YOLOv5s + ghost | 21.6 | 74.9 | 98.0 | 97.6 | 67.3 | 6.2 |
| YOLOv5s + SFP | 181 | 81.7 | 98.4 | 98.3 | 66.6 | 27.6 |

| Model | Weights/MB | Para/MB | Inference/ms |
|---|---|---|---|
| YOLOv5s | 362 | 7.2 | 29 |
| YOLOv3-spp | 478 | 6.2 | 28 |
| YOLOv3 | 470 | 6.1 | 27.5 |
| Tiny YOLOv7 | 71.4 | 6.1 | 7.8 |
| YOLOv8s | 21.4 | 7.2 | 40.6 |
| YOLO-Nano | 14.3 | 1.9 | 3.6 |
| Tiny YOLOv3 | 66.48 | 0.8 | 6.0 |
| Tiny YOLOv4 | 23.7 | 6.0 | 3.6 |
| YOLOv5-MRL | 5.5 | 3.8 | 5.0 |
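The size and speed gap between YOLOv5-MRL and the YOLOv5s baseline can be read off the table above as relative reductions. The short sketch below does that arithmetic; the dictionary keys and the `relative_reduction` helper are hypothetical names, and the numbers are copied from the YOLOv5s and YOLOv5-MRL rows.

```python
# Values copied from the comparison table above.
baseline = {"weights_mb": 362.0, "para": 7.2, "inference_ms": 29.0}  # YOLOv5s
proposed = {"weights_mb": 5.5, "para": 3.8, "inference_ms": 5.0}     # YOLOv5-MRL


def relative_reduction(before: float, after: float) -> float:
    """Return the reduction from `before` to `after` as a percentage."""
    return (before - after) / before * 100.0


reductions = {key: relative_reduction(baseline[key], proposed[key]) for key in baseline}
for key, value in reductions.items():
    print(f"{key}: {value:.1f}% lower than YOLOv5s")
```

On these table values the weight file is about 98.5% smaller, the parameter figure about 47.2% lower, and the inference time about 82.8% lower than for YOLOv5s.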

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Wang, K.; Zhang, J.; Zhou, F.; Zou, L. Lightweight Meter Pointer Recognition Method Based on Improved YOLOv5. Sensors 2024, 24, 1507. https://doi.org/10.3390/s24051507